Objective Metrics and Gradient Descent Algorithms for … · 2020-06-16 · Objective Metrics and...

Transcript of Objective Metrics and Gradient Descent Algorithms for … · 2020-06-16 · Objective Metrics and...

Objective Metrics and Gradient Descent Algorithms forAdversarial Examples in Machine Learning

Uyeong JangUniversity of WisconsinMadison, [email protected]

Xi Wu∗Google

Somesh JhaUniversity of WisconsinMadison, [email protected]

ABSTRACTFueled bymassive amounts of data, models produced bymachine-

learning (ML) algorithms are being used in diverse domains

where security is a concern, such as, automotive systems, fi-

nance, health-care, computer vision, speech recognition, natural-

language processing, and malware detection. Of particular

concern is use of ML in cyberphysical systems, such as driver-

less cars and aviation, where the presence of an adversary can

cause serious consequences. In this paper we focus on attacks

caused by adversarial samples, which are inputs crafted by

adding small, often imperceptible, perturbations to force a ML

model to misclassify. We present a simple gradient-descent

based algorithm for finding adversarial samples, which per-

forms well in comparison to existing algorithms. The second

issue that this paper tackles is that of metrics. We present a

novel metric based on few computer-vision algorithms for

measuring the quality of adversarial samples.

KEYWORDSAdversarial Examples, Machine Learning

ACM Reference Format:Uyeong Jang, Xi Wu, and Somesh Jha. 2017. Objective Metrics andGradient Descent Algorithms for Adversarial Examples in MachineLearning. In Proceedings of December 4–8, 2017, San Juan, PR, USA(ACSAC 2017,). ACM, New York, NY, USA, 15 pages. https://doi.org/https://doi.org/10.1145/3134600.3134635

1 INTRODUCTIONMassive amounts of data are currently being generated in

domains such as health, finance, and computational science.

Fueled by access to data,machine learning (ML) algorithms are

also being used in these domains, for providing predictions

of lifestyle choices [7], medical diagnoses [17], facial recog-

nition [1], and more. However, many of these models that

are produced by ML algorithms are being used in domains

where security is a big concern – such as, automotive sys-

tems [26], finance [20], health-care [2], computer vision [21],

speech recognition [14], natural-language processing [29], and

cyber-security [8, 31]. Of particular concern is use of ML in

∗Work was done while at University of Wisconsin

Permission to make digital or hard copies of part or all of this work for personal

or classroom use is granted without fee provided that copies are not made or

distributed for profit or commercial advantage and that copies bear this notice

and the full citation on the first page. Copyrights for third-party components of

this work must be honored. For all other uses, contact the owner/author(s).

ACSAC 2017„ 2017© 2017 Copyright held by the owner/author(s).

ACM ISBN 978-1-4503-5345-8/17/12. . . $15.00

https://doi.org/https://doi.org/10.1145/3134600.3134635

Figure 1: To humans, these two images appear to be thesame. The image on the left is an ordinary image ofa stop sign. The image on the right was produced byadding a small, precise perturbation that forces a par-ticular image-classification DNN to classify it as a yieldsign.

cyberphysical systems, where the presence of an adversary can

cause serious consequences. For example, much of the technol-

ogy behind autonomous and driver-less vehicle development

is driven by machine learning [3, 4, 10]. Deep Neural Networks(DNNs) have also been used in airborne collision avoidance

systems for unmanned aircraft (ACAS Xu) [18]. However, indesigning and deploying these algorithms in critical cyberphysi-cal systems, the presence of an active adversary is often ignored.

In this paper, we focus on attacks on outputs or models that

are produced by machine-learning algorithms that occur aftertraining or “external attacks”, which are especially relevant

to cyberphysical systems (e.g., for a driver-less car the ML-

algorithm used for navigation has been already trained once

the “car is on the road”). These attacks are more realistic, and

are distinct from “insider attacks”, such as attacks that poison

the training data (see the paper [15] for a survey such attacks).

Specifically, we focus on attacks caused by adversarial exam-ples, which are inputs crafted by adding small, often impercep-

tible, perturbations to force a trained ML model to misclassify.

As a concrete example, consider a ML algorithm that is used

to recognize street signs in a driver-less car, which takes the

images, such as the one depicted in Figure 1, as input. While

these two images may appear to be the same to humans, the

image on the left [32] is an ordinary image of a stop sign while

the right image was produced by adding a small, precisely

crafted perturbation that forces a particular image-classifier to

classify it as a yield sign. Here, the adversary could potentially

use the altered image to cause the car to behave dangerously,

if the car did not have additional fail-safes such as GPS-based

maps of known stop-sign locations. As driver-less cars become

more common, these attacks are of a grave concern.

Our paper makes contributions along two dimensions: a

new algorithm for finding adversarial samples and better met-

rics for evaluating quality of adversarial samples. We summa-

rize these contributions below.

Algorithms: Several algorithms for generating adversarial

samples have been explored in the literature. Having a diverse

suite of these algorithms is essential for understanding the

nature of adversarial examples and also for systematically

evaluating the robustness of defenses. The second point is

underscored quite well in the following recent paper [6]. In-

tuitively, diverse algorithms for finding adversarial examples,

exploit different limitations of a classifier and thus stress the

classifier in a different manner. In this paper, we present a

simple gradient-descent based algorithm for finding adversar-

ial samples. Our algorithm is described in section 3 and an

enhancement to our algorithm appears in the appendix. Even

though our example is quite simple (although we discuss some

enhancements to the basic algorithm), it performs well in com-

parison to existing algorithms. Our algorithm, NewtonFool,

successfully finds small adversarial perturbations for all test

images we use, but also it does so by significantly reducing the

confidence probability. Detailed experimental results appear

in section 4.

Metrics: The second issue that this paper tackles is the

issue of metrics. Let us recall the adversary’s goal: given an

image I and a classifier F , the adversary wishes to find a "small"

perturbation δ , such that F (I ) and F (I + δ ) are different but Iand I + δ “look the same” to a human observer (for targeted

misclassification the label F (I + δ ) should match the label that

an adversary desires). The question is– how does one formal-

ize "small" perturbation that is not perceptible to a human

observer? Several papers have quantified "small perturbation"

by using the number of pixels changed or the difference in

the L2 norm between I and I + δ . On the other hand, in the

computer-vision community several algorithms have been

developed for tasks that humans perform quite easily, such

as edge detection and segmentation. Our goal is to leverage

these computer-vision algorithms to develop better metrics for

to address the question given before. As a first step towards

this challenging problem, we use three algorithms from the

computer-vision literature (which is described in our back-

ground section 2) for this purpose, but recognize that this is

a first step. Leveraging other computer-vision algorithms to

develop even better metrics is left as future work.

Related work: Algorithms for generating adversarial ex-

amples is a very active area of research, and we will not pro-

vide a survey of all the algorithms. Three important related

algorithms are described in the background section 2. How-

ever, interesting algorithms and observations about adversar-

ial perturbations are constantly being discovered. For exam-

ple, Kurakin, Goodfellow, and Bengio [22] show that even in

physical-world scenarios (such as deployment of cyberphysical

systems), machine-learning systems are vulnerable to adver-

sarial examples. They demonstrate this by feeding adversarial

images obtained from cell-phone camera to an ImageNet In-

ception classifier and measuring the classification accuracy of

the system and discover that a large fraction of adversarial ex-

amples are classified incorrectly even when perceived through

the camera. Moosavi-Dezfooli et al. [24] propose a systematic

algorithm for computing universal perturbations1In general,

the area of analyzing of robustness of machine-learning al-

gorithms is becoming a very important and several research

communities have started working on related problems. For

example, the automated-verification community has started

developing verification techniques targeted for DNNs [16, 19].

2 BACKGROUNDThis section describes the requisite background. We need

Moore-Penrose pseudo-inverse of a matrix for our algorithm,

which is described in section 2.1. Three techniques that are

used in our metrics are described in the next three sub-sections.

Formulation of the problem is discussed in section 2.5. Some

existing algorithms for crafting adversarial examples are dis-

cussed in section 2.6. We conclude this section with a discus-

sion section 2.7.

2.1 Moore-Penrose Pseudo-inverseGiven a n×m matrixA, a matrixA+ is called it Moore-Penrose

pseudo-inverse if it satisfies the following four conditions (AT

denotes the transpose of A):(1) AA+A = A(2) A+AA+ = A+

(3) A+A = (A+A)T

(4) AA+ = (AA+)T

For any matrix A, a Moore-Penrose pseudo-inverse exists and

is unique [11]. Given an equation Ax = b, x0 = A+b is thebest approximate solution to Ax = b (i.e., for any vector xsatisfying the equation, ∥ Ax0 − b ∥ is less than or equal to

∥ Ax−b ∥).

2.2 Edge DetectorsGiven an image, edges are defined as the pixels whose value

changes drastically from the values of its neighbor. The con-

cept of edges has been used as a fundamental local feature in

many computer-vision applications. The Canny edge detector(CED) [5] is a popular method used to detect edges, and is de-

signed to satisfy the following desirable performance criteria:

high true positive, low false positive, the distance between a

detected edge and a real edge is small, and there is no duplicate

detection of a single edge. In this section we will describe CED.

Preprocess – noise reduction.Most images contain random

noise that can cause errors in edge detection. Therefore, filter-

ing out noise is an essential preprocessing step to get stable

results. Applying convolution with a Gaussian kernel, also

know as Gaussian blur, is a common choice to smooth a given

image. For any n ∈ N, (2n + 1) × (2n + 1) Gaussian kernel is

defined as follows.

K (x ,y) =1

2πσ 2e−x2+y2

2σ 2where − n ≤ x ,y ≤ n

The denoising is dependent on the choice of n and σ .Computing the gradient. After the noise-reduction step,

CED computes the intensity gradients, by convolving with

1A single vector, which when added to an image causes its label to change.

Sobel filters. For a given image I ,the following shows an ex-

ample convolutions of 3 × 3 Sobel filters, which has been used

in our experiments (in the equations given below ∗ represents

convolution).

Gx =

−1 0 1

−2 0 2

−1 0 1

∗ I

Gy =

−1 −2 −1

0 0 0

1 2 1

∗ I

Gx and Gy encodes the variation of intensity along x-axisandy-axis respectively, therefore we can compute the gradient

magnitude and direction of each pixel using the following

formula.

G =√G2

x +G2

y

θ = tan−1

(Gy

Gx

)The angle θ is rounded to angles corresponding horizontal

(0◦), vertical (90

◦), and diagonal directions (45

◦,135◦).

Non-maximum suppression. The intensity gradient gives

us enough information about the changes of the values over

pixels, so edges can be computed by thresholding the intensity

magnitude. However, as we prefer thin and clear boundary

with no duplicated detection, Canny edge detector performs

edge thinning, which is done by suppressing each gradient

magnitude to zero unless it achieves the local maxima along

the gradient direction. Specifically, for each pixel point, among

its eight neighboring pixels, Canny edge detector chooses

two neighbors to compare according to the rounded gradient

direction θ .

• If θ = 0◦, choose the neighboring pixels at the east and

west

• If θ = 45◦, choose the neighboring pixels at the north

east and south west

• If θ = 90◦, choose the neighboring pixels at the north

and south

• If θ = 135◦, choose the neighboring pixels at the north

west and south east

These choices of pixels correspond to the direction perpen-

dicular to the possible edge, and the Canny edge detector tries

to detect a single edge achieving the most drastic change of

pixel intensity along that direction. Therefore, it compares the

magnitude of the pixel to its two neighbors along the gradient

direction, and set the magnitude to be 0 when it is smaller

than any magnitudes of its two neighbors.

Thresholding with hysteresis. Finally, the Canny edge de-

tector thresholds gradients using hysteresis thresholding. In

hysteresis thresholding, we first determine a strong edge (pixel

with gradient bigger than θhiдh ) and a weak edge (pixel with

gradient between θlow and θhiдh ), while suppressing all non-

edges (pixels with gradient smaller than θlow ). Then, theCanny edge detector checks the validity of each weak edge,

based on its neighborhood.Weak edges with at least one strong

edge neighbor will be detected as valid edges, while all the

other weak edges will be suppressed.

The performance of Canny edge detector depends highly

on the threshold parameters θlow and θhiдh , and those param-

eters should be adjusted according to the properties of input

image. There are various heuristics to determine the thresh-

olds, and we use the following heuristics in our experiments

• MNIST: While statistics over pixel values (e.g mean,

median) are usually used to determine thresholds, pixel

values in MNIST images are mostly 0, making such sta-

tistics unavailable. In this work, we empirically searched

the proper values for thresholds, sufficiently high to be

able to ignore small noise, and finally used θlow = 300

and θhiдh = 2 · θlow .

• GTSRB:When distribution of pixel value varies, usu-

ally statistics over pixel value are used to adjust thresh-

olds, because pixel gradient depends on overall bright-

ness and contrast of image. Since those image properties

varies in GTSRB images, we put thresholds as follows.

θlow = (1 − 0.33)µ

θhiдh = (1 + 0.33)µ

where µ is the mean of the values on pixels.

2.3 Fourier TransformIn signal processing, spectral analysis is a technique that trans-

forms signals into functions with respect to frequency, rather

than directly analyzing the signal on temporal or spatial do-

main. There are various mathematical operators (transforms)

converting signals into spectra, and Fourier transform is one

of the most popular operator among them. In this section we

mainly discuss two dimensional Fourier transform as it is an

operation on spatial domain where an image lies in and is

commonly applied to image analysis.

Considering an image as a function f of intensity on two

dimensional spatial domain, the Fourier transform F is written

in the following form.

F (u,v ) =

∫ ∞

−∞

∫ ∞

−∞

f (x ,y)e−2π i (ux+vy )dxdy

While this definition is written for continuous spatial do-

main, values in an image are sampled for a finite number of

pixels. Therefore, for computational purposes, the correspond-

ing transform on discrete two dimensional domain, or discrete

Fourier transform, is used in most applications. For a function

f of on a discrete grid of pixels, the discrete Fourier transform

maps it to another function F on frequency domain as follows.

F (k,l ) =M−1∑x=0

N−1∑y=0

f (x ,y) exp

[−2πi

(kx

M+ly

N

)]While naive computation of this formula requires quadratic

time complexity, there are several efficient algorithms comput-

ing discrete Fourier transformwith time complexityO (n logn),called Fast Fourier Transform (FFT), and we use the two dimen-

sional FFT in our analysis of perturbations.

Fourier transform on two dimensional spatial domain pro-

vides us spectra on two dimensional frequency (or spatial fre-

quency) domain. These spectra describe the periodic structures

across positions in space, providing us valuable information

of features of an image. Specifically, low spatial frequency cor-

responds to the rough shape structure of the image, whereas

spectrum on high spatial frequency part conveys detailed fea-

ture, such as sharp change of illumination and edges.

2.4 Histogram of Oriented GradientsThe histogram of oriented gradients (HOG) [9] is a feature

descriptor, widely used for object detection. Simply, HOG de-

scriptor is a concatenation of a number of locally normalized

histograms. Each histogram contains local information about

the intensity gradient directions, each local set of neighboring

histograms is normalized to improve the overall accuracy. By

concatenating those histogram vectors, HOG outputs a more

compact description of the shape. For object detection, a ma-

chine learning algorithm is trained over HOG descriptors of

training set images, to classify if any part of an input image

has HOG descriptor should be labeled as “detected”

Computing the gradient. Similarly to the edge detector in

2.2, HOG starts from computing gradient for each pixel. While

3×3 Sobel filters are used in Canny edge detector, HOG applies

the following simpler one dimensional filters to the input

image.

Gx =[−1 0 1

]∗ I

Gy =

−1

0

1

∗ I

Using the same formula used for Canny edge detector, HOG

computes the gradient magnitude G and direction θ of each

pixel. However, HOG does not round the angle θ , as it givesan important information for the next step.

Histogram construction. To construct histograms to be con-

catenated, HOG first divides the input image into smaller cells,consisting of pixels (e.g. 8 × 8 pixels) then compute a his-

togram for each cell. Each histogram has several bins and

each bin is assigned an angle. In [9], 9 bins corresponding to

0◦,20◦, . . . ,160◦ are used in each histogram.

Histograms are computed by the weighted vote for each

pixels in a cell by voting the weighted magnitude to one or two

bins. The weights are determined by the gradient direction θ ,based on how close the angle is from its closest two bins.

Block normalization. Since the magnitude of gradients is

highly dependent to the local illumination and contrast, each

histogram should be locally normalized. Block, consisting ofseveral number of cells (e.g. 2× 2 cells), is the region that HOG

applies the normalization to the histograms of the contained

cells. While there are various way to normalize a single block,

Dalal et.al.[9] reported that the performance is seldom influ-

enced by the choice of normalization method, except for the

case of using L1 normalization.

The resulting feature vector is dependent on the parameters

introduced above: the number of pixels per cell, the number

of cells per block, and the number of histogram bins. In our

experiments, we use the following values (which are also used

in [9]: 8 × 8 pixels per cell, 2 × 2 cells per block, and 9 bins

for each histogram. For normalization method, we use L2normalization.

2.5 FormulationThe adversarial goal is to take any input vector x ∈ ℜn

(vec-

tors will be denoted in boldface) and produce a minimally al-

tered version of x, adversarial sample denoted by x⋆, that hasthe property of being misclassified by a classifier F : ℜn → C.

For our proposes, a classifier is a function fromℜnto C, where

C is the set of class labels. Formally, speaking an adversary

wishes to solve the following optimization problem:

min∆∈ℜn µ (∆)such that F (x+∆) ∈ C

∆ ·M = 0

The various terms in the formulation are µ is a metric on

ℜn,C ⊆ C is a subset of the labels, andM (called the mask) is

a n-dimensional 0 − 1 vector of size n. The objective functionminimizes themetric µ on the perturbation∆. Next we describevarious constrains in the formulation.

• F (x+∆) ∈ CThe set C constrains the perturbed vector x+∆ 2

to

have the label (according to F ) in the set C . For mis-classification problems (the label of x and x+∆) aredifferent we have C = C −{F (x)}. For targeted mis-classification we have C = {l } (for l ∈ C), where l is thetarget that an attacker wants (e.g., the attacker wants lto correspond to a yield sign).

• ∆ ·M = 0

The vector M can be considered as a mask (i.e., an at-

tacker can only perturb a dimension i if M[i] = 0),

i.e., if M[i] = 1 then ∆[i] is forced to be 0.3Essen-

tially the attacker can only perturb dimension i if thei-th component of M is 0, which means that δ lies in

k-dimensional space where k is the number of non-zero

entries in ∆.• ConvexityNotice that even if the metric µ is convex (e.g., µ is

the l2 norm), because of the constraint involving Fthe optimization problem is not convex (the constraint∆ ·M = 0 is convex). In general, solving convex opti-

mization problems is more tractable non-convex opti-

mization [25].

Generally, machine-learning algorithms use a loss func-

tion ℓ(F ,x,y) to penalize predictions that are “far away” from

the true label tl (x) of x. For example, if we use 0 − 1 loss

function, then ℓ(F ,x,y) = δ (F (x),y), where δ (z,y) is equalto 1 iff z = y (i.e., if z , y, then δ (z,y) = 0). For nota-

tional convenience, we will write LF (·) for ℓ(F , ·, ·), whereLF (x) = ℓ(F ,x,tl (x)). Some classifiers F (x) are of the formargmaxl Fs (x) (i.e., the classifier F outputs the label with the

maximum probability). For example, in a deep-neural network

(DNN) that has a softmax layer, the output of the softmax layer

2The vectors are added component wise

3the i-the component of a vectorM is written asM[i].

is a probability distribution over class labels (i.e., the probabil-

ity of a label y intuitively means the belief that the classifier

has in the example has label y). Throughout the paper, we

sometimes refer to the function Fs as the softmax layer. In

these case, we will consider the probability distribution cor-

responding to a classifier. Formally, let c = | C | and F be a

classifier, we let Fs be the function that maps Rn to Rc suchthat ∥Fs (x)∥1 = 1 for any x (i.e., Fs computes a probability

vector). We denote F ls (x) to be the probability of Fs (x) at labell .

2.6 Some Existing AlgorithmsIn this section, we describe few existing algorithms. This sec-

tion is not meant to be complete, but simply to give a “flavor”

of the algorithms to facilitate the discussion.

Goodfellow et al. attack - This algorithm is also known as

the fast gradient sign method (FGSM) [13]. The adversary craftsan adversarial sample x⋆ = x+∆ for a given legitimate sample

x by computing the following perturbation:

∆ = ε sign(∇LF (x)) (1)

The gradient of the function LF is computed with respect

to x using sample x and label y = tl (x) as inputs. Note that∇LF (x)) is an n-dimensional vector and sign(∇LF (x)) is an-dimensional vector whose i-th element is the sign of the

∇LF (x))[i]. The value of the input variation parameter ε fac-toring the sign matrix controls the perturbation’s amplitude.

Increasing its value, increases the likelihood of x⋆ being mis-

classified by the classifier F but on the contrary makes adver-

sarial samples easier to detect by humans.

Papernot et al. attack - This algorithm is suitable for tar-

geted misclassification [28]. We refer to this attack as JSMA

throughout the rest of the paper. To craft the perturbation

∆, components are sorted by decreasing adversarial saliency

value. The adversarial saliency value S (x,t )[i] of component ifor an adversarial target class t is defined as:

S (x,t )[i] =

0 if∂Ft∂ x[i] (x) < 0 or

∑j,t

∂Fj∂ x[i] (x) > 0

∂Ft∂ x[i] (x)

����∑j,t

∂Fj∂ x[i] (x)

���� otherwise(2)

where matrix JF =[

∂Fj∂ x[i]

]

i jis the Jacobian matrix for Fs .

Input components i are added to perturbation ∆ in order of

decreasing adversarial saliency value S (x,t )[i] until the result-ing adversarial sample x⋆ = x+∆ achieves the target label t .The perturbation introduced for each selected input compo-

nent can vary. Greater individual variations tend to reduce the

number of components perturbed to achieve misclassification.

Deepfool. The recent work of Moosavi-Dezfooli et al. [23]

try to achieve simultaneously the advantages of the methods

in [33] and [12]: Being able to find small directions (compet-

itive with [33]), and being fast (competitive with [12]). The

result is the DeepFool algorithm, which is an iterative algo-

rithm that, starting at x0, constructs x1,x2, . . . . Suppose weare at xi , the algorithm first linearly approximates Fks for each

k ∈ C at xi :

Fks (x) ≈ Fks (xi ) + ∇Fks (xi ) · (x− xi )

With these approximations, thus at xi we want to find the

direction d such that for some l ′ , l

F l′

s (xi ) + ∇F l′

s (xi ) · d > F ls (xi ) + ∇Fls (xi ) · d

In other words, at iteration i let Pi be the polyhedron that

Pi =⋂k ∈C

{d : Fks (xi ) + ∇F

ks (xi ) · d

≤ F ls (xi ) + ∇Fls (xi ) · d

}

Therefore the d we are seeking for is dist(xi ,Pci ), the dis-tance of xi to the complement of Pi is the smallest. Solving this

problem exactly and iterating until a different label is obtained,results in the DeepFool algorithm.

2.7 DiscussionSeveral algorithms for crafting adversarial samples have ap-

peared in the literature. These algorithms differ in three di-

mensions: the choice of the metric µ, the set of target labelsC ⊆ L, and the maskM . None of these methods use a maskM ,

so we do not include it in the figure. We describe few attacks

according to these dimensions in Figure 2 (please refer to the

formulation of the problem at the beginning of the section).

3 OUR ALGORITHMWe now devise new algorithms for crafting adversarial pertur-

bations. Our starting point is similar to Deepfool, and assumes

that the classifier F (x) is of the form argmaxl Fs (x) and the

softmax output Fs is available to the attacker. Suppose that

F (x0) = l ∈ C, then F ls (x0) is the largest probability in Fs (x0).Note that F ls is a scalar function. Our method is to find a small

d such that F ls (x0 + d) ≈ 0. We are implicitly assuming that

there is a point x′, nearby x, that F “strongly believes” x′ doesnot belong to class l (as the belief probability is “close to 0”),

while it believes that x does. While our assumption is “aggres-

sive”, it turns out in our experiments this assumption works

quite well in practice.

More specifically, with the above discussion, we now want

to decrease the value of the function F ls as fast as possible to

0. Therefore, the problem is to solve the equation F ls (x) = 0

starting with x0. The above condition can be easily general-

ized to the condition if the probability of label l goes below

a specified threshold (i.e. F ls (x) < ϵ). We solve this problem

based on Newton’s method for solving nonlinear equations.

Specifically, for i = 0,1,2, . . . , suppose at step i we are at xi ,we first approximate F ls at xi using a linear function

F ls (x) ≈ F ls (xi ) + ∇Fls (xi ) · (x− xi ). (3)

Let di = x− xi be the perturbation to introduce at iteration

i , and pi = F ls (xi ) be the current belief probability of xi asin class l . Assuming that we have not found the adversarial

example, pi must assume the largest value at the softmax layer,

thus in particular pi ≥ 1/| C |. Finally, denote gi = ∇Fls (xi ) be

the gradient of F ls at xi . Then our goal is to solve the following

linear system for di :

pi + gi · di = pi+1 (4)

Method C Short Description

FGSM C −{F (x)} Adds a perturbation which is proportional to the sign of the

gradient of the loss function at the image x.Papernot et al. {l } Constructs a saliency matrix S (x,t ), where x is the image

JSMA t is the target label. In each iteration, change the pixel according to the saliency matrix.

Deepfool C −{F (x)} In each iteration, tries to push the probabilities of

other labels l ′ higher than the current label l .

Figure 2: The second column indicates whether the method is suited for targeted misclassification. The last columngives a short description of the method.

where pi+1 < pi is some appropriately chosen probability

value we want to achieve in the next iteration. Denote δi =pi − pi+1 be the decrease of probability. Now we are ready to

describe the basic version of our new algorithm called New-tonFool.

Basic Version of NewtonFool. We start with a simple ob-

servation: Given pi+1, the minimal-norm solution to (4) is

d∗i = g†i (pi+1 − pi ) = −δi g†i where g

†i is the Moore-Penrose

inverse of gi (see the background section for a description of

Moore-Penrose inverse). For rank-1 matrix gi , g†i is precisely

gi /∥ gi ∥2, and so

d∗i = −δi gi∥ gi ∥2

(5)

Therefore the question left is how to pick pi+1. We make two

observations: First, it suffices that pi+1 < 1/| C | in order to

“fool” the classifier into a different class. Therefore we have an

upper bound of δi , namely

δi < pi − 1/| C |.

Second, note that we want small perturbation, which means

that ∥ d∗i ∥ is small. This can be formalized as ∥ d∗i ∥ ≤ η∥ x0 ∥for some parameter η ∈ (0,1). Since ∥ d∗i ∥ = δi/∥ gi ∥, thisthus translates to

δi ≤ η∥ x0 ∥∥ gi ∥

Combining the two conditions above we thus have

δi ≤ min

{η∥ x0 ∥∥ gi ∥,pi −

1

| C |

}We can thus pick

δ∗i = min{η∥ x0 ∥∥ gi ∥,pi − 1/| C |}. (6)

Plugging this back into (5), we thus get direction

d∗i = −δ∗i gi /∥ gi ∥

2 .

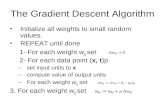

This gives the algorithm shown in 1.

Astute readers may realize that this is nothing but gradient

descent with step size δ∗i /∥∇Fls (xi )∥2. However, there is an

important difference: The step size of a typical gradient descent

procedure is tuned on a complete heuristic basis. However,

in our case, exploiting the structure of softmax layer and our

assumption on the vulnerability of the neural network, the

step size is determined once the intuitively sensible parameter

η is fixed (which controls how small the perturbation we want).

Note also that the step size changes over time according to

Algorithm 1

Input: x0,η, maxIter , a neural network F with a softmax

layer Fs .1: function NewtonFoolMinNorm(x0,η,maxIter ,F )2: l ← F (x0)3: d← 04: for i = 0,1,2, . . . ,maxIter − 1 do5: δ∗i ← min

{η∥ x0 ∥∥∇F ls (xi )∥,F ls (xi ) −

1

| C |

}

6: d∗i ← −δ ∗i ∇F

ls (xi )

∥∇F ls (xi ) ∥2

7: xi+1 ← xi + d∗i8: d← d+ d∗i9: return d

(6): If initially pi − 1/| C | is too large, the step size is then

determined by gi , the gradient vector we obtained on the

current image xi . Otherwise, as pi becomes closer and closer

to 1/| C |, we will instead adjust the step size according to

pi − 1/| C |.

3.1 EnhancementsOur basic algorithm attempts to drive down the probability

of the label l with the maximum probability according Fs . We

have extended this algorithm to consider multiple labels, but

we have not implemented this enhancement. Our enhance-

ment tries to “drive down” the probability of all labels in a

set L+ and “drive up” the probability of all labels in the set

L− (presumably an adversary wants an adversarial example

whose label is in L−). Our enhanced algorithm is described in

the appendix A.

3.2 Possible Benefits in Convergence withNewton’s Method

We now give some heuristic arguments regarding the benefits

of using Newton’s method. The main possible benefit is faster

convergence to an adversarial example. At the high level, the

main reason under the hood is actually that Newton’s method

is locally quadratically convergent to a zero x∗ when solving a

non-linear system of equations [30].

To start with, let us first consider an extreme case where,

actually, the convergence of Newton’s method does not apply

directly. Suppose that in a reasonably small neighborhood

N (x ,δ ) (under some norm), we have x∗ so that F ls (x∗) = 0.

That is, x∗ is a perfect adversarial example where we believe

that it is not label l with probability 1. Note that in this case x∗

is also a local minimal of F ls because Fls is non-negative. In this

case, the gradient of F ls is however singular (0), so we cannot

apply the convergence result of Newton’s method to conclude

fast convergence to x∗, to give that F ls (x∗) = 0. However, a

crucial point now is that while we will not converge to x∗

exactly, at some point in this iterative process, F ls (xk ) will besmall enough so that the network will no longer predict xk as

label l (which is the correct label), and thus gives an adversarialexample. If we let k∗ be the first iterate such that xk∗ is anadversarial example, our goal is thus to argue fast convergence

to xk∗ (where the gradient exists but not singular).

Suppose now that F ls (xk∗ ) = p > 0, then if we instead solve

that F ls (x ) −p = 0, then the zero point (for example xk∗ ) has anon-singular gradient, and so the convergence result of New-

ton’s method applies and says that we will converge quadrati-

cally to xk∗ . But this is exactly what NewtonFool algorithm

is doing, modulo picking p: Starting with the hypothesis that

nearby we have a adversarial point with very low confidence

in l , we pick a heuristic p, and apply Newton’s method to solve

the equation so that the confidence hopefully decreases to p –

If our guess is somewhat accurate, then we will quickly con-

verge to an adversarial point. Of course, the crucial problem

left is to pick the p – which we give some sufficient condition

(such as 1/| C |) to get guarantees.

4 EXPERIMENTSIn this section we present an empirical study comparing our

algorithms with previous algorithms in producing adversarial

examples. Our goals are to examine the tradeoff achieved

by these algorithms in their (1) effectiveness in producing

“indistinguishable” adversarial perturbations, and (2) efficiency

in producing respective perturbations. More specifically, the

following are three main questions we aim to answer:

(1) How effective is NewtonFool in decreasing the confidenceprobability at the softmax layer for the correct label inorder to produce an adversarial example?

(2) How is quality of the adversarial examples produced byNewtonFool compared with previous algorithms?

(3) How efficient is NewtonFool compared with previous al-gorithms?

In summary, our findings are the following:

• (1)+(3) NewtonFool achieves a better effectiveness-efficiencytradeoff than previous algorithms. On one hand, New-

tonFool is significantly faster than either DeepFool (up

to 49X faster) or JSMA (up to 800X faster), and is only

moderately slower than FGSM. This is not surprising

since NewtonFool does not need to examine all classes

for complex classification networks with many classes.

On the other hand, under the objective metric of Cannyedge detection, NewtonFool produces competitive and

sometimes better adversarial examples when compared

with DeepFool, and both of them produce typically sig-

nificant better adversarial examples than JSMA and

FGSM.

• (2) NewtonFool is not only effective in producing goodadversarial perturbations, but also significantly reduces

the confidence probability of the correct class. Specifically,in our experiments not only NewtonFool successfully

finds small adversarial perturbations for all test images

we use, but also it does so by significantly reducing the

confidence probability, typically from 0.8-0.9 to 0.2-0.4.

While previous work have also shown that confidence

probability can be significantly reduced with small ad-

versarial perturbations, it is somewhat surprising that

a gradient descent procedure, constructed under an ag-gressive assumption on the vulnerabilities of deep neuralnetworks against adversarial examples, can achieve sim-

ilar effects in such a uniform manner.

Two remarks are in order. First, we stress that, different

from DeepFool and JSMA where these two algorithms are

“best-effort” in the sense that they leverage first-order informa-

tion from all classes in a classification network to construct

adversarial examples, NewtonFool exploits an aggressive as-

sumption that, “nearby” the original data point, there is an-

other point where the confidence probability in the “correct

class” is significantly lower. The exploitation of this assump-

tion is similar to the structural assumption made by FGSM, yet

NewtonFool gives significantly better adversarial examples

than FGSM. Second, note that typically the training of a neuralnetwork is gradient descent on the hypothesis space (i.e., pa-

rameters of the network) with features (training data points)

fixed. In this case, NewtonFool is “dual” in the sense that it is

a gradient descent on the feature space (i.e., training features)

with network parameters fixed. As a result of the above two

points, we believe that the success of NewtonFool in produc-

ing adversarial examples gives a more explicit demonstration

of vulnerabilities of deep neural networks against adversarial

examples than previous algorithms.

In the rest of this section we give more details of our ex-

periments. We begin by presenting experimental setup in Sec-

tion 4.1. Then in Section 4.2 we provide experimental results

on the effectiveness of NewtonFool. Finally in Section 4.3 and

Section 4.4 we report perturbation quality and efficiency of

NewtonFool compared with previous algorithms.

4.1 Experimental SetupThe starting point of our experiments is the cleverhans projectby Papernot et al. [27], which implements FGSM, JSMA and a

convolutional neural network (CNN) architecture for study-

ing adversarial examples. We reuse their implementations

of FGSM and JSMA, and the network architecture in our ex-

periments to ensure fair comparisons. Our algorithms are

implemented as extensions of cleverhans4 using Keras andTensorFlow. We also ported the publicly available implemen-

tation of DeepFool [23] to cleverhans. All the experiments

are done on a system with Intel Xeon E5-2680 2.50GHz CPUs

(48-core), 64GB RAM, and CUDA acceleration using NVIDIA

Tesla K40c.

Datasets. In our experiments we considered two datasets.

4cleverhans is a software library that provides standardized reference implemen-

tations of adversarial example construction techniques and adversarial training

and can be found at https://github.com/tensorflow/cleverhans.

MNIST. MNIST dataset consists of 60,000 grayscale images of

hand-written digit. There are 50,000 images for training and

10,000 images for testing. Each image is of size 28 × 28 pixels,

and there are ten labels {0, . . . ,9} for classification.

GTSRB. GTSRB dataset consists of colored images of traffic

signs, with 43 different classes. There are in total 39,209 images

for training and 12,630 images for testing. In official GTSRB

dataset, image sizes vary from 15 × 15 to 250 × 250 pixels, and

we use the preprocessed dataset containing all images rescaled

to size 32 × 32.

CNN Architecture. The CNN we used in experiments for

both datasets is the network architecture defined in cleverhans.This networks has three consecutive convolutional layers with

ReLu activation, followed by a fully connected layer outputting

softmax function values for each classes. We train a CNN

classifier achieving 94.14% accuracy for MNIST testset after

6-epoch training, and another CNN classifier achieving 86.12%

accuracy for GTSRB testset after 100-epoch training.

ExperimentalMethodology. Since JSMA andDeepFool take

too long to finish on the entire MNIST and GTSRB datasets, we

use sampling based method for evaluation. More specifically,

for MNIST we run ten independent experiments, where in

each experiment we randomly choose 50 images, for each of

the ten labels in {0, . . . ,9}. We do similar things for GTSRB

except that we choose 20 images at random for each of the

6 chosen classes among 43 possible classes. Those 6 classes

were intentionally chosen to include diverse shapes, different

colors, and varying complexity of embedded figures, of traffic

signs.

With the sampled images, we then attack each CNN classi-

fier using different adversarial perturbation algorithms , and

evaluate the quality of perturbation and efficiency. Specifically:

(1) For NewtonFool, given data input x0 where it is classifiedas l , F ls (x0) − F ls (x0 +d) in order to evaluate the effectiveness

of NewtonFool in reducing confidence probability in class l .(2) To evaluate the perturbation quality, we use three algo-

rithms (Canny edge detector, FFT, and HOG) to evaluate the

quality of the perturbed example.

(3) Finally we record running time of the attacking algorithm

in order to evaluate efficiency.

Tuning ofDifferentAdversarial PerturbationAlgorithms.Finally, we describe how we tune different adversarial pertur-

bation algorithms in order to ensure a fair comparison.

FGSM. To find the optimal value for ϵ minimizing the final

perturbation ∆, we iteratively search from 0 to 10, increasing

by 10−3. We use the first ϵ achieving adversarial perturbation

to generate the adversarial sample. Searching time for the best

ϵ value is also counted towards the running time.

JSMA. Since target label l must be specified in JSMA, we try

all possible targets, and then choose the best result that the

adversarial example achieves the minimum number of pixel

changes. For each trial, we use fixed parameters ϒ = 14.5%,

θ = 1, which are valued used in [28]. We always increase the

pixel values for perturbations. For all target labels, attack times

are counted to the running time.

DeepFool. We fix η = 0.02, which is the value used in [23] to

adjust perturbations in each step.

NewtonFool. We fix η = 0.01, which is the parameter used

to determine the step size δi/∥∇Fls (xi )∥2 in gradient descent

steps.

4.2 Effectiveness of NewtonFoolThis section reports results in evaluating the effectiveness

of NewtonFool. We do so by measuring (1) success rate of

generating adversarial examples, and (2) changes in CNN clas-

sifier’s confidence in its classification. Table 1 summarizes

results for both. Specifically, column 5 and 6 gives the total

number of images we use to test and the total number of

successful attacks. In our experiments, NewtonFool achieves

perfect success rate. Column 4 gives the success probability

if ones makes a uniformly random guess, which is the value

NewtonFool algorithm aims to achieve in theory. Column 2

and 3 gives results on the reduction of confidence before and

after attacks, respectively, for both MNIST and GTSRB. We see

that in practice while the confidence we end at is larger than

1/| C |, NewtonFool still significantly reduce the confidence

at the softmax layer, typically from “almost sure” (0.8-0.9) to

“not sure” (0.2-0.4), while succeeding to produce adversarial

perturbations.

4.3 Quality of Adversarial PerturbationNow we evaluate the quality of perturbations using an ob-jective metric: Recall that an objective metric measures the

quality of perturbation independent of the optimization objec-

tive, and thus serves a better role in evaluating to what degree

a perturbation is “indistinguishable.”. Specifically, in our exper-

iments we use the classic techniques of computer vision, as the

objective metrics: Canny edge detection, fast Fourier transform,

and histogram of oriented gradients.Given an input image, Canny edge detection finds a wide

range of edges in an image, which is a critical task in computer

vision. We use Canny edge detection in the following way: We

count the number of edges detected by the detector and use

that as a metric for evaluation. Intuitively, an indistinguish-

able perturbation should maintain the number of edges after

perturbation very close to the original image. Therefore, by

measuring how close this count is to the count on the original

image, we have a metric on the quality of the perturbation:

Smaller the metric, better the perturbation. Note that there are

many edge detection methods.We chose Canny edge detection

since it is one of the most popular and reliable edge detection

algorithms.

Discrete Fourier transform maps images to the two dimen-

sional spartial domain, allowing us to analyse the perturba-

tions according to their spectra.When analysing an imagewith

its spectrum of spatial frequencies, higher frequency corre-

sponds to feature details and abrupt change of values, whereas

lower frequency corresponds to global shape information. As

adversarial perturbations does not change the general shape

but corrupt detailed features of the input image, measuring

the size of the high frequency part of the spectrum is desir-

able as the metric for feature corruption. Therefore, we first

Dataset Confidence be-

fore attack

Confidence af-

ter attack1/|C|

Number of attacked

samples

Number of successful

attacks

MNIST 0.926 (0.135) 0.400 (0.065) 0.1 5000 5000

GTSRB 0.896 (0.201) 0.245 (0.102) 0.023 1200 1200

Table 1: Confidence reduction and success rate of NewtonFool. Column 2 and 3 gives results on the reduction ofconfidence before and after attacks. Column 4 gives the success probability if one makes a uniformly random guess,which is the value NewtonFool algorithm aims to achieve in theory. Column 5 and 6 gives the success rate results.

compute the Fourier transform of the perturbation, discard

the values lies in the low frequency part, then measure the

l2 norm difference of the remaining part as a metric: Smaller

metric implies that less feature corruption has been induced

by the adversarial perturbation. For the low frequency part

to be discarded, we chose the intersection of lower halves of

the frequency domain along each dimensions (horizontal and

vertical).

Object detection with HOG descriptor is done by sweep-

ing a large image with a fixed size window, computing the

HOG descriptor of the image restricetd in the window, and

using a machine learning algorithm (e.g. SVM) to classify the

descriptor vector as “detected” and “not detected”. That is, if

a perturbed image has HOG descriptor relatively close to the

HOG descriptor of the original image, then the detecting algo-

rithm is more likely to detect the perturbed image whenever

the original image is detected. From this, we suggest another

objective metric that measures the l2 norm difference between

two HOG descriptor vectors: One computed from the original

image and the other from the perturbed image. The perturba-

tions resulting smaller HOG vector difference are considered

to have better quality.

In summary, in our experiments we find that: (1) Among all

algorithms DeepFool and NewtonFool generally produce the

best results, and typically are significantly better than FGSM

and JSMA. (2) Further, DeepFool and NewtonFool are stableon both datasets and both of them give good results on both

datasets. On the other hand, while FGSM performs relatively

well on MNIST, it gives poor results on GTSRB, and JSMA

performs the opposite: It performs well on GTSRB, but not

on MNIST. (3) Finally, for all tests we have, NewtonFool gives

competitive and sometimes better results than DeepFool. In

the rest of this section we give detailed statistics. Results for

HOG are provided in the appendix B.

Table 2 and Table 3 reports the number of edges we find on

MNIST and GTSRB, respectively. Each column gives the results

on the class represented by the image at the top, and each row

presents the mean and standard deviation of the number of

detected edges. Specifically, the first row gives the statistics

on the original image, and the other rows gives the statistics

on the adversarial examples found by different adversarial

perturbation algorithms.

On MNIST (Table 2), FGSM, DeepFool and NewtonFool

give similar results, where the statistics is very close to the

statistics on the original images. DeepFool and NewtonFoolare

especially close. On the other hand, we note that JSMA pro-

duces significantly more edges compared to other methods.

The result suggests that perturbations from JSMA can be more

perceivable than perturbations produced by other algorithms.

On GTSRB dataset, the situation changes a little. Now FGSM

produces theworst results among all algorithms (instead of JSMA),

and it produces significantly more edges been detected on

its produced adversarial examples. While the quality of ad-

versarial examples produced by JSMA improves significantly,

it is still slightly worse than those produced by DeepFool

and NewtonFool in most cases (except for the last sign). Inter-

estingly, NewtonFool gives the best result for all signs (again,

except the last one).

Table 4 and Table 5 presents the distance on the domain of

high spatial frequency we computed on MNIST and GTSRB,

respectively. Again, each column gives the results on the class

represented by the image at the top, and each row presents the

mean and standard deviation of the hight frequency distances.

For each row, we first generated adversarial perturbations

using the algorithm of the row, computed the fast Fourier

transform of the perturbation, then computed the l2 norm of

the high spatial frequency part.

On both of MNIST dataset and GTSRB dataset, DeepFool

and NewtonFool produce very close results, achieving smaller

values than the other two algorithms. On the other hand, the

statistics on FGSM and JSMA are relatively worse. Especially,

JSMA changes the high frequency part significantly more.

From this result, perturbations from JSMA seem to corrupt

more feature details than other algorithms. Remarkably, New-

tonFool maintains the best result over all experiments on our

Fourier transform metric.

4.4 EfficiencyIn the final part of our experimental study we evaluate the

efficiency of different algorithms. We measure the end-to-end

time to produce adversarial examples. In short, we find that

NewtonFool is substantially faster than either DeepFool (up

to 49X) or JSMA (up to 800X), while being only moderately

slower than FGSM. This is not surprising because NewtonFool

does not need to examine first-order information for all classesof a complex classification network for many classes. However,

it is somewhat surprising that, NewtonFool, a gradient proce-dure exploiting an aggressive assumption on the vulnerability

of CNN, achieves substantially better efficiency while produc-

ing competitive and often times better adversarial examples.

Due to space limitations detailed results are provided in the

appendix B.

Original image 1.71 (0.49) 1.07 (0.35) 1.36 (0.59) 1.17 (0.52) 1.22 (0.53)

FGSM 1.62 (0.54) 1.07 (0.34) 1.37 (0.66) 1.20 (0.55) 1.25 (0.57)

JSMA 2.34 (0.84) 2.39 (1.30) 2.00 (1.01) 1.69 (0.96) 1.67 (0.82)

DeepFool 1.60 (0.53) 1.06 (0.31) 1.34 (0.61) 1.17 (0.53) 1.26 (0.60)

NewtonFool 1.62 (0.54) 1.07 (0.34) 1.34 (0.61) 1.19 (0.55) 1.25 (0.59)

Original image 1.22 (0.52) 1.78 (0.78) 1.08 (0.34) 2.23 (0.90) 1.56 (0.62)

FGSM 1.23 (0.51) 1.76 (0.78) 1.12 (0.38) 2.20 (0.90) 1.57 (0.68)

JSMA 1.97 (1.08) 2.47 (1.21) 1.87 (1.07) 2.95 (1.21) 2.21 (0.97)

DeepFool 1.23 (0.53) 1.75 (0.81) 1.11 (0.38) 2.14 (0.91) 1.53 (0.63)

NewtonFool 1.23 (0.55) 1.75 (0.81) 1.11 (0.38) 2.15 (0.91) 1.54 (0.64)

Table 2: MNIST: Results with Canny edge detection. FGSM, DeepFool and NewtonFool give close results, with New-tonFool being especially close with DeepFool. JSMA, on the other hand, produces significantly larger statistics withrespect to the Canny edge detection metric.

Original image 13.73 (6.65) 17.85 (6.84) 11.69 (6.00) 18.14 (7.41) 10.37 (5.42) 13.66 (6.28)

FGSM 18.88 (7.71) 22.63 (7.76) 19.11 (7.56) 20.97 (8.01) 17.89 (7.92) 20.45 (9.46)

JSMA 15.04 (6.62) 18.54 (6.69) 12.95 (5.86) 19.18 (7.83) 11.20 (5.53) 14.71 (6.24)

DeepFool 14.84 (6.61) 18.82 (7.34) 12.96 (5.67) 18.40 (7.03) 11.16 (5.30) 15.06 (7.13)

NewtonFool 14.68 (6.88) 18.34 (7.11) 12.81 (5.73) 18.37 (7.25) 10.62 (5.20) 15.02 (7.08)

Table 3: GTSRB: Results with Canny edge detection. Now JSMA, DeepFool and NewtonFool give close results, whileFGSM produces significantly worse results. NewtonFool gives the best results across all tests (except for the last sign).

FGSM 20.50 (8.13) 13.01 (3.38) 15.96 (8.56) 13.56 (7.72) 11.95 (6.64)

JSMA 44.19 (7.29) 50.28 (8.37) 44.73 (8.53) 42.00 (9.24) 36.60 (6.52)

DeepFool 10.26 (3.57) 5.14 (1.13) 8.88 (4.33) 6.78 (3.49) 5.98 (2.73)

NewtonFool 9.57 (3.53) 4.62 (1.08) 8.26 (4.11) 6.17 (3.30) 5.39 (2.69)

FGSM 12.57 (6.70) 15.33 (6.07) 15.79 (7.95) 11.99 (6.52) 10.43 (5.11)

JSMA 42.37 (9.04) 48.67 (9.42) 45.07 (8.69) 49.44 (11.69) 44.41 (9.35)

DeepFool 6.39 (3.11) 8.54 (3.17) 7.37 (3.09) 7.42 (3.82) 6.70 (3.01)

NewtonFool 5.95 (2.96) 7.83 (3.01) 6.60 (2.91) 6.76 (3.62) 5.96 (2.80)

Table 4: MNIST: Results with fast Fourier transform. DeepFool and NewtonFool give close results. FGSM and JSMAproduce worse results with JSMA being significantly larger. NewtonFool produces the best results for all labels.

5 LIMITATIONS AND FUTUREWORKWe have not implemented the enhancement to our algorithm

described in the appendix. In the future, we will implement

the enhancement and compare its performance to our basic

algorithm. The computer-vision research literature has sev-

eral algorithms for analyzing images, such as segmentation,

edge detection, and deblurring. In our evaluation we use one

algorithm (i.e., edge detection) as a metric. Incorporating other

computer-vision algorithms into a metric is a very interesting

avenue for futurework. For example let µ1,µ2, · · · ,µk bek met-

rics (e.g., based on number of pixels, edges, and segments). We

could consider a weighted metric (i.e. the difference between

two images x1 and x2 is∑ki=1wi µi (x1,x2)). The question re-

mains – what weights to choose? Perhaps mechanical turk

FGSM 46.86 (36.94) 37.50 (15.97) 44.34 (27.72) 37.81 (27.04) 15.12 (10.47) 50.84 (22.82)

JSMA 54.95 (28.17) 66.81 (18.04) 79.87 (23.89) 72.62 (29.64) 56.14 (15.56) 78.91 (24.02)

DeepFool 9.67 (7.18) 7.33 (2.70) 8.07 (3.96) 7.88 (6.43) 4.38 (1.21) 8.78 (4.41)

NewtonFool 9.02 (7.66) 6.16 (2.54) 6.84 (3.84) 6.93 (6.12) 3.49 (0.86) 7.95 (4.34)

Table 5: GTSRB: Results with fast Fourier transform. Again, DeepFool and NewtonFool give close results, while FGSMand JSMA produce worse results. Across all tests, NewtonFool achieves the best results.

studies can be used to train the appropriate weights. Moreover,

the weighted metric

∑ki=1wi µi (x1,x2) could also be incorpo-

rated into algorithms for constructing adversarial examples.

All these directions are very important avenues for future

work.

6 CONCLUSIONThis paper presented a gradient-descent based algorithm for

finding adversarial examples.We also presented edge detectors

as a way for evaluating the quality adversarial examples gen-

erated by different algorithms. The research area of crafting

adversarial examples is very active. On the other hand, metrics

for evaluating the quality of adversarial examples has not been

well studied. We believe that incorporating computer-vision

algorithms into metrics is worthwhile goal and worthy of fur-

ther research. Moreover, having a diverse set of algorithms

for crafting adversarial examples is very important towards a

thorough evaluation of proposed defenses.

ACKNOWLEDGMENTSWe are grateful to the ACSAC reviewers for their valuable

comments and suggestions. We also thank Adam Hahn for

his patience during the submission process. This material is

based upon work supported by the Army Research Office

(ARO) under contract number W911NF-17-1-0405. Any opin-

ions, findings, conclusions and recommendations expressed in

this material are those of the authors and do not necessarily

reflect the views of the funding agencies.

REFERENCES[1] DeepFace: Closing the Gap to Human-Level Performance in Face Verifica-

tion. In Conference on Computer Vision and Pattern Recognition (CVPR).[2] Babak Alipanahi, Andrew Delong, Matthew T Weirauch, and Brendan J

Frey. 2015. Predicting the sequence specificities of DNA-and RNA-binding

proteins by deep learning. Nature biotechnology (2015).

[3] M. Bojarski, D. Del Testa, D. Dworakowski, B. Firner, B. Flepp, P. Goyal, L.

Jackel, M. Monfort, U. Muller, J. Zhang, X. Zhang, J. Zhao, and K. Zieba.

2016. End to End Learning for Self-Driving Cars. Technical Report.[4] Mariusz Bojarski, Davide Del Testa, Daniel Dworakowski, Bernhard Firner,

Beat Flepp, Prasoon Goyal, Lawrence D. Jackel, Mathew Monfort, Urs

Muller, Jiakai Zhang, Xin Zhang, Jake Zhao, and Karol Zieba. 2016. End

to End Learning for Self-Driving Cars. CoRR abs/1604.07316 (2016). http:

//arxiv.org/abs/1604.07316.

[5] J Canny. 1986. A Computational Approach to Edge Detection. IEEE Trans.Pattern Anal. Mach. Intell. 8, 6 (June 1986), 679–698. https://doi.org/10.

1109/TPAMI.1986.4767851

[6] Nicholas Carlini and David Wagner. 2017. Towards Evaluating the Robust-

ness of Neural Networks. In IEEE Symposium on Security and Privacy.[7] Chih-Lin Chi, W. Nick Street, Jennifer G. Robinson, and Matthew A. Craw-

ford. 2012. Individualized Patient-centered Lifestyle Recommendations:

An Expert System for Communicating Patient Specific Cardiovascular Risk

Information and Prioritizing Lifestyle Options. J. of Biomedical Informatics45, 6 (Dec. 2012), 1164–1174.

[8] George E Dahl, Jack W Stokes, Li Deng, and Dong Yu. 2013. Large-scale

malware classification using random projections and neural networks. In

Proceedings of the IEEE International Conference on Acoustics, Speech andSignal Processing (ICASSP). IEEE, 3422–3426.

[9] Navneet Dalal and Bill Triggs. 2005. Histograms of Oriented Gradients

for Human Detection. In Proceedings of the 2005 IEEE Computer SocietyConference on Computer Vision and Pattern Recognition (CV PR’05) - Volume1 - Volume 01 (CVPR ’05). IEEE Computer Society, Washington, DC, USA,

886–893. https://doi.org/10.1109/CVPR.2005.177

[10] Nathan Eddy. 2016. AI, Machine Learning Drive

Autonomous Vehicle Development. http://www.

informationweek.com/big-data/big-data-analytics/

ai-machine-learning-drive-autonomous-vehicle-development/d/

d-id/1325906. (2016).

[11] Leslie Hogben (Editor). 2013. Handbook of Linear Algebra. Chapman and

Hall/CRC.

[12] Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. 2014. Explaining

and Harnessing Adversarial Examples. CoRR (2014).

[13] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. 2015. Explain-

ing and Harnessing Adversarial Examples. In Proceedings of the 2015 In-ternational Conference on Learning Representations. Computational and

Biological Learning Society.

[14] Geoffrey Hinton, Li Deng, Dong Yu, George E Dahl, Abdel-rahman

Mohamed, Navdeep Jaitly, Andrew Senior, Vincent Vanhoucke, Patrick

Nguyen, Tara N Sainath, et al. 2012. Deep neural networks for acoustic

modeling in speech recognition: The shared views of four research groups.

IEEE Signal Processing Magazine 29, 6 (2012), 82–97.[15] Ling Huang, Anthony D Joseph, Blaine Nelson, Benjamin IP Rubinstein,

and JD Tygar. 2011. Adversarial machine learning. In Proceedings of the4th ACM workshop on Security and artificial intelligence. ACM, 43–58.

[16] X. Huang, M. Kwiatkowska, S. Wang, and M. Wu. 2017. Safety Verification

of Deep Neural Networks.

[17] International Warfarin Pharmacogenetic Consortium. 2009. Estimation of

the Warfarin Dose with Clinical and Pharmacogenetic Data. New EnglandJournal of Medicine 360, 8 (2009), 753–764.

[18] K. Julian, J. Lopez, J. Brush, M. Owen, and M. Kochenderfer. 2016. Policy

Compression for Aircraft Collision Avoidance Systems. In Proc. 35th DigitalAvionics Systems Conf. (DASC).

[19] Guy Katz, Clark Barrett, David Dill, Kyle Julian, and Mykel Kochenderfer.

2017. An Efficient SMT Solver for Verifying Deep Neural Networks.

[20] Eric Knorr. 2015. How PayPal beats the bad guys with machine learn-

ing. http://www.infoworld.com/article/2907877/machine-learning/how-

paypal-reduces-fraud-with-machine-learning.html. (2015).

[21] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. 2012. Imagenet

classification with deep convolutional neural networks. In Advances inneural information processing systems. 1097–1105.

[22] A. Kurakin, I. J. Goodfellow, and S. Bengio. 2016. Adversarial Examples in

the Physical world. (2016).

[23] Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, and Pascal Frossard.

2015. DeepFool: a simple and accuratemethod to fool deep neural networks.

CoRR (2015).

[24] Seyed Mohsen Moosavi Dezfooli, Alhussein Fawzi, Omar Fawzi, and Pascal

Frossard. 2017. Universal adversarial perturbations. In Proceedings of 2017IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[25] Jorge Nocedal and Stephen Wright. 2006. Numerical Optimization.Springer.

[26] NVIDIA. 2015. NVIDIA Tegra Drive PX: Self-Driving Car Computer. (2015).

http://www.nvidia.com/object/drive-px.html

[27] Nicolas Papernot, Ian Goodfellow, Ryan Sheatsley, Reuben Feinman, and

Patrick McDaniel. 2016. cleverhans v1.0.0: an adversarial machine learning

library. arXiv preprint arXiv:1610.00768 (2016).[28] Nicolas Papernot, Patrick McDaniel, Somesh Jha, Matt Fredrikson,

Z. Berkay Celik, and Ananthram Swami. 2016. The Limitations of Deep

Learning in Adversarial Settings. In Proceedings of the 1st IEEE EuropeanSymposium on Security and Privacy. arXiv preprint arXiv:1511.07528.

[29] Jeffrey Pennington, Richard Socher, and Christopher D Manning. 2014.

Glove: Global vectors for word representation. Proceedings of the EmpiricialMethods in Natural Language Processing (EMNLP 2014) 12 (2014), 1532–1543.

[30] Alfio Quarteroni, Riccardo Sacco, and Fausto Saleri. 2000. Numericalmathematics. p.307.

[31] Eui Chul Richard Shin, Dawn Song, and Reza Moazzezi. 2015. Recogniz-

ing functions in binaries with neural networks. In 24th USENIX SecuritySymposium (USENIX Security 15). 611–626.

[32] J. Stallkamp, M. Schlipsing, J. Salmen, and C. Igel. 2012. Man vs. computer:

Benchmarking machine learning algorithms for traffic sign recognition.

Neural Networks 0 (2012), –. https://doi.org/10.1016/j.neunet.2012.02.016[33] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru

Erhan, Ian J. Goodfellow, and Rob Fergus. 2013. Intriguing properties of

neural networks. CoRR abs/1312.6199 (2013).

A EXTENSIONA.1 Problem FormulationIn general, given a point x0, we can consider two disjoint sets

of labels L+ and L− as follows.

• L+ = {l+1, . . . ,l+m } is the set ofm labels achieving high

confidences F ls (x0) of the classifier• L− = {l

−1, . . . ,l−n } is the set of n labels achieving low

confidences F ls (x0) of the classifier

The attacker tries to decrease (resp. increase) all Fs values oflabels in L+ (resp. in L−). Specifically, for a given point x0 anda pair of parameters α , β , the attacker tries to find a sample xnearby x0 such that,

• For all label l in L+, Fls (x) < α .

• For all label l in L−, Fls (x) > β .

Formally, the attack can be formulated with the following

optimization problem.

minimize ∥ d ∥

subject to F ls (x0 + d) < α for all l ∈ L+

F ls (x0 + d) > β for all l ∈ L−

A.2 Generalizing the Solution of 3Again, let di = x− xi be the perturbation to introduce at itera-

tion i , and let p(l )i = F ls (xi ) be the current belief probability of

xi as in class l . Similarly, denote g(l )i = ∇Fls (xi ) be the gradient

of F ls at xi . Finally, denote δ(l )i = p

(l )i − p

(l )i+1 be the decrease

of probability in class l .From equation 4, for each label l ∈ L+∪L−, our perturbation

di should satisfy the following equation,

g(l )i · di = p(l )i+1 − p

(l )i = −δ

(l )i (7)

In other words, di is a solution to a linear system consisting

of (m + n) equations. For notational convenience, we assignnumbers 1,2, . . . ,m + n to each label l ∈ L+ ∪ L−. Then, we

can define a matrix Gi whose jth row is g(j )i , and a vector δi

whose jth element is −δ(j )i , for j = 1,2, . . . ,m + n.

Gi =

*.......,

g(1)ig(2)i...

g(m+n)i

+///////-

δi =

−δ(1)i

−δ(2)i...

−δ(m+n)i

(8)

Now, we have the following linear system determining di .

Gi · di = δi (9)

In usual, g(j )i ’s are gradient vectors in a high dimensional

vector space, and (m + n) is much smaller than the dimension.

Therefore, the linear system (9) is underdetermined. For an un-

derdetermined linear system, ifGi is of full rank and δ(j )i ’s are

fixed for all j , then the min-norm solution d∗i can be computed

as,

d∗i = G†i · δi (10)

where G†i is the Moore-Penrose pseudoinverse of Gi . This

solution d∗i satisfies all constraints of the form (7) for each

label l , and achieves the minimum norm among vectors in the

solution set. In this section, we assume that Gi is always of

full rank, and the solution to the other case will be discussed

at §A.3. As long as Gi is of full rank, G†i can be defined, so

the only remaining task to compute (10) is to determine each

values in δi .

A.2.1 Trivial Constraints on δi . For any lable l ∈ L+ ∪ L−,

its corresponding δ(l )i should satisfy the following condition.

• If l ∈ L+, we want to decrease p(l )i , so δ

(l )i = p

(l )i −

p(l )i+1 ≥ 0

• If l ∈ L−, wewant to increasep(l )i , so δ

(l )i = p

(l )i −p

(l )i+1 ≤

0

Also,

• If l ∈ L+, it suffices to get p(l )i+1 < α , so we get an upper

bound of the decrease δ(l )i ,

δ(l )i ≤ max{0,p

(l )i − α }

• If l ∈ L−, it suffices to get p(l )i+1 > β , so we get an upper

bound of the increase −δ(l )i ,

−δ(l )i ≤ max{0,β − p

(l )i }

Note that themax fucntion is used to ensure no changes for the

labels which the adversarial goal is already achieved. There-

fore, for labels that allow more changes, we have the following

ranges that δ(l )i ’s can be adjusted.

0 ≤ δ(l )i ≤ p

(l )i − α for l ∈ L+

p(l )i − β ≤ δ

(l )i ≤ 0 for l ∈ L−

(11)

A.2.2 Enforcing the Norm Bound of d∗i . In this section, we

consider how to ensure small perturbation in each iteration, i.e.

∥ d∗i ∥ ≤ η∥ x0 ∥. From the minimal-norm solution d∗i = G†i ·δi ,

we have the following inequality.

∥ di ∥ = ∥G†i · δi ∥ ≤ ∥G

†i ∥∥δi ∥ (12)

Note that if Gi = g(1)i has only one row and δi = −δ(1)i is

a scalar value, then equality is satisfied, so we have ∥ di ∥ =|δi |∥ gi ∥

, which corresponds to the norm of the previous minimal-

norm solution 5. However, the equality may not hold for (12)

in general.

Let Gi = U ΣV⊤ be the singular value decomposition of

Gi . It is known that G†i = V Σ†U⊤, so the 2-norm of G†i is

∥G†i ∥ = ∥Σ†∥ = 1

σmin

where σmin is the smallest singluar

value of Gi . Combining with (12),

∥ di ∥ ≤∥δi ∥

σmin

To enforce the norm bound of d∗, we can set the 2-norm con-

straint of δi as follows.

∥δi ∥ ≤ ησmin∥ x0 ∥

To get constraints for individual δ(j )i , we strengthen the re-

striction further using the relationship between 2-norm and

∞-norm.

∥δi ∥ ≤√|L+ ∪ L− | max

l ∈L+∪L−|δ(l )i | ≤ ησmin∥ x0 ∥

With |L+ ∪ L− | =m + n, we get the following constraints thatensures the norm bound of d∗i .

|δ(l )i | ≤

ησmin∥ x0 ∥√m + n

for each label l ∈ L+ ∪ L− (13)

Combining the bounds (11) and (13), we get the following rules

to set δ(l )i for label l at iteration i . These rules are designed

to maximize the amount of possible change, i.e. |δ(l )i |, while

satisfying (11) and (13).

• If l ∈ L+,

– If p(l )i < α , we don’t need to decrease this probability.

Set δ(l )i = 0

– If not, choose δ(l )i = min{p

(l )i − α ,

ησmin ∥ x0 ∥√m+n

}

• If l ∈ L−,

– if p(l )i > β , we don’t need to increase this probability.

Set δ(l )i = 0

– If not, choose δ(l )i = max{p

(l )i − β ,−

ησmin ∥ x0 ∥√m+n

}

A.3 What if Gi has some linearlydependent rows?

In usual, Gi is not guaranteed to be a full-rank matrix, and

may contain linearly dependent rows, making it impossible

to define G†i . However, we can control the values of δ(l )i to

resolve the dependency. In this section, we will present how

to remove dependencies by setting δ(l )i .

Without loss of generality, assume we have two dependent

rows inGi , correnponding to labels l1 and l2. Then, there is a

nonzero constant c such that g(l1 )i = c · g(l2 )i . One naïve choice

to remove dependecy is just setting δ(l1 )i = δ

(l2 )i = 0. Then, the

constraints g(l1 )i · d = 0 and g(l2 )i · d = 0 become equivalent,

thus removing one of the dependent rows from Gi does not

change the solution set. Then, the algorithm proceeds with no

changes of probabilities in those labels, hoping that g(l1 )i+1 and

g(l2 )i+1 will become linearly independent at the next iteration.

The better solution is to make use of the constant factor c todefine another constraint between δ

(l1 )i and δ

(l2 )i . In previous

setting of two dependent labels, if we set δ(l1 )i = c · δ

(l2 )i ,

then the constraints g(l1 )i · d = δ(l1 )i and g(l2 )i · d = δ

(l2 )i are

equivalent upto multiplying constant c , so it is again safe to

ignore one dependent row from Gi as it does not change the

solution set.

Now, we summarize how to set δ(l )i ’s for two labels l1 and l2

to remove dependencies, while the added constraint is satisfied.

There are several cases depending on the membership of labels

l1, l2 and the sign of c .

(1) l1,l2 ∈ L+ (resp. l1,l2 ∈ L−)(a) If c > 0,

• If p(l1 )i < α (resp. p

(l1 )i > β), then δ

(l1 )i = 0 by our

rules at §A.2.2. To satisfy the dependency condition

δ(l1 )i = c · δ

(l2 )i , we set δ

(l1 )i = δ

(l2 )i = 0

• If p(l2 )i < α (resp. p

(l2 )i > β), then δ

(l2 )i = 0 by our

rules at §A.2.2. To satisfy the dependency condition

δ(l1 )i = c · δ

(l2 )i , we set δ

(l1 )i = δ

(l2 )i = 0

• If both probabilities can be changed, we first set

the δ(l )i with larger change |δ

(l )i | possible while

satisfying the constraint, then use δ(l1 )i = c · δ

(l2 )i ,

to determine the other value.

(b) If c < 0,

• sign(δ(l1 )i ) = sign(δ

(l2 )i ). To satisfy δ

(l1 )i = c · δ

(l2 )i

with c < 0, set δ(l1 )i = δ

(l2 )i = 0

(2) l1 ∈ L+, l2 ∈ L− (resp. l1 ∈ L−, l2 ∈ L+)(a) If c > 0,

• sign(δ(l1 )i ) , sign(δ

(l2 )i ). To satisfy δ

(l1 )i = c · δ

(l2 )i

with c > 0, set δ(l1 )i = δ

(l2 )i = 0

(b) If c < 0,

• If p(l1 )i < α (resp. p

(l1 )i > β), then δ

(l1 )i = 0 by our

rules at §A.2.2. To satisfy the dependency condition

δ(l1 )i = c · δ

(l2 )i , we set δ

(l1 )i = δ

(l2 )i = 0

• If p(l2 )i > β (resp. p

(l2 )i < α ), then δ

(l2 )i = 0 by our

rules at §A.2.2. To satisfy the dependency condition

δ(l1 )i = c · δ

(l2 )i , we set δ

(l1 )i = δ

(l2 )i = 0

• If both probabilities can be changed, we first set

the δ(l )i with larger change |δ

(l )i | possible while

satisfying the constraint, then use δ(l1 )i = c · δ

(l2 )i ,

to determine the other value.

The following pseudocode incorporates the rules in §A.2.2

and §A.3.

Algorithm 2

Input: x0,η,maxIter , α , β , L+, L−, a neural network F with

a softmax layer Fs .1: function NewtonFoolGeneral-

ized(x0,η,maxIter ,α ,β ,L+,L−,F )2: m← |L+ |3: n← |L− |4: d← 05: for i = 0,1,2, . . . ,maxIter − 1 do6: Compute g(l )i = ∇F

ls (xi ) for all l ∈ L+ ∪ L−

7: Construct Gi as defined in (8)

8: Remove dependencies of Gi using the tricks in

§A.3, record added constraints on δ(l )i ’s

9: Find the smallest singular value σmin of Gi10: for l ∈ L+ do11: if p (l )i > α then

12: δ(l )i ← min{p

(l )i − α ,

ησmin ∥ x0 ∥√m+n

}

13: else14: δ

(l )i ← 0

15: for l ∈ L− do16: if p (l )i < β then

17: δ(l )i ← max{p

(l )i − β ,−

ησmin ∥ x0 ∥√m+n

}

18: else19: δ

(l )i ← 0

20: Check if the constraints added at step 8 are all

satisfied. If not, adjust one of two δ(l )i , in the way that

|δ(l )i | always decreases.

21: d∗i ← G†i · δi22: xi+1 ← xi + d∗i23: d← d+ d∗i24: return d

B ADDITIONAL EXPERIMENTB.1 Results for HOGTable 6 and Table 7 shows the distances between the HOG

feature vectors of the original image and the perturbed image,

on MNIST and GTSRB, respectively. Again, each column gives

the results on the class represented by the image at the top,

and each row presents the mean and standard deviation. To

be specific, for each row, adversarial examples were generated

by the designated algorithm, then their HOG feature vectors

were compared to those of the corresponding orignal images.

OnMNIST dataset, FGSM gives the best results, while Deep-

Fool and NewtonFool give similar values in general with an

exception of the second label (digit 1). JSMA produces bigger

statistics except for the fifth label (digit 4), which implies that

the perturbed image is less likely to be detected when the

original image is detected correctly.

On GTSRB dataset, the situation changes entirely that now

JSMA produces noticeably better results. Among the other

three algorithms, NewtonFool gives the best results for all