Multiple Gradient Descent Algorithm

Transcript of Multiple Gradient Descent Algorithm

Outline Introduction Multiple Gradient Descent Algorithm MGDA for non-smooth convex problems

Multiple Gradient Descent Algorithm

Outi Wilppu

March 1, 2013

Outi Wilppu Multiple Gradient Descent Algorithm

1 Introduction

2 Multiple Gradient Descent Algorithm: Steepest Descent Method, Theoretical background, Algorithm

3 MGDA for non-smooth convex problems: Theoretical background, Algorithm, Example, Future work

Motivation

Many real-life problems are multiobjective in nature

• The problem has several goals, all of which should be as good as possible

• The goals conflict, so compromises have to be made

• Examples arise in economics and engineering

• Many problems are also non-smooth in nature

Problem

Definition

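We consider a multiobjective problem of the form

$$\min \; \{ f_1(x), \ldots, f_k(x) \} \quad \text{s.t.} \quad x \in S \tag{1}$$

where

• the objective functions are $f_i : \mathbb{R}^n \to \mathbb{R}$, $i = 1, \ldots, k$

• the set $S \subseteq \mathbb{R}^n$ is the set of feasible solutions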

Pareto optimality

Definition

A solution $x^* \in S$ is Pareto optimal if there is no other solution $x \in S$ such that $f_i(x) \le f_i(x^*)$ for all $i = 1, \ldots, k$ and $f_i(x) < f_i(x^*)$ for at least one index $i$.

• Usually there are many Pareto optimal solutions; together they form the Pareto optimal set

• All Pareto optimal solutions are mathematically equally good
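
The definition can be checked directly on a finite list of objective vectors. The following is a minimal sketch, assuming minimization and objective values given as plain tuples; the names `dominates`, `pareto_front` and the sample data are hypothetical and only illustrate the dominance test.

```python
from typing import List, Sequence, Tuple


def dominates(fa: Sequence[float], fb: Sequence[float]) -> bool:
    """True if fa is no worse than fb in every objective and better in at least one."""
    no_worse = all(a <= b for a, b in zip(fa, fb))
    strictly_better = any(a < b for a, b in zip(fa, fb))
    return no_worse and strictly_better


def pareto_front(points: Sequence[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep the objective vectors that no other vector in the list dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Illustrative objective vectors (f1, f2) of four candidate solutions.
candidates = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
front = pareto_front(candidates)
```

Here `(3.0, 3.0)` is dominated by `(2.0, 2.0)`, while the remaining three vectors are mutually non-dominated.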

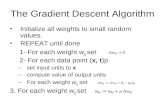

Steepest Descent Method

• A method for solving a smooth unconstrained optimization problem with a single objective function

$$\min f(x) \quad \text{s.t.} \quad x \in \mathbb{R}^n$$

• Search direction $d = -\dfrac{\nabla f(x)}{\|\nabla f(x)\|}$, which is the steepest descent direction at the point $x$

• Stepsize $\lambda$ can be found with a line search

• A new point is of the form $x^+ = x + \lambda d$ with $f(x^+) < f(x)$; the step is repeated until the optimum is reached
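
The steps above can be sketched in a few lines. This is a minimal sketch, assuming NumPy and a backtracking (Armijo) line search as one possible way to choose the stepsize; the slides do not specify the line search, and the function name, the parameters `c`, `tol`, `max_iter` and the test function are hypothetical.

```python
import numpy as np


def steepest_descent(f, grad, x0, c=0.25, tol=1e-8, max_iter=1000):
    """Steepest descent with a normalized direction and backtracking line search."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        norm = np.linalg.norm(g)
        if norm < tol:                      # gradient vanishes: stop
            break
        d = -g / norm                       # steepest descent direction
        lam = 1.0
        # Halve the stepsize until the sufficient decrease condition holds;
        # the directional derivative along d equals -norm.
        while f(x + lam * d) > f(x) - c * lam * norm and lam > 1e-16:
            lam *= 0.5
        x = x + lam * d                     # new point x+ = x + lam * d
    return x


# Illustrative smooth convex function with minimizer (1, -0.5).
f = lambda x: (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 0.5) ** 2
grad = lambda x: np.array([2.0 * (x[0] - 1.0), 4.0 * (x[1] + 0.5)])
x_min = steepest_descent(f, grad, [3.0, 2.0])
```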

About MGDA

• Assume that in problem (1)

– all objective functions $f_i$ are continuously differentiable

– the problem has no constraints, so the feasible set is $\mathbb{R}^n$

• The main idea is to find a common descent direction for all objective functions by forming the convex hull of the gradients of the objective functions and finding its minimum norm element

• With this method we obtain one Pareto stationary solution

Pareto stationary

Definition

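The objective functions $f_i$ are said to be Pareto stationary at the point $x^*$ if there exist coefficients $\alpha_i$ such that

$$\sum_{i=1}^{k} \alpha_i \nabla f_i(x^*) = 0, \qquad \alpha_i \ge 0 \ \ \forall i, \qquad \sum_{i=1}^{k} \alpha_i = 1.$$

• Pareto stationarity is a necessary condition for Pareto optimality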

Theorem

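Let the objective functions $f_i$, $i = 1, \ldots, k$, be continuously differentiable and

$$U = \Big\{ w \in \mathbb{R}^n \;\Big|\; w = \sum_{i=1}^{k} \alpha_i \nabla f_i(x), \ \ \alpha_i \ge 0 \ \ \forall i, \ \ \sum_{i=1}^{k} \alpha_i = 1 \Big\}.$$

Let the vector $w^*$ be the minimum norm element of the convex hull of $U$, i.e. $w^* = \operatorname{argmin}\{ \|w\| \mid w \in \operatorname{conv} U \}$. Then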

1 either $w^* = 0$ and the objective functions $f_i$, $i = 1, \ldots, k$, are Pareto stationary at the point $x$

2 or $w^* \ne 0$ and $-w^*$ is a common descent direction for all objective functions. Additionally, if $w^*$ lies in the relative interior of $\operatorname{conv} U$, then $u^T w^* = \|w^*\|^2$ for all $u \in \operatorname{conv} U$.
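
The descent property in case 2 follows from a short standard argument, sketched here in the notation of the theorem. It uses only the convexity of $\operatorname{conv} U$ and the minimality of $\|w^*\|$.

```latex
% For any u in conv U and t in (0, 1], the point w^* + t (u - w^*) also lies in
% conv U, so its norm cannot be smaller than that of w^*:
\begin{align*}
\|w^* + t\,(u - w^*)\|^2 \ge \|w^*\|^2
  &\;\Longrightarrow\; 2t\,(u - w^*)^T w^* + t^2\,\|u - w^*\|^2 \ge 0 \\
  &\;\Longrightarrow\; u^T w^* \ge \|w^*\|^2
     \quad \text{(divide by } t \text{ and let } t \to 0\text{)} .
\end{align*}
% Choosing u = \nabla f_i(x) and using w^* \ne 0:
\[
\nabla f_i(x)^T (-w^*) \le -\|w^*\|^2 < 0, \qquad i = 1, \ldots, k .
\]
```

Every objective therefore has a strictly negative directional derivative along $-w^*$.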

Search direction

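• To find the vector $w$ we need to solve the problem

$$\min_{\alpha} \; \Big\| \sum_{i=1}^{k} \alpha_i \nabla f_i(x) \Big\|^2 \quad \text{s.t.} \quad \alpha_i \ge 0 \ \ \forall i, \quad \sum_{i=1}^{k} \alpha_i = 1.$$

• In the case of two objective functions we have

$$\alpha_1 = \begin{cases} \dfrac{\|v\|^2 - u^T v}{\|u\|^2 + \|v\|^2 - 2u^T v}, & \text{if } u^T v < \min\{\|u\|^2, \|v\|^2\} \\[2ex] 0, & \text{otherwise, if } \min\{\|u\|, \|v\|\} = \|v\| \\[1ex] 1, & \text{otherwise, if } \min\{\|u\|, \|v\|\} = \|u\| \end{cases}$$

where $u = \nabla f_1(x)$, $v = \nabla f_2(x)$ and $\alpha_2 = 1 - \alpha_1$.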
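
The two-objective formula translates directly into code. The following is a minimal sketch, assuming NumPy; the function names `two_gradient_alpha` and `common_direction` and the sample gradients are hypothetical.

```python
import numpy as np


def two_gradient_alpha(u, v):
    """Weight alpha_1 of the minimum norm element alpha_1 * u + (1 - alpha_1) * v."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    uu, vv, uv = u @ u, v @ v, u @ v
    if uv < min(uu, vv):
        # The minimizer lies strictly inside the segment between u and v.
        return (vv - uv) / (uu + vv - 2.0 * uv)
    # Otherwise the shorter of the two gradients is the minimum norm element.
    return 0.0 if vv <= uu else 1.0


def common_direction(u, v):
    """Minimum norm element w of the convex hull of the two gradients."""
    a = two_gradient_alpha(u, v)
    return a * np.asarray(u, dtype=float) + (1.0 - a) * np.asarray(v, dtype=float)


# Orthogonal gradients: the minimizer is an interior point of the segment.
alpha_inner = two_gradient_alpha([2.0, 0.0], [0.0, 1.0])
w_inner = common_direction([2.0, 0.0], [0.0, 1.0])

# Parallel gradients: the shorter one is selected, so alpha_1 = 0.
alpha_edge = two_gradient_alpha([4.0, 0.0], [2.0, 0.0])
```

In the first case the denominator equals $\|u - v\|^2$, which is strictly positive whenever the first branch applies.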

Stepsize

• Stepsize $\lambda$ is the largest strictly positive real number such that all the functions $g_i(t) = f_i(x - tw)$ are monotonically decreasing over the interval $[0, \lambda]$.

• A new point $x^+$ is $x^+ = x - \lambda w$

• The algorithm stops when $w = 0$
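
Put together, one possible loop for two smooth objectives looks as follows. This is a minimal sketch, assuming NumPy; the stepsize rule is only approximated by scanning a grid on $[0, t_{\max}]$, and the names `min_norm_two`, `monotone_step`, `mgda_two`, the parameters `t_max`, `n`, `tol`, `max_iter` and the two quadratic objectives are hypothetical.

```python
import numpy as np


def min_norm_two(u, v):
    """Minimum norm element of the segment between the gradients u and v."""
    uu, vv, uv = u @ u, v @ v, u @ v
    if uv < min(uu, vv):
        a = (vv - uv) / (uu + vv - 2.0 * uv)
    else:
        a = 0.0 if vv <= uu else 1.0
    return a * u + (1.0 - a) * v


def monotone_step(fs, x, w, t_max=1.0, n=1000):
    """Grid approximation of the largest t with every f_i(x - s*w) decreasing on [0, t]."""
    dt = t_max / n
    t, prev = 0.0, [f(x) for f in fs]
    for j in range(1, n + 1):
        cur = [f(x - j * dt * w) for f in fs]
        if any(c >= p for c, p in zip(cur, prev)):
            break                            # some objective stopped decreasing
        t, prev = j * dt, cur
    return t


def mgda_two(fs, grads, x0, tol=1e-6, max_iter=200):
    """Iterate x+ = x - lam * w until w (numerically) vanishes."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        w = min_norm_two(grads[0](x), grads[1](x))
        if np.linalg.norm(w) < tol:          # Pareto stationary point reached
            break
        lam = monotone_step(fs, x, w)
        if lam == 0.0:                       # grid too coarse to make progress
            break
        x = x - lam * w
    return x


# Illustrative problem: squared distances to the points (0, 0) and (2, 0).
a, b = np.array([0.0, 0.0]), np.array([2.0, 0.0])
fs = [lambda x: float((x - a) @ (x - a)), lambda x: float((x - b) @ (x - b))]
grads = [lambda x: 2.0 * (x - a), lambda x: 2.0 * (x - b)]
x_star = mgda_two(fs, grads, [1.0, 1.0])
```

For this illustrative problem the Pareto optimal set is the segment between the two points, and the iteration started at $(1, 1)$ moves straight down onto it.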

About MGDA for non-smooth convex problems

• Assume that in problem (1)

– the objective functions $f_i$ are convex, not necessarily differentiable

– the problem has no constraints, so the feasible set is $\mathbb{R}^n$

– the subdifferentials of the objective functions $f_i$ are known

• The basic idea is the same as before, but now we use the steepest descent subgradients instead of gradients

Subdifferential and subgradient

Definition

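Let the function $f$ be convex. The subdifferential of $f$ at the point $x$ is the set

$$\partial f(x) = \{ \xi \in \mathbb{R}^n \mid f'(x; d) \ge \xi^T d \ \text{ for all } d \in \mathbb{R}^n \},$$

where $f'(x; d)$ is the directional derivative of $f$ at $x$ in the direction $d$. A vector $\xi \in \partial f(x)$ is called a subgradient of the function $f$ at the point $x$.

Theorem

Let the function $f : \mathbb{R}^n \to \mathbb{R}$ be convex and differentiable at the point $x \in \mathbb{R}^n$. Then

$$\partial f(x) = \{ \nabla f(x) \}.$$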
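
A standard one-dimensional illustration, not taken from the slides, is the absolute value function, which is convex but not differentiable at the origin.

```latex
% f(x) = |x| on the real line. At x = 0 the directional derivative is
% f'(0; d) = |d|, so the definition gives
\[
\partial f(0) = \{ \xi \in \mathbb{R} \mid |d| \ge \xi d \ \text{ for all } d \in \mathbb{R} \} = [-1, 1] .
\]
% Away from the origin f is differentiable, and the theorem applies:
\[
\partial f(x) = \{ \operatorname{sign}(x) \}, \qquad x \ne 0 .
\]
```

The smallest norm subgradient at the origin is $0$, which reflects that $x = 0$ minimizes $|x|$.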

Steepest descent direction

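• To determine the steepest descent direction we need to solve the problem

$$\min f'(x; d) \quad \text{s.t.} \quad \|d\| = 1 \tag{2}$$

• Problem (2) can be written as

$$\min \|\xi\| \quad \text{s.t.} \quad \xi \in \partial f(x)$$

• Thus the steepest descent direction at the point $x$ is given by the smallest norm subgradient in the subdifferential $\partial f(x)$ (the steepest descent subgradient), taken with a negative sign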

Algorithm

• To obtain a search direction we need to determine the steepest descent subgradient of every objective function at the point $x$. After that we can solve the problem

$$\min_{\alpha} \; \Big\| \sum_{i=1}^{k} \alpha_i \xi_i \Big\|^2 \quad \text{s.t.} \quad \alpha_i \ge 0 \ \ \forall i, \quad \sum_{i=1}^{k} \alpha_i = 1$$

where $\xi_i \in \partial f_i(x)$ is the steepest descent subgradient.

• The stepsize and the new point are determined as in the smooth case

• With this method we obtain one Pareto stationary solution, since at the final point

$$\sum_{i=1}^{k} \alpha_i \xi_i = 0, \qquad \alpha_i \ge 0 \qquad \text{and} \qquad \sum_{i=1}^{k} \alpha_i = 1.$$

• In the convex case Pareto stationarity is a necessary and sufficient condition for Pareto optimality

⇒ We obtain one Pareto optimal solution

Example

Consider the following problem:

$$\min \; \{ f_1(x), f_2(x) \} \quad \text{s.t.} \quad x \in \mathbb{R}^2$$

where

$$f_1(x) = \max\{ x_1^2 + (x_2 - 1)^2, \ (x_1 + 1)^2 \}$$

$$f_2(x) = \max\{ 2x_1 - x_2, \ x_1^2 + x_2 \}$$

and the starting point is $x_0 = (1, 0.5)$.

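The steepest descent subgradients at the point $x_0$:

– $\xi_1 = (4, 0)$

– To determine $\xi_2$ we need to find the $\rho \in [0, 1]$ that gives the minimum norm element of the subdifferential

$$\partial f_2(x_0) = \{ \rho (2, -1) + (1 - \rho)(2, 1) \mid \rho \in [0, 1] \} = \{ (2, 1 - 2\rho) \mid \rho \in [0, 1] \}.$$

The steepest descent subgradient is obtained with $\rho = \tfrac{1}{2}$, which gives $\xi_2 = (2, 0)$.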
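
The value of $\rho$ can be checked numerically. The following is a minimal sketch, assuming NumPy; the helper `min_norm_on_segment` is hypothetical and uses the closed-form minimizer of the norm over a segment, clipped to $[0, 1]$.

```python
import numpy as np


def min_norm_on_segment(a, b):
    """Return (rho, xi), where xi = rho * a + (1 - rho) * b has minimum norm."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = b - a
    denom = diff @ diff
    if denom == 0.0:                         # both end points coincide
        return 0.5, a.copy()
    # Unconstrained minimizer of ||b + rho * (a - b)||^2, clipped to [0, 1].
    rho = float(np.clip((b @ diff) / denom, 0.0, 1.0))
    return rho, rho * a + (1.0 - rho) * b


x0 = np.array([1.0, 0.5])
g_a = np.array([2.0, -1.0])                  # gradient of 2*x1 - x2
g_b = np.array([2.0 * x0[0], 1.0])           # gradient of x1**2 + x2 at x0
rho, xi2 = min_norm_on_segment(g_a, g_b)
```

Both pieces of $f_2$ are active at $x_0$ (each equals 1.5), which is why the subdifferential is the whole segment between the two gradients.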

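A common descent search direction:

– $w = \alpha \xi_1 + (1 - \alpha) \xi_2$

– We obtain $\alpha$ from the formula

$$\alpha = \begin{cases} \dfrac{\|\xi_2\|^2 - \xi_1^T \xi_2}{\|\xi_1\|^2 + \|\xi_2\|^2 - 2\xi_1^T \xi_2}, & \text{if } \xi_1^T \xi_2 < \min\{\|\xi_1\|^2, \|\xi_2\|^2\} \\[2ex] 0, & \text{otherwise, if } \min\{\|\xi_1\|, \|\xi_2\|\} = \|\xi_2\| \\[1ex] 1, & \text{otherwise, if } \min\{\|\xi_1\|, \|\xi_2\|\} = \|\xi_1\| \end{cases}$$

– Here $\xi_1^T \xi_2 = 8 \ge \min\{16, 4\}$ and $\|\xi_2\| < \|\xi_1\|$, so $\alpha = 0$. Thus $w = (2, 0)$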

Stepsize:

– The function $g_1(t) = f_1(x_0 - tw)$ is decreasing for $t \in [0, 0.6875]$

– The function $g_2(t) = f_2(x_0 - tw)$ is decreasing for $t \in [0, 0.5]$

⇒ Stepsize $\lambda = 0.5$

New point:

– $x_1 = x_0 - \lambda w = (0, 0.5)$
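
These intervals can be reproduced numerically. The following is a minimal sketch, assuming NumPy and a grid on $[0, 1]$; it relies on the observation that both $g_1$ and $g_2$ first decrease and then increase on that interval, so the end of the decreasing part coincides with the grid minimizer. The helper `decrease_limit` and the grid size are hypothetical.

```python
import numpy as np


def f1(x):
    return max(x[0] ** 2 + (x[1] - 1.0) ** 2, (x[0] + 1.0) ** 2)


def f2(x):
    return max(2.0 * x[0] - x[1], x[0] ** 2 + x[1])


x0 = np.array([1.0, 0.5])
w = np.array([2.0, 0.0])
ts = np.linspace(0.0, 1.0, 1601)             # grid containing 0.5 and 0.6875


def decrease_limit(f):
    """End of the decreasing part of g(t) = f(x0 - t*w), read off the grid."""
    values = np.array([f(x0 - t * w) for t in ts])
    return float(ts[np.argmin(values)])


t1 = decrease_limit(f1)                      # limit for g1
t2 = decrease_limit(f2)                      # limit for g2
lam = min(t1, t2)                            # stepsize: both objectives still decrease
x1 = x0 - lam * w                            # new point
```

The limit for $g_1$ is the kink where the two pieces of $f_1$ become equal, while the limit for $g_2$ is the minimizer of its active quadratic piece.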

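| $k$ | $\xi_1$ | $\xi_2$ | $\alpha$ | $w$ | $\lambda$ | $x_{k+1}$ |
|---|---|---|---|---|---|---|
| 0 | (4, 0) | (2, 0) | 0 | (2, 0) | 0.5 | (0, 0.5) |
| 1 | (2, 0) | (0, 1) | 0.2 | (0.4, 0.8) | 0.403 | (−0.161, 0.178) |
| 2 | (1.678, 0) | (−0.322, 1) | 0.329 | (0.336, 0.671) | | |

Solution: $x^* = (-0.161, 0.178)$, $f_1(x^*) \approx 0.704$ and $f_2(x^*) \approx 0.203$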

Future work

• The biggest drawback is that we need to know the whole subdifferential

• The aim is to find an effective way to obtain a common descent direction for all objective functions

• Ideally we would need no more than one arbitrary subgradient of each function

Thank you for your attention!
