004 architecture and advanced use


SCOTT MIAO 2012/7/19

ARCHITECTURE & ADVANCED USAGE

AGENDA

• Course Credit

• Architecture

• More…

• Advanced Usage

• More…


COURSE CREDIT

• Show up: 30 points

• Ask questions: each question earns 5 points

• Quiz: 40 points (please see TCExam)

• 70 points are needed to pass this course

• The course credit is calculated once for each course finished

• The course credit will be sent to you and your supervisor by mail

ARCHITECTURE

• Seek V.S. Transfer

• Storage

• Write Path

• Files

• Region Splits

• Compactions

• HFile Format

• KeyValue Format

• Write-Ahead Log

• Read Path

• Regions Lookup

• Region Life Cycle

• Replication


SEEK V.S. TRANSFER

• HBase uses Log-Structured Merge-Trees (LSM-trees) as the underlying mechanism for its store files

• Derived from B+ trees

• Easy to handle data with an optimized layout

• WAL log

• MemStore

• Operates at the disk transfer rate

• B+ trees

• Many RDBMSs use B+ trees

• Require a periodic OPTIMIZATION process

• Operate at the disk seek rate

SEEK V.S. TRANSFER

• Disk transfer

• Moving data between the disk surface and the host system

• CPU, RAM, and disk size double every 18–24 months

• Disk seek

• Measures the time it takes the head assembly on the actuator arm to travel to the track of the disk where the data will be read or written

• Seek time remains nearly constant, improving by only around 5% per year

• Conclusion

• At scale, seeking is inefficient compared to transferring

https://www.research.ibm.com/haifa/Workshops/ir2005/papers/DougCutting-Haifa05.pdf
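To make the "seek vs. transfer" conclusion concrete, here is a back-of-the-envelope sketch. All hardware figures (10 ms per seek, 100 MB/s sequential transfer, 1 TB of 100-byte records, 1% of them updated) are assumptions chosen for illustration, not measurements from the slides. With these numbers, the seek-bound approach takes about 278 hours, while rewriting the whole dataset sequentially takes about 5.6 hours.

```java
/**
 * Back-of-the-envelope comparison of seek-bound vs transfer-bound updates.
 * All hardware figures are illustrative assumptions, not measurements.
 */
class SeekVsTransfer {
    static final double SEEK_SECONDS = 0.010;              // assumed 10 ms per random seek
    static final double TRANSFER_BYTES_PER_SECOND = 100e6; // assumed 100 MB/s sequential transfer

    // B+ tree style: every updated record costs one random seek
    static double seekBasedSeconds(long updates) {
        return updates * SEEK_SECONDS;
    }

    // LSM style: stream the whole dataset in and write it back out once
    static double transferBasedSeconds(long datasetBytes) {
        return 2.0 * datasetBytes / TRANSFER_BYTES_PER_SECOND;
    }

    public static void main(String[] args) {
        long datasetBytes = 1_000_000_000_000L; // 1 TB
        long records = datasetBytes / 100;      // 100-byte records
        long updates = records / 100;           // update 1% of them
        System.out.printf("seek-based:     %.1f hours%n", seekBasedSeconds(updates) / 3600);
        System.out.printf("transfer-based: %.1f hours%n", transferBasedSeconds(datasetBytes) / 3600);
    }
}
```

The gap only widens over time, because transfer rates keep improving while seek times barely move.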

SEEK V.S. TRANSFER – LSM TREES


STORAGE


STORAGE - COMPONENTS

• ZooKeeper

• -ROOT-, .META. Tables

• HMaster

• HRegionServer

• HLog (WAL, Write-Ahead Log)

• HRegion

• Store => ColumnFamily

• StoreFile => HFile

• DFS Client

• HDFS, Amazon S3, Local File System, etc.

WRITE PATH

1. A write arrives at a region server

2. The edit is written to the WAL log

3. Once the WAL entry is persisted, the edit is written to the corresponding MemStore

4. A new HFile is flushed if the MemStore size reaches the threshold
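The four write-path steps above can be sketched in memory as follows. This is a minimal model under stated assumptions: a `List` stands in for the HDFS-backed WAL, a `TreeMap` stands in for the MemStore, and the flush threshold counts cells rather than bytes purely for simplicity. The class and field names are hypothetical and are not HBase classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical in-memory model of the write path: WAL first, then MemStore, flush at a threshold. */
class WritePathSketch {
    final List<String> wal = new ArrayList<>();                 // stands in for the HDFS-backed WAL
    final TreeMap<String, String> memStore = new TreeMap<>();   // sorted in-memory buffer
    final List<Map<String, String>> hfiles = new ArrayList<>(); // each flush yields one immutable, sorted file
    final int flushThreshold;                                   // in cells, not bytes, for simplicity

    WritePathSketch(int flushThreshold) {
        this.flushThreshold = flushThreshold;
    }

    void put(String rowKey, String value) {
        wal.add(rowKey + "=" + value); // step 2: append the edit to the log
        memStore.put(rowKey, value);   // step 3: only reached once the log append has succeeded
        if (memStore.size() >= flushThreshold) {
            flush();                   // step 4
        }
    }

    void flush() {
        hfiles.add(new TreeMap<>(memStore)); // already sorted, so it can be written out sequentially
        memStore.clear();
    }
}
```

For example, five puts with distinct row keys and a threshold of 2 leave two flushed files, one cell in the MemStore, and five entries in the WAL.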

FILES

• Root-Level files

• Table-Level files

• Region-Level files

• A txt file for reference


REGION SPLITS

• Splits one region into two half-size regions

• Triggered when

• hbase.hregion.max.filesize is reached (default is 256MB)

• A split is requested with the HBase shell split command or HBaseAdmin.split(…)

• The region server then takes the following steps

• Create a folder called “split” under the parent region folder

• Close the parent region, so it can no longer serve any requests

• Prepare the two new daughter regions (with multiple threads) inside the split folder, including

• The region folder structure, reference HFiles, etc.

• Move the two daughter regions into the table folder once the above steps have completed

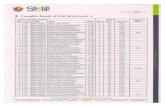

REGION SPLITS

• Here is an example of how this looks in the .META. table

row: testtable,row-500,1309812163930.d9ffc3a5cd016ae58e23d7a6cb937949.

column=info:regioninfo, timestamp=1309872211559, value=REGION => {NAME => \

'testtable,row-500,1309812163930.d9ffc3a5cd016ae58e23d7a6cb937949. \

TableName => 'testtable', STARTKEY => 'row-500', ENDKEY => 'row-700', \

ENCODED => d9ffc3a5cd016ae58e23d7a6cb937949, OFFLINE => true,

SPLIT => true,}

column=info:splitA, timestamp=1309872211559, value=REGION => {NAME => \

'testtable,row-500,1309872211320.d5a127167c6e2dc5106f066cc84506f8. \

TableName => 'testtable', STARTKEY => 'row-500', ENDKEY => 'row-550', \

ENCODED => d5a127167c6e2dc5106f066cc84506f8,}

column=info:splitB, timestamp=1309872211559, value=REGION => {NAME => \

'testtable,row-550,1309872211320.de27e14ffc1f3fff65ce424fcf14ae42. \

TableName => [B@62892cc5', STARTKEY => 'row-550', ENDKEY => 'row-700', \

ENCODED => de27e14ffc1f3fff65ce424fcf14ae42,}

REGION SPLITS

• The name of the reference file is another random number, but with the hash of the referenced region as a postfix:

/hbase/testtable/d5a127167c6e2dc5106f066cc84506f8/colfam1/ \

6630747383202842155.d9ffc3a5cd016ae58e23d7a6cb937949

COMPACTIONS

• The store files are monitored by a background thread

• The flushes of memstores slowly build up an increasing number of on-disk files

• The compaction process combines them into a few larger files

• This goes on until

• The largest of these files exceeds the configured maximum store file size and triggers a region split

• Types

• Minor

• Major

COMPACTIONS

• A compaction check is triggered when…

• A memstore has been flushed to disk

• The compact or major_compact shell commands (or the corresponding API calls) are run

• A background thread runs it

• Called CompactionChecker

• Each region server runs a single instance

• It runs less often than the other thread-based tasks: every hbase.server.thread.wakefrequency × hbase.server.thread.wakefrequency.multiplier (the multiplier defaults to 1000)

COMPACTIONS - MINOR

• Rewrites the last few files into one larger one

• The minimum number of files is set with the hbase.hstore.compaction.min property

• Default is 3

• Needs to be at least 2

• A number that is too large…

• Would delay minor compactions

• Would also require more resources and take longer

• The maximum number of files is set with the hbase.hstore.compaction.max property

• Default is 10

COMPACTIONS - MINOR

• All files that are under the minimum compaction size are included, up to the total number of files per compaction allowed

• hbase.hstore.compaction.min.size property

• Any file larger than the maximum compaction size is always excluded

• hbase.hstore.compaction.max.size property

• Default is Long.MAX_VALUE
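The selection rules on the two minor-compaction slides can be sketched like this. It is a simplified model that only uses the four properties named above: files larger than the maximum size are skipped, files smaller than the minimum size are picked, at most `maxFiles` are taken, and nothing happens if fewer than `minFiles` qualify. HBase's real policy also applies a size ratio to files between the two limits; this sketch omits that and simply skips them.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simplified minor-compaction file selection. Only the four properties named on the
 * slides are modelled; HBase's size-ratio check is deliberately left out.
 */
class MinorCompactionSelector {
    final int minFiles; // hbase.hstore.compaction.min (default 3)
    final int maxFiles; // hbase.hstore.compaction.max (default 10)
    final long minSize; // hbase.hstore.compaction.min.size
    final long maxSize; // hbase.hstore.compaction.max.size (default Long.MAX_VALUE)

    MinorCompactionSelector(int minFiles, int maxFiles, long minSize, long maxSize) {
        this.minFiles = minFiles;
        this.maxFiles = maxFiles;
        this.minSize = minSize;
        this.maxSize = maxSize;
    }

    /** @param fileSizes store file sizes in bytes, oldest first */
    List<Long> select(List<Long> fileSizes) {
        List<Long> selected = new ArrayList<>();
        for (long size : fileSizes) {
            if (size > maxSize) {
                continue;           // always excluded
            }
            if (size < minSize) {
                selected.add(size); // small files qualify
            }
            if (selected.size() == maxFiles) {
                break;              // never more than maxFiles per compaction
            }
        }
        // Too few candidates: skip this minor compaction altogether.
        return selected.size() >= minFiles ? selected : List.of();
    }
}
```

With the defaults (`min` = 3, `max` = 10), a store holding only two small files is left alone until a third flush arrives.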

COMPACTIONS - MAJOR

• Compacts all files into a single file

• Also drops KeyValues that match a deletion predicate

• Action is Delete (delete markers)

• Version (more versions than configured)

• TTL (expired cells)

• Triggered by…

• The major_compact shell command or majorCompact() API call

• Periodically, as set by the hbase.hregion.majorcompaction property

• Default is 24 hours

• The hbase.hregion.majorcompaction.jitter property

• Default is 0.2

• Without the jitter, all stores would run a major compaction at the same time, every 24 hours

• Minor compactions might be promoted to major compactions

• When the minor compaction would include all store files, and there are fewer of them than the configured maximum files per compaction
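The effect of the jitter can be sketched as follows. The assumption here is that each store draws its next major-compaction delay uniformly from period × (1 ± jitter). The exact formula HBase uses may differ between versions. With the defaults, delays fall between 19.2 and 28.8 hours instead of all landing at exactly 24 hours.

```java
import java.util.Random;

/**
 * Sketch of how a jitter spreads out periodic major compactions.
 * Assumption: the next delay is drawn uniformly from period * (1 +/- jitter).
 */
class MajorCompactionJitter {
    static long nextDelayMillis(long periodMillis, double jitter, Random random) {
        long spread = Math.round(periodMillis * jitter);
        // random.nextDouble() is in [0, 1), so the offset lies in [-spread, +spread]
        long offset = spread - Math.round(2 * spread * random.nextDouble());
        return periodMillis + offset;
    }

    public static void main(String[] args) {
        long day = 24L * 60 * 60 * 1000; // hbase.hregion.majorcompaction default: 24 hours
        Random random = new Random(42);
        for (int store = 0; store < 3; store++) {
            double hours = nextDelayMillis(day, 0.2, random) / 3_600_000.0;
            System.out.printf("store %d: next major compaction in %.1f hours%n", store, hours);
        }
    }
}
```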

HFILE FORMAT

• The actual storage files are implemented by the HFile class

• Stores HBase’s data efficiently

• Blocks

• Fixed size

• Trailer, File Info

• Others are variable size

HFILE FORMAT

• Default block size is 64KB

• Some recommendations from the API docs

• A block size between 8KB and 1MB for general usage

• A larger block size is preferred for sequential access use cases

• A smaller block size is preferred for random access use cases

• Requires more memory to hold the block index

• May be slower to create (leads to more FS I/O flushes)

• The smallest possible block size would be around 20KB-30KB

• Each block contains

• A magic header

• A number of serialized KeyValue instances

HFILE FORMAT

• Each block is about as large as the configured block size

• In practice, it is not an exact science

• If you store a KeyValue that is larger than the block size, the writer has to accept this

• Even with smaller values, the check for the block size is done after the last value was written

• The majority of blocks will be slightly larger

• When using a compression algorithm

• You will not have much control over the block size

• The final store file contains the same number of blocks, but the total size will be smaller since each block is smaller
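The block-size behaviour described above can be illustrated with a hypothetical model. Here the size check happens only after a KeyValue has been appended, so most blocks overshoot the configured size, and an oversized KeyValue simply produces an oversized block. This models the behaviour the slide describes; it is not HBase's HFile writer code.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical model of how an HFile writer cuts blocks: append first, check the size after,
 * so most blocks end up slightly larger than the configured block size.
 */
class BlockCutSketch {
    static List<Integer> cutBlocks(int blockSize, int... keyValueSizes) {
        List<Integer> blocks = new ArrayList<>();
        int current = 0;
        for (int kvSize : keyValueSizes) {
            current += kvSize;          // append the KeyValue first...
            if (current >= blockSize) { // ...then check the block size
                blocks.add(current);
                current = 0;
            }
        }
        if (current > 0) {
            blocks.add(current);        // last, partially filled block
        }
        return blocks;
    }

    public static void main(String[] args) {
        System.out.println(cutBlocks(100, 40, 40, 40, 40, 40, 40)); // [120, 120]
        System.out.println(cutBlocks(100, 250, 10));                 // [250, 10]
    }
}
```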

HFILE FORMAT – HFILE BLOCK SIZE V.S. HDFS BLOCK SIZE

• Default HDFS block size is 64 MB

• Which is 1,024 times the HFile default block size (64KB)

• HBase stores its files transparently into a filesystem

• No correlation between these two block types

• It is just a coincidence

• HDFS also does not know what HBase stores


HFILE FORMAT – HFILE CLASS

• Access an HFile directly

• hadoop fs -cat <hfile>

• hbase org.apache.hadoop.hbase.io.hfile.HFile -f <hfile> -m -v -p

• Actual data stored as serialized KeyValue instances

• HFile.Reader properties and the trailer block details

• File info block values

KEYVALUE FORMAT

• Each KeyValue in the HFile is a low-level byte array

• Fixed-length numbers

• Key length

• Value length

• If you deal with small values

• Try to keep the key small

• Choose a short row and column key

• A family name with a single byte, and a qualifier that is equally short

• Compression should help mitigate the overwhelming key size problem

• The sorting of all KeyValues in the store file helps to keep similar keys close together
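To see why small values suffer from large keys, here is a sketch that serializes one cell following the classic KeyValue layout from the HBase reference guide. The layout is: key length, value length, row length, row, family length, family, qualifier, timestamp, key type, value. It is an illustration, not HBase's `KeyValue` class. The type code 4 for a Put is stated from memory and should be treated as an assumption. A 4-byte value ends up inside a 31-byte cell, so 27 bytes are overhead.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** Sketch of the KeyValue byte layout; not the HBase KeyValue class itself. */
class KeyValueLayout {
    static final byte TYPE_PUT = 4; // assumed type code for a Put

    static byte[] encode(String row, String family, String qualifier, long timestamp, byte[] value) {
        byte[] r = row.getBytes(StandardCharsets.UTF_8);
        byte[] f = family.getBytes(StandardCharsets.UTF_8);
        byte[] q = qualifier.getBytes(StandardCharsets.UTF_8);
        // key = row length (2) + row + family length (1) + family + qualifier + timestamp (8) + type (1)
        int keyLength = 2 + r.length + 1 + f.length + q.length + 8 + 1;
        ByteBuffer buf = ByteBuffer.allocate(4 + 4 + keyLength + value.length);
        buf.putInt(keyLength).putInt(value.length); // the two fixed-length numbers
        buf.putShort((short) r.length).put(r);
        buf.put((byte) f.length).put(f).put(q);
        buf.putLong(timestamp).put(TYPE_PUT);
        buf.put(value);
        return buf.array();
    }

    public static void main(String[] args) {
        byte[] kv = encode("row-1", "d", "q", 1307097848L, new byte[4]);
        int keyLength = ByteBuffer.wrap(kv).getInt();
        System.out.println("total=" + kv.length + " key=" + keyLength + " value=4"); // total=31 key=19 value=4
    }
}
```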

WRITE-AHEAD LOG

• Region servers keep data in memory until enough is collected to warrant a flush to disk

• This avoids the creation of too many very small files

• While the data resides in memory, it is volatile, not persistent

• Write-ahead logging

• A common approach to solving the above issue, used by most RDBMSs as well

• Each update (edit) is written to a log, then to the real persistent data store

• The server then has the liberty to batch or aggregate the data in memory as needed

WRITE-AHEAD LOG

• The WAL is the lifeline that is needed when disaster strikes

• The WAL records all changes to the data

• If the server crashes

• The log can be replayed to get everything up to where the server should have been just before the crash

• If writing the record to the WAL fails

• The whole operation must be considered a failure

• The actual WAL log resides on HDFS

• HDFS is a replicated filesystem

• Any other server can open the log and start replaying the edits
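Replaying the log after a crash can be sketched under one hypothetical assumption: every WAL entry carries an increasing sequence id, and a flush remembers the highest sequence id already persisted in store files. Replay then re-applies only the edits that never reached a store file. The record and method names are illustrative, not HBase classes.

```java
import java.util.List;
import java.util.TreeMap;

/**
 * Hypothetical recovery model: WAL entries carry increasing sequence ids, and replay
 * re-applies only the edits newer than the last flushed sequence id.
 */
class WalReplaySketch {
    record WalEntry(long sequenceId, String rowKey, String value) {}

    static TreeMap<String, String> replay(List<WalEntry> wal, long lastFlushedSequenceId) {
        TreeMap<String, String> memStore = new TreeMap<>();
        for (WalEntry e : wal) {
            if (e.sequenceId() > lastFlushedSequenceId) {
                memStore.put(e.rowKey(), e.value()); // later edits overwrite earlier ones
            }
        }
        return memStore;
    }

    public static void main(String[] args) {
        List<WalEntry> wal = List.of(
                new WalEntry(1, "row-1", "a"),
                new WalEntry(2, "row-2", "b"), // already flushed before the crash
                new WalEntry(3, "row-3", "c"),
                new WalEntry(4, "row-1", "d"));
        System.out.println(replay(wal, 2)); // {row-1=d, row-3=c}
    }
}
```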

WRITE-AHEAD LOG – WRITE PATH


WRITE-AHEAD LOG – MAIN CLASSES


WRITE-AHEAD LOG – OTHER CLASSES

• LogSyncer class

• HTableDescriptor.setDeferredLogFlush(boolean)

• Default is false

• Every update to the WAL log is synced to the filesystem

• Set to true

• A background process syncs the log instead

• hbase.regionserver.optionallogflushinterval property

• Default is 1 second

• There is a chance of data loss in case of a server failure

• Only applies to user tables, not catalog tables (-ROOT-, .META.)

WRITE-AHEAD LOG – OTHER CLASSES

• LogRoller class

• Takes care of rolling logfiles at certain intervals

• hbase.regionserver.logroll.period property

• Default is 1 hour

• Other parameters

• hbase.regionserver.hlog.blocksize property

• Default is 32MB

• hbase.regionserver.logroll.multiplier property

• Default is 0.95

• Rotate logs when they are at 95% of the block size

• Logs are rotated when

• A certain amount of time has passed

• They are considered full

• Whichever comes first
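Here is a sketch of the "time or size, whichever comes first" rule, using the three defaults listed above. With a 32MB block size and a 0.95 multiplier, a log counts as full at 31,876,710 bytes. This shows only the decision rule; the actual LogRoller code is structured differently.

```java
/** Sketch of the log-roll decision: roll when the period has elapsed or the log is "full". */
class LogRollSketch {
    static final long PERIOD_MILLIS = 60L * 60 * 1000;     // hbase.regionserver.logroll.period: 1 hour
    static final long HLOG_BLOCK_SIZE = 32L * 1024 * 1024; // hbase.regionserver.hlog.blocksize: 32MB
    static final double MULTIPLIER = 0.95;                 // hbase.regionserver.logroll.multiplier

    static long fullThresholdBytes() {
        return (long) (HLOG_BLOCK_SIZE * MULTIPLIER);
    }

    static boolean shouldRoll(long millisSinceLastRoll, long logSizeBytes) {
        boolean periodElapsed = millisSinceLastRoll >= PERIOD_MILLIS;
        boolean full = logSizeBytes >= fullThresholdBytes();
        return periodElapsed || full; // whichever comes first
    }

    public static void main(String[] args) {
        System.out.println(fullThresholdBytes());           // 31876710
        System.out.println(shouldRoll(10_000, 32_000_000)); // true: size limit reached first
        System.out.println(shouldRoll(10_000, 1_000_000));  // false: neither limit reached
    }
}
```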

WRITE-AHEAD LOG – SPLIT & REPLAY LOGS


WRITE-AHEAD LOG – DURABILITY

• WAL log

• Sync it for every edit

• Set the log flush interval to be as low as you want

• Still dependent on the underlying filesystem

• Especially HDFS

• Use Hadoop 0.21.0 or later

• Or a special 0.20.x with append support patches

• I used 0.20.203 before

• Otherwise, you can very well face data loss!!

READ PATH

(Figure: read path. Store files, e.g. in ColFam2, can be skipped due to the timestamp and Bloom filter exclusion process.)

REGION LOOKUPS

• Catalog tables

• -ROOT-

• Refers to all regions in the .META. table

• .META.

• Refers to all regions in all user tables

• A three-level, B+ tree-like lookup scheme

1. A node stored in ZooKeeper contains the location of the root table’s region

2. Lookup of a matching meta region from the -ROOT- table

3. Retrieval of the user table region from the .META. table
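The three-level lookup can be modelled with sorted maps, assuming step 1 (reading the -ROOT- location from ZooKeeper) has already happened. Catalog rows here are keyed `"<table>,<startKey>"`, so `floorEntry()` returns the region whose start key is the closest one at or before the requested row. This is a toy model. Real catalog rows also carry a region id suffix, and HBase uses a special comparator for catalog keys, both of which this sketch ignores. The server names are made up.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy model of levels 2 and 3 of the region lookup; level 1 (ZooKeeper) is assumed done. */
class RegionLookupSketch {
    // Level 2: -ROOT- rows, keyed by the first catalog key each .META. region covers.
    final TreeMap<String, String> rootTable = new TreeMap<>();
    // Level 3: each .META. region maps "<table>,<startKey>" to the server hosting that user region.
    final Map<String, TreeMap<String, String>> metaRegions = new TreeMap<>();

    String locate(String table, String row) {
        String catalogKey = table + "," + row;
        // Step 2: find the .META. region responsible for this key (assumes a matching entry exists).
        String metaRegion = rootTable.floorEntry(catalogKey).getValue();
        // Step 3: find the user region, and thus the region server, that holds the row.
        return metaRegions.get(metaRegion).floorEntry(catalogKey).getValue();
    }

    public static void main(String[] args) {
        RegionLookupSketch lookup = new RegionLookupSketch();
        lookup.rootTable.put("", ".META.,,1");
        TreeMap<String, String> meta = new TreeMap<>();
        meta.put("testtable,", "rs1:60020");        // region [start, row-500)
        meta.put("testtable,row-500", "rs2:60020"); // region [row-500, end)
        lookup.metaRegions.put(".META.,,1", meta);
        System.out.println(lookup.locate("testtable", "row-600")); // rs2:60020
    }
}
```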

REGION LOOKUPS


THE REGION LIFE CYCLE


ZOOKEEPER

• HBase uses ZooKeeper as its distributed coordination service

• Inspect its znodes from the command line

• hbase zkcli

Znode | Description
/hbase/hbaseid | Cluster ID, as stored in the hbase.id file on HDFS
/hbase/master | Holds the master server name
/hbase/replication | Contains replication details
/hbase/root-region-server | Server name of the region server hosting the -ROOT- regions

ZOOKEEPER

Znode | Description
/hbase/rs | The root node for all region servers to list themselves when they start. It is used to track server failures.
/hbase/shutdown | Is used to track the cluster state. It contains the time when the cluster was started, and is empty when it was shut down.
/hbase/splitlog | All log-splitting-related coordination. States include unassigned, owned, and RESCAN.
/hbase/table | Disabled tables are added to this znode
/hbase/unassigned | Used by the AssignmentManager to track region states across the entire cluster. It contains znodes for those regions that are not open, but are in a transitional state.

REPLICATION

• A way to copy data between HBase deployments

• It can serve as

• A disaster recovery solution

• A way to provide higher availability at the HBase layer

• Master-push (between HBase clusters)

• One master cluster can replicate to any number of slave clusters, and each region server participates by replicating its own stream of edits

• Eventual consistency

REPLICATION


ADVANCED USAGE

• Key Design

• Secondary Indexes

• Search Integration

• Transactions

• Bloom Filters


KEY DESIGN

• Two fundamental key structures

• Row Key

• Column Key

• A column family name + a column qualifier

• Use these keys to solve commonly found problems when designing storage solutions

• Logical V.S. Physical layout

LOGICAL V.S. PHYSICAL LAYOUT


READ PERFORMANCE AND QUERY CRITERIA


KEY DESIGN – TALL-NARROW V.S. FLAT-WIDE TABLES

• Tall-narrow table layout

• A table with few columns but many rows

• Flat-wide table layout

• Has fewer rows but many columns

• Tall-narrow table layout is recommended

• Under a flat-wide design, a single row could outgrow the maximum file/region size and work against the region split facility, since a row is never split across regions

KEY DESIGN – TALL-NARROW V.S. FLAT-WIDE TABLES

• An email system as an example

• Flat-wide layout

<userId> : <colfam> : <messageId> : <timestamp> : <email-message>

12345 : data : 5fc38314-e290-ae5da5fc375d : 1307097848 : "Hi Lars, ..."

12345 : data : 725aae5f-d72e-f90f3f070419 : 1307099848 : "Welcome, and ..."

12345 : data : cc6775b3-f249-c6dd2b1a7467 : 1307101848 : "To Whom It ..."

12345 : data : dcbee495-6d5e-6ed48124632c : 1307103848 : "Hi, how are ..."

• Tall-narrow layout

<userId>-<messageId> : <colfam> : <qualifier> : <timestamp> : <email-message>

12345-5fc38314-e290-ae5da5fc375d : data : : 1307097848 : "Hi Lars, ..."

12345-725aae5f-d72e-f90f3f070419 : data : : 1307099848 : "Welcome, and ..."

12345-cc6775b3-f249-c6dd2b1a7467 : data : : 1307101848 : "To Whom It ..."

12345-dcbee495-6d5e-6ed48124632c : data : : 1307103848 : "Hi, how are ..."

Note the empty qualifier in the tall-narrow layout!!

PARTIAL KEY SCANS

• Make sure to pad the value of each field in a composite row key to ensure the sorting order you expect
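Here is a small illustration of why padding matters. Row keys compare byte by byte, not numerically, so an unpadded "9" sorts after "12". `String.compareTo` matches unsigned byte order for the ASCII keys used here. The 10-digit width and the `compositeKey` helper are arbitrary choices for this sketch, and it assumes non-negative user IDs.

```java
/** Shows why fixed-width padding matters: row keys compare byte by byte, not numerically. */
class PaddedKeySketch {
    static String compositeKey(long userId, String messageId) {
        // Zero-pad the numeric field to a fixed width (10 digits is an arbitrary choice here).
        return String.format("%010d-%s", userId, messageId);
    }

    public static void main(String[] args) {
        System.out.println("9".compareTo("12") > 0);                                   // true: "9" sorts after "12"
        System.out.println(compositeKey(9, "a").compareTo(compositeKey(12, "a")) < 0); // true: padded keys sort numerically
        System.out.println(compositeKey(12345, "5fc38314"));                           // 0000012345-5fc38314
    }
}
```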

PARTIAL KEY SCANS

• Set startRow and stopRow

• Set startRow with exact user ID

• Scan.setStartRow(…)

• Set stopRow with user ID + 1

• Scan.setStopRow(…)

• Control the sorting order

• Long.MAX_VALUE - <date-as-long>

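• Invert the bits of each character (XOR 0xFF) so that a string sorts in descending order, e.g. "Hello," (works for ASCII text):

String s = "Hello,";
for (int i = 0; i < s.length(); i++) {
    // Flip all bits of the character so that ascending byte order becomes descending
    System.out.print(Integer.toString(s.charAt(i) ^ 0xFF, 16) + " ");
}

b7 9a 93 93 90 d3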
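The start/stop row and reverse-timestamp tricks can be sketched without an HBase dependency. The assumption is that user IDs are zero-padded decimal strings of a fixed width, so "user ID + 1" yields a stop row that every key of that user sorts before. In a real scan, these strings would be converted to bytes for `Scan.setStartRow(…)` and `Scan.setStopRow(…)`. The helper names are hypothetical.

```java
/**
 * Sketch of the partial-key-scan tricks above, without an HBase dependency.
 * Assumption: user IDs are zero-padded decimal strings of a fixed width.
 */
class PartialScanSketch {
    // stopRow = "user ID + 1": every key starting with userId sorts before it
    static String stopRowFor(String paddedUserId) {
        long next = Long.parseLong(paddedUserId) + 1;
        return String.format("%0" + paddedUserId.length() + "d", next);
    }

    // Newer events get smaller values, so they sort first in ascending key order
    static long reverseTimestamp(long epochMillis) {
        return Long.MAX_VALUE - epochMillis;
    }

    public static void main(String[] args) {
        String start = "12345";
        String stop = stopRowFor(start);
        String key = "12345-5fc38314-e290-ae5da5fc375d";
        System.out.println(stop);                                                          // 12346
        System.out.println(key.compareTo(start) >= 0 && key.compareTo(stop) < 0);         // true: inside [start, stop)
        System.out.println(reverseTimestamp(1307103848L) < reverseTimestamp(1307097848L)); // true: newer first
    }
}
```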

PAGINATION

• Use filters

• PageFilter

• ColumnPaginationFilter

• Steps

1. Open a scanner at the start row

2. Skip offset rows

3. Read the next limit rows and return them to the caller

4. Close the scanner

• Use case

• A web-based email client

• Read emails 1-50 first, then 51-100, etc.
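The four pagination steps can be sketched with a plain `Iterator` standing in for an HBase scanner, so this shows only the skip/limit logic, not the filter API. With 120 emails, an offset of 50, and a limit of 50, it returns emails 51 to 100.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

/** The four pagination steps, with a plain Iterator standing in for an HBase scanner. */
class PaginationSketch {
    static <T> List<T> page(Iterable<T> table, int offset, int limit) {
        Iterator<T> scanner = table.iterator();               // 1. open a scanner at the start row
        for (int i = 0; i < offset && scanner.hasNext(); i++) {
            scanner.next();                                   // 2. skip offset rows
        }
        List<T> result = new ArrayList<>();
        while (result.size() < limit && scanner.hasNext()) {
            result.add(scanner.next());                       // 3. read the next limit rows
        }
        // 4. an Iterator needs no closing; a real HBase ResultScanner must be closed here
        return result;
    }

    public static void main(String[] args) {
        List<Integer> emails = new ArrayList<>();
        for (int i = 1; i <= 120; i++) {
            emails.add(i);
        }
        List<Integer> second = page(emails, 50, 50);
        System.out.println(second.get(0) + ".." + second.get(second.size() - 1)); // 51..100
    }
}
```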

TIME SERIES DATA

• Dealing with stream processing of events

• The most common use case is time series data

• Data could be coming from

• A sensor in a power grid

• A stock exchange

• A monitoring system for computer systems

• The row key represents the event time

• The sequential, monotonically increasing nature of time series data

• Causes all incoming data to be written to the same region

• A hot spot issue

TIME SERIES DATA

• To overcome this problem

• Prefix the row key with a nonsequential prefix

• Common choices

• Salting

• Field swap/promotion

• Randomization

TIME SERIES DATA - SALTING

• Use a salting prefix on the key that guarantees a spread of all rows across all region servers

// numRegionServers stands for <number of region servers>; floorMod keeps the prefix non-negative
byte prefix = (byte) Math.floorMod(Long.hashCode(timestamp), numRegionServers);
byte[] rowkey = Bytes.add(new byte[] { prefix }, Bytes.toBytes(timestamp));

• Which results in row keys such as

0myrowkey-1

0myrowkey-4

1myrowkey-2

1myrowkey-5

...
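Here is a runnable version of the salting formula, with `ByteBuffer` standing in for HBase's `Bytes` helpers so that no HBase jar is needed. The bucket count of 4 is an arbitrary example. With 1,000 consecutive millisecond timestamps, the rows land evenly in all four buckets instead of piling into one region.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Salting sketch: a one-byte bucket prefix followed by the 8-byte timestamp. */
class SaltingSketch {
    static byte saltFor(long timestamp, int buckets) {
        // floorMod keeps the prefix non-negative even when hashCode() is negative
        return (byte) Math.floorMod(Long.hashCode(timestamp), buckets);
    }

    static byte[] saltedKey(long timestamp, int buckets) {
        return ByteBuffer.allocate(1 + Long.BYTES)
                .put(saltFor(timestamp, buckets))
                .putLong(timestamp)
                .array();
    }

    public static void main(String[] args) {
        int buckets = 4; // e.g. one bucket per region server
        int[] rowsPerBucket = new int[buckets];
        long start = 1307097848000L;
        for (long ts = start; ts < start + 1000; ts++) {
            rowsPerBucket[saltedKey(ts, buckets)[0]]++;
        }
        // Sequential timestamps are spread evenly instead of all hitting one region
        System.out.println(Arrays.toString(rowsPerBucket)); // [250, 250, 250, 250]
    }
}
```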

TIME SERIES DATA - SALTING

• Access to a range of rows must be fanned out

• Read with <number of region servers> get or scan calls

• Is this good or bad?

• Use multiple threads to read this data from distinct servers

• This needs further study of the access pattern, plus some test runs
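The fan-out read can be sketched as one scan per salt bucket, run in parallel, followed by a k-way merge that restores the global timestamp order. `fetchBucket` is a hypothetical stand-in for a per-bucket HBase scan; here it just returns an already-sorted in-memory list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;
import java.util.concurrent.CompletableFuture;
import java.util.function.IntFunction;

/** Fan-out read over salted buckets: parallel per-bucket fetches, then a k-way merge. */
class FanOutMergeSketch {
    static List<Long> fanOutScan(int buckets, IntFunction<List<Long>> fetchBucket) {
        List<CompletableFuture<List<Long>>> futures = new ArrayList<>();
        for (int b = 0; b < buckets; b++) {
            final int bucket = b;
            futures.add(CompletableFuture.supplyAsync(() -> fetchBucket.apply(bucket))); // one reader per bucket
        }
        List<List<Long>> parts = new ArrayList<>();
        for (CompletableFuture<List<Long>> f : futures) {
            parts.add(f.join());
        }
        // Each part is sorted; the heap holds {bucket, index} of each part's current head.
        PriorityQueue<int[]> heads = new PriorityQueue<>(
                (x, y) -> Long.compare(parts.get(x[0]).get(x[1]), parts.get(y[0]).get(y[1])));
        for (int b = 0; b < parts.size(); b++) {
            if (!parts.get(b).isEmpty()) {
                heads.add(new int[] {b, 0});
            }
        }
        List<Long> merged = new ArrayList<>();
        while (!heads.isEmpty()) {
            int[] head = heads.poll();
            merged.add(parts.get(head[0]).get(head[1]));
            if (head[1] + 1 < parts.get(head[0]).size()) {
                heads.add(new int[] {head[0], head[1] + 1});
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        List<List<Long>> data = List.of(List.of(1L, 5L, 9L), List.of(2L, 6L), List.of(3L, 4L, 10L));
        System.out.println(fanOutScan(3, data::get)); // [1, 2, 3, 4, 5, 6, 9, 10]
    }
}
```

Whether the extra parallel calls pay off depends on the access pattern, as noted above.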

TIME SERIES DATA – SALTING USECASE

• An open source crash reporter named Socorro from the Mozilla organization

• For Firefox and Thunderbird

• Reports are subsequently read and analyzed by the Mozilla development team

• Technologies

• Python-based client code

• Communicates with the HBase cluster using Thrift

Mozilla wiki for Socorro - https://wiki.mozilla.org/Socorro

TIME SERIES DATA – SALTING USECASE

• How the client merges the previously salted, sequential keys when doing a scan operation

for salt in '0123456789abcdef':
    # Open one Thrift scanner per salt value, each restricted to salt + prefix
    salted_prefix = "%s%s" % (salt, prefix)
    scanner = self.client.scannerOpenWithPrefix(table, salted_prefix, columns)
    iterators.append(salted_scanner_iterable(self.logger, self.client,
                                             self._make_row_nice, salted_prefix, scanner))

TIME SERIES DATA – FIELD SWAP/PROMOTION

• Use the composite row key concept

• Move the timestamp to a secondary position in the row key

• If you already have a row key with more than one field

• Swap them

• If you have only the timestamp as the current row key

• Promote another field from the column keys into the row key

• Or even promote the value

• Drawback: you can only access data, especially time ranges, for a given swapped or promoted field
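Field promotion can be sketched with a row key of `<fieldId><timestamp>`. Writes for different fields land in different key ranges, and a time range can only be scanned per field, which is the drawback named above. The 4-byte int field ID is an assumption made for this sketch, and IDs and timestamps are assumed non-negative. `Arrays::compareUnsigned` mimics HBase's unsigned byte ordering of row keys.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;
import java.util.SortedMap;
import java.util.TreeMap;

/** Field promotion sketch: row key = <fieldId (4 bytes)><timestamp (8 bytes)>. */
class FieldPromotionSketch {
    static byte[] rowKey(int fieldId, long timestamp) {
        return ByteBuffer.allocate(Integer.BYTES + Long.BYTES).putInt(fieldId).putLong(timestamp).array();
    }

    public static void main(String[] args) {
        // HBase compares row keys as unsigned bytes; Arrays::compareUnsigned does the same.
        TreeMap<byte[], String> table = new TreeMap<>(Arrays::compareUnsigned);
        for (long ts = 100; ts < 400; ts += 100) {
            table.put(rowKey(1, ts), "metric-1@" + ts);
            table.put(rowKey(2, ts), "metric-2@" + ts);
        }
        // A time range can only be scanned per promoted field: [start, stop) for field 1.
        SortedMap<byte[], String> range = table.subMap(rowKey(1, 100), rowKey(1, 300));
        System.out.println(range.values()); // [metric-1@100, metric-1@200]
    }
}
```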

TIME SERIES DATA – FIELD SWAP/PROMOTION USECASE

• OpenTSDB

• A time series database

• Stores metrics about servers and services, gathered by external collection agents

• All of the data is stored in HBase

• The system UI enables users to query various metrics, combining and/or downsampling them, all in real time

• The schema promotes the metric ID into the row key

• <metric-id><base-timestamp>...

http://opentsdb.net/

TIME SERIES DATA – FIELD SWAP/PROMOTION USECASE

• Example

OpenTSDB Schema - http://opentsdb.net/schema.html

TIME SERIES DATA


TIME-ORDERED RELATIONS

• You can also store related, time-ordered data

• By using the columns of a table

• Since all of the columns are sorted per column family

• Treat this sorting as a replacement for a secondary index

• For a small number of indexes, you can create a column family for them

• For a large number of indexes, consider the secondary index approaches later in these slides

• HBase currently (0.95) does not do well with anything above two or three column families

• Because flushing and compactions are done on a per-region basis

• This can cause a lot of needless I/O load

http://hbase.apache.org/book/number.of.cfs.html

TIME-ORDERED RELATIONS – EXAMPLE

• Column name = <indexId> + "-" + <value>

• Column value

• Key in the data column family

• Redundant values from the data column family for performance

• Denormalization

… //data

12345 : data : 5fc38314-e290-ae5da5fc375d : 1307097848 : "Hi Lars, ..."

12345 : data : 725aae5f-d72e-f90f3f070419 : 1307099848 : "Welcome, and ..."

12345 : data : cc6775b3-f249-c6dd2b1a7467 : 1307101848 : "To Whom It ..."

12345 : data : dcbee495-6d5e-6ed48124632c : 1307103848 : "Hi, how are ..."

... //ascending index for from email address

12345 : index : [email protected] : 1307099848 : 725aae5f-d72e...

12345 : index : [email protected] : 1307103848 : dcbee495-6d5e...

12345 : index : [email protected] : 1307097848 : 5fc38314-e290...

12345 : index : [email protected] : 1307101848 : cc6775b3-f249...

...// descending index for email subjects

12345 : index : idx-subject-desc-\xa8\x90\x8d\x93\x9b\xde : \

1307103848 : dcbee495-6d5e-6ed48124632c

12345 : index : idx-subject-desc-\xb7\x9a\x93\x93\x90\xd3 : \

1307099848 : 725aae5f-d72e-f90f3f070419

SECONDARY INDEXES

• HBase has no native support for secondary indexes

• There are use cases that need them

• Look up a cell with not just the primary coordinates

• The row key, column family name and qualifier

• But also an alternative coordinate

• Scan a range of rows from the main table, but ordered by the secondary index

• Secondary indexes store a mapping between the new coordinates and the existing ones

SECONDARY INDEXES - CLIENT-MANAGED

• Moves the responsibility into the application layer

• Combines a data table and one (or more) lookup/mapping tables

• Write data

• Into the data table, also updating the lookup tables

• Read data

• Either a direct lookup in the main table

• Or a lookup in the secondary index table, then retrieval of the data from the main table

SECONDARY INDEXES - CLIENT-MANAGED

• Atomicity

• No cross-row atomicity

• Write to the secondary index tables first, then write to the data table at the end of the operation

• Use asynchronous, regular pruning jobs to clean up index entries left behind by failed operations

• It is hardcoded in your application

• Needs to evolve with overall schema changes and new requirements
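Here is a client-managed index modelled with two in-memory sorted maps in place of an HBase data table and an index table. All names are hypothetical. The sketch follows the ordering above: index first, data table last. Reads verify that the indexed row still matches, and a pruning job removes orphaned index entries. Those would be left behind if a client crashed between the two writes, since there is no cross-row atomicity.

```java
import java.util.Iterator;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

/** Client-managed secondary index sketch: index written first, data table last. */
class ClientManagedIndexSketch {
    final TreeMap<String, String> dataTable = new TreeMap<>();  // userId -> email
    final TreeMap<String, String> emailIndex = new TreeMap<>(); // email -> userId

    void put(String userId, String email) {
        emailIndex.put(email, userId); // 1. index first...
        dataTable.put(userId, email);  // 2. ...data table at the end of the operation
    }

    Optional<String> findUserByEmail(String email) {
        String userId = emailIndex.get(email);
        // The index may point at a row whose data write never happened or has changed since; verify it.
        if (userId != null && email.equals(dataTable.get(userId))) {
            return Optional.of(userId);
        }
        return Optional.empty();
    }

    // Asynchronous pruning job: drop index entries with no matching data row.
    int prune() {
        int removed = 0;
        Iterator<Map.Entry<String, String>> it = emailIndex.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, String> e = it.next();
            if (!e.getKey().equals(dataTable.get(e.getValue()))) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }
}
```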

SECONDARY INDEXES - INDEXED-TRANSACTIONAL HBASE

• Indexed-Transactional HBase (ITHBase) project

• It extends HBase by adding special implementations of the client and server-side classes

• Extension

• The core extension is the addition of transactions

• It guarantees that all secondary index updates are consistent

• Most client and server classes are replaced by ones that handle indexing support

• Drawbacks

• May not support the latest version of HBase available

• Adds a considerable amount of synchronization overhead that results in decreased performance

https://github.com/hbase-trx/hbase-transactional-tableindexed

SECONDARY INDEXES - INDEXED HBASE

• Indexed HBase (IHBase)

• Forfeits the use of separate tables for each index but maintains them purely in memory

• This approach is much faster than the previous one

• Index generation

• The indexes are generated when

• A region is opened for the first time

• A memstore is flushed to disk

• The index is never out of sync, and no explicit transactional control is necessary

• Drawbacks

• It is quite intrusive: it requires an additional JAR and a config file

• It needs extra resources: it trades memory for extra I/O requirements

• It may not be available for the latest version of HBase

https://github.com/ykulbak/ihbase

SECONDARY INDEXES - COPROCESSOR

• Implement an indexing solution based on coprocessors

• Using the server-side hooks, e.g. RegionObserver

• Use a coprocessor to load the indexing layer for every region, which would subsequently handle the maintenance of the indexes

• Use the scanner hooks to transparently iterate over a normal data table, or an index-backed view on the same data

• Currently in development

• JIRA ticket

• https://issues.apache.org/jira/browse/HBASE-2038

SEARCH INTEGRATION

• Using indexes

• Still confined to the keys the user predefined

• Search-based lookup

• Makes use of the arbitrary nature of keys

• Often backed by full search engine integration

• Following are a few possible approaches

SEARCH INTEGRATION - CLIENT-MANAGED

• Example: Facebook inbox search

• The schema is built roughly like this

• Every row is a single inbox, that is, every user has a single row in the search table

• The columns are the terms indexed from the messages

• The versions are the message IDs

• The values contain additional information, such as the position of the term in the document

<inbox>:<COL_FAM_1>:<term>:<messageId>:<additionalInfo>

SEARCH INTEGRATION - LUCENE

• Apache Lucene

• Lucene Core

• Provides Java-based indexing and search technology

• Solr

• High performance search server built using Lucene Core

• Steps

1. HBase only stores the data

2. The BuildTableIndex class scans an entire data table and builds the Lucene indexes

3. The indexes end up as directories/files on HDFS

4. These indexes can be downloaded to a Lucene-based server for local use

5. A search performed via Lucene returns row keys for subsequent random lookups into the data table for specific values

SEARCH INTEGRATION - COPROCESSORS

• Currently in development

• Similar to the use of coprocessors to build secondary indexes

• Complement a data table with Lucene-based search functionality

• Ticket in JIRA

• https://issues.apache.org/jira/browse/HBASE-3529

TRANSACTIONS

• Transactions are an immature aspect of HBase

• This is due to the compromises required by the CAP theorem

• Here are two possible solutions

• Transactional HBase

• Comes with the aforementioned ITHBase

• ZooKeeper

• Comes with a lock recipe that can be used to implement a two-phase commit protocol

• http://zookeeper.apache.org/doc/trunk/recipes.html#sc_recipes_twoPhasedCommit

BLOOM FILTERS

• Problem

• Cell count

• 16,384 blocks = 1GB store file size / 64KB block size

• About 5 million (5,368,709) cells = 1GB store file size / 200 bytes cell size

• The block index only stores the start row key of each block

• Store files

• There can be a number of store files within one column family

• Bloom filters allow you to improve lookup times. Since they add overhead in terms of storage and memory, they are turned off by default.
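The following sketch combines the cell-count arithmetic from this slide with a minimal row-level Bloom filter. It uses double hashing over a `BitSet`, which is a common textbook construction and not HBase's own implementation. The bit-array size and hash count are arbitrary. The property that matters for reads is that `mightContain` never returns false for a row that was added. A false answer therefore means the store file can be skipped without touching its block index.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.BitSet;

/** Minimal row-level Bloom filter (double hashing) plus the slide's cell-count arithmetic. */
class BloomSketch {
    private final BitSet bits;
    private final int numBits;
    private final int numHashes;

    BloomSketch(int numBits, int numHashes) {
        this.bits = new BitSet(numBits);
        this.numBits = numBits;
        this.numHashes = numHashes;
    }

    private int index(byte[] key, int i) {
        int h1 = Arrays.hashCode(key);
        int h2 = Integer.rotateLeft(h1, 16) * 0x9E3779B1 | 1; // second hash, forced odd
        return Math.floorMod(h1 + i * h2, numBits);
    }

    void add(String rowKey) {
        byte[] key = rowKey.getBytes(StandardCharsets.UTF_8);
        for (int i = 0; i < numHashes; i++) {
            bits.set(index(key, i));
        }
    }

    /** false means "definitely not in this store file", so the file can be skipped. */
    boolean mightContain(String rowKey) {
        byte[] key = rowKey.getBytes(StandardCharsets.UTF_8);
        for (int i = 0; i < numHashes; i++) {
            if (!bits.get(index(key, i))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        long storeFile = 1L << 30;                   // 1GB
        System.out.println(storeFile / (64 * 1024)); // 16384 blocks in the block index
        System.out.println(storeFile / 200);         // 5368709 cells of 200 bytes
        BloomSketch bloom = new BloomSketch(1 << 16, 3);
        bloom.add("row-500");
        System.out.println(bloom.mightContain("row-500")); // true (no false negatives)
    }
}
```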

BLOOM FILTERS – WHY USE IT ?


BLOOM FILTERS – DO WE NEED IT ?


• If possible, you should try to use the row-level Bloom filter

• A good balance between the additional space requirements and the gain in performance

• Only resort to the more costly row+column Bloom filter when

• You gain no advantage from using the row-level one

BACKUPS

Let's take a short break, shall we? (  ̄ 3 ̄)Y▂Ξ
