Visually grounded generation of entailments from premises · 2019. 11. 30. · SNLI...


Proceedings of The 12th International Conference on Natural Language Generation, pages 178–188, Tokyo, Japan, 28 Oct - 1 Nov, 2019. ©2019 Association for Computational Linguistics


Visually grounded generation of entailments from premises

Somaye Jafaritazehjani, IRISA, Université de Rennes I, France

Albert Gatt, LLT, University of Malta

Marc Tanti, LLT, University of Malta

Abstract

Natural Language Inference (NLI) is the task of determining the semantic relationship between a premise and a hypothesis. In this paper, we focus on the generation of hypotheses from premises in a multimodal setting, to generate a sentence (hypothesis) given an image and/or its description (premise) as the input. The main goals of this paper are (a) to investigate whether it is reasonable to frame NLI as a generation task; and (b) to consider the degree to which grounding textual premises in visual information is beneficial to generation. We compare different neural architectures, showing through automatic and human evaluation that entailments can indeed be generated successfully. We also show that multimodal models outperform unimodal models in this task, albeit marginally.

1 Introduction

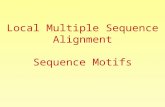

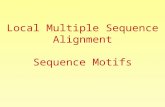

Natural Language Inference (NLI) or Recognizing Textual Entailment (RTE) is typically formulated as a classification task: given a pair consisting of premise(s) P and hypothesis Q, the task is to determine if Q is entailed by P, contradicts it, or whether Q is neutral with respect to P (Dagan et al., 2006). For example, in both the left and right panels of Figure 1, the premise P in the caption entails the hypothesis Q.

In classical (i.e. logic-based) formulations (e.g., Cooper et al., 1996), P is taken to entail Q if Q follows from P in all ‘possible worlds’. Since the notion of ‘possible world’ has proven hard to handle computationally, more recent approaches have converged on a probabilistic definition of the entailment relationship, relying on data-driven classification methods (cf. Dagan et al., 2006, 2010). In such approaches, P is taken to entail Q if, ‘typically, a human reading P would infer that Q is most likely true’ (Dagan et al., 2006). Most approaches to RTE seek to identify the semantic relationship between P and Q based on their textual features.

With a few exceptions (Xie et al., 2019; Lai, 2018; Vu et al., 2018), NLI is defined in unimodal terms, with no reference to the non-linguistic ‘world’. This means that NLI models remain trapped in what Roy (2005) described as a ‘sensory deprivation tank’, handling symbolic meaning representations which are ungrounded in the external world (cf. Harnad, 1990). On the other hand, the recent surge of interest in NLP tasks at the vision-language interface suggests ways of incorporating non-linguistic information into NLI. For example, pairing the premises in Figure 1 with their corresponding images could yield a more informative representation from which the semantic relationship between premise and hypothesis could be determined. This would be especially useful if the two modalities contained different types of information, effectively making the textual premise and the corresponding image complementary, rather than redundant with respect to each other.

While NLI is a classic problem in Natural Language Understanding, it also has considerable relevance for generation. Many text generation tasks, including summarisation (Nenkova and McKeown, 2011), paraphrasing (Androutsopoulos and Malakasiotis, 2010), text simplification (Siddharthan, 2014) and question generation (Piwek and Boyer, 2012), depend on the analysis and understanding of an input text to generate an output that stands in a specific semantic relationship to the input.

In this paper, we focus on the task of generating hypotheses from premises. Here, the challenge is to produce a text that follows from (‘is entailed by’) a given premise. Arguably, well-established tasks such as paraphrase generation and text simplification are specific instances of this more general problem. For example, in paraphrase generation, the input-output pair should be in a relationship of meaning preservation.

(a) P: Four guys in wheelchairs on a basketball court two are trying to grab a ball in midair
Q: Four guys are playing basketball.

(b) P: A little girl walking down a dirt road behind a group of other walkers
Q: The girl is not at the head of the pack of people

Figure 1: Images with premises and hypotheses

In contrast to previous work on entailment generation (Kolesnyk et al., 2016; Starc and Mladenic, 2017), we explore the additional benefits derived from grounding textual premises in image data. We therefore assume that the data consists of triples 〈P, I, Q〉, such that premises are accompanied by their corresponding images (I) as well as the hypotheses, as in Figure 1. We compare multimodal models to a unimodal setup, with a view to determining to what extent image data helps in generating entailments.

The rest of this paper is structured as follows. In Section 2 we discuss further motivations for viewing NLI and entailment generation as multimodal tasks. Section 3 describes the dataset and architectures used; Section 4 presents experimental results, including a human evaluation. Our conclusion (Section 5) is that it is feasible to frame NLI as a generation task and that incorporating non-linguistic information is potentially profitable. However, in line with recent research evaluating Vision-Language (VL) models (Shekhar et al., 2017; Wang et al., 2018; Vu et al., 2018; Tanti et al., 2019), we also find that current architectures are unable to ground textual representations in image data sufficiently.

2 NLI, Multimodal NLI and Generation

Since the work of Dagan et al. (2006), research on NLI has largely been data-driven (see Sammons et al., 2012, for an overview). Most recent approaches rely on neural architectures (e.g. Rocktäschel et al., 2015; Chen et al., 2016; Wang et al., 2017), a trend which is clearly evident in the submissions to recent RepEval challenges (Nangia et al., 2017).

Data-driven NLI has received a boost from the availability of large datasets such as SICK (Marelli et al., 2014), SNLI (Bowman et al., 2015) and MultiNLI (Williams et al., 2018). Some recent work has also investigated multimodal NLI, whereby the classification of the entailment relationship is done on the basis of image features (Xie et al., 2019; Lai, 2018), or a combination of image and textual features (Vu et al., 2018). In particular, Vu et al. (2018) exploited the fact that the main portion of SNLI was created by reusing image captions from the Flickr30k dataset (Young et al., 2014) as premises, for which entailments, contradictions and neutral hypotheses were subsequently crowdsourced via Amazon Mechanical Turk (Bowman et al., 2015). This makes it possible to pair premises with the images for which they were originally written as descriptive captions, thereby reformulating the NLI problem as a Vision-Language task. There are at least two important motivations for this:


1. The inclusion of image data is one way to bring together the classical and the probabilistic approaches to NLI, whereby the image can be viewed as a (partial) representation of the ‘world’ described by the premise, with the entailment relationship being determined jointly from both. This is in line with the suggestion by Young et al. (2014), that images be considered as akin to the ‘possible worlds’ in which sentences (in this case, captions) receive their denotation.

2. A multimodal definition of NLI also serves as a challenging testbed for VL NLP models, in which there has been increasing interest in recent years. This is especially the case since neural approaches to fundamental computer vision (CV) tasks have yielded significant improvements (LeCun et al., 2015), while also making it possible to use pre-trained CV models in multimodal neural architectures, for example in tasks such as image captioning (Bernardi et al., 2016). However, recent work has cast doubt on the extent to which such models are truly exploiting image features in a multimodal space (Shekhar et al., 2017; Wang et al., 2018; Tanti et al., 2019). Indeed, Vu et al. (2018) also find that image data contributes less than expected to determining the semantic relationship between premise-hypothesis pairs in the classic RTE labelling task.

There have also been a few approaches to entailment generation, which again rely on the SNLI dataset. Kolesnyk et al. (2016) employed a sequence-to-sequence architecture with attention (Sutskever et al., 2014; Bahdanau et al., 2015) to generate hypotheses from premises. They extended this framework to the generation of inference chains by recursively feeding the model with the generated hypotheses as the input. Starc and Mladenic (2017) proposed different generative neural network models to generate a stream of hypotheses given 〈premise, Label〉 pairs as the input.

In seeking to reframe NLI as a generation task, the present paper takes inspiration from these approaches. However, we are also interested in framing the task as a multimodal, VL problem, in line with the two motivations noted at the beginning of this section. Given that recent work has suggested shortcomings in the way VL models utilise visual information, a focus on multimodal entailment generation is especially timely, since it permits direct comparison between models utilising unimodal and multimodal input.

As Figure 1 suggests, given the triple 〈P, I, Q〉, the hypothesis Q could be generated from the premise P only, from the image I, or from a combination of P + I. Our question is whether we can generate better entailments from a combination of both, compared to only one of these input modalities.

3 Methodology

3.1 Data

We focus on the subset of entailment pairs in the SNLI dataset (Bowman et al., 2015). The majority of instances in SNLI consist of premises that were originally elicited as descriptive captions for images in Flickr30k (Young et al., 2014; ands Li-wei Wang et al., 2015).¹

In constructing the SNLI dataset, Amazon Mechanical Turk workers were shown the captions/premises without the corresponding images, and were asked to write a new caption that was (i) true, given the premise (entailment); (ii) false, given the premise (contradiction); and (iii) possibly true (neutral).

Following Vu et al. (2018), we use a multimodal version of SNLI, referred to here as V-SNLI, which was obtained by mapping SNLI premises to their corresponding Flickr30k images (Xie et al., 2019, use a similar approach). The resulting V-SNLI therefore contains premises which are assumed to be true of their corresponding image. Since we focus exclusively on the entailment subset, we also assume, based on the instructions given to annotators, that the hypotheses are true of the image as well. Figure 1 shows two examples of images with both premises and hypotheses.

¹ A subset of 4000 cases in SNLI was extracted from the VisualGenome dataset (Krishna et al., 2017). Like Vu et al. (2018), we exclude these from our experiments.

Figure 2: Architecture schema that is instantiated in the different models. The encoder returns a source representation which conditions the decoder into generating the entailed hypothesis. The encoder of multimodal models either (1) initialises the encoder RNN with the image features (init-inject) and returns the final state; or (2) concatenates the encoder RNN’s final state with the image features (merge) and returns the result; or (3) returns the image features as is without involving the encoder RNN. The unimodal model encoder returns the encoder RNN’s final state as is. The source representation is concatenated to the decoder RNN’s states prior to passing them to the softmax layer.

Each premise in the SNLI dataset includes several reference hypotheses by different annotators. In the original SNLI train/dev/test split, some premises show up in both train and test sets, albeit with different paired hypotheses. For the present work, once premises were mapped to their corresponding Flickr30k images, all premises corresponding to an image were grouped, and the dataset was resplit so that the train/test partitions had no overlap, resulting in a split of 182,167 (train), 3,291 (test) and 3,329 (dev) entailment pairs. This also made it possible to use a multi-reference approach to evaluation (see Section 3.3).
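The grouping-and-resplitting step can be illustrated with a minimal sketch. It assumes the data is available as (image ID, premise, hypothesis) triples; the function name `split_by_image`, the split fractions and the random seed are hypothetical choices for illustration, not the procedure actually used to produce the reported split sizes.

```python
import random
from collections import defaultdict

def split_by_image(triples, test_frac=0.1, dev_frac=0.1, seed=0):
    """Split (image_id, premise, hypothesis) triples by image, so that an
    image and all of its premises land in exactly one partition.
    The fractions and seed are illustrative assumptions."""
    by_image = defaultdict(list)
    for image_id, premise, hypothesis in triples:
        by_image[image_id].append((premise, hypothesis))

    image_ids = sorted(by_image)
    random.Random(seed).shuffle(image_ids)

    n_test = int(len(image_ids) * test_frac)
    n_dev = int(len(image_ids) * dev_frac)
    parts = {
        "test": image_ids[:n_test],
        "dev": image_ids[n_test:n_test + n_dev],
        "train": image_ids[n_test + n_dev:],
    }
    # Each partition maps image_id -> list of (premise, hypothesis) pairs.
    return {name: {i: by_image[i] for i in ids} for name, ids in parts.items()}
```

Because the unit of splitting is the image rather than the individual pair, all hypotheses for a given premise stay together, which is what makes multi-reference evaluation possible.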

Prior to training, all texts were lowercased and tokenized.² Vocabulary items with frequency less than 10 were mapped to the token 〈UNK〉. The unimodal model (see Section 3.2) was trained on textual P-Q pairs from the original SNLI data, while the multimodal models were trained on pairs from V-SNLI where the input consisted of P+I (for the multimodal text+image model), or I only (for the image-to-text model), to generate entailments.
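As a rough illustration of this preprocessing, the sketch below lowercases and tokenises sentences and maps words seen fewer than 10 times to an unknown-word token. The whitespace tokeniser and the helper names (`tokenize`, `build_vocab`, `replace_rare`) are assumptions made for the example; the tokeniser used in the experiments is not specified here.

```python
from collections import Counter

UNK = "<UNK>"

def tokenize(text):
    # Assumption: lowercasing plus whitespace splitting stands in for
    # whatever tokeniser was actually used.
    return text.lower().split()

def build_vocab(sentences, min_freq=10):
    """Keep only the tokens that occur at least min_freq times."""
    counts = Counter(tok for s in sentences for tok in tokenize(s))
    return {tok for tok, c in counts.items() if c >= min_freq}

def replace_rare(sentence, vocab):
    """Tokenise a sentence and map out-of-vocabulary tokens to UNK."""
    return [tok if tok in vocab else UNK for tok in tokenize(sentence)]
```

In this sketch the vocabulary would be built on the training texts only and then applied unchanged to the dev and test texts.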

3.2 Models

Figure 2 is an illustration of the architecture schema that is employed for both multimodal and unimodal architectures. A sequence-to-sequence encoder-decoder architecture is used to predict the entailed hypothesis from the source. The source can be either a premise sentence, an image, or both.

Both the encoder and the decoder use a 256D GRU RNN and both embed their input words using a 256D embedding layer. In multimodal models, the encoder either produces a multimodal source representation that represents the sentence-image mixture or produces just an image representation. In the unimodal model, the encoder produces a representation of the premise sentence only.

² Experiments without lowercasing showed only marginal differences in the results.

This encoded representation (multimodal or unimodal) is then concatenated to every state in the decoder RNN prior to sending it to the softmax layer, which will predict the next word in the entailed hypothesis.

All multimodal models extract image features from the penultimate layer of the pre-trained VGG-19 convolutional neural network (Simonyan and Zisserman, 2014). All models were implemented in TensorFlow, and trained using the Adam optimizer (Kingma and Ba, 2014) with a learning rate of 0.0001 and a cross-entropy loss.

The models compared are as follows:

1. Unimodal model: A standard sequence-to-sequence architecture developed for neural machine translation (NMT). It uses separate RNNs for encoding the source text and for decoding the target text. This model encodes the premise sentence alone and generates the entailed hypothesis. In the unimodal architecture, the final state of the encoder RNN is used as the representation of the premise P.

2. Multimodal, text+image input, init-inject (T+I-Init): A multimodal model in which the image features are incorporated at the encoding stage, that is, image features are used to initialise the RNN encoder. This architecture, which is widely used in image captioning (Devlin et al., 2015; Liu et al., 2016), is referred to as init-inject, following Tanti et al. (2018), on the grounds that image features are directly injected into the RNN.

3. Multimodal, text+image input, merge (T+I-Merge): A second multimodal model in which the image features are concatenated with the final state of the encoder RNN. Following Tanti et al. (2018), we refer to this as the merge model. This too is adapted from a widely-used architecture in image captioning (Mao et al., 2014, 2015a,b; Hendricks et al., 2016). The main difference from init-inject is that here, image features are combined with textual features immediately prior to decoding at the softmax layer, so that the RNN does not encode image features directly.

4. Multimodal, image input only (IC): This model is based on a standard image captioning setup (Bernardi et al., 2016) in which the entailed hypothesis is based on the image features only, with no premise sentence. This architecture leaves out the encoder RNN completely. Note that, while this is a standard image captioning setup, it is put to a somewhat different use here, since we do not train the model to generate captions (which correspond to the premises in V-SNLI) but the hypotheses (which are taken to follow from the premises).
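The four ways of building the source representation can be contrasted in a small NumPy sketch. This is not the TensorFlow implementation used in the experiments: the GRU below is untrained and omits bias terms, the 4096D image feature size is an assumption about the VGG-19 penultimate layer, and the projection `img_proj` that maps image features to the GRU state size for init-inject is a hypothetical detail added so that the dimensions match.

```python
import numpy as np

HIDDEN = 256     # GRU state and embedding size stated in the text
IMG_DIM = 4096   # assumption: size of the VGG-19 penultimate-layer features

rng = np.random.default_rng(0)

def init_gru(input_dim, hidden):
    """Randomly initialised, untrained GRU weights (biases omitted for brevity)."""
    shape = (input_dim + hidden, hidden)
    return {k: rng.normal(0, 0.1, shape) for k in ("z", "r", "h")}

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_final_state(params, inputs, state):
    """Run a GRU over a sequence of input vectors and return the last state."""
    for x in inputs:
        xs = np.concatenate([x, state])
        z = sigmoid(xs @ params["z"])          # update gate
        r = sigmoid(xs @ params["r"])          # reset gate
        cand = np.tanh(np.concatenate([x, r * state]) @ params["h"])
        state = (1 - z) * state + z * cand
    return state

def encode(mode, params, word_vecs=None, image=None, img_proj=None):
    """Return the source representation for one of the four model variants."""
    zeros = np.zeros(HIDDEN)
    if mode == "unimodal":       # final encoder state, text only
        return gru_final_state(params, word_vecs, zeros)
    if mode == "init-inject":    # image features initialise the encoder RNN
        # Assumption: a projection brings the image down to the state size.
        return gru_final_state(params, word_vecs, np.tanh(image @ img_proj))
    if mode == "merge":          # final text state concatenated with the image
        return np.concatenate([gru_final_state(params, word_vecs, zeros), image])
    if mode == "ic":             # image features only, no encoder RNN
        return image
    raise ValueError(f"unknown mode: {mode}")
```

In every variant the returned vector plays the same role: it is concatenated to each decoder state before the softmax layer, so the variants differ only in where (or whether) image features meet the text.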

3.3 Evaluation metrics

We employed the evaluation metrics BLEU-1 (Papineni et al., 2002), METEOR (Lavie and Agarwal, 2007) and CIDEr (Vedantam et al., 2015) to compare generated entailments against the gold (reference) outputs. We also compare the model perplexity on the test set.

As noted earlier, we adopted a multi-reference approach to evaluation, exploiting the fact that in our dataset we grouped all reference hypotheses corresponding to each premise (and its corresponding image). Thus, evaluation metrics are calculated by comparing the generated hypotheses to a group of reference hypotheses. This is advantageous, since n-gram based metrics such as BLEU and METEOR are known to yield more reliable results when multiple reference texts are available.
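To make the effect of multi-reference scoring concrete, here is a minimal sentence-level BLEU-1 sketch (clipped unigram precision with a brevity penalty). It is a simplified illustration written for this purpose, not the evaluation code behind the reported scores, which would normally aggregate counts over the whole test set; the function name `bleu1` and the second reference sentence in the usage note are assumptions.

```python
import math
from collections import Counter

def bleu1(candidate, references):
    """Sentence-level BLEU-1 of a tokenised candidate against one or more
    tokenised references."""
    if not candidate:
        return 0.0
    cand_counts = Counter(candidate)
    # Clip each candidate unigram by its maximum count in any single reference.
    max_ref = Counter()
    for ref in references:
        for tok, c in Counter(ref).items():
            max_ref[tok] = max(max_ref[tok], c)
    clipped = sum(min(c, max_ref[tok]) for tok, c in cand_counts.items())
    precision = clipped / len(candidate)
    # Brevity penalty uses the reference length closest to the candidate length.
    ref_len = min((len(r) for r in references),
                  key=lambda n: (abs(n - len(candidate)), n))
    bp = 1.0 if len(candidate) >= ref_len else math.exp(1 - ref_len / len(candidate))
    return bp * precision
```

For the candidate "the men are playing basketball" with the references "four guys are playing basketball" and an invented second reference "the men play a game", scoring against both references together gives 1.0, whereas scoring against each reference separately gives 0.6 and 0.4. This is the sense in which a pooled reference set credits a candidate for any wording that some annotator used.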

4 Results

4.1 Metric-based evaluation

The results obtained by all models are shown in Table 1. The T+I-Merge architecture outperforms all other models on CIDEr and METEOR, while the unimodal model, relying only on textual premises, is marginally better on BLEU and has slightly lower perplexity.

The lower perplexity of the unimodal model is unsurprising given that in the unimodal model, the decoder is only conditioned on textual features without images. However, it should also be noted that the unimodal model also fares quite well on the other two metrics, and is only marginally worse than T+I-Merge. Its score on BLEU-1 is also competitive with that reported by Kolesnyk et al. (2016) for their seq2seq model, which obtained a BLEU score of 0.428. Note, however, that this score was not obtained using a multi-reference approach, that is, each generated candidate was compared to each reference individually, rather than to the set of references together. Recomputing our BLEU-1 score for the Unimodal model in this way, we obtain a score of 0.395, which is marginally lower than that reported by Kolesnyk et al. (2016).

The IC model, which generates entailments exclusively from images, ranks lowest on all metrics. This is probably due to the fact that, contrary to the usual setup for image caption generation, this model was trained on image-entailment pairs rather than directly on image-caption pairs. Although, based on the instructions given to annotators (Bowman et al., 2015), entailments in SNLI are presumed to be true of the image, it must be noted that the entailments were not elicited as descriptive captions with reference to an image, unlike the premises.

We discuss these results in more detail in Section 4.3, after reporting on a human evaluation.

4.2 Human evaluation

We conducted a human evaluation experiment, designed to address the question whether better entailments are generated from textual premises, images, or a combination of the two. In the metric-based evaluation (Section 4.1), the best multimodal model was T+I-Merge; hence, we use the outputs from this model, comparing them to the outputs from the unimodal and the image-only IC model.

| Model | BLEU-1 | METEOR | CIDEr | Perplexity |
|---|---|---|---|---|
| Unimodal | 0.695 | 0.267 | 0.938 | 7.23 |
| T+I-Init | 0.634 | 0.239 | 0.763 | 10.73 |
| T+I-Merge | 0.686 | 0.271 | 0.955 | 7.26 |
| IC | 0.474 | 0.16 | 0.235 | 13.7 |

Table 1: Automatic evaluation results for unimodal and multimodal models

Participants Twenty self-reported native or fluent speakers of English were recruited through social media and the authors’ personal network.

Materials A random sample of 90 instances from the V-SNLI test set was selected. Each consisted of a premise, together with an image and three entailments generated using the Unimodal, IC and T+I-Merge models, respectively. The 90 instances were randomly divided into three groups. Participants in the evaluation were similarly allocated to one of three groups. The groups were rotated through a Latin square so that each participant saw each of the 90 instances, one third in each of the three conditions (Unimodal, IC or T+I-Merge). Equal numbers of judgments were thus obtained for each instance in each condition, while participants never saw a premise more than once.
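The Latin square rotation described above can be sketched as follows. The function name `latin_square_design`, the seed and the particular rotation rule (participant group index plus item group index, modulo three) are illustrative assumptions; any rotation with the same balancing property would serve.

```python
import random

CONDITIONS = ["Unimodal", "IC", "T+I-Merge"]

def latin_square_design(item_ids, n_groups=3, seed=0):
    """Return {participant_group: {item_id: condition}} for a 3x3 Latin square.
    Items are shuffled into n_groups item groups; each participant group sees
    every item group under a different condition."""
    items = list(item_ids)
    random.Random(seed).shuffle(items)
    item_groups = [items[g::n_groups] for g in range(n_groups)]
    design = {}
    for p_group in range(n_groups):
        design[p_group] = {
            item: CONDITIONS[(p_group + i_group) % n_groups]
            for i_group, group in enumerate(item_groups)
            for item in group
        }
    return design
```

With 90 items, each participant group sees all 90 items exactly once, 30 per condition, and across the three participant groups every item is judged under all three conditions.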

Procedure Participants conducted the evaluation online. Test items were administered in blocks, by condition (text-only, image-only or text+image) and presented in a fixed, randomised order to all participants. However, participants evaluated items in the three conditions in different orders, due to the Latin square rotation. Each test case was presented with an input consisting of a textual premise, an image, or both. Participants were asked to judge to what extent the generated sentence followed from the input. Answers were given on an ordinal scale with the following qualitative responses: totally; partly; not clear or not at all. Figure 3 shows an example with a generated entailment in the text+image condition.

Figure 3: Example of an evaluation test item, with a sentence generated from both text and image.

Figure 4: Proportion of responses in each category for each input condition.


Results Figure 4 shows the proportion of responses in each category for each of the three conditions. The figure suggests that human judgments were in line with the trends observed in Section 4.1. More participants judged the generated entailments as following totally or partly from the input, when the input consisted of text and image together (T+I-Merge), followed closely by the case where the input was text only (Unimodal). By contrast, in the case of the image-only (IC) condition, the majority of cases were judged as not following at all.

Possible reasons for these trends are discussed in the following sub-section in light of further analysis. First, we focus on whether the advantage of incorporating image features with text is statistically reliable. We coded responses as a binomial variable, distinguishing those cases where participants responded with ‘totally’ (i.e. the generated entailment definitely follows from the input) from all others. Note that this makes the evaluation conservative, since we focus only on the odds of receiving the most positive judgment in a given condition.
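The binomial coding of responses amounts to the following small sketch, which collapses the ordinal scale to 1 for ‘totally’ and 0 otherwise and reports the share of positive responses per condition. The function name `proportion_totally` and the input layout (a list of condition/response pairs) are assumptions for illustration; the mixed-effects modelling itself is done on the coded variable and is not reproduced here.

```python
from collections import defaultdict

def proportion_totally(judgments):
    """judgments: iterable of (condition, response) pairs.
    Codes each response as 1 if it is 'totally' and 0 otherwise, and returns
    the share of 1s per condition."""
    hits, totals = defaultdict(int), defaultdict(int)
    for condition, response in judgments:
        totals[condition] += 1
        hits[condition] += int(response == "totally")
    return {c: hits[c] / totals[c] for c in totals}
```

Treating ‘partly’ as a negative outcome is what makes this coding conservative: a condition only gets credit when the generated sentence is judged to follow fully from the input.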

We fitted logit mixed-effects models. All models included random intercepts for participants and items (premise IDs) and random slopes for participants and items by condition. Where a model did not converge, we dropped the by-items random slope term. All models were fitted and tested using the lme4 package in R (Bates et al., 2014).

The three-level input Condition factor significantly affected the odds of a positive (‘totally’) response (z = 8.06, p < .001).

We further investigated the impact of incorporating image features with or without text through planned comparisons. A mixed-effects model comparing the image-only to the text+image condition showed that the latter resulted in significantly better output as judged by our participants (z = 7.80, p < .001). However, the text+image model did not significantly outperform the text-only unimodal model, despite the higher percentage of positive responses in Figure 4 (z = 1.61, p > .1). This is consistent with the CIDEr and METEOR scores, which show that T+I-Merge outperforms the Unimodal model, but only marginally.

4.3 Analysis and discussion

Two sets of examples of the outputs of different models are provided in Table 2, corresponding to the images with premises and entailments shown in Figure 1.

These examples, which are quite typical, suggest that the IC model is simply generating a descriptive caption which only captures some entities in the image. We further noted that in a number of cases, the IC model also generates repetitive sentences that are not obviously related to the image. These are presumably due to predictions relying extensively on the language model itself, yielding stereotypical ‘captions’ which reflect frequent patterns in the data. Together, these reasons may account for the poor performance of the IC model on both the metric-based and the human evaluations.

The main difference between the Unimodal and the multimodal T+I models seems to be that they generate texts that correspond to different, but arguably valid, entailments of the premise. However, the fact remains that the ungrounded Unimodal model yields good results that are close to those of T+I-Merge. While humans judged the T+I-Merge entailments as better than the unimodal ones overall, this is not a significant difference, at least as far as the highest response category (‘totally’) is concerned.

These limitations of multimodal models echo previous findings. In image captioning, for example, it has been shown that multimodal models are surprisingly insensitive to changes in the visual input. For instance, Shekhar et al. (2017) found that VL models perform poorly on a task where they are required to distinguish between a ‘correct’ image (e.g. one that corresponds to a caption) and a completely unrelated foil image. Similarly, Tanti et al. (2019) find that image captioning models exhibit decreasing sensitivity to the input visual features as more of the caption is generated. In their experiments on multimodal NLI, Vu et al. (2018) also found that image features contribute relatively little to the correct classification of pairs as entailment, contradiction or neutral. These results suggest that current VL architectures do not exploit multimodal information effectively.

| Source | Sentence |
|---|---|
| P (Figure 1a) | Four guys in wheelchairs on a basketball court two are trying to grab a ball in midair. |
| Q (ref) | Four guys are playing basketball. |
| IC | A man is playing a game. |
| T+I-Init | Two men are jumping. |
| T+I-Merge | The basketball players are in the court. |
| Unimodal | The men are playing basketball. |
| P (Figure 1b) | A little girl walking down a dirt road behind a group of other walkers. |
| Q (ref) | The girl is not at the head of the pack of people. |
| IC | A man is wearing a hat. |
| T+I-Init | A young girl is walking down a path. |
| T+I-Merge | A group of people are walking down a road. |
| Unimodal | A girl is walking. |

Table 2: Premises and reference captions, with output examples. These test cases correspond to the images in Figure 1.

Beyond architectural considerations, however, there are also properties of the dataset which may account for why linguistic features play such an important role in generating hypotheses. We discuss two of these in particular.

V-SNLI is not (quite) multimodal SNLI hypotheses were elicited from annotators in a unimodal setting, without reference to the images. Although we believe that our assumption concerning the truth of entailments in relation to images holds (see Section 3.1), the manner in which the data was collected potentially results in hypotheses which are in large measure predictable from linguistic features, accounting for the good performance of the unimodal model. If correct, this also offers an explanation for the superior performance of the T+I-Merge model, compared to T+I-Init. In the former, we train the encoder language model separately, mixing image features at a late stage, thereby allocating more memory to linguistic features in the RNN, compared to T+I-Init, where image features are used to initialise the encoder. Apart from yielding lower perplexity (see Table 1), this allows the T+I-Merge model to exploit linguistic features to a greater extent than is possible in T+I-Init.

Linguistic biases Recently, a number of authors have expressed concerns that NLI models may be learning heuristics based on superficial syntactic features rather than classifying entailment relationships based on a deeper ‘understanding’ of the semantics of the input texts (McCoy et al., 2019). Indeed, studies on the SNLI dataset have shown that it contains several linguistic biases (Gururangan et al., 2018), such that the semantic relationship (entailment/contradiction/neutral) becomes predictable from textual features of the hypotheses alone, without reference to the premise. Gururangan et al. (2018) identify a ‘hard’ subset of SNLI where such biases are not present.

Many of these biases are due to a high degree of similarity between a premise and a hypothesis. For example, contradictions were often formulated by annotators by simply including a negation, while entailments are sometimes substrings of the premises, as in the following pair:

P: A bicyclist riding down the road wearing a helmet and a black jacket.

Q: A bicyclist riding down the road.

Such biases could also account for the non-significant difference in performance between the two best models, Unimodal and T+I-Merge (which also allocates all RNN memory to textual features, unlike T+I-Init). In either case, it is possible that a hypothesis is largely predictable from the textual premise, irrespective of the image, and generation boils down to ‘rewriting’ part of the premise to produce a similar string (see, e.g., the last example in Table 2).

If textual similarity is indeed playing a role, then we would expect a model to generate entailments (Qgen) with a better CIDEr score in those cases where there is a high degree of overlap between the premise and the reference hypothesis (Qref).

(a) Histogram of Dice overlap values
(b) CIDEr scores for Low vs High overlap test cases

Figure 5: Dice overlap vs CIDEr scores (T+I-Merge)

We operationalised overlap in terms of the Dice coefficient³, computed over the sets of words in P and Qref, after stop word removal.⁴ As shown by the histogram in Figure 5a, a significant proportion of the P-Qref pairs have a relatively high Dice coefficient ranging from 0.4 to 0.8. We divided test set instances into those with ‘low’ overlap (Dice ≤ 0.3; n = 536) and those with ‘high’ overlap (Dice > 0.3; n = 2755). Figure 5b displays the mean CIDEr score between entailments generated by the T+I-Merge model, and reference entailments, as a function of whether the reference entailment had high overlap with the premise.

Clearly, for those cases where the overlap between P and Qref was high, the T+I-Merge model obtains a higher CIDEr score between generated and reference outputs. This is confirmed by Pearson correlation coefficients between Dice coefficient and CIDEr, computed for each of the high/low overlap subsets: on the subset with high Dice overlap, we obtain a significant positive correlation (r = 0.20, p < .001); on the subset with low overlap, the correlation is far lower and does not reach significance (r = .06, p > .1).
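The overlap measure and the low/high split can be sketched in a few lines. The stop-word list below is a tiny hypothetical stand-in for the NLTK English list, and the punctuation stripping and helper names (`content_words`, `dice`, `overlap_bucket`) are assumptions made for the example.

```python
# Assumption: a small illustrative stop-word list, standing in for the
# built-in English list from NLTK.
STOPWORDS = {"a", "an", "the", "and", "of", "in", "on", "is", "are"}

def content_words(sentence):
    """Lowercased word set with trailing punctuation and stop words removed."""
    words = {w.strip(".,").lower() for w in sentence.split()}
    return words - STOPWORDS - {""}

def dice(premise, hypothesis):
    """Dice coefficient over the content-word sets of the two sentences."""
    p, q = content_words(premise), content_words(hypothesis)
    if not p and not q:
        return 0.0
    return 2 * len(p & q) / (len(p) + len(q))

def overlap_bucket(premise, hypothesis, threshold=0.3):
    """'high' if Dice exceeds the threshold used in the text, else 'low'."""
    return "high" if dice(premise, hypothesis) > threshold else "low"
```

For the bicyclist pair quoted earlier, the premise has eight content words under this stop-word list and the hypothesis four, all shared, giving a Dice value of 2 × 4 / (8 + 4) ≈ 0.67 and placing the pair in the high-overlap subset.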

5 Conclusion

This paper framed the NLI task as a generation task and compared the role of visual and linguistic features in generating entailments. To our knowledge, this was the first systematic attempt to compare unimodal and multimodal models for entailment generation.

³ $\mathrm{Dice}(P, Q_{ref}) = \dfrac{2 \times |P \cap Q_{ref}|}{|P| + |Q_{ref}|}$

⁴ Stopwords were removed using the built-in English stopword list in the Python NLTK library.

We find that grounding entailment generation in images is beneficial, but linguistic features also play a crucial role. Two reasons for this may be adduced. On the one hand, the data used was not elicited in a multimodal setting, despite the availability of images. It also contains linguistic biases, including relatively high degrees of similarity between premise and hypothesis pairs, which may result in additional image information being less important. On the other hand, it has become increasingly clear that Vision-Language NLP models are not grounding language in vision to the fullest extent possible. The present paper adds to this growing body of evidence.

Several avenues for future work are open, of which three are particularly important. First, in order to properly assess the contribution of grounded language models for entailment generation, it is necessary to design datasets in which the textual and visual modalities are complementary, rather than redundant with respect to each other. Second, while the present paper focused exclusively on entailments, future work should also consider generating contradictions. Finally, further research should investigate more sophisticated Vision-Language architectures, especially incorporating attention mechanisms.

Acknowledgments

The first author worked on this project while at the University of Malta, on the Erasmus Mundus European LCT Master Program. The third author was supported by the Endeavour Scholarship Scheme (Malta). We thank Raffaella Bernardi, Claudio Greco, Hoa Vutrong and our anonymous reviewers for their helpful comments.


References

Ion Androutsopoulos and Prodromos Malakasiotis. 2010. A survey of paraphrasing and textual entailment methods. Journal of Artificial Intelligence Research, 38:135–187.

Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural Machine Translation By Jointly Learning To Align and Translate. In Proc. ICLR’15.

Douglas Bates, Martin Maechler, and Ben Bolker. 2014. lme4: Linear mixed-effects models using S4 classes.

Raffaella Bernardi, Ruket Cakici, Desmond Elliott, Aykut Erdem, Erkut Erdem, Nazli Ikizler-Cinbis, Frank Keller, Adrian Muscat, and Barbara Plank. 2016. Automatic description generation from images: A survey of models, datasets, and evaluation measures. Journal of Artificial Intelligence Research, 55(1):409–442.

Samuel R. Bowman, Gabor Angeli, Christopher Potts, and Christopher D. Manning. 2015. A large annotated corpus for learning natural language inference. In Proc. EMNLP’15.

Qian Chen, Xiaodan Zhu, Zhenhua Ling, Si Wei, Hui Jiang, and Diana Inkpen. 2016. Enhanced LSTM for Natural Language Inference. arXiv, 1609.06038.

Robin Cooper, Dick Crouch, Jan Van Eijck, Chris Fox, Johan Van Genabith, Jan Jaspars, Hans Kamp, David Milward, Manfred Pinkal, Massimo Poesio, and Steve Pulman. 1996. Using the framework. Technical Report LRE 62-051 D-16, The FraCaS Consortium.

Ido Dagan, Bill Dolan, Bernardo Magnini, and Dan Roth. 2010. The fourth PASCAL recognizing textual entailment challenge. Natural Language Engineering.

Ido Dagan, Oren Glickman, and Bernardo Magnini. 2006. The PASCAL Recognising Textual Entailment Challenge. In J. Quinonero-Candela, I. Dagan, B. Magnini, and F. D’Alche-Buc, editors, Machine Learning Challenges, pages 177–190. Springer, Berlin and Heidelberg.

Jacob Devlin, Hao Cheng, Hao Fang, Saurabh Gupta, Li Deng, Xiaodong He, Geoffrey Zweig, and Margaret Mitchell. 2015. Language models for image captioning: The quirks and what works. In Proc. ACL’15.

Suchin Gururangan, Swabha Swayamdipta, Omer Levy, Roy Schwartz, Samuel R. Bowman, and Noah A. Smith. 2018. Annotation Artifacts in Natural Language Inference Data. arXiv, 1803.02324.

Stevan Harnad. 1990. The symbol grounding problem. Physica D, 42:335–346.

Lisa Anne Hendricks, Subhashini Venugopalan, Marcus Rohrbach, Raymond Mooney, Kate Saenko, and Trevor Darrell. 2016. Deep Compositional Captioning: Describing Novel Object Categories without Paired Training Data. In Proc. CVPR’16.

Diederik P. Kingma and Jimmy Lei Ba. 2014. Adam: A method for stochastic optimization. arXiv, abs/1412.6980.

Vladyslav Kolesnyk, Tim Rocktaschel, and Sebastian Riedel. 2016. Generating natural language inference chains. arXiv, abs/1606.01404.

Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A. Shamma, Michael S. Bernstein, and Li Fei-Fei. 2017. Visual genome: Connecting language and vision using crowdsourced dense image annotations. International Journal of Computer Vision, 123(1):32–73.

Alice Yingming Lai. 2018. Textual entailment from image caption denotation. PhD thesis, University of Illinois at Urbana-Champaign.

Alon Lavie and Abhaya Agarwal. 2007. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proc. ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, Michigan.

Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. 2015. Deep learning. Nature, 521(7553):436–444.

Siqi Liu, Zhenhai Zhu, Ning Ye, Sergio Guadarrama, and Kevin Murphy. 2016. Optimization of image description metrics using policy gradient methods. arXiv, 1612.00370.

Bryan A. Plummer, Liwei Wang, Chris M. Cervantes, Juan C. Caicedo, Julia Hockenmaier, and Svetlana Lazebnik. 2015. Flickr30k entities: Collecting region-to-phrase correspondences for richer image-to-sentence models. arXiv, abs/1505.04870.

Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, and Alan Yuille. 2015a. Deep Captioning with Multimodal Recurrent Neural Networks (m-RNN). In Proc. ICLR’15.

Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, and Alan Yuille. 2015b. Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images. In Proc. ICCV’15.

Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, and Alan L. Yuille. 2014. Explain images with multimodal recurrent neural networks. In Proc. NIPS’14.

Marco Marelli, Stefano Menini, Marco Baroni, Luisa Bentivogli, Raffaella Bernardi, and Roberto Zamparelli. 2014. A SICK cure for the evaluation of compositional distributional semantic models. In Proc. LREC’14.


R. Thomas McCoy, Ellie Pavlick, and Tal Linzen. 2019. Right for the Wrong Reasons: Diagnosing Syntactic Heuristics in Natural Language Inference. In Proc. ACL’19.

Nikita Nangia, Adina Williams, Angeliki Lazaridou, and Samuel R. Bowman. 2017. The RepEval 2017 Shared Task: Multi-Genre Natural Language Inference with Sentence Representations. In Proc. 2nd Workshop on Evaluating Vector Space Representations for NLP.

Ani Nenkova and Kathleen R. McKeown. 2011. Automatic Summarization. Foundations and Trends in Information Retrieval, 5(2-3):103–233.

Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. BLEU: a Method for Automatic Evaluation of Machine Translation. In Proc. ACL’02, Philadelphia, PA.

Paul Piwek and Kristy Elizabeth Boyer. 2012. Varieties of question generation: Introduction to this special issue. Dialogue and Discourse, 3(2):1–9.

Tim Rocktaschel, Edward Grefenstette, Karl Moritz Hermann, Tomas Kocisky, and Phil Blunsom. 2015. Reasoning about entailment with neural attention. arXiv, abs/1509.06664.

Deb Roy. 2005. Semiotic schemas: A framework for grounding language in action and perception. Artificial Intelligence, 167(1-2):170–205.

Mark Sammons, Vinod Vydiswaran, and Dan Roth. 2012. Recognising Textual Entailment. In Daniel M. Bikel and Imed Zitouni, editors, Multilingual Natural Language Processing, pages 209–281. IBM Press, Westford, MA.

Ravi Shekhar, Sandro Pezzelle, Yauhen Klimovich, Aurelie Herbelot, Moin Nabi, Enver Sangineto, and Raffaella Bernardi. 2017. FOIL it! Find One mismatch between Image and Language caption. In Proc. ACL’17.

Advaith Siddharthan. 2014. A survey of research on text simplification. International Journal of Applied Linguistics, 165(2):259–298.

Karen Simonyan and Andrew Zisserman. 2014. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv, 1409.1556.

Janez Starc and Dunja Mladenic. 2017. Constructing a Natural Language Inference dataset using generative neural networks. Computer Speech and Language, 46:94–112.

Ilya Sutskever, Oriol Vinyals, and Quoc V. Le. 2014. Sequence to sequence learning with neural networks. In Proc. NIPS’14.

M. Tanti, A. Gatt, and K. Camilleri. 2018. Where to put the image in an image caption generator. Natural Language Engineering, 24:467–489.

M. Tanti, A. Gatt, and K. Camilleri. 2019. Quantifying the amount of visual information used by neural caption generators. In Proc. Workshop on Shortcomings in Vision and Language, pages 124–132.

Ramakrishna Vedantam, C. Lawrence Zitnick, and Devi Parikh. 2015. CIDEr: Consensus-based image description evaluation. In Proc. CVPR’15.

Hoa Trong Vu, Claudio Greco, Aliia Erofeeva, Somayeh Jafaritazehjani, Guido Linders, Marc Tanti, Alberto Testoni, Raffaella Bernardi, and Albert Gatt. 2018. Grounded textual entailment. In Proc. COLING’18.

Josiah Wang, Pranava Madhyastha, and Lucia Specia. 2018. Object Counts! Bringing Explicit Detections Back into Image Captioning. In Proc. NAACL-HLT’18.

Zhiguo Wang, Wael Hamza, and Radu Florian. 2017. Bilateral Multi-Perspective Matching for Natural Language Sentences. In Proc. IJCAI’17.

Adina Williams, Nikita Nangia, and Samuel R. Bowman. 2018. A Broad-Coverage Challenge Corpus for Sentence Understanding through Inference. In Proc. NAACL-HLT’18.

Ning Xie, Farley Lai, Derek Doran, and Asim Kadav. 2019. Visual Entailment: A Novel Task for Fine-Grained Image Understanding. arXiv, 1901.06706v1.

Peter Young, Alice Lai, Micah Hodosh, and Julia Hockenmaier. 2014. From image descriptions to visual denotations: New similarity metrics for semantic inference over event descriptions. Transactions of the Association for Computational Linguistics, 2:67–78.