neural controller of dc motor

ganesan-ganesh

NEURAL NETWORK CONTROLLER FOR DC MOTOR USING MATLAB APPLICATION

ABSTRACT

The purpose of this study is to control the speed of a direct current (DC) motor with an Artificial Neural Network (ANN) controller using the MATLAB application. The ANN controller is designed and tuned so that the simulation results can be compared with the experimental results. The scope includes the simulation and modeling of the DC motor, the implementation of the ANN controller on an actual DC motor, and the comparison of the MATLAB simulation results with the experimental results. This research introduces the ability of the ANN to estimate and control the speed of a separately excited direct current (SEDC) motor. In this project, the ANN controller is programmed to hold the speed of the DC motor at a set level. A sensor detects the motor speed, and its reading is fed back to the ANN controller, which compares the desired output with the measured output.

TABLE OF CONTENTS

CHAPTER TITLE

ABSTRACT

TABLE OF CONTENTS

LIST OF TABLES

LIST OF FIGURES

1 INTRODUCTION

1.1 General Introduction to Motor Drives

1.1.1 DC Motor Drives

1.2 Permanent Magnet Direct Current (PMDC) Motor

1.2.1 Introduction

1.2.2 Classification of PM Motor

1.2.3 Brushless DC (BLDC) Motor

1.2 Problem Statement

1.3 Problems encountered and solutions

1.4 Objectives

1.5 Scopes

2 LITERATURE REVIEW

2.1 Artificial Neural Network Controller

Introduction

2.1.2 Classification of ANNs

2.1.3 Neuron Structures

2.1.3.1 Neurons

2.1.3.2 Artificial Neural Networks

2.1.4 Learning Algorithm

2.1.4.1 Back Propagation Algorithm

2.1.5 Application of Neural Network

2.1.6 Neural Network Software

2.2 Matlab 7.5

3 METHODOLOGY

3.1 Methodology

3.2 PMDC Motor

3.2.1 Modeling of DC Motor

3.2.1.1 Schematic Diagram

3.2.1.2 Equations

3.2.1.3 S-Domain block diagram of PMDC Motors

3.2.1.4 Values of motor modeling component

3.2.2 Clifton Precision

3.2.2.1 Features of Driver Motor

3.2.3.1 MOSFET-Drive Driver Motor

Data Acquisition Card (DAQ Card)

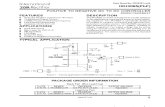

3.2.4.1 PCI1710HG

3.3 Step Response of control system

3.3.1 Step response

3.3.2 Step response formula analysis

3.3.2.1 Maximum Overshoot

3.3.2.2 Settling Time

3.3.2.3 Rise Time

3.3.2.5 Steady-state error

3.4 Model Reference Controller

3.4.1 Steps to generate Model Reference Controller

3.4.1.1 Steps 1

3.4.1.2 Steps 2

3.4.1.3 Steps 3

3.4.1.4 Steps 4

3.4.1.5 Steps 5

3.5 Interfacing of Matlab Simulink with DAQ Card

3.5.1 DAQ Card Interfacing

4 RESULT AND DISCUSSION

4.1 Simulation Result

4.1.1 The result without Neural Network Controller

4.1.1.1 Simulink Structure Graph

4.1.2 The result with Neural Network Controller

4.1.2.1 Simulink Structure

4.1.2.3 Graph

4.2 Discussion

Comparison between without controller and with

controller

Result without Neural Network controller

Result with Neural Network controller

Disturbance factor

5 COSTING AND COMMERCIALIZATION, CONCLUSION AND RECOMMENDATION

5.1 Costing and commercialization

5.1.1 Costing

5.1.2 Commercialization

5.2 Conclusion

5.3 Recommendation

REFERENCES

APPENDIX

LIST OF TABLES

TABLE TITLE

1 Values of motor modeling component

2 Clifton Precision Technical Data

3 Total cost of project

LIST OF FIGURES

FIGURE TITLE

1 Simple neural network

2 Example of a two-layer neural network

3 Developed system with PC interfacing through DAQ card

4 Simulink Block Diagram of DC motor with Artificial Neural

Network (ANN) controller

5 Basic structure of DC motor

6 Schematic Diagram

7 Block diagram of permanent magnet DC motors

8 Driver IR2109 circuit diagram

9 PCI1710HG

10 Typical Response of a control system

11 Figure 1

12 Figure 2

13 Figure 3

14 Figure 4

15 Figure 5

16 Figure 6

17 Figure 7

18 Figure 8

19 Figure 9

20 Figure 10

21 Figure 11

22 Figure 12

23 Figure 13

24 Figure 14

25 Figure 15

26 Figure 16

27 Complete system of ANN Controller

28 Figure 17

29 Figure 18

30 Figure 19

30 Simulink Structure

31 DC Motor Modeling Simulink Block diagram

32 Graph of result without Neural Network Controller

33 Simulink Structure of ANN Controller

34 Figure 20

35 From DC motor modeling box

36 Graph with ANN Controller

37 Figure 21

38 Figure 22

39 Figure 23

40 Figure 24

LIST OF SYMBOLS/ABBREVIATIONS

ANN - Artificial Neural Network

BLDC - Brushless Direct Current

DAQ - Data Acquisition Card

DC - Direct Current

SEDC - Separately Excited Direct Current

LIST OF APPENDICES

CHAPTER 1

INTRODUCTION

1.1 General Introduction to DC Motor Drives

1.1.1 DC Motor Drives

Conventional direct current electric machines and alternating current induction and synchronous electric machines have traditionally been the three cornerstones serving daily electric motor needs, from small household appliances to large industrial plants.

Recent technological advances in computing power and motor drive systems have allowed an even further increase in application demands on electric motors. Over the years, even though AC power systems have clearly won out over DC systems, DC motors have continued to make up a significant fraction of the machinery purchased each year.

There are several reasons for the continued popularity of DC motors. One is that DC power systems are still common in cars and trucks. Another is that DC motors suit applications in which wide variations in speed are needed. Most DC machines are like AC machines in that they have AC voltages and currents within them; DC machines have a DC output only because a mechanism exists that converts the internal AC voltages to DC voltages at their terminals.

The greatest advantage of DC motors may be speed control. Since speed is

directly proportional to armature voltage and inversely proportional to the

magnetic flux produced by the poles, adjusting the armature voltage and/or the

field current will change the rotor speed. Today, adjustable frequency drives can

provide precise speed control for AC motors, but they do so at the expense of

power quality, as the solid-state switching devices in the drives produce a rich

harmonic spectrum. The DC motor has no adverse effects on power quality.
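The proportionality stated above can be written out explicitly. As a sketch in standard steady-state notation (the symbols $V_a$, $i_a$, $R_a$, $K$, $\phi$ and $\omega$ are assumptions introduced here for illustration and do not come from the original report), the rotor speed is

$$\omega = \frac{V_a - i_a R_a}{K\phi}$$

where $V_a$ is the armature voltage, $i_a R_a$ the armature resistance drop, and $K\phi$ the machine constant times the pole flux. When the drop $i_a R_a$ is small compared with $V_a$, this reduces to $\omega \approx V_a / (K\phi)$, which is the direct dependence on armature voltage and inverse dependence on flux described in the text.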

1.2 DC motor

1.2.1 Introduction

A DC motor uses the attraction and repulsion between magnets to convert electricity into motion. As the magnets within the DC motor attract and repel one another, the motor turns.

A DC motor requires at least one electromagnet. The current through this electromagnet is switched as the motor turns, changing its polarity to keep the motor running. The other magnet or magnets can either be permanent magnets or other electromagnets. Often, the electromagnet is located in the center of the motor and turns within the permanent magnets, but this arrangement is not necessary.

To imagine a simple DC motor, think of a wheel divided into two halves between two magnets. The wheel of the DC motor in this example is the electromagnet. The two outer magnets are permanent, one presenting a north pole to the wheel and the other a south pole. For this example, let us assume that the left magnet presents a south pole and the right magnet presents a north pole.

Electrical current is supplied to the coils of wire on the wheel within the DC motor. This electrical current causes a magnetic force. To make the DC motor turn, the wheel must have a south pole on the side with the south permanent magnet and a north pole on the side with the north permanent magnet. Because like poles repel and opposite poles attract, the wheel will turn so that its south side rolls around to the right, where the north permanent magnet is, and the wheel's north side will roll to the left, where the south permanent magnet is. The magnetic force causes the wheel to turn, and this motion can be used to do work.

When the sides of the wheel reach the place of strongest attraction, the electric current is switched, making the wheel change polarity. The side that was north becomes south, and the side that was south becomes north. The magnetic forces are out of alignment again, and the wheel keeps rotating. As the DC motor spins, it continually changes the flow of electricity to the inner wheel, so the magnetic forces continue to cause the wheel to rotate.

DC motors are used for a variety of purposes, including electric razors, electric car windows, and remote control cars. The simple design and reliability of a DC motor make it a good choice for many different uses, as well as a fascinating way to study the effects of magnetic fields.

Advantages of DC motor:

Ease of control

Deliver high starting torque

Near-linear performance

Disadvantages:

High maintenance

Large and expensive (compared to induction motor)

Not suitable for high-speed operation due to commutator and brushes

Not suitable in explosive or very clean environment

1.2.2 Classification of DC Motor

Separately excited DC motor

Self-excited DC motor

These are further classified into several types:

DC shunt motor

DC series motor

Brushless DC motor

Compound motor

1.2.3 Brushless DC (BLDC) Motor

A brushless DC (BLDC) motor is a synchronous electric motor which is powered by direct-current (DC) electricity and which has an electronically controlled commutation system instead of a mechanical commutation system based on brushes. In such motors, current and torque, and voltage and rpm, are linearly related. Two sub-types of BLDC motor are in use: the stepper motor type, which may have more poles on the stator, and the reluctance motor.

In a conventional (brushed) DC motor, the brushes make mechanical contact with a set of electrical contacts on the rotor, called the commutator, forming an electrical circuit between the DC electrical source and the armature coil windings. As the armature rotates on its axis, the stationary brushes come into contact with different sections of the rotating commutator. The commutator and brush system form a set of electrical switches, each firing in sequence, such that electrical power always flows through the armature coil closest to the permanent magnet that is used as a stationary stator.

In a BLDC motor, the electromagnets do not move; instead, the permanent magnets rotate and the armature remains static. This gets around the problem of how to transfer current to a moving armature. The brush-system/commutator assembly is replaced by an electronic controller. The controller performs the same power distribution found in a brushed DC motor, but using a solid-state circuit rather than a commutator/brush system.

In this motor, the mechanical "rotating switch" or commutator/brush gear assembly is replaced by an external electronic switch synchronized to the rotor's position. Brushless motors are typically 85-90% efficient, whereas DC motors with brush gear are typically 75-80% efficient. BLDC motors also have several advantages over brushed DC motors, including higher efficiency and reliability, reduced noise, longer lifetime (there are no brushes to erode), elimination of ionizing sparks from the commutator, and an overall reduction of electromagnetic interference (EMI).

With no windings on the rotor, the windings are not subjected to centrifugal forces, and because the electromagnets are located around the perimeter, they can be cooled by conduction to the motor casing, requiring no airflow inside the motor for cooling. This means that the motor's internals can be entirely enclosed and protected from dirt or other foreign matter.

The maximum power that can be applied to a BLDC motor is exceptionally

high, limited almost exclusively by heat, which can damage the magnets. BLDC's

main disadvantage is higher cost, which arises from two issues. First, BLDC

motors require complex electronic speed controllers to run. Brushed DC motors

can be regulated by a comparatively trivial variable resistor (potentiometer or

rheostat), which is inefficient but also satisfactory for cost-sensitive applications.

Second, many practical uses have not been well developed in the commercial

sector.

BLDC motors are considered to be more efficient than brushed DC motors.

This means that for the same input power, a BLDC motor will convert more

electrical power into mechanical power than a brushed motor, mostly due to the

absence of friction of brushes.

The enhanced efficiency is greatest in the no-load and low-load region of the motor's performance curve. Under high mechanical loads, BLDC motors and high-quality brushed motors are comparable in efficiency. Brushless DC motors are commonly used where precise speed control is necessary, as in computer disk drives, video cassette recorders, and the spindles within CD drives.

1.2.4 Separately Excited DC Motor

A separately excited speed-controlled D.C. motor which has constant

armature current and pulse-controlled field current has its armature connected by

way of a bridge rectifier and an inductor to an A.C. source of substantially greater

r.m.s. voltage than the rated supply voltage of the armature, the inductor serving to

regulate the armature current. The field winding is connected to a field excitation

supply and to a thyristor chopper and the mean current through the field winding

controlled by the thyristor chopper thereby to control the armature torque and

therefore the speed. The field winding may comprise two field coils arranged to

produce opposing magnetic fields, the thyristor chopper serving to control the net

magnitude and direction of the motor field. A thyristor chopper may be provided

in the armature circuit to maintain a constant armature current during plugging of

the motor.

1.3. OPERATION

• When a separately excited motor is excited by a field current if and an armature current ia flows in the circuit, the motor develops a back emf and a torque to balance the load torque at a particular speed.

• The field current if is independent of the armature current ia. Each winding is supplied separately, so any change in the armature current has no effect on the field current.

• The field current if is normally much less than the armature current ia.
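The operating point described in the points above can be sketched numerically. The block below assumes the usual steady-state model of a separately excited motor (torque proportional to armature current, back emf proportional to speed, fixed field so that the product `k_phi` is constant). The function name, the parameter names and the numeric values are hypothetical and chosen only for illustration; they are not the motor data used in this project.

```python
def steady_state(v_a, t_load, r_a, k_phi):
    """Return (armature current, back emf, speed) at steady state.

    Assumed model (not taken from the original report):
        torque    T   = k_phi * i_a
        back emf  e_b = k_phi * omega
        armature  v_a = e_b + i_a * r_a
    """
    i_a = t_load / k_phi      # torque balance: motor torque equals load torque
    e_b = v_a - i_a * r_a     # back emf left after the armature resistance drop
    omega = e_b / k_phi       # speed in rad/s
    return i_a, e_b, omega


# Hypothetical values, for illustration only.
i_a, e_b, omega = steady_state(v_a=24.0, t_load=0.5, r_a=1.0, k_phi=0.1)
print(i_a, e_b, omega)
```

Raising `t_load` in this sketch raises the armature current and lowers the speed, while the field current (folded into `k_phi`) is unaffected, which matches the independence of if and ia stated above.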

1.4 Problem Statement

When dealing with a DC motor, the problems encountered are efficiency and losses. For a DC motor to function efficiently on a job, it must have a suitable controller. Many types of controller are available nowadays. Non-adaptive control systems have fixed parameters that are used to control a system, and these types of controllers have proven to be very successful in controlling linear, or almost linear, systems. The ANN controller is chosen to interface with the DC motor in this project because, unlike a fixed-parameter controller, its weights can be adjusted through training.

1.5 Problems encountered and solutions

Problems encountered:

i) Control of DC motor speed;

ii) Interface of DC motor with software (MATLAB/SIMULINK);

iii) Acquisition of data from the DC motor.

Solutions:

i) Use of an ANN controller in the system;

ii) Implementation of a DAQ card on the control board;

iii) Use of an encoder from the DC motor to the control board.

1.6 Objectives

The objective of the Artificial Neural Network Controller Design for DC Motor using MATLAB Application project is to control the speed of a DC motor with an ANN controller in MATLAB, where the design of the ANN controller is provided and can be tuned. Each experimental result must be compared with the simulation result, so as to find the closest approximation that can be achieved in this system.

1.7 Scopes

The scope of this research covers:

i) Simulation and Modeling of DC motor;

ii) Implementation of ANN controller to actual DC motor;

iii) Comparison of MATLAB simulation result with the experimental result.

CHAPTER 2

LITERATURE REVIEW

2.1 Artificial Neural Network Controller

2.1.1 Introduction

Nowadays, the field of electrical power system control in general, and motor

control in particular, is being researched broadly. New technologies are

applied in order to design complicated systems. One of these new

technologies is the Artificial Neural Network (ANN), which is based on the

operating principle of the human nervous system. It is composed of a large number

of highly interconnected processing elements (neurons) working in unison to solve

specific problems. ANNs, like people, learn by example. An ANN is configured

for a specific application, such as pattern recognition or data classification,

through a learning process. Learning in biological systems involves adjustments to

the synaptic connections that exist between the neurons. This is true of ANNs as

well.

2.1.2 NETWORKS

One efficient way of solving complex problems is following the maxim

“divide and conquer”. A complex system may be decomposed into simpler

elements, in order to be able to understand it. Also simple elements may be

gathered to produce a complex system (Bar Yam, 1997). Networks are one

approach for achieving this. There are a large number of different types of

networks, but they all are characterized by the following components: a set of

nodes, and connections between nodes.

The nodes can be seen as computational units. They receive inputs, and

process them to obtain an output. This processing might be very simple (such as

summing the inputs), or quite complex (a node might contain another network).

The connections determine the information flow between nodes. They can be

unidirectional, when the information flows only in one direction, or bidirectional,

when the information flows in either direction. The interactions of nodes through the

connections lead to a global behaviour of the network, which cannot be observed

in the elements of the network. This global behaviour is said to be emergent. This

means that the abilities of the network supersede the ones of its elements, making

networks a very powerful tool.

Networks are used to model a wide range of phenomena in physics,

computer science, biochemistry, ethology, mathematics, sociology, economics,

telecommunications, and many other areas. This is because many systems can be

seen as a network: proteins, computers, communities, etc. Which other systems

could you see as a network? Why?

2.1.3 Artificial neural networks

One type of network sees the nodes as ‘artificial neurons’. These are called

artificial neural networks (ANNs). An artificial neuron is a computational model

inspired by natural neurons. Natural neurons receive signals through synapses

located on the dendrites or membrane of the neuron. When the signals received are

strong enough (surpass a certain threshold), the neuron is activated and emits a

signal through the axon. This signal might be sent to another synapse, and might

activate other neurons.

Figure 1. Natural neurons (artist’s conception).

The complexity of real neurons is highly abstracted when modelling

artificial neurons. These basically consist of inputs (like synapses), which are

multiplied by weights (strength of the respective signals), and then computed by a

mathematical function which determines the activation of the neuron. Another

function (which may be the identity) computes the output of the artificial neuron

(sometimes depending on a certain threshold). ANNs combine artificial

neurons in order to process information.

The higher a weight of an artificial neuron is, the stronger the input which

is multiplied by it will be. Weights can also be negative, so we can say that the

signal is inhibited by the negative weight. Depending on the weights, the

computation of the neuron will be different. By adjusting the weights of an

artificial neuron we can obtain the output we want for specific inputs. But when

we have an ANN of hundreds or thousands of neurons, it would be quite

complicated to find by hand all the necessary weights. But we can find algorithms

which can adjust the weights of the ANN in order to obtain the desired output

from the network. This process of adjusting the weights is called learning or

training.

The number of types of ANNs and their uses is very high. Since the first

neural model by McCulloch and Pitts (1943), hundreds of different models

considered as ANNs have been developed. The differences between them might be the

functions, the accepted values, the topology, the learning algorithms, etc. Also

there are many hybrid models where each neuron has more properties than the

ones we are reviewing here.

For reasons of space, we will present only an ANN which learns the

appropriate weights using the backpropagation algorithm (Rumelhart and

McClelland, 1986), since it is one of the most common models used

in ANNs, and many others are based on it. Since the function of ANNs is to

process information, they are used mainly in fields related to it. There are a

wide variety of ANNs that are used to model real neural networks, and study

behaviour and control in animals and machines, but also there are ANNs which are

used for engineering purposes, such as pattern recognition, forecasting, and data

compression.

2.1.4 Neuron Model

Simple Neuron

A neuron with a single scalar input and no bias appears on the left below.

The scalar input p is transmitted through a connection that multiplies its

strength by the scalar weight w, to form the product wp, again a scalar. Here

the weighted input wp is the only argument of the transfer function f, which

produces the scalar output a. The neuron on the right has a scalar bias, b. You

may view the bias as simply being added to the product wp as shown by the

summing junction or as shifting the function f to the left by an amount b. The

bias is much like a weight, except that it has a constant input of 1.

The transfer function net input n, again a scalar, is the sum of the weighted

input wp and the bias b. This sum is the argument of the transfer function f. Here f

is a transfer function, typically a step function or a sigmoid function, which takes

the argument n and produces the output a.
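The single-input neuron described above can be sketched in a few lines. This is an illustrative Python version, not toolbox code: the names `neuron` and `logsig` and the numeric inputs are assumptions made for the example, and the log-sigmoid is used only as one possible choice for f.

```python
import math


def logsig(n):
    """Log-sigmoid transfer function: squashes n into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-n))


def neuron(p, w, b=0.0, f=logsig):
    """Single-input neuron: a = f(w*p + b)."""
    n = w * p + b   # net input: weighted input plus bias
    return f(n)


# Same input and weight, without and with a bias (hypothetical values).
a_no_bias = neuron(p=2.0, w=0.5)
a_bias = neuron(p=2.0, w=0.5, b=-1.0)
print(a_no_bias, a_bias)
```

Changing `w` or `b` changes the output for the same input `p`, which is the sense in which both are adjustable parameters of the neuron.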

Examples of various transfer functions are given in the next section. Note

that w and b are both adjustable scalar parameters of the neuron. The central idea

of neural networks is that such parameters can be adjusted so that the network

exhibits some desired or interesting behavior. Thus, we can train the network to do

a particular job by adjusting the weight or bias parameters, or perhaps the network

itself will adjust these parameters to achieve some desired end.

Transfer Functions

Many transfer functions are included in this toolbox. A complete list of

them can be found in “Transfer Function Graphs” in Chapter 14. Three of the most

commonly used functions are shown below.

Hard-Limit Transfer Function

The hard-limit transfer function shown above limits the output of the

neuron to either 0, if the net input argument n is less than 0; or 1, if n is greater

than or equal to 0. We will use this function in Chapter 3 “Perceptrons” to create

neurons that make classification decisions. The toolbox has a function, hardlim, to

realize the mathematical hard-limit transfer function shown above. Try the code

shown below.

It produces a plot of the function hardlim over the range -5 to +5.

All of the mathematical transfer functions in the toolbox can be realized with

a function having the same name.

The linear transfer function is shown below.

Linear Transfer Function

The sigmoid transfer function shown below takes the input, which may have

any value between plus and minus infinity, and squashes the output into the

range 0 to 1.

Log-Sigmoid Transfer Function

This transfer function is commonly used in backpropagation networks, in

part because it is differentiable.

The symbol in the square to the right of each transfer function graph shown

above represents the associated transfer function. These icons will replace the

general f in the boxes of network diagrams to show the particular transfer

function being used.
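As a rough stand-in for the three transfer functions just described, the following Python sketch evaluates each one over the range -5 to +5. The function names mirror the toolbox names `hardlim`, `purelin` and `logsig`, but these are plain re-implementations written for this example, not the toolbox functions themselves, and the step size of 1 is an arbitrary choice.

```python
import math


def hardlim(n):
    """Hard-limit: 0 if n is less than 0, 1 if n is greater than or equal to 0."""
    return 1.0 if n >= 0 else 0.0


def purelin(n):
    """Linear: the output equals the net input."""
    return n


def logsig(n):
    """Log-sigmoid: 1 / (1 + exp(-n)), output strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-n))


# Evaluate each function on the range -5 to +5 in steps of 1.
ns = [float(n) for n in range(-5, 6)]
table = {f.__name__: [f(n) for n in ns] for f in (hardlim, purelin, logsig)}
for name, values in table.items():
    print(name, [round(v, 3) for v in values])
```

The printed rows show the jump of the hard limit at n = 0, the straight line of the linear function, and the smooth, bounded curve of the log-sigmoid.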

2.2 NETWORK ARCHITECTURES

Two or more of the neurons shown earlier can be combined in a layer, and

a particular network could contain one or more such layers. First consider a

single layer of neurons.

2.2.1 A Layer of Neurons

A one-layer network with R input elements and S neurons follows.

In this network, each element of the input vector p is connected to each

neuron input through the weight matrix W. The ith neuron has a summer that

gathers its weighted inputs and bias to form its own scalar output n(i). The various

n(i) taken together form an S-element net input vector n. Finally, the neuron layer

outputs form a column vector a. We show the expression for a at the bottom of the

figure. Note that it is common for the number of inputs to a layer to be different

from the number of neurons (i.e., R ≠ S). A layer is not constrained to have the

number of its inputs equal to the number of its neurons.

You can create a single (composite) layer of neurons having different

transfer functions simply by putting two of the networks shown earlier in parallel.

Both networks would have the same inputs, and each network would create some

of the outputs.

The input vector elements enter the network through the weight matrix W.

Note that the row indices on the elements of matrix W indicate the

destination neuron of the weight, and the column indices indicate which source is

the input for that weight. Thus, the indices in $w_{1,2}$ say that the strength of the

signal from the second input element to the first (and only) neuron is $w_{1,2}$.

The S neuron R input one-layer network also can be drawn in abbreviated

notation.

Here p is an R length input vector, W is an SxR matrix, and a and b are S

length vectors. As defined previously, the neuron layer includes the weight

matrix, the multiplication operations, the bias vector b, the summer, and the

transfer function boxes.
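The layer computation described in this section, a = f(Wp + b), can be sketched as follows. This is an illustrative Python version using plain lists; the helper names `layer` and `logsig`, the sizes R = 3 and S = 2, and all numeric values are assumptions made for the example.

```python
import math


def logsig(n):
    """Log-sigmoid transfer function."""
    return 1.0 / (1.0 + math.exp(-n))


def layer(W, p, b, f=logsig):
    """One layer of S neurons with R inputs: a = f(W p + b).

    W is an S x R matrix given as a list of S rows, p has R elements,
    and b has S elements.
    """
    assert len(W) == len(b) and all(len(row) == len(p) for row in W)
    # Row i of W holds the weights into neuron i; column j comes from input j.
    n = [sum(w_ij * p_j for w_ij, p_j in zip(row, p)) + b_i
         for row, b_i in zip(W, b)]
    return [f(n_i) for n_i in n]


# Hypothetical example with R = 3 inputs and S = 2 neurons.
W = [[0.5, -1.0, 0.0],
     [1.0, 1.0, 1.0]]
b = [0.0, -3.0]
p = [2.0, 1.0, 0.0]
a = layer(W, p, b)
print(a)
```

The output vector `a` has S elements regardless of R, which illustrates that the number of inputs to a layer need not equal the number of its neurons.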

2.2.2 Multiple Layers of Neurons

A network can have several layers. Each layer has a weight matrix W, a

bias vector b, and an output vector a. To distinguish between the weight matrices,

output vectors, etc., for each of these layers in our figures, we append the number

of the layer as a superscript to the variable of interest. You can see the use of this

layer notation in the three-layer network shown below, and in the equations at the

bottom of the figure.

The network shown above has R1 inputs, S1 neurons in the first layer, S2

neurons in the second layer, etc. It is common for different layers to have different

numbers of neurons. A constant input 1 is fed to the biases for each neuron.

Note that the outputs of each intermediate layer are the inputs to the

following layer. Thus layer 2 can be analyzed as a one-layer network with S1

inputs, S2 neurons, and an S2xS1 weight matrix W2. The input to layer 2 is a1; the

output is a2. Now that we have identified all the vectors and matrices of layer 2,

we can treat it as a single-layer network on its own. This approach can be taken

with any layer of the network.

The layers of a multilayer network play different roles. A layer that

produces the network output is called an output layer. All other layers are called

hidden layers. The three-layer network shown earlier has one output layer (layer 3)

and two hidden layers (layer 1 and layer 2). Some authors refer to the inputs

as a fourth layer. We will not use that designation.

The same three-layer network discussed previously also can be drawn using

our abbreviated notation.

Multiple-layer networks are quite powerful. For instance, a network of two

layers, where the first layer is sigmoid and the second layer is linear, can be

trained to approximate any function (with a finite number of discontinuities)

arbitrarily well.

Here we assume that the output of the third layer, a3, is the network output

of interest, and we have labeled this output as y. We will use this notation to

specify the output of multilayer networks.
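The chaining of layers described above, where the output of each layer is the input to the next and the last output is labelled y, can be sketched as a loop. This Python version is illustrative only: the helper names, the layer sizes (2 inputs, then 3, 2 and 1 neurons) and every weight and bias are hypothetical values chosen so that the result is easy to check by hand.

```python
import math


def logsig(n):
    """Log-sigmoid transfer function."""
    return 1.0 / (1.0 + math.exp(-n))


def purelin(n):
    """Linear transfer function."""
    return n


def layer(W, p, b, f):
    """One layer: a = f(W p + b), with W given as a list of rows."""
    return [f(sum(w * x for w, x in zip(row, p)) + b_i)
            for row, b_i in zip(W, b)]


def forward(layers, p):
    """Apply (W, b, f) tuples in order and return every layer's output."""
    outputs = []
    a = p
    for W, b, f in layers:
        a = layer(W, a, b, f)   # this layer's output feeds the next layer
        outputs.append(a)
    return outputs


# Hypothetical three-layer network: 2 inputs, S1 = 3, S2 = 2, S3 = 1.
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0], logsig),  # W1: 3x2
    ([[1.0, -1.0, 0.0], [0.0, 0.0, 2.0]], [0.0, -1.0], logsig),       # W2: 2x3
    ([[2.0, 2.0]], [0.5], purelin),                                   # W3: 1x2
]
a1, a2, a3 = forward(layers, [0.0, 0.0])
y = a3   # network output of interest
print(a1, a2, y)
```

Layer 2 here is exactly a one-layer network with S1 = 3 inputs and S2 = 2 neurons, so the same `layer` function serves every stage.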

2.2.3 NN Predictive Control

The neural network predictive controller that is implemented in the Neural

Network Toolbox uses a neural network model of a nonlinear plant to predict

future plant performance. The controller then calculates the control input that will

optimize plant performance over a specified future time horizon. The first step in

model predictive control is to determine the neural network plant model (system

identification). Next, the plant model is used by the controller to predict future

performance.

The following section describes the system identification process. This is

followed by a description of the optimization process. Finally, it discusses how to

use the model predictive controller block that has been implemented in Simulink.

System Identification

The first stage of model predictive control is to train a neural network to

represent the forward dynamics of the plant. The prediction error between the

plant output and the neural network output is used as the neural network training

signal. The process is represented by the following figure.

The neural network plant model uses previous inputs and previous plant

outputs to predict future values of the plant output. The structure of the neural

network plant model is given in the following figure.

This network can be trained offline in batch mode, using data collected from

the operation of the plant.
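The identification step can be illustrated with a deliberately small example. The sketch below replaces the neural network plant model with a single linear neuron and the real motor with a hypothetical first-order plant, so it only shows the idea of training offline, in batch mode, on the prediction error; the plant coefficients, learning rate and epoch count are all assumptions made for the example.

```python
import random


def plant(y, u):
    """Hypothetical first-order plant standing in for the real system."""
    return 0.9 * y + 0.1 * u


# 1. Collect data from the operation of the plant.
random.seed(0)
data, y = [], 0.0
for _ in range(200):
    u = random.uniform(-1.0, 1.0)
    y_next = plant(y, u)
    data.append((y, u, y_next))   # previous output, previous input, next output
    y = y_next

# 2. Train the model offline in batch mode on the prediction error.
w_y, w_u, lr = 0.0, 0.0, 1.0
for _ in range(2000):
    g_y = g_u = 0.0
    for y_k, u_k, target in data:
        err = target - (w_y * y_k + w_u * u_k)   # plant output minus model output
        g_y += err * y_k
        g_u += err * u_k
    w_y += lr * g_y / len(data)
    w_u += lr * g_u / len(data)

print(w_y, w_u)
```

After training, the model weights approach the coefficients of the hypothetical plant, so the model can predict the next plant output from the previous output and input.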

2.2.4 Predictive Control

The following block diagram illustrates the model predictive control

process. The controller consists of the neural network plant model and the

optimization block. The optimization block determines the values of the control input that minimize

the performance criterion, and then the optimal control input is applied to the plant. The controller block has been

implemented in Simulink, as described in the following section.
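The predictive control loop can be sketched in the same spirit. The block below is not the Simulink controller block: it assumes a hypothetical linear plant model, a cost made of squared tracking errors over the horizon plus a penalty on changes in the control input, and a simple grid search in place of the optimization block. All names and numbers are illustrative assumptions.

```python
def model(y, u, w_y=0.9, w_u=0.1):
    """Hypothetical identified plant model: predicts the next output."""
    return w_y * y + w_u * u


def cost(y0, u, u_prev, ref, horizon, rho):
    """Squared tracking error over the horizon plus a control-change penalty."""
    y, j = y0, 0.0
    for _ in range(horizon):
        y = model(y, u)           # predicted future plant output
        j += (ref - y) ** 2
    return j + rho * (u - u_prev) ** 2


def optimize(y0, u_prev, ref, horizon=10, rho=0.05):
    """Grid search over candidate inputs held constant across the horizon."""
    candidates = [i / 10.0 for i in range(-100, 101)]   # -10.0 to 10.0
    return min(candidates, key=lambda u: cost(y0, u, u_prev, ref, horizon, rho))


# Closed loop; the "plant" here is the same model, purely for illustration.
y, u, ref = 0.0, 0.0, 1.0
for _ in range(50):
    u = optimize(y, u, ref)   # optimal control input for the current state
    y = model(y, u)           # apply it to the plant
print(y, u)
```

At each step only the first optimal input is applied and the optimization is repeated from the new output, which is the receding-horizon pattern common to model predictive control.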

2.3 Exercise

This exercise is to become familiar with artificial neural network concepts.

Build a network consisting of four artificial neurons. Two neurons receive inputs

to the network, and the other two give outputs from the network.

There are weights assigned with each arrow, which represent information

flow. These weights are multiplied by the values which go through each arrow, to

give more or less strength to the signal which they transmit. The neurons of this

network just sum their inputs. Since the input neurons have only one input, their

output will be the input they received multiplied by a weight. What happens if this

weight is negative? What happens if this weight is zero?

The neurons on the output layer receive the outputs of both input neurons,

multiplied by their respective weights, and sum them. They give an output which

is multiplied by another weight. Now, set all the weights to be equal to one. This

means that the information will flow unaffected. Compute the outputs of the

network for the following inputs: (1,1), (1,0), (0,1), (0,0), (-1,1), (-1,-1). Good.

Now, choose weights among 0.5, 0, and -0.5, and set them randomly along the

network. Compute the outputs for the same inputs as above. Change some weights

and see how the behaviour of the networks changes.
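For readers who want to check their hand calculations, the four-neuron network of this exercise can be sketched in Python. The function name `network` and the weight argument names are hypothetical; the structure (two summing input neurons feeding two summing output neurons, with a weight on every arrow) follows the description above.

```python
def network(x, w_in, w_mid, w_out):
    """Four summing neurons: two input neurons feeding two output neurons.

    w_in[i]     weight on the single input of input neuron i
    w_mid[j][i] weight on the arrow from input neuron i to output neuron j
    w_out[j]    weight applied to the output of output neuron j
    """
    h = [w_in[i] * x[i] for i in range(2)]
    return tuple(w_out[j] * sum(w_mid[j][i] * h[i] for i in range(2))
                 for j in range(2))


# All weights equal to one: information flows unaffected.
ones = dict(w_in=[1, 1], w_mid=[[1, 1], [1, 1]], w_out=[1, 1])
inputs = [(1, 1), (1, 0), (0, 1), (0, 0), (-1, 1), (-1, -1)]
results = {x: network(x, **ones) for x in inputs}
for x, out in results.items():
    print(x, "->", out)
```

Replacing entries of `w_in`, `w_mid` or `w_out` with 0.5, 0 or -0.5 and rerunning the loop reproduces the second part of the exercise.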

Which weights are more critical (if you change those weights, the outputs

will change more dramatically)? Now, suppose we want a network like the one we

are working with, such that the outputs should be the inputs in inverse order (e.g.

(0.3,0.7)->(0.7,0.3)). That was an easy one! Another easy network would be one

where the outputs should be double the inputs. Now, let’s set thresholds on

the neurons. That is, if the previous output of the neuron (weighted sum of the

inputs) is greater than the threshold of the neuron, the output of the neuron will be

one, and zero otherwise. Set thresholds to a couple of the already developed

networks, and see how this affects their behaviour. Now, suppose we have a

network which will receive for inputs only zeroes and/or ones.

Adjust the weights and thresholds of the neurons so that the output of the

first output neuron will be the conjunction (AND) of the network inputs (one when

both inputs are one, zero otherwise), and the output of the second output neuron

will be the disjunction (OR) of the network inputs (zero if both inputs are zero,

one otherwise). You can see that there is more than one network which will give

the requested result. Now, perhaps it is not so complicated to adjust the weights of
such a small network, but its capabilities are also quite limited. If we need
a network of hundreds of neurons, how would you adjust the weights to obtain the
desired output? There are methods for finding them, and we will now present the
most common one.
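One possible answer to the AND/OR part of the exercise is sketched below. The weights and thresholds are just one choice among many, and treating the input neurons as pass-through (weight one, no threshold) is an assumption made to keep the example short.

```matlab
% Threshold neurons computing AND and OR of two binary inputs.
% One possible choice of weights and thresholds (many others work).
W     = [1 1;       % output neuron 1 (AND)
         1 1];      % output neuron 2 (OR)
theta = [1.5; 0.5]; % thresholds of the two output neurons

inputs = [0 0; 0 1; 1 0; 1 1];
result = zeros(size(inputs, 1), 2);
for k = 1:size(inputs, 1)
    s = W * inputs(k, :)';             % weighted sums
    result(k, :) = double(s > theta)'; % one if the sum exceeds the threshold
end
disp([inputs result])                  % columns: in1 in2 AND OR
```

Any threshold between 1 and 2 gives AND, and any threshold between 0 and 1 gives OR, which is why more than one network produces the requested result.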

2.4 STRUCTURE

To give a first impression of ANNs and how they work, we consider an
example. This example of ANN learning is provided by Pomerleau's (1993)
system ALVINN, which uses a learned ANN to steer an autonomous vehicle
driving at normal speeds on public highways. The input of the ANN is a
30x32 grid of pixel intensities obtained from a forward-facing camera
mounted on the vehicle. The output is the direction in which the vehicle is steered.

The ANN is trained to steer the vehicle by first observing a human
steer it for some time. Figure 1.3 shows the main structure of this ANN. In
Figure 1.3, each node (circle) is a single network unit, and the lines entering the
node from below are its inputs. As can be seen, four units receive inputs directly
from all of the 30x32 pixels from the camera in the vehicle. These are called "hidden"
units because their outputs are available only to the subsequent units in the network
and are not part of the global network output.

The second layer is composed of 30 units, which use the outputs from the hidden
units as their inputs and are called "output" units. In this example, each output unit

corresponds to a particular steering direction.

This example shows a simple ANN structure; more precisely, it is a
feedforward network, one of the most common types of artificial neural network. A
feedforward network is composed of a set of nodes and connections arranged in
layers. The connections are typically formed by connecting each of the nodes in a
given layer to all of the nodes in the next layer. In this way every node in a given
layer is connected to every node in the next layer.

A feedforward network typically has at least three layers, as in this
example: an input layer, a hidden layer, and an output layer. The input layer does
no processing; it is simply where the data vector (a one-dimensional data array) is

fed into the network. The input layer then feeds into the hidden layer. The hidden

layer, in turn, feeds into the output layer. The actual processing in the network

occurs in the nodes of the hidden layer and the output layer.
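The layer-by-layer flow described above can be sketched as two matrix multiplications. The layer sizes follow the ALVINN description (30x32 inputs, 4 hidden units, 30 output units), but the random weights, the zero biases, and the use of a sigmoid in both layers are assumptions for illustration only; they are not taken from ALVINN.

```matlab
% Forward pass through a three-layer feedforward network.
n_in  = 30*32;   % input layer: one value per pixel, no processing
n_hid = 4;       % hidden layer
n_out = 30;      % output layer: one unit per steering direction

x  = rand(n_in, 1);               % stand-in for pixel intensities in [0,1]
W1 = 0.01 * randn(n_hid, n_in);   % input -> hidden weights (placeholder values)
b1 = zeros(n_hid, 1);
W2 = 0.01 * randn(n_out, n_hid);  % hidden -> output weights (placeholder values)
b2 = zeros(n_out, 1);

sigmoid = @(z) 1 ./ (1 + exp(-z));

h = sigmoid(W1 * x + b1);         % processing in the hidden layer
y = sigmoid(W2 * h + b2);         % processing in the output layer

[best, direction] = max(y);       % most active output unit
fprintf('Most active output unit: %d of %d\n', direction, n_out);
```

Because the weights here are random, the selected unit is meaningless; the point is only to show where the processing happens.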

CHAPTER 3

BACK PROPAGATION ALGORITHM

3.1 INTRODUCTION

Backpropagation, or propagation of error, is a common method of

teaching artificial neural networks how to perform a given task. It was first

described by Arthur E. Bryson and Yu-Chi Ho in 1969, but it wasn't until 1986,

through the work of David E. Rumelhart, Geoffrey E. Hinton and Ronald J.

Williams, that it gained recognition, and it led to a “renaissance” in the field of

artificial neural network research.

It is a supervised learning method, and is an implementation of the Delta

rule. It requires a teacher that knows, or can calculate, the desired output for any

given input. It is most useful for feed-forward networks (networks that have no

feedback, or simply, that have no connections that loop). The term is an

abbreviation for "backwards propagation of errors". Backpropagation requires that

the activation function used by the artificial neurons (or "nodes") is differentiable.

3.1.1 Learning with Back Propagation Algorithm

The back propagation algorithm is an involved mathematical tool; however,

execution of the training equations is based on iterative processes, and thus is

easily implementable on a computer.

Weight changes for hidden-to-output weights follow the Widrow-Hoff
learning rule.

Weight changes for input-to-hidden weights also follow the Widrow-Hoff learning
rule, but the error signal is obtained by "back-propagating" the error from the
output units.

During the training session of the network, a pair of patterns (Xk, Tk) is presented,
where Xk is the input pattern and Tk is the target or desired pattern. The Xk
pattern causes output responses at each neuron in each layer and, hence, an
output Ok at the output layer. At the output layer, the difference between the
actual and target outputs yields an error signal. This error signal depends on the
values of the weights of the neurons in each layer. This error is minimized, and
during this process new values for the weights are obtained. The speed and
accuracy of the learning process (that is, the process of updating the weights) also
depend on a factor known as the learning rate.

Before starting the back propagation learning process, we need the following:

The set of training patterns, input, and target

A value for the learning rate

A criterion that terminates the algorithm

A methodology for updating weights

The nonlinearity function (usually the sigmoid)

Initial weight values (typically small random values)

The process then starts by applying the first input pattern Xk and the

corresponding target output Tk. The input causes a response in the neurons of the
first layer, which in turn cause a response in the neurons of the next layer, and so

on, until a response is obtained at the output layer.

That response is then compared with the target response; and the difference

(the error signal) is calculated. From the error difference at the output neurons, the

algorithm computes the rate at which the error changes as the activity level of the

neuron changes. So far, the calculations were computed forward (i.e., from the

input layer to the output layer). Now, the algorithm steps back one layer before

that output layer and recalculates the weights of the output layer (the weights

between the last hidden layer and the neurons of the output layer) so that the

output error is minimized.

The algorithm next calculates the error output at the last hidden layer and

computes new values for its weights (the weights between the last and next-to-last

hidden layers). The algorithm continues calculating the error and computing new

weight values, moving layer by layer backward, toward the input. When the input

is reached and the weights do not change, (i.e., when they have reached a steady

state), then the algorithm selects the next pair of input-target patterns and repeats

the process. Although responses move in a forward direction, weights are

calculated by moving backward, hence the name back propagation.
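The forward and backward passes described above can be illustrated with a single training step on a very small network. This is a sketch under stated assumptions: the 2-2-1 layout, the numerical values of the weights, the learning rate, and the squared-error measure are all chosen for illustration. One back-propagated gradient is compared with a finite-difference estimate as a sanity check.

```matlab
% One back-propagation step on a 2-2-1 sigmoid network (illustrative values).
sigmoid = @(z) 1 ./ (1 + exp(-z));

x  = [1; 0];   t = 1;                         % one training pair (Xk, Tk)
W1 = [0.5 -0.4; 0.3 0.8];  b1 = [0.1; -0.1];  % input -> hidden
W2 = [0.7 -0.6];           b2 = 0.05;         % hidden -> output
eta = 0.5;                                    % learning rate

% Forward pass: responses move from the input to the output.
h = sigmoid(W1 * x + b1);
y = sigmoid(W2 * h + b2);
E_before = 0.5 * (y - t)^2;

% Backward pass: error signal at the output, then at the hidden layer.
delta_out = (y - t) * y * (1 - y);              % dE/d(net input of output unit)
delta_hid = (W2' * delta_out) .* h .* (1 - h);  % back-propagated error signal

% Gradients of E with respect to each weight and bias.
gW2 = delta_out * h';   gb2 = delta_out;
gW1 = delta_hid * x';   gb1 = delta_hid;

% Finite-difference estimate of dE/dW1(1,1) for comparison.
d = 1e-6;
W1p = W1;  W1p(1, 1) = W1p(1, 1) + d;
W1m = W1;  W1m(1, 1) = W1m(1, 1) - d;
Ep = 0.5 * (sigmoid(W2 * sigmoid(W1p * x + b1) + b2) - t)^2;
Em = 0.5 * (sigmoid(W2 * sigmoid(W1m * x + b1) + b2) - t)^2;
g_num = (Ep - Em) / (2 * d);

% Gradient-descent update of both layers.
W2 = W2 - eta * gW2;   b2 = b2 - eta * gb2;
W1 = W1 - eta * gW1;   b1 = b1 - eta * gb1;

y_new   = sigmoid(W2 * sigmoid(W1 * x + b1) + b2);
E_after = 0.5 * (y_new - t)^2;
fprintf('E before = %.5f, E after = %.5f\n', E_before, E_after);
```

Note that delta_hid is computed with the old value of W2, before any weight is changed. In a full training run this step would be repeated for every pattern pair until the termination criterion is met.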

3.2 IMPLEMENTATION OF BACK PROPAGATION ALGORITHM

The back-propagation algorithm consists of the following steps:

Each input is multiplied by a weight that either inhibits or excites the
input. The weighted sum of the inputs is then calculated.

First, it computes the total weighted input Xj, using the formula:

$$X_j = \sum_i y_i W_{ij}$$

where yi is the activity level of the ith unit in the previous layer and Wij is
the weight of the connection between the ith and the jth unit.

Then the weighted input Xj is passed through a sigmoid function that scales
the output to between 0 and 1.

Next, the unit calculates the activity yj using some function of the total
weighted input. Typically we use the sigmoid function:

$$y_j = \frac{1}{1 + e^{-X_j}}$$

Once the output is calculated, it is compared with the required output and

the total Error E is computed.

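Once the activities of all output units have been determined, the network
computes the error E, which is defined by the expression:

$$E = \frac{1}{2} \sum_j (y_j - d_j)^2$$

where yj is the activity level of the jth output unit and dj is the desired
output of the jth unit.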

3.3 OVERVIEW OF BACK PROPAGATION ALGORITHM

Minsky and Papert (1969) showed that there are many simple problems,
such as the exclusive-or problem, which linear neural networks cannot solve. Note
that the term "solve" means learn the desired associative links. The argument is that if
such networks cannot solve such simple problems, how could they solve complex
problems in vision, language, and motor control? Solutions to this problem were as
follows:

Select appropriate "recoding" scheme which transforms inputs

Perceptron Learning Rule -- Requires that you correctly "guess" an

acceptable input to hidden unit mapping.

Back-propagation learning rule -- Learn both sets of weights

simultaneously.
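To make the "recoding" idea concrete, the sketch below builds an exclusive-or network by hand. The hidden layer recodes the two inputs as (OR, AND), after which a single threshold unit can separate the cases. The particular weights and thresholds are assumptions picked for illustration; back propagation would instead learn both sets of weights from examples.

```matlab
% XOR with threshold units: the hidden layer recodes the inputs as (OR, AND),
% and the output unit fires when OR is on but AND is off.
thresh = @(z) double(z > 0);

W_hid     = [1 1; 1 1];   % both hidden units sum the two inputs
theta_hid = [0.5; 1.5];   % unit 1 acts as OR, unit 2 acts as AND
w_out     = [1 -1];       % OR minus AND
theta_out = 0.5;

inputs  = [0 0; 0 1; 1 0; 1 1];
xor_out = zeros(4, 1);
for k = 1:4
    h = thresh(W_hid * inputs(k, :)' - theta_hid);  % hidden recoding
    xor_out(k) = thresh(w_out * h - theta_out);     % output unit
end
disp([inputs xor_out])    % columns: in1 in2 XOR
```

Without the hidden layer, no single set of weights and one threshold reproduces this truth table, which is the limitation Minsky and Papert pointed out.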

Back propagation is a form of supervised learning for multi-layer nets, also

known as the generalized delta rule. Error data at the output layer is "back

propagated" to earlier ones, allowing incoming weights to these layers to be

updated. It is most often used as the training algorithm in current neural network

applications. The back propagation algorithm was developed by Paul Werbos in

1974 and rediscovered independently by Rumelhart and Parker. Since its

rediscovery, the back propagation algorithm has been widely used as a learning

algorithm in feed forward multilayer neural networks.

What makes this algorithm different from the others is the process by which the
weights are calculated during the learning phase of the network. In general, the difficulty with
multilayer perceptrons is calculating the weights of the hidden layers in an
efficient way that results in the least (or zero) output error; the more hidden layers
there are, the more difficult it becomes. To update the weights, one must calculate
an error. At the output layer this error is easily measured; it is the difference
between the actual and desired (target) outputs. At the hidden layers, however,
there is no direct observation of the error; hence, some other technique must be
used to calculate an error at the hidden layers that will cause minimization of the
output error, as this is the ultimate goal.


3.4 USE OF BACK PROPAGATION NEURAL NETWORK SOLUTION

A back propagation neural network is worth considering when the following
conditions hold:

A large amount of input/output data is available, but you're not sure how to
relate the input to the output.

The problem appears to have overwhelming complexity, but there is clearly

a solution.

It is easy to create a number of examples of the correct behavior.

The solution to the problem may change over time, within the bounds of the

given input and output parameters (i.e., today 2+2=4, but in the future we

may find that 2+2=3.8).

Outputs can be "fuzzy", or non-numeric.

One of the most common applications of NNs is in image processing. Some

examples would be: identifying hand-written characters; matching a photograph of

a person's face with a different photo in a database; performing data compression

on an image with minimal loss of content. Other applications could be voice

recognition; RADAR signature analysis; stock market prediction. All of these

problems involve large amounts of data, and complex relationships between the

different parameters.

It is important to remember that with a NN solution, you do not have to

understand the solution at all. This is a major advantage of NN approaches. With

more traditional techniques, you must understand the inputs, and the algorithms,

and the outputs in great detail, to have any hope of implementing something that

works. With a NN, you simply show it: "this is the correct output, given this

input". With an adequate amount of training, the network will mimic the function

that you are demonstrating. Further, with a NN, it is ok to apply some inputs that

turn out to be irrelevant to the solution - during the training process; the network

will learn to ignore any inputs that don't contribute to the output. Conversely, if

you leave out some critical inputs, then you will find out because the network will

fail to converge on a solution.

CHAPTER 4

MATLAB 7.5

4.1 Introduction

MATLAB, which stands for MATrix LABoratory, is a state-of-the-art

mathematical software package, which is used extensively in both academia and

industry. It is an interactive program for numerical computation and data

visualization, which along with its programming capabilities provides a very

useful tool for almost all areas of science and engineering. Unlike other

mathematical packages, such as MAPLE or MATHEMATICA, MATLAB cannot

perform symbolic manipulations without the use of additional Toolboxes. It

remains however, one of the leading software packages for numerical

computation.

As you might guess from its name, MATLAB deals mainly with matrices.

A scalar is a 1-by-1 matrix and a row vector of length say 5, is a 1-by-5 matrix.

We will elaborate more on these and other features of MATLAB in the sections

that follow. One of the many advantages of MATLAB is the natural notation used.

It looks a lot like the notation that you encounter in a linear algebra course. This

makes the use of the program especially easy and it is what makes MATLAB a

natural choice for numerical computations.

The purpose of this tutorial is to familiarize the beginner with MATLAB, by

introducing the basic features and commands of the program. It is in no way a

complete reference and the reader is encouraged to further enhance his or her

knowledge of MATLAB by reading some of the suggested references at the end of

this guide.

4.1.1 MATLAB BASICS

The basic features:

Let us start with something simple, like defining a row vector with

components the numbers 1, 2, 3, 4, 5 and assigning it a variable name, say x.

» x = [1 2 3 4 5]

x =

1 2 3 4 5

Note that we used the equal sign for assigning the variable name x to the

vector, brackets to enclose its entries and spaces to separate them. (Just like you

would using the linear algebra notation). We could have used commas ( , ) instead

of spaces to separate the entries, or even a combination of the two. The use of

either spaces or commas is essential!

To create a column vector (MATLAB distinguishes between row and

column vectors, as it should) we can either use semicolons ( ; ) to separate the

entries, or first define a row vector and take its transpose to obtain a column

vector. Let us demonstrate this by defining a column vector y with entries 6, 7, 8,

9, 10 using both techniques.

» y = [6;7;8;9;10]

y =

6

7

8

9

10

» y = [6,7,8,9,10]

y =

6 7 8 9 10

» y'

ans =

6

7

8

9

10

Let us make a few comments. First, note that to take the transpose of a

vector (or a matrix for that matter) we use the single quote ( ' ). Also note that

MATLAB repeats (after it processes) what we typed in. Sometimes, however, we

might not wish to “see” the output of a specific command. We can suppress the

output by using a semicolon ( ; ) at the end of the command line.

Finally, keep in mind that MATLAB automatically assigns the variable

name ans to anything that has not been assigned a name. In the example above,

this means that a new variable has been created with the column vector entries as

its value. The variable ans, however, gets recycled and every time we type in a

command without assigning a variable, ans gets that value.

It is good practice to keep track of what variables are defined and occupy

our workspace. Due to the fact that this can be cumbersome, MATLAB can do it

for us. The command whos gives all sorts of information on what variables are

active.

4.1.2 Vectors and matrices:

We have already seen how to define a vector and assign a variable name to

it. Often it is useful to define vectors (and matrices) that contain equally spaced

entries. This can be done by specifying the first entry, an increment, and the last

entry. MATLAB will automatically figure out how many entries you need and

their values. For example, to create a vector whose entries are 0, 1, 2, 3, …, 7, 8,

you can type

» u = [0:8]

u =

0 1 2 3 4 5 6 7 8

Here we specified the first entry 0 and the last entry 8, separated by a colon

( : ). MATLAB automatically filled-in the (omitted) entries using the (default)

increment 1. You could also specify an increment as is done in the next example.

To obtain a vector whose entries are 0, 2, 4, 6, and 8, you can type in the following

line:

» v = [0:2:8]

v =

0 2 4 6 8

Here we specified the first entry 0, the increment value 2, and the last entry

8. The two colons ( : ) “tell” MATLAB to fill in the (omitted) entries using the

specified increment value.

MATLAB will allow you to look at specific parts of the vector. If you

want, for example, to only look at the first 3 entries in the vector v, you can use the

same notation you used to create the vector:

» v(1:3)

ans =

0 2 4

Note that we used parentheses, instead of brackets, to refer to the entries of

the vector. Since we omitted the increment value, MATLAB automatically

assumes that the increment is 1. The following command steps through the first 4 entries of
the vector v using the increment value 2, so it returns the 1st and 3rd entries:

» v(1:2:4)

ans =

0 4

4.2 Plotting:

We end our discussion on the basic features of MATLAB by introducing

the commands for data visualization (i.e. plotting). By typing help plot you can see

the various capabilities of this main command for two-dimensional plotting, some

of which will be illustrated below.

If x and y are two vectors of the same length then plot(x,y) plots x versus y.

For example, to obtain the graph of y = cos(x) from – π to π, we can first define

the vector x with components equally spaced numbers between – π and π, with

increment, say 0.01.

» x=-pi:0.01:pi;

We placed a semicolon at the end of the input line to avoid seeing the

(long) output. Note that the smaller the increment, the “smoother” the curve will
be. Next, we define the vector y

» y=cos(x);

(using a semicolon again) and we ask for the plot

» plot(x,y)

At this point a new window will open on our desktop in which the graph (as seen

below) will appear.

It is good practice to label the axes on a graph and, if applicable, indicate

what each axis represents. This can be done with the xlabel and ylabel commands.

» xlabel('x')

» ylabel('y=cos(x)')

Inside parentheses, and enclosed within single quotes, we type the text that

we wish to be displayed along the x and y axes, respectively.

We could even put a title on top using

» title('Graph of cosine from - \pi to \pi')

as long as we remember to enclose the text in parentheses within single quotes.
The back-slash ( \ ) in front of pi allows the user to take advantage of LaTeX
commands. If you are not familiar with the mathematical typesetting software
LaTeX (and its commands), ignore the present command and simply type

» title('Graph of cosine from -pi to pi')

Both graphs are shown below.

These commands can be invoked even after the plot window has been opened and

MATLAB will make all the necessary adjustments to the display.

CHAPTER 5

DC MOTOR CONTROLLED BY ANN

5.1 INTRODUCTION

Nowadays, the field of electrical power system control in general, and
motor control in particular, has been researched broadly. New technologies
are applied in this field to design complex systems. One of
these new technologies is Artificial Neural Networks (ANNs), which are based on the
operating principle of the human nervous system. A number of articles
use ANNs to identify the mathematical model of a DC motor and
then apply this model to control the motor speed. They also use an inverting
forward ANN with two input parameters for adaptive control of the DC motor.

However, these studies did not address the ability of ANNs to forecast
and estimate the DC motor speed. ANNs are applied broadly because of the
following special qualities:

1. All the ANN signals are transmitted in one direction, the same as in
automatic control systems.

2. The ability of ANNs to learn from samples.

3. The ability to create parallel signals in analog as well as in discrete
systems.

4. The adaptive ability.

With the special qualities mentioned above, ANNs can be trained to represent
nonlinear relationships that conventional tools cannot implement. They
are also applied to control complicated electromechanical systems such as DC motors
and synchronous machines.

To train ANNs, we first have to determine the input and output data sets,
and then design the network by optimizing the number of hidden layers, the
number of neurons in each layer, the number of inputs and outputs, and the transfer
function.

The next step is to choose the learning algorithm for the network. ANNs are trained
according to two basic principles: supervised and unsupervised learning. In
supervised learning, ANNs learn the input/output data (targets) before being used in the
control system.

5.2 POSSIBILITY OF APPLYING ANN IN MOTOR CONTROL

In real-life motor control applications, neural networks are
efficient for solving the following problems:

5.2.1 FUNCTION APPROXIMATION (REGRESSION ANALYSIS)

This feature is very useful in non-linear regulation systems, where a
mathematical model cannot be realized or is very
difficult to design. Three types are known in real applications. The first type is common
learning. The learning stage is presented in Fig. 3. After learning, the neural
network is realized as the regulator.

Fig. 3: Learning stage of the common learning

5.2.2 DC MOTOR CONTROL MODEL WITH ANNS

The DC motor is the obvious proving ground for advanced control

algorithms in electric drives due to the stable and straightforward characteristics
associated with it. From a control systems point of view, the DC motor can be
considered as a SISO plant, thereby eliminating the complications associated with a

multi-input drive system.

A. Mathematical model of DC motor:

The separately excited DC motor is described by the following equations:

$$K_F \omega_p(t) = -R_a i_a(t) - L_a \frac{d i_a(t)}{dt} + V_t(t) \qquad (1)$$

$$K_F i_a(t) = J \frac{d \omega_p(t)}{dt} + B \omega_p(t) + T_L(t) \qquad (2)$$

where,

$\omega_p(t)$ - rotor speed (rad/s)

$V_t(t)$ - terminal voltage (V)

$i_a(t)$ - armature current (A)

$T_L(t)$ - load torque (N·m)

$J$ - rotor inertia (kg·m²)

$K_F$ - torque and back-emf constant (N·m·A⁻¹)

$B$ - viscous friction coefficient (N·m·s)

$R_a$ - armature resistance (Ω)

$L_a$ - armature inductance (H)

From these equations we can create a mathematical model of
the DC motor. The model is presented in Figure 1,

where,

Ta - time constant of the motor armature circuit, Ta = La/Ra (s)

Tm - mechanical time constant of the motor, Tm = J/B (s)

Figure 1. The mathematical model of a separately excited DC motor
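To see how equations (1) and (2) behave, they can be rearranged for the two derivatives and integrated step by step. The sketch below uses forward Euler integration; every parameter value in it is hypothetical and chosen only to give a well-behaved response, not taken from the motor used in this project.

```matlab
% Forward-Euler simulation of equations (1) and (2).
% All parameter values below are hypothetical, chosen only for illustration.
Ra = 1.0;    % armature resistance (ohm)
La = 0.05;   % armature inductance (H)
KF = 1.0;    % torque and back-emf constant (N m / A)
J  = 0.05;   % rotor inertia (kg m^2)
B  = 0.01;   % viscous friction coefficient (N m s)
Vt = 240;    % constant terminal voltage (V)
TL = 0;      % load torque (N m)

Ta = La / Ra;   % armature time constant (s)
Tm = J / B;     % mechanical time constant (s)

dt = 1e-3;  N = 2000;            % 2 s of simulated time
ia = zeros(N + 1, 1);            % armature current, starts at rest
w  = zeros(N + 1, 1);            % rotor speed, starts at rest
for k = 1:N
    dia = (Vt - Ra * ia(k) - KF * w(k)) / La;   % from equation (1)
    dw  = (KF * ia(k) - B * w(k) - TL) / J;     % from equation (2)
    ia(k + 1) = ia(k) + dt * dia;
    w(k + 1)  = w(k)  + dt * dw;
end

% Steady state obtained by setting both derivatives to zero.
w_ss = (KF * Vt - Ra * TL) / (Ra * B + KF^2);
fprintf('Ta = %.3f s, Tm = %.1f s, final speed = %.2f rad/s (analytic %.2f)\n', ...
        Ta, Tm, w(end), w_ss);
```

The simulated speed settles at the value obtained from the steady-state form of the two equations, which is a quick way to check that the signs in the model are consistent.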

B. The conventional control system of the DC motor

There are different methods to synthesize a control system for a DC motor,
but for comparison with the ANN-based method, the authors present a conventional
control system of the DC motor, in which the current regulator and speed regulator are
synthesized by the Betragsoptimum (modulus optimum) criterion to reduce overshoot.

Figure 2. Conventional model of control system DC motors

In the conventional model, current and voltage sensors are very important
elements, and they play a main role in speed regulation alongside the
current regulator and speed regulator.

C. The control system of DC motor using ANNs

The control system of the DC motor using ANNs is presented in Figure 3, where
ANN1 and ANN2 are each trained to emulate a function: ANN1 to estimate the speed,
ANN2 to control the terminal voltage.

Figure 3. DC motor control model with ANNs

D. The structure and the process of learning ANNs

ANNs have been found to be effective systems for learning discriminants for
patterns from a body of examples. Activation signals of nodes in one layer are
transmitted to the next layer through links which either attenuate or amplify
the signal.

An ANN is trained to emulate a function by presenting it with a
representative set of input/output functional patterns. The back-propagation
training technique adjusts the weights in all connecting links and thresholds in the
nodes so that the difference between the actual output and target output is
minimized for all given training patterns.

In designing and training an ANN to emulate a function, the only fixed

parameters are the number of inputs and outputs to the ANN, which are based on

the input/output variables of the function. It is also widely accepted that a maximum
of two hidden layers is sufficient to learn any arbitrary nonlinearity. However,

the number of hidden neurons and the values of learning parameters, which are

equally critical for satisfactory learning, are not supported by such well established

selection criteria. The choice is usually based on experience. The ultimate

objective is to find a combination of parameters which gives a total error within the
required tolerance in a reasonable number of training sweeps.

Figure 4. Structure of ANN1

The structures of ANN1 and ANN2 are shown in Figure 4 and Figure 5. Each
consists of an input layer, an output layer, and one hidden layer. The input and hidden
layers use tansig (tan-sigmoid) activation functions, while the output layer uses a linear
function.

The three inputs of ANN1 are a reference speed, a terminal voltage,
and an armature current. The output of ANN1 is an estimated speed.

ANN2 has four inputs: a reference speed, a terminal voltage,
an armature current, and the estimated speed from ANN1. The output of
ANN2 is the control signal Alpha for the converter.
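The way the two networks are chained can be sketched as follows. Everything numerical here is a hypothetical placeholder: the hidden-layer sizes, the weights, and the normalized input values are invented for illustration and are not the trained values from this project. The input layer is treated as pass-through, which is also an assumption, and tansig_fn is a local stand-in written out by hand rather than a toolbox call.

```matlab
% Forward pass through an ANN1 -> ANN2 cascade with one hidden layer each.
% Weights, layer sizes, and input values are hypothetical placeholders.
tansig_fn = @(n) 2 ./ (1 + exp(-2 * n)) - 1;   % tan-sigmoid, equal to tanh(n)

w_ref = 0.8;   % reference speed (normalized, example value)
v_t   = 0.6;   % terminal voltage (normalized, example value)
i_a   = 0.3;   % armature current (normalized, example value)

% ANN1: 3 inputs -> 4 tansig hidden neurons -> 1 linear output
W1a = 0.1 * ones(4, 3);   b1a = zeros(4, 1);
W2a = 0.2 * ones(1, 4);   b2a = 0;
w_est = W2a * tansig_fn(W1a * [w_ref; v_t; i_a] + b1a) + b2a;

% ANN2: 4 inputs (including ANN1's estimate) -> 5 tansig hidden -> 1 linear output
W1b = 0.1 * ones(5, 4);   b1b = zeros(5, 1);
W2b = 0.2 * ones(1, 5);   b2b = 0;
alpha_cmd = W2b * tansig_fn(W1b * [w_ref; v_t; i_a; w_est] + b1b) + b2b;

fprintf('estimated speed = %.4f, control signal Alpha = %.4f\n', w_est, alpha_cmd);
```

The estimated speed from ANN1 is simply appended to the other three signals to form the four-element input vector of ANN2.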

Figure 5. Structure of ANN2

The ANNs are trained off-line using input patterns of the quantities listed above for
ANN1 and for ANN2. The reference speed training data
is shown in Figure 6. Other input patterns are taken from actual hardware,
and the corresponding output target is shown in Figure 7.

The motor speed is estimated by the trained ANN predictor as :

For ANN1,

Figure 6. The Reference speed DC motor

Figure 7. The target speed DC motor

For ANN2 Alpha (4)

The training programs for ANN1 and ANN2 are written as m-files using the Neural
Network Toolbox of MATLAB, and they use Levenberg-Marquardt
back propagation. No reference specifies the optimal number of
neurons in each layer, so selecting the network structure becomes more complicated.

In order to choose the optimal number of neurons, the neural network is
trained by the m-file program, reducing the number of neurons in the hidden layer
until the learning error is acceptable.

The ANNs and the training effort are briefly described by the following

statistics.

5.3. SIMULATION OF DC MOTOR CONTROL SYSTEMS

To simulate the conventional control system and the control system with
ANNs, we used Simulink/MATLAB with the Neural Network Toolbox. The
DC motor used in the models has the following parameters: 5 HP, 240 V, 1750
RPM, field 150 V.

To compare the quality of two control systems we consider different

operating modes of the DC motor:

a) When parameters of the DC motor are constant.

The start-up of the DC motor and regulation of the DC motor speed for the two
models of control systems are shown in Figures 7, 8, 9, and 10. The results
show that both models have good performance and the control
quality is the same for the two models.

b) When parameters of the DC motor are not constant.

Figures 11, 12, and 13 show the start-up of the DC motor for the conventional
model and the model with ANNs when the mechanical time constant of the motor
is reduced to Tm1 = 75% Tm, Tm2 = 50% Tm, and Tm3 = 30% Tm, where Tm is
the mechanical time constant of the motor when the parameters of the DC
motor are constant.

Figures 14 and 16 show the speed regulation for the conventional model
when Tm2 = 50% Tm and Tm3 = 30% Tm. Figures 15 and 17 show the speed regulation
for the model with ANNs when Tm2 = 50% Tm and Tm3 = 30% Tm. Clearly,
the model with ANNs has the better performance when Tm varies,
whereas in the conventional model the speed of the DC motor fluctuates and
the system may become unstable.

5.4. SIMULATION RESULTS

MATLAB SIMULATION:

Simulation Results

The result from the simulation of the motor model in SIMULINK is shown in

Figure

Figure 5.1: Simulated output for the armature current, torque and rotor speed for initial conditions.

Figure 5.6: Constant torque load applied to the motor

Fig Simulation results of separately excited dc machine under no-load.

Fig Simulation results of separately excited dc machine under load.

CHAPTER 6

MATLAB CODE

6.1 The following is a listing of the MATLAB code that uses the serial port

to interface with the QET. This function is called by the GUI to complete the

user specified control task.

function [Data, DataCount, SamplePeriod, Error] = ...RunQET(SigType, SigMagRate, SigFreq, RunTime, hScopeCallback, handles)%RunQET - Runs a case on the Quanser engineering trainer and returns the%results%% [Data, DataCount, SamplePeriod, Error] = RunQET(SigType, SigMagRate,% SigFreq, RunTime, hScopeCallback, handles) runs the specified case on% the Quanser Engineering Trainer and returns a matrix of results along% with the number of time samples, the sample period, and an error flag.%% The input SigType specifies the signal type to run. This can be one of% the following values:% 1 - Impulse signal% 2 - Step signal% 3 - Ramp signal% 4 - Parabolic signal% 5 - Triangle wave signal% 6 - Sawtooth wave signal% 7 - Square wave signal% 8 - Sine wave signal% 9 - Cosine wave signal% 10 - Stair step signal%% The input SigMagRate defines the signal magnitude or rate depending on% the signal type. For a ramp or parabolic signal it specifies a rate.

% For all the other signal types it specifies a magnuitude. For more% information please see the associated help file.%% The input SigFreq specifies the signal frequency in Hz, if applicable% to the speicifed signal type. If the frequency is not applicable to% the specified signal type (such as for a step signal) then this should% be set to 0.%% The input RunTime specifies the total run time in seconds.%% The input hScopeCallback specifies the handle to the scope callback% procedure. If the callback isn't desired then this value should be set% to zero.%% The input handles is the handles array that will be passed to the scope% callback function. If the scope callback value is set to zero then% this should also be set to zero.%% The output Data contains all of the returned data. This array will% have DataCount rows in it and will contain seven columns. The columns% are:% 1 - The data index for this row returned by the QET% 2 - The reference signal% 3 - The actual angle of the motor% 4 - The voltage being applied to the motor% 5 - The current being drawn by the motor% 6 - The first derivative of the motor angle using the simple method% 7 - The first derivative of the motor angle using the filtering method%% The output SamplePeriod is the sample period, in seconds, being used by the QET%% The output Error is a boolean flag as to whether an error occured.%

% For additional information please see the help file.Data = [];DataCount = 0;SamplePeriod = 0;Error = true;

if nargout ~= 4msgbox('Invalid number of return arguments for RunQET!')returnendif nargin < 4msgbox('Invalid number of arguments for RunQET!')returnend%Set current elapsed timeTimeNow = 0;DataCount = 0;%Close all serial port objectsCloseAllSerialPorts%Create serial port objectS = serial(ComPortName, 'BaudRate', 115200, 'Parity', 'none', 'DataBits', 8,'StopBits', 1, 'InputBufferSize', 50000, 'Timeout', 1);%Open serial portfopen(S);%Get sampling frequencypause(0.1);if ~WriteToQET(S, 'f', 'uchar') fclose(S); return; end;if ~WriteToQET(S, 0, 'float32') fclose(S); return; end;PauseCount = 0;PauseStep = 0.1;while S.BytesAvailable < 5pause(PauseStep);PauseCount = PauseCount + 1;if PauseCount > 2/PauseStepSmsgbox('The QET is not responding. Please verify it is reset and try again')fclose(S);returnendendCmd = fread(S, 1, 'uchar');if Cmd ~= 'f'disp('Unexcected return value for frequency');returnendSamplePeriod = fread(S, 1, 'float32');

%Determine number of signals to read
EndCnt = ceil(RunTime/SamplePeriod-0.0001);
if (EndCnt > 2^16)
    msgbox(['Too long of a runtime was entered for the sample rate. Please choose a ' ...
        'shorter run time or lower the sample rate. Reset the QET and try again.'])
    return
end

%Set the end of time count on QET
if ~WriteToQET(S, 'E', 'uchar'), fclose(S); return; end
if ~WriteToQET(S, EndCnt, 'int16'), fclose(S); return; end
if ~WriteToQET(S, 0, 'int16'), fclose(S); return; end

%Initially size data array
Data = zeros(EndCnt,7);

%Set reference signal type and data
if ~WriteToQET(S, 'S', 'uchar'), fclose(S); return; end
if ~WriteToQET(S, SigType, 'int16'), fclose(S); return; end
if ~WriteToQET(S, 0, 'int16'), fclose(S); return; end
if ~WriteToQET(S, 'M', 'uchar'), fclose(S); return; end
if ~WriteToQET(S, SigMagRate, 'float32'), fclose(S); return; end
if ~WriteToQET(S, 'F', 'uchar'), fclose(S); return; end
if ~WriteToQET(S, SigFreq, 'float32'), fclose(S); return; end

%Send start flag
if ~WriteToQET(S, 'G', 'uchar'), fclose(S); return; end
if ~WriteToQET(S, 0, 'float32'), fclose(S); return; end

%Read data
Cmd = 0;
BytesReq = 0;
RunLoop = true;
while RunLoop
    %disp(S.BytesAvailable);
    if Cmd == 0
        %Read command character if it is available
        if S.BytesAvailable >= 1
            Cmd = fread(S, 1, 'uchar');
            %Determine number of bytes required to complete this command
            if Cmd == 'X'
                %This is the stop flag
                Cmd = 0;
                BytesReq = 0;
                RunLoop = false;
                break;
            elseif Cmd == 'd'
                %This is the data flag (one 16-bit index and six floating
                %point numbers, matching the fread calls below)
                BytesReq = 2 + 6*4;
                DataCount = DataCount + 1;
            end
        end
    end
    %Check for command data available
    if Cmd ~= 0 && BytesReq ~= 0
        if S.BytesAvailable >= BytesReq
            if Cmd == 'd'
                %Read data
                QETDataIndex = fread(S, 1, 'uint16');
                RefSig = fread(S, 1, 'float32');
                ActAng = fread(S, 1, 'float32');
                Volt = fread(S, 1, 'float32');
                Current = fread(S, 1, 'float32');
                AngFD = fread(S, 1, 'float32');
                AngFDFilt = fread(S, 1, 'float32');
                %Save data
                Data(DataCount, 1) = QETDataIndex;
                Data(DataCount, 2) = RefSig;
                Data(DataCount, 3) = ActAng;
                Data(DataCount, 4) = Volt;
                Data(DataCount, 5) = Current;
                Data(DataCount, 6) = AngFD;
                Data(DataCount, 7) = AngFDFilt;
                if nargin == 6 && isa(hScopeCallback, 'function_handle')
                    hScopeCallback(QETDataIndex*SamplePeriod, RefSig, ActAng, Volt, ...
                        Current, AngFD, handles);
                end
            end
            Cmd = 0;
        end
    end
end

%Close serial port and clean up
fclose(S);
delete(S);
clear S;
Error = false;

function CloseAllSerialPorts
Ports = instrfind({'Port'}, {ComPortName});
for i = size(Ports,1):-1:1
    fclose(Ports(i));
    delete(Ports(i));
end

function [PortName] = ComPortName()
if evalin('base', 'exist(''QETPortName'')')
    PortName = evalin('base', 'QETPortName');
else
    PortName = 'COM1';
end

function [Success] = WriteToQET(S, Val, Format)
%Since MATLAB sporadically times out on this statement we'll try it up to 5 times
%KNOWN BUG: see http://www.mathworks.com/support/bugreports/details.html?rp=250986
Success = false;
for i=1:5
    Errored = false;
    try
        fwrite(S, Val, Format);
    catch
        Errored = true;
    end
    if ~Errored
        Success = true;
        break;
    elseif i == 5
        disp('Unable to write to QET device. Please reset it and try again.');
        return;
    end
end
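The seven-column Data array described in the listing's header comments can be post-processed without the hardware attached. The following is only a minimal sketch: the synthetic first-order response, the sample period, the sample count and the backward-difference derivative are all assumptions made for illustration, and the backward difference is not confirmed to be the "simple method" that the QET uses for column 6.

```matlab
% Hypothetical post-processing sketch. The synthetic Data matrix below
% stands in for the array returned by RunQET, so no hardware is needed.
SamplePeriod = 0.01;                  % assumed sample period, in seconds
N = 200;                              % assumed number of samples
t = (0:N-1)' * SamplePeriod;          % time vector, in seconds

Data = zeros(N, 7);                   % same seven-column layout as RunQET
Data(:, 1) = (0:N-1)';                % column 1: data index
Data(:, 2) = 1;                       % column 2: reference signal (unit step)
Data(:, 3) = 1 - exp(-t / 0.2);       % column 3: synthetic angle response

% Backward-difference estimate of the first derivative of the angle.
% This is an assumed stand-in for the "simple method" of column 6.
AngFD = [0; diff(Data(:, 3))] / SamplePeriod;
Data(:, 6) = AngFD;

% Tracking error between the reference and the measured angle.
TrackErr = Data(:, 2) - Data(:, 3);
fprintf('Final tracking error: %.4f\n', TrackErr(end));
fprintf('Peak angle rate: %.3f angle units per second\n', max(AngFD));
```

With real measurements, the synthetic block would be replaced by the Data and SamplePeriod outputs of RunQET, and the computed derivative could be compared against columns 6 and 7.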

CONCLUSION:

The DC motor has been successfully controlled using an ANN. Two ANNs were trained to emulate two functions: estimating the speed of the DC motor and controlling the DC motor. The ANN can therefore replace the speed sensor in the control system model. With an ANN, the motor parameters do not have to be calculated when designing the control system.

The control system using ANNs shows an appreciable advantage over the conventional one when the parameters of the DC motor vary during operation. The performance of the ANN-based control system is much better than that of the conventional control system. ANNs can be applied to adaptive control of machines with complicated loads. Nowadays, ANN microprocessors are used to implement ANN-based control systems for DC motors on actual hardware.

FUTURE SCOPE:

The ability to deal with a noisy operating environment is always an important consideration for electric drive control. Noise can be introduced for several reasons; the two main causes are motor-load parameter drift and the quantization and resolution errors of the speed and/or position encoders. A high-performance drive controller should be robust enough to maintain accurate tracking performance regardless of the noisy operating environment. ANN-based controllers have the inherent noise rejection capability of the ANN built into them, and are therefore expected to display superior performance under noisy operating environments.

Controller performance under noise is therefore a crucial aspect that can be investigated through suitable simulations.

REFERENCES

1. Antsaklis, P. J. (April, 1990). Neural Networks in Control Systems. IEEE Control Systems Magazine, Vol. 10, 3-5.

2. Cajueiro, D. O., & Hemerly, E. M. (December, 2003). Direct Adaptive Control using Feedforward Neural Networks. Revista Control & Automation, Vol. 14, No. 4, 348-358.

3. Chen, S., Billings, S., & Grant, P. (April, 1990). Non-linear System Identification using Neural Networks. IEEE Transactions, Vol. 51, 1191-1214.

4. Douratsos, I., & Barry, J. (2007). Neural Network Based Model Reference Adaptive. International Journal of Information and Systems Sciences, Vol. 3, 161-179.

5. Dzung, P., & Phuong, L. (2002). ANN - Control System DC Motor. IEEE Transactions, 82-90.

6. El-Sharkawi, M. A., & Weerasooriya, S. (September, 1989). Adaptive Tracking Control for High Performance DC Drives. IEEE Transactions on Energy Conversion, Vol. 5, 122-128.

7. El-Sharkawi, M. A., & Weerasooriya, S. (December, 1991). Identification and Control of a DC Motor using Back-propagation Neural Networks. IEEE Transactions on Energy Conversion, Vol. 6, 663-669.

8. Hagan, M. T., Demuth, H. B., & Jesus, O. (2000). An Introduction to the Use of Neural Networks. Electrical and Computer Engg., 1-23.