
Stanford Heuristic Programming Project Memo HPP-79-17

Computer Science Department Report No. STAN-CS-79-749


July 1979

Applications-oriented AI Research: Education

by

William J. Clancey, James S. Bennett, and Paul R. Cohen

a section of the

Handbook of Artificial Intelligence

edited by

Avron Barr and Edward A. Feigenbaum

COMPUTER SCIENCE DEPARTMENT School of Humanities and Sciences

STANFORD UNIVERSITY


DISTRIBUTION STATEMENT A

Approved for public release; Distribution Unlimited


REPORT DOCUMENTATION PAGE

Report number: HPP-79-17 (STAN-CS-79-749)
Title: Applications-oriented AI Research: Education
Authors: William J. Clancey, James S. Bennett, and Paul R. Cohen (edited by Avron Barr and Edward A. Feigenbaum)
Type of report: Technical
Performing organization: Department of Computer Science, Stanford University, Stanford, California 94305 USA
Contract or grant number: MDA 903-77-C-0322
Controlling office: Defense Advanced Research Projects Agency, Information Processing Techniques Office, 1400 Wilson Ave., Arlington, VA 22209
Report date: July 1979
Number of pages: 62
Monitoring agency: Mr. Philip Surra, Resident Representative, Office of Naval Research, Stanford University
Security classification: Unclassified
Distribution statement: Approved for public release; distribution unlimited. Reproduction in whole or in part is permitted for any purpose of the U.S. Government.



This research was supported by both the Defense Advanced Research Projects Agency (ARPA Order No. 3423, Contract No. MDA 903-77-C-0322) and the National Institutes of Health (Contract No. NIH RR-00785-06). The views and conclusions of this document should not be interpreted as necessarily representing the official policies, either expressed or implied, of the Defense Advanced Research Projects Agency, the National Institutes of Health, or the United States Government.

Copyright Notice: The material herein is copyright protected. Permission to quote or reproduce in any form must be obtained from the Editors. Such permission is hereby granted to agencies of the United States Government.


AI Applications in Education

Table of Contents

A. Historical Overview
B. Issues in ICAI Systems Design
C. ICAI Systems
  1. SCHOLAR
  2. WHY
  3. SOPHIE
  4. WEST
  5. WUMPUS
  6. BUGGY
  7. EXCHECK

References

Index


Foreword

Those of us involved in the creation of the Handbook of Artificial Intelligence, both writers and editors, have attempted to make the concepts, methods, tools, and main results of artificial intelligence research accessible to a broad scientific and engineering audience. Currently, AI work is familiar mainly to its practicing specialists and other interested computer scientists. Yet the field is of growing interdisciplinary interest and practical importance. With this book we are trying to build bridges that are easily crossed by engineers, scientists in other fields, and our own computer science colleagues.

In the Handbook we intend to cover the breadth and depth of AI, presenting general overviews of the scientific issues, as well as detailed discussions of particular techniques and important AI systems. Throughout we have tried to keep in mind the reader who is not a specialist in AI.

As the cost of computation continues to fall, new areas of computer applications become potentially viable. For many of these areas, there do not exist mathematical "cores" to structure calculational use of the computer. Such areas will inevitably be served by symbolic models and symbolic inference techniques. Yet those who understand symbolic computation have been speaking largely to themselves for twenty years. We feel that it is urgent for AI to "go public" in the manner intended by the Handbook.

Several other writers have recognized a need for more widespread knowledge of AI and have attempted to help fill the vacuum. Lay reviews, in particular Margaret Boden's Artificial Intelligence and Natural Man, have tried to explain what is important and interesting about AI, and how research in AI progresses through our programs. In addition, there are a few textbooks that attempt to present a more detailed view of selected areas of AI, for the serious student of computer science. But no textbook can hope to describe all of the sub-areas, to present brief explanations of the important ideas and techniques, and to review the forty or fifty most important AI systems.

The Handbook contains several different types of articles. Key AI ideas and techniques are described in core articles (e.g., basic concepts in heuristic search, semantic nets). Important individual AI programs (e.g., SHRDLU) are described in separate articles that indicate, among other things, the designer's goal, the techniques employed, and the reasons why the program is important. Overview articles discuss the problems and approaches in each major area. The overview articles should be particularly useful to those who seek a summary of the underlying issues that motivate AI research.

Eventually the Handbook will contain approximately two hundred articles. We hope that the appearance of this material will stimulate interaction and cooperation with other AI research sites. We look forward to being advised of errors of omission and commission. For a field as fast moving as AI, it is important that its practitioners alert us to important developments, so that future editions will reflect this new material. We intend that the Handbook of Artificial Intelligence be a living and changing reference work.

The articles in this edition of the Handbook were written primarily by graduate students in AI at Stanford University, with assistance from graduate students and AI professionals at other institutions. We wish particularly to acknowledge the help from those at Rutgers University, SRI International, Xerox Palo Alto Research Center, MIT, and the RAND Corporation.

The authors of this report, which contains the section of the Handbook on educational applications research, are William Clancey, James Bennett, and Paul Cohen. Others who contributed to or commented on earlier versions of this section include Lee Blaine, John Seely Brown, Richard Burton, Adele Goldberg, Ira Goldstein, Albert Stevens, and Keith Wescourt.

Avron Barr Edward Feigenbaum

Stanford University July, 1979


Handbook of Artificial Intelligence

Topic Outline

Volumes I and II

Introduction
  The Handbook of Artificial Intelligence
  Overview of AI Research
  History of AI
  An Introduction to the AI Literature

Search
  Overview
  Problem Representation
  Search Methods for State Spaces, AND/OR Graphs, and Game Trees
  Six Important Search Programs

Representation of Knowledge
  Issues and Problems in Representation Theory
  Survey of Representation Techniques
  Seven Important Representation Schemes

AI Programming Languages
  Historical Overview of AI Programming Languages
  Comparison of Data Structures and Control Mechanisms in AI Languages
  LISP

Natural Language Understanding
  Overview - History and Issues
  Machine Translation
  Grammars
  Parsing Techniques
  Text Generation Systems
  The Early NL Systems
  Six Important Natural Language Processing Systems

Speech Understanding Systems
  Overview - History and Design Issues
  Seven Major Speech Understanding Projects

Applications-oriented AI Research -- Part 1
  Overview
  TEIRESIAS - Issues in Expert Systems Design
  Research on AI Applications in Mathematics (MACSYMA and AM)
  Miscellaneous Applications Research

Applications-oriented AI Research -- Part 2: Medicine
  Overview of Medical Applications Research
  Six Important Medical Systems

Applications-oriented AI Research -- Part 3: Chemistry
  Overview of Applications in Chemistry
  Applications in Chemical Analysis
  The DENDRAL Programs
  CRYSALIS
  Applications in Organic Synthesis

Applications-oriented AI Research -- Part 4: Education
  Historical Overview of AI Research in Educational Applications
  Issues and Components of Intelligent CAI Systems
  Seven Important ICAI Systems

Automatic Programming
  Overview
  Techniques for Program Specification
  Approaches to AP
  Eight Important AP Systems

The following sections of the Handbook are still in preparation and will appear in the third volume:

  Theorem Proving
  Vision
  Robotics
  Information Processing Psychology
  Learning and Inductive Inference
  Planning and Related Problem-solving Techniques

A. Historical Overview

Educational applications of computer technology have been under development since the early 1960s. These applications have included scheduling courses, managing teaching aids, and grading tests. The predominant application, however, has involved using the computer as a device that interacts directly with the student, rather than as an assistant to the human teacher. For this kind of application, there have been three general approaches.

The "ad lib" or "environmental" approach is typified by Papert's LOGO laboratory (Papert, 1970), which allowed students more or less free-style use of the machine. Students are involved in programming; it is conjectured that learning problem-solving methods takes place as a side effect of using tools that are designed to suggest good problem-solving strategies to the student. The second approach uses games and simulations as instructional tools; once again the student is involved in an activity (for example, doing simulated genetics experiments) for which learning is an expected side effect. The third computer application in education is computer-assisted instruction (CAI). Unlike the first two approaches, CAI makes an explicit attempt to instigate and control learning (Howe, 1973). This third use of computer technology in education is the focus of the following discussion.

The goal of CAI research is to construct instructional programs that incorporate well-prepared course material in lessons that are optimized for each student. Early programs were either electronic "page-turners," which printed prepared text, or drill-and-practice monitors, which printed problems and responded to the student's solutions using prestored answers and remedial comments. In the intelligent CAI (ICAI) programs of the 1970s, course material is represented independently of teaching procedures so that problems and remedial comments can be generated differently for each student. Research today focuses on the design of programs that can offer instruction in a manner that is sensitive to the student's strengths, weaknesses, and preferred style of learning. The role of AI in computer-based instructional applications is seen as making possible a new kind of learning environment.

This overview surveys how AI techniques have been used in research attempting to create intelligent computer-based tutors. In the next article, some design issues are discussed and typical components of ICAI systems are described. Subsequent articles describe some important applications of artificial intelligence techniques in instructional programs.

Frame-oriented CAI Systems

The first instructional programs took many forms, but all adhered to essentially the same pedagogical philosophy. The student was usually given some instructional text (sometimes without using the computer) and asked a question that required a brief answer. After the student responded, he was told whether his answer was right or wrong. The student's response was sometimes used to determine his "path" through the curriculum, that is, the sequence of problems he was given (see Atkinson & Wilson, 1969). When the student made an error, the program branched to remedial material.

The courseware author attempts to anticipate every wrong response, prespecifying branches to appropriate remedial material based on his ideas about what might be the underlying misconceptions that would cause each wrong response. Branching on the basis of response was the first step toward individualization of instruction (Crowder, 1962). This style of CAI has been dubbed ad-hoc, frame-oriented (AFO) CAI by Carbonell (1970b), to stress its dependence on author-specified units of information. (The term "frame" as it is used in this context predates the more recent usage in AI research, for which see Article Representation.B7, and refers to a block, page, or unit of information or text.) Design of ad-hoc frames was originally based on Skinnerian stimulus/response principles. The branching strategies of some AFO programs have become quite involved, incorporating the best learning theory that mathematical psychology has produced (Atkinson, 1972; Fletcher, 1976; Kimball, 1973). Many of these systems have been used successfully and are available commercially.
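The branching scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Frame` class, the `next_frame_id` function, and the geography lesson content are all hypothetical and are not taken from any of the AFO systems cited. The point it shows is that every path through the lesson, including each remedial branch, has to be written out in advance by the author.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Frame:
    """One author-written unit: text, a question, and prespecified branches."""
    text: str
    question: str
    answer: str
    next_frame: Optional[str]  # where to go after a correct answer
    # anticipated wrong answer -> id of the remedial frame chosen by the author
    remedial: Dict[str, str] = field(default_factory=dict)
    default_remedial: Optional[str] = None  # used when the error was not anticipated


def next_frame_id(frames, current_id, response):
    """Pick the next frame purely from the author's branch table."""
    frame = frames[current_id]
    reply = response.strip().lower()
    if reply == frame.answer:
        return frame.next_frame
    return frame.remedial.get(reply, frame.default_remedial)


# Hypothetical lesson; the content is invented for illustration.
FRAMES = {
    "q1": Frame("Peru lies on the Pacific coast of South America.",
                "What is the capital of Peru?", "lima", "q2",
                remedial={"bogota": "r_capitals"}, default_remedial="r_review"),
    "q2": Frame("Lima is Peru's largest city.",
                "Which ocean borders Peru?", "pacific", None),
    "r_capitals": Frame("Bogota is the capital of Colombia, not Peru.",
                        "What is the capital of Peru?", "lima", "q2"),
    "r_review": Frame("Review the map of Peru before trying again.",
                      "What is the capital of Peru?", "lima", "q2"),
}
```

An answer the author did not anticipate falls through to the generic `default_remedial` frame. The program has no representation of the subject matter from which it could work out why the answer was wrong.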

Intelligent CAI

In spite of the widespread application of AFO CAI to many problem areas, many researchers believe that most AFO courses are not the best use of computer technology:

In most CAI systems of the AFO type, the computer does little more than what a programmed textbook can do, and one may wonder why the machine is used at all....When teaching sequences are extremely simple, perhaps trivial, one should consider doing away with the computer, and using other devices or techniques more related to the task. (Carbonell, 1970b, pp. 32, 193)

In this pioneering paper, Carbonell goes on to define a second type of CAI that is known today as "knowledge-based" or "intelligent" CAI (ICAI). Knowledge-based systems and the previous CAI systems both have representations of the subject matter they teach, but ICAI systems also carry on a natural language dialogue with the student and use the student's mistakes to diagnose his misunderstandings.

Early uses of AI techniques in CAI were called "generative CAI" (Wexler, 1970), since they stressed the ability to generate problems using a large database representing the subject they taught. (See Koffman & Blount, 1976, for a review of some early generative CAI programs and an example of the possibilities and limitations of this style of courseware.) However, the kind of courseware that Carbonell was describing in his paper was to be more than just a problem generator; it was to be a computer tutor that had the inductive powers of its human counterparts. ICAI programs offer what Brown (1977) calls a reactive learning environment, in which the student is actively engaged with the instructional system and his interests and misunderstandings drive the tutorial dialogue. This goal was expressed by other researchers trying to write CAI programs that extended the medium beyond the limits of frame selection:

Often it is not sufficient to tell a student he is wrong and indicate the correct solution method. An intelligent CAI system should be able to make hypotheses based on a student's error history as to where the real source of his difficulty lies. (Koffman & Blount, 1976)

The Use of AI Techniques in ICAI

The realization of the computer-based tutor has involved increasingly complicated computer programs and has prompted CAI researchers to use artificial intelligence techniques. Artificial intelligence work in natural language understanding, representation of knowledge, and methods of inference, as well as specific AI applications like algebraic simplification, calculus, and theorem proving, has been applied by various researchers toward making CAI programs that are more intelligent and more effective. Early research on ICAI systems focused on representation of the subject matter. Benchmark efforts include SCHOLAR, the geography tutor of Carbonell and Collins (see Article C1), EXCHECK, the logic and set theory tutors by Suppes et al. (Article C7), and SOPHIE, the electronics troubleshooting tutor of Brown and Burton (Article C3). The high level of domain expertise in these programs permits them to be responsive in a wide range of problem-solving interactions.

These ICAI programs are quite different from even the most complicated frame-oriented, branching program.

Traditional approaches to this problem using decision theory and stochastic models have reached a dead end due to their oversimplified representation of learning.... It appears within reach of AI methodology to develop CAI systems that act more like human teachers. (Laubsch, 1975)

However, an AI system that is expert in a particular domain is not necessarily an expert teacher of the material: "ICAI systems cannot be AI systems warmed over" (Brown, 1977). A teacher needs to understand what the student is doing, not just what he is supposed to do. AI programs often use very powerful problem-solving methods that do not resemble those used by humans. In many cases, CAI researchers borrowed AI techniques for representing subject domain expertise but had to modify them, often making the inference routines less powerful, in order to force them to follow human reasoning patterns. The modified routines could then better explain their methods to the student and better understand his methods (Smith, 1976; Goldberg, 1973).

In the mid-1970s, a second phase in the development of ICAI tutors has been characterized by the inclusion of expertise in the tutor regarding (a) the student's learning behavior and (b) tutoring strategies (Brown & Goldstein, 1977). AI techniques are used to construct models of the learner that represent his knowledge in terms of "issues" (see Article C4) or "skills" (Barr & Atkinson, 1975) that should be learned. This model then controls tutoring strategies for presenting the material. Finally, some ICAI programs are now using AI techniques to explicitly represent these tutoring strategies, gaining the advantages of flexibility and modularity of representation and control (Burton & Brown, 1979; Goldstein, 1977; Clancey, 1979a).

References

The best general review of research in ICAI is Brown & Goldstein (1977). Several papers on recent work are collected in a special issue of the International Journal of Man-Machine Studies, Volume 11, 1979.


B. Issues in ICAI Systems Design

The main components of ICAI systems are (a) problem-solving expertise, the knowledge that the system tries to impart to the student; (b) the student model, indicating what the student does and does not know; and (c) tutoring strategies, which specify how the system presents material to the student. (See Self, 1974, for an excellent discussion of the differences and interrelations of the types of knowledge needed in an ICAI program.) Not all of the components are fully developed in every system. Because of the size and complexity of intelligent CAI programs, most researchers tend to concentrate their efforts on the development of a single part of what would constitute a fully usable system. Each component is described briefly below.

The Expertise Module: Representing Domain Knowledge

The "expert" component of an ICAI system is charged with the task of generating problems and evaluating the correctness of student solutions. The system's knowledge of the subject matter was originally envisioned as a huge static database that incorporated all the facts to be taught. This idea was implicit in the early drill-and-practice programs and was made explicit in generative CAI (see Article A). Representation of subject matter expertise in this way, using semantic nets (Article Representation.B2), has been useful for generating and answering questions involving causal or relational reasoning (Carbonell & Collins, 1973; Laubsch, 1975; and see Articles C1 and C2 on the SCHOLAR and WHY systems).

Recent systems have used procedural representation of domain knowledge, for example, how to take measurements and make deductions (see Article Representation). This knowledge is represented as procedural experts that correspond to subskills that a student must learn in order to acquire the complete skill being taught (Brown, Burton, & Bell, 1975). Production rules (Article Representation.B3) have been used to construct modular representations of skills and problem-solving methods (Goldstein, 1977; Clancey, 1979a). In addition, Brown & Burton (1975) have pointed out that multiple representations are useful for answering student questions and for evaluating partial solutions to a problem (e.g., a semantic net of facts about an electronic circuit and procedures simulating the functional behavior of the circuit). Stevens & Collins (1978) considered an evolving series of "simulation" models that can be used to reason metaphorically about the behavior of causal systems.

It should be noted that not all ICAI systems can actually solve the problems they pose to a student. For example, BIP, the BASIC Instructional Program (Barr, Beard, & Atkinson, 1976), cannot write or analyze computer programs: BIP uses sample input/output pairs (supplied by the course authors) to test students' programs. Similarly, the procedural experts in SOPHIE-I could not debug an electronic circuit. In contrast, the production rule representation of domain knowledge used in WUMPUS and GUIDON enables these programs to solve problems independently, as well as to criticize student solutions (Goldstein, 1977; Clancey, 1979a). Being able to solve the problems, preferably in all possible ways, correct and incorrect, is necessary if the ICAI program is to make fine-grained suggestions about the completion of partial solutions.
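Checking a student program against author-supplied input/output pairs can be sketched as follows. This is a minimal illustration, not BIP's actual mechanism: BIP students wrote BASIC programs, whereas here a Python callable stands in for the student's program, and the function name `failed_io_pairs` and the doubling task are hypothetical.

```python
def failed_io_pairs(student_program, io_pairs):
    """Run the student's program on each author-supplied input and
    collect (input, expected, actual) for every mismatch."""
    failures = []
    for given, expected in io_pairs:
        try:
            actual = student_program(given)
        except Exception as exc:  # a crash also counts as a failure
            actual = exc
        if actual != expected:
            failures.append((given, expected, actual))
    return failures


# Hypothetical task: "write a program that doubles its input".
IO_PAIRS = [(1, 2), (2, 4), (3, 6)]


def student_ok(n):
    return n * 2


def student_buggy(n):
    return n + 2  # coincidentally right for n == 2
```

The checker reports that `student_buggy` fails on inputs 1 and 3 but cannot say why, and the pair `(2, 4)` passes by coincidence. This is the limitation the text describes: without the ability to solve or analyze the problem itself, the system can judge only final outputs and cannot make suggestions about a partial solution.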

An important idea in this connection is that of an articulate expert (Goldstein, 1977). Typical expert AI programs have data structures and processing algorithms that do not necessarily mimic the reasoning steps used by humans and are, therefore, considered "opaque" to the user. An articulate expert for an ICAI system, by contrast, must be designed to enable the explanation of each problem-solving decision that it makes in terms that correspond (at some level of abstraction) to those of a human problem solver. For example, the electronic circuit simulator underlying SOPHIE-I (see Article C3), which is used to check the consistency of a student's hypotheses and to answer some of his questions, is an opaque expert on the functioning of the circuit. It is a complete, accurate, and efficient model of the circuit, but its mechanisms are never revealed to the student since they are certainly not the mechanisms that he is expected to acquire. In WEST, on the other hand, while a (complete and efficient) opaque expert is used to determine the range of possible moves that the student could have made with a given roll of the dice, an articulate expert, which only models pieces of the game-playing expertise, is used to determine possible causes for less-than-optimal student moves.

ICAI systems are distinguished from earlier CAI approaches by the separation of teaching strategies from the subject expertise to be taught. However, the separation of subject-area knowledge from instructional planning requires a structure for organizing the expertise that captures the difficulty of various problems and the interrelationships of course material. Modeling a student's understanding of a subject is closely related conceptually to figuring out a representation for the subject itself or for the language used to discuss it.

Trees and lattices showing prerequisite interactions have been used to organize the introduction of new knowledge or topics (Koffman & Blount, 1975). In BIP this lattice took the form of a curriculum net that related the skills to be taught to example programming tasks that exercised each skill (Barr, Beard, & Atkinson, 1976). Goldstein (1979) called the lattice a syllabus in the WUMPUS program and emphasized the developmental path that a learner takes in acquiring new skills. For arithmetic skills used in WEST, Burton & Brown (1976) use levels of issues. Issues proceed from the use of arithmetic operators to strategies for winning the game, to meta-level considerations for improving performance. Burton and Brown believe that when the skills are "structurally independent," the order of their presentation is not particularly crucial. This representation is useful for modeling the student's knowledge and coaching him on different levels of abstraction. Stevens, Collins, & Goldin (1978) have argued further that a good human tutor does not merely traverse a predetermined network of knowledge in selecting material to present. Rather, it is the process of ferreting out student misconceptions that drives the dialogue.
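A prerequisite lattice of the kind mentioned above can be sketched as a mapping from each topic to the topics that must be mastered first. The skill names and the `ready_topics` function below are hypothetical and are not drawn from BIP's curriculum net or the WUMPUS syllabus; the sketch only illustrates how such a structure can drive the choice of what to introduce next.

```python
# Hypothetical prerequisite lattice for an introductory programming course:
# each topic maps to the set of topics that must be mastered before it.
PREREQS = {
    "print": set(),
    "variables": {"print"},
    "loops": {"variables"},
    "arrays": {"variables", "loops"},
}


def ready_topics(prereqs, mastered):
    """Topics not yet mastered whose prerequisites have all been mastered."""
    return sorted(
        topic
        for topic, needs in prereqs.items()
        if topic not in mastered and needs.issubset(mastered)
    )
```

With nothing mastered, only `"print"` is ready. Once `"print"` and `"variables"` are mastered, `"loops"` becomes ready while `"arrays"` stays blocked. A tutor that merely walks this structure is the kind of predetermined traversal that Stevens, Collins, and Goldin argue a good human tutor goes beyond.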

The Student Model

The modeling module is used to represent the student's understanding of the material to be taught. Much recent ICAI research has focused on this component. The purpose of modeling the student is to make hypotheses about his misconceptions and suboptimal performance strategies so that the tutoring module can point them out, indicate why they are wrong, and suggest corrections. It is advantageous for the system to be able to recognize alternate ways of solving problems, including the incorrect methods that the student might use resulting from systematic misconceptions about the problem or from inefficient strategies.


Some early frame-oriented CAI systems used mathematical stochastic learning models, but this approach failed because it only modeled the probability that a student would give a specific response to a stimulus. In general, knowing the probability of a response is not the same as knowing what a student understands; the former has little diagnostic power (Laubsch, 1975).

Typical uses of AI techniques for modeling student knowledge include (a) simple pattern recognition applied to the student's response history and (b) flags in the subject matter semantic net or in the rule base representing areas that the student has mastered. In these ICAI systems, a student model is formed by comparing the student's behavior to that of the computer-based "expert" in the same environment. The modeling component marks each skill according to whether evidence indicates that the student knows the material or not. Carr & Goldstein (1977) have termed this component an overlay model: the student's understanding is represented completely in terms of the expertise component of the program (see Article C5).
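A minimal sketch of an overlay model is given below in Python. The skill names, the evidence counts, and the 0.5 threshold are assumptions made for illustration; they are not taken from Carr & Goldstein's program.

```python
from dataclasses import dataclass, field

# Hypothetical skills making up the "expert" component.
EXPERT_SKILLS = {"addition", "subtraction", "multiplication", "division"}

@dataclass
class OverlayModel:
    """Student knowledge recorded purely as marks on the expert's skills."""
    evidence: dict = field(default_factory=dict)  # skill -> [used, missed]

    def observe(self, skill, used):
        """Record one occasion on which the expert would have used `skill`."""
        if skill not in EXPERT_SKILLS:
            raise ValueError(f"{skill!r} is not part of the expert model")
        counts = self.evidence.setdefault(skill, [0, 0])
        counts[0 if used else 1] += 1

    def knows(self, skill, threshold=0.5):
        """True if the student used the skill on more than `threshold` of occasions."""
        used, missed = self.evidence.get(skill, (0, 0))
        total = used + missed
        return total > 0 and used / total > threshold

    def unmastered(self):
        """Expert skills for which there is no sufficient evidence of mastery."""
        return sorted(s for s in EXPERT_SKILLS if not self.knows(s))
```

Because every mark refers to an expert skill, a model of this kind cannot express a student procedure that the expert does not have.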

In contrast, another approach is to model the student's knowledge not as a subset of the expert's, but rather as a perturbation or deviation from the expert's knowledge, that is, a "bug." (See, for example, the SOPHIE and BUGGY systems, Articles C3 and CB.) There is a major difference between the overlay and "buggy" approaches to modeling: in the latter approach it is not assumed that, except for "knowing" less, the student reasons as the expert does; the student's reasoning can be substantially different from expert reasoning.

Other information that might be accumulated in the student model includes the student's preferred modes for interacting with the program, a rough characterization of his level of ability, a consideration of what he seems to forget over time, and an indication of what his goals and plans seem to be for learning the subject matter.

Major sources of evidence used to maintain the student model can be characterized as: (a) implicit, from student problem-solving behavior; (b) explicit, from direct questions asked of the student; (c) historical, from assumptions based on the student's experience; and (d) structural, from assumptions based on some measure of the difficulty of the subject material (Goldstein, 1977). Historical evidence is usually determined by asking the student to rate his level of expertise on a scale from "beginner" to "expert." Early programs like SCHOLAR used only explicit evidence. Recent programs have concentrated on inferring "implicit" evidence from the student's problem-solving behavior. This approach is complicated because it is limited by the program's ability to recognize and describe the strategies being used by the student. Specifically, when the expert program indicates that an inference chain is required for a correct result and the student's observable behavior is wrong, how is the modeling program to know which of the intermediate steps are unknown or wrongly applied by the student? This is the apportionment of credit/blame problem; it has been an important focus of WEST research.

Because of inherent limitations in the modeling process, it is useful for a "critic" in the modeling component to measure how closely the student model actually predicts the student's behavior. Extreme inconsistency or an unexpected demonstration of expertise in solving problems might indicate that the representation being used by the program does not capture the student's approach. Finally, Goldstein (1977) has suggested that the modeling process should attempt both to measure whether or not the student is actually learning and to discern what teaching methods are most effective. Much work remains to be done in this area.
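One simple way to realize such a critic, sketched here in Python, is to compare the moves the student model predicted with the moves the student actually made. The agreement measure and the 0.6 threshold are assumptions for illustration only; no particular system is being described.

```python
def prediction_agreement(predicted, observed):
    """Fraction of steps on which the model's prediction matched the student."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    if not predicted:
        raise ValueError("no steps to compare")
    matches = sum(p == o for p, o in zip(predicted, observed))
    return matches / len(predicted)

def model_is_suspect(predicted, observed, threshold=0.6):
    """Flag the student model when it predicts behavior poorly."""
    return prediction_agreement(predicted, observed) < threshold
```

A low agreement score would suggest that the representation in use does not capture the student's approach, whether because he is inconsistent or because he is more expert than the model assumes.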


The Tutoring Module

The tutoring module of ICAI systems must integrate knowledge about natural language dialogues, teaching methods, and the subject area to be taught. This is the module that communicates with the student: selecting problems for him to solve, monitoring and criticizing his performance, providing assistance upon request, and selecting remedial material. The design of this module involves issues like "When is it appropriate to offer a hint?" or "How far should the student be allowed to go down the wrong track?"

These are just some of the problems which stem from the basic fact that teaching is a skill which requires knowledge additional to the knowledge comprising mastery of the subject domain. (Brown, 1977)

This additional knowledge, beyond the representation of the subject domain and of the student's knowledge, is about how to teach.

Most ICAI research has explored teaching methods based on diagnostic modeling in which the program debugs the student's understanding by posing tasks and evaluating his response (Collins, 1976; Brown & Burton, 1975; Koffman & Blount, 1975). The student is expected to learn from the program's feedback which skills he uses wrongly, which skills he does not use (but could use to good advantage), etc. Recently, there has been more concern with the possibility of saying just the right thing to the student so that he will realize his own inadequacy and switch to a better method (Carr & Goldstein, 1977; Burton & Brown, 1979; Norman, Gentner, & Stevens, 1976). This new direction is based on attempts to make a bug "constructive" by establishing for the student that there is something inadequate in his approach, and giving enough information so that the student can use what he already knows to focus on the bug and characterize it so that he avoids this failing in the future.

However, it is by no means clear how "just the right thing" is to be said to the student. We do know that it depends on having a very good model of his understanding process (the methods and strategies he used to construct a solution). Current research is focusing on means for representing and isolating the bugs themselves (Stevens, Collins, & Goldin, 1978; Brown & Burton, 1978).

Another approach is to provide an environment that encourages the student to think in terms of debugging his own knowledge. In one BIP experiment (Wescourt & Hemphill, 1978), explicit debugging strategies (for computer programming) were conveyed in a written document, and then a controlled experiment was undertaken to see whether this training fostered a more rational approach for detecting faulty use of (programming) skills.

Brown, Collins, and Harris (1978) suggest that one might foster the ability to construct hypotheses and test them (the basis of understanding in their model) by setting up problems in which the student's first guess is likely to be wrong, thus requiring him to focus on how he detects that his guess is wrong and how he then intelligently goes about revising it.

The Socratic method used in WHY (Stevens & Collins, 1977) involves questioning the student in a way that will encourage him to reason about what he knows and thereby modify his conceptions. The tutor's strategies are constructed by analyzing protocols of real-world student/teacher interactions.


Another teaching strategy that has been successfully implemented on several systems is called coaching (Goldstein, 1977). Coaching programs are not concerned with covering a predetermined lesson plan within a fixed time (in contrast with SCHOLAR). Rather, the goal of coaching is to develop the acquisition of skill and general problem-solving abilities, and it works by engaging the student in a computer game (see Article A). In a coaching situation, the immediate aim of the student is to have fun, and skill acquisition is an indirect consequence. Tutoring comes about when the computer coach, which is "observing" the student's play of the game, interrupts him and offers new information or suggests new strategies. A successful computer coach must be able to discern what skills or knowledge the student might acquire, based on his playing style, and to judge effective ways to intercede in the game and offer advice. WEST and WUMPUS (Articles C4 and C5) are both coaching programs.

Socratic tutoring and coaching represent different styles for communicating with the student. All mixed-initiative tutoring involves following some dialogue strategy, which involves decisions about when and how often to question the student and methods for presentation of new material and review. For example, a coaching program, by design, is non-intrusive and only rarely lectures. On the other hand, a Socratic tutor questions repetitively, requiring the student to pursue certain lines of reasoning. Recently ICAI research has turned to making explicit these alternative dialogue management principles. Collins (1976) has pioneered the careful investigation and articulation of teaching strategies. Recent work has explored the representation of these strategies as production rules (see Clancey, 1979a, and Article C2 on Collins and Stevens' WHY system).

For example, the tutoring module in the GUIDON program, which discusses MYCIN-like "case diagnosis" tasks with a student (see Clancey, 1979a, and Article C1 on MYCIN), has an explicit representation of discourse knowledge. Tutoring rules select alternative dialogue formats on the basis of economy, domain logic, and tutoring or student modeling goals. Arranged into procedures, these rules cope with various recurrent situations in the tutorial dialogue, for example: introducing a new topic, examining a student's understanding after he asks a question that indicates unexpected expertise, relating an inference to one just discussed, giving advice to the student after he makes a hypothesis about a subproblem, and wrapping up the discussion of a topic.
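The idea of representing tutoring strategies as production rules can be sketched in Python as follows. The rule names, the situation keys, and the first-match selection policy are all hypothetical; they are not GUIDON's actual rules or control scheme.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class TutoringRule:
    name: str
    condition: Callable[[dict], bool]  # tests the current dialogue situation
    action: str                        # dialogue move to make when it fires

# Hypothetical rules for three of the recurrent situations named in the text.
RULES = [
    TutoringRule(
        "unexpected-expertise",
        lambda s: bool(s.get("asked_question") and s.get("shows_unexpected_expertise")),
        "examine the student's understanding",
    ),
    TutoringRule("new-topic", lambda s: bool(s.get("topic_is_new")), "introduce the topic"),
    TutoringRule("topic-finished", lambda s: bool(s.get("topic_done")), "wrap up the discussion"),
]

def select_action(situation):
    """Return the action of the first rule whose condition holds, else None."""
    for rule in RULES:
        if rule.condition(situation):
            return rule.action
    return None
```

Keeping the rules as data, separate from the interpreter that selects among them, is what makes the dialogue strategy explicit and open to inspection.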

Conclusion

In general, ICAI programs have only begun to deal with the problems of representing and acquiring teaching expertise and of determining how this knowledge should be integrated with general principles of discourse. The programs described in the articles to follow have all investigated some aspect of this problem, and none offers an "answer" to the question of how to build a computer tutor. Nevertheless, these programs have demonstrated potential tutorial skill, sometimes showing striking insight into students' misconceptions. Research continues toward making viable AI contributions to computer-based education.

References

Goldstein (1977) gives a clear discussion of the distinctions between the modules discussed here, concentrating on the broader, theoretical issues. Burton & Brown (1976) also discuss the components of ICAI systems and their interactions and present a good example. Self (1974) is a classic discussion of the kinds of knowledge needed in a computer-based tutor.


C. ICAI Systems

C1. SCHOLAR

An important aspect of tutoring is the ability to generate appropriate questions for the student. These questions can be used by the tutor to indicate the relevant material to be learned, to determine the extent of a student's knowledge of the problem domain, and to identify any misconceptions that he might have. Given that the knowledge base of a tutoring program can't contain all of the "facts" that are true about the domain, the tutor should be able to reason about what it knows and make plausible inferences about facts in the domain. In addition to responding to the student's questions, the tutor should be able to take the initiative during a tutoring dialogue by generating good tutorial questions.

SCHOLAR is one such mixed-initiative computer-based tutorial system; both the system and the student can initiate conversation by asking questions. SCHOLAR was the pioneering effort in the development of computer tutors capable of coping with unanticipated student questions and of generating subject matter in varying levels of detail, according to the context of the dialogue. Both the student's input and the program's output are in English sentences.

The original system, created by Jaime Carbonell, Allan Collins, and their colleagues at Bolt, Beranek and Newman, Inc., tutored students about simple facts in South American geography (Carbonell, 1970b). SCHOLAR uses a number of tutoring strategies for composing relevant questions, determining whether or not the student's answers are correct, and answering questions from the student. Both the knowledge representation scheme (see below) and the tutorial capabilities are applicable to other domains besides geography. For example, NLS-SCHOLAR was developed to tutor computer-naive people in the use of a complex text-editing program (Grignetti, Hausman, & Gould, 1975).

In addition to investigating the nature of tutorial dialogues and human plausible reasoning, the SCHOLAR research project explored a number of AI issues, including:

1. How can real-world knowledge be stored effectively for the fast, easy retrieval of relevant facts needed in tutoring?

2. What general reasoning strategies are needed to make appropriate inferences from the typically incomplete database of the tutor program?

3. To what extent can these strategies be independent of the domain being discussed (i.e., be dependent only on the form of the representation)?

The Knowledge Base: Semantic Nets

In SCHOLAR, knowledge about the domain being tutored is represented in a semantic net (see Article Representation.Ba). Each node or "unit" in the net, corresponding to some geographical object or concept, is composed of the name associated with that node and a set of properties. These properties are lists of attribute-value pairs. For example, Figure 1 shows a representation of the unit for Peru:

    PERU: ((EXAMPLE-NOUN PERU))
          (I 0)                            "importance" of unit is high
          (SUPERC (I 0) COUNTRY)           link to superordinate units
          (SUPERP (I 6) SOUTH/AMERICA)
          (LOCATION (I 0)                  values of LOCATION attribute follow:
             (IN (I 0) (SOUTH/AMERICA (I 0) WESTERN))
             (ON (I 0) (COAST (I 0) (OF (I 0) PACIFIC)))
             (LATITUDE (I 4) (RANGE (I 0) -18 0))
             (LONGITUDE (I 5) (RANGE (I 0) -82 -68))
             (BORDERING/COUNTRIES (I 1)
                (NORTHERN (I 1) (LIST COLUMBIA ECUADOR))
                (EASTERN (I 1) BRAZIL)

Figure 1. The unit for PERU.

Attributes can be English words (other units) that are defined elsewhere in the net, or one of several special attributes such as "SUPRA" for superattribute, "SUPERC" for superconcept or superordinate, "SUPERP" for superpart, or case structure attributes used in parsing (see below). An example of SUPRA might be the fact that "fertile" refers to "soil" and "soil" refers to "topography"; of SUPERP, that Peru is part of South America; of SUPERC, that Peru is a country. Values can also be importance tags, like the expressions "(I 0)" after LOCATION in Figure 1 and "(I 1)" after EASTERN: the lower the number, the more important the property. SCHOLAR uses these tags to measure the relevance of a node with respect to the topic under discussion (see below).
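To make the role of importance tags concrete, here is a small Python sketch of a unit as attribute-value pairs, each carrying a tag. The flattened dictionary layout, the `EASTERN-BORDER` attribute name, and the `relevant_properties` helper are assumptions for illustration; SCHOLAR itself stored nested list structures like the one in Figure 1.

```python
# A flattened, hypothetical rendering of part of the PERU unit:
# attribute -> (importance tag, value). Lower tags mean more important.
PERU = {
    "name": "PERU",
    "properties": {
        "SUPERC": (0, "COUNTRY"),
        "SUPERP": (6, "SOUTH/AMERICA"),
        "LATITUDE": (4, (-18, 0)),
        "LONGITUDE": (5, (-82, -68)),
        "EASTERN-BORDER": (1, "BRAZIL"),
    },
}

def relevant_properties(unit, max_tag):
    """Properties whose importance tag is at most max_tag, most important first."""
    picked = [
        (tag, attr, value)
        for attr, (tag, value) in unit["properties"].items()
        if tag <= max_tag
    ]
    picked.sort(key=lambda item: item[0])  # stable sort on the tag only
    return [(attr, value) for _, attr, value in picked]
```

Raising `max_tag` corresponds to going into more detail about the unit; a tutor that is short of time would keep it low.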

Tutorial Dialogue

An important part of the SCHOLAR research has been an emphasis on the nature of tutorial dialogue, in particular the Socratic style of tutoring, where the tutor attempts to diagnose the student's misconceptions and then present material that will force the student to see his own errors (Collins, Warnock, & Passafiume, 1974). Many human tutorial protocols have been analyzed to see what strategies human students follow, in order to try to incorporate these strategies in the system (see also Article C2 on the group's continuing research in this direction). The following example is an excerpt from a dialogue with the South American geography tutor. The student's questions and answers are preceded by ** and annotations are shown in italics:


What is the language of Argentina?
** PROBABLY SPANISH

Very good. Approx what is the area of Argentina?

Student changes topic...

** TELL ME SOMETHING ABOUT PERU

Peru is a country. It is located in South America. The capital of Peru is Lima. Now answer the question you didn't answer before.

...and finally answers the question.

** THE AREA OF ARGENTINA IS 1,100,000 SQUARE MILES

Approximately true, but a more exact value is 1,200,000. The eastern bordering country of Peru is ---.

Student makes an error and is corrected...

** CATHOLICISM

Wrong. Catholicism is not a country but a religion. The correct answer is: Brazil. Where is Peru?

...and once again changes topic.

** IS ASUNCION HOT?
Since Asuncion is part of Paraguay, yes.

** WHAT IS THE MOST IMPORTANT DIFFERENCE BETWEEN PERU AND PARAGUAY?
The primary difference is: The population of Peru is larger by a factor of 7.8.

The natural language processing used by SCHOLAR is based on a case grammar system (Article Natural Language.B3b), and the semantic interpretation of a student's response is heavily guided by the geography knowledge in the semantic net. Natural language processing is important in SCHOLAR because it allows the student to take the initiative and ask questions that were not anticipated by the program. However, comprehension of English input is facilitated by a taxonomy of expected "question types." The case grammar is also used by the system to construct questions and presentations of new material from the semantic network. English output is composed of short, simple sentences, with no embedded clauses and a limited repertoire of verbs, generally some form of the verb "to be."

A simple agenda is used to keep track of topics that are being discussed. Timing considerations and relevance (importance tags) affect the generation and pruning of topics on this agenda. Continuity between questions is weak, however, since SCHOLAR does not plan a series of questions to make a point. SCHOLAR is capable of diagnosing a student's confusion only by following up one question with a related question.

Making Inferences

SCHOLAR's inference strategies, for answering student questions and evaluating student answers to its questions, are designed to cope with the incompleteness of the information stored in the semantic net database. Some of the important strategies used to reason with incomplete knowledge are given below. These abilities have been explored further in current research dealing with default reasoning (Reiter, 1978) and plausible reasoning (Collins, 1978).

Intersection search. Answering questions of the form "Can X be a Y?" (e.g., "Is Buenos Aires a city in Brazil?") is done by an intersection search: The superconcept (SUPERC) arcs of both nodes for X and Y are traced until an intersection is found (i.e., a common superconcept node is found). If there is no intersection, the answer is "NO." If there is an intersection node Q, SCHOLAR answers as follows:

If Q=Y, then "YES";
If Q=X, then "NO, Y IS AN X."

For example, the question "Is Buenos Aires in Brazil?" is answered YES because Brazil is a SUPERC of Buenos Aires in the net (Q=Y):

    SOUTH AMERICA
         |  (Superconcept)
    BRAZIL (Y)
         |  (Superconcept)
    BUENOS AIRES (X)

But the question "Is Brazil in Buenos Aires?" gets the response "NO. BRAZIL is a country."

    SOUTH AMERICA
         |  (Superconcept)
    BRAZIL (X)
         |  (Superconcept)
    BUENOS AIRES (Y)

Common superordinate. Otherwise, if Q is not X or Y, the program focuses on the two elements that have Q as a common superordinate. If they are contradictory (contain suitable CONTRA properties) or have distinguishing, mutually exclusive properties (e.g., different LOCATIONS), the answer is "NO"; otherwise the system answers "I DON'T KNOW." Answering "Is X a part of Y?" questions is similar, except SUPERP (superpart) arcs are used for the intersection process.
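The intersection search and the common-superordinate test described above can be sketched in Python as follows. The tiny net, the single-parent `SUPERC` table, and the `CONTRA` set of mutually exclusive pairs are hypothetical simplifications, not SCHOLAR's data structures.

```python
# Hypothetical net: each node has at most one SUPERC (superconcept) link.
SUPERC = {
    "LIMA": "CITY",
    "AMAZON": "RIVER",
    "CITY": "PLACE",
    "COUNTRY": "PLACE",
    "RIVER": "PLACE",
    "ANDES": "MOUNTAIN-RANGE",
}
# Pairs of concepts marked as contradictory (standing in for CONTRA properties).
CONTRA = {frozenset({"CITY", "COUNTRY"})}

def chain(node):
    """The node followed by its successive superconcepts."""
    path = [node]
    while path[-1] in SUPERC:
        path.append(SUPERC[path[-1]])
    return path

def can_be(x, y):
    """Answer 'Can X be a Y?' by tracing SUPERC arcs to an intersection Q."""
    x_path, y_path = chain(x), chain(y)
    q = next((n for n in x_path if n in y_path), None)
    if q is None:
        return "NO"
    if q == y:
        return "YES"
    if q == x:
        return f"NO, {y} IS A {x}"
    # Q is a common superordinate: compare the two elements directly below it.
    x_elem = x_path[x_path.index(q) - 1]
    y_elem = y_path[y_path.index(q) - 1]
    if frozenset({x_elem, y_elem}) in CONTRA:
        return "NO"
    return "I DON'T KNOW"
```

Replacing `SUPERC` with a table of SUPERP links would give the corresponding sketch for "Is X a part of Y?" questions.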

Open and closed sets. In order to look for all objects in the system that satisfy some condition (e.g., "How many cities in Columbia are on the Atlantic?"), a distinction must be made about whether the resulting set of these objects is closed (explicitly contains all such objects) or open (contains some and need not contain all such objects). In SCHOLAR's net, sets are tagged by the course author as either open or closed, thus giving an indication of the incompleteness of the system's knowledge.

While it is easy for SCHOLAR to answer questions (such as, "Is France a country in South America?") about closed sets, similar questions about open sets require the use of special techniques: SCHOLAR must be able to reason about the extent of its knowledge. For example, if we ask SCHOLAR whether or not rubber is a product of, say, Guyana, and if there is no explicit information about Guyanese rubber production in the semantic net, SCHOLAR starts to reason with what it knows: It compares the importance tags of the agricultural products of Guyana with the importance tags for rubber in countries that it knows produce rubber. It happens that Peru is a known rubber producer. SCHOLAR looks at the I-tag of rubber for Peru and compares this tag to the largest (least important) I-tags associated with Guyana. If it finds that these are comparable, SCHOLAR infers that it knows as much about Guyana (up to some large I-tag value) as it knows about Peru. Therefore SCHOLAR infers that it would know about rubber production in Guyana if it were important. Since rubber is not listed for Guyana, SCHOLAR makes the plausible (though uncertain) conclusion that rubber is not produced there. At this point SCHOLAR answers that it doesn't know for certain and gives the information about rubber and Peru.
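The rubber argument can be approximated by the following Python sketch. The product lists and I-tag values are invented for illustration, and "comparable" is reduced to a simple numeric comparison, which is an assumption rather than SCHOLAR's actual test.

```python
# Hypothetical open sets of products with importance tags (lower = more important).
PRODUCTS = {
    "PERU": {"COPPER": 1, "FISH": 2, "RUBBER": 3},
    "GUYANA": {"SUGAR": 1, "BAUXITE": 2, "RICE": 4},
    "URUGUAY": {"WOOL": 1},
}

def produces(country, product):
    """Plausible answer to 'Is <product> a product of <country>?'"""
    known = PRODUCTS.get(country, {})
    if product in known:
        return "YES"
    tags_elsewhere = [p[product] for p in PRODUCTS.values() if product in p]
    if not known or not tags_elsewhere:
        return "I DON'T KNOW"
    # How unimportant a fact about this country the net is known to hold.
    depth = max(known.values())
    if depth >= min(tags_elsewhere):
        # Facts of this importance are recorded for the country, yet the
        # product is absent: conclude, with uncertainty, that it is not produced.
        return "PROBABLY NOT"
    return "I DON'T KNOW"
```

The comparison on `depth` is where knowledge about the extent of knowledge enters: the absence of a fact is informative only when the net is known to go at least that deep for the country in question.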

SCHOLAR's use of knowledge about the extent of its knowledge in this kind of plausible reasoning is unique in AI research and represents an application of meta-level knowledge (see Representation.Overview).

Summary

The inferencing strategies used by SCHOLAR are independent of the content of the semantic net, and are applicable in different domains. The inferences produced are fairly natural; that is, they cope with the incomplete knowledge by employing reasoning processes similar to those that people use. The SCHOLAR project as a whole provides an ongoing environment for research on discourse, teaching strategies, and human plausible reasoning (see Article C2 on recent research, including the WHY system).

References

Carbonell (1970a) is a classic paper, defining the field of ICAI and introducing the SCHOLAR system. Collins (1976) is an illuminating study of human tutorial dialogues. Collins et al. (1976) discusses inference mechanisms, and Collins (1978) reports extended research on human plausible reasoning. Grignetti, Hausman, & Gould (1976) describes NLS-SCHOLAR.


C2. WHY

Recent research by Allan Collins and his colleagues at Bolt, Beranek and Newman, Inc. has focused on developing [...] a system to discuss complex systems. Their earlier work on SCHOLAR, which tutors facts about South American geography, was extended to investigate the nature of tutorial dialogues about subject matter that [...], where the causal and temporal interrelations between the concepts in the domain [...] and where students' errors could involve not only forgotten facts but also misconceptions about why processes work the way they do. Stevens & Collins (1977) [...] a new system, called WHY, that [...] a geophysical process that is a function of [...] sufficient to account for rainfall. [...]

1. [...]

2. What types of misconceptions do students have? How do tutors diagnose these misconceptions from the errors students make?

3. What are the abstractions and viewpoints that tutors use to explain physical processes?

By analyzing tutorial dialogues [...], they identify elements of a theory of tutoring [...], which is then [...] for further investigation. The [...] several iterations of this sort. The work [...] topic above, the nature of Socratic tutoring.

Socratic Tutoring Heuristics

Collins (1976) argues that [...] is best accomplished by dealing with specific cases and trying to generalize from them. Socratic dialogue is especially appropriate for complex subjects where factors interact and where [...] the phenomenon under consideration [...]. The current version of the WHY system incorporates 24 heuristics which control the student/system interaction. An example heuristic is:

If the student gives as an explanation of causal dependence one or more factors that are not necessary,

then pick a counterexample with the wrong value of the factor and ask the student why his causal dependence doesn't hold in that case.
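The example heuristic can be sketched as a search over known cases for one in which the outcome holds although the suggested factor has the "wrong" value. The case table, its entries, and the function name below are illustrative assumptions, not WHY's knowledge base.

```python
# Hypothetical case base: place -> factor values and outcome.
CASES = {
    "Java": {"heavy rainfall": True, "grows rice": True},
    "Egypt": {"heavy rainfall": False, "grows rice": True},
    "Sahara": {"heavy rainfall": False, "grows rice": False},
}

def counterexample_for_unnecessary_factor(factor, outcome):
    """Find a place where the outcome holds even though the factor is absent."""
    for place, facts in CASES.items():
        if facts[outcome] and not facts[factor]:
            return place
    return None

def challenge(factor, outcome):
    """Phrase the counterexample as a question for the student, if one exists."""
    place = counterexample_for_unnecessary_factor(factor, outcome)
    if place is None:
        return None
    return f"Why is it that {place} {outcome}, although it lacks {factor}?"
```

If no such case exists in the table, the heuristic simply does not apply and the tutor would fall back on another rule.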

This rule forces the student to consider the necessity of a particular factor. For example, if the student gives rainfall as a reason for growing rice, then the computer-generated counterexample "Why do they grow rice in Egypt, where there isn't much rainfall?" challenges the student's explanation of rice growing. These heuristic rules are designed to facilitate tutorial dialogues in which students must consider combinations of factors that are necessary for rainfall, eliminate irrelevant factors, and attempt to generalize from specific cases to general conditions. (See Collins, 1976, for a complete discussion of the tutoring rules.)

WHY's knowledge base about rainfall is represented in script-like data structures, which encode temporal relations in the rainfall process; for example, "First water evaporates from an ocean or sea, then the water-laden air mass is carried over land by winds, which in turn causes the air mass to cool, which causes precipitation." (See Schank & Abelson, 1977, on scripts, as well as Article Representation.B?.) This knowledge representation is adequate for capturing many of the characteristics of a tutorial dialogue, but there are other kinds of knowledge about rainfall that it does not represent, as discussed below.

The following excerpt is from a dialogue with the current version of the WHY system. The system prompts the student to suggest causes of rainfall, to look for prior or intermediate causes, and finally to suggest a general rule. When such a rule is proposed, the system finds a counterexample and forces revision of the rule to account for it. The system proceeds in this way until a rule of sufficient generality is found. The protocol is annotated with the heuristics employed by the system at each juncture in the conversation.

Heuristic 1: Ask for a prediction about a particular case.

Do you think the Amazon jungle has heavy rainfall or light rainfall?
** HEAVY RAINFALL

Heuristic 2: Ask for prior causes.

Why does the Amazon jungle have heavy rainfall?
** BECAUSE ITS NEAR THE ATLANTIC
Yes, the Amazon jungle is near the equatorial Atlantic.

Heuristic 3: Ask for intermediate causes.

How does that affect rainfall in the Amazon jungle?
** THE WATER IN THE CURRENT IS WARM

(Section Omitted)

Heuristic 4: Ask for subsequent causes.

What happens after the moisture-filled air reaches the land?
** I DON'T KNOW

Heuristic 5: Suggest a factor and ask if it is a cause.

Do you think the Andes mountain range has any effect on the rainfall in the Amazon jungle?
** YES

Heuristic 3: Ask for intermediate causes.

How?
** THE PREVAILING WIND HITS THE ANDES AND THE AIR COOLS

Heuristic 3: Ask for intermediate causes.

How does the fact that prevailing wind hits the Andes lead to air cooling?

(Section Omitted)

Heuristic 6: Form a general rule for an insufficient cause.

Do you think that any place with mountains has heavy rainfall?
** YES

Heuristic 7: Pick a counterexample for an insufficient cause, and Heuristic 2: Ask for prior causes.

Southern California has mountains. Why doesn't Southern California have heavy rainfall?

Current Research

Tutorial goals. One of the shortcomings of the existing system is that it doesn't have long-term "goals" for the tutorial dialogue. Implicit in the tutorial rules is some idea about local management of the interaction, but a global strategy about the tutoring session is absent. Human tutors, however, admit to having goals like "Concentrate on one particular part of the causal structure of rainfall at a time," or "Clear up one misconception before discussing another." Stevens & Collins (1977) set about codifying these goals and strategies for incorporation into the WHY system. They analyzed tutoring protocols in which human tutors commented on what they thought the students did and didn't know, and on why they responded to the students as they did. From this analysis, two top-level goals became apparent:

1. Refine the student's causal structure, starting with the most important factors in a particular process and gradually incorporating more subtle factors.

2. Refine the student's procedures for applying his causal model to novel situations.


Student misconceptions. The top-level goals involve subgoals of identifying and correcting the student's misconceptions. Stevens & Collins (1977) classified these subgoals into five categories corresponding to types of bugs and how to correct them:

Factual bugs. Dealt with by correcting the student. Teaching facts is not the goal of Socratic tutoring; interrelationships of facts are more important.

Outside-domain bugs. Misconceptions about causal structure, which the tutor chooses not to explain in detail. For example, the "correct" relationship between the temperature of air and its moisture-holding capacity is often stated by the tutor as a fact, without further explanation.

Overgeneralization. When a student makes a general rule from an insufficient set of factors (e.g., any place with mountains has heavy rainfall), the tutor will find counterexamples to probe for more factors.

Overdifferentiation. When a student counts factors as necessary when they are not, the tutor will generate counterexamples to show that they are not.

Reasoning bugs. Tutors will attempt to teach students skills such as forming and testing hypotheses and collecting enough information before drawing a conclusion.

If a student displays more than one bug, human tutors will employ a set of heuristics to decide which one to correct first:

1. Correct errors before omissions.

2. Correct causally prior factors before later ones.

3. Make short corrections before longer ones.

4. Correct low-level bugs (in the causal network) before correcting higher level ones.
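The four ordering heuristics can be sketched as a sort key in Python. Treating them as a strict lexicographic priority in the order listed is an assumption (the text does not say how conflicts among them are resolved), and the `Bug` fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Bug:
    name: str
    is_error: bool          # True for an error, False for an omission
    causal_position: int    # smaller = causally earlier in the process
    correction_length: int  # rough length of the correction, e.g. in sentences
    level: int              # smaller = lower level in the causal network

def correction_order(bugs):
    """Order bugs by the four heuristics, applied in the order listed."""
    return sorted(
        bugs,
        key=lambda b: (not b.is_error, b.causal_position, b.correction_length, b.level),
    )
```

For example, an error about evaporation would be corrected before an omission about evaporation, and both before an omission concerning the later cooling step.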

Functional relationships. The bugs just discussed are all domain independent; that is, they would occur in tutorial dialogues about other complex processes besides rainfall. But some bugs are the results of specific misconceptions about the functional interrelationships of the concepts of the specific domain. For example, one common misconception about rainfall is that "cooling causes air to rise" (Stevens, Collins, & Goldin, 1978). This is not a simple factual misconception, nor is it domain independent. It is best characterized as an error in the student's functional model of rainfall.

In fact, the script representation used in the WHY system for capturing the temporal and causal relations of land, air, and water masses in rainfall proved inadequate to get at all of the types of student misconceptions. Recent work has investigated a more flexible representation of functional relationships, which allows the description of the processes that collectively determine rainfall from multiple viewpoints, e.g., the temporal-causal-subprocess view captured in the scripts, and a functional viewpoint which emphasizes the roles that different objects play in the various processes (Stevens, Collins, & Goldin, 1978). Misconceptions about rainfall are represented as errors in the student's model of functional relationships. A functional relationship has four components: (a) a set of actors, each with a role in the process; (b) a set of factors that affect the process; the factors are all attributes of the actors (e.g., water is an actor in the Evaporation relationship and its temperature is a factor); (c) the result of the process, which is always a change in an attribute of one of the actors; and (d) the relationship that holds between the actors and the result, or how an attribute gets changed. These functional relationships may be the result of models from other domains that are applied metaphorically to the domain under discussion (Stevens & Collins, 1978).
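The four components of a functional relationship map naturally onto a record type. In the Python sketch below, only "water is an actor" and "its temperature is a factor" come from the text; the roles, the second actor, the result attribute, and the relationship wording are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class FunctionalRelationship:
    name: str
    actors: dict        # actor -> role in the process
    factors: list       # (actor, attribute) pairs that affect the process
    result: tuple       # (actor, attribute) that the process changes
    relationship: str   # how the factors change the result attribute

    def is_well_formed(self):
        """Factors and the result must all be attributes of declared actors."""
        factor_actors_ok = all(actor in self.actors for actor, _ in self.factors)
        return factor_actors_ok and self.result[0] in self.actors

# Illustrative instance for evaporation.
evaporation = FunctionalRelationship(
    name="Evaporation",
    actors={"water": "moisture source", "air": "moisture destination"},
    factors=[("water", "temperature")],
    result=("air", "humidity"),
    relationship="the warmer the water, the more the humidity of the air increases",
)
```

Under this representation a student misconception would appear as a relationship whose factors, result, or relationship differ from the tutor's version.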

Summary

The WHY system started as an extension of SCHOLAR by the implementation of rules that characterize Socratic tutoring heuristics. Subsequently, an effort was made to describe the global strategies used by human tutors to guide the dialogue. Since these were directed towards dispelling students' misconceptions, five classes of misconceptions were established, as well as means for correcting them. Many misconceptions are not domain independent, and the key to more versatile tutoring lies in continuing research on knowledge representation.

References

The most recent reference on the research reported here is Stevens, Collins, & Goldin (1978). The tutorial rules are discussed fully in an excellent article by Collins (1976). The later work on the goal structure of a tutor is reported in Stevens & Collins (1977). Finally, recent work on conceptual models and multiple viewpoints of complex systems is discussed in Stevens & Collins (1978).


C3. SOPHIE

SOPHIE (a SOPHisticated Instructional Environment) is an ICAI system developed by John Seely Brown, Richard Burton, and their colleagues at Bolt, Beranek and Newman, Inc., to explore the objective of a wider range of student initiatives during the tutorial interaction (Brown, Burton, & Bell, 1975). The SOPHIE system provides the student with a learning environment in which he learns problem-solving skills by trying out his ideas, rather than by instruction. The system has a model of the problem-solving knowledge in its domain as well as numerous heuristic strategies for answering the student's questions, criticizing his hypotheses, and suggesting alternative theories for his current hypotheses. SOPHIE enables the student to have a one-to-one relationship with an "expert" who helps him create his own ideas, experiment with these ideas and, when necessary, debug them.

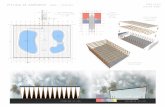

Figure 1 illustrates the component modules of the original SOPHIE-I system (Brown, Rubinstein, & Burton, 1976) and the additional capabilities added for the SOPHIE-II system, discussed later in this article.

Figure 1. SOPHIE-I and SOPHIE-II. (Panel titles: SOPHIE-I System; Augmentations for SOPHIE-II. Component labels: Natural Language, Hypothesis Evaluator, Semantic Network, Question Answerer, Team Game Monitor, SOPHIE Menu, Articulate Expert Debugger/Explainer.)


SOPHIE-I

SOPHIE teaches problem-solving skills in the context of a simulated electronics laboratory. The problem facing the student is to find the "faults" in a malfunctioning piece of equipment, whose characteristics have been given to him, by taking "measurements." The student obtains a sequence of measurements (such as voltages, currents, and resistances) to determine what is wrong with the equipment and asks questions about the measurements and about what fault hypotheses he should consider, given the information he has discovered so far. SOPHIE evaluates the hypotheses by considering all of the information that the student should have been able to derive from his current set of measurements. The system also judges the merits of a student's suggestion for a new measurement in light of the prior sequence of measurements. SOPHIE can decide if a measurement is valuable, depending on whether it eliminates or isolates a fault, and will inform the student when a proposed measurement provides no new information.

The following portion of a sample protocol illustrates some of the linguistic and inferential capabilities of the SOPHIE system (from Brown & Burton, 1975). SOPHIE randomly selects a fault (in the performance of a circuit component), inserts it into a model or simulation of the circuit, and tells the student how the controls are set. The student is given a schematic diagram of the circuit, and the dialogue refers to components in the schematic diagram (e.g., resistor 8 (R8), node connection 1 (N1), capacitor 5 (C5)). The student begins his troubleshooting by performing measurements in the faulted circuit.

Welcome to Sophie, a reactive learning environment. The piece of equipment you are studying is a regulated power supply. Would you like to start with a faulted instrument that needs fixing?

** YES

The instrument has a fault and its present control settings are:

CC    1.0   CURRENT CONTROL
CR    HIGH  CURRENT RANGE SWITCH
LOAD  20    LOAD RESISTANCE IN OHMS
VC    1.0   VOLTAGE CONTROL
VR    HIGH  VOLTAGE RANGE SWITCH

** WHAT IS THE OUTPUT?

The output voltage is 11.7 volts.

Notice that "output" was disambiguated as "output voltage."

** WHAT IS IT IN A WORKING INSTRUMENT?

In a working circuit the output voltage is 19.9 volts.

In case the student does not know what the required value of a measurement should have been, he can ask for any measurement in a properly functioning instrument. The student then requests an internal measurement.

** WHAT IS THE VOLTAGE BETWEEN R8 AND Q1?

The voltage at N1 is 47.6 volts.

** REPLACE Q6

The student believes that he has discovered the problem (in transistor Q6) and tries to fix it. Before a component is replaced, however, the system queries him as to how he thinks it is faulted.

I am going to ask you some questions about how Q6 is faulted. Are any junctions shorted?

** I DON'T KNOW

Are any terminals open?

** YES

Which terminal(s) are open?

** THE BASE

Q6 is not faulted in that manner. Q6 not replaced.

After learning that Q6 does not have an open base, the student asks if this was at least a reasonable hypothesis.

** IS IT POSSIBLE THAT Q6 HAS AN OPEN BASE?

Before the system's evaluation procedures can be applied to the student's idea or hypothesis, the hypothesis must be completely specified. To help the student out, the system suggests a plausible complete specification of it in an abbreviated form familiar to the student.

Is the complete fault mode EB/EC/BC = Open/Ok/Open what you intended?

** SURE

That fault is consistent with all of the measurements you have made.

The hypothesis is reasonable, but other measurements will indicate that another component is faulty.


Natural Language Processing—Semantic Grammar

In an instructional environment, it is important that the student be provided with a convenient way in which to communicate his ideas to the system. The student will become quickly frustrated if he has to try several ways of expressing an idea before the system can understand it. SOPHIE's natural language understander copes with various linguistic problems such as anaphoric references and context-dependent deletions and ellipsis, which occur frequently in natural dialogues.

SOPHIE's natural language capabilities are based on the concept of a semantic grammar in which the usual syntactic categories such as noun, verb, and adjective are replaced by semantically meaningful categories (Burton, 1976b; Burton & Brown, 1979b). These categories represent concepts known to the system, such as "measurements," "circuit elements," "transistors," and "hypotheses." For each concept there is a grammar rule that gives the alternate ways of expressing that concept in terms of its constituent concepts. Each rule is encoded as a LISP procedure that specifies the order of application of the various alternatives in each rule.

A grammar centered around semantic categories allows the parser to deal with a certain amount of "fuzziness" or uncertainty in its understanding of the words in a given statement; that is, if the parser is searching for a particular instantiation of a semantic category, and the current word in the sentence fails to satisfy this instantiation, it skips over that word and continues searching. Thus, if the student uses certain words or concepts that the system doesn't know, the parser can ignore these words and try to make sense of what remains. In order to limit the negative consequences that may result from a misunderstood question, SOPHIE responds to the student's question with a full sentence that tells him what question is being answered. (See Article Natural Language.F? about the semantic grammar used in the LIFER system.)
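The word-skipping behavior of a semantic grammar can be illustrated with a very small sketch. It is not SOPHIE's LISP grammar: the single rule, the two semantic categories, the part-name pattern, and all function names below are assumptions chosen for illustration. The parser looks for a quantity and then for one or two circuit parts, skipping every word that does not fit the category it is currently seeking, and then paraphrases its interpretation in a full sentence.

```python
import re

# Semantic categories (hypothetical, much smaller than SOPHIE's).
QUANTITIES = {"VOLTAGE", "CURRENT", "RESISTANCE"}
PART = re.compile(r"[RQCN]\d+")  # part names such as R8, Q1, C5, N1


def find_next(words, start, matches):
    """Return the index of the first word at or after `start` that satisfies
    `matches`, skipping every word that does not; None if no word does."""
    for i in range(start, len(words)):
        if matches(words[i]):
            return i
    return None


def parse_measurement(sentence):
    """Rule: <measurement> := <quantity> <part> [<part>], ignoring other words."""
    words = re.findall(r"[A-Z0-9]+", sentence.upper())
    q = find_next(words, 0, lambda w: w in QUANTITIES)
    if q is None:
        return None
    parts, pos = [], q + 1
    while len(parts) < 2:
        p = find_next(words, pos, lambda w: PART.fullmatch(w) is not None)
        if p is None:
            break
        parts.append(words[p])
        pos = p + 1
    if not parts:
        return None
    return {"quantity": words[q], "parts": parts}


def paraphrase(parse):
    """Echo the interpretation as a full sentence so a misreading is visible."""
    where = " and ".join(parse["parts"])
    preposition = "between" if len(parse["parts"]) == 2 else "at"
    return f"You asked for the {parse['quantity'].lower()} {preposition} {where}."
```

For instance, `parse_measurement("Um, gimme the current thru R8 please")` ignores the unknown words and still recovers the quantity and the part; the paraphrase gives the student a chance to notice if the wrong question is being answered.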

Inferencing Strategies

In order to interact with the student, SOPHIE performs several different logical and tutorial tasks. First, there is the task of answering hypothetical questions. For example, the student might ask, "If the base-emitter junction of the voltage limiting transistor opens, then what happens to the output voltage?"

A second task SOPHIE must perform is that of hypothesis evaluation, where the student asks, "Given the measurements I have made so far, could the base of transistor Q3 be open?" The problem here is not to determine if the assertion "the base of Q3 is open" is true, but whether this assertion is logically consistent with the data that have already been collected by the student. If it is not consistent, the program explains why it is not. When it is consistent, SOPHIE identifies which information supports the assertion and which information is independent of it.

A third task that SOPHIE must perform is hypothesis generation. In its simplest form this involves constructing all possible hypotheses that are consistent with the known information. This procedure enables SOPHIE to answer questions like, "What could be wrong with the circuit (given the measurements that I have taken)?" The task is solved using the generate-and-test paradigm with the hypothesis evaluation task described above performing the "test" function.


Finally, SOPHIE can determine whether a given measurement is redundant, that is, if the results of the measurement could have been predicted from a complete theory of the circuit, given the previous measurements.

SOPHIE accomplishes all of these reasoning tasks using an inference mechanism that relies principally on a general-purpose simulator of the circuit under discussion. For example, to answer a question about a changed voltage resulting from a hypothetical modification to a circuit, SOPHIE first interprets the question with its parser and then, using this interpretation, simulates the desired modification. The result is a Voltage Table that represents the voltages at each terminal in the modified circuit. The original question is then answered in terms of these voltages.
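The simulate-then-answer pattern can be sketched on a toy circuit. Everything concrete here is an assumption made for illustration: the circuit is a three-resistor series chain (source, R1, node N1, R2, node N2, R3, ground) rather than SOPHIE's regulated power supply, faults are modelled crudely as extreme resistances, and the function names are hypothetical.

```python
SOURCE_VOLTS = 30.0
NOMINAL_OHMS = {"R1": 100.0, "R2": 100.0, "R3": 100.0}
# Fault modes modelled as extreme resistances (an assumption of this sketch).
FAULT_OHMS = {"open": 1e9, "shorted": 1e-3}


def simulate(ohms):
    """Return a voltage table for source -> R1 -> N1 -> R2 -> N2 -> R3 -> ground."""
    current = SOURCE_VOLTS / sum(ohms.values())
    v_n1 = SOURCE_VOLTS - current * ohms["R1"]
    v_n2 = v_n1 - current * ohms["R2"]
    return {"N1": round(v_n1, 2), "N2": round(v_n2, 2)}


def what_if(component, mode):
    """Simulate the circuit with one hypothetical modification applied."""
    modified = dict(NOMINAL_OHMS)
    modified[component] = FAULT_OHMS[mode]
    return simulate(modified)


def answer_what_if(component, mode, node):
    """Answer the original question in terms of the two voltage tables."""
    before = simulate(NOMINAL_OHMS)[node]
    after = what_if(component, mode)[node]
    return (f"If {component} is {mode}, the voltage at {node} "
            f"changes from {before} to {after} volts.")
```

Calling `answer_what_if("R2", "shorted", "N2")` builds the modified voltage table and reads the answer out of it, which mirrors the division of labor between the simulator and the question answerer described above.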

The tasks of hypothesis evaluation and hypothesis generation are handled in a similar manner using the simulator. When evaluating hypotheses, SOPHIE attempts to determine the logical consistency of a given hypothesis. To accomplish this task, a simulation of the hypothesis is performed on the circuit model and measurements are taken of the result. If the values of any of these measurements are not equivalent to the measurements taken by the student, then a counterexample has been established and it is used to critique the student's hypothesis.
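Hypothesis evaluation by simulation can be sketched as follows. The toy circuit (a series chain source, R1, node N1, R2, node N2, R3, ground), the fault model, the tolerance, and the function names are all assumptions of this sketch and are not taken from SOPHIE itself. The hypothesized fault is inserted into the model, the student's measurements are repeated on the simulated circuit, and any disagreement is returned as a counterexample.

```python
SOURCE_VOLTS = 30.0
NOMINAL_OHMS = {"R1": 100.0, "R2": 100.0, "R3": 100.0}
FAULT_OHMS = {"open": 1e9, "shorted": 1e-3}  # crude stand-ins for fault modes


def simulate_fault(component, mode):
    """Voltage table for source -> R1 -> N1 -> R2 -> N2 -> R3 -> ground
    with one hypothesized fault inserted."""
    ohms = dict(NOMINAL_OHMS)
    ohms[component] = FAULT_OHMS[mode]
    current = SOURCE_VOLTS / sum(ohms.values())
    v_n1 = SOURCE_VOLTS - current * ohms["R1"]
    v_n2 = v_n1 - current * ohms["R2"]
    return {"N1": v_n1, "N2": v_n2}


def evaluate_hypothesis(hypothesis, student_measurements, tolerance=0.1):
    """Return (consistent, counterexamples) for a (component, mode) hypothesis.

    A counterexample is a measurement the student has already taken whose
    value differs from what the hypothesized fault would produce."""
    predicted = simulate_fault(*hypothesis)
    counterexamples = {
        node: {"measured": measured, "predicted": round(predicted[node], 1)}
        for node, measured in student_measurements.items()
        if abs(predicted[node] - measured) > tolerance
    }
    return not counterexamples, counterexamples
```

With the single measurement `{"N2": 15.0}`, the hypothesis `("R2", "shorted")` is judged consistent, while `("R3", "open")` is rejected with the N2 reading as the counterexample. Note that consistency is not truth: `("R1", "shorted")` is also consistent with that one measurement.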

When generating hypotheses, SOPHIE attempts to determine the set of possible faults or hypotheses that are consistent with the observed behavior of the faulted instrument. This task is performed by a set of specialist procedures that propose a possible set of hypotheses to explain a measurement and then simulate them to make sure that they explain the output voltage and all of the measurements that the student has taken. Hypothesis generation can be used to suggest possible paths to explore when the student has run out of ideas for what could be wrong with the circuit or when he wishes to understand the full implications of his last measurement. It is also used by SOPHIE to determine when a measurement is redundant.
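Generate-and-test hypothesis generation, and its use for detecting redundant measurements, can be sketched on the same kind of toy circuit. The assumptions are substantial: the circuit is a three-resistor series chain, the "generate" step simply enumerates every single-component fault instead of using specialist procedures as SOPHIE does, and a measurement is treated as redundant when every surviving hypothesis predicts the same value for it. All names are hypothetical.

```python
SOURCE_VOLTS = 30.0
NOMINAL_OHMS = {"R1": 100.0, "R2": 100.0, "R3": 100.0}
FAULT_OHMS = {"open": 1e9, "shorted": 1e-3}  # crude stand-ins for fault modes


def simulate_fault(component, mode):
    """Voltage table for source -> R1 -> N1 -> R2 -> N2 -> R3 -> ground
    with one fault inserted."""
    ohms = dict(NOMINAL_OHMS)
    ohms[component] = FAULT_OHMS[mode]
    current = SOURCE_VOLTS / sum(ohms.values())
    v_n1 = SOURCE_VOLTS - current * ohms["R1"]
    v_n2 = v_n1 - current * ohms["R2"]
    return {"N1": v_n1, "N2": v_n2}


def is_consistent(hypothesis, measurements, tolerance=0.1):
    """Test step: does the simulated fault reproduce every measurement?"""
    predicted = simulate_fault(*hypothesis)
    return all(abs(predicted[node] - value) <= tolerance
               for node, value in measurements.items())


def generate_hypotheses(measurements):
    """Generate every single fault, then keep those that pass the test."""
    candidates = [(c, m) for c in NOMINAL_OHMS for m in FAULT_OHMS]
    return [h for h in candidates if is_consistent(h, measurements)]


def is_redundant(node, measurements, tolerance=0.1):
    """A new measurement at `node` is redundant if all surviving hypotheses
    agree on its value, so taking it could not eliminate any of them."""
    survivors = generate_hypotheses(measurements)
    if not survivors:
        return False
    values = [simulate_fault(*h)[node] for h in survivors]
    return max(values) - min(values) <= tolerance
```

After the single reading `{"N2": 15.0}`, two hypotheses survive (R1 shorted and R2 shorted), and a measurement at N1 is worth taking because the two predict different values there. After `{"N2": 30.0}`, only R3 open survives, so measuring N1 would add nothing.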

SOPHIE-II: The Augmented SOPHIE Lab

Extensions to SOPHIE include (a) a troubleshooting game involving two teams of students and (b) the development of an articulate expert debugger/explainer. The reactive learning environment has also been augmented by the development of lesson material, used to prepare the student for the laboratory interaction (Brown, Rubinstein, & Burton, 1976). The articulate expert not only locates a fault in a given instrument but can articulate exactly the deductions that led to its discovery, as well as the more global strategies that guide the troubleshooting scenario.

Experience with SOPHIE indicates that its major weakness is an inability to follow up on student errors. Since SOPHIE is to be reactive to the student, it will not take the initiative to explore a student's understanding or suggest approaches that he does not consider. However, the competitive environment of the troubleshooting game, in which partners share a problem and work it out together, was found to be an effective means of exercising the student's knowledge of the operation of the instrument. An experiment involving a minicourse, with exposure to the frame-based texts and the original SOPHIE Lab, indicated that long-term use of the system is more effective than a single, concentrated exposure to the material (Brown, Rubinstein, & Burton, 1976).


Summary