
Evaluation of 2012 PSU Stream 1.5 Candidate

21 May 2012

TCMT Stream 1.5 Analysis Team: Louisa Nance, Mrinal Biswas, Barbara Brown, Tressa Fowler, Paul Kucera, Kathryn Newman, and Christopher Williams

Data Manager: Kathryn Newman

Inventory

The Pennsylvania State University (PSU) team delivered 72 retrospective PSU ARW (APSU) forecasts for 18 storms in the Atlantic Basin for the 2008-2011 hurricane seasons. Generating the interpolated, or early, model versions requires both the CARQ record and the storm information from the NHC Best Track for each case. This requirement was not satisfied for 5 cases, so early model versions are available for only 67 of the delivered cases. In addition, for 2 cases the storm was not classified as tropical or subtropical at the initial time of the early model version. Because the NHC verification package requires a tropical or subtropical classification at the initial time for a case to be verified, the total sample used in this analysis consisted of 65 cases. The evaluation of APSU focused on three primary analyses: (1) a direct comparison between APSU and each of last year’s top-flight models, (2) an assessment of how APSU performed relative to last year’s top-flight models as a group, and (3) an evaluation of APSU’s impact on operational consensus forecasts. Because every analysis is based on homogeneous samples, the number of cases may vary depending on the availability of the specific operational baseline.
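A homogeneous sample keeps only the cases for which every model in a given comparison has a verifiable forecast, which is why the case count changes from one baseline to the next. The following is a minimal sketch of that filtering step, assuming a hypothetical dictionary layout of errors keyed by model and case id; it is not the NHC verification package's implementation.

```python
from typing import Dict, List, Optional

# Hypothetical layout: errors[model][case_id] -> error at one lead time,
# or None when the model has no verifiable forecast for that case.
Errors = Dict[str, Dict[str, Optional[float]]]


def homogeneous_cases(errors: Errors, models: List[str]) -> List[str]:
    """Return the case ids for which every listed model has a verifiable error."""
    case_sets = [
        {case for case, err in errors[m].items() if err is not None}
        for m in models
    ]
    return sorted(set.intersection(*case_sets))


errors: Errors = {
    "APSI": {"c1": 30.0, "c2": 45.0, "c3": 50.0, "c4": None},
    "GHMI": {"c1": 40.0, "c2": None, "c3": 55.0, "c4": 60.0},
    "EMXI": {"c1": 35.0, "c2": 42.0, "c3": 48.0, "c4": 52.0},
}

# Each pairing is verified on its own homogeneous sample, so the counts differ.
print(homogeneous_cases(errors, ["APSI", "GHMI"]))  # ['c1', 'c3']
print(homogeneous_cases(errors, ["APSI", "EMXI"]))  # ['c1', 'c2', 'c3']
```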

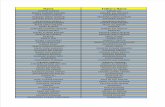

Table 1 describes the configurations associated with the APSU forecasts that are used in the evaluation, along with their corresponding ATCF IDs. Definitions of the operational baselines and their corresponding ATCF IDs can be found in the “2012 Stream 1.5 Methodology” write-up. Table 2 summarizes the baselines used to evaluate APSU. Note that only early versions of all model guidance were considered in this analysis. Track metrics were aggregated over ‘land and water’ cases, whereas intensity metrics were aggregated over both ‘land and water’ and ‘water only’ cases. Except where noted, results are for the aggregation of cases over both land and water.

Top-flight Models

Track

On average, the APSI track errors for the Atlantic Basin appear to be very similar to those of the global top-flight models at least through day 2, whereas APSI appears to have smaller track errors than GHMI (see Fig. 1). Note that confidence intervals are missing for the longer lead times because the effective sample sizes at these lead times are too small to meaningfully estimate variability and confidence. Pair-wise differences between the global top-flight models and APSI are not statistically significant (SS) at any lead time (Table 3). On the other hand, the comparison between APSI and GHMI produced SS differences favoring APSI at four lead times out to day 3. Percent improvements of APSI over GHMI at these lead times range from 17 to 23%. Along-track errors (not shown) indicate that the top-flight models and APSI all tend to have a slow bias for this sample out to day 4. Cross-track errors (not shown) indicate that APSI and the global top-flight models have no distinct bias out to day 3, whereas GHMI has a slight left bias.
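The pair-wise differences and percent improvements in Table 3 compare two models case by case on the same homogeneous sample. The sketch below shows one plausible way to compute them, assuming the mean difference is baseline error minus candidate error (positive favors the candidate) and that percent improvement is that difference divided by the baseline's mean error. The function name is hypothetical, and the exact definitions and significance test are those of the methodology write-up, which this sketch does not reproduce.

```python
from statistics import mean
from typing import List, Tuple


def pairwise_summary(baseline: List[float], candidate: List[float]) -> Tuple[float, float]:
    """Mean pair-wise difference (baseline minus candidate) and percent improvement.

    Both lists hold errors for the same homogeneous set of cases at one lead
    time; positive values favor the candidate (assumed convention).
    """
    if len(baseline) != len(candidate) or not baseline:
        raise ValueError("need a non-empty homogeneous sample")
    diffs = [b - c for b, c in zip(baseline, candidate)]
    mean_diff = mean(diffs)
    pct_improvement = 100.0 * mean_diff / mean(baseline)
    return mean_diff, pct_improvement


# Hypothetical track errors (nm) for four cases at one lead time.
ghmi = [40.0, 30.0, 25.0, 25.0]
apsi = [30.0, 24.0, 20.0, 18.0]
diff, pct = pairwise_summary(ghmi, apsi)
print(f"{diff:.1f} nm, {pct:.0f}%")  # 7.0 nm, 23%
```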

A comparison of APSI’s track performance with that of all three top-flight models (see Fig. 2) does not reveal any strong evidence that APSI improves upon or degrades the top-flight model guidance for track. Although APSI appears more likely to produce the lowest track errors (rank 1) between 60 and 108 h, the CIs for rank 1 include the 25% line for all but one lead time, so for the most part the percentage of cases with rank 1 cannot be deemed statistically distinct from 25%. The CIs for all other ranks also overlap the CIs for rank 1 at these lead times, which indicates that rank 1 cannot be deemed statistically distinct from the other ranks. The lack of SS results in the APSI ranking analysis may simply stem from the small sample size, especially at longer lead times.
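With four models, a candidate that is no better or worse than the others would hold each rank in about 25% of cases, which is why the 25% line is the reference in the ranking figures. The sketch below ranks the candidate per case using the tie rule stated in the Fig. 2 caption (the candidate receives the better ranking for all ties) and tallies the rank frequencies. Function names and data are hypothetical, and no confidence intervals are computed here.

```python
from collections import Counter
from typing import Dict, List


def candidate_rank(errors: Dict[str, float], candidate: str) -> int:
    """Rank of the candidate among all models for one case (1 = smallest error).

    Ties go in the candidate's favor: only models with strictly smaller
    errors push the candidate down the ranking.
    """
    cand_err = errors[candidate]
    return 1 + sum(1 for m, e in errors.items() if m != candidate and e < cand_err)


def rank_frequencies(cases: List[Dict[str, float]], candidate: str) -> Dict[int, float]:
    """Percentage of cases in which the candidate holds each rank."""
    n_models = len(cases[0])
    counts = Counter(candidate_rank(c, candidate) for c in cases)
    return {r: 100.0 * counts[r] / len(cases) for r in range(1, n_models + 1)}


# Hypothetical track errors (nm) for four cases at one lead time.
cases = [
    {"APSI": 20.0, "EMXI": 25.0, "GFSI": 30.0, "GHMI": 40.0},  # rank 1
    {"APSI": 35.0, "EMXI": 35.0, "GFSI": 20.0, "GHMI": 50.0},  # tie with EMXI -> rank 2
    {"APSI": 60.0, "EMXI": 30.0, "GFSI": 45.0, "GHMI": 50.0},  # rank 4
    {"APSI": 15.0, "EMXI": 18.0, "GFSI": 22.0, "GHMI": 16.0},  # rank 1
]
print(rank_frequencies(cases, "APSI"))  # {1: 50.0, 2: 25.0, 3: 0.0, 4: 25.0}
```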

Intensity

The APSI mean absolute intensity errors for the Atlantic Basin appear to be fairly similar to those of LGEM (i.e., the CIs overlap at all lead times for which CIs can be meaningfully estimated) and are smaller than those of DSHP and GHMI for at least a few lead times (see Fig. 3). The pair-wise differencing analysis produced SS differences favoring APSI over both GHMI and DSHP at four lead times out to 84 h (Table 4). All SS differences favoring APSI are of large practical significance (> 2 knots) and correspond to percent improvements between 19 and 39%. The comparison between the APSI and LGEM intensity errors reveals no SS differences. When the verification is limited to over-water cases, no SS differences are found for the comparisons with GHMI and DSHP; this change is mainly due to the large reduction in the effective sample sizes. The comparison with LGEM for over-water cases alone produced one SS difference, a degradation of large practical significance at 12 h. Mean intensity errors indicate that all four models (the three top-flight models and APSI) have SS over-prediction biases for at least some lead times, with the estimated GHMI biases appearing larger than those of the other three models (see Fig. 4).
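Figs. 3 and 4 show two different statistics: the mean absolute error measures the typical error size, while the mean error measures bias. A minimal sketch, assuming errors are defined as forecast minus observed (so a positive mean error means over-prediction); the function name and values are hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def intensity_error_stats(forecast_kt: List[float], observed_kt: List[float]) -> Tuple[float, float]:
    """Return (mean absolute error, mean error) in knots for paired forecasts.

    Error is forecast minus observed (assumed sign convention), so a positive
    mean error means over-prediction: forecasts too strong on average.
    """
    if len(forecast_kt) != len(observed_kt) or not forecast_kt:
        raise ValueError("need equal-length, non-empty samples")
    errors = [f - o for f, o in zip(forecast_kt, observed_kt)]
    mae = mean(abs(e) for e in errors)
    bias = mean(errors)
    return mae, bias


# Hypothetical maximum-wind forecasts and verifying observations (kt).
mae, bias = intensity_error_stats([70.0, 65.0, 90.0, 55.0], [60.0, 70.0, 80.0, 50.0])
print(f"MAE {mae:.1f} kt, mean error {bias:+.1f} kt")  # MAE 7.5 kt, mean error +5.0 kt
```

The single under-forecast partly cancels in the mean error but not in the mean absolute error, which is why the two statistics are examined separately.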

Examining APSI’s intensity performance relative to that of the three top-flight models (see Fig. 5) leads to no meaningful conclusions because the confidence intervals for the rankings overlap. This overlap is likely due to the very small sample sizes for this comparison: the method lacks the power to determine SS rank differences for small samples, because small changes in the distribution of the rankings, possibly due to random differences in round-off, lead to large differences in the percentage of cases at each rank. There is some indication that APSI has the smallest intensity errors (rank 1) from 36 to 96 h, but the CIs for rank 1 include the 25% line for all but three of these lead times. In addition, the CIs for ranks 2 and 3, and at some lead times rank 4, overlap those for rank 1. The position of rank 4 also appears to suggest that APSI is least likely to be worst after day 2, but once again the wide confidence bounds include the 25% line for all but one lead time and overlap the CI bounds for the other ranks, so these results are inconclusive owing to the lack of statistical significance.
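The point about power can be illustrated with a confidence interval for a rank frequency, which is a binomial proportion. The sketch below uses the Wilson score interval, a swapped-in method chosen only for illustration; the CIs in Figs. 2 and 5 may be computed differently. The same 40% rank-1 frequency is compared for two hypothetical sample sizes.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need 0 <= successes <= n and n > 0")
    p = successes / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


# Hypothetical: the candidate ranks first in 40% of cases, for two sample sizes.
for successes, n in [(8, 20), (80, 200)]:
    lo, hi = wilson_interval(successes, n)
    print(f"n={n}: [{lo:.2f}, {hi:.2f}], includes 25%: {lo <= 0.25 <= hi}")
# n=20: [0.22, 0.61], includes 25%: True
# n=200: [0.33, 0.47], includes 25%: False
```

With 20 cases the interval still contains the 25% reference line, whereas ten times as many cases would separate the same frequency from 25%.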

Conventional Model Consensus

Track

The mean track errors for the consensus with APSI (CAPS) appear to be on average very similar to those of the variable operational track consensus (TVCA), with a suggestion of slightly smaller CAPS errors beyond two days (see Fig. 6). The pair-wise differencing analysis produces five SS differences between days 1 and 3.5 (Table 5), all of which correspond to improvements in consensus track guidance of small practical significance. Nonetheless, these SS differences correspond to percent improvements between 5 and 7%. The mean along- and cross-track errors for CAPS and TVCA are very similar: both consensus forecasts are too slow at least through day 3 and to the left of the observed location through day 2 (not shown).
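Along- and cross-track errors split the forecast position error into a component parallel to the observed storm motion (too fast or too slow) and a component perpendicular to it (left or right). The sketch below assumes a local flat-earth approximation, takes the motion direction from the previous to the current observed position, and uses positive values for "ahead" and "to the right"; these conventions and all names are assumptions, not necessarily those of the verification package.

```python
import math
from typing import Tuple

LatLon = Tuple[float, float]  # (latitude, longitude) in degrees, longitude east-positive
NM_PER_DEG_LAT = 60.0  # one degree of latitude is about 60 nautical miles


def to_local_nm(point: LatLon, origin: LatLon) -> Tuple[float, float]:
    """East/north offsets (nm) of point from origin, using a flat-earth approximation."""
    dx = (point[1] - origin[1]) * NM_PER_DEG_LAT * math.cos(math.radians(origin[0]))
    dy = (point[0] - origin[0]) * NM_PER_DEG_LAT
    return dx, dy


def along_cross_errors(fcst: LatLon, obs: LatLon, obs_prev: LatLon) -> Tuple[float, float]:
    """Split the forecast track error into along- and cross-track parts (nm).

    Storm motion is taken from obs_prev to obs. Along-track error > 0 means the
    forecast is ahead of the storm (too fast); cross-track error > 0 means it is
    to the right of the motion. These sign conventions are assumptions.
    """
    px, py = to_local_nm(obs_prev, obs)
    mx, my = -px, -py  # motion vector from obs_prev to obs
    speed = math.hypot(mx, my)
    if speed == 0:
        raise ValueError("motion direction undefined for a stationary storm")
    ux, uy = mx / speed, my / speed  # unit vector along the motion
    ex, ey = to_local_nm(fcst, obs)  # forecast-minus-observed displacement
    along = ex * ux + ey * uy
    cross = ex * uy - ey * ux  # projection onto the right-hand normal (uy, -ux)
    return along, cross


# Hypothetical case: storm moving due north; forecast lies behind and to the east.
along, cross = along_cross_errors(fcst=(20.5, -59.5), obs=(21.0, -60.0), obs_prev=(20.0, -60.0))
print(f"along {along:.1f} nm, cross {cross:.1f} nm")  # along -30.0 nm, cross 28.0 nm
```

Under these conventions, a slow bias shows up as a negative mean along-track error and a left bias as a negative mean cross-track error.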

Intensity

The mean absolute intensity errors for CAPS (see Fig. 7) appear to be slightly smaller than those of the fixed operational intensity consensus (ICON). The pair-wise difference analysis produces SS differences at four lead times between 36 and 84 h (Table 5). These SS differences exceed 1 knot at three lead times and correspond to percent improvements ranging from 7 to 11%. Limiting the sample to forecasts for which the storm is over water reduces the SS differences favoring CAPS to one lead time; however, the small effective sample sizes make any confidence assessment impossible beyond day 2. Mean intensity errors for CAPS and ICON both show a positive bias of similar magnitude (not shown).
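Forecasts for the same storm at successive initial times tend to have correlated errors, so the number of independent cases is smaller than the raw case count. One common adjustment, assumed here and not necessarily the one described in the methodology write-up, treats the series as first-order autoregressive and uses n_eff = n (1 - r1) / (1 + r1), where r1 is the lag-1 autocorrelation. The function names and data are hypothetical.

```python
from statistics import mean
from typing import List


def lag1_autocorrelation(x: List[float]) -> float:
    """Sample lag-1 autocorrelation of a series ordered in time."""
    m = mean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    if den == 0:
        raise ValueError("series has zero variance")
    return num / den


def effective_sample_size(x: List[float]) -> float:
    """AR(1)-style effective sample size for the mean: n * (1 - r1) / (1 + r1)."""
    r1 = max(lag1_autocorrelation(x), 0.0)  # never inflate n for negative r1
    return len(x) * (1.0 - r1) / (1.0 + r1)


# Hypothetical error differences (kt) for one storm at successive 12-h initial times.
diffs = [2.0, 3.0, 4.0, 5.0, 5.0, 4.0, 3.0, 2.0]
print(f"n = {len(diffs)}, n_eff = {effective_sample_size(diffs):.1f}")  # n = 8, n_eff = 2.8
```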

Overall Evaluation

The comparison between APSI and the individual top-flight models indicates that APSI does not provide any SS improvement over the top-flight global track guidance, but does improve upon the operational GFDL track guidance (GHMI). The SS improvements over the operational GFDL track guidance easily meet the 4% criterion for selection as a Stream 1.5 model. On the other hand, the rank analysis did not provide any conclusive results, which is likely due to the very small sample sizes. A comparison with the individual top-flight models for intensity indicates that APSI improves upon the intensity guidance provided by two top-flight models, GHMI and DSHP, whereas the comparison with LGEM yields a single SS degradation, and only when the sample is limited to cases over water. All SS differences favoring APSI correspond to percent improvements greater than 3%. While the ranking assessment suggests that APSI tends to produce the smallest intensity errors at a number of lead times, these results could not be deemed SS. Once again, the lack of SS is likely due in large part to the small sample sizes. Hence, the results of the individual model comparisons are somewhat favorable for selecting APSI as a 2012 Stream 1.5 model for explicit track and intensity guidance in the Atlantic Basin (i.e., APSI improves upon guidance from one top-flight model for track and two top-flight models for intensity), but these results should be interpreted with caution because of the rather small sample sizes.
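The selection check can be made concrete with the GHMI track row of Table 3. The meaning of the third-row values is defined in the methodology write-up; treating a value of at least 0.95 as SS is an assumption made here because it reproduces the four SS lead times (out to day 3) reported for the APSI versus GHMI track comparison. The 4% threshold is the criterion quoted in the text.

```python
# Forecast hours and the GHMI track row of Table 3: percent improvement of APSI
# over GHMI and the third-row value ("-" entries are stored as None).
hours = [0, 12, 24, 36, 48, 60, 72, 84, 96, 108, 120]
pct = [0, 23, 17, 20, 14, 21, 22, 14, 31, 32, 39]
third_row = [None, 0.998, 0.961, 0.984, 0.866, 0.935, 0.979, 0.802, 0.904, None, None]

SS_LEVEL = 0.95   # assumed SS threshold for the third-row values
CRITERION = 4.0   # percent-improvement criterion quoted in the text

qualifying = [
    h for h, p, s in zip(hours, pct, third_row)
    if s is not None and s >= SS_LEVEL and p >= CRITERION
]
print(qualifying)  # [12, 24, 36, 72]
```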

Adding APSI to the variable consensus for track guidance provided SS improvements over the operational guidance, with small reductions in track errors (< 10 nm) corresponding to percent improvements of at least 5% at five lead times. Adding APSI to the fixed operational intensity consensus produced SS improvements at four lead times, with reductions in intensity errors between 0.9 and 1.4 knots. These SS differences correspond to percent improvements between 7 and 11%. Limiting the sample to cases over water reduced the number of SS improvements, which can be attributed to the reduction in effective sample sizes with this aggregation. Hence, the consensus comparisons appear favorable for including APSI in the 2012 Stream 1.5 consensus guidance for both track and intensity in the Atlantic Basin, but once again these results should be interpreted with caution because of the small sample sizes.


Table 1: Descriptions of the various PSU-related configurations used in this evaluation and their assigned ATCF IDs.

| ID | Description of configuration |
|---|---|
| APSU | Late model version (non-interpolated) |
| APSI | Early model version (interpolated, adjustment window 18 to 30 h) |
| CAPS | Track: average of EMXI/GFSI/EGRI/GHMI/HWFI/GFNI/APSI (Atlantic track); variable, at least three members must be present and APSI must be available. Intensity: average of DSHP/LGEM/GHMI/HWFI/APSI; fixed, all members must be present. |
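The two membership rules for CAPS in Table 1 differ: the track consensus is variable, while the intensity consensus is fixed. The sketch below encodes those rules with plain equal-weight averaging of member positions and intensities; the function names are hypothetical, and it ignores any operational details (such as handling of the dateline in longitude averaging) not stated in the table.

```python
from statistics import mean
from typing import Dict, Optional, Tuple

TRACK_MEMBERS = ["EMXI", "GFSI", "EGRI", "GHMI", "HWFI", "GFNI", "APSI"]
INTENSITY_MEMBERS = ["DSHP", "LGEM", "GHMI", "HWFI", "APSI"]


def caps_track(positions: Dict[str, Tuple[float, float]]) -> Optional[Tuple[float, float]]:
    """Variable track consensus: mean (lat, lon) of the available members.

    Returns None unless at least three members are present and APSI is one of
    them. Simple longitude averaging is adequate away from the dateline.
    """
    avail = [positions[m] for m in TRACK_MEMBERS if m in positions]
    if len(avail) < 3 or "APSI" not in positions:
        return None
    return mean(p[0] for p in avail), mean(p[1] for p in avail)


def caps_intensity(intensities: Dict[str, float]) -> Optional[float]:
    """Fixed intensity consensus: mean of all members, or None if any is missing."""
    if any(m not in intensities for m in INTENSITY_MEMBERS):
        return None
    return mean(intensities[m] for m in INTENSITY_MEMBERS)


track = {"EMXI": (25.0, -70.0), "GHMI": (25.4, -70.6), "APSI": (25.2, -70.2)}
print(caps_track(track))
print(caps_intensity({"DSHP": 80.0, "LGEM": 75.0, "GHMI": 90.0, "HWFI": 85.0}))  # None
```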

Table 2: Summary of baselines used for evaluation of APSI for the specified metrics.

| ID | Track (land and water) | Intensity (land and water) | Intensity (water only) |
|---|---|---|---|
| EMXI | ● | | |
| GFSI | ● | | |
| GHMI | ● | ● | ● |
| LGEM | | ● | ● |
| DSHP | | ● | ● |
| ICON | | ● | ● |
| TVCA | ● | | |
| HOMG | ● | ● | ● |


Table 3: Inventory of statistically significant (SS) pair-wise differences for track stemming from the comparison of each individual top-flight model and the Stream 1.5 candidate. See 2012 Stream 1.5 methodology write-up for description of entries.

| Atlantic Basin / Forecast Hour | 0 | 12 | 24 | 36 | 48 | 60 | 72 | 84 | 96 | 108 | 120 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GFSI | 0.0 | -0.3 | 0.9 | -0.4 | 4.8 | 17.9 | 38.8 | 18.2 | 49.7 | 24.8 | 47.9 |
| Track | 0% | -1% | 2% | -1% | 6% | 16% | 25% | 12% | 31% | 15% | 26% |
| Land/Water | - | 0.132 | 0.303 | 0.069 | 0.504 | 0.853 | 0.904 | 0.562 | - | - | - |
| EMXI | 0.0 | 0.3 | 1.0 | 1.6 | -2.8 | 1.6 | 7.4 | 11.7 | 27.7 | 19.9 | 52.3 |
| Track | 0% | 1% | 3% | 3% | -4% | 2% | 6% | 9% | 20% | 13% | 32% |
| Land/Water | - | 0.113 | 0.175 | 0.206 | 0.272 | 0.098 | 0.508 | 0.527 | - | - | - |
| GHMI | 0.0 | 6.8 | 7.4 | 12.2 | 11.3 | 25.6 | 33.4 | 22.1 | 49.9 | 64.5 | 90.0 |
| Track | 0% | 23% | 17% | 20% | 14% | 21% | 22% | 14% | 31% | 32% | 39% |
| Land/Water | - | 0.998 | 0.961 | 0.984 | 0.866 | 0.935 | 0.979 | 0.802 | 0.904 | - | - |


Table 4: Inventory of statistically significant (SS) pair-wise differences for intensity stemming from the comparison of each individual top-flight model and the Stream 1.5 candidate. See 2012 Stream 1.5 methodology write-up for description of entries.

| Atlantic Basin / Forecast Hour | 0 | 12 | 24 | 36 | 48 | 60 | 72 | 84 | 96 | 108 | 120 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GHMI | 0.0 | -0.9 | 2.8 | 6.0 | 6.2 | 4.2 | 5.5 | 5.3 | 8.0 | 4.5 | 2.8 |
| Intensity | 0% | -8% | 19% | 34% | 39% | 28% | 32% | 32% | 43% | 25% | 15% |
| Land/Water | - | 0.735 | 0.964 | 0.998 | 0.999 | 0.899 | 0.914 | 0.976 | - | - | - |
| GHMI | 0.0 | -0.6 | 1.5 | 4.8 | 2.8 | 2.8 | 2.1 | 0.2 | 4.1 | 6.6 | 7.3 |
| Intensity | 0% | -5% | 9% | 27% | 18% | 16% | 12% | 1% | 27% | 32% | 28% |
| Water Only | - | 0.448 | 0.645 | 0.934 | - | - | - | - | - | - | - |
| LGEM | 0.0 | -1.4 | -0.3 | 1.1 | 3.1 | 1.2 | 2.2 | 0.6 | 2.1 | -4.3 | -6.0 |
| Intensity | 0% | -13% | -2% | 9% | 24% | 10% | 16% | 5% | 16% | -45% | -62% |
| Land/Water | - | 0.833 | 0.158 | 0.456 | 0.908 | 0.542 | 0.466 | 0.112 | - | - | - |
| LGEM | 0.0 | -2.3 | -2.4 | -1.3 | -1.5 | -1.3 | -3.2 | -3.9 | 2.1 | -3.6 | -2.7 |
| Intensity | 0% | -24% | -20% | -11% | -14% | -11% | -29% | -33% | 16% | -35% | -17% |
| Water Only | - | 0.974 | 0.881 | 0.478 | 0.336 | - | - | - | - | - | - |
| DSHP | 0.0 | -0.9 | 0.4 | 3.0 | 5.3 | 3.3 | 4.6 | 5.4 | 7.4 | 2.2 | -1.5 |
| Intensity | 0% | -8% | 3% | 20% | 35% | 23% | 28% | 32% | 40% | 14% | -11% |
| Land/Water | - | 0.679 | 0.240 | 0.898 | 0.997 | 0.954 | 0.972 | 0.959 | - | - | - |
| DSHP | 0.0 | -1.8 | -0.9 | 2.2 | 3.9 | 2.9 | 2.4 | 4.9 | 12.0 | 4.6 | 2.7 |
| Intensity | 0% | -18% | -7% | 15% | 25% | 18% | 14% | 24% | 52% | 25% | 12% |
| Water Only | - | 0.948 | 0.475 | 0.632 | 0.750 | - | - | - | - | - | - |


Table 5: Inventory of statistically significant (SS) pair-wise differences for the comparison of the two consensus forecasts. See 2012 Stream 1.5 methodology write-up for description of entries.

| Atlantic Basin / Forecast Hour | 0 | 12 | 24 | 36 | 48 | 60 | 72 | 84 | 96 | 108 | 120 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TVCA | 0.0 | 0.5 | 1.6 | 2.4 | 3.1 | 8.2 | 7.7 | 7.7 | 15.8 | 19.4 | 32.3 |
| Track | 0% | 2% | 5% | 5% | 5% | 7% | 6% | 5% | 10% | 11% | 15% |
| Land/Water | - | 0.899 | 0.990 | 0.996 | 0.908 | 0.999 | 0.997 | 0.986 | - | - | - |
| ICON | 0.0 | -0.2 | 0.5 | 1.4 | 1.4 | 0.9 | 1.4 | 1.3 | 1.9 | 1.3 | 2.3 |
| Intensity | 0% | -2% | 4% | 10% | 11% | 7% | 9% | 8% | 10% | 8% | 14% |
| Land/Water | - | 0.679 | 0.899 | 0.999 | 0.999 | 0.970 | 0.946 | 0.984 | - | - | - |
| ICON | 0.0 | -0.2 | 0.3 | 1.1 | 1.0 | 0.5 | 0.6 | 0.3 | 1.6 | 0.2 | 0.0 |
| Intensity | 0% | -2% | 2% | 7% | 7% | 4% | 4% | 2% | 8% | 1% | 0% |
| Water Only | - | 0.677 | 0.676 | 0.964 | 0.941 | - | - | - | - | - | - |


Figure 1: Mean track errors and 95% confidence intervals with respect to lead time for EMXI and APSI (top left panel), GFSI and APSI (top right panel), and GHMI and APSI (bottom left panel) for the Atlantic Basin.


Figure 2: Rankings with 95% confidence intervals (dotted lines) for APSI compared to the three top-flight models for track guidance with respect to lead time. The grey horizontal solid line highlights the 25% frequency for reference. Black numbers indicate the frequencies of the first and fourth rankings, where the candidate model is assigned the better (lower) ranking for all ties.


Figure 3: Mean absolute intensity errors and 95% confidence intervals with respect to lead time for LGEM and APSI (top left panel), DSHP and APSI (top right panel), and GHMI and APSI (bottom left panel) for the Atlantic Basin.


Figure 4: Mean intensity errors and 95% confidence intervals with respect to lead time for LGEM and APSI (top left panel), DSHP and APSI (top right panel), and GHMI and APSI (bottom left panel) for the Atlantic Basin.


Figure 5: Rankings with 95% confidence intervals (dotted lines) for APSI compared to the three top-flight models for intensity guidance with respect to lead time. The grey horizontal solid line highlights the 25% frequency for reference. Black numbers indicate the frequencies of the first and fourth rankings, where the candidate model is assigned the better (lower) ranking for all ties.


Figure 6: Mean track errors and 95% confidence intervals with respect to lead time for the variable operational consensus for track (TVCA) and CAPS for the Atlantic Basin.

Figure 7: Mean absolute intensity errors and 95% confidence intervals with respect to lead time for the fixed operational consensus for intensity (ICON) and CAPS for the Atlantic Basin.
