Avoiding Manufacturing Application Downtime

Table of Contents

• The Downside of Lean
• Application Downtime, Your Productivity Killer
• How Virtualization Rocks Your World
• Resources
• About Stratus Technologies

The Downside of Lean

To be truly lean, manufacturers require IT strategies designed to reduce or eliminate waste-creating downtime of critical applications -- the real 'enemy of lean.'

By Frank Hill

This article is not about lean manufacturing.

It’s about the assumption plant operations make when they implement lean strategies to purge waste in pursuit of customer value -- the assumption that the manufacturing lines are actually running and products are actually being produced.

Downtime is the enemy of lean. Downtime is, in fact, waste.

Idled lines do not add value. Restarting production after unplanned downtime requires more effort, usually expended with less efficiency and worker productivity. This reintroduces waste, which creates added costs that customers do not pay for, yet must be absorbed into cost of goods sold.

With this at stake, implementing IT strategies that reduce or eliminate unplanned downtime of critical manufacturing applications should be integral to lean program strategies. This often requires a change in mindset.

Most IT and operations people prepare for rapid recovery from failure, assuming it is inevitable. Instead, proactively preventing downtime from happening in the first place yields better outcomes; it’s also more efficient in practice and cost-effective over the long term.

Further, most organizations -- manufacturing or otherwise -- do not know the real cost when downtime strikes critical applications. Without knowing the cost of downtime, your ability to choose the correct technology to protect the applications you need most, and to weigh the risk and benefit of each choice, is suspect.

A Greenfield Opportunity

Is unplanned downtime a way of life for manufacturers or a target-rich area for new lean initiatives?

Consider this: A survey of 500 IndustryWeek magazine subscribers (sponsored by Stratus Technologies) found that manufacturers had unplanned downtime an average of 3.6 times annually. Nearly one in three experienced an outage in the first three months of 2012.

The survey also found that two out of three manufacturers have no strategy or IT solutions for high availability in their plants. The downtime-recovery choice for 64% of respondents is passive back-up, the least effective method shy of doing nothing at all.

In that same survey, respondents estimated their downtime cost per incident. The composite average was approximately $66,000 annually. For manufacturers with revenues above $1 billion per year, the average cost was more than $146,000 spread across an average of 4.5 annual downtime incidents.

In our experience, these average cost estimates are low, which often happens when you directly ask the question without additional probing into factors behind the answer. Many professional research firms and industry consultants do conduct in-depth analysis of downtime costs. Cross-industry estimates range from $110,000 to $150,000 per hour and higher for the average company.

A 2010 study by Coleman Parkes Research Ltd, on behalf of Computer Associates, pegged the average cost for manufacturing companies specifically at $196,000 per hour. That same research found that the average North American organization suffers from 12 hours of downtime per year with an additional eight hours of recovery time; numbers are slightly higher for European firms. Two thousand organizations participated in the survey.

Calculating Downtime Cost

Determining the total of hard and soft downtime costs is not easy, which is why it’s often not done well if at all.

One would think that tallying direct wage cost absorbed during an outage would be a simple matter. But when is an outage over: when the problem is fixed, or when full production is resumed? Employees do not immediately return to full productivity during the recovery period, and may not for hours; the full value of their labor is not being realized.

Work in process may need to be discarded, as may work produced during the recovery period that doesn’t meet specifications. The line can lose sequence, generate a lot of scrap, or even damage tools because systems recover to an unknown state. You may incur repair costs and outside service expenses. Internal IT resources are diverted from doing something else to fix your problem.

Highly integrated systems can push the effects of downtime on the production line to administrative functions upstream and down, into the supplier pipeline, to customer order fulfillment and product delivery. Downtime can invoke financial penalties if contract conditions are breached, or if regulatory requirements are violated. Customer relationships get damaged and reputations tarnished.

All these, and many other variables particular to your own manufacturing processes and environment, are legitimate contributors to the cost of downtime and should be included.
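One rough way to organize such a tally is sketched below. It is illustrative only: the field names, the idea of modeling recovery as a fraction of normal productivity, and every figure in the example are assumptions made for the sketch, not numbers from the survey or from any particular plant.

```python
from dataclasses import dataclass


@dataclass
class DowntimeIncident:
    """Hypothetical inputs for one outage; all fields are placeholders."""
    outage_hours: float            # line fully stopped
    recovery_hours: float          # line running, but below full productivity
    workers: int                   # employees affected by the outage
    hourly_wage: float             # loaded wage per worker, per hour
    recovery_productivity: float   # fraction of normal output during recovery (0..1)
    scrap_cost: float = 0.0        # discarded work in process and off-spec product
    repair_and_service_cost: float = 0.0
    contract_penalties: float = 0.0


def labor_cost(incident: DowntimeIncident) -> float:
    """Wages paid for labor whose value was not realized."""
    hourly_payroll = incident.workers * incident.hourly_wage
    stopped = incident.outage_hours * hourly_payroll
    # During recovery only the unproductive share of wages counts as waste.
    recovering = (incident.recovery_hours * hourly_payroll
                  * (1.0 - incident.recovery_productivity))
    return stopped + recovering


def total_cost(incident: DowntimeIncident) -> float:
    """Sum of the hard costs modeled here; soft costs are not captured."""
    return (labor_cost(incident)
            + incident.scrap_cost
            + incident.repair_and_service_cost
            + incident.contract_penalties)


# Made-up example: a 2-hour outage followed by 4 hours at half productivity.
example = DowntimeIncident(
    outage_hours=2, recovery_hours=4, workers=20, hourly_wage=30.0,
    recovery_productivity=0.5, scrap_cost=5000.0,
    repair_and_service_cost=1500.0,
)
print(f"Labor: ${labor_cost(example):,.0f}  Total: ${total_cost(example):,.0f}")
```

Note that the recovery period contributes as much wasted labor in this example as the outage itself, which is why the question of when an outage is "over" matters. Soft costs such as damaged customer relationships do not fit a formula this simple and would need to be estimated separately.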

Availability Options

Some production-line applications are more critical than others. The first applications you want back online usually are the ones that need higher levels of uptime protection.

A full analysis of availability products, technologies and approaches is beyond the scope of this article. Suffice it to say, each has its place, its benefits and tradeoffs, and very real differences in the ability to protect against application downtime.

A common way to assess a solution’s efficacy is by looking at its “nines,” i.e. its promised level of uptime protection. The chart below shows the expected period of downtime for each level, and how that might translate into financial losses. Note that downtime per year could be a single instance, or spread across multiple incidents. Also note that higher uptime does not necessarily mean a solution is more costly; software licensing, configuration restrictions, required skills, ease of use and management are just a few of the many factors affecting total cost of ownership.
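The arithmetic behind the "nines" is simple enough to reproduce. The sketch below assumes a 24/7 operation and a 365-day year; the function names are invented for illustration, and the $110,000 hourly figure is just the low end of the cross-industry range quoted earlier, used as a placeholder for your own number.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes round-the-clock operation


def downtime_hours_per_year(availability_percent: float) -> float:
    """Expected annual downtime implied by an availability level."""
    return HOURS_PER_YEAR * (1.0 - availability_percent / 100.0)


def annual_downtime_cost(availability_percent: float,
                         cost_per_hour: float) -> float:
    """Expected annual loss at a given (assumed) hourly downtime cost."""
    return downtime_hours_per_year(availability_percent) * cost_per_hour


ASSUMED_COST_PER_HOUR = 110_000  # placeholder; substitute your own estimate

for level in (99.0, 99.9, 99.99, 99.999):
    hours = downtime_hours_per_year(level)
    cost = annual_downtime_cost(level, ASSUMED_COST_PER_HOUR)
    print(f"{level}% uptime: {hours * 60:,.1f} minutes/year, "
          f"about ${cost:,.0f} at the assumed hourly cost")
```

Each additional nine cuts expected downtime by a factor of ten: roughly 87.6 hours a year at 99 percent, under nine hours at 99.9 percent, and a little over five minutes at 99.999 percent.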

As we mentioned at the outset, most high-availability solutions focus on recovery after failure. Very few are engineered to prevent failure from occurring.

Virtualization

The benefits of virtualization are indisputable. More and more manufacturers are using virtualization software on the plant floor to consolidate servers and applications, and to reduce operating and maintenance costs. Some rely on its availability attributes to guard against downtime, as well.

By its nature, virtualization creates critical computing environments. Consolidating many applications onto fewer servers means that the impact of a server outage will affect many more workloads than the one server/one application model typically used without virtualization. The software itself does nothing to prevent server outages, and you can’t migrate applications off a dead server.

Restarting the applications on another server takes time, depending on the number and size of applications to be restarted. Data that was not written to disk will be lost. The root cause of the outage will remain a mystery. If data was corrupted, then corrupted data may be introduced to the failover server.

This may not pose a problem, depending on your tolerance for certain applications being offline for a few minutes to an hour, perhaps more. However, many companies are reluctant to virtualize truly critical applications for this reason. High-availability software and fault-tolerant servers are viable alternatives to standalone servers, hot backup or server clusters as a virtualization platform, and are worth evaluating. These technologies are sometimes overlooked because of a lack of familiarity, or because of outdated notions that they are complex or budget-busters.

Summary

Manufacturers are squeezing more efficiency, productivity and value out of existing resources by using lean manufacturing techniques. IT systems downtime derails these initiatives by reintroducing cost, production inefficiencies and lost customer value -- i.e., waste. Preventing downtime from occurring is a more efficient, effective strategy than recovering from failure after it has occurred. Determining the cost of downtime is a necessary step in evaluating which applications require the greatest protection against downtime. Only then can appropriate availability technologies and products be chosen based on need, available resources to manage them, and ROI.

Application Downtime, Your Productivity Killer

By John Blanchard and Greg Gorbach, ARC Advisory Group

Overview

Today, manufacturing enterprises are faced with intense competitive pressure, limited IT resources, and increasing IT costs. They increasingly recognize that modern automation technology and services, properly selected and deployed, can help them improve productivity, better ensure product quality, and improve margins. They are automating and integrating almost every aspect of manufacturing operations. This includes moving from dedicated to consolidated computer resources, which is critical to controlling IT hardware, software, staffing, and facilities costs and so reducing total cost of ownership (TCO). Consolidation also means that even a minor computer outage can have severe and immediate consequences. As a result, more and more manufacturers are deploying new uptime assurance solutions that combine hardware, software, and services designed to prevent computer outages.

Trends in Plant Application Adoption

In today’s increasingly competitive global manufacturing environment, manufacturing enterprises are deploying an increasing number of mission-critical applications in production operations to improve manufacturing efficiency and effectiveness. This is true across every industry and every geographic region of the world, but particularly so in both the fast- and slow-moving consumer goods manufacturing industries, as well as many consumer-facing industries. These applications include materials management, finite scheduling, quality and performance monitoring and reporting, plant historians, asset lifecycle management, facilities management, procurement, order management, other ERP functions, as well as computer aided manufacturing (CAM) and process planning.

Some industry trends also help explain why we expect requirements for uptime assurance to continue to increase into the foreseeable future. Many companies face a lack of available manpower with the proper skill sets. In fact, about 5 percent of skilled jobs in the US remain unfilled. Over 50 percent of manufacturers surveyed by ARC further indicated that they felt this shortage of skilled workers would continue to grow worse. Companies are also finding ways to “do more with less.” They are automating more and more traditionally semi-manual and manual operations.

Traditional manufacturing organizational structures, best practices, and automation architectures are being transformed to meet the needs of the new real-time, on-demand and highly competitive global manufacturing environment. The traditional “siloed” organizational structure is inefficient. The practice of having each department head determine best practices, standard operating procedures, and automation technology within his or her own department can no longer meet today’s business requirements. It's now necessary to consolidate these systems and applications. Even the practice of standardizing on hardware from a single vendor to reduce costs is being challenged, as companies look to reduce total cost of ownership (TCO) and use technology to gain performance improvements.

Why is Application Uptime Important?

As companies consolidate disparate departmentalized systems, they have less tolerance for downtime. In addition, manufacturers are using an increasing number of applications to help minimize production costs and product variability on a weekly and even daily basis. Many of these applications work with real-time data, rather than analyzing historic data offline. With fewer production facilities, many operations run around the clock. This has produced a proliferation of productivity-enhancing applications in manufacturing, which are often woven together into an integrated, mutually dependent “solution.” Production machinery such as CNC machines, robotic packaging machines, and other processes are also increasingly becoming mission critical - where traditional process controls are no longer sufficient to meet uptime needs. Even slight interruptions to any of these applications and equipment controls can have severe and immediate consequences. ARC research shows that direct downtime costs vary from several thousand to tens of thousands USD per hour, dependent upon the site, product, etc. This does not even take into account lost revenues from customers who decide to take their business elsewhere, never to return.

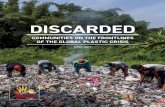

Loss of MES Applications Can Shut Down Operations at Kraft Paper Mill

KapStone Paper and Packaging provides a good example of the need for uptime assurance and the cost of unscheduled downtime caused by the lack of availability of its mission-critical production management software. The company produces unbleached kraft paper that goes into the packages, wraps, and surfaces that are a part of everyday life. Emerson Beach, KapStone’s Director of IT Operations, explained, “If the MES system stops, we can make reels of paper coming off the paper machines for a little while. But we can't cut the rolls to match our orders because the size of the pieces comes from the production management system. So we only have a little time before we would have to shut down the paper machines.” Since the company operates 24/7, that translates into about $33,000 per hour per machine.

Loss or Lack of Data Can Result in Unsalable Product and High Inventory Costs

Loss of data can also have considerable financial impact. Information about the components or ingredients that went into the manufacture of the product, as well as information about the actual manufacturing of the product, is often required to be able to sell the product. Loss of information on a single batch of product in the pharmaceutical industry can translate into millions of dollars in raw material costs and lost revenue. In this case uptime assurance requires not just a fault-tolerant server, but also fault-tolerant communications and a fault-tolerant data storage system.

Many companies also lack visibility to important information due to the many disparate legacy systems that are remnants of traditional organizational structures and practices. For example, one key to lowering the cost of goods sold is to lower inventory carrying costs. However, few companies have accurate information on raw materials, in-process, and finished goods inventories. Consolidation into mutually interdependent integrated applications brings greater data visibility and greater need for uptime assurance.

Evaluating Infrastructure Options Using TCO

As legacy mission critical hardware and software reach the end of their lifecycles, manufacturers must evaluate technology options to replace them. Most legacy production-level systems rely upon a single high-reliability server and backup data storage. IT departments commonly use cluster computing and virtual environments for their business systems. The financial services and banking industries typically use high-availability software and physically remote, fault-tolerant servers with dedicated high-speed Ethernet connections. This architecture ensures uninterrupted continuation of services in case of a disaster. Specially designed fault-tolerant computers and subsystems, with closely coupled fully redundant components sourced from a single supplier, provide the next highest availability option. The optimum uptime assurance solution is integrated hardware, software, and services designed to prevent failure, and provided by a single supplier.

Hot Backup or Clustering Solutions vs. Fault-Tolerant Servers

While traditional clustering or hot backup approaches to uptime provide enhanced availability, they also pose significant challenges. In contrast to true hardware fault tolerance, which prevents server failure in a manner that is both automatic and transparent to the user, hot backup and cluster solutions rely on complex "failure recovery" mechanisms that incur varying degrees of downtime. During these failover/recovery periods (which can range from a few seconds to minutes), a backup system automatically restarts the applications and logs on users. Performance and throughput may be compromised and all in-memory content is lost.

Built from combinations of conventional servers, software and enabling technologies, clusters require failover scripting and testing that must be repeated whenever changes are made to the environment. Clustering may also require licensing and installing multiple copies of software as well as software upgrades and application modifications. Hot backup solutions and clusters typically provide 99.9 percent availability or more. Actual availability levels depend on the environment and the sophistication and skill set of the team responsible for setup, administration, and management. In contrast, fault-tolerant servers inherently offer 99.999 percent and greater availability and are as easy to install, use, and maintain as a single conventional system.

Fault-Tolerant Virtualization

Many manufacturing applications are now certified for virtualization. Virtualization has become an important technology in efforts to reduce automation and plant IT costs. It increases server utilization, enables server consolidation, simplifies the migration of legacy applications to higher performance servers, and enables better disaster recovery. It provides an environment that is much more efficient for IT staff to manage. However, placing more applications on a single server increases the impact of a server failure. This creates even greater business risk if these are mission critical applications. With fault tolerant servers, the risk of virtualizing mission critical applications is eliminated.

Determining Total Cost of Ownership

Each manufacturing operation has its own unique requirements in terms of uptime assurance, acceptable degree of business risk, available IT skill sets, etc. Since this is an investment over the lifecycle of the technology, it should be evaluated based upon TCO and any “anticipated” changes in business requirements and risk, available IT skill sets, etc. TCO is the sum of the installed cost plus the net present value of the recurring costs and the cost of retirement. What is the full cost of downtime? Will there be sufficient IT skill sets in the coming years or will much of it be outsourced? What support services does the technology supplier provide? What is the cost/value of this service? Given today’s intensely competitive manufacturing environment, limited resources, and increasing business risks, many manufacturers are selecting single-supplier sourced, fault-tolerant computing platforms and services. As we move more and more toward supplier-agnostic automation and plant IT architectures, “smart” technology, and increased use of Internet services, companies should continuously reevaluate their IT best practices.
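The TCO definition above can be turned into a small calculation. The sketch below is one reading of that sentence, not ARC's own model: it assumes recurring costs fall at the end of each year, that retirement happens at the end of the final year and is discounted like any other future cost, and that a single discount rate applies throughout. All names and figures are hypothetical.

```python
from typing import Sequence


def present_value(amount: float, discount_rate: float, year: int) -> float:
    """Value today of an amount paid at the end of the given year."""
    return amount / (1.0 + discount_rate) ** year


def total_cost_of_ownership(installed_cost: float,
                            annual_recurring_costs: Sequence[float],
                            retirement_cost: float,
                            discount_rate: float) -> float:
    """Installed cost + present value of recurring costs + retirement cost.

    Assumption: retirement is discounted from the end of the last year.
    """
    recurring = sum(
        present_value(cost, discount_rate, year)
        for year, cost in enumerate(annual_recurring_costs, start=1)
    )
    final_year = len(annual_recurring_costs)
    retirement = present_value(retirement_cost, discount_rate, final_year)
    return installed_cost + recurring + retirement


# Made-up comparison of two options over a five-year lifecycle at 8 percent.
# Each year's figure would include support, staffing, and expected downtime cost.
option_a = total_cost_of_ownership(40_000, [30_000] * 5, 2_000, 0.08)
option_b = total_cost_of_ownership(90_000, [12_000] * 5, 2_000, 0.08)
print(f"Option A: ${option_a:,.0f}   Option B: ${option_b:,.0f}")
```

Folding the expected annual cost of downtime into the recurring figures is what lets an option with a higher purchase price come out ahead over the lifecycle, which is the comparison the questions above are meant to prompt.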

Conclusion

To improve efficiency and effectiveness in today’s intensely competitive global environment, manufacturing is becoming more and more automated. As a result, manufacturers have increasingly less tolerance for downtime or data loss. Uptime assurance has become critical to the success of business operations. The need for greater uptime assurance continues to grow and it will be a critical component to the future success of manufacturing enterprises.

This paper was written by ARC Advisory Group on behalf of Stratus Technologies. The opinions and observations stated are those of ARC Advisory Group. For further information or to provide feedback on this paper, please contact the authors at [email protected] or [email protected]. ARC Briefs are published and copyrighted by ARC Advisory Group. The information is proprietary to ARC and no part of it may be reproduced without prior permission from ARC Advisory Group.

How Virtualization Rocks Your World

Operating systems can't tell the difference between a virtual machine and a physical machine, but manufacturers are seeing -- and celebrating -- the notable difference virtualization is having on their bottom line.

By Dallas West and Anthony Baker

With aging plant infrastructures, tighter regulations, data security issues and numerous other challenges to address, process engineers want to get the most from their IT-based plant assets. To do this, many have included virtualization in their automation migration plans. Virtualization is rapidly transforming the IT landscape, and fundamentally changing the way you use hardware resources.

And the return on investment (ROI) is nearly immediate because virtualization helps you build an infrastructure that better leverages manufacturing resources and delivers high availability.

What is Virtualization?

Virtualization is a software technology that decouples the physical hardware of a computer from its operating system (OS) and software applications, creating a pure software instance of the former physical computer -- commonly referred to as a Virtual Machine (VM). A VM behaves exactly like a physical computer, contains its own "virtual" CPU, RAM, hard disk and network interface card, and runs as an isolated guest OS installation within your host OS. The terms "host" and "guest" are used to help distinguish the software that runs on the actual machine (host) from the software that runs on the virtual machine (guest).

Virtualization works by inserting a layer of software called a "hypervisor" directly on the computer hardware or on a host OS. A hypervisor allows multiple OSs, "guests," to run concurrently on a host computer (the actual machine on which the virtualization takes place). Conceptually, a hypervisor is one level higher than a supervisory program. It presents to the guest OS a virtual operating platform and manages the execution of the guest OSs.

Virtualization software allows virtual machines to access the physical hardware resources of the host computer. The ability to run multiple VMs on one physical computer allows for the optimization of server and workstation physical assets, as most server-based computers are significantly underutilized. Organizations typically run one application per server to avoid the risk of vulnerabilities in one application affecting the availability of another application on the same server. As a result, typical x86 server deployments achieve an average utilization of only 10-15 percent of total capacity. Virtualization allows applications to share computers, allowing manufacturers to re-adjust how many computers are needed.
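A back-of-the-envelope estimate shows what those utilization figures imply. The sketch below is deliberately crude: it assumes all servers are identical, treats CPU utilization as the only constraint, and ignores hypervisor overhead, memory, I/O and peak loads. The function name, the target utilization and the spare-host parameter are assumptions for illustration.

```python
import math


def hosts_needed(server_count: int,
                 avg_utilization_pct: float,
                 target_utilization_pct: float,
                 spare_hosts: int = 0) -> int:
    """Rough count of virtualization hosts for a set of one-app servers.

    Assumes identical hardware and that load adds up linearly.
    """
    total_load = server_count * avg_utilization_pct  # in "percent of one server"
    hosts = max(1, math.ceil(total_load / target_utilization_pct))
    # Optional spare capacity so VMs have somewhere to go if a host fails.
    return hosts + spare_hosts


# Made-up example: 20 servers averaging 12 percent, consolidated to hosts
# that are allowed to run at 60 percent.
print(hosts_needed(20, 12, 60))                 # hosts for the load alone
print(hosts_needed(20, 12, 60, spare_hosts=1))  # plus one standby host
```

The spare-host parameter is a reminder that consolidation concentrates risk: the fewer machines carry the load, the more each one matters.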

Virtualization in Action

But the benefits of virtualization go far beyond the consolidation of computers. Many manufacturers use virtualization to extend their software's longevity. Consider the case of Genentech, a biotech company based in South San Francisco, California. Genentech specializes in using human genetic information to develop and manufacture medicines to treat patients with serious or life-threatening medical conditions.

The company estimated that the costs to upgrade one of its Windows 95 PC-based HMIs to a Windows Server 2003-based system would be approximately $40,000. Final figures topped $100,000 because of costs associated with validating the system for use in a regulated industry. Assuming that OSs are updated about every five years, costs quickly become a limiting factor in keeping an installed base of manufacturing computers up-to-date.

Additional factors also contribute to the cost of upgrades. "Computer hardware changes even more frequently than operating systems," says Anthony Baker, system engineer at Rockwell Automation. "Each change incurs engineering expenses and possibly production downtime."

So instead of investing in the upgrades, Genentech implemented virtualization.

According to Dallas West, at Genentech, one of the most lasting effects of virtualization is that it allows legacy operating systems, such as Windows 95, Windows NT, etc., to be run successfully on computers manufactured today. This extends HMI product lifecycles from 5-7 years to 10-15 years and possibly longer.

"Having the ability to extend the useful life of a computer system allows a manufacturer to create a planned, predictable upgrade cycle commensurate with its business objectives," West says.

"No longer is a business forced to upgrade its systems because a software vendor has come out with a new version. Upgrading systems can once again be driven by adding top-line business value by choosing to upgrade when new features become available that will provide an acceptable return on investment," he explains.

Why Virtualization is a Big Deal

Virtualized assets also help increase productivity. By not having to maintain physical hardware, administrators are able to carry a heavier workload. A recent study by IT analyst firm IDC found that administrators manage an average of about 30 servers. After virtualization, they can manage 60-90 servers -- a significant increase in capacity. They're also able to spend more time architecting their infrastructure for higher levels of productivity.

"Gone are the days of 'server sprawl,' where a new server is needed for each new application or tool and each ends up running at only 8-10% utilization," Baker says.

Virtualization allows companies to create a scalable infrastructure, where new VMs can be added without the need to continuously buy new hardware and other physical devices. When manufacturers start to consolidate, they find they can buy and allocate the appropriate amount of resources for each VM, which reduces system maintenance and energy consumption costs.

Another feature of virtualization is that the system doesn't know it's "virtualized." This allows administrators to take hardware offline while the system is up and running.

"Since the VMs are not attached to a physical computer, the VMs can be migrated between servers while the system is still running," notes Baker.

During a planned outage, administrators can shift their workloads so the server can be taken down with no impact to the system. When the planned outage is complete, the server can be placed back into service.

Advantages of a Virtual Machine:

• Hardware independence
• Fault tolerance
• Application-load balancing
• Rapid disaster recovery
• Recovery to non-identical hardware
• Ability to pre-test OS patches or vendor updates
• Ability to roll back incompatible OS patches or vendor updates
• Reduced TCO resulting from consistent datacenter environment
• Reduced power usage

Applying Virtual Assets

Using specially designed host hardware available on the market today, a virtual server can be sized with extensive memory and CPU resources. This allows a large number of VMs to be consolidated onto a single machine without impacting performance.

You can create a data center by running a group of servers with hypervisors managed via a virtualization infrastructure management interface. The bare-metal environment frees up a large portion of resources, which can then be dedicated to running the VMs.

Tips for Getting Started

If you're thinking about virtualization for your manufacturing facility, consider the following advice:

1. Being able to run legacy software indefinitely doesn't mean you should. If you find a software bug in an old system, don't count on it being fixed. You'll also want to consider the security implications of running legacy software. Virtualization should be used as a tool to moderately extend the life cycle of a computer system to allow an upgrade to take place in a planned and predictable manner when a suitable business driver emerges.

2. Not all network protocols are easily virtualized. The use of standard Ethernet is most widely supported by the various virtualization vendors.

3. Non-x86-based systems (e.g., SPARC, DEC Alpha) cannot be virtualized to run on an x86-based machine, such as one built on Intel or Advanced Micro Devices (AMD) processors.

4. Third-party vendor support for virtualized systems has been limited. There is an added risk of running virtualized software in a production environment that has not been thoroughly tested and endorsed by a respective third-party vendor.

Resources

Ironclad Uptime Reliability for Real-Time Manufacturing Needs
This Analyst Connection from IDC discusses how top plant managers and IT managers employ high availability solutions to protect their MES and plant applications from downtime. This report will help you understand which solutions are best for your plant.

Server Virtualization in Manufacturing
Learn the best practices to put in place as you virtualize your mission-critical manufacturing applications. This paper offers best practices you can use to benefit from server virtualization today, while avoiding mistakes that could affect the availability and performance of mission-critical manufacturing IT.

Total Cost of Downtime Calculator for Manufacturing
Do you know your total cost of downtime? You rely on your automated systems to keep manufacturing operations running efficiently and profitably. System failures or application unavailability can have a serious impact on your business -- and your bottom line. Without the ability to quantify downtime costs, it can be difficult to budget appropriately for IT investments aimed at preventing server downtime and optimizing application availability. This calculator will help you determine how much is at stake when your critical applications go offline.

Rexam’s Agile IT Initiative Keeps Plants Running
This article by Manufacturing Business Technology provides an overview of how Rexam employed fault tolerant servers with their SAP solution to ensure uninterrupted production at their plants.

Stratus Uptime Assurance for Manufacturing
Preventing downtime for mission-critical applications at the heart of plant operations
Fault tolerance and virtualization are making big waves in manufacturing. When it comes to industry-leading uptime, Stratus solutions pick up where virtualization technology leaves off, automatically protecting your most critical plant operations software from downtime and data loss, simply and cost effectively. Stratus solutions all but eliminate downtime from plant operations with virtually no hands-on support by in-house staff.

About Stratus

Keep Your Critical Manufacturing Applications Up and Running. All the Time.

Stratus provides high-availability solutions that prevent downtime. For essential industrial applications like MES, automation, and data historians, even a few minutes of unplanned downtime can inflict serious consequences that only grow worse with the length of the outage. Stratus solutions are engineered to automatically prevent outages and data loss, ensuring your critical applications are up and running 24/7.

Stratus is partnered with leading industrial application companies including Siemens, GE IP, Invensys, Emerson, Rockwell Automation, ABB, Yokogawa, SAP AG and others. With our partners, Stratus has hundreds of implementations in major industrial sectors such as pharmaceuticals, power and energy, water management, food and beverage, oil and gas, chemicals, consumer goods, automotive, and more.

Our team integrates proactive monitoring services with reliable hardware, software and service offerings to deliver high availability solutions to keep your critical applications up and running.

For more information about the High Availability solutions for Manufacturers, visit our site.

© 2012 Penton Media. All rights reserved. Use, duplication, or sale of this service, or data contained herein, is strictly prohibited.