Autoregressive Integrated Moving Average (ARIMA) models


Transcript of Autoregressive Integrated Moving Average (ARIMA) models

1

Autoregressive Integrated Moving Average (ARIMA) models

2

- Forecasting techniques based on exponential smoothing

- General assumption for the above models: time series data are represented as the sum of two distinct components (deterministic & random)

- Random noise: generated through independent shocks to the process

- In practice: successive observations show serial dependence

3

- ARIMA models are also known as the Box-Jenkins methodology

- Very popular: suitable for almost all time series & often generate more accurate forecasts than other methods

- Limitations: if there is not enough data, they may not forecast better than decomposition or exponential smoothing techniques. Recommended number of observations: at least 30-50

- Weak stationarity is required

- Equal spacing between observations

ARIMA Models

4

5

6

Linear Models for Time series

7

Linear Filter

- It is a process that converts the input $x_t$ into the output $y_t$

- The conversion involves past, current and future values of the input in the form of a summation with different weights:

$$y_t = L(x_t) = \sum_{i=-\infty}^{\infty} \psi_i x_{t-i}$$

- Time invariant: the weights $\psi_i$ do not depend on time

- Physically realizable: the output is a linear function of the current and past values of the input only ($\psi_i = 0$ for $i < 0$)

- Stable if $\sum_{i=-\infty}^{\infty} |\psi_i| < \infty$

In linear filters: stationarity of the input time series is also reflected in the output

8

Stationarity

9

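A time series that fulfills the conditions of weak stationarity (a constant mean and an autocovariance that depends only on the lag, not on time) tends to return to its mean and fluctuate around this mean with constant variance.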

Note: Strict stationarity requires, in addition to the conditions of weak stationarity, that the time series has to fulfill further conditions about its distribution including skewness, kurtosis etc.

Determine stationarity

- Take snapshots of the process at different time points & observe its behavior: if similar over time, then the time series is stationary

- A strong & slowly dying ACF suggests deviations from stationarity
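The ACF check can be sketched numerically. The snippet below is only an illustration, not part of the original slides: `sample_acf` is a hypothetical helper, and the two series (white noise and its cumulative sum, a random walk) are simulated with an arbitrary seed.

```python
import numpy as np

def sample_acf(y, max_lag):
    """Sample autocorrelations r(0), ..., r(max_lag) of a 1-D series."""
    y = np.asarray(y, dtype=float)
    d = y - y.mean()
    c0 = np.dot(d, d)
    return np.array([np.dot(d[: len(d) - k], d[k:]) / c0
                     for k in range(max_lag + 1)])

rng = np.random.default_rng(0)      # arbitrary seed, illustration only
noise = rng.normal(size=500)        # stationary: white noise
walk = np.cumsum(noise)             # non-stationary: random walk

acf_noise = sample_acf(noise, 10)   # small values after lag 0
acf_walk = sample_acf(walk, 10)     # strong, slowly dying values
```

For the white noise series the sample ACF should be close to zero after lag 0, while for the random walk it should stay large over many lags.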

10

11

12

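Infinite Moving Average

Input $x_t$ stationary

Output $y_t$ stationary, with $E(y_t) = \mu_y$ & $\mathrm{Cov}(y_t, y_{t+k}) = \gamma_y(k)$

THEN, the linear process with white noise time series $\varepsilon_t$ is stationary

$\varepsilon_t$: independent random shocks, with $E(\varepsilon_t) = 0$ & $\gamma_\varepsilon(h) = \sigma^2$ if $h = 0$, $0$ if $h \neq 0$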

13

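Linear Process

$$y_t = \mu + \psi_0\varepsilon_t + \psi_1\varepsilon_{t-1} + \psi_2\varepsilon_{t-2} + \cdots = \mu + \sum_{i=0}^{\infty}\psi_i B^i\varepsilon_t = \mu + \Psi(B)\,\varepsilon_t$$

where $B$ is the backshift operator ($B\varepsilon_t = \varepsilon_{t-1}$) & $\Psi(B) = \sum_{i=0}^{\infty}\psi_i B^i$

Infinite moving average

autocovariance function

$$\gamma_y(k) = \sigma^2\sum_{i=0}^{\infty}\psi_i\psi_{i+k}$$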

14

The infinite moving average serves as a general class of models for any stationary time series

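THEOREM (Wold 1938):

Any nondeterministic weakly stationary time series $y_t$ can be represented as

$$y_t = \mu + \sum_{i=0}^{\infty}\psi_i\varepsilon_{t-i}$$

where $\varepsilon_t$ is white noise & $\sum_{i=0}^{\infty}\psi_i^2 < \infty$

INTERPRETATION: A stationary time series can be seen as the weighted sum of the present and past disturbances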

15

Infinite moving average:

- Impractical to estimate the infinitely many weights

- Useless in practice except for special cases:

i. Finite order moving average (MA) models: weights set to 0, except for a finite number of weights

ii. Finite order autoregressive (AR) models: weights are generated using only a finite number of parameters

iii. A mixture of finite order autoregressive & moving average models (ARMA)

16

Finite Order Moving Average (MA) process

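Moving average process of order q (MA(q))

$$y_t = \mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q}, \qquad \varepsilon_t \text{ white noise}$$

MA(q): always stationary regardless of the values of the weights

$$y_t = \mu + (1 - \theta_1 B - \cdots - \theta_q B^q)\,\varepsilon_t = \mu + \Big(1 - \sum_{i=1}^{q}\theta_i B^i\Big)\varepsilon_t = \mu + \Theta(B)\,\varepsilon_t$$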

17

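Expected value of MA(q)

$$E(y_t) = E(\mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q}) = \mu$$

Variance of MA(q)

$$Var(y_t) = \gamma_y(0) = Var(\mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q}) = \sigma^2(1 + \theta_1^2 + \cdots + \theta_q^2)$$

Autocovariance of MA(q)

$$\gamma_y(k) = E[(\varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q})(\varepsilon_{t+k} - \theta_1\varepsilon_{t+k-1} - \cdots - \theta_q\varepsilon_{t+k-q})]$$

$$\gamma_y(k) = \begin{cases}\sigma^2(-\theta_k + \theta_1\theta_{k+1} + \cdots + \theta_{q-k}\theta_q), & k = 1, 2, \ldots, q\\ 0, & k > q\end{cases}$$

Autocorrelation of MA(q)

$$\rho_y(k) = \frac{\gamma_y(k)}{\gamma_y(0)} = \begin{cases}\dfrac{-\theta_k + \theta_1\theta_{k+1} + \cdots + \theta_{q-k}\theta_q}{1 + \theta_1^2 + \cdots + \theta_q^2}, & k = 1, 2, \ldots, q\\ 0, & k > q\end{cases}$$

$\varepsilon_t$ white noise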

18

ACF: helps identify an MA model & its appropriate order, as it cuts off after lag q

Real applications: the sample ACF r(k) is not always exactly zero after lag q; it becomes very small in absolute value after lag q
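The cut-off property can be checked with a small sketch of the theoretical ACF. It assumes the sign convention $y_t = \mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q}$; the function name `ma_acf` and the coefficient values are made up for illustration.

```python
import numpy as np

def ma_acf(theta, max_lag):
    """Theoretical ACF rho(0..max_lag) of an MA(q) process.

    Assumed convention: y_t = mu + e_t - theta_1 e_{t-1} - ... - theta_q e_{t-q}.
    """
    # Coefficients on e_t, e_{t-1}, ..., e_{t-q}
    c = np.concatenate(([1.0], -np.asarray(theta, dtype=float)))
    q = len(c) - 1
    # gamma(k) / sigma^2 = sum_j c_j * c_{j+k}; zero beyond lag q
    gamma = np.array([np.dot(c[: len(c) - k], c[k:]) if k <= q else 0.0
                      for k in range(max_lag + 1)])
    return gamma / gamma[0]

rho_ma1 = ma_acf([0.5], 4)        # illustrative MA(1): nonzero at lag 1 only
rho_ma2 = ma_acf([0.5, 0.3], 4)   # illustrative MA(2): nonzero at lags 1 and 2
```

In both cases every autocorrelation beyond lag q is exactly zero, which is the theoretical counterpart of the "very small in absolute value" pattern seen in sample ACFs.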

19

First Order Moving Average Process MA(1)

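q=1

$$y_t = \mu + \varepsilon_t - \theta_1\varepsilon_{t-1}$$

Autocovariance of MA(1)

$$\gamma_y(0) = \sigma^2(1 + \theta_1^2), \qquad \gamma_y(1) = -\theta_1\sigma^2, \qquad \gamma_y(k) = 0,\ k > 1$$

Autocorrelation of MA(1)

$$\rho_y(1) = \frac{-\theta_1}{1 + \theta_1^2}, \qquad \rho_y(k) = 0,\ k > 1$$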

20

- Mean & variance: stable

- Short runs where successive observations tend to follow each other: positive autocorrelation

- Observations oscillate successively: negative autocorrelation

21

Second Order Moving Average MA(2) process

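$$y_t = \mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \theta_2\varepsilon_{t-2} = \mu + (1 - \theta_1 B - \theta_2 B^2)\,\varepsilon_t$$

Autocovariance of MA(2)

$$\gamma_y(0) = \sigma^2(1 + \theta_1^2 + \theta_2^2), \quad \gamma_y(1) = \sigma^2(-\theta_1 + \theta_1\theta_2), \quad \gamma_y(2) = -\theta_2\sigma^2, \quad \gamma_y(k) = 0,\ k > 2$$

Autocorrelation of MA(2)

$$\rho_y(1) = \frac{-\theta_1 + \theta_1\theta_2}{1 + \theta_1^2 + \theta_2^2}, \quad \rho_y(2) = \frac{-\theta_2}{1 + \theta_1^2 + \theta_2^2}, \quad \rho_y(k) = 0,\ k > 2$$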

22

The sample ACF cuts off after lag 2

23

Finite Order Autoregressive Process

- Wold's theorem: infinite number of weights, not helpful in modeling & forecasting

- Finite order MA process: estimate a finite number of weights, set the others equal to zero. The oldest disturbance becomes obsolete for the next observation; only a finite number of disturbances contribute to the current value of the time series

- To take into account all the disturbances of the past: use autoregressive models; estimate infinitely many weights that follow a distinct pattern with a small number of parameters

24

First Order Autoregressive Process, AR(1)

Assume: the contributions of the disturbances that are way in the past are small compared to the more recent disturbances that the process has experienced

Reflect the diminishing magnitudes of the contributions of past disturbances through a set of infinitely many weights in descending magnitudes, such as $\psi_i = \phi^i$ with $|\phi| < 1$

The weights on the disturbances, starting from the current disturbance and going back in the past:

$$1,\ \phi,\ \phi^2,\ \phi^3,\ \ldots$$

Exponential decay pattern

25

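$$y_t = \mu + \varepsilon_t + \phi\varepsilon_{t-1} + \phi^2\varepsilon_{t-2} + \cdots = \mu + \sum_{i=0}^{\infty}\phi^i\varepsilon_{t-i}$$

$$y_{t-1} = \mu + \varepsilon_{t-1} + \phi\varepsilon_{t-2} + \phi^2\varepsilon_{t-3} + \cdots$$

THEN

$$y_t = \mu + \varepsilon_t + \phi\,(y_{t-1} - \mu) = \delta + \phi y_{t-1} + \varepsilon_t$$

First order autoregressive process AR(1), where $\delta = (1 - \phi)\mu$

AR(1) stationary if $|\phi| < 1$

WHY AUTOREGRESSIVE? The model is a regression of $y_t$ on its own previous value $y_{t-1}$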

26

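Mean AR(1)

$$E(y_t) = \mu = \frac{\delta}{1 - \phi}$$

Autocovariance function AR(1)

$$\gamma(k) = \sigma^2\phi^k\frac{1}{1 - \phi^2}, \qquad k = 0, 1, 2, \ldots$$

Autocorrelation function AR(1)

$$\rho(k) = \frac{\gamma(k)}{\gamma(0)} = \phi^k, \qquad k = 0, 1, 2, \ldots$$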

The ACF for a stationary AR(1) process has an exponential decay form
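As a rough numerical check, the closed-form AR(1) autocovariance $\sigma^2\phi^k/(1-\phi^2)$ can be compared with the general linear-process formula $\gamma(k) = \sigma^2\sum_i\psi_i\psi_{i+k}$ using the weights $\psi_i = \phi^i$. The values of `phi` and `sigma2` below are arbitrary, and the infinite sum is truncated.

```python
import numpy as np

phi, sigma2 = 0.8, 1.0            # illustrative values, |phi| < 1
psi = phi ** np.arange(2000)      # truncated infinite-MA weights psi_i = phi^i

def gamma_from_psi(k):
    """Autocovariance at lag k from sigma^2 * sum_i psi_i * psi_{i+k} (truncated)."""
    return sigma2 * np.dot(psi[: len(psi) - k], psi[k:])

lags = np.arange(6)
gamma_sum = np.array([gamma_from_psi(k) for k in lags])
gamma_closed = sigma2 * phi ** lags / (1 - phi ** 2)   # closed form for AR(1)
rho = gamma_sum / gamma_sum[0]                          # decays like phi^k
```

Both routes give the same autocovariances, and `rho` follows the exponential decay $\phi^k$.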

27

Observe: - The observations exhibit up/down movements

28

Second Order Autoregressive Process, AR(2)

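$$y_t = \delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \varepsilon_t$$

This model can be represented in the infinite MA form, which provides the conditions of stationarity for $y_t$ in terms of $\phi_1$ & $\phi_2$

WHY?

1. Infinite MA

$$y_t = \delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \varepsilon_t$$

$$y_t - \phi_1 B y_t - \phi_2 B^2 y_t = \delta + \varepsilon_t$$

$$(1 - \phi_1 B - \phi_2 B^2)\,y_t = \delta + \varepsilon_t$$

$$\Phi(B)\,y_t = \delta + \varepsilon_t$$

Apply $\Phi(B)^{-1}$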

29

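$$y_t = \Phi(B)^{-1}\delta + \Phi(B)^{-1}\varepsilon_t = \mu + \Psi(B)\,\varepsilon_t = \mu + \sum_{i=0}^{\infty}\psi_i\varepsilon_{t-i} = \mu + \sum_{i=0}^{\infty}\psi_i B^i\varepsilon_t$$

where

$$\mu = \Phi(B)^{-1}\delta \qquad \& \qquad \Phi(B)^{-1} = \Psi(B) = \sum_{i=0}^{\infty}\psi_i B^i$$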

30

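Calculate the weights $\psi_i$

We need $\Phi(B)\,\Psi(B) = 1$

$$(1 - \phi_1 B - \phi_2 B^2)(\psi_0 + \psi_1 B + \psi_2 B^2 + \cdots) = 1$$

$$\psi_0 + (\psi_1 - \phi_1\psi_0)B + (\psi_2 - \phi_1\psi_1 - \phi_2\psi_0)B^2 + \cdots + (\psi_j - \phi_1\psi_{j-1} - \phi_2\psi_{j-2})B^j + \cdots = 1$$

$$\psi_0 = 1$$

$$\psi_1 - \phi_1\psi_0 = 0$$

$$\psi_j - \phi_1\psi_{j-1} - \phi_2\psi_{j-2} = 0 \quad \text{for all } j = 2, 3, \ldots$$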
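The recursion for the weights, $\psi_0 = 1$, $\psi_1 = \phi_1\psi_0$ and $\psi_j = \phi_1\psi_{j-1} + \phi_2\psi_{j-2}$, is easy to sketch in code. The helper name `ar2_psi_weights` is hypothetical; the coefficients 0.4 and 0.5 are borrowed from one of the AR(2) examples in these slides.

```python
import numpy as np

def ar2_psi_weights(phi1, phi2, n):
    """First n infinite-MA weights psi_0, ..., psi_{n-1} of an AR(2) process."""
    psi = np.zeros(n)
    psi[0] = 1.0
    if n > 1:
        psi[1] = phi1 * psi[0]
    for j in range(2, n):
        # psi_j - phi1 * psi_{j-1} - phi2 * psi_{j-2} = 0
        psi[j] = phi1 * psi[j - 1] + phi2 * psi[j - 2]
    return psi

psi = ar2_psi_weights(0.4, 0.5, 200)   # weights die out for a stationary AR(2)
```

For these coefficients the weights start at 1, 0.4, 0.66, 0.464 and shrink towards zero, consistent with an absolutely summable infinite MA representation.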

31

Solutions

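The $\psi_j$ satisfy the second-order linear difference equation

The solution: in terms of the 2 roots $m_1$ and $m_2$ from

$$m^2 - \phi_1 m - \phi_2 = 0, \qquad m_1, m_2 = \frac{\phi_1 \pm \sqrt{\phi_1^2 + 4\phi_2}}{2}$$

AR(2) stationary:

$$\sum_{i=0}^{\infty}|\psi_i| < \infty \quad \text{if } |m_1|, |m_2| < 1$$

Condition of stationarity for complex conjugates $a \pm ib$:

$$\sqrt{a^2 + b^2} < 1$$

AR(2) infinite MA representation: $|m_1|, |m_2| < 1$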

32

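Mean

$$E(y_t) = \delta + \phi_1 E(y_{t-1}) + \phi_2 E(y_{t-2})$$

$$\mu = \delta + \phi_1\mu + \phi_2\mu \;\Rightarrow\; \mu = \frac{\delta}{1 - \phi_1 - \phi_2}$$

For $1 - \phi_1 - \phi_2 = 0$: $m = 1$, so $y_t$ is non-stationary

Autocovariance function

$$\gamma(k) = \mathrm{cov}(y_t, y_{t-k}) = \mathrm{cov}(\delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \varepsilon_t,\ y_{t-k})$$

$$= \phi_1\,\mathrm{cov}(y_{t-1}, y_{t-k}) + \phi_2\,\mathrm{cov}(y_{t-2}, y_{t-k}) + \mathrm{cov}(\varepsilon_t, y_{t-k})$$

$$= \phi_1\gamma(k-1) + \phi_2\gamma(k-2) + \begin{cases}\sigma^2, & k = 0\\ 0, & k > 0\end{cases}$$

For k=0: $\gamma(0) = \phi_1\gamma(1) + \phi_2\gamma(2) + \sigma^2$

For k>0: $\gamma(k) = \phi_1\gamma(k-1) + \phi_2\gamma(k-2)$, $k = 1, 2, \ldots$ (Yule-Walker equations)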

33

Autocorrelation function

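$$\rho(k) = \phi_1\rho(k-1) + \phi_2\rho(k-2), \qquad k = 1, 2, \ldots$$

Solutions

A. Solve the Yule-Walker equations recursively

$$\rho(1) = \phi_1\rho(0) + \phi_2\rho(-1) = \phi_1 + \phi_2\rho(1) \;\Rightarrow\; \rho(1) = \frac{\phi_1}{1 - \phi_2}$$

$$\rho(2) = \phi_1\rho(1) + \phi_2$$

$$\rho(3) = \phi_1\rho(2) + \phi_2\rho(1)$$

$$\vdots$$

B. General solution: obtain it through the roots $m_1$ & $m_2$ associated with the polynomial

$$m^2 - \phi_1 m - \phi_2 = 0$$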
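Approach A, the recursive solution of the Yule-Walker equations for an AR(2) process, could look like the sketch below. It starts from $\rho(0) = 1$ and $\rho(1) = \phi_1/(1 - \phi_2)$; the function name `ar2_acf` is an assumption made for this example.

```python
def ar2_acf(phi1, phi2, max_lag):
    """ACF rho(0..max_lag) of a stationary AR(2) via the Yule-Walker recursion."""
    rho = [1.0, phi1 / (1.0 - phi2)]          # rho(0) and rho(1)
    for k in range(2, max_lag + 1):
        # rho(k) = phi1 * rho(k-1) + phi2 * rho(k-2)
        rho.append(phi1 * rho[k - 1] + phi2 * rho[k - 2])
    return rho[: max_lag + 1]

rho = ar2_acf(0.4, 0.5, 3)   # coefficients taken from an AR(2) example in these slides
```

With $\phi_1 = 0.4$ and $\phi_2 = 0.5$ this gives $\rho(1) = 0.8$, $\rho(2) = 0.82$ and $\rho(3) = 0.728$.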

34

Case I: m1, m2 distinct real roots

$$\rho(k) = c_1 m_1^k + c_2 m_2^k, \qquad k = 0, 1, 2, \ldots$$

$c_1$, $c_2$ constants: can be obtained from $\rho(0)$, $\rho(1)$

Stationarity: $|m_1|, |m_2| < 1$

ACF form: mixture of 2 exponential decay terms

e.g. the AR(2) model can be seen as an adjusted AR(1) model for which a single exponential decay expression, as in the AR(1), is not enough to describe the pattern in the ACF; thus an additional decay expression is added by introducing the second lag term $y_{t-2}$

35

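Case II: $m_1$, $m_2$ complex conjugates in the form $a \pm ib$

$$\rho(k) = R^k\,[c_1\cos(\lambda k) + c_2\sin(\lambda k)], \qquad k = 0, 1, 2, \ldots$$

$$R = |m_i| = \sqrt{a^2 + b^2}$$

$$\cos(\lambda) = a/R$$

$$\sin(\lambda) = b/R$$

$$a \pm ib = R\,(\cos\lambda \pm i\sin\lambda)$$

$c_1$, $c_2$: particular constants

ACF form: damped sinusoid; damping factor $R$; frequency $\lambda$; period $2\pi/\lambda$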

36

Case III: one real root m0; m1= m2=m0

$$\rho(k) = (c_1 + c_2 k)\,m_0^k, \qquad k = 0, 1, 2, \ldots$$

ACF form: exponential decay pattern

37

AR(2) process: $y_t = 4 + 0.4y_{t-1} + 0.5y_{t-2} + \varepsilon_t$

Roots of the polynomial: real

ACF form: mixture of 2 exponential decay terms

38

AR(2) process: $y_t = 4 + 0.8y_{t-1} - 0.5y_{t-2} + \varepsilon_t$

Roots of the polynomial: complex conjugates

ACF form: damped sinusoid behavior
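The nature of the roots for the two example processes can be verified with a short sketch. It assumes the associated polynomial is $m^2 - \phi_1 m - \phi_2 = 0$ and uses `numpy.roots`; the helper names are made up for illustration.

```python
import numpy as np

def ar2_roots(phi1, phi2):
    """Roots m1, m2 of m^2 - phi1*m - phi2 = 0."""
    return np.roots([1.0, -phi1, -phi2])

def is_stationary(phi1, phi2):
    """AR(2) is stationary if both roots are less than 1 in absolute value."""
    return bool(np.all(np.abs(ar2_roots(phi1, phi2)) < 1.0))

roots_real = ar2_roots(0.4, 0.5)       # y_t = 4 + 0.4 y_{t-1} + 0.5 y_{t-2} + e_t
roots_complex = ar2_roots(0.8, -0.5)   # y_t = 4 + 0.8 y_{t-1} - 0.5 y_{t-2} + e_t
damping = np.abs(roots_complex)        # R, the damping factor of the sinusoid
```

The first process has two real roots inside the unit circle (two exponential decay terms); the second has complex conjugate roots with modulus $\sqrt{0.5}$, which gives the damped sinusoid.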

39

General Autoregressive Process, AR(p)

Consider a pth order AR model

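$$y_t = \delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t, \qquad \varepsilon_t \text{ white noise}$$

or

$$\Phi(B)\,y_t = \delta + \varepsilon_t, \qquad \text{where } \Phi(B) = 1 - \phi_1 B - \phi_2 B^2 - \cdots - \phi_p B^p$$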

40

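AR(p) stationary

If the roots of the polynomial

$$m^p - \phi_1 m^{p-1} - \phi_2 m^{p-2} - \cdots - \phi_p = 0$$

are less than 1 in absolute value

AR(p): absolutely summable infinite MA representation

Under the previous condition

$$y_t = \mu + \Psi(B)\,\varepsilon_t = \mu + \sum_{i=0}^{\infty}\psi_i\varepsilon_{t-i}, \qquad \Psi(B) = \Phi(B)^{-1} \quad \& \quad \sum_{i=0}^{\infty}|\psi_i| < \infty$$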

41

Weights of the random shocks

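$$\Phi(B)\,\Psi(B) = 1$$

$$\psi_j = 0, \quad j < 0$$

$$\psi_0 = 1$$

$$\psi_j - \phi_1\psi_{j-1} - \phi_2\psi_{j-2} - \cdots - \phi_p\psi_{j-p} = 0 \quad \text{for all } j = 1, 2, \ldots$$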

42

For stationary AR(p)

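$$E(y_t) = \mu = \frac{\delta}{1 - \phi_1 - \phi_2 - \cdots - \phi_p}$$

$$\gamma(k) = \mathrm{Cov}(y_t, y_{t-k}) = \mathrm{Cov}(\delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t,\ y_{t-k})$$

$$= \sum_{i=1}^{p}\phi_i\,\mathrm{Cov}(y_{t-i}, y_{t-k}) + \mathrm{Cov}(\varepsilon_t, y_{t-k}) = \sum_{i=1}^{p}\phi_i\gamma(k-i) + \begin{cases}\sigma^2, & k = 0\\ 0, & k > 0\end{cases}$$

$$\gamma(0) = \sum_{i=1}^{p}\phi_i\gamma(i) + \sigma^2 \;\Rightarrow\; \gamma(0)\Big[1 - \sum_{i=1}^{p}\phi_i\rho(i)\Big] = \sigma^2$$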

43

ACF

$$\rho(k) = \sum_{i=1}^{p}\phi_i\,\rho(k-i), \qquad k = 1, 2, \ldots$$

pth order linear difference equations

AR(p):

- satisfies the Yule-Walker equations

- ACF can be found from the p roots of the associated polynomial, e.g. for distinct & real roots: $\rho(k) = c_1 m_1^k + c_2 m_2^k + \cdots + c_p m_p^k$

- In general the roots will not all be real; ACF: mixture of exponential decay and damped sinusoid

44

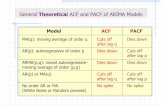

ACF

- MA(q) process: useful tool for identifying the order of the process; cuts off after lag q

- AR(p) process: mixture of exponential decay & damped sinusoid expressions; fails to provide information about the order of the AR process

45

Partial Autocorrelation Function

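Consider:

- three random variables X, Y, Z

- simple regressions of X on Z & Y on Z:

$$\hat{X} = a_1 + b_1 Z, \quad b_1 = \frac{\mathrm{Cov}(Z, X)}{\mathrm{Var}(Z)} \qquad \& \qquad \hat{Y} = a_2 + b_2 Z, \quad b_2 = \frac{\mathrm{Cov}(Z, Y)}{\mathrm{Var}(Z)}$$

The errors are obtained from

$$X^* = X - \hat{X} \qquad \& \qquad Y^* = Y - \hat{Y}$$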

46

Partial correlation between X & Y after adjusting for Z: The correlation between X* & Y*

Partial correlation can be seen as the correlation between two variables after being adjusted for a common factor that affects them
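A minimal sketch of this idea: regress X and Y on Z separately, then correlate the two sets of errors. The data below are simulated with an arbitrary seed so that X and Y are related only through Z; the names `residuals` and `partial_corr` are hypothetical.

```python
import numpy as np

def residuals(v, z):
    """Errors from the simple least-squares regression of v on z (with intercept)."""
    b = np.cov(z, v)[0, 1] / np.var(z, ddof=1)   # slope = Cov(z, v) / Var(z)
    a = v.mean() - b * z.mean()                   # intercept
    return v - (a + b * z)

def partial_corr(x, y, z):
    """Correlation between x and y after adjusting both for z."""
    return np.corrcoef(residuals(x, z), residuals(y, z))[0, 1]

rng = np.random.default_rng(1)                # arbitrary seed, illustration only
z = rng.normal(size=20000)                    # common factor
x = 2.0 * z + rng.normal(size=20000)          # depends on z plus own noise
y = -1.0 * z + rng.normal(size=20000)         # depends on z plus own noise

raw = np.corrcoef(x, y)[0, 1]                 # strongly negative, driven by z
adj = partial_corr(x, y, z)                   # close to zero once z is removed
```

Here the raw correlation is large in absolute value, while the partial correlation is near zero because Z is the only link between X and Y.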

47

Partial autocorrelation function (PACF) between $y_t$ & $y_{t-k}$

The autocorrelation between $y_t$ & $y_{t-k}$ after adjusting for $y_{t-1}, y_{t-2}, \ldots, y_{t-k+1}$

AR(p) process: PACF between $y_t$ & $y_{t-k}$ for $k > p$ should equal zero

Consider

- a stationary time series $y_t$; not necessarily an AR process

- For any fixed value $k$, the Yule-Walker equations for the ACF of an AR(p) process

$$\rho(j) = \sum_{i=1}^{k}\phi_{ik}\,\rho(j-i), \qquad j = 1, 2, \ldots, k$$

48

Matrix notation

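$$P_k\,\phi_k = \rho_k$$

$$P_k = \begin{bmatrix}1 & \rho(1) & \rho(2) & \cdots & \rho(k-1)\\ \rho(1) & 1 & \rho(1) & \cdots & \rho(k-2)\\ \rho(2) & \rho(1) & 1 & \cdots & \rho(k-3)\\ \vdots & \vdots & \vdots & \ddots & \vdots\\ \rho(k-1) & \rho(k-2) & \rho(k-3) & \cdots & 1\end{bmatrix}, \quad \phi_k = \begin{bmatrix}\phi_{1k}\\ \phi_{2k}\\ \phi_{3k}\\ \vdots\\ \phi_{kk}\end{bmatrix}, \quad \rho_k = \begin{bmatrix}\rho(1)\\ \rho(2)\\ \rho(3)\\ \vdots\\ \rho(k)\end{bmatrix}$$

Solutions

$$\phi_k = P_k^{-1}\rho_k$$

For any given k, k = 1, 2, … the last coefficient $\phi_{kk}$ is called the partial autocorrelation coefficient of the process at lag k

AR(p) process: $\phi_{kk} = 0$, $k > p$

Identify the order of an AR process by using the PACF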
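The matrix solution can be sketched by solving $P_k\phi_k = \rho_k$ for each k and keeping the last coefficient. The function name `pacf_from_acf` is an assumption; the input ACF is that of an AR(2) process with $\phi_1 = 0.4$, $\phi_2 = 0.5$, built from the Yule-Walker recursion.

```python
import numpy as np

def pacf_from_acf(rho, max_lag):
    """phi_kk for k = 1..max_lag, given rho[0..max_lag] with rho[0] = 1."""
    rho = np.asarray(rho, dtype=float)
    out = []
    for k in range(1, max_lag + 1):
        idx = np.arange(k)
        P = rho[np.abs(idx[:, None] - idx[None, :])]   # k x k matrix P_k
        phi_k = np.linalg.solve(P, rho[1 : k + 1])     # solves P_k phi_k = rho_k
        out.append(phi_k[-1])                          # last coefficient phi_kk
    return np.array(out)

# ACF of an AR(2) with phi1 = 0.4, phi2 = 0.5 (Yule-Walker recursion)
rho = [1.0, 0.4 / (1.0 - 0.5)]
for k in range(2, 6):
    rho.append(0.4 * rho[k - 1] + 0.5 * rho[k - 2])

pacf = pacf_from_acf(rho, 5)   # nonzero at lags 1 and 2, zero afterwards
```

The PACF equals 0.8 at lag 1 and 0.5 at lag 2, then vanishes (up to rounding), which is the cut-off after lag p = 2 used to identify the AR order.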

49

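Figure: sample ACF & PACF of simulated MA(1), MA(2), AR(1) & AR(2) series

- MA(1) ($\mu = 40$, $|\theta_1| = 0.8$): ACF cuts off after 1st lag; PACF: decay pattern

- MA(2) ($\mu = 40$, $|\theta_1| = 0.7$, $|\theta_2| = 0.28$): ACF cuts off after 2nd lag; PACF: decay pattern

- AR(1) ($\delta = 8$, $|\phi_1| = 0.8$): ACF: decay pattern; PACF cuts off after 1st lag

- AR(2) ($\delta = 8$, $|\phi_1| = 0.8$, $|\phi_2| = 0.5$): ACF: decay pattern; PACF cuts off after 2nd lag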

50

Invertibility of MA models

Invertible moving average process:

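The MA(q) process $y_t = \mu + \Theta(B)\,\varepsilon_t$ is invertible if it has an absolutely summable infinite AR representation

It can be shown:

The infinite AR representation for MA(q)

$$y_t = \delta + \sum_{i=1}^{\infty}\pi_i y_{t-i} + \varepsilon_t, \qquad \sum_{i=1}^{\infty}|\pi_i| < \infty$$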

51

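Obtain $\pi_i$

We need $\Theta(B)\,\Pi(B) = 1$

$$(1 - \theta_1 B - \theta_2 B^2 - \cdots - \theta_q B^q)(1 - \pi_1 B - \pi_2 B^2 - \cdots) = 1$$

$$-\pi_1 - \theta_1 = 0$$

$$-\pi_2 + \theta_1\pi_1 - \theta_2 = 0$$

$$\vdots$$

$$-\pi_j + \theta_1\pi_{j-1} + \cdots + \theta_q\pi_{j-q} = 0$$

with $\pi_0 = -1$ & $\pi_j = 0$ for $j < 0$

Condition of invertibility

The roots of the associated polynomial must be less than 1 in absolute value

$$m^q - \theta_1 m^{q-1} - \theta_2 m^{q-2} - \cdots - \theta_q = 0$$

An invertible MA(q) process can then be written as an infinite AR process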
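A sketch of the weight recursion and the root check follows. It assumes the conventions $y_t = \mu + \varepsilon_t - \theta_1\varepsilon_{t-1} - \cdots - \theta_q\varepsilon_{t-q}$ and $y_t = \delta + \sum_i \pi_i y_{t-i} + \varepsilon_t$, with $\pi_0 = -1$ and $\pi_j = 0$ for $j < 0$; function names and coefficient values are illustrative only.

```python
import numpy as np

def ma_pi_weights(theta, n):
    """pi_1..pi_n of the infinite AR form of an MA(q) process.

    Recursion: pi_j = theta_1 pi_{j-1} + ... + theta_q pi_{j-q},
    with pi_0 = -1 and pi_j = 0 for j < 0.
    """
    theta = np.asarray(theta, dtype=float)
    pi = np.zeros(n + 1)
    pi[0] = -1.0
    for j in range(1, n + 1):
        for i in range(1, min(j, len(theta)) + 1):
            pi[j] += theta[i - 1] * pi[j - i]
    return pi[1:]

def is_invertible(theta):
    """Roots of m^q - theta_1 m^(q-1) - ... - theta_q = 0 must lie inside the unit circle."""
    coeffs = np.concatenate(([1.0], -np.asarray(theta, dtype=float)))
    return bool(np.all(np.abs(np.roots(coeffs)) < 1.0))

pi_ma1 = ma_pi_weights([0.5], 3)        # -0.5, -0.25, -0.125
pi_ma2 = ma_pi_weights([0.5, 0.3], 2)   # -0.5, -0.55
```

For an MA(1) with $\theta_1 = 0.5$ the weights are $-\theta_1^i$, which are absolutely summable; with $\theta_1 = 2$ the root lies outside the unit circle and the process is not invertible.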

52

PACF of an MA(q) process is a mixture of exponential decay & damped sinusoid expressions

In model identification, use both sample ACF & sample PACF

PACF possibly never cuts off

53

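Mixed Autoregressive-Moving Average (ARMA) Process

ARMA(p,q) model

$$y_t = \delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t - \theta_1\varepsilon_{t-1} - \theta_2\varepsilon_{t-2} - \cdots - \theta_q\varepsilon_{t-q}$$

$$= \delta + \sum_{i=1}^{p}\phi_i y_{t-i} + \varepsilon_t - \sum_{i=1}^{q}\theta_i\varepsilon_{t-i}$$

$$\Phi(B)\,y_t = \delta + \Theta(B)\,\varepsilon_t, \qquad \varepsilon_t \text{ white noise}$$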

Adjust the exponential decay pattern by adding a few terms

54

Stationarity of ARMA (p,q) process

Related to the AR component

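ARMA(p,q) is stationary if the roots of the polynomial

$$m^p - \phi_1 m^{p-1} - \phi_2 m^{p-2} - \cdots - \phi_p = 0$$

are less than one in absolute value

ARMA(p,q) has an infinite MA representation

$$y_t = \mu + \sum_{i=0}^{\infty}\psi_i\varepsilon_{t-i} = \mu + \Psi(B)\,\varepsilon_t, \qquad \Psi(B) = \Phi(B)^{-1}\Theta(B)$$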

55

Invertibility of ARMA(p,q) process

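Invertibility of ARMA process: related to the MA component

Check through the roots of the polynomial

$$m^q - \theta_1 m^{q-1} - \theta_2 m^{q-2} - \cdots - \theta_q = 0$$

If the roots are less than 1 in absolute value, then ARMA(p,q) is invertible & has an infinite AR representation

$$\Pi(B)\,y_t = \alpha + \varepsilon_t, \qquad \alpha = \Theta(B)^{-1}\delta \quad \& \quad \Pi(B) = \Theta(B)^{-1}\Phi(B)$$

Coefficients (with $\Pi(B) = 1 - \sum_{i=1}^{\infty}\pi_i B^i$, $\pi_0 = -1$ & $\pi_j = 0$ for $j < 0$):

$$\pi_i - \theta_1\pi_{i-1} - \theta_2\pi_{i-2} - \cdots - \theta_q\pi_{i-q} = \begin{cases}\phi_i, & i = 1, \ldots, p\\ 0, & i > p\end{cases}$$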

56

ARMA(1,1)

Sample ACF & PACF: exponential decay behavior

57

58

59

60

Non Stationary Process

No constant level, but exhibits homogeneous behavior over time

$y_t$ is homogeneous, non-stationary if

- It is not stationary

- Its first difference, $w_t = y_t - y_{t-1} = (1 - B)y_t$, or higher order differences $w_t = (1 - B)^d y_t$ produce a stationary time series

$y_t$ is an autoregressive integrated moving average process of order p, d, q, ARIMA(p,d,q), if the dth difference, $w_t = (1 - B)^d y_t$, produces a stationary ARMA(p,q) process

ARIMA(p,d,q):

$$\Phi(B)(1 - B)^d\,y_t = \delta + \Theta(B)\,\varepsilon_t$$
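Differencing with $(1 - B)^d$ can be sketched with `numpy.diff`. The shock values below are made up, and `difference` is a hypothetical wrapper used only for this illustration.

```python
import numpy as np

def difference(y, d=1):
    """Apply (1 - B)^d to a series; the result is d observations shorter."""
    return np.diff(np.asarray(y, dtype=float), n=d)

eps = np.array([0.5, -1.0, 0.3, 0.8, -0.2, 0.1])   # made-up shocks
y = np.cumsum(eps)              # random walk: y_t = y_{t-1} + eps_t
w = difference(y)               # first difference recovers eps_2, eps_3, ...

trend = np.arange(6.0) ** 2     # quadratic trend: 0, 1, 4, 9, 16, 25
w2 = difference(trend, d=2)     # second difference is constant
```

One difference turns the random walk back into its shocks, and two differences reduce the quadratic trend to a constant, which is the sense in which differencing removes a changing level.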

61

The random walk process ARIMA(0,1,0)

Simplest non-stationary model

$$(1 - B)\,y_t = \delta + \varepsilon_t$$

First differencing eliminates serial dependence & yields a white noise process

62

$y_t = 20 + y_{t-1} + \varepsilon_t$

Evidence of non-stationary process

- Sample ACF: dies out slowly

- Sample PACF: significant at the first lag

- Sample PACF value at lag 1 close to 1

First difference

- Time series plot of $w_t$: stationary

- Sample ACF & PACF: do not show any significant value

- Use ARIMA(0,1,0)

63

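The ARIMA(0,1,1) process

$$(1 - B)\,y_t = \delta + (1 - \theta B)\,\varepsilon_t$$

Infinite AR representation, derived from:

$$\pi_i - \theta\pi_{i-1} = \begin{cases}1, & i = 1\\ 0, & i > 1\end{cases} \qquad \text{with } \pi_0 = -1$$

$$y_t = \alpha + \sum_{i=1}^{\infty}\pi_i y_{t-i} + \varepsilon_t = \alpha + (1 - \theta)(y_{t-1} + \theta y_{t-2} + \cdots) + \varepsilon_t$$

ARIMA(0,1,1) (= IMA(1,1)): expressed as an exponentially weighted moving average (EWMA) of all past values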
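The EWMA interpretation can be made concrete with the weights $\pi_i = (1 - \theta)\theta^{i-1}$, which follow from the recursion $\pi_1 = 1 - \theta$, $\pi_i = \theta\pi_{i-1}$. The sketch below uses an arbitrary $\theta = 0.6$ and hypothetical helper names.

```python
import numpy as np

def ima11_pi_weights(theta, n):
    """pi_1..pi_n for ARIMA(0,1,1): pi_i = (1 - theta) * theta**(i - 1)."""
    i = np.arange(1, n + 1)
    return (1.0 - theta) * theta ** (i - 1)

def ewma_of_past(y_past, theta):
    """Weighted average of past values; y_past[0] is y_{t-1}, y_past[1] is y_{t-2}, ..."""
    w = ima11_pi_weights(theta, len(y_past))
    return float(np.dot(w, y_past))

weights = ima11_pi_weights(0.6, 200)                  # 0.4, 0.24, 0.144, ...
level = ewma_of_past(np.full(200, 5.0), 0.6)          # EWMA of a constant series
```

The weights decay geometrically and sum to (almost exactly) one, so the weighted average of a constant series returns that constant.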

64

ARIMA(0,1,1)

- The mean of the process is moving upwards in time

- Sample ACF: dies out relatively slowly

- Sample PACF: 2 significant values at lags 1 & 2

- First difference looks stationary

- Sample ACF & PACF of the first difference: an MA(1) model would be appropriate, as its ACF cuts off after the first lag & its PACF shows a decay pattern

Possible model: AR(2). Check the roots

65

$$y_t = 2 + 0.95\,y_{t-1} + \varepsilon_t$$

66