Using hand gestures to fly UAVs

Exploring Alternative Control Modalities for Unmanned Aerial Vehicles

Thesis Presentation

David Qorashi

Grand Valley State University

April 2015

Committee Members

Dr. Engelsma, Dr. Alsabbagh, Dr. Dulimarta

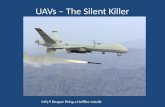

Unmanned Aerial Vehicles (UAVs)

• Primary Usage

• Defense & Security Sectors

• Potential Usage

• Private Security

• News Gathering (CNN)

• Agricultural Practices

• Product Transport

• Aerial Advertising

• …

Source: New York Daily News (2014)

“We predict that 12% of an estimated $98 billion in cumulative global spending on aerial drones over the next decade will be for commercial purposes.”

– Business Insider (2014)

Problems with Current Approaches

• Steep Learning Curve

• Failure to pilot the drone in initial attempts

• Damage to the drone or its environment

• Compromised safety of the pilot and/or bystanders

Endangers People’s Safety

Roman Pirozek was killed while flying a remote-controlled model helicopter in September 2013 (Source: Wall Street Journal).

Human-Robot Interaction: A New Field in HCI

• Goodrich & Olsen defined general principles for designing effective interfaces

• Drury et al. defined a set of HRI taxonomies

• Quigley et al. used a numeric parameter-based interface for controlling UAVs

• Quigley et al. also used voice-control interaction

• Natural User Interfaces

• Gesture-based interaction for collocated ground robots (Rogalla et al. 2002)

• Gesture-based interaction for collocated drones (Ng et al. 2011)

Microsoft Kinect

Source: Microsoft Corp. (2014)

New Approach: Using Motion-Sensing Input Devices

A Kinect-Based Natural Interface for Quadrotor Control (Sanna et al. 2012)

Challenges of Using Body-Part Movements

• Not very comfortable

• Not well received by pilots

• Very hard to sustain over long periods

Thesis Hypothesis

• Increasing piloting efficiency

• Making piloting a more enjoyable experience overall

Core Experiment

Implemented gesture-based interface vs. conventional multi-touch methods

Comparative Analysis

• Sample: a subset of students

• First, participants were asked to complete a very simple mission along a specific route using the multi-touch approach

• Second, we asked them to pilot the drone along the same route using the implemented approach

• Finally, we asked them to fill out a questionnaire rating each approach

• The trainer also examined the accuracy of landings after each flight
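The landing-accuracy check can also be scored numerically. The following is a minimal Python sketch, not the procedure used in the study: it assumes each touchdown point and the target pad are recorded as (x, y) coordinates in the same unit, and the names `landing_error` and `mean_error_by_approach` are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # assumed (x, y) floor position, e.g. in cm


def landing_error(touchdown: Point, target: Point) -> float:
    """Straight-line distance between where the drone landed and the target pad."""
    return math.dist(touchdown, target)


def mean_error_by_approach(flights: Dict[str, List[Point]], target: Point) -> Dict[str, float]:
    """Average landing error per control approach (e.g. multi-touch vs. gesture)."""
    return {
        approach: mean(landing_error(p, target) for p in touchdowns)
        for approach, touchdowns in flights.items()
    }
```

Lower values indicate more accurate landings, so the two approaches can be compared on the same scale.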

What needs to be done?

• Controller for sending commands to the drone

• Gesture recognition system

• Interface between the gesture system and the drone controller
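The three components fit together as a simple loop: recognize a gesture, map it to a drone command, and send the command. The sketch below is a hypothetical Python illustration of that interfacing step. The gesture labels, the `min_score` threshold, and the choice to fall back to hovering on an unknown or low-confidence gesture are assumptions, not details taken from the thesis.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical gesture labels mapped to high-level drone commands.
GESTURE_TO_COMMAND = {
    "palm_up": "ascend",
    "palm_down": "descend",
    "swipe_left": "yaw_left",
    "swipe_right": "yaw_right",
    "push": "move_forward",
    "pull": "move_backward",
}


@dataclass
class GestureBridge:
    # Gesture recognizer: takes sensor samples, returns (label, confidence in [0, 1]).
    recognize: Callable[[List], Tuple[str, float]]
    # Drone controller: sends one high-level command to the drone.
    send: Callable[[str], None]
    min_score: float = 0.8  # assumed confidence threshold

    def step(self, samples: List) -> str:
        """Recognize one gesture and forward the matching command to the drone."""
        label, score = self.recognize(samples)
        command = GESTURE_TO_COMMAND.get(label)
        if command is None or score < self.min_score:
            command = "hover"  # assumed safe default: hold position when unsure
        self.send(command)
        return command
```

Keeping the recognizer and the controller behind plain callables lets either side be swapped (for example, a different sensor or a simulated drone) without touching the mapping.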

Drone Controller

• Parrot Bebop Drone, released in December 2014

• Documentation for the new API: extremely weak

• A high-level wrapper was created around the C API

• https://github.com/gvsucis/c-bebop-drone

Implemented Functions

• ARDrone3SendSettingsAllSettings

• ARDrone3SendCommonAllStates

• ARDrone3SendTakeoffCommand

• ARDrone3SendLandCommenad

• ARDrone3SendPCMD

• ARDrone3SendSendSpeedSettingsHullProtection

• ARDrone3SendPilotingFlatTrim

• ARDrone3SendYawRightCommand

• ARDrone3SendYawLeftCommand

• ARDrone3SendAscendCommand

• ARDrone3SendDescendCommand

• ARDrone3SendHoverCommand

• ARDrone3SendMoveForwardCommand

• ARDrone3SendMoveBackwardCommand
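Several of the movement functions above can be expressed through the single PCMD (piloting command) message. The following Python sketch assumes, as in Parrot's ARSDK3 piloting command, that PCMD carries a flag plus roll, pitch, yaw, and gaz (vertical speed) values given as signed percentages in [-100, 100]. The sign conventions and the `pcmd_for` helper are assumptions for illustration, not the wrapper's actual code.

```python
from typing import NamedTuple


class PCMD(NamedTuple):
    flag: int   # assumed: 1 = apply roll/pitch, 0 = ignore them
    roll: int   # assumed: positive = right
    pitch: int  # assumed: positive = forward
    yaw: int    # assumed: positive = clockwise (right)
    gaz: int    # assumed: positive = up


def clamp(value: int, low: int, high: int) -> int:
    return max(low, min(high, value))


def pcmd_for(command: str, speed: int = 50) -> PCMD:
    """Translate a high-level command into PCMD percentages (hypothetical helper)."""
    s = clamp(speed, 0, 100)
    table = {
        "hover": PCMD(0, 0, 0, 0, 0),
        "move_forward": PCMD(1, 0, s, 0, 0),
        "move_backward": PCMD(1, 0, -s, 0, 0),
        "yaw_left": PCMD(0, 0, 0, -s, 0),
        "yaw_right": PCMD(0, 0, 0, s, 0),
        "ascend": PCMD(0, 0, 0, 0, s),
        "descend": PCMD(0, 0, 0, 0, -s),
    }
    if command not in table:
        raise ValueError(f"unknown command: {command}")
    return table[command]
```

Under these assumptions, functions such as ARDrone3SendMoveForwardCommand or ARDrone3SendHoverCommand would each reduce to one PCMD call with different values, and clamping keeps any requested speed inside the assumed valid range.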

Gesture Recognition System

• Q: Which implementation method would be better?

(“Better” here means general and accurate.)

• A: Machine Learning

Gesture Recognizer

• Method in use: Supervised Machine Learning

• Steps:

• Sample Collection And Tagging

• Machine Learning Recognizer Code
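Sample collection and tagging amounts to storing each recorded gesture together with its label so the recognizer can later use it as a training template. A minimal Python sketch, assuming each sample is a list of (x, y) points; the `TemplateStore` class and its JSON format are hypothetical.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class TemplateStore:
    # label -> list of recorded samples, each a list of points
    samples: Dict[str, List[List[Point]]] = field(default_factory=lambda: defaultdict(list))

    def tag(self, label: str, points: Sequence[Sequence[float]]) -> None:
        """Store one recorded gesture under the given label."""
        if len(points) < 2:
            raise ValueError("a gesture sample needs at least two points")
        self.samples[label].append([(float(x), float(y)) for x, y in points])

    def counts(self) -> Dict[str, int]:
        """Number of tagged samples per label, useful for spotting gaps."""
        return {label: len(strokes) for label, strokes in self.samples.items()}

    def dumps(self) -> str:
        """Serialize all tagged samples to JSON."""
        return json.dumps(self.samples)

    @classmethod
    def loads(cls, text: str) -> "TemplateStore":
        """Rebuild a store from JSON produced by dumps()."""
        store = cls()
        for label, strokes in json.loads(text).items():
            for points in strokes:
                store.tag(label, points)
        return store
```

Checking `counts()` before training is a quick way to see whether any gesture has too few examples.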

Every ML algorithm needs some features

• Features are extracted from the data

• Features used in this application:

• Fingertip positions

• Palm-center position

• Velocities
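As an illustration of these features, the sketch below builds one feature vector from two consecutive sensor frames: the palm-center position, the fingertip positions, and the palm velocity estimated by a finite difference. It is a hypothetical Python sketch. The `HandFrame` layout (timestamps in seconds, positions in millimetres) is an assumption, and a sensor SDK may already report velocities directly.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    t: float          # timestamp in seconds (assumed)
    palm: Vec3        # palm-center position, e.g. in mm (assumed)
    tips: List[Vec3]  # fingertip positions, same units


def velocity(a: Vec3, b: Vec3, dt: float) -> Vec3:
    """Finite-difference velocity between two positions dt seconds apart."""
    return tuple((bi - ai) / dt for ai, bi in zip(a, b))


def features(prev: HandFrame, cur: HandFrame) -> List[float]:
    """Flatten palm position, fingertip positions and palm velocity into one vector."""
    dt = cur.t - prev.t
    if dt <= 0:
        raise ValueError("frames must be in increasing time order")
    vec: List[float] = list(cur.palm)
    for tip in cur.tips:
        vec.extend(tip)
    vec.extend(velocity(prev.palm, cur.palm, dt))
    return vec
```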

With a large enough data set, all these methods converge

Domingos, Pedro. "A few useful things to know about machine learning." Communications of the ACM 55.10 (2012): 78-87.

For this app, we chose $P

• Why?

• We did not have much training data

• $P is optimized for gesture recognition

$P Point-Cloud Recognizer: a 2-D gesture recognizer designed for rapid prototyping of gesture-based user interfaces
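The $P recognizer (Vatavu, Anthony & Wobbrock, 2012) treats a gesture as an unordered cloud of points, which makes it insensitive to stroke direction and order and lets it work from only a few templates per gesture. The Python sketch below follows the published $P steps (resample to n = 32 points, scale uniformly, translate to the centroid, then greedy cloud matching with ε = 0.5) but simplifies input to a single stroke. It assumes hand positions from the sensor have already been projected to 2-D (x, y), and it is an illustration, not the recognizer code used in the thesis.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def resample(points: Sequence[Point], n: int) -> List[Point]:
    """Resample the stroke into n points evenly spaced along its path."""
    total = sum(math.dist(points[i - 1], points[i]) for i in range(1, len(points)))
    interval = total / (n - 1)
    pts = list(points)
    out = [pts[0]]
    acc = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # floating-point rounding can drop the final point
        out.append(pts[-1])
    return out[:n]


def normalize(points: Sequence[Point], n: int) -> List[Point]:
    """Resample, scale uniformly into a unit box, then translate to the centroid."""
    pts = resample(points, n)
    min_x = min(p[0] for p in pts)
    min_y = min(p[1] for p in pts)
    size = max(max(p[0] for p in pts) - min_x, max(p[1] for p in pts) - min_y) or 1.0
    scaled = [((x - min_x) / size, (y - min_y) / size) for x, y in pts]
    cx = sum(p[0] for p in scaled) / n
    cy = sum(p[1] for p in scaled) / n
    return [(x - cx, y - cy) for x, y in scaled]


def cloud_distance(pts: List[Point], tpl: List[Point], start: int) -> float:
    """Greedily match each point (from `start`) to its nearest unmatched template point."""
    n = len(pts)
    matched = [False] * n
    total = 0.0
    for k in range(n):
        i = (start + k) % n
        best_d, best_j = math.inf, -1
        for j in range(n):
            if not matched[j]:
                d = math.dist(pts[i], tpl[j])
                if d < best_d:
                    best_d, best_j = d, j
        matched[best_j] = True
        total += (1 - k / n) * best_d  # earlier matches carry more weight
    return total


def greedy_cloud_match(pts: List[Point], tpl: List[Point], eps: float = 0.5) -> float:
    """Try several starting points in both directions and keep the smallest distance."""
    n = len(pts)
    step = max(1, math.floor(n ** (1 - eps)))
    best = math.inf
    for start in range(0, n, step):
        best = min(best, cloud_distance(pts, tpl, start), cloud_distance(tpl, pts, start))
    return best


class PDollarRecognizer:
    def __init__(self, n: int = 32):
        self.n = n
        self.templates: List[Tuple[str, List[Point]]] = []

    def add_template(self, label: str, points: Sequence[Point]) -> None:
        self.templates.append((label, normalize(points, self.n)))

    def recognize(self, points: Sequence[Point]) -> Tuple[Optional[str], float]:
        """Return (best label, distance); a smaller distance is a better match."""
        candidate = normalize(points, self.n)
        best_label, best_d = None, math.inf
        for label, tpl in self.templates:
            d = greedy_cloud_match(candidate, tpl)
            if d < best_d:
                best_label, best_d = label, d
        return best_label, best_d
```

`recognize` returns the best label together with its cloud distance; thresholding that distance is one way to reject gestures that match no template well.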

Why?

• The gesture controller involves nondeterministic factors:

• Quality of the sensor

• Quality of the data gathered during the training phase

• Lighting conditions of the environment during the test

• Suitability of the algorithm used for training

Questions Raised During Research

• How should we handle combinatorial commands?

• How can we make gesture recognition 100% accurate and more robust?

• How can we define more intuitive gestures?

Future Work

• Use another kind of sensor for gathering data

• Run image-processing algorithms instead of relying on the API provided by Leap Motion

• Build a large data set instead of using hand gestures from just two people

• Consider multimodal controls