Statistical Data Analysis 2011/2012 M. de Gunst Lecture 3.

Statistical Data Analysis: Introduction

Topics
- Summarizing data
- Exploring distributions (continued)
- Bootstrap (first part)
- Robust methods
- Nonparametric tests
- Analysis of categorical data
- Multiple linear regression

Today’s topics:
- Exploring distributions (Chapter 3: 3.5.2, 3.5.3)
- Bootstrap (Chapter 4: 4.1, 4.2)

Exploring distributions
3.5. Tests for goodness of fit
  3.5.1. Shapiro-Wilk test for the normal distribution (last week)
  3.5.2. Kolmogorov-Smirnov test for a general distribution
  3.5.3. Chi-square test for goodness of fit for a general distribution

Bootstrap
4.1. Simulation (read yourself)
4.2. Bootstrap estimators for a distribution
  - Parametric bootstrap estimators
  - Empirical bootstrap estimators

3.5. Exploring distributions: reminder

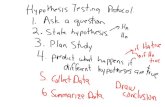

Testing

Ingredients of a test:
1. Hypotheses H0 and H1
2. Test statistic T
3. Distribution of T under H0, and knowledge of how it is changed/shifted under H1
4. Rule for when H0 will be rejected: a rejection rule based either on a critical region or on a p-value

How to perform a test?
1. Describe the above
2. Choose a significance level α
3. Calculate and report the value t of T
4. Report whether t is in the critical region, or whether the p-value < α
5. Formulate the conclusion of the test: “H0 rejected” or “H0 not rejected”
6. If possible, translate the conclusion to the practical context

NB. When asked to perform a test, you have to do all 6 steps!

Tests for goodness of fit: for (one) general distribution

Situation: $x_1, \dots, x_n$ independent realizations from an unknown distribution $F$

Now: $H_0: F = F_0$, with $F_0$ one specific distribution, against $H_1: F \neq F_0$

Which statistic gives information about the distribution $F$?

3.5.2. Kolmogorov-Smirnov test (1)

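$x_1, \dots, x_n$ independent realizations from an unknown distribution $F$

Idea: use the empirical distribution function $\hat F_n(x) = \frac{1}{n}\#\{i : X_i \le x\}$

This makes sense: $n\hat F_n(x) = \#\{i : X_i \le x\}$ is a random variable with $n\hat F_n(x) \sim \mathrm{binom}(n, F(x))$,

so that for $n \to \infty$, $\hat F_n(x) \to F(x)$ (law of large numbers).

Then also, under H0, for $n \to \infty$, $\hat F_n(x) \to F_0(x)$.

Base the test on the distance between $\hat F_n$ and $F_0$.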
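A small simulation can illustrate the binomial argument. This is a sketch under an assumed setup: the data come from N(0,1), and the point x0 = 0.5, the sample size n = 50 and the number of repetitions are hypothetical choices. It uses base R's `ecdf()`:

```r
# Sketch: the empirical distribution function at a fixed point x0.
# Assumption (hypothetical setup): data simulated from N(0, 1), so F(x0) = pnorm(x0).
set.seed(1)
x0 <- 0.5
n_Fn_at_x0 <- function(n) {
  x <- rnorm(n)
  n * ecdf(x)(x0)            # n * Fn(x0) = number of observations <= x0
}

n <- 50
p <- pnorm(x0)
counts <- replicate(5000, n_Fn_at_x0(n))
c(simulated = mean(counts), binomial = n * p)            # means agree
c(simulated = var(counts), binomial = n * p * (1 - p))   # variances agree

# Fn(x0) - F(x0) for growing sample sizes: shrinks towards 0
sapply(c(10, 100, 10000), function(m) n_Fn_at_x0(m) / m - p)
```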

Kolmogorov-Smirnov test (2)

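Test statistic: $D_n = \sup_x |\hat F_n(x) - F_0(x)|$

Distribution of Dn under H0: the same for all continuous F0, i.e. Dn is distribution free over the class of continuous distribution functions. Hence the K-S test is a nonparametric test.

Because: if F0 is continuous, then under H0 the variables $U_i = F_0(X_i)$ are independent Unif(0,1), and $D_n = \sup_{0 \le u \le 1} |\hat G_n(u) - u|$, with $\hat G_n$ the empirical distribution function of $U_1, \dots, U_n$. This does not depend on F0.

When is H0 rejected? For large values of Dn.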
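As an illustration, a minimal sketch that computes Dn directly from the order statistics and compares it with `ks.test()`, then simulates Dn under two different continuous F0 to show that its null distribution is the same. The helper name `ks_stat` and the sample sizes are hypothetical choices:

```r
# Sketch: compute D_n = sup_x |Fn(x) - F0(x)| by hand and compare with ks.test().
# For continuous F0 the supremum is attained at (or just before) a data point,
# so it suffices to check i/n - F0(x_(i)) and F0(x_(i)) - (i-1)/n.
ks_stat <- function(x, F0, ...) {
  n <- length(x)
  u <- F0(sort(x), ...)        # F0 evaluated at the order statistics
  i <- seq_len(n)
  max(i / n - u, u - (i - 1) / n)
}

set.seed(2)
x <- rnorm(40)
d_hand <- ks_stat(x, pnorm)
d_R <- unname(ks.test(x, pnorm)$statistic)
c(d_hand, d_R)                 # the two values agree

# Distribution-freeness: simulated null distributions of D_n for two different
# continuous F0 (normal and exponential) look the same.
d_norm <- replicate(2000, ks_stat(rnorm(40), pnorm))
d_exp  <- replicate(2000, ks_stat(rexp(40), pexp))
quantile(d_norm, c(0.5, 0.95))
quantile(d_exp,  c(0.5, 0.95))
```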

Kolmogorov-Smirnov test (3)

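Test statistic: $D_n = \sup_x |\hat F_n(x) - F_0(x)|$

p-values are obtained from tables or a computer package.

Note: the standard K-S test with these p-values is not suitable for a composite H0. Then use an adjusted K-S test with adjusted p-values.

Example: for “H0: F is normal”, the adjusted test statistic for the K-S test is
$D_n^{adj} = \sup_x \left|\hat F_n(x) - \Phi\!\left(\frac{x - \bar X}{S}\right)\right|$,
with $\Phi$ the N(0,1) distribution function, $\bar X$ the sample mean and $S$ the sample standard deviation.

What is the difference with $D_n$? The parameters of the normal distribution are now estimated from the same data, so $\bar X$ and $S$ are random as well: additional stochasticity! Hence the distribution of $D_n^{adj}$ under H0 differs from that of $D_n$.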
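A minimal sketch of $D_n^{adj}$ in R; the helper `d_adj` and the simulated data `y` are hypothetical. It also shows why the null distribution of $D_n^{adj}$ is the same for every normal distribution: the statistic does not change under a linear transformation of the data.

```r
# Sketch: D_n^adj for "H0: F is normal", with mean and sd estimated from the data.
d_adj <- function(x) {
  z <- (x - mean(x)) / sd(x)             # standardize with the estimated parameters
  unname(ks.test(z, pnorm)$statistic)    # sup-distance to the N(0,1) cdf
}

set.seed(3)
y <- rnorm(50, mean = 4, sd = 3)         # hypothetical data
d_adj(y)
# Same value as plugging the estimates into ks.test (only the p-value is then wrong)
unname(ks.test(y, pnorm, mean = mean(y), sd = sd(y))$statistic)
# D_n^adj is unchanged under x -> a + b*x (b > 0), so its null
# distribution is the same for every normal distribution
d_adj(10 + 2 * y)
```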

Kolmogorov-Smirnov test (4)

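Example

Data: x
H0: F = N(0,1)   H1: F ≠ N(0,1)

Test statistic: $D_n = \sup_x |\hat F_n(x) - \Phi(x)|$

R:
> ks.test(x, pnorm)

        One-sample Kolmogorov-Smirnov test

data:  x
D = 0.1163, p-value = 0.4735
alternative hypothesis: two-sided

H0 rejected? No: the p-value 0.4735 is larger than any usual significance level (e.g. α = 0.05), so H0 is not rejected.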

Kolmogorov-Smirnov test (5)

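Example

Data: y
H0: F is normal ← composite null hypothesis
H1: F is not normal
Test statistic: $D_n^{adj} = \sup_x \left|\hat F_n(x) - \Phi\!\left(\frac{x - \bar X}{S}\right)\right|$

R:
> ks.test(y, pnorm)
D = 0.6922, p-value = 6.661e-16
Correct? Incorrect: this is a test for H0: F = N(0,1) against H1: F ≠ N(0,1).

> ks.test(y, pnorm, mean=mean(y), sd=sd(y))
D = 0.1081, p-value = 0.5655
> mean(y)
[1] 3.62158
> sd(y)
[1] 3.043356
Correct? Incorrect: this is a test for H0: F = N(3.62158, 3.043356²) against H1: F ≠ N(3.62158, 3.043356²).
The value D = 0.1081 equals $D_n^{adj}$, but the p-value is computed as if the mean and variance were known: the distribution of $D_n^{adj}$ has not been used! The p-value should be 0.126 (next week).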
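One way to obtain a p-value for $D_n^{adj}$ is to simulate its null distribution. The sketch below assumes plain Monte Carlo simulation (not necessarily the approach of next week's lecture); the helper names and the data are hypothetical. Because $D_n^{adj}$ is location-scale invariant, samples from N(0,1) of the same size suffice.

```r
# Sketch (assumption: plain Monte Carlo, not necessarily next week's method).
# D_n^adj computed from the order statistics of the standardized data.
d_adj <- function(x) {
  n <- length(x)
  u <- pnorm(sort((x - mean(x)) / sd(x)))
  i <- seq_len(n)
  max(i / n - u, u - (i - 1) / n)
}

mc_pvalue_d_adj <- function(y, B = 2000) {
  d_obs <- d_adj(y)
  d_null <- replicate(B, d_adj(rnorm(length(y))))  # null distribution of D_n^adj
  mean(d_null >= d_obs)                            # fraction at least as large as observed
}

set.seed(4)
y <- rnorm(50, mean = 4, sd = 3)          # hypothetical data, normal by construction
mc_pvalue_d_adj(y)
# The naive p-value from ks.test with plugged-in estimates is typically larger
ks.test(y, pnorm, mean = mean(y), sd = sd(y))$p.value
```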

3.5.3. Chi-square test for goodness of fit (1)

$x_1, \dots, x_n$ independent realizations from an unknown distribution $F$

Idea: use the empirical distribution in a different way: divide the real line into intervals $I_1, \dots, I_k$ and compare the number of observations in each interval with the expected number in that interval under H0.

$N_i$ = number of observations in $I_i$

$p_i$ = probability that an observation falls in $I_i$ under $F_0$

Then $np_i$ = expected number of observations in $I_i$ under H0

Test statistic: $X^2 = \sum_{i=1}^{k} \frac{(N_i - np_i)^2}{np_i}$
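For concreteness, a sketch that computes $X^2$ by hand and checks it against base R's `chisq.test()` with given probabilities `p`. H0: F = N(0,1), the simulated data and the four intervals are hypothetical choices:

```r
# Sketch: chi-square goodness-of-fit statistic by hand and with chisq.test().
set.seed(5)
x <- rnorm(100)                                   # hypothetical data
breaks <- c(-Inf, -1, 0, 1, Inf)                  # intervals I_1, ..., I_k
N <- table(cut(x, breaks))                        # observed counts N_i
p <- diff(pnorm(breaks))                          # p_i under F0 = N(0,1)
n <- length(x)
X2 <- sum((N - n * p)^2 / (n * p))
X2
unname(chisq.test(as.vector(N), p = p)$statistic) # same value
```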

Chi-square test for goodness of fit (2)

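Test statistic: $X^2 = \sum_{i=1}^{k} \frac{(N_i - np_i)^2}{np_i}$

Distribution of $X^2$ under H0: different for different F0, but for $n \to \infty$ the distribution of $X^2$ under H0 tends to the chi-square distribution with k−1 degrees of freedom, which is the same for all F0.

For large enough n, $X^2$ is (approximately) distribution free, so the chi-square test is a nonparametric test.

When is H0 rejected? For large values of $X^2$.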
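The chi-square approximation can be checked by simulation. In this sketch F0 = Exp(1), k = 5 equiprobable intervals and n = 200 are assumptions for illustration, and `chisq_stat` is a hypothetical helper; the p-value uses `pchisq(..., lower.tail = FALSE)`:

```r
# Sketch: under H0 and for large n, X^2 is approximately chi-square with k - 1 df.
chisq_stat <- function(x, breaks, F0) {
  N <- table(cut(x, breaks))                      # observed counts
  np <- length(x) * diff(F0(breaks))              # expected counts under F0
  sum((N - np)^2 / np)
}

k <- 5
breaks <- qexp(seq(0, 1, length.out = k + 1))     # 0, ..., Inf
set.seed(6)
X2_null <- replicate(5000, chisq_stat(rexp(200), breaks, pexp))
mean(X2_null)                                     # close to k - 1 = 4
mean(X2_null > qchisq(0.95, df = k - 1))          # close to 0.05

# p-value for one observed value: P(chi^2_{k-1} >= X2_obs)
X2_obs <- chisq_stat(rexp(200), breaks, pexp)
pchisq(X2_obs, df = k - 1, lower.tail = FALSE)
```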

Chi-square test for goodness of fit (3)

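Test statistic: $X^2 = \sum_{i=1}^{k} \frac{(N_i - np_i)^2}{np_i}$

How to choose the intervals $I_1, \dots, I_k$?

How many? More is better, but not too many. Rule of thumb: at least 5 observations expected in each interval under H0, i.e. $np_i \ge 5$ for all i.

Where? About the same number of observations expected in each interval under H0.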
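One way to follow both rules is to take the interval endpoints at quantiles of F0, so that each $p_i = 1/k$. This sketch assumes F0 = N(4, 9), as in the N(4,9) example of this section, and takes the largest k with n/k ≥ 5 (a hypothetical choice; fewer intervals are also allowed). The helper `equiprob_breaks` is hypothetical:

```r
# Sketch: intervals with equal expected counts under H0, respecting the rule of thumb.
equiprob_breaks <- function(n, qF0, ...) {
  k <- max(2, floor(n / 5))                       # at least 5 expected per interval
  qF0(seq(0, 1, length.out = k + 1), ...)         # quantiles of F0: each p_i = 1/k
}

n <- 50
b <- equiprob_breaks(n, qnorm, mean = 4, sd = 3)
length(b) - 1                                     # number of intervals k
n * diff(pnorm(b, mean = 4, sd = 3))              # expected counts n * p_i
```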

Chi-square test for goodness of fit (4)

Example

Data: y
H0: F = N(4, 9)   H1: F ≠ N(4, 9)

Test statistic: $X^2$

R:
> chisquare(y, pnorm, k=8, lb=0, ub=16, mean=4, sd=3)
$chisquare
[1] 13.11222
$pr
[1] 0.06942085
$N
  (0,2]   (2,4]   (4,6]   (6,8]  (8,10] (10,12] (12,14] (14,16]
     14      13       9       4       5       0       1       0
$np
[1]  8 12 12  8  3  0  0  0
# Expected numbers under H0 do not satisfy the rule of thumb.
# Better: choose a suitable vector b of breaks.
> chisquare(y, pnorm, breaks=b, mean=4, sd=3)

Chi-square test for goodness of fit (5)

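Test statistic: $X^2 = \sum_{i=1}^{k} \frac{(N_i - np_i)^2}{np_i}$

Under H0 (for large n): $X^2$ approximately $\chi^2_{k-1}$

The standard chi-square test is not suitable for a composite H0. Then use an adjusted chi-square test with an adjusted chi-square distribution.

Example: for “H0: F is normal”, the adjusted chi-square test statistic is
$X^2_{adj} = \sum_{i=1}^{k} \frac{(N_i - n\hat p_i)^2}{n\hat p_i}$, with $\hat p_i$ the probability of $I_i$ under $N(\hat\mu, \hat\sigma^2)$ and $\hat\mu, \hat\sigma^2$ estimated from the data.

Under H0: $X^2_{adj}$ approximately $\chi^2_{k-m-1}$, with m the number of estimated parameters (here m = 2), but only for one specific type of estimators.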
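A sketch of the adjusted test for normality with k intervals that are equiprobable under the fitted normal. Assumptions: μ and σ are estimated by `mean(y)` and `sd(y)`, the data are simulated, and the function name `adj_chisq_normal` is hypothetical. Since the $\chi^2_{k-m-1}$ distribution holds only for one specific type of estimators, the p-value here should be read as an approximation:

```r
# Sketch: adjusted chi-square test for "H0: F is normal" with m = 2 estimated parameters.
# Caution: chi-square with k - m - 1 df is exact only for one specific type of
# estimators; with mean(y) and sd(y) the p-value is an approximation.
adj_chisq_normal <- function(y, k) {
  mu_hat <- mean(y)
  sd_hat <- sd(y)
  breaks <- qnorm(seq(0, 1, length.out = k + 1), mu_hat, sd_hat)
  N <- table(cut(y, breaks))                          # observed counts
  np_hat <- length(y) * diff(pnorm(breaks, mu_hat, sd_hat))
  X2_adj <- sum((N - np_hat)^2 / np_hat)
  m <- 2
  c(X2_adj = X2_adj,
    df = k - m - 1,
    p_value = pchisq(X2_adj, df = k - m - 1, lower.tail = FALSE))
}

set.seed(7)
y <- rnorm(100, mean = 4, sd = 3)                     # hypothetical data
adj_chisq_normal(y, k = 10)
```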

Recap

Exploring distributions
3.5. Tests for goodness of fit
  3.5.2. Kolmogorov-Smirnov test for a general distribution
  3.5.3. Chi-square test for goodness of fit for a general distribution

4. Bootstrap

Bootstrap: Introduction (1)

Data: 59 melting temperatures of beeswax

P: unknown true underlying distribution of the beeswax data
Estimator of the location of P? Tn = (sample) Mean
Estimate of the location of P? tn = mean(beewax) = 63.589

How accurate is the estimate? How good is the estimator? What is the distribution of Tn? Broad or narrow?

Main question: how to estimate the unknown distribution of the estimator Tn?
Notation: QP for the distribution of Tn when the observations come from P

Example

R:
> beewax
 [1] 63.78 63.34 63.36 63.51 ....
> mean(beewax)
[1] 63.58881
> sd(beewax)
[1] 0.3472209
> var(beewax)
[1] 0.1205624

Bootstrap: Introduction (2) (continued)

Simple case: assume P = N(μ, σ²); Tn = (sample) Mean

What is the distribution QP of Tn? We estimate it by N(63.589, 0.121/59).

How did we find this?

i) Estimator of P: N((sample) Mean, (sample) Variance)
ii) Estimate: N(63.589, 0.121)
iii) QP is the distribution of the Mean of 59 independent observations from P, i.e. N(μ, σ²/59)
iv) Estimator of QP: N((sample) Mean, (sample) Variance/59)
v) Estimate of QP: N(63.589, 0.121/59)

Example

R:
> beewax
 [1] 63.78 63.34 63.36 63.51 ....
> mean(beewax)
[1] 63.58881
> sd(beewax)
[1] 0.3472209
> var(beewax)
[1] 0.1205624
> length(beewax)
[1] 59
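Steps i) to v) can be written out in a few lines of R. A sketch, assuming only that `x` is a numeric data vector; the function name is hypothetical, and simulated stand-in data are used because the full beeswax data are not listed here:

```r
# Sketch of steps i)-v) under the normality assumption, for Tn = sample mean.
# The estimate of QP is N(mean(x), var(x) / n).
normal_estimate_QP_mean <- function(x) {
  n <- length(x)
  c(mean = mean(x), variance = var(x) / n)
}

set.seed(8)
x <- rnorm(59, mean = 63.589, sd = sqrt(0.121))  # stand-in data, n = 59
normal_estimate_QP_mean(x)
```

Applied to the beeswax data, the variance entry would be 0.1205624/59 ≈ 0.00204.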

Bootstrap: Introduction (3) (continued)

Other case: assume P = N(μ, σ²); now Tn = (sample) Median. What is the distribution QP of Tn?

How to proceed now?
i) Estimator of P: N((sample) Mean, (sample) Variance)
ii) Estimate: N(63.589, 0.121)
iii) QP is the distribution of the Median of 59 independent observations from P
iv) Estimator of QP: ?
v) Estimate of QP: ?

This is what the bootstrap is about: estimate the distribution QP of a function Tn of 59 independent observations from an unknown P.

Example

Bootstrap: Introduction (4) (continued)

Again another case: no assumption about P; Tn = (sample) Mean. What is the distribution QP of Tn?

How to proceed now?
i) Estimator of P: ?
ii) Estimate: ?
iii) QP is the distribution of the Mean of 59 independent observations from P
iv) Estimator of QP: ?
v) Estimate of QP: ?

Example

4.2. Bootstrap estimators for a distribution

This is what the bootstrap is about: estimate the distribution QP of a function Tn of n independent observations from an unknown P.

Situation: $x_1, \dots, x_n$ realizations of $X_1, \dots, X_n$, independent, with unknown distribution P

Goal: estimate the distribution QP of the estimator $T_n = T(X_1, \dots, X_n)$

Cases
1. Assume P is some parametric distribution with unknown parameters
2. Assume nothing about P

Bootstrap estimators for a distribution; Case 1: parametric bootstrap estimator (1)

(Beeswax; case 1)

Example

Case 1: assume P = N(μ, σ²); Tn = (sample) Median. What is the distribution QP of Tn?

How to proceed?
i) Estimator of P: $N(\bar X, S^2) = P_{\hat\theta_n}$
ii) Estimate: N(63.589, 0.121)
iii) QP is the distribution of the Median of 59 independent observations from P
iv) Estimator of QP: distribution of the Median of 59 independent observations from $N(\bar X, S^2) = P_{\hat\theta_n}$
v) Estimate of QP: distribution of the Median of 59 independent observations from N(63.589, 0.121)

This distribution is unknown: use the computer to generate realizations from the estimate of QP.

The empirical distribution of the generated set is the parametric bootstrap estimate of QP.

Which distribution is this?

Bootstrap estimators for a distribution; Case 1: parametric bootstrap estimator (2)

(Continued; case 1)

How to generate realizations from the estimate of QP, i.e. from the distribution of the Median of 59 independent observations from N(63.589, 0.121)?

# 1. Generate one bootstrap sample:
> xstar = rnorm(59, 63.589, sqrt(0.121))
# Check:
> xstar
 [1] 63.84819 62.88915 63.71705 64.06793 .....
[57] 63.56481 64.03403 63.75276
# Note: xstar is of the same length as beewax

# 2. Now compute one bootstrap value tstar from xstar:
> tstar = median(xstar)
> tstar
[1] 63.70498

# 3. Do 1 and 2 B times. The B values tstar are generated realizations from the estimate of QP.

Example
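Step 3 can be written with `replicate()`. A sketch, assuming B = 1000 and the parameter estimates N(63.589, 0.121) from the beeswax example; the function name `param_boot_median` is hypothetical:

```r
# Sketch of step 3: repeat steps 1 and 2 B times (parametric bootstrap for the median).
param_boot_median <- function(n, mean, var, B = 1000) {
  replicate(B, {
    xstar <- rnorm(n, mean, sqrt(var))   # one bootstrap sample from the estimate of P
    median(xstar)                        # one bootstrap value tstar
  })
}

set.seed(9)
tstar <- param_boot_median(59, 63.589, 0.121, B = 1000)
var(tstar)                        # parametric bootstrap estimate of the variance of QP
quantile(tstar, c(0.025, 0.975))  # summary of the parametric bootstrap estimate of QP
```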

Bootstrap estimators for a distribution; Case 1: parametric bootstrap estimator (3)

(Continued; case 1)

The B values tstar are generated realizations from the estimate of QP, i.e. from the distribution of the Median of 59 independent observations from N(63.589, 0.121).

Recall: the empirical distribution of the generated set is the parametric bootstrap estimate of QP.

Also: the sample variance 0.0038 of the generated set is the parametric bootstrap estimate of the variance of QP.

Example

Bootstrap estimators for a distribution; Case 2: empirical bootstrap estimator (1)

(Beeswax; case 2)

Example

Case 2: assume nothing about P; Tn = (sample) Mean. What is the distribution QP of Tn?

How to proceed?
i) Estimator of P: empirical distribution of the data = $\hat P_n$
ii) Estimate: empirical distribution of the beeswax data
iii) QP is the distribution of the Mean of 59 independent observations from P
iv) Estimator of QP: distribution of the Mean of 59 independent observations from the empirical distribution of the data
v) Estimate of QP: distribution of the Mean of 59 independent observations from the empirical distribution of the beeswax data

This distribution is unknown: use the computer to generate realizations from the estimate of QP.

The empirical distribution of the generated set is the empirical bootstrap estimate of QP.

Which distribution is this?

Bootstrap estimators for a distribution; Case 2: empirical bootstrap estimator (2)

(Continued; case 2)

How to generate realizations from the estimate of QP, i.e. from the distribution of the Mean of 59 independent observations from the empirical distribution of the beeswax data?

# 1. Generate one bootstrap sample:
> xstar = sample(beewax, replace = TRUE)
# Check:
> xstar
 [1] 63.69 64.42 63.30 63.03 63.13 63.13 63.08 63.27 63.08 64.12 64.21 63.43 .....
# Note: xstar is of the same length as beewax and consists of values sampled
# (with replacement) from the set of beewax values.

# 2. Now compute one bootstrap value tstar from xstar:
> tstar = mean(xstar)
> tstar
[1] 63.60271

# 3. Do 1 and 2 B times. The B values tstar are generated realizations from the estimate of QP.

Example
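Similarly for the empirical bootstrap, a sketch of step 3 with `replicate()`, together with the normal-theory estimate var(x)/n for comparison. Assumptions: simulated stand-in data (the beeswax values are not fully listed here) and B = 1000; `emp_boot_mean` is a hypothetical name:

```r
# Sketch of step 3 for the empirical bootstrap of the mean.
emp_boot_mean <- function(x, B = 1000) {
  replicate(B, mean(sample(x, replace = TRUE)))  # resample from the data, then average
}

set.seed(10)
x <- rnorm(59, mean = 63.589, sd = sqrt(0.121))  # stand-in for beewax
tstar <- emp_boot_mean(x, B = 1000)
var(tstar)           # empirical bootstrap estimate of the variance of QP
var(x) / length(x)   # normal-theory estimate, for comparison
```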

Bootstrap estimators for a distribution; Case 2: empirical bootstrap estimator (3)

(Continued; case 2)

The B values tstar are generated realizations from the estimate of QP, i.e. from the distribution of the Mean of 59 independent observations from the empirical distribution of the beeswax data.

Recall: the empirical distribution of this generated set is the empirical bootstrap estimate of QP.

Also: the sample variance 0.00193 of this generated set is the empirical bootstrap estimate of the variance of QP.

Note: this value is comparable to the value 0.121/59 = 0.0020 of the estimate of the variance of QP under the normality assumption for P.

Example

Empirical bootstrap with R

# Can be done in one go with the local R function bootstrap:
> bootstrap = function(x, statistic, B = 100, ...) {
    # Returns a vector of B bootstrap values of the real-valued statistic.
    # statistic(x) should be an R function; further arguments of
    # statistic can be inserted at ...
    # Resampling is done from the empirical distribution of x.
    y <- numeric(B)
    for (j in seq_len(B))
      y[j] <- statistic(sample(x, replace = TRUE), ...)
    y
  }

# Compute 1000 bootstrap values tstar:
> tstarvector = bootstrap(beewax, mean, B = 1000)

Bootstrap: two errors

Recall the goal: to estimate the distribution QP of a function Tn of n independent observations from an unknown P.
Note: in the bootstrap estimation procedure two types of “errors” are made. Which ones?

Given the data:
- First error: QP is estimated by the distribution of the function Tn of n independent observations from the estimate of P,
- Second error: which in turn is estimated by the empirical distribution of B computer-generated realizations from this distribution.

How can we make these errors small?
The size of the first error depends on the quality of the estimator of P.
The size of the second error can be made small by taking B large.
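The second error can be made visible by repeating the whole bootstrap many times for one fixed data set and looking at the spread of the resulting variance estimates. A sketch under assumptions: stand-in data, the empirical bootstrap for the sample mean, and 200 repetitions per B. For the mean, the exact variance of the bootstrap distribution is known (variance of the empirical distribution divided by n), so it serves as a reference; `spread_of_estimate` is a hypothetical name:

```r
# Sketch: the simulation (second) error shrinks as B grows; the first error does not depend on B.
set.seed(11)
x <- rnorm(59, mean = 63.589, sd = sqrt(0.121))  # stand-in for beewax
n <- length(x)
# Exact variance of the mean of n draws from the empirical distribution of x
exact_boot_var <- mean((x - mean(x))^2) / n

spread_of_estimate <- function(B, reps = 200) {
  est <- replicate(reps, var(replicate(B, mean(sample(x, replace = TRUE)))))
  sd(est / exact_boot_var)                       # relative spread across repetitions
}

s <- sapply(c(50, 500), spread_of_estimate)
s                                                # decreases with B
```

The relative spread should be roughly $\sqrt{2/(B-1)}$, so going from B = 50 to B = 500 reduces it by about a factor 3.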

Recap

Bootstrap
4.1. Simulation (read yourself)
4.2. Bootstrap estimators for a distribution
  - Parametric bootstrap estimators
  - Empirical bootstrap estimators

Exploring distributions/Bootstrap

The end