
ShadowNet: A Platform for Rapid and Safe Network Evolution

Xu Chen, Z. Morley Mao, Jacobus Van der Merwe
University of Michigan; AT&T Labs - Research

Abstract

The ability to rapidly deploy new network services, service features and operational tools, without impacting existing services, is a significant challenge for all service providers. In this paper we address this problem by the introduction of a platform called ShadowNet. ShadowNet exploits the strong separation provided by modern computing and network equipment between logical functionality and physical infrastructure. It allows logical topologies of computing servers, network equipment and links to be dynamically created, and then instantiated to and managed on the physical infrastructure. ShadowNet is a sharable, programmable and composable infrastructure, consisting of carrier-grade equipment. Furthermore, it is a fully operational network that is connected to, but functionally separate from, the provider production network. By exploiting the strong separation support, ShadowNet allows multiple technology and service trials to be executed in parallel in a realistic operational setting, without impacting the production network. In this paper, we describe the ShadowNet architecture and the control framework designed for its operation and illustrate the utility of the platform. We present our prototype implementation and demonstrate the effectiveness of the platform through extensive evaluation.

1 Introduction

Effecting network change is fundamentally difficult. This is primarily due to the fact that modern networks are inherently shared and multi-service in nature, and any change to the network has the potential to negatively impact existing users and services. Historically, production quality network equipment has also been proprietary and closed in nature, thus further raising the bar to the introduction of any new network functionality. The negative impact of this state of affairs has been widely recognized as impeding innovation and evolution [23]. Indeed at a macro-level, the status quo has led to calls for a clean slate redesign of the Internet which in turn has produced efforts such as GENI [3] and FEDERICA [2].

In the work presented in this paper we recognize that at a more modest micro-level, the same fundamental problem, i.e., the fact that network change is inherently difficult, is a major operational concern for service providers. Specifically, the introduction of new services or service features typically involves long deployment cycles: configuration changes to network equipment are meticulously lab-tested before staged deployments are performed in an attempt to reduce the potential of any negative impact on existing services. The same applies to any new tools to be deployed in support of network management and operations. This is especially true as network management tools are evolving to be more sophisticated and capable of controlling network functions in an automated closed-loop fashion [25, 9, 7]. The operation of such tools depends on the actual state of the network, presenting further difficulties for testing in a lab environment due to the challenge of artificially recreating realistic network conditions in a lab setting.

In this paper we address these concerns through a platform called ShadowNet. ShadowNet is designed to be an operational trial/test network consisting of ShadowNet nodes distributed throughout the backbone of a tier-1 provider in the continental US. Each ShadowNet node is composed of a collection of carrier-grade equipment, namely routers, switches and servers. Each node is connected to the Internet as well as to other ShadowNet nodes via a (virtual) backbone.

ShadowNet provides a sharable, programmable and composable infrastructure to enable the rapid trial or deployment of new network services or service features, or evaluation of new network management tools in a realistic operational network environment. Specifically, via the Internet connectivity of each ShadowNet node, traffic from arbitrary end-points can reach ShadowNet. ShadowNet connects to and interacts with the provider backbone much like a customer network would. As such the "regular" provider backbone, just like it would protect itself from any other customers, is isolated from the testing and experimentation that take place within ShadowNet. In the first instance, ShadowNet provides the means for testing services and procedures for subsequent deployment in a (separate) production network. However, in time we anticipate ShadowNet-like functionality to be provided by the production network itself to directly enable rapid but safe service deployment.

ShadowNet has much in common with other test networks [10, 27, 22]: (i) ShadowNet utilizes virtualization and/or partitioning capabilities of equipment to enable sharing of the platform between different concurrently running trials/experiments; (ii) equipment in ShadowNet nodes is programmable to enable experimentation and the introduction of new functionality; (iii) ShadowNet allows the dynamic composition of test/trial topologies.

What makes ShadowNet unique, however, is that this functionality is provided in an operational network on carrier-grade equipment. This is critically important for our objective to provide a rapid service deployment/evaluation platform, as technology or service trials performed in ShadowNet should mimic technology used in the provider network as closely as possible. This is made possible by recent vendor capabilities that allow the partitioning of physical routers into subsets of resources that essentially provide logically separate (smaller) versions of the physical router [16].

In this paper, we describe the ShadowNet architecture and specifically the ShadowNet control framework. A distinctive aspect of the control framework is that it provides a clean separation between the physical-level equipment in the testbed and the user-level slice specifications that can be constructed "within" this physical platform. A slice, which encapsulates a service trial, is essentially a container of the service design including device connectivity and placement specification. Once instantiated, a slice also contains the physical resources allocated to the service trial. Despite this clean separation, the partitioning capabilities of the underlying hardware allow virtualized equipment to be largely indistinguishable from its physical counterparts, except that it contains fewer resources. The ShadowNet control framework provides a set of interfaces allowing users to programmatically interact with the platform to manage and manipulate their slices.

We make the following contributions in this work:

• Present a sharable, programmable, and composable network architecture which employs strong separation between user-level topologies/slices and their physical realization (§2).

• Present a network control framework that allows users to manipulate their slices and/or the physical resources contained therein with a simple interface (§3).

• Describe physical-level realizations of user-level slice specifications using carrier-grade equipment and network services/capabilities (§4).

• Present a prototype implementation (§5) and evaluation of our architecture (§6).

2 ShadowNet overview

In this paper, we present ShadowNet which serves as a platform for rapid and safe network change. The primary goal of ShadowNet is to allow the rapid composition of distributed computing and networking resources, contained in a slice, realized in carrier-grade facilities which can be utilized to introduce and/or test new services or network management tools. The ShadowNet control framework allows the network-wide resources that make up each slice to be managed either collectively or individually.

In the first instance, ShadowNet will limit new services to the set of resources allocated for that purpose, i.e., contained in a slice. This would be a sufficient solution for testing and trying out new services in a realistic environment before introducing such services into a production network. Indeed our current deployment plans espouse this approach with ShadowNet as a separate overlay facility [24] connected to a tier-1 production network. Longer term, however, we expect the base functionality provided by ShadowNet to evolve into the production network and to allow resources and functionality from different slices to be gracefully merged under the control of the ShadowNet control framework.

In the remainder of this section we first elaborate on the challenges network service providers face in effecting network changes. We describe the ShadowNet architecture and show how it can be used to realize a sophisticated service. Several experimental network platforms are compared against it, and we show that ShadowNet is unique in terms of its ability to provide realistic network testing. Finally we describe the architecture of the primary system component, namely the ShadowNet controller.

2.1 Dealing with network change

There are primarily three drivers for changes in modern service provider networks:

Growth demands: Fueled by an increase in broadband subscribers and media rich content, traffic volumes on the Internet continue to show double digit growth rates year after year. The implication of this is that service providers are required to increase link and/or equipment capacities on a regular basis, even if the network functionality essentially stays the same.

New services and technologies: Satisfying customer needs through new service offerings is essential to the survival of any network provider. "Service" here spans the range from application-level services like VoIP and IPTV, connectivity services like VPNs and IPv4/IPv6 transport, traffic management services like DDoS mitigation or content distribution networks (CDNs), to more mundane (but equally important and complicated) service features like the ability to signal routing preferences to the provider or load balancing features.

New operational tools and procedures: Increasing use of IP networks for business critical applications is leading to growing demands on operational procedures. For example, end-user applications are often very intolerant of even the smallest network disruption, leading to the deployment of methods to decrease routing convergence time in the event of network failures. Similarly, availability expectations, in turn driven by higher level business needs, make regularly planned maintenance events problematic, leading to the development of sophisticated operational methods to limit their impact.

As we have alluded to already, the main concern of any network change is that it might have an impact on existing network services, because networks are inherently shared with known and potentially unknown dependencies between components. An example would be the multi-protocol extensions to BGP to enable MPLS-VPNs or indeed any new protocol family. The change associated with rolling out a new extended BGP stack clearly has the potential to impact existing IPv4 BGP interactions, as bugs in new BGP software could negatively impact the BGP stack as a whole.

Note also that network services and service features are normally "cumulative" in the sense that once deployed and used, network services are very rarely "switched off". This means that over time the dependencies and the potential for negative impact only increase rather than diminish.

A related complication associated with any network change, especially for new services and service features, is the requirement for corresponding changes to a variety of operational support systems including: (i) configuration management systems (new services need to be configured typically across many network elements), (ii) network management systems (network elements and protocols need to be monitored and maintained), (iii) service monitoring systems (for example to ensure that network-wide service level agreements, e.g., loss, delay or video quality, are met), (iv) provisioning systems (e.g., to ensure the timely build-out of popular services). ShadowNet does not address these concerns per se. However, as described above, new operational solutions are increasingly sophisticated and automated, and ShadowNet provides the means for safely testing out such functionality in a realistic environment.

Our ultimate goal with the ShadowNet work is to develop mechanisms and network management primitives that would allow new services and operational tools to be safely deployed directly in production networks. However, as we describe next, in the work presented here we take the more modest first step of allowing such actions to be performed in an operational network that is separate from the production network, which is an important transitional step.

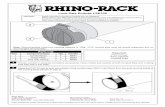

2.2 ShadowNet architecture

Different viewpoints of the ShadowNet network architecture are shown in Figures 1(a) and (b). Figure 1(a) shows the topology from the viewpoint of the tier-1

(Note that modern routers are also programmable in both control and data planes [18].) Logical interfaces can be multiplexed from one physical interface via configuration and then assigned to different logical routers. We also take advantage of virtual machine technology to manage server resources [5]. This technology enables multiple operating systems to run simultaneously on the same physical machine and is already heavily used in cloud computing and data-center environments. To facilitate sharing connectivity, the physical devices in each ShadowNet node are connected via a configurable switching layer, which shares the local connectivity, for example using VLANs. The carrier-supporting-carrier capabilities enabled by MPLS virtual private networks (VPNs) [11, 15] offer strong isolation and are therefore an ideal choice to create the ShadowNet backbone.

As depicted in Figure 2, central to ShadowNet functionality is the ShadowNet Controller. The controller facilitates the specification and instantiation of a service trial in the form of a slice owned by a user. It provides an application programming interface (API) to ShadowNet users, allowing them to create the topological setup of the intended service trial or deployment. Alternatively users can access ShadowNet through a Web-based portal, which in turn will interact with the ShadowNet Controller via the user-level API. The ShadowNet Controller keeps track of the physical devices that make up each ShadowNet node by constantly monitoring them, and further manages and manipulates those physical devices to realize the user-level APIs, while maintaining a clean separation between the abstracted slice specifications and the way they are realized on the physical equipment. The user-level APIs also enable users to dynamically interact with and manage the physical instantiation of their slices. Specifically, users can directly access and configure each instantiated logical device.

ShadowNet allows a user to deactivate individual devices in a slice or the slice as a whole, by releasing the allocated physical resources. ShadowNet decouples the persistent state from the instantiated physical devices, so that the state change associated with a device in the specification is maintained even if the physical instantiation is released. Subsequently, that device in the specification can be re-instantiated (assuming that sufficient resources are available), the saved state restored and thus the user perceived slice remains intact. For example, the configuration change made by the user to a logical router can be maintained and applied to a new instantiated logical router, even if the physical placement of that logical device is different.

Figure 3: Usage scenario: load-aware anycast CDN.

2.3 Using ShadowNet

In this section we briefly describe an example usage scenario that illustrates the type of sophisticated network services that can be tested using the ShadowNet infrastructure. We discuss the requirements for testing these services and explain why existing platforms fall short in these scenarios.

Assume that ShadowNet is to be used to run a customer trial of a load-aware anycast content distribution network (CDN) [9]. Figure 3 depicts how all the components of such a CDN can be realized on the ShadowNet platform. Specifically, a network, complete with provider edge (PE) and core (C) routers, can be dynamically instantiated to represent a small backbone network. Further, servers in a subset of the ShadowNet nodes can be allocated and configured to serve as content caches. A load-aware anycast CDN utilizes route control to inform BGP selection based on the cache load, i.e., using BGP, traffic can be steered away from overloaded cache servers. In ShadowNet, this BGP speaking route control entity can be instantiated on either a server or a router depending on the implementation. Appropriate configuration/implementation of BGP, flow-sampling, and server load monitoring complete the infrastructure picture. Finally, actual end-user requests can be directed to this infrastructure, e.g., by resolving a content URL to the anycast address(es) associated with and advertised by the CDN contained in the ShadowNet infrastructure.

Using this example we can identify several capabilities required of the ShadowNet infrastructure to enable such realistic service evaluation (see Table 1): (i) to gain confidence in the equipment used in the trial it should be the same as, or similar to, equipment used in the production network (production-grade devices); (ii) to thoroughly test load feedback mechanisms and traffic steering algorithms, it requires participation of significant numbers of customers (realistic workloads); (iii) this in turn requires sufficient network capacity (high capacity backbone); (iv) realistic network and CDN functionality require realistic network latencies and geographic distribution (geographic coverage); (v) finally, the CDN control framework could dynamically adjust the resources allocated to it based on the offered load (dynamic reconfiguration).

| Capability | SN | EL | PL | VN |
|---|---|---|---|---|
| Production grade devices | Y | N | N | N |
| Realistic workloads | Y | N | Y | Y |
| High capacity backbone | Y | N | N | Y |
| Geographical coverage | Y | N | Y | Y |
| Dynamic reconfiguration | Y | N | N | N |

Table 1: Capability comparison between ShadowNet (SN), Emulab (EL), PlanetLab (PL) and VINI (VN)

While ShadowNet is designed to satisfy these requirements, other testing platforms, with different design goals and typical usage scenarios, fall short in providing such support, as we describe next.

Emulab achieves flexible network topology through emulation within a central testbed environment. There is a significant gap between emulation environments and real production networks. For example, software routers typically do not provide the same throughput as production routers with hardware support. As Emulab is a closed environment, it is incapable of combining real Internet workload into experiments. Compared to Emulab, the ShadowNet infrastructure is distributed, thus the resource placement in ShadowNet more closely resembles future deployment phases. In Emulab, an experiment in a slice is allocated a fixed set of resources during its life cycle; a change of specification would require a "reboot" of the slice. ShadowNet, on the other hand, can change the specification dynamically. In the CDN example, machines for content caches and network links can be dynamically spawned or removed in response to increased or decreased client requests.

PlanetLab has been extremely successful in academic research, especially in distributed monitoring and P2P research. It achieves its goal of broad geographical coverage, with nodes spanning the globe, and thereby obtains great end-host visibility. The PlanetLab nodes, however, are mostly connected to educational networks without abundant upstream or downstream bandwidth. PlanetLab therefore lacks the capacity to realize a capable backbone between PlanetLab nodes. ShadowNet, on the other hand, is built upon a production ISP network, having its own virtual backbone with bandwidth and latency guarantees. This pushes the tested service closer to the core of the ISP network, where the actual production service would be deployed.

VINI is closely tied with PlanetLab, but utilizes Internet2 to provide a realistic backbone. Like Emulab and PlanetLab, VINI runs software routers (XORP and Click), the forwarding capacity of which lags behind production devices. This is mostly because its focus is to use commodity hardware to evaluate new Internet architectures, which is different from the service deployment focus of ShadowNet. VINI and PlanetLab are based on the same control framework. Similar to Emulab, VINI cannot change slice configurations dynamically and therefore cannot close the loop for more adaptive resource management, a functionality readily available in ShadowNet.

Figure 4: The ShadowNet controller

2.4 The ShadowNet Controller

The ShadowNet controller consists of a user-level manager, a physical-level manager, a configuration effector and a device monitor, as shown in Figure 4. We describe each component below. The current ShadowNet design utilizes a centralized controller that interacts with and controls all ShadowNet nodes.

2.4.1 User-level manager

The user-level manager is designed to take the input of user-level API calls. Each API call corresponds to an action that the users of ShadowNet are allowed to perform. A user can create a topological specification of a service trial (§3.1), instantiate the specification to physical resources (§3.2), interact with the allocated physical resources (§3.3), and deactivate the slice when the test finishes (§3.4). The topology specification of a slice is stored by the user-level manager in persistent storage, so that it can be retrieved, revived and modified over time. The user-level manager also helps maintain and manage the saved persistent state from physical instantiations (§3.3). By retrieving saved states and applying them to physical instantiations, advanced features, like device duplication, can be enabled (§3.5).

The user-level manager is essentially a network service used to manipulate configurations of user experiments. We allow the user-level manager to be accessed from within the experiment, facilitating network control in a closed-loop fashion. In the example shown in Figure 3, the route control component in the experiment can dynamically add content caches when user demand is high by calling the user-level API to add more computing and networking resources via the user-level manager.
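The closed-loop use of the user-level API can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the stub manager, the method names `add_usr_machine` and `add_usr_link` (loosely modeled on the calls in Figure 6), the load metric and the 0.8 threshold are hypothetical and are not the ShadowNet implementation.

```python
from dataclasses import dataclass, field


@dataclass
class StubUserLevelManager:
    """Hypothetical stand-in for the user-level manager; it only records calls."""
    machines: list = field(default_factory=list)
    links: list = field(default_factory=list)

    def add_usr_machine(self, location, image):
        name = f"M{len(self.machines) + 1}"
        self.machines.append((name, location, image))
        return name

    def add_usr_link(self, a, b):
        self.links.append((a, b))


def scale_caches(manager, router, location, load_per_cache, high_water=0.8):
    """Add one cache at `location` if the average cache load exceeds `high_water`."""
    if not load_per_cache:
        return None
    average = sum(load_per_cache) / len(load_per_cache)
    if average <= high_water:
        return None
    # Demand is high: request one more machine and attach it to the router.
    cache = manager.add_usr_machine(location, "Debian")
    manager.add_usr_link(cache, router)
    return cache
```

A route control component running inside the experiment could call `scale_caches` periodically with the load figures it already collects for BGP steering.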

2.4.2 Physical-level manager

The physical-level manager fulfills requests from the user-level manager in the form of physical-level API calls by manipulating the physical resources in ShadowNet. To do this, it maintains three types of information: 1) "static" information, such as the devices in each ShadowNet node and their capabilities; 2) "dynamic" information, e.g., the online status of all devices and whether any interface modules are not functioning; 3) "allocation" information, which is the up-to-date usage of the physical resources. Static information is changed when new devices are added or old devices are removed. Dynamic information is constantly updated by the device monitor. The three main functions of the physical-level manager are to configure physical devices to spawn virtualized device slivers (§4.1) for the instantiation of user-level devices (§4.1.1) and user-level connectivities (§4.1.2), to manage their states (§4.4) and to delete existing instantiated slivers. A sliver is a share of the physical resource, e.g., a virtual machine or a sliced physical link. The physical-level manager handles requests, such as creating a VM, by figuring out the physical device to configure and how to configure it. The actual management actions are performed via the configuration effector module, which we describe next.
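As a rough illustration of how the three kinds of information might be kept side by side, the Python sketch below stores them on one record per physical device and uses them to place a sliver. The field names, the per-device sliver cap and the first-fit choice are assumptions for this example; the paper does not prescribe a particular data layout or placement policy.

```python
from dataclasses import dataclass


@dataclass
class PhysicalDevice:
    name: str
    node: str              # static: ShadowNet node (location) hosting the device
    kind: str              # static: "router" or "server"
    max_slivers: int       # static: assumed cap on shares this device may host
    online: bool = True    # dynamic: refreshed by the device monitor
    allocated: int = 0     # allocation: slivers currently in use


def allocate_sliver(devices, node, kind):
    """Reserve one sliver on the first online device at `node` with spare capacity."""
    for dev in devices:
        if (dev.node == node and dev.kind == kind
                and dev.online and dev.allocated < dev.max_slivers):
            dev.allocated += 1
            return dev.name
    return None  # no capacity: the request cannot be fulfilled right now
```

Returning `None` rather than raising keeps the sketch close to a plain availability check; a real manager would also have to record which slice owns each sliver.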

2.4.3 Configuration effector

The configuration effector specializes in realizing configuration changes to physical devices. Configlets are parametrized configuration or script templates, saved in the persistent storage and retrieved on demand. To realize the physical-level API calls, the physical-level manager decides the appropriate configlet to use and generates parameters based on the request and the physical resource information. The configuration effector executes the configuration change on target physical devices.

Figure 5: The slice life cycle

[Figure 6 topology: three sites (CA, TX, NY), each with an Internet connection, one router (R1, R2, R3) and two machines (M1 to M6); one switch S1; links L1 to L15.]

    $SL = AddUsrSlice();
    $S1 = AddUsrSwitch($SL);
    $R1 = AddUsrRouter($SL,"CA");
    $M1 = AddUsrMachine($SL,"CA","Debian");
    $M2 = AddUsrMachine($SL,"CA","Windows");
    $L1 = AddUsrLink($M1,$R1);   # similar for M2
    $L10 = AddUsrLink($M1,$S1);  # similar for M2
    $L7 = AddToInternet($R1, "141.212.111.0/24");
    # similar for "TX" and "NY"

Figure 6: Example of user-level API calls

2.4.4 Device monitor

A device monitor actively or passively determines the status of physical devices or components and propagates this "dynamic" information to the physical-level manager. Effectively, the device monitor detects any physical device failures in real time. As the physical-level manager receives the update, it can perform appropriate actions to mitigate the failure. The goal is to minimize any inconsistency between the physical instantiation and the user specification. We detail the techniques in §4.5. Device or component recovery can be detected as well, and as such the recovered resource can again be considered usable by the physical-level manager.
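The device monitor's reporting of failures and recoveries might look like the following Python sketch of one active polling pass. The probe and callback are placeholders: how ShadowNet actually checks device health is described in §4.5, and nothing here is taken from that mechanism.

```python
def poll_devices(status, probe, on_change):
    """Probe every known device once and report status transitions.

    status:    dict mapping device name to last known online flag (updated in place)
    probe:     callable(name) -> bool, a hypothetical health check
    on_change: callable(name, online), invoked for both failures and recoveries
    """
    for name in sorted(status):
        online = probe(name)
        if online != status[name]:
            status[name] = online
            on_change(name, online)
```

Reporting only transitions keeps the physical-level manager's "dynamic" information current without flooding it with unchanged readings.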

3 Network service in a slice

A user of ShadowNet creates a service topology in the form of a slice, which is manipulated through the user-level API calls supported by the ShadowNet controller. The three layers embedded in a slice and the interactions among them are depicted in Figure 5 and detailed below. In this section, we outline the main user-exposed functionalities that the APIs implement.

3.1 Creating user-level specification

To create a new service trial, an authorized user of ShadowNet can create a slice. As a basic support, and usually the first step to create the service, the user specifies the topological setup through the user-level API (a in Figure 5). As an example, Figure 6 depicts the intended topology of a hypothetical slice and the API call sequence that creates it.

The slice created acts like a placeholder for a collection of user-level objects, including devices and connectivities. We support three generic types of user-level devices (UsrDevice): router (UsrRouter), machine (UsrMachine), and switch (UsrSwitch). Two UsrDevices can be connected to each other via a user-level link (UsrLink). User-level interfaces (UsrInt) can be added to a UsrDevice explicitly by the slice owner; however, in most cases, they are created implicitly when a UsrLink is added to connect two UsrDevices.

Functionally speaking, a UsrMachine (e.g., M1 in Figure 6) represents a generic computing resource, where the user can run service applications. A UsrRouter (e.g., R1) can run routing protocols, forward and filter packets, etc. Further, UsrRouters are programmable, allowing for custom router functionality. A UsrLink (e.g., L1) ensures that when the UsrDevice on one end sends a packet, the UsrDevice on the other end will receive it. A UsrSwitch (e.g., S1) provides a single broadcast domain to the UsrDevices connecting to it. ShadowNet provides the capability and flexibility of putting geographically dispersed devices on the same broadcast domain. For example, M1 to M6, although specified in different locations, are all connected to UsrSwitch S1. Besides internal connectivity among UsrDevices, ShadowNet can drive live Internet traffic to a service trial by allocating a public IP prefix for a UsrInt on a UsrDevice. For example, L7 is used to connect R1 to the Internet, allocating an IP prefix of 141.212.111.0/24.

Besides creating devices and links, a user of ShadowNet can also associate properties with different objects, e.g., the OS image of a UsrMachine and the IP addresses of the two interfaces on each side of a UsrLink. As a distributed infrastructure, ShadowNet allows users to specify location preference for each device as well, e.g., California for M1, M2 and R1. This location information is used by the physical layer manager when instantiation is performed.
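The object model described in this section can be summarized in a small Python sketch. The class and method names below are assumptions chosen to echo the paper's UsrDevice, UsrLink and UsrInt terminology, and the interface naming scheme is invented; the sketch only shows how a slice can act as a container and how adding a link can create interfaces implicitly.

```python
from dataclasses import dataclass, field


@dataclass
class UsrDevice:
    name: str
    kind: str                  # "router", "machine" or "switch"
    location: str = ""         # optional location preference
    interfaces: list = field(default_factory=list)


@dataclass
class UsrSlice:
    devices: dict = field(default_factory=dict)
    links: list = field(default_factory=list)

    def add_device(self, name, kind, location=""):
        self.devices[name] = UsrDevice(name, kind, location)
        return self.devices[name]

    def add_link(self, a, b):
        """Connect two devices, implicitly creating one UsrInt on each end."""
        ends = []
        for dev in (self.devices[a], self.devices[b]):
            usr_int = f"{dev.name}-if{len(dev.interfaces)}"
            dev.interfaces.append(usr_int)
            ends.append(usr_int)
        self.links.append(tuple(ends))
        return tuple(ends)
```

Note that the specification holds no reference to physical equipment at this stage; it is purely the user's view of the topology.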

3.2 Instantiation

A user can instantiate some or all objects in her slice onto physical resources (b in Figure 5). From this point on, the slice not only contains abstracted specification, but also has associated physical resources that the instantiated objects in the specification are mapped to.

ShadowNet provides two types of instantiation strategies. First, a user can design a full specification for the slice and instantiate all the objects in the specification together. This is similar to what Emulab and VINI provide. As a second option, user-level objects in the specification can be instantiated upon request at any time. For example, they can be instantiated on-the-fly as they are added to the service specification. This is useful for users who would like to build a slice interactively and/or modify it over time, e.g., extend the slice resources based on increased demand.

Unlike other platforms, such as PlanetLab and Emulab, which intend to run as many "slices" as possible, ShadowNet limits the number of shares (slivers) a physical resource provides. This simplifies the resource allocation problem to a straightforward availability check. We leave more advanced resource allocation methods as future work.

3.3 Device access & persistent slice state

ShadowNet allows a user to access the physical instantiation of the UsrDevices and UsrLinks in her slice, e.g., logging into a router or tapping into a link (c in Figure 5). This support is necessary for many reasons. First, the user needs to install software on UsrMachines or UsrRouters and/or configure UsrRouters for forwarding and filtering packets. Second, purely from an operational point of view, operators usually desire direct access to the devices (e.g., a terminal window on a server, or command line access to a router).

For UsrMachines and UsrRouters, we allow users to log into the device and make any changes they want (§4.3). For UsrLinks and UsrSwitches, we provide packet dump feeds upon request (§4.3). This support is crucial for service testing, debugging and optimization, since it gives the capability and flexibility of sniffing packets at any place within the service deployment without installing additional software on end-points.

Enabling device access also grants users the ability to change the persistent state of the physical instantiations, such as files installed on disks and configuration changes on routers. In ShadowNet, we decouple the persistent states from the physical instantiation. When the physical instantiation is modified, the changed state also becomes part of the slice (d in Figure 5).

3.4 Deactivation

The instantiated user-level objects in the specification of a slice can be deactivated, releasing the physical instantiations of the objects from the slice by giving them back to the ShadowNet infrastructure. For example, a user can choose to deactivate an under-utilized slice as a whole, so that other users can test their slices when the physical resources are scarce. While releasing the physical resource, we make sure the persistent state is extracted and stored as part of the slice (f in Figure 5). As a result, when the user decides to revive a whole slice or an object in the slice, new physical resources will be acquired and the stored state associated with the object applied to it (e in Figure 5). Operationally speaking, this enables a user to deactivate a slice and reactivate it later, most likely on a different set of resources but still functioning like before.
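The deactivate-then-revive behavior can be sketched as follows in Python. The class, its fields and the dictionary used to represent device state are all assumptions for illustration; in ShadowNet the persistent state would be things like router configuration or disk contents, and its extraction is covered in §4.4.

```python
class SliceObject:
    """A user-level object whose persistent state outlives its physical instantiation."""

    def __init__(self, name):
        self.name = name
        self.saved_state = {}   # stored with the slice specification
        self.instance = None    # physical instantiation, or None when deactivated

    def instantiate(self, physical_device):
        # Acquire a resource and apply whatever state was saved earlier.
        self.instance = {"device": physical_device, "state": dict(self.saved_state)}

    def deactivate(self):
        # Extract persistent state before handing the resource back.
        self.saved_state = dict(self.instance["state"])
        self.instance = None
```

For example, a router object instantiated on one physical router, configured, and deactivated can later be instantiated on a different physical router and come up with the same configuration.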

Figure 7: Network connectivity options.

3.5 Management support

Abstracting the persistent state from the physical instantiation enables other useful primitives in the context of service deployment. If we instantiate a new UsrDevice and apply the state of an existing UsrDevice to it, we effectively duplicate the existing UsrDevice. For example, a user may instantiate a new UsrMachine with only the basic OS setup, log into the machine to install necessary application code and configure the OS. With the support provided by ShadowNet, she can then spawn several new UsrMachines and apply the state of the first machine. This eases the task of creating a cluster of devices serving similar purposes. From the ShadowNet control aspect, this separation allows sophisticated techniques to hide physical device failures. For example, suppose a physical router that hosts many logical routers as the instantiation of UsrRouters experiences a power failure. In this case, we only need to create new instantiations on other available devices of the same type, and then apply the states to them. During the whole process, the slice specification, which is what the user perceives, is intact. Naturally, the slice will experience some downtime as a result of the failure.
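Duplicating a device by applying saved state can be illustrated as follows. This is a hedged sketch only: UsrMachine and spawn_clones are hypothetical names, and the state is modeled as a small dictionary rather than a disk image.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UsrMachine:
    name: str
    state: Dict[str, str] = field(default_factory=dict)


def spawn_clones(template: UsrMachine, names: List[str]) -> List[UsrMachine]:
    # Each clone gets its own deep copy, so later changes on one
    # machine do not leak into the template or its siblings.
    return [UsrMachine(name=n, state=copy.deepcopy(template.state)) for n in names]
```

A deep copy is used because the clones are meant to diverge independently after creation.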

4 Physical layer operations

While conceptually similar to several existing systems [10, 27], engineering ShadowNet is challenging due to the strong isolation concept it rests on, the production-grade qualities it provides and the distributed nature of its realization. We describe the key methods used to realize ShadowNet.

4.1 Instantiating slice specifications

The slice specification instantiation is performed by the ShadowNet controller in a fully automated fashion. The methods to instantiate on two types of resource are described as follows.

4.1.1 User-level routers and machines

ShadowNet currently utilizes VirtualBox [5] from Sun Microsystems, and Logical Routers [16] from Juniper Networks to realize UsrMachines and UsrRouters respectively. Each VM and logical router created is considered as a device sliver. To instantiate a UsrRouter or a UsrMachine, a ShadowNet node is chosen based on the location property specified. Then all matching physical devices on that node are enumerated for availability checking, e.g., whether a Juniper router is capable of spawning a new logical router. When there are multiple choices, we distribute the usage across devices in a round-robin fashion. Location preference may be unspecified because the user does not care about where the UsrDevice is instantiated, e.g., when testing a router configuration option. In this case, we greedily choose the ShadowNet node where that type of device is the least utilized. When no available resource can be allocated, an error is returned.
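The placement policy described above (use the requested node, or the least utilized node when no location is given, then round-robin among devices with spare capacity) can be sketched as follows. The Placer class, its data layout and the use of a used/capacity ratio as the utilization measure are assumptions made for illustration; the paper does not specify them.

```python
class Placer:
    def __init__(self, nodes):
        # nodes: {node: {device: (used, capacity)}} for one device type
        self.nodes = nodes
        self._next = {name: 0 for name in nodes}  # round-robin cursor per node

    def _utilization(self, node):
        used = sum(u for u, _ in self.nodes[node].values())
        cap = sum(c for _, c in self.nodes[node].values())
        return used / cap if cap else 1.0

    def place(self, location=None):
        # No location preference: greedily pick the least utilized node.
        node = location if location is not None else min(self.nodes, key=self._utilization)
        if node not in self.nodes:
            raise RuntimeError("unknown node: %s" % node)
        devices = sorted(self.nodes[node])
        for i in range(len(devices)):
            idx = (self._next[node] + i) % len(devices)
            used, cap = self.nodes[node][devices[idx]]
            if cap - used > 0:  # availability check
                self.nodes[node][devices[idx]] = (used + 1, cap)
                self._next[node] = (idx + 1) % len(devices)
                return node, devices[idx]
        raise RuntimeError("no available resource on node %s" % node)
```

When every candidate device is full, the sketch raises an error, mirroring the "an error is returned" behavior in the text.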

4.1.2 User-level connectivity

The production network associated with ShadowNet provides both Internet connection and virtual backbone connectivity to each ShadowNet node. We configure a logical router, which we call the head router of the ShadowNet node, to terminate these two connections. With the ShadowNet backbone connectivity provided by the ISP, all head routers form a full-mesh, serving as the core routers of ShadowNet. For Internet connectivity, the head router interacts with the ISP’s border router, e.g., announcing BGP routes.

Connecting device slivers on the same ShadowNet node can be handled by the switching layer of that node. The head routers are used when device slivers across nodes need to be connected. In ShadowNet, we make use of the carrier-supporting-carrier (CsC) capabilities provided by MPLS enabled networks. CsC utilizes the VPN service provided by the ISP, and stacks on top of it another layer of VPN services, running in parallel but isolated from each other. For example, layer-2 VPNs (so called pseudo-wire) and VPLS VPNs can be stacked on top of a layer-3 VPN service [15].

This approach has three key benefits. First, each layer-2 VPN or VPLS instance encapsulates the network traffic within the instance, and thus provides strong isolation across links. Second, these are off-the-shelf production-grade services, which are much more efficient than manually configured tunnels. Third, it is more realistic for the users, because there is no additional configuration needed in the logical routers they use. The layer-2 VPN and VPLS options that we heavily use in ShadowNet provide layer-2 connectivity, i.e., with router programmability, any layer-3 protocol besides IP can run on top of it.

Figure 7 contains various examples of enabling connectivity, which we explain in detail next.

UsrLink: To instantiate a UsrLink, the instantiations of the two UsrDevices on the two ends of the UsrLink are first identified. We handle three cases, see Figure 7a). (We consider the UsrLinks connected to a UsrSwitch part of that UsrSwitch, which we describe later):

1) Two slivers are on the same physical device: for example, VM1 and VM2 are on the same server; LR2 and Head1 are on the same router. In this case, we use local bridging to realize the UsrLink.

2) Two slivers are on the same ShadowNet node, but not the same device: for example, VM1 and LR1, LR1 and LR2. We use a dedicated VLAN on that node for each UsrLink of this type, e.g., LR1 will be configured with two interfaces, joining two different VLAN segments, one for the link to VM1, the other one to LR2.

3) Two slivers are on different nodes: for example, LR2 and LR3. In this case, we first connect each sliver to its local head router, using the two methods above. Then the head router creates a layer-2 VPN to bridge the added interfaces, effectively creating a cross-node tunnel connecting the two slivers.
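The three UsrLink cases reduce to a comparison of where the two slivers live. The sketch below is illustrative only: the Sliver record, the link_method function and the returned labels are hypothetical names, not part of the ShadowNet API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sliver:
    name: str
    node: str     # ShadowNet node hosting the sliver
    device: str   # physical server or router hosting the sliver


def link_method(a: Sliver, b: Sliver) -> str:
    if a.node == b.node and a.device == b.device:
        return "local-bridge"            # case 1: same physical device
    if a.node == b.node:
        return "dedicated-vlan"          # case 2: same node, different devices
    return "l2vpn-via-head-routers"      # case 3: different nodes
```

For example, two VMs on the same server yield "local-bridge", while logical routers on different nodes yield "l2vpn-via-head-routers".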

In each scenario above, the types of the physical interfaces that should be used to enable the link are decided, the selected physical interfaces are configured, and the resource usage information of the interfaces is updated.

MPLS-VPN technologies achieve much higher levels of realism over software tunnels, because almost no configuration is required at the end-points that are being connected. For example, to enable the direct link between LR2 and LR3, the layer-2 VPN configuration only happens on Head1 and Head2. As a result, if the user logs into the logical router LR2 after its creation, she would only see a “physical” interface setup in the configuration, even without IP configured, yet that interface leads to LR3 according to the layer-2 topology.

User-view switches: Unlike for UsrMachines and UsrRouters, ShadowNet does not allocate user-controllable device slivers for the instantiation of UsrSwitches, but rather provides an Ethernet broadcasting medium. (See Figure 7b).)

To instantiate a UsrSwitch connecting to a set of UsrDevices instantiated on the same ShadowNet node, we allocate a dedicated VLAN-ID on that node and configure those device slivers to join the VLAN (i.e., LR5 and LR6). If the device slivers mapped to the UsrDevices are distributed across different ShadowNet nodes, we first recursively bridge the slivers on the same node using VLANs, and then configure one VPLS-VPN instance on each head router (i.e., Head3 and Head4) to bridge all those VLANs. This puts all those device slivers (i.e., VM3, LR5, LR6) onto the same broadcast domain. Similar to layer-2 VPN, this achieves a high degree of realism: for example, on LR5 and LR6, the instantiated logical router only shows one “physical” interface in its configuration.

Internet access: We assume that ShadowNet nodes can use a set of prefixes to communicate with any end-points on the Internet. The prefixes can either be announced through BGP sessions configured on the head routers to the ISP’s border routers, or statically configured on the border routers.

To instantiate a UsrDevice’s Internet connectivity, we first connect the UsrDevice’s instantiation to the head router on the same node. Then we configure the head router so that the allocated prefix is correctly forwarded to the UsrDevice over the established link and the route for the prefix is announced via BGP to the ISP. For example, a user specifies two UsrRouters connecting to the Internet, allocating them the prefixes 136.12.0.0/24 and 136.12.1.0/24. The head router should in turn announce an aggregated prefix 136.12.0.0/23 to the ISP border router.
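The aggregation in the example can be checked with Python's standard ipaddress module. The helper name aggregate is hypothetical; how the head router actually computes aggregates is not described in the paper.

```python
import ipaddress


def aggregate(prefixes):
    # collapse_addresses merges adjacent or overlapping networks
    # into the smallest covering set of prefixes.
    nets = [ipaddress.ip_network(p) for p in prefixes]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]


print(aggregate(["136.12.0.0/24", "136.12.1.0/24"]))  # ['136.12.0.0/23']
```

Prefixes that are not adjacent, such as 136.12.0.0/24 and 136.12.2.0/24, are left as two separate announcements.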

4.2 Achieving isolation and fair sharing

As a shared infrastructure for many users, ShadowNet attempts to minimize the interference among the physical instantiation of different slices. Each virtual machine is allocated its own memory address space, disk image, and network interfaces. However, some resources, like CPU, are shared among virtual machines, so that one virtual machine could potentially drain most of the CPU cycles. Fortunately, virtual machine technology is developing better control over CPU usage of individual virtual machines [5].

A logical router on a Juniper router has its own configuration file and maintains its own routing table and forwarding table. However, control plane resources, such as CPU and memory, are shared among logical routers. We evaluate this impact in §6.3.

The isolation of packets among different UsrLinks is guaranteed by the physical device and routing protocol properties. We leverage router support for packet filtering and shaping to prevent IP spoofing and bandwidth abuse. The corresponding configuration is made on head routers, which end-users cannot access. For each UsrLink, we impose a default rate-limit (e.g., 10Mbps), which can be upgraded by sending a request via the user-level API. We achieve rate limiting via hardware traffic policers [19] and Linux kernel support [4].

4.3 Enabling device access

Console or remote-desktop access: For each VM running on VirtualBox, a port is specified on the hosting server to enable Remote Desktop protocol for graphical access restricted to that VM. If the user prefers command line access, a serial port console in the VM images is enabled and mapped to a UNIX domain socket on the hosting machine’s file system [5]. On a physical router, each logical router can be configured to be accessible through SSH using a given username and password pair, while confining the access to be within the logical router only.

Though the device slivers of a slice can be connected to the Internet, the management interface of the actual physical devices in ShadowNet should not be. For example, the IP address of a physical server should be contained within ShadowNet rather than accessible globally. We thus enable users to access the device slivers through one level of indirection via the ShadowNet controller.

Sniffing links: To provide packet traces from a particular UsrLink or UsrSwitch, we dynamically configure a SPAN port on the switching layer of a ShadowNet node so that a dedicated server or a pre-configured VM can sniff the VLAN segment that the UsrLink or UsrSwitch is using. The packet trace can be redirected through the controller to the user in a streaming fashion or saved as a file for future downloading. There are cases where no VLAN is used, e.g., for two logical routers on the same physical router connected via logical tunnel interfaces. In this case, we deactivate the tunnel interfaces and re-instantiate the UsrLink using VLAN setup to support packet capture. This action, however, happens at the physical-level and thus is transparent to the user-level, as the slice specification remains intact.

4.4 Managing state

To extract the state of an instantiated UsrMachine, which essentially is a VM, we keep the hard drive image of the virtual machine. The configuration file of a logical router is considered as the persistent state of the corresponding UsrRouter. Reviving stored state for a UsrMachine can be done by attaching the saved disk image to a newly instantiated VM. On the other hand, UsrRouter state, i.e., router configuration files, needs additional processing. For example, a user-level interface may be instantiated as interface fe-0/1/0.2 and thus appear in the configuration of the instantiated logical router. When the slice is deactivated and instantiated again, the UsrInt may be mapped to a different interface, say ge-0/2/0.1. To deal with this complication, we normalize the retrieved configuration and replace physical-dependent information with user-level object handles, and save it as the state.
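The normalization step can be pictured as a textual substitution of physical interface names by user-level handles, reversed at revival time. The following is a simplified sketch, assuming a flat text configuration and a hypothetical {{handle}} placeholder syntax; the prototype itself works on the router's XML configuration (§5.2).

```python
import re


def normalize(config, iface_to_handle):
    # Replace each physical interface name with a user-level handle.
    # The lookarounds keep "fe-0/1/0.2" from matching inside "fe-0/1/0.20".
    for phys, handle in iface_to_handle.items():
        pattern = r"(?<![\w./-])" + re.escape(phys) + r"(?![\w./-])"
        token = "{{" + handle + "}}"
        config = re.sub(pattern, lambda m, t=token: t, config)
    return config


def denormalize(config, handle_to_iface):
    # Bind each handle to whatever physical interface was allocated this time.
    for handle, phys in handle_to_iface.items():
        config = config.replace("{{" + handle + "}}", phys)
    return config
```

Usage: normalizing with {"fe-0/1/0.2": "R1.if0"} at deactivation and denormalizing with {"R1.if0": "ge-0/2/0.1"} at revival moves the same logical interface onto a different physical port.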

4.5 Mitigating and creating failures

Unexpected physical device failures can occur, and as an option ShadowNet tries to mitigate failures as quickly as possible to reduce user perceived down time. One benefit of separating the states from the physical instantiation is that we can replace a failed physical instantiation with a new one, with the saved state applied, without affecting the user perception. Once a device or a physical component is determined to be offline, the ShadowNet controller identifies all instantiated user-level devices associated with it. New instantiations are created on healthy physical devices and saved states are applied if possible. Note that certain users are specifically interested in observing service behavior during failure scenarios. We allow the users to specify whether they want physical failures to pass through, which disables our failure mitigation functionality. On the other hand, failure can be injected by the ShadowNet user-level API, for example tearing down the physical instantiation of a link or a device in the specification to mimic a physical link-down event.

For physical routers, the device monitor performs periodic retrieval of the current configuration files, preserving the states of UsrRouters more proactively. When a whole physical router fails, the controller creates new logical routers with connectivity satisfying the topology on other healthy routers and applies the saved configuration, such as BGP setup. If an interface module fails, the other healthy interfaces on the same router are used instead. Note that the head router is managed in the same way as other logical routers, so that ShadowNet can also recover from router failures where head routers are down.

A physical machine failure is likely more catastrophic, because it is challenging to recover files from a failed machine and it is not feasible to duplicate large files like VM images to the controller. One potential solution is to deploy a distributed file system similar to the Google file system [13] among the physical machines within one ShadowNet node. We leave this type of functionality for future work.

5 Prototype Implementation

In this section, we briefly describe our prototype implementation of the ShadowNet infrastructure, including the hardware setup and management controller.

5.1 Hardware setup

To evaluate our architecture we built two ShadowNet nodes and deployed them locally. (At the time of writing, a four node ShadowNet instance is being deployed as an operational network with nodes in Texas, Illinois, New Jersey and California. Each node has two gigabit links to the production network, one used as regular peering link and the other used as the dedicated backbone.)

Each prototype node has two Juniper M7i routers running JUNOS version 9.0, one Cisco C2960 switch, as well as four HP DL520 servers. The M7i routers are equipped with one or two Gigabit Ethernet PICs (Physical Interface Cards), FastEthernet PIC, and tunneling capability. Each server has two gigabit Ethernet interfaces, and we install VirtualBox in the Linux Debian operating system to host virtual machines. The switch is capable of configuring VLANs and enabling SPAN ports.

In the local deployment, two Cisco 7206 routers act as an ISP backbone. MPLS is enabled on the Cisco routers to provide layer-3 VPN service as the ShadowNet backbone. BGP sessions are established between the head router of each node and its adjacent Cisco router, enabling external traffic to flow into ShadowNet. We connect the network management interface fxp0 of Juniper routers and one of the two Ethernet interfaces on machines to a dedicated and separate management switch. These interfaces are configured with private IP addresses, and used for physical device management only, mimicking the out-of-band access which is common in ISP network management.

5.2 Controller

The ShadowNet controller runs on a dedicated machine, sitting on the management switch. The controller is currently implemented in Perl. A Perl module, with all the user-level APIs, can be imported in Perl scripts to create, instantiate and access service specifications, similar to the code shown in Figure 6. A mysql database is running on the same machine as the controller, serving largely, though not entirely, as the persistent storage connecting to the controller. It saves the physical device information, user specifications, and normalized configuration files, etc. We use a different set of tables to maintain physical-level information, e.g., phy_device_table, and user-level information, e.g., usr_link_table. The Perl module retrieves information from the tables and updates the tables when fulfilling API calls.

The configuration effector of the ShadowNet controller is implemented within the Perl module as well. We make use of the NetConf XML API exposed by Juniper routers to configure and control them. Configlets in the form of parametrized XML files are stored on the controller. The controller retrieves the configuration of the physical router in XML format periodically and when UsrRouters are deactivated. We wrote a specialized XML parser to extract individual logical router configurations and normalize relevant fields, such as interface related configurations. The normalized configurations are serialized in text format and stored in the mysql database, associated with the specific UsrRouter.

Shell and Perl scripts, which wrap the VirtualBox management interface, are executed on the hosting servers to automatically create VMs, snapshot running VMs, stop or destroy VMs. The configuration effector logs into each hosting server and executes those scripts with the correct parameters. On the servers, we run low-priority cron jobs to maintain a fair amount of default VM images of different OS types. In this way, the request of creating a new VM can be fulfilled fairly quickly, amortizing the overhead across time. We use the following steps to direct the traffic of an interface used by a VM to a particular VLAN. First, we run tunctl on the hosting server to create a tap interface, which is configured in the VMM to be the “physical” interface of the VM. Second, we make use of the 802.1Q kernel module to create VLAN interfaces on the hosting server, like eth1.4, which participates in VLAN4. Finally we use brctl to bridge the created tap interface and VLAN interface.

Instead of effecting one configuration change per action, the changes to the physical devices are batched and executed once per device, thus reducing authentication and committing overheads. All devices are manipulated in parallel. We evaluate the effectiveness of these two heuristics in §6.1.

The device monitor module is running as a daemon on the controller machine. SNMP trap messages are enabled on the routers and sent over the management channel to the controller machine. Ping messages are sent periodically to all devices. The two sources of information are processed in the background by the monitoring daemon. When failures are detected, the monitoring module calls the physical-level APIs in the Perl module, which in response populates configlets and executes them on the routers to handle failures. An error message is also automatically sent to the administrators.
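The three steps given earlier in this section for steering a VM's interface onto a VLAN (tap interface, 802.1Q VLAN interface, bridge) map onto standard Linux tools. The sketch below only builds the command lines and does not run them. The use of vconfig as the front end to the 802.1Q module, and the tap and bridge names, are assumptions; the paper names only tunctl, the 802.1Q kernel module and brctl.

```python
def vlan_attach_commands(tap, phys_if, vlan_id, bridge):
    """Return the argv lists that would attach a VM tap interface to a VLAN."""
    vlan_if = "%s.%d" % (phys_if, vlan_id)       # e.g. eth1.4 for VLAN 4 on eth1
    return [
        ["tunctl", "-t", tap],                    # step 1: create the tap interface
        ["vconfig", "add", phys_if, str(vlan_id)],  # step 2: create the VLAN interface
        ["brctl", "addbr", bridge],               # step 3: create a bridge ...
        ["brctl", "addif", bridge, tap],          # ... and add the tap interface
        ["brctl", "addif", bridge, vlan_if],      # ... and the VLAN interface
    ]


for argv in vlan_attach_commands("tap0", "eth1", 4, "br4"):
    print(" ".join(argv))
```

Keeping command construction separate from execution makes it easy to batch the commands per hosting server, in line with the batching heuristic described in this section.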

6 Prototype Evaluation

In this section, we evaluate various aspects of ShadowNet based on two example slices instantiated on our prototype. The user specifications are illustrated on the left side of Figure 8; the physical realization of that specification is on the right. In Slice1, two locations are specified, namely LA and NY. On the LA side, one UsrMachine (M1) and one UsrRouter (R1) are specified. R1 is connected to M1 through a UsrLink. R1 is connected to the Internet through L2 and to R2 directly via L5. The setup is similar on the NY side. We use minimum IP and OSPF configuration to enable the correct forwarding between M1 and M2. Slice2 has essentially the same setup, except that the two UsrRouters do not have Internet access.

The right side of Figure 8 shows the instantiation of Slice1 and Slice2. VM1 and LR1 are the instantiation of M1 and R1 respectively. UsrLink L1 is instantiated as a dedicated channel formed by virtualized interfaces from physical interfaces, eth1 and ge-0/1/0, configured to participate in the same VLAN. To create the UsrLink L5, ShadowNet first uses logical tunnel interfaces to connect LR1 and LR2 with their head routers, which in turn bridge the logical interfaces using layer-2 VPN.

Figure 8: User slices for evaluation. Left: slice specification, showing Slice1 (M1, R1, R2, M2) and Slice2 (M3, R3, R4, M4) across LA and NY, connected by links L1 to L8, with Internet access for Slice1. Right: actual instantiation, showing VM1 to VM4 on Server1 and Server2, logical routers LR1 to LR4 and head routers on JuniperRouter1 and JuniperRouter2, VLANs 1 to 4, logical tunnels (LTs), the L2VPNs connecting LR1 to LR2 and LR3 to LR4, and Internet access for LR1/LR2.

6.1 Slice creation time

                 Router   Machine   DB    Total
Default (ms)     81834    11955     452   94241
Optimized (ms)   6912     5758      452   7364

Table 2: Slice creation time comparison

Bandwidth   Packet size   Observed bandwidth   Delta
(Kbps)      (bytes)       (Kbps)               (%)
56          64            55.9                 .18
56          1500          55.8                 .36
384         64            383.8                .05
384         1500          386.0                .52
1544        64            1537.2               .44
1544        1500          1534.8               .60
5000        1500          4992.2               .16
NoLimit     1500          94791.2              NA

Table 3: Cross-node link stress test

Table 2 shows the creation time for Slice1, broken down into instantiation of machine and router, along with database access (DB in the table). Using a naive approach, the ShadowNet controller needs to spend 82 seconds on the physical routers alone by making 13 changes, resulting in a 94-second execution time in total. For machine configuration, two scripts are executed for creating the virtual machines, and two for configuring the link connectivity. With the two simple optimization heuristics described in §5.2, the total execution time is reduced to 7.4 seconds. Note that the router and machine configurations are also parallelized, so that we have total = DB + max(Router_i, Machine_j). Parallelization ensures that the total time to create a slice does not increase linearly with the size of the slice. We estimate creation time for most slices to be within 10 seconds.
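The two heuristics (one batched commit per device, all devices in parallel) and the resulting cost model can be sketched as follows. This is an illustration only: batch_by_device, apply_all, total_time_ms and the commit callback are hypothetical names, and a thread pool stands in for whatever concurrency mechanism the Perl controller uses.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def batch_by_device(changes):
    # Group (device, change) pairs so each device is committed once.
    batches = defaultdict(list)
    for device, change in changes:
        batches[device].append(change)
    return dict(batches)


def apply_all(batches, commit):
    # Run one commit per device, all devices concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(batches))) as pool:
        futures = {dev: pool.submit(commit, dev, cfg) for dev, cfg in batches.items()}
        return {dev: f.result() for dev, f in futures.items()}


def total_time_ms(db_ms, router_ms, machine_ms):
    # total = DB + max(Router_i, Machine_j)
    return db_ms + max(list(router_ms) + list(machine_ms))


print(total_time_ms(452, [6912], [5758]))  # 7364, the optimized total in Table 2
```

With the optimized figures from Table 2, the model reproduces the reported total of 7364 ms, whereas the default row is the plain sum of its three components.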

Figure 9: Control plane isolation and recovery test. (a) Impact of shared control planes: processing time (seconds) versus number of routes to receive, log-scale axes, with and without impact. (b) Hardware failure recovery: bandwidth (kbps) versus time (seconds).

6.2 Link stress test

We perform various stress tests to examine ShadowNet’s capability and fidelity. We make L5 the bottleneck link, setting different link constraints using the Juniper router’s traffic policer, and then test the observed bandwidth M1 and M2 can achieve on the link by sending packets as fast as possible. Packets are dropped from the head of the queue. The results are shown in Table 3, demonstrating that ShadowNet can closely mimic different link capacities.

When no constraint is placed on L5, the throughput achieved is around 94.8Mbps, shown as “NoLimit” in the table. This is close to maximum, because the routers we used as ISP cores are equipped with FastEthernet interfaces, which have 100Mbps capacity, and the VM is specified with a 100Mbps virtual interface. Physical gigabit switches are usually not the bottleneck, as we verified that two physical machines connected via a VLAN switch can achieve approximately 1Gbps bandwidth.

As we are evaluating on a local testbed, the jitter and loss rate are almost zero, while the delay is relatively constant. We do not expect this to hold in our wide-area deployment.
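The Delta column of Table 3 is not defined in the text; the reported values are consistent with the relative deviation of the observed bandwidth from the configured limit, which is the assumption made in this small check (delta_pct is a hypothetical helper name).

```python
def delta_pct(configured_kbps, observed_kbps):
    # Relative deviation of observed from configured bandwidth, in percent.
    return abs(configured_kbps - observed_kbps) / configured_kbps * 100


for limit, observed in [(56, 55.9), (384, 386.0), (1544, 1534.8), (5000, 4992.2)]:
    print(limit, observed, round(delta_pct(limit, observed), 2))
```

Under this reading every policed configuration in the table stays within about 0.6% of its configured rate.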

6.3 Slice isolation

We describe our results in evaluating the isolation assurance from the perspectives of both the control and data plane.

6.3.1 Control plane

To understand the impact of a stressed control plane on other logical routers, we run software routers, bgpd of zebra, on both M1 and M3. The two software routers are configured to peer with the BGP processes on LR1 and LR3. We load the software routers with BGP routing tables of different sizes, transferred to LR1 and LR3. The BGP event log on the physical router is analyzed by measuring the duration from the first BGP update message to the time when all received routes are processed.

In Figure 9(a), the bottom line shows the processing time of the BGP process on LR1 to process all the routes if LR3 is BGP-inactive. The top line shows the processing time for LR1 when LR3 is also actively processing the BGP message stream. Both processing times increase linearly with the number of routes received. The two lines are almost parallel, meaning that the delay is proportional to the original processing time. The difference is about 13 seconds when receiving 10k routes and 73 seconds for 50k routes. We have verified that the CPU usage is 100% even if only LR1 is BGP-active. We have also used two physical machines to peer with LR1 and LR3 and confirmed that the bottleneck is due to the Juniper router control processor. If these limitations prove to be problematic in practice, solutions exist which allow a hardware separation of logical router control planes [17].

Figure 10: Data plane isolation test. (a) Variable packet rate (L6’s rate is maxed): receiving rate on M2 (kbps) versus sending rate on M1 (kbps). (b) Max packet rate (L6’s rate is variable): receiving rate on M2 (kbps) versus sending rate on M3 (kbps).

6.3.2 Data plane

L1 and L6 share the same physical interfaces, eth1 on Server1 and ge-0/1/0 on JuniperRouter1. We restrict the bandwidth usage of both L1 and L6 to 1Mbps by applying a traffic policer on the ingress interfaces (ge-0/1/0) on LR1 and LR3. From the perspective of a given UsrLink, say L1, we evaluate two aspects: regardless of the amount of traffic sent on L6, (1) L1 can always achieve the maximum bandwidth allocated (e.g., 1Mbps given a 100Mbps interface); (2) L1 can always obtain its fair share of the link. Simultaneous traffic is sent from M1 via L1 to M2, and from M3 via L6 to M4.

Figure 10(a) shows the observed receiving rate on M2 (y-axis) as the sending rate of M1 (x-axis) increases, while M3 is sending as fast as possible. The receiving rate matches closely with the sending rate, before reaching the imposed 1Mbps limit. This demonstrates that L1 capacity is not affected, even if L6 is maxed out. Figure 10(b) shows that the maximum rate L1 can achieve is always around 980kbps no matter how fast M3 is sending.

6.4 Device failure mitigation

We evaluate the recovery time in response to a hardware failure in ShadowNet. While Slice1 is running, M1 continuously sends packets to M2 via L1. We then physically yank the Ethernet cable on the Ethernet module ge-0/1/0, triggering an SNMP LinkDown trap message and the subsequent reconfiguration activity. A separate interface (not shown in the figure) is found to be usable, then automatically configured to resurrect the down links. Figure 9(b) shows the packet rate that M2 observes. The downtime is about 7.7 seconds, mostly spent on effecting router configuration change. Failure detection is fast due to continuous SNMP messages, and similarly controller processing takes less than 100ms. This exemplifies the benefit of strong isolation in ShadowNet, as the physical instantiation is dynamically replaced using the previous IP and OSPF configuration, leaving the user perceived slice intact after a short interruption. To further reduce the recovery time, the ShadowNet controller can spread a UsrLink’s instantiation onto multiple physical interfaces, each of which provides a portion of the bandwidth independently.

7 Related work

ShadowNet has much in common with other test/trial networks [10, 27, 22]. However, to our knowledge, ShadowNet is the first platform to exploit recent advances in the capabilities of networking equipment to provide a sharable, composable and programmable infrastructure using carrier-grade equipment running on a production ISP network. This enables a distinct emphasis shift from experimentation/prototyping (enabled by other test networks), to service trial/deployment (enabled by ShadowNet). The fact that ShadowNet utilizes production quality equipment frees us from having to deal with low-level virtualization/partitioning mechanisms, which typically form a significant part of other sharable environments.

A similar service deployment incentive to that espoused by ShadowNet was advocated in [21]. Their service definition is, however, narrower than ShadowNet’s scope which also includes network layer services. Amazon’s EC2 provides a platform for rapid and flexible edge service deployment with a low cost [1]. This platform only rents computing machines with network access, lacking the ability to control the networking aspects of service testing, or indeed network infrastructure of any kind. PLayer [14] is designed to provide a flexible and composable switching layer in data-center environment. It achieves dynamic topology change with low cost; however, it is not based on commodity hardware.

Alimi et al. proposed the idea of shadow configuration [8], a new set of configuration files that first run in parallel with the existing configuration and then are either committed or discarded. The shadow configuration can be evaluated using real traffic load. The downside is that the separation between the production network and the shadowed configuration may not be strongly guaranteed. This technique requires significant software and hardware modification on proprietary network devices.

We heavily rely on hardware-based and software-based virtualization support [6] in the realization of ShadowNet, for example virtual machines [5] and Juniper’s logical router [16]. The isolation between the logical functionality and the physical resource can be deployed to achieve advanced techniques, like router migration in VROOM [26] and virtual machine migration [20, 12], which can be used by ShadowNet.

8 Conclusion

In this paper, we propose an architecture called ShadowNet, designed to accelerate network change in the form of new network services and sophisticated network operation mechanisms. Its key property is that the infrastructure is connected to, but functionally separated from a production network, thus enabling more realistic service testing. The fact that production-grade devices are used in ShadowNet greatly improves the fidelity and realism achieved. In the design and implementation of ShadowNet, we created strong separation between the user-level representations and the physical-level instantiation, enabling dynamic composition of user-specified topologies, intelligent resource management and transparent failure mitigation. Though ShadowNet currently provides primitives mainly for service testing purposes, as a next step, we seek to broaden the applicability of ShadowNet, in particular, to merge the control framework into the production network for allowing service deployment.

Acknowledgment: We wish to thank our shepherd Jaeyeon Jung as well as the anonymous reviewers for their valuable feedback on this paper.

References[1] Amazon Elastic Compute Cloud. http://aws.amazon.

com/ec2/.[2] FEDERICA: Federated E-infrastructure Dedicated to European

Researchers Innovating in Computing network Architectures.http://www.fp7-federica.eu/.

[3] GENI: Global Environment for Network Innovations. http://www.geni.net/.

[4] Traffic Control HOWTO. http://linux-ip.net/articles/Traffic-Control-HOWTO/.

[5] VirtualBox. http://www.virtualbox.org.

[6] K. Adams and O. Agesen. A comparison of software and hardware techniques for x86 virtualization. In Proceedings of the 12th international conference on Architectural support for programming languages and operating systems, 2006.

[7] M. Agrawal, S. Bailey, A. Greenberg, J. Pastor, P. Sebos, S. Seshan, K. van der Merwe, and J. Yates. Routerfarm: Towards a dynamic, manageable network edge. SIGCOMM Workshop on Internet Network Management (INM), September 2006.

[8] R. Alimi, Y. Wang, and Y. R. Yang. Shadow configuration as a network management primitive. In Proceedings of ACM SIGCOMM, Seattle, WA, August 2008.

[9] H. Alzoubi, S. Lee, M. Rabinovich, O. Spatscheck, and J. Van der Merwe. Anycast CDNs Revisited. 17th International World Wide Web Conference, April 2008.

[10] A. Bavier, N. Feamster, M. Huang, L. Peterson, and J. Rexford. In VINI veritas: realistic and controlled network experimentation. SIGCOMM Comput. Commun. Rev., 36(4):3–14, 2006.

[11] Cisco Systems. MPLS VPN Carrier Supporting Carrier. http://www.cisco.com/en/US/docs/ios/12_0st/12_0st14/feature/guide/csc.html.

[12] C. Clark, K. Fraser, S. Hand, J. G. Hansen, E. Jul, C. Limpach, I. Pratt, and A. Warfield. Live migration of virtual machines. In NSDI'05: Proceedings of the 2nd conference on Symposium on Networked Systems Design & Implementation, 2005.

[13] S. Ghemawat, H. Gobioff, and S.-T. Leung. The google file system. SIGOPS Oper. Syst. Rev., 37(5):29–43, 2003.

[14] D. A. Joseph, A. Tavakoli, and I. Stoica. A policy-aware switching layer for data centers. SIGCOMM Comput. Commun. Rev., 38(4), 2008.

[15] Juniper Networks. Configuring Interprovider and Carrier-of-Carriers VPNs. http://www.juniper.net/.

[16] Juniper Networks. Juniper Logical Routers. http://www.juniper.net/techpubs/software/junos/junos85/feature-guide-85/id-11139212.html.

[17] Juniper Networks. Juniper Networks JCS 1200 Control System Chassis. http://www.juniper.net/products/tseries/100218.pdf.

[18] Juniper Networks. Juniper Partner Solution Development Platform. http://www.juniper.net/partners/osdp.html.

[19] Juniper Networks. JUNOS 9.2 Policy Framework Configuration Guide. http://www.juniper.net/techpubs/software/junos/junos92/swconfig-policy/frameset.html.

[20] M. Nelson, B.-H. Lim, and G. Hutchins. Fast transparent migration for virtual machines. In ATEC '05: Proceedings of the annual conference on USENIX Annual Technical Conference, pages 25–25, Berkeley, CA, USA, 2005. USENIX Association.

[21] L. Peterson, T. Anderson, D. Culler, and T. Roscoe. A Blueprint for Introducing Disruptive Technology Into the Internet. In Proc. of ACM HotNets, 2002.

[22] L. Peterson, A. Bavier, M. E. Fiuczynski, and S. Muir. Experiences building planetlab. In OSDI '06: Proceedings of the 7th symposium on Operating systems design and implementation. USENIX Association, 2006.

[23] L. Peterson, S. Shenker, and J. Turner. Overcoming the Internet Impasse through Virtualization. Proc. of ACM HotNets, 2004.

[24] J. Turner and N. McKeown. Can overlay hosting services make ip ossification irrelevant? PRESTO: Workshop on Programmable Routers for the Extensible Services of TOmorrow, May 2007.

[25] J. E. Van der Merwe et al. Dynamic Connectivity Management with an Intelligent Route Service Control Point. Proceedings of ACM SIGCOMM INM, October 2006.

[26] Y. Wang, E. Keller, B. Biskeborn, J. van der Merwe, and J. Rexford. Virtual routers on the move: live router migration as a network-management primitive. SIGCOMM Comput. Commun. Rev., 38(4), 2008.

[27] B. White, J. Lepreau, L. Stoller, R. Ricci, S. Guruprasad, M. Newbold, M. Hibler, C. Barb, and A. Joglekar. An Integrated Experimental Environment for Distributed Systems and Networks. In Proc. of the Fifth Symposium on Operating Systems Design and Implementation, 2002.