Roadrunner: Hardware and Software Overview · 2009. 1. 14. · Roadrunner hardware overview This...


ibm.com/redbooks Redpaper


Roadrunner: Hardware and Software Overview

Dr. Andrew Komornicki

Gary Mullen-Schulz

Deb Landon

Review components that comprise the Roadrunner supercomputer

Understand Roadrunner hardware components

Learn about Roadrunner system software

International Technical Support Organization

Roadrunner: Hardware and Software Overview

January 2009

REDP-4477-00

© Copyright International Business Machines Corporation 2009. All rights reserved.
Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

First Edition (January 2009)

This edition applies to the Roadrunner computing system.

Note: Before using this information and the product it supports, read the information in “Notices” on page v.

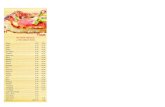

Contents

Notices
  Trademarks

Preface
  The team that wrote this paper
  Become a published author
  Comments welcome

Chapter 1. Roadrunner hardware overview
  1.1 What Roadrunner is
    1.1.1 A historical perspective
  1.2 Roadrunner hardware components
    1.2.1 TriBlade: a unique concept
    1.2.2 IBM BladeCenter QS22
    1.2.3 IBM BladeCenter LS21
  1.3 Rack configurations
    1.3.1 Compute node rack
    1.3.2 Compute node and I/O rack
    1.3.3 Switch and service rack
  1.4 The Connected Unit
  1.5 Networks
    1.5.1 Networks within a Connected Unit cluster
    1.5.2 Networks between Connected Unit clusters

Chapter 2. Roadrunner software overview
  2.1 Roadrunner components
    2.1.1 Compute node (TriBlade)
    2.1.2 I/O node
    2.1.3 Service node
    2.1.4 Master (management) node
  2.2 Cluster boot sequence
    2.2.1 Boot scenarios
  2.3 xCAT
  2.4 How applications are written and executed
    2.4.1 Application core
    2.4.2 Offloading logic

Appendix A. The Cell Broadband Engine (Cell/B.E.) processor
  Background
  The processor elements
  The Element Interconnect Bus
  Memory Flow Controller

Glossary

Abbreviations and acronyms

Related publications
  IBM Redbooks
  Other publications
  Online resources
  How to get Redbooks

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to: IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

AS/400®
BladeCenter®
Blue Gene/L™
Blue Gene®
Domino®
GPFS™
IBM PowerXCell™
IBM®
iSeries®
PartnerWorld®
Power Architecture®
POWER3™
POWER5™
PowerPC®
Redbooks®
Redbooks (logo) ®
RS/6000®
System i®
WebSphere®

The following terms are trademarks of other companies:

AMD, AMD Opteron, HyperTransport, the AMD Arrow logo, and combinations thereof, are trademarks of Advanced Micro Devices, Inc.

InfiniBand, and the InfiniBand design marks are trademarks and/or service marks of the InfiniBand Trade Association.

Cell Broadband Engine and Cell/B.E. are trademarks of Sony Computer Entertainment, Inc., in the United States, other countries, or both and are used under license therefrom.

Java, Sun, and all Java-based trademarks are trademarks of Sun Microsystems, Inc. in the United States, other countries, or both.

Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

Intel Pentium, Intel, Pentium, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.


Preface

This IBM® Redpaper publication provides an overview of the hardware and software components that constitute a Roadrunner system. This includes the chips, cards, and other hardware that make up a Roadrunner Connected Unit, as well as the peripheral systems required to run applications. It also includes a brief description of the software used to manage and run the system.

The team that wrote this paper

This publication was produced by a team of IBM specialists working in collaboration with the International Technical Support Organization (ITSO), Rochester Center.

Dr. Andrew Komornicki is an accomplished computational scientist with many years of experience. Prior to joining IBM, his career included independent research, scientific management, government service, and work in the computer industry. During the 1990s, he spent two years as a rotator at the National Science Foundation as a program director, where he co-managed the program in computational chemistry. As a computational scientist, he also spent four years as the chair of the allocation committee at the San Diego Supercomputer Center. He has consulted extensively in both the computer and chemical industries. Upon his return from Washington, he spent several years at Sun™ Microsystems, where he worked as a business development executive tasked with developing vertical markets in the chemistry and pharmaceutical sectors. Three years ago, he joined the Advanced Technical Support group at IBM in the role of supporting scientific computing in the High Performance Computing (HPC) arena. His duties have included support of large scale procurements, benchmarks, and some software contributions.

Gary Mullen-Schulz is a Consulting IT Specialist at the ITSO, Rochester Center. He leads the team responsible for producing Roadrunner documentation, and was the primary author of IBM System Blue Gene Solution: Application Development, SG24-7179. Gary also focuses on Java™ and WebSphere®. He is a Sun Certified Java Programmer, Developer and Architect, and has three issued patents.

Deb Landon is an IBM Certified Senior IT Specialist in the IBM ITSO, Rochester Center. Debbie has been with IBM for 25 years, working first with the S/36 and then the AS/400®, which has since evolved to the IBM System i® platform. Before joining the ITSO in November of 2000, Debbie was a member of the PartnerWorld® for Developers iSeries® team, supporting IBM Business Partners in the area of Domino® for iSeries.

Thanks to the following people for their contributions to this project:

Bill Brandmeyer
Mike Brutman
Chris Engel
Susan Lee
Dave Limpert
Camille Mann
Andrew Schram
IBM Rochester

Prashant Manikal
Cornell Wright
IBM Austin

Debbie Landon
Wade Wallace
International Technical Support Organization, Rochester Center

Become a published author

Join us for a two- to six-week residency program! Help write a book dealing with specific products or solutions, while getting hands-on experience with leading-edge technologies. You will have the opportunity to team with IBM technical professionals, Business Partners, and Clients.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus, you will develop a network of contacts in IBM development labs, and increase your productivity and marketability.

Learn more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this paper or other IBM Redbooks® in one of the following ways:

• Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

• Send your comments in an e-mail to:

• Mail your comments to:

IBM Corporation, International Technical Support Organization
Dept. HYTD Mail Station P099
2455 South Road
Poughkeepsie, NY 12601-5400

Chapter 1. Roadrunner hardware overview

This chapter describes the hardware components that make up the Roadrunner system. Specifically, it examines the components of a Connected Unit (CU) and then discusses how the CUs are tied together to create a complete Roadrunner cluster.

Note: This IBM Redpaper publication is not intended to be a detailed analysis, but rather a “big picture” discussion meant to acquaint the reader with the Roadrunner system.


1.1 What Roadrunner is

Roadrunner is the first general purpose computer system to reach the petaflop milestone. On June 10, 2008, IBM announced that this supercomputer had sustained a record-breaking petaflop, or 10^15 floating point operations per second, as measured by the Linpack benchmark. As a result of this achievement, Roadrunner became the world’s fastest supercomputer.

Roadrunner was designed, manufactured, and tested at the IBM facility in Rochester, Minnesota. The actual initial petaflop run was done in Poughkeepsie, New York. Its final destination is the Los Alamos National Laboratory (LANL) in New Mexico, which will use this system for a variety of scientific efforts. Most notably, Roadrunner is the latest tool used by the National Nuclear Security Administration (NNSA) to ensure the safety and reliability of the US nuclear weapons stockpile.

This computer system has a number of unique characteristics. The most notable are its sheer size and the fact that it is the first modern heterogeneous system of its kind. As a petascale design, the Roadrunner system has the fewest compute nodes and the fewest cores of any of the outstanding designs considered to date. In a nutshell, the attributes of this system can be summarized as follows:

• Roadrunner is a cluster of clusters.

The fundamental building block of the Roadrunner system is a Connected Unit (CU). As originally designed, Roadrunner would have 18 such connected units, of which 17 have been delivered to LANL for the final system configuration. Roadrunner is made up of approximately 6500 AMD™ dual-core processors coupled with 12,240 Cell Broadband Engine™ (Cell/B.E.™) processors. The total peak (theoretical) performance of this hybrid system is in excess of 1.3 petaflops. The memory on this system consists of a total of 98 TB equally distributed between the Opteron and the Cell/B.E. nodes.

Each CU is made up of 180 compute nodes and 12 I/O nodes. A unique aspect of the Roadrunner design is the creation of a TriBlade as a fundamental building block for the CU. Each TriBlade consists of an AMD Opteron™ blade and two Cell/B.E. IBM BladeCenter® QS22 blades. The Opteron blade contains two dual-core processors, while the Cell/B.E. blades each contain two new Cell/B.E. eDP (double precision) processors. This architecture allows for a one-to-one mapping of Opteron cores to Cell/B.E. processors. As discussed in 1.2.1, “TriBlade: a unique concept” on page 5, this design architecture creates a master-subordinate relationship between the Opterons and the Cell/B.E. processors. Each Opteron core is connected to a Cell/B.E. chip through a dedicated PCIe link. Communication between Opteron nodes is accomplished through an extensive InfiniBand® network.

• Fedora Linux® is the operating system of choice for this system.

System management of this cluster of clusters is accomplished with the xCAT cluster management software tools.

It is worthwhile to note some of the physical characteristics of this system. The entire system consists of 278 racks that occupy approximately 5000 square feet of floor space. The weight of this system is approximately 500,000 pounds, or 250 tons. The networking required for both the compute and management tasks consists of 55 miles of InfiniBand (IB) cables. Lastly, even though the system consumes 2.4 MW of power, it is very energy efficient, delivering almost 437 megaflops per watt.
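The system-wide figures quoted in this section can be cross-checked from the per-unit counts. The following Python sketch is illustrative only. The constant names are hypothetical, the 32 GB per TriBlade figure is taken from 1.2.1 (16 GB of Opteron memory plus 16 GB of Cell/B.E. memory), and the sketch assumes that each I/O node carries two Opteron processors like a compute node, which this paper does not state.

```python
# Cross-check of the system totals quoted in the text, using per-unit counts.
CONNECTED_UNITS = 17            # CUs delivered to LANL
COMPUTE_NODES_PER_CU = 180      # each compute node is one TriBlade
IO_NODES_PER_CU = 12
OPTERONS_PER_NODE = 2           # dual-core Opteron processors per Opteron blade
CELLS_PER_TRIBLADE = 4          # two QS22 blades, two Cell/B.E. processors each
MEMORY_PER_TRIBLADE_GB = 32     # 16 GB Opteron + 16 GB Cell/B.E. (see 1.2.1)

triblades = CONNECTED_UNITS * COMPUTE_NODES_PER_CU
cell_processors = triblades * CELLS_PER_TRIBLADE
compute_opterons = triblades * OPTERONS_PER_NODE
# Assumption (not stated in the paper): I/O nodes also hold two Opterons each.
io_opterons = CONNECTED_UNITS * IO_NODES_PER_CU * OPTERONS_PER_NODE
total_memory_tb = triblades * MEMORY_PER_TRIBLADE_GB / 1000  # decimal terabytes

# Sustained rate implied by 437 megaflops per watt at 2.4 MW, in petaflops.
implied_petaflops = 437e6 * 2.4e6 / 1e15

print(triblades, cell_processors, compute_opterons + io_opterons)
print(round(total_memory_tb, 1), round(implied_petaflops, 2))
```

Under these assumptions the totals come to 3,060 TriBlades, 12,240 Cell/B.E. processors, 6,528 Opteron processors, and about 98 TB of memory, in line with the figures in the text. The efficiency and power figures together imply a sustained rate of roughly 1.05 petaflops.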

Roadrunner holds a unique position in the history of scientific computing. It was over ten years ago that the first teraflop (10^12 floating point operations per second) computer was built. In 1997, a computer consisting of 7000+ Intel® Pentium® II processors sustained a teraflop on the Linpack benchmark. Roadrunner in 2008 has demonstrated a thousandfold increase in sustained compute performance.

1.1.1 A historical perspective

Machines of Roadrunner’s size and capability are the direct result of the scientific needs of the weapons-physics communities. In October 1992, the United States (U.S.) began a moratorium that banned all nuclear testing, above and below ground. Prior to this moratorium, the U.S. nuclear weapons stockpile was maintained through a combination of underground nuclear testing and the development of new weapons systems. When theory and experiment were combined, the Department of Energy could rely on much simpler models than those needed today. Without nuclear testing, weapons scientists must rely much more heavily on sophisticated hardware and software to simulate the complex aging process of both weapons systems and their components.

Established in 1995, the Advanced Simulation and Computing Program (ASC) is an integral part of the Department of Energy's National Nuclear Security Administration (NNSA) shift in emphasis from test-based to simulation-based programs. Under the ASC, computer simulation capabilities are continually developed to analyze and predict the performance, safety, and reliability of nuclear weapons and to certify their functionality. All of this work is integrated into the three weapons laboratories:

• Los Alamos National Laboratory (LANL)
• Lawrence Livermore National Laboratory (LLNL)
• Sandia National Laboratories (SNL)

The predecessor of the ASC was the Accelerated Strategic Computing Initiative (known as the ASCI program), which was established in direct response to the National Defense Authorization Act of 1994. In the absence of nuclear testing, this act required the Department of Energy to:

• Support a focused multifaceted program to increase the understanding of the existing nuclear stockpile.

• Predict, detect, and evaluate potential problems associated with the aging of the nuclear stockpile.

• Maintain the science and engineering institutions needed to support the national nuclear deterrent, now and in the future.

In response to this mandate, the ASCI program set the following objectives to meet the needs and requirements of the Stockpile Stewardship program. The objectives, which cover performance, safety, reliability, and renewal, were articulated in the ASCI program plan, published by the Department of Energy Defense Programs in January 2000:

• Create predictive simulations of nuclear weapon systems to analyze behavior and assess performance in an environment without nuclear testing.

• Predict with high certainty the behavior of full weapon systems in complex accident scenarios.

• Achieve sufficient, validated predictive simulations to extend the lifetime of the stockpile, predict failure mechanisms, and reduce routine maintenance.

• Use virtual prototyping and modeling to understand how new production processes and materials affect performance, safety, reliability, and aging. This understanding helps define the right configuration of production and testing facilities necessary for managing the stockpile throughout the next several decades.

Note: The name Roadrunner was chosen by Los Alamos National Laboratory and is not a product name of the IBM Corporation. This supercomputer was designed and developed for the Department of Energy and Los Alamos National Laboratory under the project name Roadrunner. The project was named after the state bird of New Mexico.

Throughout the history of this program, the IBM Corporation has been a key partner of the Department of Energy's National Nuclear Security Administration (NNSA) program. Here are several historical examples:

• In 1998, IBM delivered the ASCI Blue Pacific system, which consisted of 5,856 PowerPC® 604e microprocessors. The theoretical peak performance of this system was 3.8 teraflops.

• In 2000, IBM delivered the ASCI White system. This computer system was based on the IBM RS/6000® computer, which contained IBM POWER3™ nodes running at 375 MHz. This cluster consisted of 512 nodes, each of which had 16 processors, for a total of 8,192 processors. The power requirements for this machine consisted of 3 MW for the computer and an additional 3 MW for cooling. The theoretical peak processing power was 12.3 teraflops, with a Linpack performance of 7.2 teraflops.

• In 2005, IBM delivered and installed the ASC Purple system at Lawrence Livermore National Laboratory. This system was a 100 teraflop machine and was the successful realization of a goal set a decade earlier (1996) to deliver a 100 teraflop machine within the 2004 to 2005 time frame.

ASC Purple is based on the symmetric shared memory IBM POWER5™ architecture. The combined system contains approximately 12,500 POWER5 processors and requires 7.5 MW of electrical power for both the computer and cooling equipment.

• Another machine in the ASC program is the IBM System Blue Gene/L™ machine delivered by IBM to Lawrence Livermore National Laboratory. The Blue Gene® architecture is unique in that it allows for a very dense packing of compute nodes. A single Blue Gene rack contains 1024 nodes. On March 24, 2005, the U.S. Department of Energy announced that the Blue Gene/L installation at Lawrence Livermore National Laboratory had achieved a speed of 135 teraflops on a system consisting of 32 racks. On October 27, 2005, Lawrence Livermore National Laboratory and IBM announced that Blue Gene/L had produced a Linpack benchmark result that exceeded 280 teraflops. This system consisted of 65,536 compute nodes housed in 64 Blue Gene racks.

As with each of the systems described above, the Roadrunner project is a partnership with IBM. The original contract was signed in September 2006 and was planned in three phases. In phase 1, a base system consisting of Opteron nodes was delivered. A hybrid node prototype system was projected for phase 2. The delivery of a final hybrid system, one that would achieve a sustained petaflop in Linpack performance, was projected for phase 3.

For more information, refer to the Advanced Simulation and Computing Web site at:

http://www.sandia.gov/NNSA/ASC/about.html

Note: At the time these goals were set, computers were still at the gigaflop level and were still two years away from the realization of the first teraflop machine.


1.2 Roadrunner hardware components

A simple way to describe the Roadrunner system is that it is a heterogeneous cluster of clusters, each of which is accelerated by Cell/B.E. processors. The unique feature of this design is that the Cell/B.E. processors are attached to each compute node, rather than forming a simple cluster of Cell/B.E. processors. A collection of such compute and I/O nodes, all connected through a high speed switch fabric, makes up a scalable unit known as a Connected Unit (CU).

The fundamental building block of a CU is the compute node, each of which is a TriBlade. The TriBlade is an original design concept created for the Roadrunner system and allows for the integration of Cell/B.E. and Opteron blades. Architecturally, this design allows for the incorporation of these TriBlades into an IBM BladeCenter chassis.

1.2.1 TriBlade: a unique concept

The TriBlade makes up what is called a hybrid compute node. The components of this node consist of an IBM LS21 Opteron blade, two IBM BladeCenter QS22 Cell/B.E. blades, and a fourth blade that houses the communications fabric for the compute node. This expansion blade connects the two QS22 blades through four PCI Express x8 links to the Opteron blade and provides each node with an InfiniBand 4x DDR cluster interconnect. Figure 1-1 shows a schematic of a TriBlade.

Figure 1-1 TriBlade schematic


The node design of the TriBlade offers a number of important characteristics. Since each node is accelerated by Cell/B.E. processors, by design there is one Cell/B.E. chip for each Opteron core. The TriBlade is populated with 16 GB of Opteron memory and an equal amount of Cell/B.E. memory. Since the new Cell/B.E. eDP processors are capable of delivering 102.4 gigaflops of peak performance, each TriBlade node is capable of approximately 400 gigaflops of double precision compute power. For additional information about the Cell/B.E. processor, see Appendix A, “The Cell Broadband Engine (Cell/B.E.) processor” on page 27.

The design of the TriBlade presents the user with a very specific memory hierarchy. The Opteron processors establish a master-subordinate relationship with the Cell/B.E. processors. Each Opteron blade contains 4 GB of memory per core, resulting in 8 GB of shared memory per socket. The Opteron blade thus contains 16 GB of NUMA shared memory per node.

Each Cell/B.E. processor contains 4 GB of shared memory, resulting in 8 GB of shared memory per blade. In total, the Cell/B.E. blades contain 16 GB of distributed memory per TriBlade node. It is important to note that not only is there a one-to-one mapping of Opteron cores to Cell/B.E. processors, but also each node consists of a distribution of equal memory among each of these components.
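The per-node figures above follow directly from the per-core and per-processor numbers. The short Python sketch below works through that arithmetic; the constant names are hypothetical and chosen only for illustration.

```python
# Per-TriBlade resources derived from the per-core and per-processor figures.
OPTERON_SOCKETS = 2
CORES_PER_SOCKET = 2
GB_PER_OPTERON_CORE = 4
CELL_BLADES = 2
CELLS_PER_BLADE = 2
GB_PER_CELL = 4
CELL_PEAK_GFLOPS = 102.4   # double precision, per Cell/B.E. eDP processor

opteron_cores = OPTERON_SOCKETS * CORES_PER_SOCKET
cells = CELL_BLADES * CELLS_PER_BLADE
opteron_memory_gb = opteron_cores * GB_PER_OPTERON_CORE
cell_memory_gb = cells * GB_PER_CELL
peak_gflops = cells * CELL_PEAK_GFLOPS

# One Cell/B.E. processor per Opteron core, with equal memory on each side.
assert opteron_cores == cells
assert opteron_memory_gb == cell_memory_gb == 16

print(opteron_cores, cells, opteron_memory_gb + cell_memory_gb, peak_gflops)
```

Four Cell/B.E. processors at 102.4 gigaflops each give 409.6 gigaflops, which is the "approximately 400 gigaflops" quoted for a TriBlade, and the two memory pools add up to 32 GB per node.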

To sustain this compute power, the connectivity within each node consists of four PCI Express x8 links, each capable of a 2 GB/s transfer rate with a 2 microsecond latency. The expansion slot also contains the InfiniBand interconnect, which allows communications to the rest of the cluster. The InfiniBand 4x DDR interconnect is rated at 2 GB/s with a 2 microsecond latency.
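To get a feel for what these link ratings mean for an application, a common first-order estimate treats transfer time as latency plus message size divided by bandwidth. This model is an assumption made here for illustration and is not taken from this paper. The function name and defaults in the following Python sketch are hypothetical.

```python
def transfer_time_us(message_bytes: int,
                     bandwidth_gb_per_s: float = 2.0,
                     latency_us: float = 2.0) -> float:
    """Estimate one-way transfer time in microseconds.

    Simple model (an assumption, not from the paper):
    time = latency + size / bandwidth.
    """
    bytes_per_us = bandwidth_gb_per_s * 1e9 / 1e6  # GB/s -> bytes per microsecond
    return latency_us + message_bytes / bytes_per_us


for size in (64, 4096, 1_000_000):
    print(size, round(transfer_time_us(size), 3))
```

Under this model, a 64-byte message is dominated by the 2 microsecond latency, a 4 KB message spends about as long in flight as it does waiting on latency, and a 1 MB message is almost entirely bandwidth bound. Real links will deviate from this estimate because of protocol overhead and contention.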

1.2.2 IBM BladeCenter QS22

The IBM BladeCenter QS22 is based on the IBM PowerXCell™ 8i processor, a new generation processor based on the Cell/B.E. architecture. In contrast to its predecessors, the QS20 and QS21, the QS22 is based on the second generation processor of the Cell/B.E. architecture and offers single instruction multiple data (SIMD) vector capability along with strong parallelization. It performs double precision floating point operations at five times the speed of the previous generations of Cell/B.E. processors.

Due to its parallel nature and extraordinary computing speed, the QS22 is ideal for use in scientific applications, which is why it was chosen as an integral part of the Roadrunner system by IBM and Los Alamos. The QS22 is a single-wide blade server that offers an SMP with shared memory and two Cell/B.E. processors in a single blade enclosure.

Figure 1-2 on page 7 provides an illustration of the IBM BladeCenter QS22. Features of the QS22 include:

• Two 3.2 GHz IBM PowerXCell 8i processors
• Up to 32 GB of PC2-6400 800 MHz DDR2 memory
• 460 single-precision gigaflops per blade (peak)
• 217 double-precision gigaflops per blade (peak)
• Integrated dual 1 Gb Ethernet
• IBM Enhanced I/O Bridge chip
• Serial Over LAN

The QS22 is based on the 64-bit IBM PowerXCell 8i processor. This processor operates at 3.2 GHz. Each of the eight SIMD vector processors is capable of producing four floating point results per clock period. The memory subsystem on the QS22 consists of eight DIMM slots, enabling configurations from 4 GB up to 32 GB of ECC memory.
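As a rough check on the figures above, the following Python sketch multiplies out the per-clock result rate stated in the text. It counts floating point results produced by the eight SIMD vector processors only. The published peak gigaflops figures are higher than this count; the difference presumably reflects how operations are counted (for example, a fused multiply-add counted as two operations) and the contribution of the remaining processor element, but that interpretation is an assumption and is not stated in this paper.

```python
# Result rate of the SIMD vector processors, from figures in the text.
CLOCK_HZ = 3.2e9            # processor clock
SIMD_UNITS = 8              # SIMD vector processors per PowerXCell 8i
RESULTS_PER_CLOCK = 4       # floating point results per unit per clock
PROCESSORS_PER_BLADE = 2    # two processors per QS22

results_per_processor = CLOCK_HZ * SIMD_UNITS * RESULTS_PER_CLOCK
results_per_blade = results_per_processor * PROCESSORS_PER_BLADE

print(f"{results_per_processor / 1e9:.1f} G results/s per processor")
print(f"{results_per_blade / 1e9:.1f} G results/s per blade")
```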

For additional information about the Cell/B.E. processor, see Appendix A, “The Cell Broadband Engine (Cell/B.E.) processor” on page 27.

Figure 1-2 IBM BladeCenter QS22

For more information about the QS22, see the IBM BladeCenter QS22 Web page at:

http://www.ibm.com/systems/bladecenter/hardware/servers/qs22/index.html

1.2.3 IBM BladeCenter LS21

The IBM BladeCenter LS21 is a single-width AMD Opteron-based server. The LS21 blade server supports up to two of the dual-core 2200 series AMD Opteron processors combined with up to 32 GB of ECC memory and one fixed SAS HDD.

The memory used in the LS21 is DDR2 and is ECC protected. The general memory configuration for the LS21 has to follow these guidelines:

• A total of eight DIMM slots (four per processor socket). Two of these slots (1 and 2) are preconfigured with a pair of DIMMs.

• Because memory is 2-way interleaved, the memory modules must be installed in matched pairs. However, one DIMM pair is not required to match the other in capacity.

• A maximum of 32 GB of installed memory is achieved when all DIMM sockets are populated with 4 GB DIMMs.

• For each installed microprocessor, a set of four DIMM sockets is enabled.

Important: The implementation chosen for the Roadrunner system consists of the standard blade populated with 16 GB of DDR2 memory. As with the Opteron blades, all of the Cell/B.E. based blades are diskless.

Important: The configuration used for the Roadrunner system contains two AMD Opteron processors running at 1.8 GHz, 16 GB of ECC memory, and no hard disk. The diskless configuration is an important implementation design, which eliminates additional moving parts and potential points of failure for a system with so many thousands of nodes.
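The DIMM population guidelines for the LS21 can be expressed as a small validation routine. The following Python sketch is illustrative only: the function name and the representation of a configuration as a list of DIMM pairs are assumptions, the mapping of pairs to physical slot numbers is not modeled, and the supported DIMM sizes are taken from the feature list in this section.

```python
SUPPORTED_MB = {512, 1024, 2048, 4096}   # DIMM sizes listed for the LS21
PAIRS_PER_SOCKET = 2                     # four DIMM sockets per processor


def validate_dimms(pairs, processors=2):
    """Check a list of (size_mb, size_mb) DIMM pairs against the guidelines.

    Returns the total installed memory in MB, or raises ValueError.
    """
    if len(pairs) > PAIRS_PER_SOCKET * processors:
        raise ValueError("more DIMM pairs than enabled sockets")
    for first, second in pairs:
        if first != second:
            # 2-way interleaving requires both DIMMs in a pair to match.
            raise ValueError(f"pair ({first}, {second}) is not matched")
        if first not in SUPPORTED_MB:
            raise ValueError(f"unsupported DIMM size: {first} MB")
    return sum(first + second for first, second in pairs)


# Pairs may differ from each other in capacity, as long as each pair matches.
print(validate_dimms([(4096, 4096), (1024, 1024)]))   # 10240
print(validate_dimms([(4096, 4096)] * 4))             # 32768, the 32 GB maximum
```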

The processors used in these blades are standard low-power processors. The standard AMD Opteron processors draw a maximum of 95 W. Specially manufactured low-power processors operate at 68 W or less without any performance trade-offs. This power savings at the processor level, combined with the smarter power solution that IBM BladeCenter delivers, makes these blades very attractive for installations that are limited by power and cooling resources.

This blade is designed with power management capability to provide the maximum uptime possible. In extended thermal conditions, rather than shut down completely or fail, the LS21 automatically reduces the processor frequency to maintain acceptable thermal levels.

A standard LS21 blade server offers these features:

• Up to two high-performance, AMD Dual-Core Opteron processors.

• A system board containing eight DIMM connectors, supporting 512 MB, 1 GB, 2 GB, or 4 GB DIMMs.

• Up to 32 GB of system memory is supported with 4 GB DIMMs.

• A SAS controller, supporting one internal SAS drive (36 or 73 GB) and up to three additional SAS drives with optional SIO blade.

• Two TCP/IP Offload Engine enabled Gigabit Ethernet controllers (Broadcom 5706S) as standard, with load balancing and failover features.

• Support for concurrent KVM (cKVM) and concurrent USB/DVD (cMedia) through Advanced Management Module and an optional daughter card.

• Support for a Storage and I/O Expansion (SIO) unit.

Dual Gigabit Ethernet controllers are standard, providing high-speed data transfers and offering TCP/IP Offload Engine support, load-balancing, and failover capabilities. The version used for Roadrunner uses optional InfiniBand expansion cards, allowing high speed communication between nodes. The InfiniBand fabric installed with Roadrunner provides 4x DDR connections that have a theoretical peak of 2 GB per second.
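The 2 GB per second figure follows from the link parameters. The following Python sketch assumes the standard InfiniBand DDR values of 5 Gbit/s signaling per lane and 8b/10b line encoding; those two values are not stated in this paper.

```python
# Theoretical peak data rate of an InfiniBand 4x DDR link.
LANES = 4                    # "4x" link width
SIGNAL_GBIT_PER_LANE = 5.0   # DDR signaling rate per lane (assumed)
DATA_BITS, LINE_BITS = 8, 10 # 8b/10b encoding (assumed)

raw_gbit = LANES * SIGNAL_GBIT_PER_LANE
data_gbit = raw_gbit * DATA_BITS / LINE_BITS
data_gbyte = data_gbit / 8

print(f"Signaling rate: {raw_gbit:.0f} Gbit/s")
print(f"Data rate:      {data_gbit:.0f} Gbit/s = {data_gbyte:.0f} GB/s")
```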

Finally, the LS21 supports both the Windows® and Linux operating systems. The Roadrunner implementation uses the Fedora version of Linux.

Figure 1-3 on page 9 shows a schematic of the planar of an LS21.

Figure 1-3 LS21 planar

For more information about the LS21, see the IBM BladeCenter LS21 Web page at:

http://www.ibm.com/systems/bladecenter/hardware/servers/ls21/features.html

1.3 Rack configurations

TriBlades are combined into racks to create assemblies of hybrid compute nodes. In addition, some racks contain other components for other required functionality. There are three different rack types:

• Compute node rack
• Compute node and I/O rack
• Switch and service rack

In general, these racks look very similar. Each can hold a maximum of 12 TriBlades and some hold additional components.

1.3.1 Compute node rack

A compute node rack holds a total of 12 TriBlades, which means it holds 12 LS21s and 24 QS22s. A compute node rack looks similar to the picture shown in Figure 1-4.

Figure 1-4 Compute node rack

1.3.2 Compute node and I/O rack

A compute node and I/O rack contains 12 TriBlades, but also contains an IBM System x3655 (x3655) at the bottom of the rack. The x3655 performs input/output (I/O) services on behalf of the system. A compute and I/O node rack looks similar to the picture shown in Figure 1-5 on page 11.

The x3655 is a new rack-optimized server based on the AMD Opteron dual-core processor. The x3655 supports four processor sockets and 32 memory DIMM slots. The memory is 667 MHz DDR2, in sizes ranging from 512 MB to 4 GB per DIMM. This gives a total capacity of up to 128 GB of main system memory.

Note: The x3655 used in the Roadrunner system supports 16 GB or 32 GB of memory.

Figure 1-5 Compute and I/O node rack

1.3.3 Switch and service rack

The switch and service rack contains no TriBlades. This rack contains a Voltaire Grid Director ISR 9288 switch that is used to manage InfiniBand networking traffic. This is known in Roadrunner as a first-stage switch. See “First-stage InfiniBand switch” on page 14 for more information about its role and function.

You can learn more about the Voltaire switch technology on the Voltaire Web page at:

http://www.voltaire.com/Products/Grid_Backbone_Switches/Voltaire_Grid_Director_ISR_9288

In addition, this rack contains an IBM System x3655, which serves as the service node for the CU. The functions that the service node performs include the following:

• Holds the boot images used to IPL the Opteron and Cell/B.E. blades, as well as the I/O nodes.

• IPLs all elements in the CU when instructed to do so by the central management node.

A switch and service rack looks similar to the picture shown in Figure 1-6.

Figure 1-6 Switch and service rack

1.4 The Connected Unit

The Connected Unit (CU) is a core concept in the Roadrunner system. Groups of the various rack configurations discussed in 1.3, “Rack configurations” on page 9 are put together to create a single CU. Table 1-1 lists the racks that comprise a single CU.

Table 1-1 Racks making up a Connected Unit

| Rack type | Number of racks in the Connected Unit | Number of TriBlades in a rack | Total number of TriBlades |
|---|---|---|---|
| Compute node rack | 3 | 12 | 36 |
| Compute node and I/O rack | 12 | 12 | 144 |
| Switch and service rack | 1 | 0 | 0 |
| Total | 16 | N/A | 180 |

A CU can be thought of as a base cluster unit. The racks that make up a CU are connected to each other through first-stage switches. CUs are then tied together through second-stage switches to create a larger grid.

The size of a CU is largely determined by the capabilities of the first-stage switch. There are 180 TriBlades in a CU. This number of TriBlades means that a Connected Unit contains 180 AMD Opteron LS21s and 360 IBM BladeCenter QS22s. See Figure 1-7 on page 13.
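The totals for a Connected Unit can be reproduced from the rack counts. The following Python sketch uses the rack figures from Table 1-1 and the count of 17 CUs given in this chapter; the dictionary layout and variable names are illustrative.

```python
# rack type -> (racks per Connected Unit, TriBlades per rack)
RACKS = {
    "compute node rack": (3, 12),
    "compute node and I/O rack": (12, 12),
    "switch and service rack": (1, 0),
}
CUS_IN_SYSTEM = 17
QS22_PER_TRIBLADE = 2

racks_per_cu = sum(count for count, _ in RACKS.values())
triblades_per_cu = sum(count * blades for count, blades in RACKS.values())
ls21_per_cu = triblades_per_cu                       # one LS21 per TriBlade
qs22_per_cu = QS22_PER_TRIBLADE * triblades_per_cu
triblades_in_system = CUS_IN_SYSTEM * triblades_per_cu

print(f"Racks per CU:        {racks_per_cu}")
print(f"TriBlades per CU:    {triblades_per_cu}")
print(f"LS21 / QS22 per CU:  {ls21_per_cu} / {qs22_per_cu}")
print(f"TriBlades in system: {triblades_in_system}")
```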

Figure 1-7 Racks comprising a Connected Unit

1.5 Networks

Given the high number of racks and nodes in the Roadrunner system, it should come as no surprise that there are several different networks used to tie the system together. This section provides an overview of the different networks involved as well as their functional purpose.

1.5.1 Networks within a Connected Unit cluster

First-stage switches are used to connect all the racks making up a Connected Unit (CU) together and to allow the CU to communicate with the outside world (for example, a file system) and other CUs. The second-stage switches primarily serve as a hub to tie the 17 CUs together into a common computational system.

Note: As previously discussed in this chapter, the entire Roadrunner system or cluster is comprised of a total of 17 CUs.

[Figure 1-7 contents: one Connected Unit made up of 12 I/O + compute racks, 3 compute racks, and 1 switch and service rack.]

First-stage InfiniBand switch

As discussed in 1.3.3, “Switch and service rack” on page 11, each CU contains a rack with a Voltaire Grid Director ISR 9288 switch. This switch allows for 288 different InfiniBand inputs, which are used as shown in Table 1-2.

Table 1-2 Connections in and out from a first-stage switch

| Component | Number of connections | Purpose |
|---|---|---|
| TriBlades InfiniBand link | 180 | Connects the AMD Opteron nodes together to allow them to participate in a network. |
| InfiniBand links to second-stage switch | 96 | Allows the CUs to be tied together into a single network. |
| InfiniBand links to I/O nodes | 12 | Provides the hybrid compute nodes access to the file system for application input and output. |
| Total | 288 | |

InfiniBand Connected Unit

This network creates a “fat tree” that allows the AMD Opterons to communicate with each other using the industry-standard Message Passing Interface (MPI). It is built on top of the switched InfiniBand network. A “fat tree” is a special topology invented by Charles E. Leiserson of MIT. Unlike a traditional binary tree, a fat tree has “thicker branches” the closer you get to the tree’s root. In this way, you do not end up with a communications bottleneck at the root of the tree.

Figure 1-8 shows a traditional binary tree. Note that as messages flow up the tree, the single links to the root node can become a point of congestion.

Figure 1-8 Traditional binary tree

Figure 1-9 on page 15, on the other hand, shows a fat tree. Notice how the number of links between nodes increases as you get closer to the tree’s root. The number of links shown is just one example of a fat tree configuration; the actual number may be higher or lower between any two nodes depending on the given requirements.

Figure 1-9 Fat tree

Fat tree topologies are becoming quite popular in InfiniBand clusters. For more information about fat trees and their usage with InfiniBand, see the article Performance Modeling of Subnet Management on Fat Tree InfiniBand Networks using OpenSM, which is available at the following Web site:

http://nowlab.cse.ohio-state.edu/publications/conf-papers/2005/vishnu-fastos05.pdf
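The difference between the two topologies can be made concrete by counting how many leaf nodes share each uplink. The following Python sketch is a simplified model, not a description of the Roadrunner fabric: it assumes a binary branching factor and an idealized fat tree in which every node has one uplink per leaf beneath it. The function names are illustrative.

```python
def uplinks_per_node(depth, fat):
    """Uplinks from one node at each level to its parent.

    Level `depth` holds the leaves and level 1 sits directly below the root.
    In the plain binary tree every node has a single uplink. In this
    idealized fat tree a node has one uplink per leaf beneath it.
    """
    return {
        level: (2 ** (depth - level) if fat else 1)
        for level in range(depth, 0, -1)
    }


def oversubscription(depth, fat):
    """Leaves sharing each uplink, per level (1.0 means no contention)."""
    links = uplinks_per_node(depth, fat)
    return {level: 2 ** (depth - level) / links[level] for level in links}


for name, fat in (("binary tree", False), ("fat tree", True)):
    print(name, oversubscription(3, fat))
```

In the binary tree the ratio grows toward the root, which is the congestion point noted for Figure 1-8; in the idealized fat tree it stays at 1.0 at every level.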

10 Gigabit Ethernet file system LAN

Every CU has twelve I/O nodes, each of which has a single InfiniBand connection to the CU's InfiniBand switch. This allows the hybrid compute nodes (TriBlades) to retrieve and pass data to the I/O nodes over the InfiniBand network. The file system is connected through the I/O nodes, each of which has two 10 Gb links to the file system LAN.
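The port usage of a first-stage switch and the nominal file system bandwidth of a CU follow directly from these counts. The following Python sketch uses the figures given in this chapter (288 switch ports, 180 TriBlade links, 96 links to the second-stage switches, and twelve I/O nodes with one InfiniBand link and two 10 Gigabit Ethernet links each); the variable names are illustrative, and the bandwidth is a nominal link total, not a measured throughput.

```python
SWITCH_PORTS = 288          # ports on the first-stage switch
TRIBLADE_LINKS = 180        # one InfiniBand link per TriBlade
SECOND_STAGE_LINKS = 96     # uplinks to the second-stage switches
IO_NODES = 12               # one InfiniBand link per I/O node
ETHERNET_LINKS_PER_IO_NODE = 2
ETHERNET_GBIT_PER_LINK = 10

ports_used = TRIBLADE_LINKS + SECOND_STAGE_LINKS + IO_NODES
ports_free = SWITCH_PORTS - ports_used
file_system_gbit = IO_NODES * ETHERNET_LINKS_PER_IO_NODE * ETHERNET_GBIT_PER_LINK

print(f"Switch ports used: {ports_used} of {SWITCH_PORTS} ({ports_free} free)")
print(f"Nominal file system LAN bandwidth per CU: {file_system_gbit} Gbit/s")
```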

Gigabit Ethernet Control VLAN (CVLAN)

The 1 Gb Ethernet control VLAN is used to perform vital program and node control functions within each CU, such as Message Passing Interface (MPI) traffic required for program operation and communication.

Gigabit Ethernet Management VLAN (MVLAN)

The 1 Gb Ethernet Management VLAN is used to perform vital system management functions within each CU, such as passing the required operating system boot images from the CU's service node to the processors on the hybrid compute nodes and I/O nodes in order to IPL them.

PCI Express link between LS21 and Cell/B.E. blades

Each AMD Opteron has a one-to-one “master-subordinate” relationship with a Cell/B.E. processor. Although the Opterons participate in MPI communications with other Opteron nodes and access the file system through the I/O nodes, the Cell/B.E. processors only communicate with their “master” Opteron.

Important: This VLAN is used exclusively for control traffic; no user data flows across this network.

The link between the AMD Opteron and its associated Cell/B.E. processor is through a direct, point-to-point PCI-Express connection. As discussed in 1.2.3, “IBM BladeCenter LS21” on page 7, each LS21 has an expansion card installed, a Broadcom HT-2100. This expansion card allows for PCI-Express (PCIe) communications to a Cell/B.E. processor.

The Cell/B.E. blades have PCIe functionality built into them directly, so no extra expansion card is needed. Low-level device drivers have been written to enable communications across the PCIe link. Higher-level APIs, such as Data Communication and Synchronization (DaCS) and the Accelerated Library Framework (ALF), will flow across this PCIe connection to enable Opteron-to-Cell/B.E. communications.

For more information about MPI, DaCS, and ALF, see Chapter 2, “Roadrunner software overview” on page 19.

1.5.2 Networks between Connected Unit clusters

As discussed previously, there are several networks in place within a Connected Unit to provide for cluster management, MPI communications, file I/O, and Opteron-to-Cell/B.E. communications. This section discusses the connectivity between CUs that serve to create a grid, which from an application perspective appears as a single computational unit.

Second-stage InfiniBand switches

These InfiniBand switches serve as a way to interconnect the 17 CUs together to form a single system image. Like the first-stage switches, these are Voltaire Grid Director ISR 9288s. In this case, there are eight. Strictly speaking, only six units are actually mandatory; two are there for expansion and redundancy.

Figure 1-10 shows the role of the second-stage switches for Roadrunner. All connections to, from, and between switches are InfiniBand optical links.

Figure 1-10 Role of second stage InfiniBand switches in Roadrunner

[Figure 1-10 contents: Connected Units CU A through CU Q, each with 96 InfiniBand links to the second-stage switches.]

Gigabit Ethernet management VLAN (MVLAN)

The 1 Gb Ethernet management VLAN is the grid-wide system management network. It is used for booting, system control, and status determination operations between the management nodes and the various managed elements throughout the cluster. The MVLAN does not have direct network access to the “internals” of a CU (for example, the hybrid compute nodes and I/O nodes). Management operations to those nodes occur from the MVLAN to the CU's MVLAN through the service node to the desired target.

No user or application data flows across the MVLAN. Only system management and control traffic flows across this network.

Chapter 2. Roadrunner software overview

This chapter briefly describes the software used to run applications on the Roadrunner system.

Note: This IBM Redpaper publication is not intended to be a detailed analysis, but rather a “big picture” discussion meant to acquaint the reader with the Roadrunner system.

2.1 Roadrunner components

This section provides a brief explanation of the software that runs on the various components that comprise a Roadrunner system.

2.1.1 Compute node (TriBlade)

As described in 1.2.1, “TriBlade: a unique concept” on page 5, a TriBlade is made up of one IBM BladeCenter LS21 blade and two IBM BladeCenter QS22 blades. Each of these runs its own operating system image, but “shares” a common user application.

The following is the software that runs on the various components of the TriBlade:

• AMD Opteron LS21 for IBM BladeCenter

Each LS21 is standard except for the fact that it is diskless. The operating system is Fedora Linux. Since it is diskless, it is booted up from its Connected Unit’s service node.

• IBM BladeCenter QS22

Each QS22 is standard except for the fact that it is diskless. The operating system is Fedora Linux. Since it is diskless, it is booted up from its Connected Unit’s service node.

• Broadcom HT-2100 (PCIe adapter)

The dual Opteron host blade (LS21) is connected to the two QS22s through a PCI Express (PCIe) interconnect. Two HyperTransport™ x16 connections from the LS21 blade drive an expansion card containing two Broadcom HT-2100 HyperTransport to PCI Express bridge chips. Each Broadcom HT-2100 drives two PCI Express x8 connections to the two Axon Southbridge chips on one of the Cell Broadband Engine (Cell/B.E.) blades (QS22). This provides a dedicated PCIe x8 connection to each Cell/B.E. processor.

The PCIe interconnect is supported by a low-level device driver that provides direct memory access (DMA) and a remote memory mapped small message area (SMA). DMA operations can be started by calls to the device driver from programs on either the LS21 or the QS22. The device driver initiates the DMA operation using a DMA controller in the Axon Southbridge. The small message area provides regions of memory that can be accessed remotely by user space instructions without a context switch to the kernel or device driver interaction. There is a unique device driver instance on both the Opteron and the Cell/B.E. blade for each Axon Southbridge. A virtual Ethernet driver (also replicated per Axon) supports point-to-point communications between the Opteron and each Cell/B.E. processor.

Note: From an IBM BladeCenter Advanced Management Module (AMM) perspective, the TriBlade still appears as separate blades. In other words, it appears as one LS21 and two QS22s. The logical grouping of the LS21 and QS22s is handled through the xCAT management tools. See 2.3, “xCAT” on page 23 for more information.

2.1.2 I/O node

As mentioned previously in 1.3.2, “Compute node and I/O rack” on page 10, each I/O node is an IBM System x3655 server. I/O nodes are diskless and serve as “pipes” to the external file system across the 10 Gigabit Ethernet file system LAN.

Each I/O node runs Fedora Linux as its operating system. Since the node is diskless, it is booted up from its Connected Unit’s service node. The I/O node will run either the IBM GPFS™ or Panasas PanFS client to communicate with the external file system, depending on what file system software is running there.

2.1.3 Service node

Service nodes are standard IBM System x3655 Opteron-based servers and are diskless. There is one dedicated service node per Connected Unit, so the service node image can be updated directly from the master node over the management network (MVLAN) described in “Gigabit Ethernet management VLAN (MVLAN)” on page 17.

Service nodes obtain copies of the boot images for the I/O nodes and compute nodes from the master node. These images are refreshed on an as-needed basis. The images are loaded over the CVLAN (see “Gigabit Ethernet Control VLAN (CVLAN)” on page 15).

2.1.4 Master (management) node

The master node is a standard IBM System x3655 Opteron-based server and is booted from the local disk. The master node runs Fedora Linux.

2.2 Cluster boot sequence

The initial booting of the nodes is complicated by two factors in the Roadrunner system:

• All of the nodes except for the master node are diskless, so they must boot over the network.

• There are over 3,000 total nodes and 10,000 operating system images that need to be installed and booted.

There will be times when the entire system needs to be booted, and there will be times when only parts of the system need to be booted (while the rest of the system is still available but powered off). This places two distinct demands on the management network:

• It must be able to boot the entire system without causing timeouts on the management network such that no boot progress is being made.

• It must be able to boot substantial portions of the system without interfering with any status and control operations that are occurring on the running portion of the system.

Since the majority of nodes are diskless, a scalable way to move the boot images to each of the nodes is required. To this end, a hierarchy of management nodes has been created.

The solution to this concern is to use the Bootstrap Protocol (BOOTP) together with Trivial File Transfer Protocol (TFTP) subnet multicast to boot the diskless LS21 Opteron and QS22 Cell/B.E. blades. This method provides a broadcast of the common boot image that the LS21s and QS22s can pick up midstream. The multicast repeats until all requesting blades have received all packets of the boot image. There are unique boot images for the various configurations. The boot images are stored on the Connected Unit service nodes and multicast over the CVLAN. This method significantly reduces network traffic compared to sending individual boot images to each processor.
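The benefit of a repeating multicast that blades can join midstream can be illustrated with a small simulation. The following Python sketch is an idealized, loss-free model and does not implement BOOTP or TFTP; the function name, the packet count, and the join times are hypothetical values chosen for illustration.

```python
def multicast_carousel(num_packets, join_ticks):
    """Simulate a repeating multicast of one boot image.

    join_ticks[i] is the tick at which blade i starts listening. One packet
    is sent per tick, and the image repeats until every blade has received
    every packet. Returns the total number of packets sent by the server.
    """
    received = [set() for _ in join_ticks]
    tick = 0
    while any(len(got) < num_packets for got in received):
        packet = tick % num_packets            # the image wraps around
        for blade, joined_at in enumerate(join_ticks):
            if tick >= joined_at:              # blade is listening
                received[blade].add(packet)
        tick += 1
    return tick


blades = 180                                   # blades requesting the image
image_packets = 100                            # hypothetical image size
joins = [i % 60 for i in range(blades)]        # staggered join times

sent_multicast = multicast_carousel(image_packets, joins)
sent_unicast = blades * image_packets          # one private copy per blade
print(f"multicast: {sent_multicast} packets, unicast: {sent_unicast} packets")
```

In this model the server sends the image for only as long as the last blade to join needs to collect one full pass, regardless of how many blades are listening.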

Note: There is only one master node for the entire Roadrunner cluster.

2.2.1 Boot scenarios

This section describes in more detail what happens when a cluster (or part of the cluster) is booted up.

Master (management) node (tier 1)

This node is installed and booted with the required management node image. The management node boots from the local disk.

Service nodes (tier 2)

There is only one service node per Connected Unit, so this image can be updated directly from the master node over the MVLAN at any time (not just at service node bring-up). Once booted, service nodes obtain copies of the boot images for the I/O nodes and compute nodes from the master node. These images are refreshed on an as-needed basis. The images are loaded over the CVLAN through the multicast boot process, which allows for far less network traffic and parallel image download.

I/O nodes

Once successfully booted, the service nodes begin transferring the required boot images down the CVLAN. The I/O nodes are standard Opteron Linux servers and are booted diskless with the required image. I/O nodes are connected to the 10 Gb Global File System (GFS) to service the compute nodes’ file access requests. The image required to boot the I/O node is received from its local service node through the CVLAN network.

Compute nodes (TriBlades)

Compute nodes (TriBlades) are either accelerated or non-accelerated, with the difference being that accelerated nodes will have their associated Cell/B.E. blades powered on and booted, while Cell/B.E. blades on the non-accelerated nodes are left powered off.

There is no need for a “heartbeat” function between the Opteron core and its associated Cell Broadband Engine processor. The general health of both resources is known by the xCAT software and reflected in the resource manager. Communication health status between the two resources is monitored and understood “on demand” by the application running on the Opteron side. The Data Communication and Synchronization (DaCS) API is notified of errors from the Cell/B.E. processor concerning any data transfer or communications request. Failures of these transactions are reported by the software structures. If the PCI Express connection between the Opteron and Cell/B.E. processor fails, an appropriate error event is posted and the application is terminated.

Given the PCI Express interface between the Opteron and Cell/B.E. processor, it is necessary to boot the Cell/B.E. processor portions of a compute node (in the accelerated node pool) before the Opteron portion. This allows the proper initialization of the interconnect firmware and PCI Express device drivers. The Cell/B.E. PCI Express device drivers “listen” for the necessary firmware/driver handshakes from the LS21 and Broadcom HT-2100 (PCIe adapter) expansion card to establish communication. The process of ensuring the correct booting sequence is controlled by the xCAT software.
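The ordering constraints described in this section can be summarized in a short sketch. The following Python code is illustrative only and is not xCAT code: the function names and node labels are hypothetical, the master node is assumed to be running already, and the relative order of the I/O nodes and the compute nodes is an assumption that is not specified in this paper.

```python
def triblade_boot_order(name, accelerated):
    """Power-on order for one TriBlade.

    On an accelerated node the two QS22 blades boot before the LS21 so the
    PCI Express drivers on the Cell/B.E. side are ready for the handshake.
    On a non-accelerated node the QS22 blades stay powered off.
    """
    steps = []
    if accelerated:
        steps += [f"{name}/qs22-0", f"{name}/qs22-1"]
    steps.append(f"{name}/ls21")
    return steps


def cu_boot_order(triblades):
    """Boot order for one Connected Unit.

    `triblades` maps a TriBlade name to its accelerated flag.
    """
    order = ["service-node", "io-nodes"]
    for name, accelerated in triblades.items():
        order += triblade_boot_order(name, accelerated)
    return order


print(cu_boot_order({"tb000": True, "tb001": False}))
```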

Note: There is no “low power” mode for the Cell/B.E. blades, so some sort of “standby” mode is not possible. They are either on (accelerated) or off (non-accelerated).

2.3 xCAT

Setting up the installation and management of a cluster is a complicated task, particularly when everything is done manually. The development of xCAT grew out of the desire to automate many of the repetitive steps involved in installing and configuring a Linux cluster.

The development of xCAT is driven by customer requirements. Because xCAT itself is written entirely using scripting languages such as Korn shell, Perl, and Expect, an administrator can easily modify the scripts should the need arise.

The main functions of xCAT are grouped as follows:

• Automated installation
• Hardware management and monitoring
• Software administration
• Remote console support for text and graphics

For more information about xCAT, refer to the xCAT Web site at:

http://xcat.sourceforge.net

2.4 How applications are written and executed

This section discusses how applications are written and executed on the Roadrunner system. The unique architecture employed means that applications are designed and written in a revolutionary new manner compared to previous parallel processing applications.

2.4.1 Application core

The bulk of the user application, including initiation and termination, runs on the AMD Opteron processor (LS21). It uses Message Passing Interface (MPI) APIs to communicate with the other Opteron processors the application is running on in a typical single program, multiple data (SPMD) fashion. The number of compute nodes used to run the application is determined at program launch.

The MPI implementation of Roadrunner is based on the open-source Open MPI Project and therefore is standard MPI. In this regard, Roadrunner applications are similar to other typical MPI applications (such as those that run on the IBM Blue Gene solution). Where Roadrunner differs in the sphere of application architecture is how its Cell/B.E. “accelerators” are employed. At any point in the application flow, the MPI application running on each Opteron can offload computationally complex logic to its “subordinate” Cell/B.E. processor.

For more information about the Open MPI Project, refer to the Open MPI: Open Source High Performance Computing Web site at:

http://www.open-mpi.org/
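The offload pattern described in this section, in which the host program hands compute-intensive logic to an accelerator and continues with its own work, can be sketched in general terms. The following Python code only illustrates the control flow: a worker thread stands in for the Cell/B.E. processor, and none of the names correspond to MPI, DaCS, or ALF interfaces.

```python
from concurrent.futures import ThreadPoolExecutor


def heavy_kernel(values):
    """Stand-in for compute-intensive logic that would run on the accelerator."""
    return sum(v * v for v in values)


def host_step(data):
    """One step of the host program: offload, overlap other work, combine."""
    with ThreadPoolExecutor(max_workers=1) as accelerator:
        pending = accelerator.submit(heavy_kernel, data)   # asynchronous offload
        bookkeeping = len(data)                            # host work overlaps
        return pending.result() + bookkeeping              # wait for the result


print(host_step([1.0, 2.0, 3.0]))
```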

2.4.2 Offloading logic

Determining which logic routines get offloaded to the Cell/B.E. processor, and when that occurs, is one of the most challenging tasks facing an application developer of the Roadrunner system. But it is this very challenge that makes the opportunity for incredibly high application performance possible.

There are two primary techniques that a developer can employ to actually perform asynchronous offloads of logic. This section briefly describes each, and points to areas where you can find more detailed information.

DaCS

The Data Communication and Synchronization (DaCS) library provides a set of services that ease the development of applications and application frameworks in a heterogeneous multi-tiered system (for example, a 64-bit x86 system (x86_64) and one or more Cell/B.E. processor systems). The DaCS services are implemented as a set of APIs providing an architecturally neutral layer for application developers on a variety of multi-core systems. One of the key abstractions that further differentiates DaCS from other programming frameworks is a hierarchical topology of processing elements, each referred to as a DaCS Element (DE). Within the hierarchy, each DE can serve one or both of the following roles:

• A general purpose processing element, acting as a supervisor, control, or master processor. This type of element usually runs a full operating system and manages jobs running on other DEs. This is referred to as a Host Element (HE).

• A general or special purpose processing element running tasks assigned by an HE. This is referred to as an Accelerator Element (AE).

DaCS for Hybrid (DaCSH) is an implementation of the DaCS API specification that supports the connection of an HE on an x86_64 system to one or more AEs on Cell/B.E. processors. In SDK 3.0, DaCSH only supports the use of sockets to connect the HE with the AEs. Direct access to the Synergistic Processor Elements (SPEs) on the Cell/B.E. processor is not provided. Instead, DaCSH provides access to the PowerPC Processor Element (PPE), allowing a PPE program to be started and stopped and allowing data transfer between the x86_64 system and the PPE. The SPEs can only be used by the program running on the PPE.

For more information about DaCS, see IBM Software Development Kit for Multicore Acceleration Data Communication and Synchronization Library for Hybrid-x86 Programmer's Guide and API Reference, SC33-8408.
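The host and accelerator roles described above form a tree. The following Python sketch models that hierarchy only; the class and method names are hypothetical and are not part of the DaCS API. The SPE level is shown purely to illustrate an element that holds both roles, since DaCSH itself does not give the x86_64 host direct access to the SPEs.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class Element:
    """One DaCS Element (DE) in a hypothetical topology model."""
    name: str
    parent: "Element | None" = field(default=None, repr=False)
    children: list = field(default_factory=list, repr=False)

    def add(self, child):
        """Attach `child` beneath this element and return it."""
        child.parent = self
        self.children.append(child)
        return child

    def roles(self):
        """HE if it manages other elements, AE if it is managed by one."""
        found = set()
        if self.children:
            found.add("HE")
        if self.parent is not None:
            found.add("AE")
        return found


opteron = Element("x86_64 Opteron core")
ppe = opteron.add(Element("Cell/B.E. PPE"))
spe = ppe.add(Element("SPE 0"))

for de in (opteron, ppe, spe):
    print(de.name, sorted(de.roles()))
```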

ALF

The Accelerated Library Framework (ALF) provides a programming environment for data and task parallel applications and libraries. The ALF API provides you with a set of interfaces to simplify library development on heterogeneous multi-core systems. You can use the provided framework to offload the computationally intensive work to the accelerators. More complex applications can be developed by combining several function offload libraries. You can also choose to implement applications directly to the ALF interface.

ALF supports the multiple-program-multiple-data (MPMD) programming model where multiple programs can be scheduled to run on multiple accelerator elements at the same time.

The ALF functionality includes:

• Data transfer management
• Parallel task management
• Double buffering
• Dynamic load balancing for data parallel tasks

With the provided API, you can also create descriptions for multiple compute tasks and define their execution orders by defining task dependency. Task parallelism is accomplished by having tasks without direct or indirect dependencies between them. The ALF run time provides an optimal parallel scheduling scheme for the tasks based on given dependencies.
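The idea of deriving parallelism from declared task dependencies can be sketched with a generic topological sort. The following Python code uses the standard library `graphlib` module (Python 3.9 or later) and is not the ALF API or its scheduler; the function name and the task names are hypothetical.

```python
from graphlib import TopologicalSorter


def schedule_in_waves(dependencies):
    """Group tasks into waves that respect the declared dependencies.

    `dependencies` maps each task to the set of tasks it depends on.
    Tasks in the same wave have no dependency path between them, so they
    could be dispatched to different accelerators at the same time.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())   # tasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


deps = {
    "load": set(),
    "fft_a": {"load"},
    "fft_b": {"load"},
    "combine": {"fft_a", "fft_b"},
}
print(schedule_in_waves(deps))
```

Here `fft_a` and `fft_b` depend only on `load`, so they land in the same wave, while `combine` must wait for both.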

For more information about ALF, see IBM Software Development Kit for Multicore Acceleration Accelerated Library Framework for Hybrid-x86 Programmer's Guide and API Reference, SC33-8406.

Appendix A. The Cell Broadband Engine (Cell/B.E.) processor

Of all of the components that make up the Roadrunner cluster, the Cell/B.E. processor holds a special place in that it provides extraordinary compute power that can be harnessed from a single multi-core chip. This appendix provides a brief architectural overview of the current Cell/B.E. processor, the motivation for some of its features, as well as the general properties of this unique processor.

For additional information about the Cell/B.E. processor, refer to the following resources:

• Programming the Cell Broadband Engine™ Architecture: Examples and Best Practices, SG24-7575

• IBM Software Development Kit for Multicore Acceleration Data Communication and Synchronization Library for Cell/B.E. Programmer's Guide and API Reference, SC33-8407

• IBM Software Development Kit for Multicore Acceleration Accelerated Library Framework for Cell/B.E. Programmer's Guide and API Reference, SC33-8333

• The Cell/B.E. project at IBM Research, found at:

http://www.research.ibm.com/cell/

• The Cell/B.E. resource center, found at:

http://www.ibm.com/developerworks/power/cell/


Note: Be aware that ample and extensive resources exist on the Cell/B.E. processor, the Cell/B.E. architecture, as well as tutorials for the interested programmer. It is not the intention of this publication to reproduce all of this information in this short section. We have utilized these extensive resources in our attempt to provide this summary.


Background

The Cell/B.E. architecture is designed to support a very broad range of applications. The first implementation is a single-chip multiprocessor with nine processor elements operating on a shared memory model, as shown in Figure A-1. In this respect, the Cell/B.E. processor extends current trends in PC and server processors. The most distinguishing feature of the Cell/B.E. processor is that, although all processor elements can share or access all available memory, their function is specialized into two types: the PowerPC Processor Element (PPE) and the Synergistic Processor Element (SPE). The Cell/B.E. processor has one PPE and eight SPEs.

The architectural definition of the physical Cell/B.E. architecture-compliant processor is much more general than the initial implementation. A Cell/B.E. architecture-compliant processor can consist of a single chip, a multi-chip module (or modules), or multiple single-chip modules on a system board or other second-level package. The design depends on the technology used and performance characteristics of the intended design.

Logically, the Cell/B.E. architecture defines four separate types of functional components:

• PowerPC Processor Element (PPE)
• Synergistic Processor Unit (SPU)
• Memory Flow Controller (MFC)
• Internal Interrupt Controller (IIC)

The computational units in the Cell/B.E. architecture-compliant processor are the PPEs and the SPUs. Each SPU must have a dedicated local storage, a dedicated MFC with its associated memory management unit (MMU), and a replacement management table (RMT). The combination of these components is called a Synergistic Processor Element (SPE).

Figure A-1 Cell/B.E. schematic

The first type of processor element, the PPE, contains a 64-bit PowerPC architecture core. It complies with the 64-bit PowerPC architecture and can run 32-bit and 64-bit applications. The second type of processor element, the SPE, is designed to run computationally intensive single-instruction multiple-data (SIMD)/vector applications. It is not intended to run a full featured operating system. The SPEs are independent processor elements, each running their own individual application programs or threads. Each SPE has full access to shared memory, including the memory-mapped I/O space implemented by multiple DMA units. There is a mutual dependence between the PPE and the SPEs. The SPEs depend on the PPE to run the operating system and, in many cases, the top-level thread control for a user code. The PPE depends on the SPEs to provide the bulk of compute power.


The SPEs are designed to be programmed in high-level languages. They support a rich instruction set that includes extensive SIMD functionality. However, like conventional processors with SIMD extensions, use of SIMD data is preferred but not mandatory. For programming convenience, the PPE also supports the standard PowerPC architecture instruction set and the SIMD/vector multimedia extensions. To an application programmer, the Cell/B.E. processor looks like a single-core, dual-threaded processor with eight additional cores, each having its own local store. The PPE is more adept than the SPEs at control-intensive tasks and quicker at task switching. The SPEs are more adept at compute-intensive tasks and slower than the PPE at task switching. Either processor element is capable of both types of functions. This specialization is a significant factor in accounting for the order-of-magnitude improvement in peak computational performance and power efficiency that the Cell/B.E. processor achieves over conventional processors.

The more significant difference between the SPE and PPE lies in how they access memory. The PPE accesses memory with load and store instructions that move data between main storage and a set of registers, the contents of which may be cached. PPE memory access is like that of a conventional processor. The SPEs in contrast access main storage with direct memory access (DMA) commands that move data and instructions between main storage and a private local memory, called a local store (LS). An SPE's instruction fetches and load/store instructions access a private local store rather than the shared main memory.

This three-level organization of storage (registers, LS, and main memory), with asynchronous DMA transfers between LS and main memory, is a radical break from conventional architecture and programming models. It explicitly parallelizes computation with the transfers of data and instructions that feed computation and store the results of computation in main memory.

A primary motivation for this new memory model is the realization that over the past twenty-five years, memory latency, as measured in processor cycles, has increased by almost three orders of magnitude. The result is that application performance is, in most cases, limited by memory latency rather than peak compute capability, as measured by processor clock speeds. When a sequential program performs a load instruction that encounters a cache miss, program execution comes to a halt for several hundred cycles (techniques such as hardware threading attempt to hide these stalls, but they do not help single-threaded applications). Compared to this penalty, the few cycles that it takes to set up a DMA transfer for an SPE are a much better trade-off, especially considering that each of the eight SPEs' DMA controllers can maintain up to 16 DMA transfers in flight simultaneously. Anticipating DMA needs efficiently can provide “just in time delivery” of data, which may reduce this stall or eliminate it entirely. Conventional processors, even with deep and costly speculation, manage to get, at best, a handful of independent memory accesses in flight.
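The benefit of overlapping DMA transfers with computation can be illustrated with a small timing model. The sketch below is written in Python rather than SPE code, and it rests on simplifying assumptions that are ours, not the paper's: one DMA transfer is in flight at a time, two local store buffers are available, and the cycle counts in the example are hypothetical.

```python
def serial_time(blocks, dma_cycles, compute_cycles):
    """Fetch each block, then process it; nothing overlaps."""
    return blocks * (dma_cycles + compute_cycles)


def double_buffered_time(blocks, dma_cycles, compute_cycles):
    """Fetch block i+1 into a second buffer while block i is processed."""
    fetch_done = [0] * blocks
    compute_done = [0] * blocks
    for i in range(blocks):
        # The DMA engine is free once the previous fetch has completed.
        dma_free = fetch_done[i - 1] if i >= 1 else 0
        # With two buffers, the buffer for block i was last used by block i-2.
        buffer_free = compute_done[i - 2] if i >= 2 else 0
        fetch_done[i] = max(dma_free, buffer_free) + dma_cycles
        # Computation starts when the data has arrived and the SPU is idle.
        spu_free = compute_done[i - 1] if i >= 1 else 0
        compute_done[i] = max(fetch_done[i], spu_free) + compute_cycles
    return compute_done[-1] if blocks else 0


# Hypothetical workload: 100 blocks, 400 cycles per transfer, 500 per compute step.
serial = serial_time(100, 400, 500)
overlapped = double_buffered_time(100, 400, 500)
```

Under these assumptions, only the first transfer is exposed. After that, each step costs the slower of the two activities, so the stall disappears entirely when computation takes at least as long as the transfer.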

One of the SPE's DMA transfer methods supports a list (such as a scatter-gather list) of DMA transfers that is constructed in an SPE's local store, so that the SPE's DMA controller can process the list asynchronously while the SPE operates on previously transferred data. In several cases, this approach to accessing memory has improved application performance by almost two orders of magnitude when compared to the performance of conventional processors. This is significantly more than one would expect from the peak performance ratio (approximately 10x) between the Cell/B.E. processor and conventional PC processors.
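To make the idea of a DMA list concrete, the following Python sketch gathers several scattered regions of a simulated main memory into one contiguous local store buffer. It is an illustration only: the `(offset, size)` tuples are a hypothetical stand-in for the real list element format, the function name is ours, and the loop runs synchronously, whereas the hardware processes the list asynchronously.

```python
LOCAL_STORE_BYTES = 256 * 1024  # local store size given in this appendix


def gather_with_dma_list(main_memory, dma_list, capacity=LOCAL_STORE_BYTES):
    """Copy each (offset, size) region of main memory into one local buffer."""
    local_store = bytearray()
    for offset, size in dma_list:
        if offset < 0 or size < 0 or offset + size > len(main_memory):
            raise ValueError(f"list element ({offset}, {size}) is out of range")
        if len(local_store) + size > capacity:
            raise ValueError("gathered data would not fit in the local store")
        local_store += main_memory[offset:offset + size]
    return bytes(local_store)


# Simulated main memory holding the byte values 0..255.
main_memory = bytes(range(256))
# Hypothetical list: three scattered regions gathered in one request.
dma_list = [(16, 4), (128, 4), (0, 2)]
gathered = gather_with_dma_list(main_memory, dma_list)
```

The point of the list form is that the program builds the description once and issues a single request, rather than setting up one transfer per region.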


The processor elements

The general Cell/B.E. architecture-compliant processor may contain one or more PPEs, while the current implementation consists of only one. The PPE contains a 64-bit, dual threaded PowerPC RISC core and supports a PowerPC virtual memory subsystem. The current PowerPC PPE runs at 3.2 GHz. It has 32 KB level-1 (L1) instruction and data caches and a 512 KB level-2 (L2) unified (instruction and data) cache. It is intended primarily for control processing, running an operating system, managing system resources, and managing SPE threads. It can run existing PowerPC architecture software and is well suited to executing system control code. The instruction set for the PPE is an extended version of the PowerPC instruction set. It includes the vector/SIMD multimedia extensions.

Each of the eight Synergistic Processor Elements (SPEs) contains a 3.2 GHz Synergistic Processor Unit (SPU) vector processor plus the 256 KB of local store that is directly addressable. Computationally, each of these SPEs is capable of producing four floating point results per clock period. Simple arithmetic shows that all eight of these SPEs have a peak compute power of 102.4 gigaflops.
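The arithmetic behind the peak figure can be written out as follows. This short Python sketch uses only the numbers stated in the paragraph above; the variable names are ours.

```python
SPE_COUNT = 8            # SPEs per Cell/B.E. processor
CLOCK_GHZ = 3.2          # SPU clock rate
RESULTS_PER_CLOCK = 4    # floating point results per SPE per clock period

# Peak rate for one SPE, then for all eight.
per_spe_gflops = CLOCK_GHZ * RESULTS_PER_CLOCK
peak_gflops = SPE_COUNT * per_spe_gflops

print(f"per SPE: {per_spe_gflops:.1f} gigaflops, chip: {peak_gflops:.1f} gigaflops")
```

This is a peak rate: it assumes every SPE produces its maximum number of results in every clock period.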

The eight identical SPEs are single-instruction multiple-data (SIMD) processor elements that are intended for computationally intensive operations allocated to them by the PPE. Each SPE contains a RISC core, 256 KB software controlled local store for instructions and data, and a set of 128 registers, each of which is 128 bits wide. The SPEs support a special SIMD instruction set and a unique set of commands for managing DMA transfers and inter-processor messaging and control.

SPE DMA transfers access main memory using PowerPC effective addresses. As in the PPE, SPE address translation is governed by PowerPC architecture segment and page tables, which are loaded into the SPEs by privileged software running on the PPE. The SPEs are not intended to run an operating system.

An SPE controls DMA transfers and communicates with the system by means of channels that are implemented in and managed by the SPE's Memory Flow Controller (MFC). The channels are unidirectional message passing interfaces. The PPE and other devices on the system, including other SPEs, can also access this MFC state through the MFC's memory-mapped I/O (MMIO) registers and queues, which are visible to software in the main memory address space.

The Element Interconnect Bus

The SPEs, the PPE, the Memory Interface Controller (MIC) and broadband interface, and the connection to other Cell/B.E. processors within an SMP are interconnected through a high-speed Element Interconnect Bus (EIB). The EIB is the communication path for commands and data between all processor elements on the Cell/B.E. processor and the on-chip controllers for memory and I/O. The EIB supports fully memory-coherent and symmetric multiprocessor (SMP) operations. A Cell/B.E. architecture processor is designed to be combined coherently with other Cell/B.E. architecture processors to produce a cluster. The Cell/B.E. blade is one such example, where two Cell/B.E. processors are combined in a shared memory environment to produce an SMP.

The EIB consists of four 16-byte-wide data rings, two in each direction, and a central arbiter. In the absence of path contention, each ring can perform three concurrent data transfers. Each ring transfers 128 bytes (one PPE cache line) at a time. Processor elements can drive and receive data simultaneously. The SPEs, PPE, and MIC each have 25.6 GBps links to and from the EIB. In aggregate, the EIB is capable of 204.8 GBps transfers. Figure A-1 shows each of these elements and the order in which the elements are connected to the EIB. The connection order is important to programmers seeking to minimize the latency of transfers on the EIB, where latency is a function of the number of connection hops. Transfers between adjacent elements have the shortest latencies, while transfers between elements separated by multiple hops have the longest latencies.
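The relationship between connection order and hop count can be sketched as follows. This Python example is an illustration under stated assumptions: the ring order below is hypothetical (Figure A-1 gives the actual order), and the model assumes that a transfer always takes the shorter of the two directions around the ring.

```python
# Hypothetical connection order, used only for illustration.
RING_ORDER = ["PPE", "SPE0", "SPE1", "SPE2", "SPE3",
              "SPE4", "SPE5", "SPE6", "SPE7", "MIC", "IO"]


def hop_count(source, destination, ring=RING_ORDER):
    """Return the number of hops on the shorter way around the ring."""
    distance = abs(ring.index(source) - ring.index(destination))
    return min(distance, len(ring) - distance)


adjacent = hop_count("PPE", "SPE0")     # neighbors on the ring
wrapped = hop_count("PPE", "IO")        # neighbors across the wrap-around
distant = hop_count("SPE0", "SPE7")     # several elements apart
```

A programmer who knows the real order can use the same calculation to place communicating tasks on elements that are close together.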

The EIB's internal maximum bandwidth is 96 bytes per processor clock cycle. Multiple transfers can be in process concurrently on each ring, including more than 100 outstanding DMA memory transfer requests between main storage and the SPEs in either direction. These requests also may include SPE memory to and from the I/O space. The EIB does not support any particular quality of service (QoS) behavior other than to guarantee forward progress. However, a resource allocation management (RAM) facility resides in the EIB. Privileged software can use it to regulate the rate at which resource requesters (the PPE, SPEs, and I/O devices) can use memory and I/O resources.
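The bandwidth figures quoted for the EIB can be related to each other with a short calculation. The Python sketch below relies on one assumption that this paper does not state: that the EIB is clocked at half the 3.2 GHz processor clock. The variable names are ours.

```python
PROCESSOR_CLOCK_GHZ = 3.2
# Assumption (not stated in this paper): the bus runs at half the processor clock.
BUS_CLOCK_GHZ = PROCESSOR_CLOCK_GHZ / 2

RINGS = 4                  # data rings
TRANSFERS_PER_RING = 3     # concurrent transfers per ring without contention
RING_WIDTH_BYTES = 16      # bytes moved per transfer per bus cycle

# Internal maximum: every ring carries its maximum number of transfers.
bytes_per_bus_cycle = RINGS * TRANSFERS_PER_RING * RING_WIDTH_BYTES
bytes_per_processor_cycle = bytes_per_bus_cycle * BUS_CLOCK_GHZ / PROCESSOR_CLOCK_GHZ

# One 16-byte link in one direction.
link_gbps = RING_WIDTH_BYTES * BUS_CLOCK_GHZ

print(bytes_per_processor_cycle, link_gbps)
```

Under that assumption, the result agrees with both the 96 bytes per processor clock cycle and the 25.6 GBps per-link figures given in the text.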

Memory Flow Controller

The Memory Flow Controller (MFC) is the data transfer engine. It provides the primary method for data transfer, protection, and synchronization between main storage and the associated local storage, or between the associated local storage and another local storage. An MFC command describes the transfer to be performed. A principal architectural objective of the MFC is to perform these data transfer operations in as fast and as fair a manner as possible, thereby maximizing the overall throughput of the processor.

Commands that transfer data are called MFC DMA commands. These commands are converted into DMA transfers between the local storage domain and main storage domain. Each MFC can typically support multiple DMA transfers at the same time and can maintain and process multiple MFC commands. To accomplish this, the MFC maintains and processes queues of MFC commands. Each MFC provides one queue for the associated SPU (MFC SPU command queue) and one queue for other processors and devices (MFC proxy command queue). Logically, a set of MFC queues is always associated with each SPU in a Cell/B.E. architecture-compliant processor.
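The two-queue arrangement can be modeled with a small Python sketch. This is a toy model, not the MFC interface: the class and field names are hypothetical, the queue depths are assumptions chosen for illustration, and the alternating service order is simply one way to picture fair treatment of the two queues.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class MfcCommand:
    opcode: str   # "get": main storage to LS, "put": LS to main storage
    tag: int      # hypothetical label used to track completion


class MfcQueueModel:
    """Toy model of one MFC with its SPU and proxy command queues."""

    def __init__(self, spu_depth=16, proxy_depth=8):
        # Depths are assumptions for illustration, not taken from this paper.
        self._queues = {"spu": deque(), "proxy": deque()}
        self._depths = {"spu": spu_depth, "proxy": proxy_depth}

    def enqueue(self, source, command):
        """Queue a command; return False if that queue is already full."""
        if source not in self._queues:
            raise ValueError(f"unknown command source: {source!r}")
        queue = self._queues[source]
        if len(queue) >= self._depths[source]:
            return False
        queue.append(command)
        return True

    def drain(self):
        """Return all queued commands, alternating between the two queues."""
        order = []
        sources = ["spu", "proxy"]
        turn = 0
        while any(self._queues.values()):
            queue = self._queues[sources[turn % 2]]
            if queue:
                order.append(queue.popleft())
            turn += 1
        return order


mfc = MfcQueueModel()
mfc.enqueue("spu", MfcCommand("get", tag=0))
mfc.enqueue("spu", MfcCommand("put", tag=1))
mfc.enqueue("proxy", MfcCommand("get", tag=2))
issue_order = [command.tag for command in mfc.drain()]
```

In this model, commands from the SPU and from other processors or devices wait in separate queues, so a burst from one source does not block the other.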

The on-chip memory interface controller (MIC) provides the interface between the EIB and physical memory. The IBM BladeCenter QS22 uses normal DDR memory and additional hardware logic to implement the MIC. Memory accesses on each interface are 1 to 8, 16, 32, 64, or 128 bytes, with coherent memory ordering. Up to 64 reads and 64 writes can be queued. The resource allocation token manager provides feedback about queue levels. The MIC has multiple software controlled modes, including fast path mode (for improved latency when command queues are empty), high priority read (for prioritizing SPE reads in front of all other reads), early read (for starting a read before a previous write completes), speculative read, and slow mode (for power management). The MIC implements a closed page controller (bank rows are closed after being read, written, or refreshed), memory initialization, and memory scrubbing.


Glossary