
Geosci. Model Dev., 3, 87–104, 2010
www.geosci-model-dev.net/3/87/2010/
© Author(s) 2010. This work is distributed under the Creative Commons Attribution 3.0 License.

Geoscientific Model Development

OASIS4 – a coupling software for next generation earth system modelling

R. Redler1,*, S. Valcke2, and H. Ritzdorf1,**

1 NEC Research Laboratories, NEC Europe Ltd., Sankt Augustin, Germany
2 CERFACS, Toulouse, France
* now at: Max-Planck-Institute for Meteorology, Hamburg, Germany
** now at: NEC Deutschland GmbH, Düsseldorf, Germany

Received: 19 June 2009 – Published in Geosci. Model Dev. Discuss.: 7 July 2009
Revised: 17 November 2009 – Accepted: 19 December 2009 – Published: 22 January 2010

Abstract. In this article we present a new version of the Ocean Atmosphere Sea Ice Soil coupling software (OASIS4). With this new fully parallel OASIS4 coupler we target the needs of Earth system modelling in its full complexity. The primary focus of this article is to describe the design of the OASIS4 software and how the coupling software drives the whole coupled model system, ensuring the synchronization of the different component models. The application programmer interface (API) manages the coupling exchanges between arbitrary climate component models, as well as the input and output from and to files of each individual component. The OASIS4 Transformer instance performs the parallel interpolation and transfer of the coupling data between source and target model components. As a new core technology for the software, the fully parallel search algorithm of OASIS4 is described in detail. First benchmark results are discussed with simple test configurations to demonstrate the efficiency and scalability of the software when applied to Earth system model components. Typically the compute time needed to perform the search is of the order of a few seconds and is only weakly dependent on the grid size.

1 Introduction

Global coupled models (GCMs) have been used for climate simulations since the late 1960s, starting with the pioneering work by Manabe and Bryan (1969). With advances in computing power, coupled models have been in use for century-long simulations since the late 1980s. Since that time, we have observed a rapid and continuous increase of activity in global coupled modelling as additional computer resources have become available. Climate models can be considered as multi-disciplinary or multi-physics software tools to simulate the interactions of the atmosphere, oceans, land surface, sea ice and other components of the climate system. They are used for a variety of purposes, from studies of the dynamics of the weather and climate system to projections of future climate. These models can range from relatively simple to quite complex, that is from zero-dimensional models and Earth-system Models of Intermediate Complexity (EMIC) to complex 3-D Global Climate Models (GCM) and Regional Climate Models (RCM).

Correspondence to: R. Redler ([email protected])

A GCM aims to describe geophysical flow by integrating a variety of fluid-dynamical, chemical, or even biological equations that are either derived directly from physical laws (for example Newton’s law) or constructed by more empirical means. Classically, the two main constituents of a GCM are atmospheric and ocean circulation models. When coupled together (along with other components such as a sea ice model and a land model) an ocean-atmosphere coupled general circulation model forms the basis for a full climate model. A very recent trend in GCMs is to extend these traditional coupled climate models to become full Earth system models (ESM), by including further model components to calculate atmospheric chemistry, marine biology or a carbon cycle model (matter cycle in a more general sense) to more correctly simulate the interaction between the different sub-components. Typically, these different model components are developed independently by different research groups.

Numerical modelling in its full complexity is still in its infancy, primarily due to the immense complexity of the task and insufficient empirical knowledge of many aspects of climate related processes. This is not only the case for the oceans, where in particular the knowledge of the subsurface circulation is rather poor, but also for the hydrological cycle, where precipitation, evaporation and the three-dimensional distribution of water vapour and clouds are insufficiently known. Despite these uncertainties the development of global climate models is one of the main unifying components in the World Climate Research Programme (WCRP) of the World Meteorological Organisation (WMO). The output of these models is considered a fundamental deliverable of the whole WCRP and provides the basis for the understanding and prediction of natural and human-made climate variations (CLIVAR Scientific Steering Group, 1998).

Published by Copernicus Publications on behalf of the European Geosciences Union.

R. Redler et al.: The OASIS4 software for coupled climate modelling

In the past, the important role of climate models has been addressed in a variety of research programmes on a global scale, like the Climate Variability programme (CLIVAR) or the Coupled Model Intercomparison Project (CMIP) as one of the CLIVAR initiatives, while EuroCLIVAR, the Baltic Sea Experiment (BALTEX) or the North American Regional Climate Change Assessment Program (NARCCAP) focused on a regional scale. Most importantly, global climate models form one of the backbones of the Assessment Reports (AR) published by the Intergovernmental Panel on Climate Change (IPCC).

In addition to the model components used in an ESM, each community representing one of the physical components still feels a strong need for having separate stand-alone special-purpose versions of the individual components of the coupled climate models. These are used to investigate processes in the different subsystems and to test new physical parameterisations in controlled scenario runs. The existence of different research objectives and the need to estimate model uncertainty from model intercomparison (like for the IPCC AR) support the idea of a multi-component approach and the need for the interoperability of the different components. To achieve this interoperability, two approaches are nowadays considered: either some standard programming rules are followed by all component developer groups and the resulting components are integrated into a single application, or the independently developed component models remain separate applications and an external coupling software ensuring the lowest possible degree of interference in the component codes is used. As the first approach is almost impossible to apply when confronted with the heterogeneous development environment in Europe, we offer, with the OASIS4 coupler described in this paper, an implementation of the second approach.

With OASIS4, we continue the software development of the Ocean Atmosphere Sea Ice Soil (OASIS) coupling software, which has its origins in the early nineties at the European Centre for Research and Advanced Training in Scientific Computing (CERFACS, Toulouse, France). With the emerging complexity of ESMs, their increasing size (with respect to the number of grid points to be treated) and higher degree of parallelism, the limitations of OASIS3 (Valcke, 2006) and its predecessors have become visible and the need has emerged to provide the ESM community with new fully parallel coupling software. The development of OASIS4 started during the Program for Integrated Earth System Modelling (PRISM) project funded by the European Commission from 2001 to 2004. PRISM (Valcke et al., 2007) was organized by the European Network for Earth System Modelling (ENES), and is currently continuing within the new Framework 7 Programme funded by the European Commission with the IS-ENES (Infrastructure for the European Network for Earth System modelling) project.

At the time when the OASIS4 development started, coupling software performing field transformation already existed, such as the Mesh based parallel Code Coupling Interface (MpCCI) (Joppich and Kürschner, 2006) or the Community Climate System Model (CCSM) Coupler 6 (Cpl6) (Buja and Craig, 2002). MpCCI is not available as source code, and for this particular reason the MpCCI software has not been accepted by the ESM community. With new versions of the MpCCI software its developers have abandoned some important aspects of parallelism. Compared to previous versions, all grid information is now assembled in a single MpCCI coupler instance for performing the neighbourhood search and regridding. This approach makes it even less attractive for use within an ESM with a high degree of parallelism. The concept of CCSM and Cpl6 is very similar to that of OASIS4. However, earlier versions of Cpl6 have targeted the specific needs of the CCSM and the coupler could only be executed within the complete CCSM. The Earth System Modelling Framework (ESMF) software environment (Hill et al., 2004) provides an implementation of the first interoperability approach described above. ESMF is a high-performance software infrastructure that can be used to build a complex application as a hierarchy of individual units, each unit having a coherent function, like modelling a physical phenomenon or managing the input and output (IO), and a standard calling interface. While an ESMF application, being more integrated, will most probably be more efficient compared to an OASIS4 coupled system, ESMF may require a deeper level of intervention in the application code. For example, ESMF requires that components be split into initialize, run, and finalize sections, with each callable as a subroutine. The modifications of the software necessary to achieve the full benefit from ESMF are described briefly by Hill et al. (2004). In contrast to ESMF, the OASIS4 application programmer interface (API) can be integrated with only minor modifications in the original application code, and is for this particular reason more attractive to the European ESM community with its less centralised modelling efforts.

OASIS4 does not include any assumptions about the physical nature of the coupling fields. It may be considered a slight disadvantage that, apart from providing some general arithmetic functions (like adding or multiplying with constants or time averaging) and regridding functionality, any specific physically motivated pre- and post-processing regarding the coupling of physical quantities remains under the full responsibility of the application code. In compensation, the flexibility of OASIS4 provides the ESM community with the ability to couple a wide range of ESM components.

With this article we address the questions we have received from the growing user community during the last couple of years. The originality of OASIS4 lies in its great flexibility, which is explained in Sects. 2–4, where the software design ideas, the driving and configuration mechanisms and the application programmer interface to the communication library are detailed. Another new concept introduced with OASIS4 is the parallel 3-D neighbourhood search and regridding based on the geographical description of the process local domains (see Sects. 5 and 6). The data flow, in particular the common treatment of coupling and IO exchanges (a concept already available with OASIS3), is briefly described in Sect. 7.

We conclude the technical description with first performance measurements shown in Sect. 8 and an outlook on ongoing and future work in Sect. 9.

2 General design and overview

The key concept behind the OASIS4 software is to provide a tool to the ESM community which is portable to any existing computing environment. This is mainly achieved by adhering to programming standards that have emerged over time. For a complete list of systems and environments which are supported by OASIS4, the reader is invited to visit the OASIS4 Wiki page (footnote 1).

The OASIS4 software package provides the source code for a Driver-Transformer executable and a library (called PSMILe hereafter). At run-time, the OASIS4 Driver-Transformer and the component models remain separate (possibly parallel) executables. To communicate with the rest of the coupled system, each component model needs to be linked against the PSMILe. To be as user-friendly as possible, the PSMILe API includes a limited number of required function calls, at the same time supporting all typical ESM component data structures. While it is not designed to handle the component internal communication, the library completely manages the coupling data exchanges with other model components and the details of the I/O file access. In this article, we will use the term “coupled application” to describe the ensemble formed by the component models coupled through the OASIS4 Driver-Transformer.

The major task of OASIS4 is to handle the data exchange between ESM components. These exchanges are completely built upon the Message Passing Interface (MPI) (Snir et al., 1998; Gropp et al., 1998). The major justification for this decision is the fact that MPI has become a quasi standard to handle parallel applications. MPI libraries are available on almost every parallel architecture, be it a vendor-specific implementation or provided as one of the many publicly available MPI packages. The complexity of MPI is nevertheless hidden behind the OASIS4 API.

Footnote 1: https://oasistrac.cerfacs.fr/

In contrast to previous versions of OASIS, the coupled configuration has to be described by the user with the help of Extensible Markup Language (XML; footnote 2) files. In order to read in this information, linking to an XML library is required. Similar to OASIS3, NetCDF (Rew and Davis, 1997) file IO is supported to optionally read and write forcing input, diagnostic output and coupling restart files; in this case, a NetCDF or a parallel NetCDF (Li et al., 2003) library needs to be provided. Last but not least, compilers that conform to the published Fortran 90 and C language standards are required.

With the current version, bilinear, trilinear, bicubic and nearest-neighbour parallel interpolation is provided for the most prominent grid types used in ESM components to map the source grid values to the target grid in a data exchange. Furthermore, 2-D conservative remapping is available, which is usually the preferred way to regrid fluxes.

In particular, OASIS4 supports 2- and 3-D coupling between any combination of logically-rectangular, i.e. 2-D structured, or Gaussian Reduced grids in the horizontal longitude-latitude plane, each horizontal layer repeating itself at different vertical levels. OASIS4 is also able to handle the exchange of non-geographical data, i.e. data which are solely provided in an (i, j, k) index space not associated to any geographical domain. To properly handle non-geographical data, it is required that the pairs of source and target grids work over the same global index range, although the data can be partitioned differently on the source and target side.

3 The driving and configuration mechanisms

The OASIS4 Driver-Transformer executable can consist of one or more processes. During the initialisation, the root process of this instance acts as a Driver, while during the integration of the coupled model the root and additional processes act as Transformer processes performing the regridding of the coupling fields. The Transformer task is further discussed in Sects. 6 and 8. In this section we focus on the Driver aspect.

OASIS4 supports two ways of starting a coupled application. With a complete implementation of the MPI2 standard, only the Driver processes have to be started by the user. All remaining physical components are then spawned by the OASIS4 Driver at run time. In this scenario, no extra programming work has to be invested in the component internal parallelisation as any original MPI communication will work as is. If the available MPI library does not support the MPI2 standard, all processes of the whole coupled application will have to be started simultaneously in a “multiple program multiple data” (MPMD) mode; the disadvantage of this approach is that each component must use a specific MPI communicator for its own internal communication. This MPI communicator is created and provided by the OASIS4 software.

Footnote 2: http://www.w3.org/XML/
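The MPMD start-up can be pictured as dividing one global set of processes into per-component groups. The following is a minimal pure-Python sketch of that idea only, not OASIS4 code: the component names, the process counts and the helpers `split_world` and `local_rank` are hypothetical, and a real component would obtain its communicator from the OASIS4 software rather than compute rank lists by hand.

```python
def split_world(layout):
    """Map consecutive world ranks to components.

    layout: list of (component_name, n_processes) in launch order.
    Returns {component_name: [world ranks]}, mimicking the grouping that
    a communicator split by component would produce.
    """
    groups = {}
    next_rank = 0
    for name, nprocs in layout:
        if nprocs < 1:
            raise ValueError(f"{name}: need at least one process")
        groups[name] = list(range(next_rank, next_rank + nprocs))
        next_rank += nprocs
    return groups


def local_rank(groups, world_rank):
    """Return (component, rank inside that component) for a world rank."""
    for name, ranks in groups.items():
        if world_rank in ranks:
            return name, ranks.index(world_rank)
    raise KeyError(world_rank)


# Hypothetical launch: 1 Driver-Transformer, 4 atmosphere, 2 ocean processes.
groups = split_world([("driver", 1), ("atmosphere", 4), ("ocean", 2)])
```

Under this assumed layout, world rank 3 is the third process (local rank 2) of the atmosphere group, which is why a component cannot simply reuse the global communicator for its internal communication.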

The whole coupled configuration is described with the help of XML files. XML is a simple, very flexible text format. Originally designed to meet the challenges of large-scale electronic publishing, XML is also playing an increasingly important role in the exchange of a wide variety of data on the Web and elsewhere. An XML document is simply a file which follows the XML format. The OASIS4 configuration XML files must be created by the coupled model developer. A Graphical User Interface (GUI) is currently being developed to facilitate the creation of those files.

The “Specific Coupling Configuration” (SCC) XML file defines the general characteristics of a coupled model run, for example the components to be coupled and the number of processes for each component. The “Specific Model Input and Output Configuration” (SMIOC) XML files (one for each component) describe the relations each component model will establish with the external environment through inputs and outputs for a particular run. In the SMIOC files, the user specifies the coupling exchanges, for example the source or target of each input or output field (either another component or a disk file), the exchange frequency, and the transformations to be performed by OASIS4 on this field.

The contents of the XML files are read and interpreted by the Driver, and information relevant for the physical components is communicated to the respective PSMILe and Transformer processes on a case-by-case basis.
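To make the role of such a configuration file more concrete, the sketch below parses a small XML fragment with Python's standard library and extracts, per field, the end point, the exchange period and the requested transformation. The element and attribute names are invented for illustration and do not reproduce the real SMIOC schema; the OASIS4 User Guide remains the reference for the actual format.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment: element and attribute names are invented for
# illustration and do NOT reproduce the real OASIS4 SMIOC schema.
SMIOC_LIKE = """
<component name="ocean">
  <field name="sst" direction="output" period_seconds="3600">
    <target kind="component" ref="atmosphere"/>
    <transformation>bilinear</transformation>
  </field>
  <field name="heat_flux" direction="input" period_seconds="3600">
    <source kind="file" ref="forcing.nc"/>
  </field>
</component>
"""


def read_exchanges(xml_text):
    """Return one dict per field describing its end point and frequency."""
    root = ET.fromstring(xml_text)
    exchanges = []
    for field in root.findall("field"):
        # An output field carries a <target>, an input field a <source>.
        end = field.find("target")
        if end is None:
            end = field.find("source")
        exchanges.append({
            "field": field.get("name"),
            "direction": field.get("direction"),
            "period": int(field.get("period_seconds")),
            "end_kind": end.get("kind"),
            "end_ref": end.get("ref"),
            "transformation": field.findtext("transformation"),
        })
    return exchanges


exchanges = read_exchanges(SMIOC_LIKE)
```

In this toy fragment the same component sends one field to another component and reads another from a file, which is the kind of per-field choice the text attributes to the SMIOC.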

4 The application programmer interface

In this section, we briefly describe the design ideas of the OASIS4 API to the PSMILe and highlight the differences compared to OASIS3.

The API function calls (see Table 1) can be split into three different phases. During the initialisation phase, some basic initialisation routines must be called and the grid and exchange fields need to be specified to the PSMILe. The initialisation phase is concluded with a call to PRISM_Enddef (see Sect. 5). The second phase comprises the exchange of data, further described in Sect. 7, while the third phase denotes the termination of the coupling. For a detailed description of the OASIS4 API, we refer the reader to the OASIS4 User Guide (Valcke and Redler, 2006).

4.1 General design

The general design follows several principles. First, the PSMILe API routines that are defined and implemented shall not be subject to modifications between the different versions of the OASIS4 software. However, new routines may be added in the future to support new functionality. In addition, the PSMILe has been kept extendable to new types of coupling data and new types of grids by using Fortran 90 function overloading. Some complexity of the API arises from the need to transfer not only the coupling data but also the associated metadata, i.e. mainly the information about the associated grid. We carefully selected the necessary arguments for each subroutine in order to keep the argument lists as short as possible. At the same time we kept the interfaces generic in order to avoid the introduction of another set of routines for every new configuration we have to support with the software.

As in MPI, the memory that is used for storing internal representations of various data objects is not directly accessible by the user, and the objects can only be addressed indirectly by the component via their handle. Handles are of type integer and represent an index to an entry in a list of the respective objects. The object and its associated handle are significant only on the process where it is created.
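The handle concept can be illustrated with a few lines of Python. This is a generic sketch of an opaque-handle table, not the PSMILe data structure: the class `HandleTable`, its methods and the example grid descriptions are hypothetical, and zero-based handles are an assumption of the sketch.

```python
class HandleTable:
    """Stores objects privately and hands out integer handles."""

    def __init__(self):
        self._objects = []          # internal list, not exposed to callers

    def create(self, obj):
        """Store obj and return its handle (an index into the list)."""
        self._objects.append(obj)
        return len(self._objects) - 1

    def lookup(self, handle):
        """Resolve a handle; only meaningful on the creating process."""
        if not isinstance(handle, int) or not 0 <= handle < len(self._objects):
            raise ValueError(f"invalid handle: {handle!r}")
        return self._objects[handle]


# Each process would own its own table, so equal handle values on two
# processes may refer to different objects.
grids = HandleTable()
atm_grid = grids.create({"name": "atm", "shape": (96, 48)})
oce_grid = grids.create({"name": "oce", "shape": (182, 149)})
```

The caller only ever holds the integers `atm_grid` and `oce_grid`; the stored objects can change their internal layout without affecting the calling code.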

Furthermore, the internal usage of MPI is completely hidden from the user, except when the available MPI library does not offer the MPI2 spawning functionality (which implies that all processes have to be started simultaneously in an MPMD mode, see Sect. 3). In this case, each component has to retrieve a special MPI communicator for its internal communication, which is created by the PSMILe and accessible with a specific function call to PRISM_Get_localcomm (see Table 1); this call remains the only MPI-related call.

When using MPI for internal communication in parallelised components, the calling sequence of some MPI- and PRISM-related calls has to follow a certain rule, which is sketched in Fig. 1. The function calls to PRISM_Init and PRISM_Init_comp can be used as a full substitute for MPI_Init, while PRISM_Terminate can be used as a full substitute for MPI_Finalize.

4.2 Data exchanges

The PSMILe manages the coupling data flow between any two (possibly parallel) component models with a communication pattern completely hidden from the component codes, following a principle of “end-point” data exchange. When producing data, no assumption is made in the source component code concerning which other component will consume these data or whether they will be written to a file, and at which frequency; likewise, when asking for data, a target component does not know which other component model produces them or whether they are read in from a file. The target or the source (another component model or a file) for each field is defined by the user in the SMIOC XML file (see Sect. 3) and the coupling exchanges and/or the IO actions take place according to the user's external specifications. The switch between the coupled mode and the forced mode is therefore totally transparent for the component model.
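The “end-point” principle can be sketched as follows. The example is a toy model of the idea rather than the PSMILe implementation: the class `EndPoints`, the field name `sst`, the file name and the provider functions (simple lambdas standing in for a remote component and for a file read) are all hypothetical.

```python
class EndPoints:
    """Resolves a field request through an external configuration."""

    def __init__(self, routes, providers):
        self._routes = routes        # {field: (kind, name)}, user-defined
        self._providers = providers  # {(kind, name): callable -> data}

    def get(self, field):
        """Called by the target model; it never names the producer."""
        return self._providers[self._routes[field]]()


def atmosphere_step(end_points):
    # Identical model code in coupled and in forced mode.
    sst = end_points.get("sst")
    return sum(sst) / len(sst)


providers = {
    ("component", "ocean"): lambda: [271.0, 273.0],       # stand-in for a model
    ("file", "sst_forcing.nc"): lambda: [270.0, 272.0],   # stand-in for file IO
}
coupled = EndPoints({"sst": ("component", "ocean")}, providers)
forced = EndPoints({"sst": ("file", "sst_forcing.nc")}, providers)
```

Switching from `coupled` to `forced` changes only the routing table, not `atmosphere_step`, which mirrors the transparency between coupled and forced mode described in the text.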


Table 1. List of OASIS4 PSMILe function calls.

Name                    Description

Initialisation
PRISM_Init              Initialisation of the application
PRISM_Init_comp         Initialisation of a component within an application
PRISM_Def_grid          Abstract definition of a compute grid
PRISM_Def_partition     Definition of the process-local grid partition relative to the global compute domain
PRISM_Reducedgridmap    Additional information needed for Gauss-reduced grids
PRISM_Setcorners        Definition of geographical positions of vertices of grid cells
PRISM_Setmask           Definition of masked points or cells
PRISM_Setpoints         Definition of geographical positions of points
PRISM_Def_var           Definition of exchange and IO fields
PRISM_Enddef            End of the definition phase

Data Exchange
PRISM_Get               To receive data from a remote component and/or read in from a file
PRISM_Put               To send data to a remote component and/or write into a file
PRISM_Put_restart       To write additional data sets into an OASIS4 coupling restart file

Termination
PRISM_Terminate         Termination of the prism part

Auxiliary and Query Functions
PRISM_Calc_newdate      Add and subtract operations on the OASIS4 Fortran 90 time structure
PRISM_Get_localcomm     To retrieve an MPI communicator for component local communication
PRISM_Initialized       To check whether PRISM_Init has already been called
PRISM_Put_inquire       Query function to obtain information whether a PRISM_Put has to be posted
PRISM_Terminated        Query function to check whether PRISM_Terminate has already been called
PRISM_Abort             To terminate the coupled application in a well defined way to support user-defined error handling

The sending and receiving PSMILe PRISM_Put and PRISM_Get calls can be placed anywhere in the source and target code and possibly at different locations for the different coupling fields. These routines can be called by the model at each timestep. The actual date at which the call is performed and the date bounds for which it is valid are given as arguments; the sending/receiving is effectively performed only if the date and date bounds correspond to a time at which it should be activated, given the field coupling or IO dates indicated by the user in the SMIOC; a change in the coupling or IO dates is therefore also totally transparent for the component model itself.
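The activation rule can be illustrated with a small, self-contained sketch. It assumes that times are counted in seconds, that coupling dates fall at multiples of a fixed period after a start time, and that the date bounds form a half-open interval; these conventions and the function `is_active` are assumptions of the sketch, not a description of the PSMILe internals.

```python
def is_active(lower, upper, period, start=0):
    """True if a coupling date start + k*period (k >= 0) lies in [lower, upper)."""
    if period <= 0 or upper <= lower:
        raise ValueError("need period > 0 and upper > lower")
    # Index of the first coupling date that is not earlier than `lower`
    # (ceiling division written with floor division on the negated value).
    k = max(0, -(-(lower - start) // period))
    return start + k * period < upper


# A model with a 600 s time step calls the put routine at every step;
# with a 3600 s coupling period only every sixth call sends data.
step = 600
active_steps = [n for n in range(13)
                if is_active(n * step, (n + 1) * step, period=3600)]
```

With these numbers the call is effective at steps 0, 6 and 12. Changing `period` alters which calls are effective without touching the loop that issues them.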

When the action is activated at a coupling or IO date, each process sends or receives only its local partition of the data, corresponding to its local grid defined previously. The coupling exchange, including data repartitioning if needed, then occurs, either directly between the component models, or via additional Transformer processes if regridding is needed.
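When source and target share the same global index range but partition it differently, repartitioning amounts to finding which index ranges each pair of processes has in common. The sketch below shows this for a one-dimensional index range; the half-open interval convention, the function `overlaps` and the example decomposition are assumptions made for illustration.

```python
def overlaps(source_parts, target_parts):
    """List (source_rank, target_rank, lo, hi) for every shared index range.

    Each partition is a half-open global index interval (lo, hi); the
    position in the list is taken as the rank of the owning process.
    """
    messages = []
    for s_rank, (s_lo, s_hi) in enumerate(source_parts):
        for t_rank, (t_lo, t_hi) in enumerate(target_parts):
            lo, hi = max(s_lo, t_lo), min(s_hi, t_hi)
            if lo < hi:                      # non-empty intersection
                messages.append((s_rank, t_rank, lo, hi))
    return messages


# Same global index range 0..12, split over 3 source and 2 target processes.
source = [(0, 4), (4, 8), (8, 12)]
target = [(0, 6), (6, 12)]
plan = overlaps(source, target)
```

Each tuple in `plan` corresponds to one message: source process 1, for instance, has to split its local data between both target processes.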

If the user specifies that the source of a PRISM_Get or the target of a PRISM_Put is a disk file, the PSMILe exploits the GFDL mpp_io package (Balaji, 2001) for its file IO (see Sect. 7 for more detail).

Fig. 1. The four allowed sequences of calls for initializing and terminating an ESM component for coupling with OASIS4 and its relation to MPI. The sequences shown, one per line, are:
PRISM_Init_comp, ..., PRISM_Terminate
PRISM_Init, PRISM_Init_comp, ..., PRISM_Terminate
MPI_Init, PRISM_Init_comp, ..., PRISM_Terminate, MPI_Finalize
MPI_Init, PRISM_Init, PRISM_Init_comp, ..., PRISM_Terminate, MPI_Finalize

4.3 OASIS3 and OASIS4 APIs

The major difference between the OASIS3 and OASIS4 APIs from a user's point of view is in the initialisation phase. In OASIS3, the global grid information has to be provided in separate OASIS3 specific NetCDF grid files which are accessed by OASIS3 routines. With OASIS4 it is assumed that each component has all knowledge about its own local geographical grids at some point during the initialisation phase. This local grid information has to be provided through the API to initialise the PSMILe search.

Besides this difference, we have kept the design of the OASIS3 and OASIS4 PSMILe API as similar as possible, both following in particular the principle of “end-point” data exchange explained above, in order to allow an easy transition from OASIS3 to OASIS4 for the community of users.

5 Neighbourhood search

The PSMILe library performs the exchanges of coupling data between source and target components. Usually those components express their coupling fields on different numerical grids and a regridding operation has to be performed on the coupling data. The regridding implies: (1) identifying the “neighbours” of each target point, i.e. the source grid points that will contribute to the calculation of the target grid point value in the regridding process (in this article, we use the term “neighbourhood search” to describe this process), (2) calculating the weights of the different neighbours and (3) performing the calculation of the grid point values. To minimize the transfer of source data, it was decided to perform the neighbourhood search (1) in the source PSMILe and to transfer only the useful source grid points (see Sect. 5.1 for further details) to the Transformer, which performs the regridding calculation per se (i.e. 2 and 3). In this section, we describe the neighbourhood search performed by the source PSMILe.
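The three steps can be made concrete with a deliberately simple scheme. The sketch below uses a brute-force search for the closest source points and normalised inverse-distance weights on a plane; this scheme is chosen only for brevity, it is not one of the interpolation methods named in this article, and the function names, the planar distance and the sample data are assumptions of the sketch.

```python
import math


def neighbours(target, source_points, k):
    """Step 1: indices of the k source points closest to the target."""
    order = sorted(range(len(source_points)),
                   key=lambda i: math.dist(target, source_points[i]))
    return order[:k]


def weights(target, source_points, idx):
    """Step 2: normalised inverse-distance weights for the neighbours."""
    d = [math.dist(target, source_points[i]) for i in idx]
    if 0.0 in d:                      # target coincides with a source point
        hit = d.index(0.0)
        return [1.0 if n == hit else 0.0 for n in range(len(d))]
    inv = [1.0 / x for x in d]
    total = sum(inv)
    return [w / total for w in inv]


def regrid(target, source_points, source_values, k=2):
    """Step 3: weighted sum of the neighbouring source values."""
    idx = neighbours(target, source_points, k)
    w = weights(target, source_points, idx)
    return sum(wi * source_values[i] for wi, i in zip(w, idx))


src = [(0.0, 0.0), (2.0, 0.0), (10.0, 0.0)]
val = [10.0, 20.0, 99.0]
```

Only the values at the indices returned by step 1 enter step 3, which illustrates why performing the search on the source side allows the transfer to be restricted to the useful source points.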

The goal here is to provide a highly efficient search algorithm which determines the neighbourhood relations between source and target grid points without generating significant overhead with respect to the model compute time. For ESMs the typical elapsed time for a job is of the order of hours and the search should not take more than a few seconds. At the same time the algorithm has to rely on only a very limited number of a priori assumptions in order to be flexible and applicable to more than just a few special grid configurations.

Compared to typical physical algorithms for numerical models, the result or the output of a search algorithm is already well determined when the problem is posed, i.e. when the numerical grids are defined. For any given regridding scheme a unique solution exists regarding the neighbourhood between target and source points. When programming a library for such purposes, the task is to have flexible algorithms that are able to deal with any peculiarities of a given grid configuration. While an application programmer typically has complete control over the local grid, this is not the case for the library programmer, as the library has to work based on the information provided through the user API without any or with only very limited a priori assumptions.

In order to initialise the search, the components have to provide geographical information about the position of the grid points and about the reference volume or area that is associated with the grid points. This information is stored in one-dimensional arrays together with the grid type dependent shape of the original multidimensional array. The grid type and the information about the array shape provide the implicit knowledge about the connectivity of the grid points within a single block. Within these blocks correct neighbours have to be identified for each target point. In a simple implementation of such a search algorithm, this task can be achieved by comparing the distances for each target point with all source points available. The compute time of this approach will be of the order of N^2 (N being the number of target points to be processed, assuming that source and target grids are about the same size). While such an approach may still be justified for small problem sizes, it will fail when the horizontal resolution is increased and grids become larger in size. For OASIS4, we have chosen a hierarchical approach in order to minimise the workload during the search. We briefly describe here key aspects of the search algorithm which ensure its efficiency. An additional difficulty arises with parallelised components where the source grid is partitioned. For selected target points, the required “neighbour” source locations may reside on different processes and the search has to be extended across process boundaries. In the remaining part of this section we describe the different tasks which are performed by the PRISM_Enddef call.
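How a one-dimensional array together with the original array shape implies connectivity can be shown in a few lines. The sketch assumes a single logically rectangular 2-D block, zero-based indices, column-major (Fortran-like) storage and no periodic boundaries; these choices and the helper names are assumptions for illustration only.

```python
def flat_index(i, j, shape):
    """Column-major (Fortran-like) position of point (i, j); 0-based."""
    ni, nj = shape
    return i + ni * j


def connected(flat, shape):
    """Flat indices of the points adjacent to `flat` inside one block."""
    ni, nj = shape
    i, j = flat % ni, flat // ni             # recover the 2-D indices
    result = []
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        a, b = i + di, j + dj
        if 0 <= a < ni and 0 <= b < nj:      # stay inside the block
            result.append(flat_index(a, b, shape))
    return result


shape = (4, 3)                               # hypothetical 4 x 3 block
centre = flat_index(1, 1, shape)
```

No explicit neighbour list has to be stored: the shape alone is enough to step from one grid point to the adjacent ones, which is the implicit knowledge referred to above.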

5.1 Point-based search for block-structured grids

The completion of the definition of grids and coupling fields by the component is announced in the component code with a call to the PRISM_Enddef routine (end of definition) on each process. At this stage, the coupling information from the XML configuration files about which components have to exchange data with each other is already available in the PSMILe (see Sect. 3). In an initial step, each coupled component process determines the envelope of its locally defined grid partition. The envelopes are exchanged between those component processes that have to exchange data with each other. Each component process now has a global view on grid partitions and processes with which it may have a coupling interface. As a first step towards an efficient search, regions outside the envelope intersections between source and target local grids are cut off. Pairs of source and target processes that have common intersections are identified. For each of these intersections, lists of target grid points included in the intersection are generated on the target side. Each list is transmitted to the respective source process which shares a particular intersection. We note here that only the envelopes of all partitions are stored on all processes. For this and all following steps in the PSMILe or in the Transformer, it is never required to gather the global grid information at a central place onto one single process. This design aspect allows a minimal use of extra memory for the coupling operations.
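The envelope step can be sketched with axis-aligned boxes in a planar longitude-latitude space. The sketch ignores periodicity in longitude and the poles, and the function names and sample coordinates are hypothetical; it only illustrates how a target process could select the points it sends to one particular source process.

```python
def envelope(points):
    """Axis-aligned bounding box (lon_min, lat_min, lon_max, lat_max)."""
    lons = [p[0] for p in points]
    lats = [p[1] for p in points]
    return (min(lons), min(lats), max(lons), max(lats))


def intersect(a, b):
    """Intersection of two envelopes, or None if they do not overlap."""
    box = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    return box if box[0] <= box[2] and box[1] <= box[3] else None


def points_to_send(target_points, source_envelope):
    """Target points inside the intersection with one source partition."""
    common = intersect(envelope(target_points), source_envelope)
    if common is None:
        return []
    return [p for p in target_points
            if common[0] <= p[0] <= common[2]
            and common[1] <= p[1] <= common[3]]


target_partition = [(0.0, 0.0), (5.0, 0.0), (10.0, 5.0), (15.0, 10.0)]
source_env_a = (8.0, -2.0, 20.0, 12.0)       # overlaps the target partition
source_env_b = (30.0, 30.0, 40.0, 40.0)      # disjoint from it
```

Only four numbers per partition are exchanged before the lists are built, consistent with the remark that the global grid is never gathered on a single process.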

The task of the second stage of the search is to find a reference source point for each target point which can later be used as a starting point to build the required interpolation stencil, i.e. the set of source neighbour points used for the calculation of the target point value, or to locate additional neighbours based on the user choice.

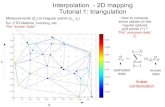

On the source side, a grid hierarchy is established out of the local source grid. For block-structured grids, this grid hierarchy is constructed by splitting the local source grid into smaller subsections with a refinement factor of two, similar to a multi-grid approach (see Fig. 2). The subsection on the highest (finest) level in the grid hierarchy typically contains three grid points in each direction. For each target grid point in the list, we first determine the containing subsection on the lowest (coarsest) level by considering the bounding boxes of the subsections on this level. These bounding boxes are constructed by evaluating the minimum and maximum cell extent in a given i-j-k index range. This containing box is then subdivided into four subsections (or eight subsections in a 3-D search) on the next higher level, and those four or eight boxes are investigated in the same way. The process is continued until we have reached the highest level and are close to the required source point.

We note here that due to this simple approach based on bounding boxes, it is possible for any target point to be located in a region covered by more than one bounding box. We start the search in one of these boxes, moving up to finer levels. The higher the levels are in our hierarchy, the closer we can approximate the exact geometry of the local domain. It may happen that the algorithm does not find any appropriate bounding box on a higher level. In such a case the algorithm jumps back to the previous lower level and continues with another bounding box. These cycles are repeated until either a containing source cell is identified or until all bounding boxes under consideration have been investigated. At this stage, the search on the global domain is completed.
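The coarse-to-fine descent with backtracking can be sketched as follows. This is a minimal 2-D illustration under strong assumptions, not the PSMILe algorithm: each source cell is represented by an axis-aligned extent `(xmin, xmax, ymin, ymax)`, the hierarchy is stored as nested dictionaries, and the names `build`, `inside` and `find_cell` are hypothetical.

```python
def build(cells, i0, i1, j0, j1, leaf=3):
    """Build a box hierarchy over the index range [i0, i1) x [j0, j1)."""
    extents = [cells[i][j] for i in range(i0, i1) for j in range(j0, j1)]
    node = {
        # Bounding box from the minimum and maximum cell extent in the range.
        "box": (min(e[0] for e in extents), max(e[1] for e in extents),
                min(e[2] for e in extents), max(e[3] for e in extents)),
        "range": (i0, i1, j0, j1),
        "children": [],
    }
    if i1 - i0 > leaf or j1 - j0 > leaf:
        im, jm = (i0 + i1 + 1) // 2, (j0 + j1 + 1) // 2  # refinement factor two
        for a, b in ((i0, im), (im, i1)):
            for c, d in ((j0, jm), (jm, j1)):
                if a < b and c < d:
                    node["children"].append(build(cells, a, b, c, d, leaf))
    return node

def inside(box, x, y):
    return box[0] <= x <= box[1] and box[2] <= y <= box[3]

def find_cell(node, cells, x, y):
    """Return (i, j) of a cell containing (x, y), or None."""
    if not inside(node["box"], x, y):
        return None
    if not node["children"]:
        i0, i1, j0, j1 = node["range"]
        for i in range(i0, i1):
            for j in range(j0, j1):
                if inside(cells[i][j], x, y):
                    return (i, j)
        return None  # dead end: the caller continues with a sibling box
    for child in node["children"]:
        hit = find_cell(child, cells, x, y)
        if hit is not None:
            return hit
    return None  # jump back to the previous (coarser) level

# 8 x 8 unit cells covering [0, 8] x [0, 8]
cells = [[(i, i + 1, j, j + 1) for j in range(8)] for i in range(8)]
root = build(cells, 0, 8, 0, 8)
print(find_cell(root, cells, 5.5, 2.25))  # (5, 2)
print(find_cell(root, cells, 9.0, 1.0))   # None
```

Returning `None` from a branch and continuing with the next child plays the role of the jump back to the lower level; on a curvilinear grid, where bounding boxes of neighbouring subsections overlap, this fallback is exercised far more often than in this rectangular toy case.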

A great advantage of the multi-grid algorithm is that it is only weakly dependent on the problem size. Doubling the grid size in each horizontal direction introduces only one more multi-grid level. For grids commonly used in climate modelling and for higher resolution grids, the overhead to set up the multi-grid hierarchy is more than compensated by the speedy search. OASIS4 is thus able to handle very large problem sizes (for example a global grid with 1/12° horizontal resolution) in a reasonable amount of time. The performance of the OASIS4 multi-grid search is further discussed and compared with the performance of a classical search in Sect. 8.4.
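The weak dependence on problem size can be made concrete by counting levels. The sketch below assumes that a direction is halved until about three points remain, as stated for the finest level; the function `hierarchy_levels` is hypothetical and only counts levels along one direction.

```python
def hierarchy_levels(n_points, finest=3):
    """Number of levels needed when halving n_points down to `finest`."""
    levels = 1
    while n_points > finest:
        n_points = (n_points + 1) // 2  # refinement factor of two
        levels += 1
    return levels

for n in (360, 720, 4320):  # 4320 points correspond to 1/12 degree in longitude
    print(n, hierarchy_levels(n))
```

Under these assumptions, going from 360 to 720 points adds exactly one level, and a 1/12° grid needs 12 levels, which is consistent with the order of ten levels quoted in the caption of Fig. 2.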

Fig. 2. Sketch of a multi-grid hierarchy for a local domain (neighbouring boxes overlap). Typically the number of cells is much higher and the PSMILe will construct O(10) levels.

Now that the containing source cell is identified, the next step is to determine the reference source point. The source points are used to construct an auxiliary cell grid where the source points serve as corners of the cells (see dashed and solid lines in Fig. 3). The task is then to locate the source-point cell which contains the projection of the respective target point. This is performed with a local search by investigating each of the four source-point cells that surround the point which belongs to the containing source cell. In this example, the source-point box built with points at indices [i, j], [i+1, j], [i, j+1], and [i+1, j+1] is chosen and the source cell of the point [i+1, j+1] (green box in Fig. 3) is found. This immediately directs us to identify the point with index [i, j] as the “lower-left” source point. This location is now stored as the reference source point for the particular target point.

In the final step, the remaining source neighbours are identified which are required to perform the interpolation requested by the user. For a bilinear interpolation, we are now able to identify the four corner points of the auxiliary cell (black open and solid bullets connected by solid lines) as the four neighbour source points needed. For a bicubic interpolation, sixteen neighbours have to be identified (the aforementioned bilinear points plus the open blue circles shown in Fig. 3).
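Given the reference (“lower-left”) source point [i, j], the two stencils follow directly from index offsets. The short sketch below uses hypothetical function names and ignores grid boundaries and masks.

```python
def bilinear_stencil(i, j):
    """Four corner points of the auxiliary cell with lower-left corner [i, j]."""
    return [(i + di, j + dj) for dj in (0, 1) for di in (0, 1)]

def bicubic_stencil(i, j):
    """Sixteen points from [i-1, j-1] to [i+2, j+2] around the same cell."""
    return [(i + di, j + dj) for dj in range(-1, 3) for di in range(-1, 3)]

print(bilinear_stencil(10, 20))
print(len(bicubic_stencil(10, 20)))  # 16
```

The bilinear points are a subset of the bicubic ones, which matches the index labels [i−1, j−1] to [i+2, j+2] shown in Fig. 3.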

Fig. 3. Building the interpolation star based on the initial location. The sketch shows the source points with indices [i−1, j−1] to [i+2, j+2]; its legend marks the target point, the reference source point, the associated cell area for point [i+1, j+1], and the additional source points for bilinear and for bicubic interpolation.

Typically, block-structured grids include masked points in their compute domain. The algorithm as described so far does not distinguish between masked and non-masked source points but works on all grid coordinates in the source grid compute domain. For some target points, some source points first identified as neighbour points may not be available for the interpolation if they are masked out, which means that the physical fields do not necessarily contain meaningful values on those points. In such cases the user has different choices via the XML configuration file. As a default option, the remaining valid points will be used for a weighted-distance interpolation. Another option is not to interpolate onto such target points. In the extreme case when all neighbour source points identified by the default search are masked, OASIS4 offers the possibility to search for the non-masked nearest-neighbour value and use this value for the target point. For this extra nearest-neighbour search, the algorithm starts from the original masked source grid point taken as an initial guess. Starting from the finest level containing the initial guess, the multi-grid hierarchy is then used again to locate the closest non-masked source points by moving in so-called w-cycles through the multi-grid hierarchy, that is, going down to a coarser level and going up again in another branch of the hierarchy repeatedly until the closest point is found.
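The default treatment of partly masked stencils can be sketched as below. The sketch assumes inverse-distance weights for the weighted-distance interpolation, which is an assumption made here for illustration; the function name and the tuple layout are hypothetical as well.

```python
def interpolate_with_mask(neighbours):
    """Distance-weighted value from the non-masked neighbours of one target.

    neighbours: list of (value, distance, is_valid) tuples.
    Returns None when every neighbour is masked; the caller may then skip the
    target point or start an extra nearest-neighbour search.
    """
    valid = [(value, dist) for value, dist, ok in neighbours if ok]
    if not valid:
        return None
    for value, dist in valid:
        if dist == 0.0:  # target coincides with a source point
            return value
    weights = [1.0 / dist for _, dist in valid]  # inverse distance (assumption)
    return sum(w * v for w, (v, _) in zip(weights, valid)) / sum(weights)

# Two of the four bilinear neighbours lie on land and are masked out.
stencil = [(10.0, 1.0, True), (20.0, 1.0, True),
           (99.0, 0.5, False), (99.0, 0.5, False)]
print(interpolate_with_mask(stencil))  # 15.0
```

Masked values never enter the sum, so the placeholder value 99.0 has no influence on the result.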

5.2 Search on domain-partitioned source grids

On modern parallel systems, the application domain is usually distributed to many processes and/or processors. If the interpolation of fields was performed using locally available data only, i.e. data which is available on the actual process, the result of the interpolation would depend on the actual partitioning. For example, if a target point is located close to the internal border of a partitioned domain of the source application, the standard interpolation stencil may require source points which are not located on the actual process but on a neighbour process. The current PSMILe search, called “global” search in the following, considers remote neighbour points appropriately, and therefore ensures that the interpolation is invariant to the domain partitioning.

The “global” search ensures that required data from source neighbour points located on remote processes is provided together with local source data to the OASIS4 Transformer. Since the definition of application grids is quite general and since the full connectivity information for partitioned grids is not provided to the OASIS4 library (and is not explicitly available in most of today’s climate model component codes), missing source points for interpolation stencils require an additional search step. In this additional search step, the missing source points for the interpolation are searched in remote neighbouring domains. For block-structured grids, remote neighbouring domains are identified by exchanging information about the global index space provided through the PRISM_Def_Partition function call. If these remote source neighbour points are found, the full information including the mask data is returned to the process which has initiated this additional search step. At run-time, this process will collect the data from possibly different processes and will forward the data for the full interpolation to the Transformer.
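How missing stencil points might be attributed to remote processes via the global index space can be sketched as follows. The partition description as half-open index ranges `(i0, i1, j0, j1)` per rank and both function names are assumptions made for this illustration; no communication is performed.

```python
def owner_of(point, partitions):
    """Rank whose global index range contains the point, or None."""
    i, j = point
    for rank, (i0, i1, j0, j1) in partitions.items():
        if i0 <= i < i1 and j0 <= j < j1:
            return rank
    return None

def missing_points_by_owner(stencil, local_rank, partitions):
    """Group the stencil points that are not local by the rank owning them."""
    requests = {}
    for point in stencil:
        rank = owner_of(point, partitions)
        if rank is not None and rank != local_rank:
            requests.setdefault(rank, []).append(point)
    return requests

# Source grid of 100 x 40 points split in two along the first index.
partitions = {0: (0, 50, 0, 40), 1: (50, 100, 0, 40)}
stencil = [(49, 10), (50, 10), (49, 11), (50, 11)]
print(missing_points_by_owner(stencil, 0, partitions))
```

Here the bilinear stencil straddles the partition border, so rank 0 would have to request two of the four points from rank 1 before forwarding the complete stencil data.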

5.3 Point-based search for Gauss-reduced grids

Gauss-reduced grids have been introduced mainly for atmospheric models, in particular the ARPEGE and IFS models (see for example Deque et al., 1994), to overcome problems resulting from the convergence of meridians close to the geographical poles. As these models and grids play an important role for the atmospheric modelling community, the decision has been made to support this type of grid as a special case. These grids cannot be classified as block-structured grids, nor are they completely unstructured. Instead of treating them as unstructured grids, we are in this particular case able to make use of some implicit knowledge about how the grid is constructed and introduce an intermediate step. In order to reuse as many of the existing functions as possible, we set up a block-structured auxiliary grid which is regular in longitude and latitude and determine the corresponding mapping of the Gauss-reduced grid points onto this auxiliary grid. We are now able to perform the initial search on the auxiliary grid and derive initial locations. By mapping them back onto the Gauss-reduced grid, we obtain the correct start location to continue the local search on the Gauss-reduced source grid in a similar fashion as already described for block-structured grids. In contrast to block-structured grids, the connectivity cannot be derived from the i–j indices directly. Instead we have to determine the connectivity among the grid points stored in a 1-D array in order to proceed with the local search; this is done based on the description of the Gauss-reduced grid provided by calling the routine PRISM_Reducedgrid_Map in the component code.


5.4 Cell-based search

Compared to the search described above for point-based interpolation schemes, the cell-based search has to follow a slightly different approach. In order to determine all necessary information for conservative remapping, the task here is to locate, for each target cell, its overlapping source cells. In order to again reuse as much functionality as possible, we perform a point-based search on the corner coordinates in a very similar way to the search described above in order to obtain the start location. The indices that we obtain for the start location refer to the data structure of the corner points. For block-structured grids, these indices can easily be mapped to cell indices. The cell indices point us to the location of the geographical information of the cell corners (or vertices of the element). For each target cell, we can now use this initial source cell to continue the local search and identify the remaining source cells which overlap a given target cell (Fig. 4). The intersection or overlap between a pair of source and target cells is determined by a pairwise investigation of the intersection of the edges without a detailed evaluation of the exact area of overlap. The numbering shown in Fig. 4 indicates the order in which the local search is performed on the source grid. In this particular case, 21 cells are investigated starting with the initial source cell 1. For each of the source cells, the overlap with the target cell is checked. Source cells with no overlap are marked as visited. Source cells that overlap with the target cell are marked and stored in memory. For an individual local search, the path through the source cells is not fixed but depends on the local problem.
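The local cell search can be pictured as a breadth-first walk that only expands from overlapping cells. The sketch below simplifies the geometry: cells are axis-aligned boxes and overlap is tested on the box extents instead of on edge intersections, so it illustrates the traversal only. All names are hypothetical.

```python
from collections import deque

def overlaps(a, b):
    """True if two boxes (xmin, xmax, ymin, ymax) share a region of positive area."""
    return a[0] < b[1] and b[0] < a[1] and a[2] < b[3] and b[2] < a[3]

def overlapping_source_cells(cells, start, target):
    """Walk outwards from `start`; return (overlapping cells, number visited)."""
    ni, nj = len(cells), len(cells[0])
    visited = {start}
    queue = deque([start])
    found = []
    while queue:
        i, j = queue.popleft()
        if not overlaps(cells[i][j], target):
            continue  # checked, no overlap: marked as visited, not expanded
        found.append((i, j))
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (i + di, j + dj)
            if 0 <= nxt[0] < ni and 0 <= nxt[1] < nj and nxt not in visited:
                visited.add(nxt)
                queue.append(nxt)
    return sorted(found), len(visited)

# 6 x 6 unit source cells; the target cell covers [1.5, 3.5] x [2.5, 4.5].
cells = [[(i, i + 1, j, j + 1) for j in range(6)] for i in range(6)]
target = (1.5, 3.5, 2.5, 4.5)
found, n_visited = overlapping_source_cells(cells, (1, 2), target)
print(len(found), n_visited)  # 9 21
```

In this toy configuration nine source cells overlap the target cell and twelve further cells are checked without overlap; the order of the visit depends on the start cell and the local geometry, as noted in the text.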

5.5 Optimisation

Fig. 4. Search of source cells for conservative remapping. Numbers indicate the sequence in which the source cells are investigated locally. The legend distinguishes checked source cells without overlap, source cells with overlap, and the target cell.

Most grids used in Earth system modelling are block-structured grids. Historically, codes have been developed for stand-alone models with non-global grids in mind. Particular issues regarding the connectivity are handled internally in the code to the extent needed for the advection and diffusion operators and other boundary conditions. For global grids, so-called cyclic boundary conditions have been introduced to guarantee a seamless continuation of the grid in the zonal direction. When approaching the geographical poles, additional ghost cells are introduced in order to properly calculate the operators along the zonal boundaries (see for example Madec and Imbard, 1996). When considering the i–j domain in the case of coupling, a target cell close to the pole may extend further than what is covered by the source ghost cells in the i–j space. As the codes lack the information about the connectivity at the poles, in general, an additional search in the geographical domain is necessary. Not all of this implicit and sometimes configuration-specific information is transparent to an external software package like the coupler. A new interface routine could be added to the API to transfer to the PSMILe any explicit connectivity information the applications would be able to provide. This would help to increase the efficiency of the search locally and across process boundaries beyond what is already achieved today.

The OASIS4 PSMILe search algorithms are designed to work on 3-D model domains. In general, one could think about treating all grids as 3-D fully irregular or unstructured grids. In this way, one would ignore important a priori information useful to speed up the search process. In order to take advantage of the existing a priori knowledge (in particular about regularity along any of the grid axes), different grid types are introduced. The different vertical layers of grids with vertical z-coordinates are usually a simple vertical translation of the same 2-D horizontal grid. In such cases, the search is split into two parts with a 2-D search in the horizontal plane and a 1-D search along the vertical axis. In the horizontal plane, if the different columns or rows of the grid are respectively just a longitudinal or latitudinal translation of one same column or row, the search can be conducted by performing a 1-D search along each axis, which further reduces the compute time.
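For a grid that is regular along every axis, the separable search reduces to independent 1-D look-ups. The sketch below uses the standard `bisect` module on sorted axis coordinates; the function names are hypothetical and the axes are assumed to be strictly increasing.

```python
from bisect import bisect_right

def locate(axis, value):
    """Index k with axis[k] <= value <= axis[k + 1], or None outside the axis."""
    if value < axis[0] or value > axis[-1]:
        return None
    # Clamp so that value == axis[-1] falls into the last interval.
    return min(bisect_right(axis, value) - 1, len(axis) - 2)

def locate_3d(lons, lats, depths, point):
    """Three independent 1-D searches instead of one 3-D search."""
    return tuple(locate(axis, v) for axis, v in zip((lons, lats, depths), point))

lons = [0.0, 90.0, 180.0, 270.0, 360.0]
lats = [-90.0, -30.0, 30.0, 90.0]
depths = [0.0, 10.0, 50.0, 200.0]
print(locate_3d(lons, lats, depths, (100.0, 0.0, 25.0)))  # (1, 1, 1)
```

Each look-up costs a logarithmic number of comparisons in the axis length, which is the kind of saving that regularity along a grid axis makes possible.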

Individual source points or cells are generally needed for the calculation of more than just one target grid point or cell. In order to minimize the amount of data to be transferred and stored, redundant geographical information (usually of type double precision or real) is removed and an index list is created to guarantee a well-defined and correct access to the geographical information. In order to allow the scattering onto the final n-dimensional destination array during the data exchange, these access index lists are also transmitted to the target process.
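The removal of redundant geographical information together with an access index list can be sketched as follows; the function name `compress` and the tuple representation of a source location are assumptions for illustration.

```python
def compress(stencil_points):
    """Return (unique points, access index list) for a list with repeats."""
    unique, index_of, access = [], {}, []
    for point in stencil_points:
        if point not in index_of:
            index_of[point] = len(unique)
            unique.append(point)  # each location is stored only once
        access.append(index_of[point])
    return unique, access

# Source locations requested by neighbouring target points share entries.
requested = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 0.0)]
unique, access = compress(requested)
print(unique)  # [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
print(access)  # [0, 1, 1, 2, 0]
```

The original sequence is recovered with `[unique[k] for k in access]`, so only the short integer list needs to accompany the reduced coordinate data.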


6 PSMILe-Transformer interaction and regridding

As outlined further in Sect. 7, the PSMILe supports two different ways of exchanging data, directly between two components or through the Transformer processes when regridding is required. Here we concentrate on the latter case and describe the transfer of information between the PSMILe and the Transformer and the regridding per se done on the coupling fields.

As the final result of the PSMILe search described in the last section, each source process holds 1-D lists, each list containing the geographical information relevant for the regridding of an intersection of the local source grid domain with one target process grid domain. As stated above, access index lists are created along with the raw geographical information. Hereafter, we will call these lists “intersection regridding lists”. These intersection regridding lists are equally distributed over the Transformer processes available. This ensures that the Transformer processes work in parallel over the regridding of the different source and target process intersections.
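One simple way to spread the intersection regridding lists evenly is a round-robin assignment. The text only states that the lists are equally distributed, so the scheme below is an assumption for illustration, and the function name is hypothetical.

```python
def distribute(n_lists, n_transformers):
    """Assign list indices 0..n_lists-1 to Transformer processes in turn."""
    return {t: list(range(t, n_lists, n_transformers))
            for t in range(n_transformers)}

print(distribute(7, 3))  # {0: [0, 3, 6], 1: [1, 4], 2: [2, 5]}
```

With more lists than Transformer processes, each process handles several intersections and the counts differ by at most one.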

During the run, the different Transformer processes wait for messages to arrive from any component process PSMILe, receive header messages containing information about the next message to receive or pending requests to fulfill, and perform related actions. They repeat this sequence of actions in an indefinite loop until the entire coupled run is finished. During the exchange phase, each Transformer process receives the grid point field values (transferred from the source component code with a PRISM_Put call) corresponding to its lists, calculates the regridding weights if it is the first exchange, and applies the weights. The resulting target values are made available to the corresponding target PSMILe process. The data are sent upon request from the respective target process (i.e. when a PRISM_Get is called in the target component code).

The calculation of the weights depends on the regridding algorithm chosen by the user in the XML configuration file, which can be different for each coupling field. The current version of OASIS4 supports purely 3-D regridding (3-D n-neighbour distance-weighted average or trilinear interpolation) and 2-D regridding in the horizontal plane (2-D n-neighbour distance-weighted average, bilinear, bicubic interpolation or conservative remapping) followed by a linear interpolation in the vertical. As in OASIS3, the 2-D algorithms are taken from the Spherical Coordinate Remapping and Interpolation Package (SCRIP) library (see Jones, 1999). In particular, for the 2-D conservative remapping, the contribution of each source cell is proportional to the fraction of the target cell it intersects; this ensures local conservation of extensive properties such as fluxes. The 3-D algorithms are 3-D extensions of the 2-D SCRIP algorithms. For a detailed description of the regridding the reader is therefore referred to Jones (1999) and to the SCRIP User’s Guide (see Jones, 2001). As the SCRIP bicubic algorithm is based on the function gradient in the i and j directions, it cannot be applied for the Gauss-reduced grids. For those grids, the SCRIP functionality has therefore been extended by a standard bicubic algorithm based on the 16 source neighbours.

Analysing the quality of the regridding algorithm is a delicate issue and no firm number can be given as the results depend on the source and target grids and on the characteristics of the regridded field. As an indication, we show here the error obtained when regridding the values of an analytical cosine bell function which presents one minimum and one maximum over the global Earth domain. For longitude λ and latitude φ this is expressed as

$$F_1 = 2 - \cos\left[\pi \cdot \arccos\left(\cos\lambda \, \cos\phi\right)\right]$$

The relative error, i.e. the difference between the regridded value and the analytical value of the function divided by the analytical value, is calculated on each target grid point. Figures 5–8 present the results with each pixel showing exactly this relative error for each target grid point. No projection in the latitude-longitude space has been performed to avoid any additional interpolation by the graphic package.
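The test function and the error measure translate directly into code. In the sketch below the function names are hypothetical and angles are assumed to be given in radians.

```python
import math

def f1(lon, lat):
    """Analytical test field F1; lon and lat in radians."""
    return 2.0 - math.cos(math.pi * math.acos(math.cos(lon) * math.cos(lat)))

def relative_error(regridded, lon, lat):
    """(regridded - analytical) / analytical at one target grid point."""
    exact = f1(lon, lat)
    return (regridded - exact) / exact

print(f1(0.0, 0.0))                    # 1.0
print(relative_error(1.01, 0.0, 0.0))  # close to 0.01, i.e. 1 %
```

Because the cosine term lies between −1 and 1, F1 stays within [1, 3], so the division by the analytical value is always well defined.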

Figure 5 shows the relative error obtained with the bilinear interpolation from the LMDz grid to the ORCA2 grid. LMDz (Hourdin et al., 2006) has a regular 3.75° × 2.535° grid. ORCA2 (Madec, 2008) is based on a 2° Mercator mesh (i.e. variation of meridian scale factor by cosine of the latitude); in the Northern Hemisphere the mesh has two poles so that the ratio of anisotropy is nearly one everywhere. The error is not shown for masked target points (grey areas). One can observe a relative error smaller than 0.4% over most of the domain. Near the coastline, for example near the west coast of northern South America, the error can reach 0.8% at maximum. For those points near the coastline, some source points that are identified as bilinear neighbours are masked out. The remaining (non-masked) valid points are used for a less precise weighted-distance interpolation resulting in a larger error.

Figure 6 shows the relative error obtained with the bicubic interpolation from a BT42 Gauss-reduced grid (with 6232 grid points in total distributed over 64 longitudinal circles) to the ORCA2 grid. The relative error in the equatorial and tropical regions of the basins is smaller than 0.4%, and grows slightly but not above 0.8% at higher or lower latitudes, which corresponds to regions where the Gaussian grid is effectively reduced. Again, the error is larger near the coastline, reaching 4.2% for some points; as for the bilinear interpolation, only the non-masked source neighbours are used for those points near the coastline, resulting in a less precise weighted-distance interpolation. The error near the coastline is larger in this regridding involving the BT42 grid than in the regridding involving the LMDz grid described above; this is linked to the fact that the BT42 grid has a much wider masked domain compared to the LMDz domain, and not to the interpolation algorithm.


Fig. 5. Relative error obtained (in %) with the bilinear regridding from the LMDz grid to the ORCA2 grid.

Fig. 6. Relative error obtained (in %) with the bicubic regridding from the Gauss-reduced grid to the ORCA2 grid.

Figure 7 shows the relative error obtained with the conservative remapping from the ORCA2 grid to the LMDz grid. The relative error is everywhere smaller than 0.2% except for the last row of the LMDz grid close to the North pole and near the coastline where it can reach respectively 1% and 2.2%. The SCRIP library assumes that the border of a cell between two cell corners is linear in longitude and latitude: near the North pole, this assumption becomes less valid and this can explain the larger error. Near the coastline, different normalisation options are available in the SCRIP library to calculate the value of the target cells which partially overlap masked source cells (see Jones, 2001). We chose the option to normalize by the area of the target cell intersecting non-masked source cells; this option does not locally conserve extensive properties but results in more realistic target values even if the error is still relatively large. With the alternative option, the normalisation by the full area of the target cell, the relative error (not shown) can become as large as 100%.
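The effect of the two normalisation choices on a coastal target cell can be illustrated numerically. The sketch below is not SCRIP code: the function is hypothetical, and the labels "fracarea" and "destarea" are used here following SCRIP terminology for the normalisation by the non-masked intersected area and by the full target cell area, respectively.

```python
def remap_target_cell(overlaps, target_area, normalise="fracarea"):
    """Conservative value of one target cell.

    overlaps: list of (source_value, overlap_area, source_is_valid) tuples.
    """
    valid = [(value, area) for value, area, ok in overlaps if ok]
    integral = sum(value * area for value, area in valid)
    if normalise == "fracarea":
        area = sum(a for _, a in valid)  # area covered by non-masked sources
    else:  # "destarea"
        area = target_area               # full area of the target cell
    return integral / area if area > 0.0 else None

# A target cell of unit area: one quarter over ocean (value 300), the rest
# over masked land whose value carries no meaning.
overlaps = [(300.0, 0.25, True), (0.0, 0.75, False)]
print(remap_target_cell(overlaps, 1.0, "fracarea"))  # 300.0
print(remap_target_cell(overlaps, 1.0, "destarea"))  # 75.0
```

With the full-area normalisation the target value is diluted by the masked fraction, here by 75%, which illustrates how the relative error can approach 100% for cells that are almost entirely masked.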

Fig. 7. Relative error obtained (in %) with the conservative remapping from the ORCA2 grid to the LMDz grid.

Fig. 8. Relative error obtained (in %) with the bilinear regridding from the LMDz grid to the ORCA2 grid when each component is partitioned over 3 processes and when the global search is not activated.

We note here that the data structure provided to the OASIS4 Transformer differs from the data structure sent to the OASIS3 Transformer. The source points that are used for a particular interpolation stencil may arrive in a different ordering. The data are multiplied with their individual weights and summed up. The different ordering during the summation may lead to truncation errors which become visible when comparing the results obtained with OASIS3 and with OASIS4 even though both software packages use the same regridding algorithms.
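The sensitivity of a weighted sum to the order of its terms is a general property of floating-point arithmetic. The deliberately extreme values in the sketch below are chosen to make the effect obvious; they are not coupling data, and the function name is hypothetical.

```python
def weighted_sum(values, weights):
    """Accumulate value * weight in the order in which the terms arrive."""
    total = 0.0
    for value, weight in zip(values, weights):
        total += value * weight
    return total

weights = [1.0, 1.0, 1.0, 1.0]
order_a = [1.0e16, 1.0, -1.0e16, 1.0]
order_b = [1.0e16, -1.0e16, 1.0, 1.0]  # same terms, different ordering
print(weighted_sum(order_a, weights))  # 1.0
print(weighted_sum(order_b, weights))  # 2.0
```

For realistic field values the differences are confined to the last digits, but they are enough to prevent bitwise identical results between two implementations of the same algorithm.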

Finally, as an illustration of the benefits of the global search (see Sect. 5.2), Fig. 8 shows the relative error obtained with the bilinear interpolation from the LMDz grid to the ORCA2 grid when each component is partitioned over 3 processes (in the longitudinal direction) and when the global search is not activated. Comparing with Fig. 5, we observe greater relative error (of the order of 1%, comparable to the maximum error obtained near the coastline) near the borders of the source partitions. This can be expected as for those target points the local search cannot find the usual 4 surrounding neighbours needed for the bilinear interpolation (as some of those 4 neighbours are located on a neighbour process domain). The remaining neighbours are used for a less precise weighted-distance interpolation resulting in a larger error. When the interpolation is performed with the global search described in Sect. 5.2, this greater relative error vanishes and regridded results (not shown) are exactly the same in the partitioned and not partitioned cases, which validates the global search.


7 Data exchange

The exchange of coupling data typically happens inside a loop over time or iterations and is activated many times during the execution of the coupled model. Therefore the handling of the data exchange is a time-critical task. One of the major targets is to minimise the communication overhead and to avoid load-balancing problems induced by the implementation of the exchange.

The data exchange is invoked by the component models using the PRISM_Put and PRISM_Get interface routines. These interface routines can be called individually by each model component at an arbitrary non-constant frequency. The coupling frequency is determined in the XML file at the beginning of the job. Inactive calls will return without performing any MPI calls. Only active PRISM calls (calls that are performed for a date at which an exchange has to occur) will call the MPI library to exchange data. OASIS4 verifies that the successive date bound arguments to these calls cover the run duration with no overlap and no gap and therefore takes care that the active calls have a one-to-one correspondence.

As described in Sect. 4.2, the routines can be used to read from and write to NetCDF files as well as sending data to and receiving data from another model component. The arguments of the subroutine calls are identical for IO and coupling operations, and the concrete action(s) are only specified in the component XML configuration file.

Figure 9 schematically illustrates possible communication pathways in a coupled application including the access of data from files. Depending on the respective grid configuration, the exchange of coupling data is handled in two different ways. Data points that reside on identical geographical locations on the source and target side are exchanged directly between the component processes (involving repartitioning if necessary), without the participation of Transformer processes. Data points for which a regridding is required are sent through the Transformer. Matching and non-matching grid points are identified by the PSMILe solely based on the geographical description of the grid. In order to reduce the number of messages to be exchanged, the current implementation requires that the matching points make up at least 10% of the total number of grid points to be sent. For any number below this threshold, matching data are exchanged together with non-matching data through the Transformer processes.
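The routing rule based on the 10% threshold can be written down as a small decision function. The function name and the returned dictionary are hypothetical; integer arithmetic is used for the comparison to avoid rounding issues at the threshold.

```python
def route(n_matching, n_total):
    """Split the points of one exchange between the two communication paths."""
    # Direct exchange only if matching points are at least 10 % of all points.
    if n_total > 0 and 10 * n_matching >= n_total:
        return {"direct": n_matching, "transformer": n_total - n_matching}
    return {"direct": 0, "transformer": n_total}

print(route(100, 1000))  # {'direct': 100, 'transformer': 900}
print(route(99, 1000))   # {'direct': 0, 'transformer': 1000}
```

Below the threshold a single message path is used for all points, which trades a little extra work in the Transformer for fewer messages.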

The data transfers, either direct or through the Transformer, are handled in parallel, in the sense that the source and grid point values corresponding to the different intersection regridding lists (see Sect. 6) are transferred separately. Gathering or scattering is never performed, and all component processes that are active in the coupling participate in the exchange. One Transformer process may treat several intersection regriddings when the number of source and target domain intersections exceeds the number of Transformer processes.

Fig. 9. Example of communication pathways in an OASIS4 coupled application (components shown: atmosphere & land surface, sea ice, ocean, and the Transformer).

To further optimise the exchange of data and to reduce the work load of the Transformer processes, only non-masked source and target points are transferred. On the receiver side, the non-masked data are scattered onto the destination array behind the PRISM_Get call and provided to the API in the appropriate array shape, leaving masked target points untouched. The calculation of regridding weights in the Transformer is delayed until the first exchange for which a particular set of weights is required. The weights are then stored in the Transformer for subsequent exchanges.
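Both optimisations, scattering only non-masked values and computing weights on first use, can be sketched in a few lines. The names `scatter_unmasked` and `WeightCache` are hypothetical and the weight computation is a placeholder.

```python
def scatter_unmasked(received, mask, destination):
    """Write received values to the non-masked positions of destination."""
    values = iter(received)
    for k, is_valid in enumerate(mask):
        if is_valid:
            destination[k] = next(values)  # masked entries stay untouched
    return destination

class WeightCache:
    """Compute a set of weights on first request and reuse it afterwards."""

    def __init__(self, compute):
        self._compute = compute
        self._weights = {}
        self.computations = 0

    def get(self, key):
        if key not in self._weights:
            self._weights[key] = self._compute(key)
            self.computations += 1
        return self._weights[key]

field = [-9.0] * 5  # destination array with a fill value on every point
mask = [True, False, True, True, False]
print(scatter_unmasked([1.0, 2.0, 3.0], mask, field))

cache = WeightCache(lambda key: [0.25] * 4)  # placeholder weight calculation
cache.get("sst_atm_to_oce")
cache.get("sst_atm_to_oce")
print(cache.computations)  # 1
```

The second request for the same key returns the stored weights, which corresponds to the reuse of weights in all exchanges after the first one.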

It is worth mentioning that the data are buffered in the PSMILe library on the sender side outside the MPI library. Data are transferred with a so-called non-blocking MPI function (MPI_Isend). With a standard-conforming MPI implementation, this guarantees no overflow in MPI internal buffers. The PRISM_Put will immediately return when the data are stored locally even if the destination process (be it the Transformer or another component in case of direct communication) is not yet ready to receive data. On the sender side the user code outside the PSMILe can safely progress and reuse its own local memory. The PRISM_Get on the other hand will only return when the data are received.

The IO layer is based on the mppio package as described by Balaji (2001). For the OASIS4 PSMILe IO, various modes are supported which become especially relevant if components are parallelised. The default behaviour of the PSMILe is, for output, to collect all data on the component root process and write out data into a single file or, for input, to read data on the component root process and distribute it internally. While this approach is still applicable when the domain partitioning is changing from job to job (especially when thinking about coupling restart files), it may not be the most efficient way to deal with the IO. As an alternative approach, all IO processes read data from and write data to individual files. For further analysis these files have to be glued together in a postprocessing step. In order to support a fully parallel IO, the mppio package has been extended to be compliant with the pNetCDF library (Li et al., 2003). When linked against pNetCDF, data are written into and read from a single file via MPI-IO with no pre- or postprocessing required. From a user perspective, this approach is invariant to changes in domain partitioning and should also provide a reasonable IO performance provided that the respective MPI-IO implementation efficiently uses the underlying system architecture (memory layout and file system).

During the data exchange and IO, the PSMILe is able to invoke some local transformation routines like time-accumulation, time-averaging, gathering and scattering of physical fields. It performs the required transformation locally on the source side before the exchange with other components of the coupled system or IO. In this case only the end product is communicated to the target.
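A local time-averaging transformation on the source side can be pictured as an accumulator that releases a field only at the end of each averaging period. The class below is a hypothetical sketch with fields represented as plain lists; it does not reproduce the PSMILe interface.

```python
class TimeAverager:
    """Accumulate fields locally and release their mean every `period` calls."""

    def __init__(self, period):
        self.period = period
        self.count = 0
        self.total = None

    def put(self, field):
        if self.total is None:
            self.total = [0.0] * len(field)
        self.total = [t + f for t, f in zip(self.total, field)]
        self.count += 1
        if self.count < self.period:
            return None  # nothing is communicated for this call
        mean = [t / self.count for t in self.total]
        self.count, self.total = 0, None
        return mean      # only the end product leaves the source side

averager = TimeAverager(period=3)
results = [averager.put([float(step), 10.0]) for step in (1, 2, 3)]
print(results)  # [None, None, [2.0, 10.0]]
```

Two of the three calls produce no message at all, which shows why performing the transformation before the exchange reduces the communication volume.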

8 Performance

Before seriously considering a new software for a coupled system, a user typically asks for the cost of coupling and the overhead one has to consider for the coupling. Preferably, a user would like to have exact numbers at hand in terms of CPU seconds or a percentage of time relative to a non-coupled system. For full ESMs, it is difficult to predict the additional time needed for the coupling. To some degree, this depends on the search that has to be performed on a variety of grids for a larger number of exchange fields and different regriddings. Another important aspect is the run-time behaviour of the different ESM components and the load-(im)balancing between the processes involved. This may strongly influence the time needed to perform a data exchange. ESMs differ from each other in the above mentioned aspects, and providing a number for a particular ESM is of little help and not in the scope of this article. In addition, the picture may again change completely when an ESM job is running in batch mode and eventually has to share some resource with competing applications. In the following, our aim is to give a first idea about the potential cost for coupling and to provide information about the efficiency of the coupling software as such. Therefore we try to exclude the aforementioned side effects as much as possible. We will revisit this subject in our discussion in Sect. 9. We now use a simplified test case application to demonstrate the performance of the OASIS4 search algorithm and the data exchange and compare them with OASIS3 performance.

8.1 Benchmark design

Here we investigate the so-called “strong scaling” properties of OASIS4. By keeping the global problem size constant, we expect the CPU time of individual processes to decrease in proportion to the local problem size as the problem is distributed over a larger number of processes. While this in general reflects the typical situation the user is confronted with, strong scaling is considered more difficult to achieve (in contrast to weak scaling, where the local problem size is kept constant and the global problem to be solved grows with the number of processes used). The test case we are considering now is a bidirectional exchange of 2-dimensional data between a global “atmospheric” grid for component A and an “ocean” grid for component B, ranging from 0° to 360° in longitude and latitudes φ between 70° S and 70° N. Both grids are described as horizontally irregular grids in order to bypass the optimised routines that are available for completely regular grids and to mimic a large class of use cases: the 2-D data exchange between any two physical components at the Earth's surface. Some of the ocean and atmosphere grid points have been masked out in order to bypass additional optimisation steps of the search algorithm which can be applied if complete non-masked grids are treated. The components are partitioned in latitude direction. The search is performed for bilinear interpolation without requesting an extra nearest-neighbour search for target points that only have masked “bilinear” source points (see Sect. 5.1). The search is performed for one exchange field for each direction.
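The latitude-wise partitioning and the shrinking local problem size under strong scaling can be illustrated with a short sketch. The function names and the even split into contiguous bands are assumptions made for illustration; the text does not specify how the benchmark distributes latitude rows among processes.

```python
def latitude_bands(n_lat, n_procs):
    """Split n_lat latitude rows into n_procs contiguous, near-equal bands.

    Returns a list of (start, stop) row indices, one pair per process.
    """
    base, extra = divmod(n_lat, n_procs)
    bands, start = [], 0
    for rank in range(n_procs):
        rows = base + (1 if rank < extra else 0)  # first ranks take the remainder
        bands.append((start, start + rows))
        start += rows
    return bands


def local_points(n_lon, n_lat, n_procs):
    """Grid points held by each process; the global size stays n_lon * n_lat."""
    return [n_lon * (stop - start) for start, stop in latitude_bands(n_lat, n_procs)]


# Toy grid with 8 longitudes and 10 latitude rows on 4 processes.
print(latitude_bands(10, 4))   # [(0, 3), (3, 6), (6, 8), (8, 10)]
print(local_points(8, 10, 4))  # [24, 24, 16, 16]
```

Under ideal strong scaling, the time per process would follow the largest entry of `local_points`, which roughly halves each time the number of processes doubles.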

In Sect. 8.2, we consider a T255 “atmospheric” grid with 768×385 grid points for component A and an “ocean” grid with 0.3° × cos φ horizontal resolution corresponding to 1202×665 grid points for component B. In an additional test described in Sect. 8.3, we have expanded the problem into the vertical dimension towards a full 3-D search and data exchange with 40 levels for component A and 45 levels for component B. In Sect. 8.4, the resolutions of component A and component B are increased from T21 to T255 and from 4.0° × cos φ to 0.5° × cos φ, respectively.
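To give a feeling for the problem sizes involved, the grid dimensions and level counts quoted above can be multiplied out. The sketch below is plain arithmetic on those numbers; the resulting counts are upper bounds, since some grid points are masked out in the benchmark and the number of masked points is not given here.

```python
# Horizontal dimensions (longitude x latitude) quoted for Sect. 8.2.
GRIDS = {"A": (768, 385), "B": (1202, 665)}
# Number of vertical levels used in the 3-D test of Sect. 8.3.
LEVELS = {"A": 40, "B": 45}


def points_2d(name):
    """Total number of horizontal grid points, masked points included."""
    n_lon, n_lat = GRIDS[name]
    return n_lon * n_lat


def points_3d(name):
    """Total number of grid points when all vertical levels are included."""
    return points_2d(name) * LEVELS[name]


for name in GRIDS:
    print(name, points_2d(name), points_3d(name))
# A 295680 11827200
# B 799330 35969850
```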

8.2 2-D search and exchange

For these particular benchmark calculations and for the 3-D cases described in Sect. 8.3, the OASIS4 sources have been compiled with the Intel Fortran compiler version 10.1 and the GNU C compiler version 4.1.2, both using default compiler switches without any further optimisation. The code has been run on a local PC cluster. Each node of the cluster is equipped with two single-core 2.0 GHz AMD Opteron 246 processors and 4 GB of memory, and the nodes are connected via a 2 Gigabit Myrinet. For the message passing, we use the Message Passing Interface Chameleon with the proprietary Glenn's Messages communication layer (MPICH-GM) provided by Myricom. For all tests, we have used the MPI1 method where all processes have to be started at once (see Sect. 3).

The numbers presented in Table 2 show the wall-clock time needed to perform the search for the bilinear interpolation, the time needed to perform the first exchange of data (which includes the calculation of the weights for the bilinear interpolation in the Transformer), and the time needed for subsequent data exchanges (averaged over 100 data exchanges). When repeating the measurements, the differences in time are in the order of a few milliseconds. Therefore we decided not to include any statistics, as this would not change the general picture we are going to discuss.

Table 2. Performance of OASIS4 tasks obtained on a PC cluster for 2-D search.

number of processes for   time in seconds for
Driver  atmos  ocean      search  atm 1st exchng  ocn 1st exchng  atm nth exchng  ocn nth exchng

1       1      1          6.049   0.224           0.248           0.077           0.101
2       1      1          6.050   0.087           0.167           0.046           0.064
4       1      1          6.062   0.089           0.167           0.046           0.064

1       2      2          6.487   0.241           0.237           0.104           0.097
2       2      2          6.484   0.122           0.124           0.052           0.054
4       2      2          6.468   0.114           0.120           0.043           0.049
8       2      2          6.486   0.049           0.101           0.041           0.032

1       4      4          2.425   0.254           0.257           0.075           0.083
2       4      4          2.385   0.195           0.183           0.073           0.078
4       4      4          2.412   0.093           0.115           0.033           0.043

1       8      8          1.174   0.234           0.238           0.092           0.090
2       8      8          1.172   0.147           0.152           0.045           0.049
4       8      8          1.179   0.097           0.097           0.037           0.035

1       16     16         0.826   0.226           0.225           0.113           0.114
2       16     16         0.779   0.137           0.136           0.061           0.065
4       16     16         0.791   0.092           0.091           0.041           0.042
8       16     16         0.771   0.042           0.045           0.018           0.025

The time needed for the search can be represented by the time needed for the PRISMEnddef routine. The search is performed on the two sets of source component processes as the component A target points are searched for on the component B processes and vice versa. As the PRISMEnddef and subsequent calls therein contain blocking MPI calls, the total time needed by the PRISMEnddef is to a large extent controlled by the source process which has to solve the largest problem (mainly determined by the number of target points to be processed). The time spent in PRISMEnddef is almost identical for all processes due to this blocking nature of the MPI function calls used inside. Therefore only one number, the time needed by the component process with the largest data load, is shown in the table for each setting.

In general, the wall-clock time needed for the search decreases with the number of processes dedicated to the components: in this case, the speed-up is roughly a factor of 8 when going from 2 to 16 processes. When going from one to two component processes, the time for the search increases slightly. This can be attributed to the different algorithms used, as in the one-processor case certain subroutines do not have to be called simply because no boundary exchange is required on the source grid. When the number of processes is increased further, the required wall-clock time is reduced significantly to values well below 1 s for the 2-D data exchange on 16 processors. At this stage, the number of Transformer processes is not of critical importance as they are not involved in the search. Communication with the Transformer is established only when the search for an intersection subdomain has been completed and search results are delivered to the Transformer process(es) (see Sect. 6).
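The speed-up quoted above can be reproduced directly from the search times in Table 2. The following sketch uses the rows with one Driver process; the helper names `speedup` and `efficiency` are ours, and efficiency is defined here as the speed-up divided by the ideal factor, which is a common convention rather than something defined in the text.

```python
# Search times in seconds from Table 2 (rows with one Driver process),
# keyed by the number of processes per component.
SEARCH_TIME = {1: 6.049, 2: 6.487, 4: 2.425, 8: 1.174, 16: 0.826}


def speedup(p_ref, p):
    """Ratio of the search time on p_ref processes to that on p processes."""
    return SEARCH_TIME[p_ref] / SEARCH_TIME[p]


def efficiency(p_ref, p):
    """Speed-up divided by the ideal factor p / p_ref (1.0 means ideal scaling)."""
    return speedup(p_ref, p) / (p / p_ref)


print(round(speedup(2, 16), 2))     # 7.85, i.e. roughly a factor of 8
print(round(efficiency(2, 16), 2))  # 0.98
print(round(speedup(1, 2), 2))      # 0.93, the slight slowdown from 1 to 2
```

An efficiency above 1, as obtained for `efficiency(2, 4)`, indicates that the time dropped by more than the ideal factor.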

In this example, a data exchange can be understood as one pair of PRISMPut and PRISMGet operations in each component (referred to as ping-pong). The time needed for a complete ping-pong usually is different on each process. Load-balancing problems provide one explanation for this behaviour. Even though the PSMILe library uses non-blocking send operations for the data exchange, this can only reduce load-balancing problems, but such problems cannot be solved inside the library. Furthermore, it may happen that one instance of the coupled system may have to receive more than just one message at a time. This is for example the case if only one Transformer process is available and two source components are trying to send or receive data at the same time. In this case, the messages can only be processed one after the other by the Transformer, causing other component processes to wait. As only non-masked data are exchanged between the components, data are subsampled prior to the send operation and have to be scattered on the receiver side before they are delivered to the user space. This requires additional operations which are also captured by our time measurements for the ping-pong. In order to provide only a single number for the ping-pong, we show the time needed by the slowest component processor to complete the exchange.
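The subsampling of non-masked data before the send and the scattering on the receiver side can be sketched as follows. This is a simplified illustration, not the PSMILe code: the function names `pack` and `scatter` are hypothetical, the interpolation that happens between the two steps is omitted, and the same mask is assumed on both sides so that the round trip can be checked.

```python
def pack(field, mask):
    """Source side: keep only the values whose mask entry is True (non-masked)."""
    return [value for value, keep in zip(field, mask) if keep]


def scatter(packed, mask, fill=None):
    """Receiver side: put packed values back at their non-masked positions.

    Masked positions receive the fill value.
    """
    values = iter(packed)
    return [next(values) if keep else fill for keep in mask]


field = [1.0, 2.0, 3.0, 4.0]
mask = [True, False, True, True]  # the second point is masked out

packed = pack(field, mask)
print(packed)                           # [1.0, 3.0, 4.0]
print(scatter(packed, mask, fill=0.0))  # [1.0, 0.0, 3.0, 4.0]
```

Only the packed values travel between processes, which reduces the message size at the price of the two extra passes over the data that are included in the ping-pong timings.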


We observe a general decrease of the wall-clock time for the exchange with an increasing number of Transformer processes available (Table 2). One reason is that the likelihood increases that the Transformer process is ready to receive, process and send data. The other reason is that the amount of data to be treated is reduced for each process. The only case when increasing the number of Transformer processes does not result in a decrease of the exchange wall-clock time is when the components themselves are not parallel and when the number of processes for the Transformer goes from 2 to 4. In this case, there are only two distinct intersection regridding lists (see Sect. 6), i.e. one when considering one component as the source and another one when considering the other component as the source. As the different Transformer processes work in parallel over the regridding of the different source and target process intersections, the 2 additional processes (when going from 2 to 4 processes) do not get any workload and do not participate in the data exchange.
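Why two extra Transformer processes stay idle in the non-parallel case can be seen from a toy work-distribution model. The round-robin assignment below is an assumption made purely for illustration; the text states only that the Transformer processes work in parallel over the intersection regridding lists, not how the lists are assigned.

```python
def assign_lists(n_lists, n_transformers):
    """Distribute regridding lists over Transformer processes (round-robin).

    Returns one list of regridding-list indices per Transformer process.
    The round-robin policy is a hypothetical choice for this sketch.
    """
    work = [[] for _ in range(n_transformers)]
    for index in range(n_lists):
        work[index % n_transformers].append(index)
    return work


def busy_processes(n_lists, n_transformers):
    """Number of Transformer processes that receive at least one list."""
    return sum(1 for lists in assign_lists(n_lists, n_transformers) if lists)


# Non-parallel components: one regridding list per exchange direction.
for n_transformers in (1, 2, 4):
    print(n_transformers, busy_processes(2, n_transformers))
# 1 1
# 2 2
# 4 2
```

With only two lists available, going from 2 to 4 Transformer processes leaves two of them without work, in line with the nearly unchanged exchange times in the first block of Table 2.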

During the first exchange of data, in addition to the processing of the exchange fields, the Transformer has to calculate the weights which are then stored and reused for subsequent data exchanges. This additional workload is responsible for the longer time needed to perform the first exchange when comparing with the time needed for subsequent exchanges.
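The compute-once, reuse-afterwards pattern behind the difference between the first and the n-th exchange can be sketched as below. The class `Regridder`, its weight layout (one list of `(source_index, weight)` pairs per target point) and the toy weights are hypothetical and do not reproduce the Transformer's internal data structures.

```python
class Regridder:
    """Compute interpolation weights on the first exchange and reuse them later.

    Hypothetical illustration; not the OASIS4 Transformer implementation.
    """

    def __init__(self, compute_weights):
        self._compute_weights = compute_weights  # expensive, should run once
        self._weights = None
        self.weight_computations = 0

    def exchange(self, source_values):
        if self._weights is None:  # only true for the first exchange
            self._weights = self._compute_weights()
            self.weight_computations += 1
        # Each target value is a weighted sum of a few source values.
        return [
            sum(weight * source_values[index] for index, weight in row)
            for row in self._weights
        ]


def toy_weights():
    """Two target points: a midpoint average and a direct copy."""
    return [[(0, 0.5), (1, 0.5)], [(1, 1.0)]]


regridder = Regridder(toy_weights)
print(regridder.exchange([2.0, 4.0]))  # [3.0, 4.0]
print(regridder.exchange([0.0, 8.0]))  # [4.0, 8.0]
print(regridder.weight_computations)   # 1
```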

We also observe that for a fixed number of Transformer processes the exchange time is fairly independent of the number of application processes. As the data are exchanged through the Transformer processes, the Transformer can act as a bottleneck in the exchange if it is not sufficiently parallel. In general, it is the number of available Transformer processes that dictates the speed of the exchange and not the parallelisation of the components themselves.

Finally, we notice that the scaling behaviour is in general not fully predictable; this can mainly be attributed to the fact that a particular Transformer process may still be busy with other tasks, or that data are sometimes not available from the source component or are delivered only with a delay due to occasional load imbalance. We observe super-scalability when going in our case from 2 to 4 processes for the components. In the 2-D case this super-scalability is also present when increasing the number of component processes further from 4 to 8 processes. Cache effects provide one possible explanation for this behaviour. A more efficient scheduling of the processes with a reduced waiting time for messages to arrive is another possible explanation. A finer partitioning of the target grid may also fit the remote partitioning better and thus reduce the overhead during the initial search.

8.3 3-D data exchange

The general picture does not change much with an increasing amount of data to be exchanged, for example going from the 2-D to a 3-D coupling, i.e. when exchanging data between 40 levels for component A and 45 levels for component B (see Table 3). As in the 2-D case, the efficiency of the search mainly depends on the number of component processes available. The wall-clock time decreases by roughly a factor of 6 when going from 2 to 16 component processes. The time needed for the search decreases to only a few seconds when employing four or more application processes, which is likely the case for applications running with grid sizes similar to our example. With an increasing number of Transformer processes the exchange time is reduced as in the 2-D case.

8.4 Performance of the multi-grid search and parallel regridding

To evaluate the performance of OASIS4 and in particular the efficiency of the multi-grid algorithm, the benchmark was adapted to the OASIS3 coupler and additional 2-D coupled runs were carried out for different resolutions of components A and B. These additional runs were performed on a Linux PC with a single-core Intel Pentium 4 CPU at 3.20 GHz and with MPICH-1 message passing. OASIS4, OASIS3 and the benchmark sources were compiled with the Portland Group Fortran Compiler 9.0-4 and with the GNU C compiler 4.4.1. One process was started for each component and the driver.

The results are presented in Table 4 for OASIS4 and in Table 5 for OASIS3. In each case, five runs were carried out with a resolution ranging from T21 (2244 grid points) to T255 (295 680 grid points) for component A, and ranging from 4° (4692 grid points) to 0.5° (288 078 grid points) for component B. In all cases, the Transformer and the components were running with one process each. The numbers provided in the tables give the time for the search and the 1st exchange as measured in component A (3rd column) and in component B (4th column). We provide these measures to have a comparable basis: with OASIS3, the neighborhood search and the weight calculation are both done during the 1st exchange, whereas with OASIS4 they are done, respectively, during the initialisation phase below the PRISMEnddef call and during the 1st exchange. In addition, Tables 4 and 5 show the time for the n-th exchange in component A (5th column) and in component B (6th column).

By comparing Table 4 and Table 5, we see that even at relatively low resolution (2244 and 4692 grid points for components A and B, respectively) OASIS3 is about two times slower than OASIS4. The difference gets bigger with increasing resolution: the time required for the neighborhood search and the first exchange (including the weights calculation) increases with O(N) for OASIS4 (see Fig. 10) and with O(N²) for OASIS3. Even the time for subsequent exchanges is always about 50% higher with OASIS3 than with OASIS4.
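What the two scaling laws imply over the tested resolution range can be worked out from the grid sizes alone. The sketch below is a back-of-the-envelope calculation using the component A grid sizes quoted above; it does not use the measured times of Tables 4 and 5, and it ignores constant factors and lower-order terms.

```python
# Component A grid points at the lowest (T21) and highest (T255) resolution.
N_LOW, N_HIGH = 2244, 295680


def cost_growth(n_low, n_high, exponent):
    """Factor by which a cost proportional to N**exponent grows from n_low to n_high."""
    return (n_high / n_low) ** exponent


linear = cost_growth(N_LOW, N_HIGH, 1)     # O(N) behaviour
quadratic = cost_growth(N_LOW, N_HIGH, 2)  # O(N^2) behaviour

print(round(linear))     # 132
print(round(quadratic))  # 17362
```

Under these idealised assumptions, an O(N) search becomes about 130 times more expensive between T21 and T255, whereas an O(N²) search becomes more than 17 000 times more expensive, which is why the gap between the two couplers widens with resolution.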

These numbers clearly demonstrate the benefit of the multi-grid neighborhood search when compared to a classical search and the increased general performance of OASIS4 over OASIS3 even in this simple non-parallel case.