Neural Techniques for Pose Guided Image Generation · Neural Techniques for Pose Guided Image...

Transcript of Neural Techniques for Pose Guided Image Generation · Neural Techniques for Pose Guided Image...

Neural Techniques for Pose Guided Image GenerationAyush Gupta ([email protected]), Shreyash Pandey ([email protected]), Vivekkumar Patel ([email protected])

2018

Introduction•To sythesize person images based on acondition image and desired pose.

•Extend the PG2 model [3] for betterperformance and speedier training.

• Incorporate WGANs for robust and morestable training.

•Use a triplet classification based discriminatorarchitecture for model simplification and speed.

Data Preparation

Dataset•The DeepFashion (In-shop Clothes RetrievalBenchmark) dataset is used.

• It consists of 52,712 number of in-shop clothesimages and around 200,000 cross-pose/scale pairs.

•Each image has a resolution of 256 x 256.Processing•Filtered for non-single and complete images. Splitinto 32,031 training and 7,996 test images.

•Training set augmented to 57,520 images afterleft-right flip and filtering non-different images.

•Gives 127,022 training ex.; 18,568 test ex.•A state-of-the-art pose estimator [2] gives 18 posekeypoints of images. Stored as pickle files.

•The keypoints were also used to generate ahuman body mask by morphologicaltransformations for Stage-1 training

•Thus, each example stored in HDF5 contained acondition image, target image, 18 channel targetpose info, and a single channel pose mask.

•Each image pair was normalized by a mean of127.5, and std. of 127.5.

•Poses normalized to be between -1 and +1.

Original Architecture

•Stage-1 has a U-net like architecture. Skipconnections help in transferring appearanceinformation to the output.

•Stage-2 is a conditional DCGAN that generates adifference map.

•Stage-2 Discriminator differentiates between pairsof images. Real pair has condition and targetimage. Fake pair has condition and generatedimage.

Pose Mask Loss

•We calculate the loss for stage-1 as follows:L = ||(IB − IB) ∗ (1 + MB)||1

•Masking by pose forces the model to ignore thebackground variations.

Our Modifications

•Discriminator is now similar to a classifier. Targetimage has label 1, generated image and conditionimage have label 0.

•Using WGAN training to train the conditionalDCGAN in stage-2.

•With WGAN, Discriminator is trained for N (=10) iterations for every iteration on Generator.

Implementation Details

•With a batch-size of 4, Stage-1 is trained for 40ksteps followed by 25k steps for Stage-2.

•Adam Optimizer is used for Stage-1 and Stage-2,except for WGAN in which we use RMSProp forStage-2.

•Learning rates for each of these stages are set to5e-5.

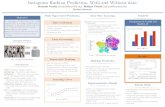

SSIM and IS scores

No. Model IS SSIM1 G1 2.58 0.722 G1 + G2 + D 2.993 0.713 Triplet 3.01 0.7274 WGAN-triplet 3.02 0.735 Ma et. al. 3.091 0.766 Target 3.25 1

Table 1: Ma et al. was the original paper. Target denotes thetarget test set.

Generation Results

•Shown above are results of WGAN-triplet modelin the following order: from left to right,condition image, target pose, Stage-1 result,Stage-2 result and target image.

•Stage-1 combines the target pose and appearance.•Stage-2 generates a sharper image after using thecoarse result.

Failures

Sharpness visualized via Gradients

•Stage-2 helps in generating sharper images.

Conclusion and Future Work

•Two-stage architecture is able to transfer posewhile retaining appearance.

• Incorporating triplet classification and WGANleads to faster convergence and more robusttraining.

•Failure modes include target poses that involvecloseups of people, and attires such as jacketsthat look different from different angles. Moredata could potentially solve these problems.

•One extension could be to extend this model to asemi-supervised setting where pose is supervisedand appearance is unsupervised. This will allowarbitrary manipulation of poses [1].

References[1] Rodrigo de Bem et al. “DGPose: Disentangled Semi-supervised Deep

Generative Models for Human Body Analysis”. In: arXiv preprintarXiv:1804.06364 (2018).

[2] Zhe Cao et al. “Realtime multi-person 2d pose estimation using partaffinity fields”. In: CVPR. Vol. 1. 2. 2017, p. 7.

[3] Liqian Ma et al. “Pose guided person image generation”. In: Advancesin Neural Information Processing Systems. 2017, pp. 405–415.