
Module 1

Probability

1. Introduction

In our daily life we come across many processes whose nature cannot be predicted in advance.

Such processes are referred to as random processes. The only way to derive information about

random processes is to conduct experiments. Each such experiment results in an outcome

which cannot be predicted beforehand. In fact even if the experiment is repeated under

identical conditions, due to presence of factors which are beyond control, outcomes of the

experiment may vary from trial to trial. However we may know in advance that each outcome

of the experiment will result in one of the several given possibilities. For example, in the cast of

a die under a fixed environment the outcome (number of dots on the upper face of the die)

cannot be predicted in advance and it varies from trial to trial. However we know in advance

that the outcome has to be one of the numbers 1, 2, …, 6. Probability theory deals with

the modeling and study of random processes. The field of Statistics is closely related to

probability theory and it deals with drawing inferences from the data pertaining to random

processes.

Definition 1.1

(i) A random experiment is an experiment in which:

(a) the set of all possible outcomes of the experiment is known in advance;

(b) the outcome of a particular performance (trial) of the experiment cannot be

predicted in advance;

(c) the experiment can be repeated under identical conditions.

(ii) The collection of all possible outcomes of a random experiment is called the sample

space. A sample space will usually be denoted by $\Omega$. ▄

Example 1.1

(i) In the random experiment of casting a die one may take the sample space as $\Omega = \{1, 2, 3, 4, 5, 6\}$, where $i \in \Omega$ indicates that the experiment results in $i$ $(i = 1, \ldots, 6)$ dots on the upper face of the die.

(ii) In the random experiment of simultaneously flipping a coin and casting a die one may take the sample space as

$$\Omega = \{H, T\} \times \{1, 2, \ldots, 6\} = \{(r, i) : r \in \{H, T\},\ i \in \{1, 2, \ldots, 6\}\},$$

where $(H, i)$ ($(T, i)$) indicates that the flip of the coin resulted in head (tail) on the upper face and the cast of the die resulted in $i$ $(i = 1, 2, \ldots, 6)$ dots on the upper face.

(iii) Consider an experiment where a coin is tossed repeatedly until a head is observed. In this case the sample space may be taken as $\Omega = \{1, 2, \ldots\}$ (or $\Omega = \{H, TH, TTH, \ldots\}$), where $i \in \Omega$ (or $TT\cdots TH \in \Omega$ with $(i - 1)$ Ts and one H) indicates that the experiment terminates on the $i$-th trial, with the first $i - 1$ trials resulting in tails on the upper face and the $i$-th trial resulting in a head on the upper face.

(iv) In the random experiment of measuring lifetimes (in hours) of a particular brand of batteries manufactured by a company one may take $\Omega = [0, 70{,}000]$, where we have assumed that no battery lasts for more than 70,000 hours. ▄

Definition 1.2

(i) Let $\Omega$ be the sample space of a random experiment and let $E \subseteq \Omega$. If the outcome of the random experiment is a member of the set $E$ we say that the event $E$ has occurred.

(ii) Two events $E_1$ and $E_2$ are said to be mutually exclusive if they cannot occur simultaneously, i.e., if $E_1 \cap E_2 = \emptyset$, the empty set. ▄

In a random experiment some events may be more likely to occur than the others. For

example, in the cast of a fair die (a die that is not biased towards any particular outcome),

the occurrence of an odd number of dots on the upper face is more likely than the

occurrence of 2 or 4 dots on the upper face. Thus it may be desirable to quantify the likelihoods of occurrences of various events. The probability of an event is a numerical measure of the chance with which that event occurs. To assign probabilities to various events associated with a random experiment one may assign a real number $P(E) \in [0, 1]$ to each event $E$ with the interpretation that there is a $(100 \times P(E))\%$ chance that the event $E$ will occur and a $(100 \times (1 - P(E)))\%$ chance that the event $E$ will not occur. For example, if the probability of an event is 0.25 it would mean that there is a 25% chance that the event will occur and a 75% chance that it will not occur. Note that, for any such assignment of probabilities to be meaningful, one must have $P(\Omega) = 1$. Now we will discuss

two methods of assigning probabilities.

I. Classical Method

This method of assigning probabilities is used for random experiments which result in a

finite number of equally likely outcomes. Let $\Omega = \{\omega_1, \ldots, \omega_n\}$ be a finite sample space with $n$ $(\in \mathbb{N})$ possible outcomes; here $\mathbb{N}$ denotes the set of natural numbers. For $E \subseteq \Omega$, let $|E|$ denote the number of elements in $E$. An outcome $\omega \in \Omega$ is said to be favorable to an event $E$ if $\omega \in E$. In the classical method of assigning probabilities, the probability of an event $E$ is given by

$$P(E) = \frac{\text{number of outcomes favorable to } E}{\text{total number of outcomes}} = \frac{|E|}{|\Omega|} = \frac{|E|}{n}.$$

Note that probabilities assigned through the classical method satisfy the following properties of intuitive appeal:

(i) For any event $E$, $P(E) \geq 0$;

(ii) For mutually exclusive events $E_1, E_2, \ldots, E_n$ (i.e., $E_i \cap E_j = \emptyset$ whenever $i, j \in \{1, \ldots, n\}$, $i \neq j$)

$$P\Big(\bigcup_{i=1}^{n} E_i\Big) = \frac{\big|\bigcup_{i=1}^{n} E_i\big|}{n} = \frac{\sum_{i=1}^{n} |E_i|}{n} = \sum_{i=1}^{n} \frac{|E_i|}{n} = \sum_{i=1}^{n} P(E_i);$$

(iii) $P(\Omega) = \dfrac{|\Omega|}{|\Omega|} = 1$.
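The classical rule can be tried out on the fair-die example mentioned earlier. The following is a minimal sketch, assuming a finite sample space of equally likely outcomes; the function name `classical_probability` is illustrative and not part of the notes.

```python
from fractions import Fraction


def classical_probability(event, sample_space):
    """Classical rule P(E) = |E| / |Omega| for equally likely outcomes."""
    sample_space = set(sample_space)
    event = set(event)
    if not event <= sample_space:
        raise ValueError("event must be a subset of the sample space")
    return Fraction(len(event), len(sample_space))


omega = {1, 2, 3, 4, 5, 6}   # cast of a fair die
odd = {1, 3, 5}              # odd number of dots
two_or_four = {2, 4}         # 2 or 4 dots

p_odd = classical_probability(odd, omega)
p_two_or_four = classical_probability(two_or_four, omega)
# The two events are mutually exclusive, so property (ii) applies.
p_union = classical_probability(odd | two_or_four, omega)

print(p_odd, p_two_or_four, p_union)
```

Exact fractions are used so that the additivity in property (ii) can be checked without rounding error.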

Example 1.2

Suppose that in a classroom we have 25 students (with registration numbers 1, 2, …, 25) born in the same year having 365 days. Suppose that we want to find the probability of the event $E$ that they are all born on different days of the year. Here an outcome consists of a sequence of 25 birthdays. Suppose that all such sequences are equally likely. Then $|\Omega| = 365^{25}$, $|E| = 365 \times 364 \times \cdots \times 341 = {}^{365}P_{25}$ and

$$P(E) = \frac{|E|}{|\Omega|} = \frac{{}^{365}P_{25}}{365^{25}}.$$

The classical method of assigning probabilities has limited applicability as it can be used only for random experiments which result in a finite number of equally likely outcomes. ▄
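The probability in Example 1.2 can be evaluated numerically. The sketch below assumes Python 3.8 or later (for `math.perm`); the helper name `prob_all_distinct` is an illustrative choice.

```python
from fractions import Fraction
from math import perm


def prob_all_distinct(n_people, n_days=365):
    """Probability that n_people birthdays all fall on different days,
    assuming every sequence of birthdays is equally likely."""
    favorable = perm(n_days, n_people)   # 365 * 364 * ... (n_people factors)
    total = n_days ** n_people           # all sequences of birthdays
    return Fraction(favorable, total)


p = prob_all_distinct(25)
print(float(p))   # roughly 0.43
```

So, under the equally-likely assumption, it is more likely than not that at least two of the 25 students share a birthday.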

II. Relative Frequency Method

Suppose that we have independent repetitions of a random experiment (here independent

repetitions means that the outcome of one trial is not affected by the outcome of another trial)

under identical conditions. Let $f_N(E)$ denote the number of times an event $E$ occurs in the first $N$ trials (also called the frequency of event $E$ in $N$ trials) and let $r_N(E) = f_N(E)/N$ denote the corresponding relative frequency. Using advanced probabilistic arguments (e.g., using the Weak Law of Large Numbers, to be discussed in Module 7) it can be shown that, under mild conditions, the relative frequencies stabilize (in a certain sense) as $N$ gets large (i.e., for any event $E$, $\lim_{N \to \infty} r_N(E)$ exists in a certain sense). In the relative frequency method of assigning probabilities the probability of an event $E$ is given by

$$P(E) = \lim_{N \to \infty} r_N(E) = \lim_{N \to \infty} \frac{f_N(E)}{N}.$$

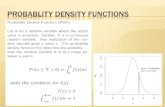

Figure 1.1. Plot of relative frequencies ($r_N(E)$) of number of heads against number of trials ($N$)

in the random experiment of tossing a fair coin (with probability of head in each trial as 0.5).

In practice, to assign probability to an event $E$, the experiment is repeated a large (but fixed) number of times (say $N$ times) and the approximation $P(E) \approx r_N(E)$ is used for assigning probability to event $E$. Note that probabilities assigned through the relative frequency method also satisfy the following properties of intuitive appeal:

(i) for any event $E$, $P(E) \geq 0$;

(ii) for mutually exclusive events $E_1, E_2, \ldots, E_n$,

$$P\Big(\bigcup_{i=1}^{n} E_i\Big) = \sum_{i=1}^{n} P(E_i);$$

(iii) $P(\Omega) = 1$.

Although the relative frequency method seems to have more applicability than the classical method it too has limitations. A major problem with the relative frequency method is that it is imprecise as it is based on an approximation ($P(E) \approx r_N(E)$). Another difficulty with relative

frequency method is that it assumes that the experiment can be repeated a large number of

times. This may not be always possible due to budgetary and other constraints (e.g., in

predicting the success of a new space technology it may not be possible to repeat the

experiment a large number of times due to high costs involved).
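The stabilization of relative frequencies can be observed in a small simulation of fair coin tosses. This is only a sketch: the pseudo-random generator stands in for the physical experiment, and the seed, the number of trials and the function name `relative_frequencies` are arbitrary illustrative choices.

```python
import random


def relative_frequencies(num_trials, p_head=0.5, seed=0):
    """Return [r_1(E), ..., r_N(E)] for the event E = 'head' in simulated
    independent coin tosses, where r_n(E) = f_n(E) / n."""
    rng = random.Random(seed)   # seeded so that the run is reproducible
    heads = 0
    freqs = []
    for n in range(1, num_trials + 1):
        if rng.random() < p_head:
            heads += 1
        freqs.append(heads / n)
    return freqs


freqs = relative_frequencies(10_000)
for n in (10, 100, 1_000, 10_000):
    print(n, freqs[n - 1])
```

The printed values fluctuate for small $N$ and settle near 0.5 as $N$ grows, which is the behaviour the relative frequency method relies on.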

The following definitions will be useful in future discussions.

Definition 1.3

(i) A set $E$ is said to be finite if either $E = \emptyset$ (the empty set) or if there exists a one-one and onto function $f : \{1, 2, \ldots, n\} \to E$ (or $f : E \to \{1, 2, \ldots, n\}$) for some natural number $n$;

(ii) A set is said to be infinite if it is not finite;

(iii) A set $E$ is said to be countable if either $E = \emptyset$ or if there is an onto function $f : \mathbb{N} \to E$, where $\mathbb{N}$ denotes the set of natural numbers;

(iv) A set is said to be countably infinite if it is countable and infinite;

(v) A set is said to be uncountable if it is not countable;

(vi) A set $E$ is said to be continuum if there is a one-one and onto function $f : \mathbb{R} \to E$ (or $f : E \to \mathbb{R}$), where $\mathbb{R}$ denotes the set of real numbers. ▄

The following proposition, whose proof(s) can be found in any standard textbook on set theory,

provides some of the properties of finite, countable and uncountable sets.

Proposition 1.1

(i) Any finite set is countable;

(ii) If $A$ is a countable set and $B \subseteq A$ then $B$ is countable;

(iii) Any uncountable set is an infinite set;

(iv) If $A$ is an infinite set and $A \subseteq B$ then $B$ is infinite;

(v) If $A$ is an uncountable set and $A \subseteq B$ then $B$ is uncountable;

(vi) If $E$ is a finite set and $F$ is a set such that there exists a one-one and onto function $f : E \to F$ (or $f : F \to E$) then $F$ is finite;

(vii) If $E$ is a countably infinite (continuum) set and $F$ is a set such that there exists a one-one and onto function $f : E \to F$ (or $f : F \to E$) then $F$ is countably infinite (continuum);

(viii) A set $E$ is countable if and only if either $E = \emptyset$ or there exists a one-one and onto map $f : E \to \mathbb{N}_0$, for some $\mathbb{N}_0 \subseteq \mathbb{N}$;

(ix) A set $E$ is countable if, and only if, either $E$ is finite or there exists a one-one map $f : \mathbb{N} \to E$;

(x) A set $E$ is countable if, and only if, either $E = \emptyset$ or there exists a one-one map $f : E \to \mathbb{N}$;

(xi) A non-empty countable set $E$ can be written either as $E = \{\omega_1, \omega_2, \ldots, \omega_n\}$, for some $n \in \mathbb{N}$, or as $E = \{\omega_1, \omega_2, \ldots\}$;

(xii) The unit interval $(0, 1)$ is uncountable. Hence any interval $(a, b)$, where $-\infty < a < b < \infty$, is uncountable;

(xiii) $\mathbb{N} \times \mathbb{N}$ is countable;

(xiv) Let $\Lambda$ be a countable set and let $\{A_\alpha : \alpha \in \Lambda\}$ be a (countable) collection of countable sets. Then $\bigcup_{\alpha \in \Lambda} A_\alpha$ is countable. In other words, a countable union of countable sets is countable;

(xv) Any continuum set is uncountable. ▄

Example 1.3

(i) Define $f : \mathbb{N} \to \mathbb{N}$ by $f(n) = n$, $n \in \mathbb{N}$. Clearly $f : \mathbb{N} \to \mathbb{N}$ is one-one and onto. Thus $\mathbb{N}$ is countable. Also it can be easily seen (using the contradiction method) that $\mathbb{N}$ is infinite. Thus $\mathbb{N}$ is countably infinite.

(ii) Let $\mathbb{Z}$ denote the set of integers. Define $f : \mathbb{N} \to \mathbb{Z}$ by

$$f(n) = \begin{cases} \dfrac{n - 1}{2}, & \text{if } n \text{ is odd} \\[4pt] -\dfrac{n}{2}, & \text{if } n \text{ is even.} \end{cases}$$

Clearly $f : \mathbb{N} \to \mathbb{Z}$ is one-one and onto. Therefore, using (i) above and Proposition 1.1 (vii), $\mathbb{Z}$ is countably infinite. Now on using Proposition 1.1 (ii) it follows that any subset of $\mathbb{Z}$ is countable.

(iii) Using the fact that $\mathbb{N}$ is countably infinite and Proposition 1.1 (xiv) it is straightforward to show that $\mathbb{Q}$ (the set of rational numbers) is countably infinite.

(iv) Define $f : \mathbb{R} \to \mathbb{R}$ and $g : \mathbb{R} \to (0, 1)$ by $f(x) = x$, $x \in \mathbb{R}$, and $g(x) = \dfrac{1}{1 + e^{x}}$, $x \in \mathbb{R}$. Then $f : \mathbb{R} \to \mathbb{R}$ and $g : \mathbb{R} \to (0, 1)$ are one-one and onto functions. It follows that $\mathbb{R}$ and $(0, 1)$ are continuum (using Proposition 1.1 (vii)). Further, for $-\infty < a < b < \infty$, let $h(x) = (b - a)x + a$, $x \in (0, 1)$. Clearly $h : (0, 1) \to (a, b)$ is one-one and onto. Again using Proposition 1.1 (vii) it follows that any interval $(a, b)$ is continuum. ▄

It is clear that it may not be possible to assign probabilities in a way that applies to every

situation. In the modern approach to probability theory one does not bother about how

probabilities are assigned. Assignment of probabilities to various subsets of the sample space $\Omega$ that is consistent with the intuitively appealing properties (i)-(iii) of the classical (or relative frequency) method is done through probability modeling. In advanced courses on probability theory it is shown that in many situations (especially when the sample space $\Omega$ is continuum) it is not possible to assign probabilities to all subsets of $\Omega$ such that properties (i)-(iii) of the classical (or relative frequency) method are satisfied. Therefore probabilities are assigned to only certain types of subsets of $\Omega$.

In the following section we will discuss the modern approach to probability theory where we will not be concerned with how probabilities are assigned to suitably chosen subsets of $\Omega$. Rather we will define the concept of probability for certain types of subsets of $\Omega$ using a set of

axioms that are consistent with properties (i)-(iii) of classical (or relative frequency) method.

We will also study various properties of probability measures.

2. Axiomatic Approach to Probability and Properties of Probability Measure

We begin this section with the following definitions.

Definition 2.1

(i) A set whose elements are themselves sets is called a class of sets. A class of sets will usually be denoted by script letters $\mathcal{A}, \mathcal{B}, \mathcal{C}, \ldots$. For example $\mathcal{A} = \{\{1\}, \{1, 3\}, \{2, 5, 6\}\}$;

(ii) Let $\mathcal{C}$ be a class of sets. A function $\mu : \mathcal{C} \to \mathbb{R}$ is called a set function. In other words, a real-valued function whose domain is a class of sets is called a set function. ▄

As stated above, in many situations, it may not be possible to assign probabilities to all subsets

of the sample space $\Omega$ such that properties (i)-(iii) of the classical (or relative frequency) method are satisfied. Therefore one begins with assigning probabilities to members of an appropriately chosen class $\mathcal{C}$ of subsets of $\Omega$ (e.g., if $\Omega = \mathbb{R}$, then $\mathcal{C}$ may be the class of all open intervals in $\mathbb{R}$; if $\Omega$ is a countable set, then $\mathcal{C}$ may be the class of all singletons $\{\omega\}$, $\omega \in \Omega$). We call the members of $\mathcal{C}$ basic sets. Starting from the basic sets in $\mathcal{C}$, assignment of probabilities is extended, in an intuitively justified manner, to as many subsets of $\Omega$ as possible, keeping in mind that properties (i)-(iii) of the classical (or relative frequency) method are not violated. Let us denote by $\mathcal{F}$ the class of sets for which the probability assignments can be finally done. We call the class $\mathcal{F}$ the event space and elements of $\mathcal{F}$ are called events. It will be reasonable to assume that $\mathcal{F}$ satisfies the following properties: (i) $\Omega \in \mathcal{F}$, (ii) $A \in \mathcal{F} \Rightarrow A^c = \Omega - A \in \mathcal{F}$, and (iii) $A_i \in \mathcal{F}$, $i = 1, 2, \ldots \Rightarrow \bigcup_{i=1}^{\infty} A_i \in \mathcal{F}$. This leads to introduction of the following definition.

Definition 2.2

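A sigma-field ($\sigma$-field) of subsets of $\Omega$ is a class $\mathcal{F}$ of subsets of $\Omega$ satisfying the following properties:

(i) $\Omega \in \mathcal{F}$;

(ii) $A \in \mathcal{F} \Rightarrow A^c = \Omega - A \in \mathcal{F}$ (closed under complements);

(iii) $A_i \in \mathcal{F}$, $i = 1, 2, \ldots \Rightarrow \bigcup_{i=1}^{\infty} A_i \in \mathcal{F}$ (closed under countably infinite unions). ▄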

Remark 2.1

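(i) We expect the event space to be a $\sigma$-field;

(ii) Suppose that $\mathcal{F}$ is a $\sigma$-field of subsets of $\Omega$. Then,

(a) $\emptyset \in \mathcal{F}$ (since $\emptyset = \Omega^c$);

(b) $E_1, E_2, \ldots \in \mathcal{F} \Rightarrow \bigcap_{i=1}^{\infty} E_i \in \mathcal{F}$ (since $\bigcap_{i=1}^{\infty} E_i = \big(\bigcup_{i=1}^{\infty} E_i^c\big)^c$);

(c) $E, F \in \mathcal{F} \Rightarrow E - F = E \cap F^c \in \mathcal{F}$ and $E \Delta F \overset{\text{def}}{=} (E - F) \cup (F - E) \in \mathcal{F}$;

(d) $E_1, E_2, \ldots, E_n \in \mathcal{F}$, for some $n \in \mathbb{N}$, $\Rightarrow \bigcup_{i=1}^{n} E_i \in \mathcal{F}$ and $\bigcap_{i=1}^{n} E_i \in \mathcal{F}$ (take $E_{n+1} = E_{n+2} = \cdots = \emptyset$ so that $\bigcup_{i=1}^{n} E_i = \bigcup_{i=1}^{\infty} E_i$, or $E_{n+1} = E_{n+2} = \cdots = \Omega$ so that $\bigcap_{i=1}^{n} E_i = \bigcap_{i=1}^{\infty} E_i$);

(e) although the power set of $\Omega$ (denoted by $\mathcal{P}(\Omega)$) is a $\sigma$-field of subsets of $\Omega$, in general a $\sigma$-field may not contain all subsets of $\Omega$. ▄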

Example 2.1

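(i) $\mathcal{F} = \{\emptyset, \Omega\}$ is a sigma-field, called the trivial sigma-field;

(ii) Suppose that $A \subseteq \Omega$. Then $\mathcal{F} = \{A, A^c, \emptyset, \Omega\}$ is a $\sigma$-field of subsets of $\Omega$. It is the smallest sigma-field containing the set $A$;

(iii) An arbitrary intersection of $\sigma$-fields is a $\sigma$-field (see Problem 3 (i));

(iv) Let $\mathcal{C}$ be a class of subsets of $\Omega$ and let $\{\mathcal{F}_\alpha : \alpha \in \Lambda\}$ be the collection of all $\sigma$-fields that contain $\mathcal{C}$. Then

$$\mathcal{F} = \bigcap_{\alpha \in \Lambda} \mathcal{F}_\alpha$$

is a $\sigma$-field and it is the smallest $\sigma$-field that contains the class $\mathcal{C}$ (it is called the $\sigma$-field generated by $\mathcal{C}$ and is denoted by $\sigma(\mathcal{C})$) (see Problem 3 (iii));

(v) Let $\Omega = \mathbb{R}$ and let $\mathcal{I}$ be the class of all open intervals in $\mathbb{R}$. Then $\mathcal{B}_1 = \sigma(\mathcal{I})$ is called the Borel $\sigma$-field on $\mathbb{R}$. The Borel $\sigma$-field in $\mathbb{R}^k$ (denoted by $\mathcal{B}_k$) is the $\sigma$-field generated by the class of all open rectangles in $\mathbb{R}^k$. A set $B \in \mathcal{B}_k$ is called a Borel set in $\mathbb{R}^k$; here $\mathbb{R}^k = \{(x_1, \ldots, x_k) : -\infty < x_i < \infty,\ i = 1, \ldots, k\}$ denotes the $k$-dimensional Euclidean space;

(vi) $\mathcal{B}_1$ contains all singletons and hence all countable subsets of $\mathbb{R}$ $\Big(\{a\} = \bigcap_{n=1}^{\infty} \big(a - \tfrac{1}{n},\ a + \tfrac{1}{n}\big)\Big)$. ▄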
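For a finite sample space, the $\sigma$-field generated by a class of subsets (item (iv) above) can be computed by repeatedly closing the class under complements and unions. The sketch below works only for finite $\Omega$, where countable unions reduce to finite unions; the function name `generated_sigma_field` is illustrative.

```python
from itertools import combinations


def generated_sigma_field(omega, generators):
    """Smallest family of subsets of a finite omega that contains the
    generators and is closed under complements and unions."""
    omega = frozenset(omega)
    family = {frozenset(), omega} | {frozenset(g) for g in generators}
    changed = True
    while changed:                      # stop once nothing new is added
        changed = False
        current = list(family)
        for a in current:               # close under complements
            comp = omega - a
            if comp not in family:
                family.add(comp)
                changed = True
        for a, b in combinations(current, 2):   # close under pairwise unions
            union = a | b
            if union not in family:
                family.add(union)
                changed = True
    return family


omega = {1, 2, 3, 4}
sigma_one = generated_sigma_field(omega, [{1}])
sigma_two = generated_sigma_field(omega, [{1}, {2}])
print(sorted(sorted(s) for s in sigma_one))
print(len(sigma_two))
```

With the single generator $\{1\}$ the result is $\{\emptyset, \{1\}, \{2, 3, 4\}, \Omega\}$, matching item (ii). With generators $\{1\}$ and $\{2\}$ the result has 8 members and still does not contain $\{3\}$, which illustrates that a $\sigma$-field need not contain every subset.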

Let $\mathcal{C}$ be an appropriately chosen class of basic subsets of $\Omega$ for which the probabilities can be assigned to begin with (e.g., if $\Omega = \mathbb{R}$ then $\mathcal{C}$ may be the class of all open intervals in $\mathbb{R}$; if $\Omega$ is a countable set then $\mathcal{C}$ may be the class of all singletons $\{\omega\}$, $\omega \in \Omega$). It turns out (a topic for an advanced course in probability theory) that, for an appropriately chosen class $\mathcal{C}$ of basic sets, the assignment of probabilities that is consistent with properties (i)-(iii) of the classical (or relative frequency) method can be extended in a unique manner from $\mathcal{C}$ to $\sigma(\mathcal{C})$, the smallest $\sigma$-field containing the class $\mathcal{C}$. Therefore, generally the domain $\mathcal{F}$ of a probability measure is taken to be $\sigma(\mathcal{C})$, the $\sigma$-field generated by the class $\mathcal{C}$ of basic subsets of $\Omega$. We have stated before that we will not care about how assignment of probabilities to various members of the event space $\mathcal{F}$ (a $\sigma$-field of subsets of $\Omega$) is done. Rather we will be interested in properties of a probability measure defined on the event space $\mathcal{F}$.

Let $\Omega$ be a sample space associated with a random experiment and let $\mathcal{F}$ be the event space (a $\sigma$-field of subsets of $\Omega$). Recall that members of $\mathcal{F}$ are called events. Now we provide a

mathematical definition of probability based on a set of axioms.

Definition 2.3

(i) Let $\mathcal{F}$ be a $\sigma$-field of subsets of $\Omega$. A probability function (or a probability measure) is a set function $P$, defined on $\mathcal{F}$, satisfying the following three axioms:

(a) $P(E) \geq 0$, $\forall E \in \mathcal{F}$ (Axiom 1: Non-negativity);

(b) If $E_1, E_2, \ldots$ is a countably infinite collection of mutually exclusive events (i.e., $E_i \in \mathcal{F}$, $i = 1, 2, \ldots$, $E_i \cap E_j = \emptyset$, $i \neq j$) then

$$P\Big(\bigcup_{i=1}^{\infty} E_i\Big) = \sum_{i=1}^{\infty} P(E_i) \quad \text{(Axiom 2: Countably infinite additivity)};$$

(c) $P(\Omega) = 1$ (Axiom 3: Probability of the sample space is 1).

(ii) The triplet $(\Omega, \mathcal{F}, P)$ is called a probability space. ▄

Remark 2.2

(i) Note that if $E_1, E_2, \ldots$ is a countably infinite collection of sets in a $\sigma$-field $\mathcal{F}$ then $\bigcup_{i=1}^{\infty} E_i \in \mathcal{F}$ and, therefore, $P\big(\bigcup_{i=1}^{\infty} E_i\big)$ is well defined;

(ii) In any probability space $(\Omega, \mathcal{F}, P)$ we have $P(\Omega) = 1$ (or $P(\emptyset) = 0$; see Theorem 2.1 (i) proved later) but if $P(A) = 1$ (or $P(A) = 0$), for some $A \in \mathcal{F}$, then it does not mean that $A = \Omega$ (or $A = \emptyset$) (see Problem 14 (ii));

(iii) In general not all subsets of $\Omega$ are events, i.e., not all subsets of $\Omega$ are elements of $\mathcal{F}$;

(iv) When $\Omega$ is countable it is possible to assign probabilities to all subsets of $\Omega$ using Axiom 2, provided we can assign probabilities to the singleton subsets $\{\omega\}$ of $\Omega$. To illustrate this let $\Omega = \{\omega_1, \omega_2, \ldots\}$ (or $\Omega = \{\omega_1, \ldots, \omega_n\}$, for some $n \in \mathbb{N}$) and let $P(\{\omega_i\}) = p_i$, $i = 1, 2, \ldots$, so that $0 \leq p_i \leq 1$, $i = 1, 2, \ldots$ (see Theorem 2.1 (iii) below) and $\sum_{i} p_i = \sum_{i} P(\{\omega_i\}) = P\big(\bigcup_{i} \{\omega_i\}\big) = P(\Omega) = 1$. Then, for any $A \subseteq \Omega$,

$$P(A) = \sum_{i :\, \omega_i \in A} p_i.$$

Thus in this case we may take $\mathcal{F} = \mathcal{P}(\Omega)$, the power set of $\Omega$. It is worth mentioning here that if $\Omega$ is countable and $\mathcal{C} = \{\{\omega\} : \omega \in \Omega\}$ (the class of all singleton subsets of $\Omega$) is the class of basic sets for which the assignment of probabilities can be done to begin with, then $\sigma(\mathcal{C}) = \mathcal{P}(\Omega)$ (see Problem 5 (ii));

(v) Due to some inconsistency problems, assignment of probabilities to all subsets of $\Omega$ is not possible when $\Omega$ is continuum (e.g., if $\Omega$ contains an interval). ▄

Theorem 2.1

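Let $(\Omega, \mathcal{F}, P)$ be a probability space. Then

(i) $P(\emptyset) = 0$;

(ii) $E_i \in \mathcal{F}$, $i = 1, 2, \ldots, n$, and $E_i \cap E_j = \emptyset$, $i \neq j$ $\Rightarrow P\big(\bigcup_{i=1}^{n} E_i\big) = \sum_{i=1}^{n} P(E_i)$ (finite additivity);

(iii) $\forall E \in \mathcal{F}$, $0 \leq P(E) \leq 1$ and $P(E^c) = 1 - P(E)$;

(iv) $E_1, E_2 \in \mathcal{F}$ and $E_1 \subseteq E_2 \Rightarrow P(E_2 - E_1) = P(E_2) - P(E_1)$ and $P(E_1) \leq P(E_2)$ (monotonicity of probability measures);

(v) $E_1, E_2 \in \mathcal{F} \Rightarrow P(E_1 \cup E_2) = P(E_1) + P(E_2) - P(E_1 \cap E_2)$.

Proof.

(i) Let $E_1 = \Omega$ and $E_i = \emptyset$, $i = 2, 3, \ldots$. Then $P(E_1) = 1$ (Axiom 3), $E_i \in \mathcal{F}$, $i = 1, 2, \ldots$, $E_1 = \bigcup_{i=1}^{\infty} E_i$ and $E_i \cap E_j = \emptyset$, $i \neq j$. Therefore,

$$1 = P(E_1) = P\Big(\bigcup_{i=1}^{\infty} E_i\Big) = \sum_{i=1}^{\infty} P(E_i) \ \text{(using Axiom 2)} = 1 + \sum_{i=2}^{\infty} P(\emptyset)$$

$$\Rightarrow \sum_{i=2}^{\infty} P(\emptyset) = 0 \Rightarrow P(\emptyset) = 0.$$

(ii) Let $E_i = \emptyset$, $i = n + 1, n + 2, \ldots$. Then $E_i \in \mathcal{F}$, $i = 1, 2, \ldots$, $E_i \cap E_j = \emptyset$, $i \neq j$, and $P(E_i) = 0$, $i = n + 1, n + 2, \ldots$. Therefore,

$$P\Big(\bigcup_{i=1}^{n} E_i\Big) = P\Big(\bigcup_{i=1}^{\infty} E_i\Big) = \sum_{i=1}^{\infty} P(E_i) \ \text{(using Axiom 2)} = \sum_{i=1}^{n} P(E_i).$$

(iii) Let $E \in \mathcal{F}$. Then $\Omega = E \cup E^c$ and $E \cap E^c = \emptyset$. Therefore

$$1 = P(\Omega) = P(E \cup E^c) = P(E) + P(E^c) \quad \text{(using (ii))}$$

$\Rightarrow P(E) \leq 1$ and $P(E^c) = 1 - P(E)$ (since $P(E^c) \geq 0$ by Axiom 1)

$\Rightarrow 0 \leq P(E) \leq 1$ and $P(E^c) = 1 - P(E)$.

(iv) Let $E_1, E_2 \in \mathcal{F}$ and let $E_1 \subseteq E_2$. Then $E_2 - E_1 \in \mathcal{F}$, $E_2 = E_1 \cup (E_2 - E_1)$ and $E_1 \cap (E_2 - E_1) = \emptyset$.

Figure 2.1

Therefore,

$$P(E_2) = P(E_1 \cup (E_2 - E_1)) = P(E_1) + P(E_2 - E_1) \quad \text{(using (ii))}$$

$$\Rightarrow P(E_2 - E_1) = P(E_2) - P(E_1).$$

As $P(E_2 - E_1) \geq 0$, it follows that $P(E_1) \leq P(E_2)$.

(v) Let $E_1, E_2 \in \mathcal{F}$. Then $E_2 - E_1 \in \mathcal{F}$, $E_1 \cap (E_2 - E_1) = \emptyset$ and $E_1 \cup E_2 = E_1 \cup (E_2 - E_1)$.

Figure 2.2

Therefore,

$$P(E_1 \cup E_2) = P(E_1 \cup (E_2 - E_1)) = P(E_1) + P(E_2 - E_1) \quad \text{(using (ii))}. \tag{2.1}$$

Also $(E_1 \cap E_2) \cap (E_2 - E_1) = \emptyset$ and $E_2 = (E_1 \cap E_2) \cup (E_2 - E_1)$.

Figure 2.3

Therefore,

$$P(E_2) = P((E_1 \cap E_2) \cup (E_2 - E_1)) = P(E_1 \cap E_2) + P(E_2 - E_1) \quad \text{(using (ii))}$$

$$\Rightarrow P(E_2 - E_1) = P(E_2) - P(E_1 \cap E_2). \tag{2.2}$$

Using (2.1) and (2.2), we get

$$P(E_1 \cup E_2) = P(E_1) + P(E_2) - P(E_1 \cap E_2). \ ▄$$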

Theorem 2.2 (Inclusion-Exclusion Formula)

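Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $E_1, E_2, \ldots, E_n \in \mathcal{F}$ $(n \in \mathbb{N},\ n \geq 2)$. Then

$$P\Big(\bigcup_{i=1}^{n} E_i\Big) = \sum_{k=1}^{n} S_{k,n},$$

where $S_{1,n} = \sum_{i=1}^{n} P(E_i)$ and, for $k \in \{2, 3, \ldots, n\}$,

$$S_{k,n} = (-1)^{k-1} \sum_{1 \leq i_1 < i_2 < \cdots < i_k \leq n} P(E_{i_1} \cap E_{i_2} \cap \cdots \cap E_{i_k}).$$

Proof. We will use the principle of mathematical induction. Using Theorem 2.1 (v), we have

$$P(E_1 \cup E_2) = P(E_1) + P(E_2) - P(E_1 \cap E_2) = S_{1,2} + S_{2,2},$$

where $S_{1,2} = P(E_1) + P(E_2)$ and $S_{2,2} = -P(E_1 \cap E_2)$. Thus the result is true for $n = 2$. Now suppose that the result is true for $n \in \{2, 3, \ldots, m\}$, for some positive integer $m$ $(\geq 2)$. Then

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) = P\Big(\Big(\bigcup_{i=1}^{m} E_i\Big) \cup E_{m+1}\Big)$$

$$= P\Big(\bigcup_{i=1}^{m} E_i\Big) + P(E_{m+1}) - P\Big(\Big(\bigcup_{i=1}^{m} E_i\Big) \cap E_{m+1}\Big) \quad \text{(using the result for } n = 2)$$

$$= P\Big(\bigcup_{i=1}^{m} E_i\Big) + P(E_{m+1}) - P\Big(\bigcup_{i=1}^{m} (E_i \cap E_{m+1})\Big)$$

$$= \sum_{i=1}^{m} S_{i,m} + P(E_{m+1}) - P\Big(\bigcup_{i=1}^{m} (E_i \cap E_{m+1})\Big) \quad \text{(using the result for } n = m). \tag{2.3}$$

Let $F_i = E_i \cap E_{m+1}$, $i = 1, \ldots, m$. Then

$$P\Big(\bigcup_{i=1}^{m} (E_i \cap E_{m+1})\Big) = P\Big(\bigcup_{i=1}^{m} F_i\Big) = \sum_{k=1}^{m} T_{k,m} \quad \text{(again using the result for } n = m), \tag{2.4}$$

where $T_{1,m} = \sum_{i=1}^{m} P(F_i) = \sum_{i=1}^{m} P(E_i \cap E_{m+1})$ and, for $k \in \{2, 3, \ldots, m\}$,

$$T_{k,m} = (-1)^{k-1} \sum_{1 \leq i_1 < i_2 < \cdots < i_k \leq m} P(F_{i_1} \cap F_{i_2} \cap \cdots \cap F_{i_k}) = (-1)^{k-1} \sum_{1 \leq i_1 < i_2 < \cdots < i_k \leq m} P(E_{i_1} \cap E_{i_2} \cap \cdots \cap E_{i_k} \cap E_{m+1}).$$

Using (2.4) in (2.3), we get

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) = \big(S_{1,m} + P(E_{m+1})\big) + (S_{2,m} - T_{1,m}) + \cdots + (S_{m,m} - T_{m-1,m}) - T_{m,m}.$$

Note that $S_{1,m} + P(E_{m+1}) = S_{1,m+1}$, $S_{k,m} - T_{k-1,m} = S_{k,m+1}$, $k = 2, 3, \ldots, m$, and $T_{m,m} = -S_{m+1,m+1}$. Therefore,

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) = S_{1,m+1} + \sum_{k=2}^{m+1} S_{k,m+1} = \sum_{k=1}^{m+1} S_{k,m+1}. \ ▄$$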
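The inclusion-exclusion formula can be checked numerically on a small example. The sketch below assumes a finite sample space with equally likely outcomes (so that probabilities are ratios of counts); the events, multiples of 2, 3 and 5 among the integers 1 to 30, are an arbitrary illustrative choice.

```python
from fractions import Fraction
from itertools import combinations


def prob(event, omega):
    """Classical probability |E| / |Omega|."""
    return Fraction(len(event), len(omega))


def inclusion_exclusion(events, omega):
    """Sum over k of (-1)^(k-1) times the sum of the probabilities of
    all k-fold intersections of the given events."""
    total = Fraction(0)
    for k in range(1, len(events) + 1):
        k_fold_sum = sum(
            (prob(set.intersection(*combo), omega)
             for combo in combinations(events, k)),
            Fraction(0),
        )
        total += (-1) ** (k - 1) * k_fold_sum
    return total


omega = set(range(1, 31))
events = [{x for x in omega if x % d == 0} for d in (2, 3, 5)]

direct = prob(set.union(*events), omega)        # P(E1 ∪ E2 ∪ E3) by counting
via_formula = inclusion_exclusion(events, omega)
print(direct, via_formula)
```

Both computations give the same value, as Theorem 2.2 asserts for $n = 3$.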

Remark 2.3

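(i) Let $E_1, E_2, \ldots \in \mathcal{F}$. Then

$$P(E_1 \cup E_2 \cup E_3) = \underbrace{P(E_1) + P(E_2) + P(E_3)}_{p_{1,3}} - \underbrace{\big(P(E_1 \cap E_2) + P(E_1 \cap E_3) + P(E_2 \cap E_3)\big)}_{p_{2,3}} + \underbrace{P(E_1 \cap E_2 \cap E_3)}_{p_{3,3}}$$

$$= p_{1,3} - p_{2,3} + p_{3,3},$$

where $p_{1,3} = S_{1,3}$, $p_{2,3} = -S_{2,3}$ and $p_{3,3} = S_{3,3}$.

In general,

$$P\Big(\bigcup_{i=1}^{n} E_i\Big) = p_{1,n} - p_{2,n} + p_{3,n} - \cdots + (-1)^{n-1} p_{n,n},$$

where

$$p_{i,n} = \begin{cases} S_{i,n}, & \text{if } i \text{ is odd} \\ -S_{i,n}, & \text{if } i \text{ is even} \end{cases}, \quad i = 1, 2, \ldots, n.$$

(ii) We have

$$1 \geq P(E_1 \cup E_2) = P(E_1) + P(E_2) - P(E_1 \cap E_2) \Rightarrow P(E_1 \cap E_2) \geq P(E_1) + P(E_2) - 1.$$

The above inequality is known as Bonferroni's inequality. ▄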

Theorem 2.3

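Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $E_1, E_2, \ldots, E_n \in \mathcal{F}$ $(n \in \mathbb{N},\ n \geq 2)$. Then, under the notations of Theorem 2.2,

(i) (Boole's Inequality) $S_{1,n} + S_{2,n} \leq P\big(\bigcup_{i=1}^{n} E_i\big) \leq S_{1,n}$;

(ii) (Bonferroni's Inequality) $P\big(\bigcap_{i=1}^{n} E_i\big) \geq S_{1,n} - (n - 1)$.

Proof.

(i) We will use the principle of mathematical induction. We have

$$P(E_1 \cup E_2) = \underbrace{P(E_1) + P(E_2)}_{S_{1,2}} \ \underbrace{-\, P(E_1 \cap E_2)}_{S_{2,2}} = S_{1,2} + S_{2,2} \leq S_{1,2},$$

where $S_{1,2} = P(E_1) + P(E_2)$ and $S_{2,2} = -P(E_1 \cap E_2) \leq 0$.

Thus the result is true for $n = 2$. Now suppose that the result is true for $n \in \{2, 3, \ldots, m\}$, for some positive integer $m$ $(\geq 2)$, i.e., suppose that for arbitrary events $F_1, \ldots, F_m \in \mathcal{F}$

$$P\Big(\bigcup_{i=1}^{k} F_i\Big) \leq \sum_{i=1}^{k} P(F_i), \quad k = 2, 3, \ldots, m, \tag{2.5}$$

and

$$P\Big(\bigcup_{i=1}^{k} F_i\Big) \geq \sum_{i=1}^{k} P(F_i) - \sum_{1 \leq i < j \leq k} P(F_i \cap F_j), \quad k = 2, 3, \ldots, m. \tag{2.6}$$

Then

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) = P\Big(\Big(\bigcup_{i=1}^{m} E_i\Big) \cup E_{m+1}\Big) \leq P\Big(\bigcup_{i=1}^{m} E_i\Big) + P(E_{m+1}) \quad \text{(using (2.5) for } k = 2)$$

$$\leq \sum_{i=1}^{m} P(E_i) + P(E_{m+1}) \quad \text{(using (2.5) for } k = m)$$

$$= \sum_{i=1}^{m+1} P(E_i) = S_{1,m+1}. \tag{2.7}$$

Also,

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) = P\Big(\Big(\bigcup_{i=1}^{m} E_i\Big) \cup E_{m+1}\Big) = P\Big(\bigcup_{i=1}^{m} E_i\Big) + P(E_{m+1}) - P\Big(\Big(\bigcup_{i=1}^{m} E_i\Big) \cap E_{m+1}\Big) \quad \text{(using Theorem 2.2)}$$

$$= P\Big(\bigcup_{i=1}^{m} E_i\Big) + P(E_{m+1}) - P\Big(\bigcup_{i=1}^{m} (E_i \cap E_{m+1})\Big). \tag{2.8}$$

Using (2.5), for $k = m$, we get

$$P\Big(\bigcup_{i=1}^{m} (E_i \cap E_{m+1})\Big) \leq \sum_{i=1}^{m} P(E_i \cap E_{m+1}), \tag{2.9}$$

and using (2.6), for $k = m$, we get

$$P\Big(\bigcup_{i=1}^{m} E_i\Big) \geq S_{1,m} + S_{2,m}. \tag{2.10}$$

Now using (2.9) and (2.10) in (2.8), we get

$$P\Big(\bigcup_{i=1}^{m+1} E_i\Big) \geq S_{1,m} + S_{2,m} + P(E_{m+1}) - \sum_{i=1}^{m} P(E_i \cap E_{m+1})$$

$$= \sum_{i=1}^{m+1} P(E_i) - \sum_{1 \leq i < j \leq m+1} P(E_i \cap E_j) = S_{1,m+1} + S_{2,m+1}. \tag{2.11}$$

Combining (2.7) and (2.11), we get

$$S_{1,m+1} + S_{2,m+1} \leq P\Big(\bigcup_{i=1}^{m+1} E_i\Big) \leq S_{1,m+1},$$

and the assertion follows by the principle of mathematical induction.

(ii) We have

$$P\Big(\bigcap_{i=1}^{n} E_i\Big) = 1 - P\Big(\Big(\bigcap_{i=1}^{n} E_i\Big)^c\Big) = 1 - P\Big(\bigcup_{i=1}^{n} E_i^c\Big)$$

$$\geq 1 - \sum_{i=1}^{n} P(E_i^c) \quad \text{(using Boole's inequality)}$$

$$= 1 - \sum_{i=1}^{n} \big(1 - P(E_i)\big) = \sum_{i=1}^{n} P(E_i) - (n - 1). \ ▄$$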

Remark 2.4

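Under the notation of Theorem 2.2 we can in fact prove the following inequalities:

$$\sum_{j=1}^{2k} S_{j,n} \leq P\Big(\bigcup_{j=1}^{n} E_j\Big) \leq \sum_{j=1}^{2k-1} S_{j,n}, \quad k = 1, 2, \ldots, \Big[\frac{n}{2}\Big],$$

where $\big[\frac{n}{2}\big]$ denotes the largest integer not exceeding $\frac{n}{2}$. ▄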

Corollary 2.1

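Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $E_1, E_2, \ldots, E_n \in \mathcal{F}$ be events. Then

(i) $P(E_i) = 0$, $i = 1, \ldots, n \Leftrightarrow P\big(\bigcup_{i=1}^{n} E_i\big) = 0$;

(ii) $P(E_i) = 1$, $i = 1, \ldots, n \Leftrightarrow P\big(\bigcap_{i=1}^{n} E_i\big) = 1$.

Proof.

(i) First suppose that $P(E_i) = 0$, $i = 1, \ldots, n$. Using Boole's inequality, we get

$$0 \leq P\Big(\bigcup_{i=1}^{n} E_i\Big) \leq \sum_{i=1}^{n} P(E_i) = 0.$$

It follows that $P\big(\bigcup_{i=1}^{n} E_i\big) = 0$. Conversely, suppose that $P\big(\bigcup_{j=1}^{n} E_j\big) = 0$. Then $E_i \subseteq \bigcup_{j=1}^{n} E_j$, $i = 1, \ldots, n$, and therefore,

$$0 \leq P(E_i) \leq P\Big(\bigcup_{j=1}^{n} E_j\Big) = 0, \quad i = 1, \ldots, n,$$

i.e., $P(E_i) = 0$, $i = 1, \ldots, n$.

(ii) We have

$$P(E_i) = 1,\ i = 1, \ldots, n \Leftrightarrow P(E_i^c) = 0,\ i = 1, \ldots, n$$

$$\Leftrightarrow P\Big(\bigcup_{i=1}^{n} E_i^c\Big) = 0 \quad \text{(using (i))}$$

$$\Leftrightarrow P\Big(\Big(\bigcup_{i=1}^{n} E_i^c\Big)^c\Big) = 1$$

$$\Leftrightarrow P\Big(\bigcap_{i=1}^{n} E_i\Big) = 1. \ ▄$$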

Definition 2.4

A countable collection $\{E_i : i \in \Lambda\}$ of events is said to be exhaustive if $P\big(\bigcup_{i \in \Lambda} E_i\big) = 1$. ▄

Example 2.2 (Equally Likely Probability Models)

Consider a probability space $(\Omega, \mathcal{F}, P)$. Suppose that, for some positive integer $k \geq 2$, $\Omega = \bigcup_{i=1}^{k} C_i$, where $C_1, C_2, \ldots, C_k$ are mutually exclusive, exhaustive and equally likely events, i.e., $C_i \cap C_j = \emptyset$ if $i \neq j$, $P\big(\bigcup_{i=1}^{k} C_i\big) = \sum_{i=1}^{k} P(C_i) = 1$ and $P(C_1) = \cdots = P(C_k) = \frac{1}{k}$. Further suppose that an event $E \in \mathcal{F}$ can be written as

$$E = C_{i_1} \cup C_{i_2} \cup \cdots \cup C_{i_r},$$

where $\{i_1, \ldots, i_r\} \subseteq \{1, \ldots, k\}$, $C_{i_j} \cap C_{i_l} = \emptyset$, $j \neq l$, and $r \in \{2, \ldots, k\}$. Then

$$P(E) = \sum_{j=1}^{r} P(C_{i_j}) = \frac{r}{k}.$$

Note that here $k$ is the total number of ways in which the random experiment can terminate (the number of partition sets $C_1, \ldots, C_k$), and $r$ is the number of ways that are favorable to $E \in \mathcal{F}$. Thus, for any $E \in \mathcal{F}$,

$$P(E) = \frac{\text{number of cases favorable to } E}{\text{total number of cases}} = \frac{r}{k},$$

which is the same as the classical method of assigning probabilities. Here the assumption that $C_1, \ldots, C_k$ are equally likely is a part of probability modeling. ▄

For a finite sample space $\Omega$, when we say that an experiment has been performed at random we mean that the various possible outcomes in $\Omega$ are equally likely. For example, when we say that two numbers are chosen at random, without replacement, from the set $\{1, 2, 3\}$ then $\Omega = \{\{1, 2\}, \{1, 3\}, \{2, 3\}\}$ and $P(\{\{1, 2\}\}) = P(\{\{1, 3\}\}) = P(\{\{2, 3\}\}) = \frac{1}{3}$, where $\{i, j\}$ indicates that the experiment terminates with the chosen numbers being $i$ and $j$, $i, j \in \{1, 2, 3\}$, $i \neq j$.

Example 2.3

Suppose that five cards are drawn at random and without replacement from a deck of 52 cards. Here the sample space $\Omega$ comprises all $\binom{52}{5}$ combinations of 5 cards. Thus the total number of cases is $\binom{52}{5} = k$, say. Let $C_1, \ldots, C_k$ be the singleton subsets of $\Omega$. Then $\Omega = \bigcup_{i=1}^{k} C_i$ and $P(C_1) = \cdots = P(C_k) = \frac{1}{k}$. Let $E_1$ be the event that each card is a spade. Then

number of cases favorable to $E_1 = \binom{13}{5}$.

Therefore,

$$P(E_1) = \frac{\binom{13}{5}}{\binom{52}{5}}.$$

Now let $E_2$ be the event that at least one of the drawn cards is a spade. Then $E_2^c$ is the event that none of the drawn cards is a spade, and the number of cases favorable to $E_2^c$ is $\binom{39}{5}$. Therefore,

$$P(E_2^c) = \frac{\binom{39}{5}}{\binom{52}{5}},$$

and

$$P(E_2) = 1 - P(E_2^c) = 1 - \frac{\binom{39}{5}}{\binom{52}{5}}.$$

Let $E_3$ be the event that among the drawn cards three are kings and two are queens. Then the number of cases favorable to $E_3$ is $\binom{4}{3}\binom{4}{2}$ and, therefore,

$$P(E_3) = \frac{\binom{4}{3}\binom{4}{2}}{\binom{52}{5}}.$$

Similarly, if $E_4$ is the event that among the drawn cards two are kings, two are queens and one is a jack, then

$$P(E_4) = \frac{\binom{4}{2}\binom{4}{2}\binom{4}{1}}{\binom{52}{5}}. \ ▄$$
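The four probabilities of Example 2.3 can be evaluated with binomial coefficients. A minimal sketch, assuming Python 3.8 or later (for `math.comb`); the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

total = comb(52, 5)   # all 5-card hands, assumed equally likely

p_all_spades = Fraction(comb(13, 5), total)
p_at_least_one_spade = 1 - Fraction(comb(39, 5), total)
p_three_kings_two_queens = Fraction(comb(4, 3) * comb(4, 2), total)
p_two_kings_two_queens_one_jack = Fraction(
    comb(4, 2) * comb(4, 2) * comb(4, 1), total
)

for name, value in [
    ("all spades", p_all_spades),
    ("at least one spade", p_at_least_one_spade),
    ("three kings, two queens", p_three_kings_two_queens),
    ("two kings, two queens, one jack", p_two_kings_two_queens_one_jack),
]:
    print(f"{name}: {float(value):.6f}")
```

Computing the complement first, as the example does for the event of at least one spade, avoids summing over the cases of exactly one, two, three, four or five spades.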

Example 2.4

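Suppose that we have $n$ $(\geq 2)$ letters and $n$ corresponding addressed envelopes. If these letters are inserted at random into the $n$ envelopes, find the probability that no letter is inserted into the correct envelope.

Solution. Let us label the letters as $L_1, L_2, \ldots, L_n$ and the respective envelopes as $A_1, A_2, \ldots, A_n$. Let $E_i$ denote the event that letter $L_i$ is (correctly) inserted into envelope $A_i$, $i = 1, 2, \ldots, n$. We need to find $P\big(\bigcap_{i=1}^{n} E_i^c\big)$. We have

$$P\Big(\bigcap_{i=1}^{n} E_i^c\Big) = P\Big(\Big(\bigcup_{i=1}^{n} E_i\Big)^c\Big) = 1 - P\Big(\bigcup_{i=1}^{n} E_i\Big) = 1 - \sum_{k=1}^{n} S_{k,n},$$

where, for $k \in \{1, 2, \ldots, n\}$,

$$S_{k,n} = (-1)^{k-1} \sum_{1 \leq i_1 < i_2 < \cdots < i_k \leq n} P(E_{i_1} \cap E_{i_2} \cap \cdots \cap E_{i_k}).$$

Note that $n$ letters can be inserted into $n$ envelopes in $n!$ ways. Also, for $1 \leq i_1 < i_2 < \cdots < i_k \leq n$, $E_{i_1} \cap E_{i_2} \cap \cdots \cap E_{i_k}$ is the event that letters $L_{i_1}, L_{i_2}, \ldots, L_{i_k}$ are inserted into the correct envelopes. Clearly the number of cases favorable to this event is $(n - k)!$. Therefore, for $1 \leq i_1 < i_2 < \cdots < i_k \leq n$,

$$P(E_{i_1} \cap E_{i_2} \cap \cdots \cap E_{i_k}) = \frac{(n - k)!}{n!}$$

$$\Rightarrow S_{k,n} = (-1)^{k-1} \sum_{1 \leq i_1 < i_2 < \cdots < i_k \leq n} \frac{(n - k)!}{n!} = (-1)^{k-1} \binom{n}{k} \frac{(n - k)!}{n!} = \frac{(-1)^{k-1}}{k!}$$

$$\Rightarrow P\Big(\bigcap_{i=1}^{n} E_i^c\Big) = \frac{1}{2!} - \frac{1}{3!} + \frac{1}{4!} - \cdots + \frac{(-1)^n}{n!}. \ ▄$$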
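The closed form obtained in Example 2.4 can be compared with a brute-force count over all $n!$ arrangements for small $n$. In this sketch a permutation of `range(n)` stands for an assignment of letters to envelopes, with position `i` holding the letter placed in envelope `i`; the function names are illustrative.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def prob_no_match_formula(n):
    """1/2! - 1/3! + ... + (-1)^n / n!, the result of Example 2.4."""
    return sum(
        (Fraction((-1) ** k, factorial(k)) for k in range(2, n + 1)),
        Fraction(0),
    )


def prob_no_match_bruteforce(n):
    """Count the arrangements in which no letter lands in its own envelope."""
    count = sum(
        1
        for arrangement in permutations(range(n))
        if all(arrangement[i] != i for i in range(n))
    )
    return Fraction(count, factorial(n))


for n in range(2, 8):
    print(n, prob_no_match_formula(n), prob_no_match_bruteforce(n))
```

The brute-force count is feasible only for small $n$, since it enumerates $n!$ arrangements, whereas the formula is cheap for any $n$.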

3. Conditional Probability and Independence of Events

Let $(\Omega, \mathcal{F}, P)$ be a given probability space. In many situations we may not be interested in the whole space $\Omega$. Rather we may be interested in a subset $B \in \mathcal{F}$ of the sample space $\Omega$. This may happen, for example, when we know a priori that the outcome of the experiment has to be an element of $B \in \mathcal{F}$.

Example 3.1

Consider a random experiment of shuffling a deck of 52 cards in such a way that all $52!$ arrangements of cards (when looked at from top to bottom) are equally likely.

Here,

$\Omega$ = all $52!$ permutations of cards,

and

$\mathcal{F} = \mathcal{P}(\Omega)$.

Now suppose that it is noticed that the bottom card is the king of hearts. In the light of this information, the sample space $B$ comprises the $51!$ arrangements of 52 cards with the bottom card as the king of hearts. Define the event

$K$: top card is a king.

For $E \in \mathcal{F}$, define

$P(E)$ = probability of event $E$ under sample space $\Omega$,

$P_B(E)$ = probability of event $E$ under sample space $B$.

Clearly,

$$P_B(K) = \frac{3 \times 50!}{51!}.$$

Note that

$$P_B(K) = \frac{3 \times 50!}{51!} = \frac{\frac{3 \times 50!}{52!}}{\frac{51!}{52!}} = \frac{P(K \cap B)}{P(B)},$$

i.e.,

$$P_B(K) = \frac{P(K \cap B)}{P(B)}. \tag{3.1}$$

We call $P_B(K)$ the conditional probability of event $K$ given that the experiment will result in an outcome in $B$ (i.e., the experiment will result in an outcome $\omega \in B$) and $P(K)$ the unconditional probability of event $K$. ▄
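The ratio in (3.1) can be evaluated exactly, and the same ratio can be checked by enumeration on a deck small enough to list every arrangement. The six-card mini deck below is a hypothetical stand-in introduced only for this illustration, as is the helper `conditional_probability`.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def conditional_probability(a, b, omega):
    """P(A | B) = P(A ∩ B) / P(B) for equally likely outcomes in omega."""
    a, b = set(a), set(b)
    if not b:
        raise ValueError("conditioning event must have positive probability")
    return Fraction(len(a & b), len(omega)) / Fraction(len(b), len(omega))


# Full 52-card deck, using the counts from Example 3.1.
p_k_and_b = Fraction(3 * factorial(50), factorial(52))   # P(K ∩ B)
p_b = Fraction(factorial(51), factorial(52))             # P(B)
p_k_given_b = p_k_and_b / p_b

# Hypothetical mini deck: four kings plus two other cards.
deck = ["KH", "KS", "KD", "KC", "QH", "JH"]
omega = set(permutations(deck))                      # all 6! arrangements
b = {w for w in omega if w[-1] == "KH"}              # bottom card is the king of hearts
k = {w for w in omega if w[0].startswith("K")}       # top card is a king
mini = conditional_probability(k, b, omega)

print(p_k_given_b, mini)
```

For the full deck the conditional probability reduces to $3/51 = 1/17$; for the mini deck it is $3/5$, since three of the five remaining cards are kings.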

Example 3.1 lays ground for introduction of the concept of conditional probability.

Let $(\Omega, \mathcal{F}, P)$ be a given probability space. Suppose that we know in advance that the outcome of the experiment has to be an element of $B \in \mathcal{F}$, where $P(B) > 0$. In such situations the sample space is $B$ and natural contenders for membership of the event space are $\{A \cap B : A \in \mathcal{F}\}$. This raises the question whether $\mathcal{F}_B = \{A \cap B : A \in \mathcal{F}\}$ is an event space, i.e., whether $\mathcal{F}_B = \{A \cap B : A \in \mathcal{F}\}$ is a sigma-field of subsets of $B$.

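Theorem 3.1

Let $\mathcal{F}$ be a $\sigma$-field of subsets of $\Omega$ and let $B \in \mathcal{F}$. Define $\mathcal{F}_B = \{A \cap B : A \in \mathcal{F}\}$. Then $\mathcal{F}_B$ is a $\sigma$-field of subsets of $B$ and $\mathcal{F}_B \subseteq \mathcal{F}$.

Proof. Since $B \in \mathcal{F}$ and $\mathcal{F}_B = \{A \cap B : A \in \mathcal{F}\}$, it is obvious that $\mathcal{F}_B \subseteq \mathcal{F}$. We have $\Omega \in \mathcal{F}$ and therefore

$$B = \Omega \cap B \in \mathcal{F}_B. \tag{3.2}$$

Also,

$$C \in \mathcal{F}_B \Rightarrow C = A \cap B \text{ for some } A \in \mathcal{F}$$

$$\Rightarrow C^c = B - C = \underbrace{(\Omega - A)}_{\in \mathcal{F}} \cap B \quad (\text{since } A \in \mathcal{F})$$

$$\Rightarrow C^c = B - C \in \mathcal{F}_B, \tag{3.3}$$

i.e., $\mathcal{F}_B$ is closed under complements with respect to $B$.

Figure 3.1

Now suppose that $C_i \in \mathcal{F}_B$, $i = 1, 2, \ldots$. Then $C_i = A_i \cap B$, for some $A_i \in \mathcal{F}$, $i = 1, 2, \ldots$. Therefore,

$$\bigcup_{i=1}^{\infty} C_i = \underbrace{\Big(\bigcup_{i=1}^{\infty} A_i\Big)}_{\in \mathcal{F}} \cap B \quad (\text{since } A_i \in \mathcal{F},\ i = 1, 2, \ldots)$$

$$\in \mathcal{F}_B, \tag{3.4}$$

i.e., $\mathcal{F}_B$ is closed under countable unions.

Now (3.2), (3.3) and (3.4) imply that $\mathcal{F}_B$ is a $\sigma$-field of subsets of $B$. ▄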
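Theorem 3.1 can be checked on a small finite example. The sketch below is illustrative only. It assumes $\Omega = \{1, 2, 3, 4\}$, takes $\mathcal{F}$ to be the sigma-field generated by the partition $\{1\}, \{2\}, \{3, 4\}$, and chooses $B = \{2, 3, 4\}$. On a finite space, closure under pairwise unions is equivalent to closure under countable unions. All names are hypothetical.

```python
from itertools import combinations

omega = frozenset({1, 2, 3, 4})
blocks = [frozenset({1}), frozenset({2}), frozenset({3, 4})]

# Sigma-field generated by the partition: all unions of blocks (including the empty union).
sigma_field = set()
for r in range(len(blocks) + 1):
    for chosen in combinations(blocks, r):
        sigma_field.add(frozenset().union(*chosen))

B = frozenset({2, 3, 4})
trace = {A & B for A in sigma_field}  # F_B = {A ∩ B : A in F}

contains_B = B in trace                                        # (3.2)
closed_under_complement = all((B - C) in trace for C in trace)  # (3.3)
closed_under_union = all((C1 | C2) in trace for C1 in trace for C2 in trace)  # (3.4)
subset_of_sigma_field = trace <= sigma_field                    # F_B ⊆ F

print(sorted(map(sorted, trace)))
```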

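Equation (3.1) suggests considering the set function $P_B : \mathcal{F}_B \to \mathbb{R}$ defined by

$$P_B(C) = \frac{P(C)}{P(B)}, \quad C \in \mathcal{F}_B = \{A \cap B : A \in \mathcal{F}\}.$$

Note that, for $C \in \mathcal{F}_B$, $P(C)$ is well defined as $\mathcal{F}_B \subseteq \mathcal{F}$.

Let us define another set function $P(\cdot \mid B) : \mathcal{F} \to \mathbb{R}$ by

$$P(A \mid B) = P_B(A \cap B) = \frac{P(A \cap B)}{P(B)}, \quad A \in \mathcal{F}.$$

Theorem 3.2

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $B \in \mathcal{F}$ be such that $P(B) > 0$. Then $(B, \mathcal{F}_B, P_B)$ and $(\Omega, \mathcal{F}, P(\cdot \mid B))$ are probability spaces.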

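Proof. Clearly

$$P_B(C) = \frac{P(C)}{P(B)} \ge 0, \quad \forall C \in \mathcal{F}_B.$$

Let $C_i \in \mathcal{F}_B$, $i = 1, 2, \ldots$ be mutually exclusive. Then $C_i \in \mathcal{F}$, $i = 1, 2, \ldots$ (since $\mathcal{F}_B \subseteq \mathcal{F}$), and

$$P_B\Big(\bigcup_{i=1}^{\infty} C_i\Big) = \frac{P\big(\bigcup_{i=1}^{\infty} C_i\big)}{P(B)} = \frac{\sum_{i=1}^{\infty} P(C_i)}{P(B)} = \sum_{i=1}^{\infty} \frac{P(C_i)}{P(B)} = \sum_{i=1}^{\infty} P_B(C_i), \tag{3.5}$$

i.e., $P_B$ is countably additive on $\mathcal{F}_B$.

Also

$$P_B(B) = \frac{P(B)}{P(B)} = 1.$$

Thus $P_B$ is a probability measure on $\mathcal{F}_B$.

Note that $P(A \mid B) \ge 0$, $\forall A \in \mathcal{F}$, and

$$P(\Omega \mid B) = \frac{P(\Omega \cap B)}{P(B)} = \frac{P(B)}{P(B)} = 1.$$

Let $E_i \in \mathcal{F}$, $i = 1, 2, \ldots$ be mutually exclusive. Then $C_i = E_i \cap B \in \mathcal{F}_B$, $i = 1, 2, \ldots$ are mutually exclusive and

$$P\Big(\bigcup_{i=1}^{\infty} E_i \,\Big|\, B\Big) = P_B\Big(\bigcup_{i=1}^{\infty} C_i\Big) = \sum_{i=1}^{\infty} P_B(C_i) = \sum_{i=1}^{\infty} P_B(E_i \cap B) = \sum_{i=1}^{\infty} P(E_i \mid B) \quad (\text{using (3.5)}).$$

It follows that $P(\cdot \mid B)$ is a probability measure on $\mathcal{F}$. ▄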

Note that the domains of $P_B(\cdot)$ and $P(\cdot \mid B)$ are $\mathcal{F}_B$ and $\mathcal{F}$ respectively. Moreover,

$$P(A \mid B) = P_B(A \cap B) = \frac{P(A \cap B)}{P(B)}, \quad A \in \mathcal{F}.$$

Definition 3.1

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $B \in \mathcal{F}$ be a fixed event such that $P(B) > 0$. Define the set function $P(\cdot \mid B) : \mathcal{F} \to \mathbb{R}$ by

$$P(A \mid B) = P_B(A \cap B) = \frac{P(A \cap B)}{P(B)}, \quad A \in \mathcal{F}.$$

We call $P(A \mid B)$ the conditional probability of event $A$ given that the outcome of the experiment is in $B$, or simply the conditional probability of $A$ given $B$. ▄

Example 3.2

Six cards are dealt at random (without replacement) from a deck of 52 cards. Find the probability of getting all hearts in the hand (event $A$) given that there are at least 5 hearts in the hand (event $B$).

Solution. We have

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}.$$

Clearly,

$$P(A \cap B) = P(A) = \frac{\binom{13}{6}}{\binom{52}{6}}, \quad \text{and} \quad P(B) = \frac{\binom{13}{5}\binom{39}{1} + \binom{13}{6}}{\binom{52}{6}}.$$

Therefore,

$$P(A \mid B) = \frac{\binom{13}{6}}{\binom{13}{5}\binom{39}{1} + \binom{13}{6}}.$$ ▄
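The binomial expression in Example 3.2 can be evaluated exactly. This is a small illustrative sketch using only the Python standard library; the variable names are not from the original notes.

```python
from fractions import Fraction
from math import comb

hands = comb(52, 6)  # number of equally likely 6-card hands

# A: all six cards are hearts; B: at least five hearts. Since A ⊆ B, P(A ∩ B) = P(A).
p_A = Fraction(comb(13, 6), hands)
p_B = Fraction(comb(13, 5) * comb(39, 1) + comb(13, 6), hands)

p_A_given_B = p_A / p_B
print(p_A_given_B, float(p_A_given_B))
```

With exact arithmetic the conditional probability reduces to $4/121$, roughly 0.033.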

Remark 3.1

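For events $E_1, \ldots, E_n \in \mathcal{F}$ $(n \ge 2)$,

$$P(E_1 \cap E_2) = P(E_1) P(E_2 \mid E_1), \quad \text{if } P(E_1) > 0,$$

and

$$P(E_1 \cap E_2 \cap E_3) = P((E_1 \cap E_2) \cap E_3) = P(E_1 \cap E_2) P(E_3 \mid E_1 \cap E_2) = P(E_1) P(E_2 \mid E_1) P(E_3 \mid E_1 \cap E_2),$$

if $P(E_1 \cap E_2) > 0$ (which also guarantees that $P(E_1) > 0$, since $E_1 \cap E_2 \subseteq E_1$).

Using the principle of mathematical induction it can be shown that

$$P\Big(\bigcap_{i=1}^{n} E_i\Big) = P(E_1) P(E_2 \mid E_1) P(E_3 \mid E_1 \cap E_2) \cdots P(E_n \mid E_1 \cap E_2 \cap \cdots \cap E_{n-1}),$$

provided $P(E_1 \cap E_2 \cap \cdots \cap E_{n-1}) > 0$ (which also guarantees that $P(E_1 \cap E_2 \cap \cdots \cap E_i) > 0$, $i = 1, 2, \ldots, n-1$). ▄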


Example 3.3

An urn contains four red and six black balls. Two balls are drawn successively, at random and

without replacement, from the urn. Find the probability that the first draw resulted in a red ball

and the second draw resulted in a black ball.

Solution. Define the events

$A$: first draw results in a red ball;

$B$: second draw results in a black ball.

Then,

$$\text{Required probability} = P(A \cap B) = P(A) P(B \mid A) = \frac{4}{10} \times \frac{6}{9} = \frac{12}{45}.$$ ▄
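The multiplication rule used in Example 3.3 can be cross-checked by enumerating all ordered draws. The sketch below is illustrative. It labels the ten balls 0 to 9 (an assumption made only to distinguish balls of the same colour) and treats every ordered pair of distinct balls as equally likely.

```python
from fractions import Fraction
from itertools import permutations

balls = ["red"] * 4 + ["black"] * 6

# All ordered pairs (first draw, second draw) of distinct balls.
draws = list(permutations(range(len(balls)), 2))
favourable = [(i, j) for i, j in draws if balls[i] == "red" and balls[j] == "black"]

p_enumerated = Fraction(len(favourable), len(draws))
p_multiplication = Fraction(4, 10) * Fraction(6, 9)  # P(A) * P(B | A)

print(p_enumerated, p_multiplication)
```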

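Let $(\Omega, \mathcal{F}, P)$ be a probability space. For a countable collection $\{E_i : i \in \Lambda\}$ of mutually exclusive and exhaustive events, the following theorem provides a relationship between the marginal probability $P(E)$ of an event $E \in \mathcal{F}$ and the joint probabilities $P(E \cap E_i)$ of events $E$ and $E_i$, $i \in \Lambda$.

Theorem 3.3 (Theorem of Total Probability)

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $\{E_i : i \in \Lambda\}$ be a countable collection of mutually exclusive and exhaustive events (i.e., $E_i \cap E_j = \emptyset$ whenever $i \ne j$, and $P\big(\bigcup_{i \in \Lambda} E_i\big) = 1$) such that $P(E_i) > 0$, $\forall i \in \Lambda$. Then, for any event $E \in \mathcal{F}$,

$$P(E) = \sum_{i \in \Lambda} P(E \cap E_i) = \sum_{i \in \Lambda} P(E \mid E_i) P(E_i).$$

Proof. Let $F = \bigcup_{i \in \Lambda} E_i$. Then $P(F) = 1$ and $P(F^c) = 1 - P(F) = 0$. Therefore,

$$P(E) = P(E \cap F) + P(E \cap F^c) = P(E \cap F) \quad (E \cap F^c \subseteq F^c \Rightarrow 0 \le P(E \cap F^c) \le P(F^c) = 0)$$

$$= P\Big(\bigcup_{i \in \Lambda} (E \cap E_i)\Big) = \sum_{i \in \Lambda} P(E \cap E_i) \quad (E_i\text{'s are disjoint} \Rightarrow (E \cap E_i)\text{'s} \ (\subseteq E_i) \text{ are disjoint})$$

$$= \sum_{i \in \Lambda} P(E \mid E_i) P(E_i).$$ ▄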

Example 3.4

Urn $U_1$ contains 4 white and 6 black balls and urn $U_2$ contains 6 white and 4 black balls. A fair die is cast and urn $U_1$ is selected if the upper face of the die shows 5 or 6 dots. Otherwise urn $U_2$ is selected. If a ball is drawn at random from the selected urn, find the probability that the drawn ball is white.

Solution. Define the events:

$W$: drawn ball is white; $E_1$: urn $U_1$ is selected; $E_2$: urn $U_2$ is selected.

Then $\{E_1, E_2\}$ is a collection of mutually exclusive and exhaustive events. Therefore

$$P(W) = P(E_1) P(W \mid E_1) + P(E_2) P(W \mid E_2) = \frac{2}{6} \times \frac{4}{10} + \frac{4}{6} \times \frac{6}{10} = \frac{8}{15}.$$ ▄
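The computation in Example 3.4 is a direct application of Theorem 3.3. It can be sketched as follows; the dictionary names `prior` and `p_white_given` are illustrative, not notation from the notes.

```python
from fractions import Fraction

# P(E_1), P(E_2): urn U1 is chosen on a 5 or 6, urn U2 otherwise.
prior = {"U1": Fraction(2, 6), "U2": Fraction(4, 6)}

# P(W | E_i): proportion of white balls in each urn.
p_white_given = {"U1": Fraction(4, 10), "U2": Fraction(6, 10)}

# Theorem of total probability: P(W) = sum_i P(W | E_i) P(E_i).
p_white = sum(p_white_given[urn] * prior[urn] for urn in prior)

print(p_white)
```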

The following theorem provides a method for finding the probability of occurrence of an event

in a past trial based on information on occurrences in future trials.

Theorem 3.4 (Bayes’ Theorem)

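Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $\{E_i : i \in \Lambda\}$ be a countable collection of mutually exclusive and exhaustive events with $P(E_i) > 0$, $i \in \Lambda$. Then, for any event $E \in \mathcal{F}$ with $P(E) > 0$, we have

$$P(E_j \mid E) = \frac{P(E \mid E_j) P(E_j)}{\sum_{i \in \Lambda} P(E \mid E_i) P(E_i)}, \quad j \in \Lambda.$$

Proof. We have, for $j \in \Lambda$,

$$P(E_j \mid E) = \frac{P(E_j \cap E)}{P(E)} = \frac{P(E \mid E_j) P(E_j)}{P(E)} = \frac{P(E \mid E_j) P(E_j)}{\sum_{i \in \Lambda} P(E \mid E_i) P(E_i)} \quad (\text{using the Theorem of Total Probability}).$$ ▄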

Remark 3.2

(i) Suppose that the occurrence of any one of the mutually exclusive and exhaustive events $E_i$, $i \in \Lambda$, causes the occurrence of an event $E$. Given that the event $E$ has occurred, Bayes' theorem provides the conditional probability that the event $E$ is caused by the occurrence of event $E_j$, $j \in \Lambda$.

(ii) In Bayes' theorem the probabilities $P(E_j)$, $j \in \Lambda$, are referred to as prior probabilities and the probabilities $P(E_j \mid E)$, $j \in \Lambda$, are referred to as posterior probabilities. ▄

To see an application of Bayes’ theorem let us revisit Example 3.4.

Example 3.5

Urn $U_1$ contains 4 white and 6 black balls and urn $U_2$ contains 6 white and 4 black balls. A fair die is cast and urn $U_1$ is selected if the upper face of the die shows five or six dots. Otherwise urn $U_2$ is selected. A ball is drawn at random from the selected urn.

(i) Given that the drawn ball is white, find the conditional probability that it came from urn $U_1$;

(ii) Given that the drawn ball is white, find the conditional probability that it came from urn $U_2$.

Solution. Define the events:

$W$: drawn ball is white; $E_1$: urn $U_1$ is selected; $E_2$: urn $U_2$ is selected ($E_1$ and $E_2$ are mutually exclusive and exhaustive events).

(i) We have

$$P(E_1 \mid W) = \frac{P(W \mid E_1) P(E_1)}{P(W \mid E_1) P(E_1) + P(W \mid E_2) P(E_2)} = \frac{\frac{4}{10} \times \frac{2}{6}}{\frac{4}{10} \times \frac{2}{6} + \frac{6}{10} \times \frac{4}{6}} = \frac{1}{4}.$$

(ii) Since $E_1$ and $E_2$ are mutually exclusive and $P(E_1 \cup E_2 \mid W) = P(\Omega \mid W) = 1$, we have

$$P(E_2 \mid W) = 1 - P(E_1 \mid W) = \frac{3}{4}.$$ ▄
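The posterior probabilities in Example 3.5 follow mechanically from Bayes' theorem. A minimal sketch, with illustrative names (`prior`, `likelihood`, `posterior`) that do not appear in the notes:

```python
from fractions import Fraction

prior = {"U1": Fraction(2, 6), "U2": Fraction(4, 6)}         # P(E_j)
likelihood = {"U1": Fraction(4, 10), "U2": Fraction(6, 10)}  # P(W | E_j)

# Denominator of Bayes' theorem: P(W) by the theorem of total probability.
evidence = sum(likelihood[urn] * prior[urn] for urn in prior)

# Posterior probabilities P(E_j | W).
posterior = {urn: likelihood[urn] * prior[urn] / evidence for urn in prior}

for urn in prior:
    print(urn, "prior:", prior[urn], "posterior:", posterior[urn])
```

Printing the prior next to the posterior makes the shift in probability after observing a white ball easy to read off.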

In the above example

$$P(E_1 \mid W) = \frac{1}{4} < \frac{1}{3} = P(E_1), \quad \text{and} \quad P(E_2 \mid W) = \frac{3}{4} > \frac{2}{3} = P(E_2),$$

i.e.,

(i) the probability of occurrence of event $E_1$ decreases in the presence of the information that the outcome will be an element of $W$;

(ii) the probability of occurrence of event $E_2$ increases in the presence of the information that the outcome will be an element of $W$.

These phenomena are related to the concept of association defined in the sequel.

Note that

$$P(E_1 \mid W) < P(E_1) \Leftrightarrow P(E_1 \cap W) < P(E_1) P(W),$$

and

$$P(E_2 \mid W) > P(E_2) \Leftrightarrow P(E_2 \cap W) > P(E_2) P(W).$$

Definition 3.2

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A$ and $B$ be two events. Events $A$ and $B$ are said to be

(i) negatively associated if $P(A \cap B) < P(A) P(B)$;

(ii) positively associated if $P(A \cap B) > P(A) P(B)$;

(iii) independent if $P(A \cap B) = P(A) P(B)$. ▄

Remark 3.3

(i) If $P(B) = 0$ then $P(A \cap B) = 0 = P(A) P(B)$, $\forall A \in \mathcal{F}$, i.e., if $P(B) = 0$ then any event $A \in \mathcal{F}$ and $B$ are independent;

(ii) If $P(B) > 0$ then $A$ and $B$ are independent if, and only if, $P(A \mid B) = P(A)$, i.e., if $P(B) > 0$, then events $A$ and $B$ are independent if, and only if, the availability of the information that event $B$ has occurred does not alter the probability of occurrence of event $A$. ▄

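Now we define the concept of independence for an arbitrary collection of events.

Definition 3.3

Let $(\Omega, \mathcal{F}, P)$ be a probability space. Let $\Lambda \subseteq \mathbb{R}$ be an index set and let $\{E_\alpha : \alpha \in \Lambda\}$ be a collection of events in $\mathcal{F}$.

(i) Events $\{E_\alpha : \alpha \in \Lambda\}$ are said to be pairwise independent if any pair of events $E_\alpha$ and $E_\beta$, $\alpha \ne \beta$, in the collection $\{E_j : j \in \Lambda\}$ are independent, i.e., if $P(E_\alpha \cap E_\beta) = P(E_\alpha) P(E_\beta)$ whenever $\alpha, \beta \in \Lambda$ and $\alpha \ne \beta$;

(ii) Let $\Lambda = \{1, 2, \ldots, n\}$, for some $n \in \mathbb{N}$, so that $\{E_\alpha : \alpha \in \Lambda\} = \{E_1, \ldots, E_n\}$ is a finite collection of events in $\mathcal{F}$. Events $E_1, \ldots, E_n$ are said to be independent if, for any subcollection $\{E_{\alpha_1}, \ldots, E_{\alpha_k}\}$ of $\{E_1, \ldots, E_n\}$ $(k = 2, 3, \ldots, n)$,

$$P\Big(\bigcap_{j=1}^{k} E_{\alpha_j}\Big) = \prod_{j=1}^{k} P(E_{\alpha_j}). \tag{3.6}$$

(iii) Let $\Lambda \subseteq \mathbb{R}$ be an arbitrary index set. Events $\{E_\alpha : \alpha \in \Lambda\}$ are said to be independent if any finite subcollection of events in $\{E_\alpha : \alpha \in \Lambda\}$ forms a collection of independent events. ▄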

Remark 3.4

(i) To verify that $n$ events $E_1, \ldots, E_n \in \mathcal{F}$ are independent one must verify $2^n - n - 1 \ \big(= \sum_{j=2}^{n} \binom{n}{j}\big)$ conditions in (3.6). For example, to conclude that three events $E_1$, $E_2$ and $E_3$ are independent, the following $4 \ (= 2^3 - 3 - 1)$ conditions must be verified:

$$P(E_1 \cap E_2) = P(E_1) P(E_2); \quad P(E_1 \cap E_3) = P(E_1) P(E_3);$$

$$P(E_2 \cap E_3) = P(E_2) P(E_3); \quad P(E_1 \cap E_2 \cap E_3) = P(E_1) P(E_2) P(E_3).$$

(ii) If events $E_1, \ldots, E_n$ are independent then, for any permutation $(\alpha_1, \ldots, \alpha_n)$ of $(1, \ldots, n)$, the events $E_{\alpha_1}, \ldots, E_{\alpha_n}$ are also independent. Thus the notion of independence is symmetric in the events involved.

(iii) Events in any subcollection of independent events are independent. In particular, independence of a collection of events implies their pairwise independence. ▄
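The count $2^n - n - 1$ in Remark 3.4 (i) is the number of subcollections of size at least two. It can be confirmed by listing the index subsets that (3.6) ranges over. The helper name `independence_conditions` below is hypothetical and used only for illustration.

```python
from itertools import combinations

def independence_conditions(n):
    """Return every index subset of {1, ..., n} with at least two elements.

    Each subset corresponds to one product condition of the form (3.6).
    """
    indices = range(1, n + 1)
    return [subset for k in range(2, n + 1) for subset in combinations(indices, k)]

counts = {n: len(independence_conditions(n)) for n in range(2, 8)}

for n, count in counts.items():
    print(n, count, 2**n - n - 1)
```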

The following example illustrates that, in general, pair wise independence of a collection of

events may not imply their independence.

Example 3.6

Let $\Omega = \{1, 2, 3, 4\}$ and let $\mathcal{F} = \mathcal{P}(\Omega)$, the power set of $\Omega$. Consider the probability space $(\Omega, \mathcal{F}, P)$, where $P(\{i\}) = \frac{1}{4}$, $i = 1, 2, 3, 4$. Let $A = \{1, 4\}$, $B = \{2, 4\}$ and $C = \{3, 4\}$. Then,

$$P(A) = P(B) = P(C) = \frac{1}{2},$$

$$P(A \cap B) = P(A \cap C) = P(B \cap C) = P(\{4\}) = \frac{1}{4},$$

and

$$P(A \cap B \cap C) = P(\{4\}) = \frac{1}{4}.$$

Clearly,

$$P(A \cap B) = P(A) P(B); \quad P(A \cap C) = P(A) P(C); \quad \text{and} \quad P(B \cap C) = P(B) P(C),$$

i.e., $A$, $B$ and $C$ are pairwise independent.

However,

$$P(A \cap B \cap C) = \frac{1}{4} \ne \frac{1}{8} = P(A) P(B) P(C).$$

Thus $A$, $B$ and $C$ are not independent. ▄
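Example 3.6 is small enough to verify by enumeration. The following sketch (illustrative names, uniform probability on four points as in the example) checks the three pairwise conditions and the triple condition separately.

```python
from fractions import Fraction
from itertools import combinations

omega = {1, 2, 3, 4}

def prob(event):
    # Uniform probability: each outcome has probability 1/4.
    return Fraction(len(event), len(omega))

events = {"A": {1, 4}, "B": {2, 4}, "C": {3, 4}}

# Pairwise conditions: P(X ∩ Y) = P(X) P(Y) for each pair.
pairwise = all(
    prob(events[x] & events[y]) == prob(events[x]) * prob(events[y])
    for x, y in combinations(events, 2)
)

# Triple condition: P(A ∩ B ∩ C) versus P(A) P(B) P(C).
triple_lhs = prob(events["A"] & events["B"] & events["C"])
triple_rhs = prob(events["A"]) * prob(events["B"]) * prob(events["C"])

print(pairwise, triple_lhs, triple_rhs)
```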

Theorem 3.5

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A$ and $B$ be independent events ($A, B \in \mathcal{F}$). Then

(i) $A^c$ and $B$ are independent events;

(ii) $A$ and $B^c$ are independent events;

(iii) $A^c$ and $B^c$ are independent events.

Proof. We have

$$P(A \cap B) = P(A) P(B).$$

(i) Since $B = (A \cap B) \cup (A^c \cap B)$ and $(A \cap B) \cap (A^c \cap B) = \emptyset$, we have

$$P(B) = P(A \cap B) + P(A^c \cap B)$$

$$\Rightarrow P(A^c \cap B) = P(B) - P(A \cap B) = P(B) - P(A) P(B) = (1 - P(A)) P(B) = P(A^c) P(B),$$

i.e., $A^c$ and $B$ are independent events.

(ii) Follows from (i) by interchanging the roles of $A$ and $B$.

(iii) Follows on using (i) and (ii) sequentially. ▄
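Theorem 3.5 can be illustrated on a concrete finite space. The sketch below assumes the experiment of casting a fair die and flipping a fair coin with all 12 outcomes equally likely, takes $A$ = "die shows an even number" and $B$ = "coin shows heads", and checks all four product conditions. The choice of events and the helper names are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space: (die face, coin side), all 12 outcomes equally likely.
omega = set(product(range(1, 7), "HT"))

def prob(event):
    return Fraction(len(event), len(omega))

def independent(X, Y):
    return prob(X & Y) == prob(X) * prob(Y)

A = {w for w in omega if w[0] % 2 == 0}  # die shows an even number
B = {w for w in omega if w[1] == "H"}    # coin shows heads
A_c, B_c = omega - A, omega - B

checks = {
    "A, B": independent(A, B),
    "A^c, B": independent(A_c, B),
    "A, B^c": independent(A, B_c),
    "A^c, B^c": independent(A_c, B_c),
}
print(checks)
```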

The following theorem strengthens the results of Theorem 3.5.

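Theorem 3.6

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $F_1, \ldots, F_n$ $(n \in \mathbb{N}, n \ge 2)$ be independent events in $\mathcal{F}$. Then, for any $k \in \{1, 2, \ldots, n-1\}$ and any permutation $(\alpha_1, \ldots, \alpha_n)$ of $(1, \ldots, n)$, the events $F_{\alpha_1}, \ldots, F_{\alpha_k}, F_{\alpha_{k+1}}^c, \ldots, F_{\alpha_n}^c$ are independent. Moreover the events $F_1^c, \ldots, F_n^c$ are independent.

Proof. Since the notion of independence is symmetric in the events involved, it is enough to show that for any $k \in \{1, 2, \ldots, n-1\}$ the events $F_1, \ldots, F_k, F_{k+1}^c, \ldots, F_n^c$ are independent. Using backward induction and symmetry in the notion of independence, the above-mentioned assertion would follow if, under the hypothesis of the theorem, we show that the events $F_1, \ldots, F_{n-1}, F_n^c$ are independent. For this consider a subcollection $\{F_{i_1}, \ldots, F_{i_m}, G\}$ of $F_1, \ldots, F_{n-1}, F_n^c$ $(\{i_1, \ldots, i_m\} \subseteq \{1, \ldots, n-1\})$, where $G = F_n^c$ or $G = F_j$, for some $j \in \{1, \ldots, n-1\} - \{i_1, \ldots, i_m\}$, depending on whether or not $F_n^c$ is a part of the subcollection $\{F_{i_1}, \ldots, F_{i_m}, G\}$. Thus the following two cases arise:

Case I. $G = F_n^c$

Since $F_1, \ldots, F_n$ are independent, we have

$$P\Big(\bigcap_{j=1}^{m} F_{i_j}\Big) = \prod_{j=1}^{m} P(F_{i_j}),$$

and

$$P\Big(\Big(\bigcap_{j=1}^{m} F_{i_j}\Big) \cap F_n\Big) = \Big[\prod_{j=1}^{m} P(F_{i_j})\Big] P(F_n) = P\Big(\bigcap_{j=1}^{m} F_{i_j}\Big) P(F_n)$$

$$\Rightarrow \text{events } \bigcap_{j=1}^{m} F_{i_j} \text{ and } F_n \text{ are independent}$$

$$\Rightarrow \text{events } \bigcap_{j=1}^{m} F_{i_j} \text{ and } F_n^c \text{ are independent (Theorem 3.5 (ii))}$$

$$\Rightarrow P\Big(\Big(\bigcap_{j=1}^{m} F_{i_j}\Big) \cap F_n^c\Big) = P\Big(\bigcap_{j=1}^{m} F_{i_j}\Big) P(F_n^c) = \Big[\prod_{j=1}^{m} P(F_{i_j})\Big] P(F_n^c)$$

$$\Rightarrow P(F_{i_1} \cap \cdots \cap F_{i_m} \cap G) = \Big[\prod_{j=1}^{m} P(F_{i_j})\Big] P(G).$$

Case II. $G = F_j$, for some $j \in \{1, \ldots, n-1\} - \{i_1, \ldots, i_m\}$.

In this case $\{F_{i_1}, \ldots, F_{i_m}, G\}$ is a subcollection of the independent events $F_1, \ldots, F_n$ and therefore

$$P(F_{i_1} \cap \cdots \cap F_{i_m} \cap G) = \Big[\prod_{j=1}^{m} P(F_{i_j})\Big] P(G).$$

Now the result follows on combining the two cases. ▄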


When we say that two or more random experiments are independent (or that two or more

random experiments are performed independently) it simply means that the events associated

with the respective random experiments are independent.

4. Continuity of Probability Measures

We begin this section with the following definition.

Definition 4.1

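Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $\{A_n : n = 1, 2, \ldots\}$ be a sequence of events in $\mathcal{F}$.

(i) We say that the sequence $\{A_n : n = 1, 2, \ldots\}$ is increasing (written as $A_n \uparrow$) if $A_n \subseteq A_{n+1}$, $n = 1, 2, \ldots$;

(ii) We say that the sequence $\{A_n : n = 1, 2, \ldots\}$ is decreasing (written as $A_n \downarrow$) if $A_{n+1} \subseteq A_n$, $n = 1, 2, \ldots$;

(iii) We say that the sequence $\{A_n : n = 1, 2, \ldots\}$ is monotone if either $A_n \uparrow$ or $A_n \downarrow$;

(iv) If $A_n \uparrow$ we define the limit of the sequence $\{A_n : n = 1, 2, \ldots\}$ as $\bigcup_{n=1}^{\infty} A_n$ and write $\operatorname{Lim}_{n \to \infty} A_n = \bigcup_{n=1}^{\infty} A_n$;

(v) If $A_n \downarrow$ we define the limit of the sequence $\{A_n : n = 1, 2, \ldots\}$ as $\bigcap_{n=1}^{\infty} A_n$ and write $\operatorname{Lim}_{n \to \infty} A_n = \bigcap_{n=1}^{\infty} A_n$. ▄

Throughout we will denote the limit of a monotone sequence $\{A_n : n = 1, 2, \ldots\}$ of events by $\operatorname{Lim}_{n \to \infty} A_n$ and the limit of a sequence $\{a_n : n = 1, 2, \ldots\}$ of real numbers (provided it exists) by $\lim_{n \to \infty} a_n$.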

Theorem 4.1 (Continuity of Probability Measures)

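Let $\{A_n : n = 1, 2, \ldots\}$ be a monotone sequence of events in a probability space $(\Omega, \mathcal{F}, P)$. Then

$$P\Big(\operatorname{Lim}_{n \to \infty} A_n\Big) = \lim_{n \to \infty} P(A_n).$$

Proof.

Case I. $A_n \uparrow$

In this case, $\operatorname{Lim}_{n \to \infty} A_n = \bigcup_{n=1}^{\infty} A_n$. Define $B_1 = A_1$, $B_n = A_n - A_{n-1}$, $n = 2, 3, \ldots$.

Figure 4.1

Then $B_n \in \mathcal{F}$, $n = 1, 2, \ldots$, the $B_n$'s are mutually exclusive and $\bigcup_{n=1}^{\infty} B_n = \bigcup_{n=1}^{\infty} A_n = \operatorname{Lim}_{n \to \infty} A_n$. Therefore,

$$P\Big(\operatorname{Lim}_{n \to \infty} A_n\Big) = P\Big(\bigcup_{n=1}^{\infty} B_n\Big) = \sum_{n=1}^{\infty} P(B_n) = \lim_{n \to \infty} \sum_{k=1}^{n} P(B_k)$$

$$= \lim_{n \to \infty} \Big[P(A_1) + \sum_{k=2}^{n} P(A_k - A_{k-1})\Big]$$

$$= \lim_{n \to \infty} \Big[P(A_1) + \sum_{k=2}^{n} \big(P(A_k) - P(A_{k-1})\big)\Big]$$

(using Theorem 2.1 (iv), since $A_{k-1} \subseteq A_k$, $k = 2, 3, \ldots$)

$$= \lim_{n \to \infty} \Big[P(A_1) + \sum_{k=2}^{n} P(A_k) - \sum_{k=2}^{n} P(A_{k-1})\Big]$$

$$= \lim_{n \to \infty} \big[P(A_1) + P(A_n) - P(A_1)\big] = \lim_{n \to \infty} P(A_n).$$

Case II. $A_n \downarrow$

In this case, $\operatorname{Lim}_{n \to \infty} A_n = \bigcap_{n=1}^{\infty} A_n$ and $A_n^c \uparrow$. Therefore,

$$P\Big(\operatorname{Lim}_{n \to \infty} A_n\Big) = P\Big(\bigcap_{n=1}^{\infty} A_n\Big) = 1 - P\Big(\Big(\bigcap_{n=1}^{\infty} A_n\Big)^c\Big) = 1 - P\Big(\bigcup_{n=1}^{\infty} A_n^c\Big)$$

$$= 1 - P\Big(\operatorname{Lim}_{n \to \infty} A_n^c\Big) = 1 - \lim_{n \to \infty} P(A_n^c) \quad (\text{using Case I, since } A_n^c \uparrow)$$

$$= 1 - \lim_{n \to \infty} \big(1 - P(A_n)\big) = \lim_{n \to \infty} P(A_n).$$ ▄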

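Remark 4.1

Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $\{E_i : i = 1, 2, \ldots\}$ be a countably infinite collection of events in $\mathcal{F}$. Define

$$B_n = \bigcup_{i=1}^{n} E_i \quad \text{and} \quad C_n = \bigcap_{i=1}^{n} E_i, \quad n = 1, 2, \ldots$$

Then $B_n \uparrow$, $C_n \downarrow$, $\operatorname{Lim}_{n \to \infty} B_n = \bigcup_{n=1}^{\infty} B_n = \bigcup_{i=1}^{\infty} E_i$ and $\operatorname{Lim}_{n \to \infty} C_n = \bigcap_{n=1}^{\infty} C_n = \bigcap_{i=1}^{\infty} E_i$. Therefore

$$P\Big(\bigcup_{i=1}^{\infty} E_i\Big) = P\Big(\operatorname{Lim}_{n \to \infty} B_n\Big) = \lim_{n \to \infty} P(B_n) \quad (\text{using Theorem 4.1})$$

$$= \lim_{n \to \infty} P\Big(\bigcup_{i=1}^{n} E_i\Big) = \lim_{n \to \infty} \big[S_{1,n} + S_{2,n} + \cdots + S_{n,n}\big],$$

where the $S_{k,n}$'s are as defined in Theorem 2.2.

Moreover,

$$P\Big(\bigcap_{i=1}^{\infty} E_i\Big) = P\Big(\operatorname{Lim}_{n \to \infty} C_n\Big) = \lim_{n \to \infty} P(C_n) \quad (\text{using Theorem 4.1})$$

$$= \lim_{n \to \infty} P\Big(\bigcap_{i=1}^{n} E_i\Big).$$

Similarly, if $\{E_i : i = 1, 2, \ldots\}$ is a collection of independent events, then

$$P\Big(\bigcap_{i=1}^{\infty} E_i\Big) = \lim_{n \to \infty} P\Big(\bigcap_{i=1}^{n} E_i\Big) = \lim_{n \to \infty} \Big[\prod_{i=1}^{n} P(E_i)\Big] = \prod_{i=1}^{\infty} P(E_i).$$ ▄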
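The last identity in Remark 4.1 can be illustrated numerically. The sketch below assumes, purely for illustration, a sequence of independent events with $P(E_i) = 1 - 1/(i+1)^2$. This choice is not from the notes. For it the finite products telescope to $(n+2)/(2(n+1))$, which decreases to $1/2$.

```python
from fractions import Fraction

def p_event(i):
    # Assumed probability of the i-th independent event (illustrative choice).
    return 1 - Fraction(1, (i + 1) ** 2)

def p_finite_intersection(n):
    # By independence, P(E_1 ∩ ... ∩ E_n) is the product of the P(E_i).
    result = Fraction(1)
    for i in range(1, n + 1):
        result *= p_event(i)
    return result

partial = [p_finite_intersection(n) for n in (1, 10, 100, 1000)]

for n, value in zip((1, 10, 100, 1000), partial):
    print(n, value, float(value))
```

The printed values decrease monotonically, consistent with $C_n \downarrow$ and the continuity of $P$ along decreasing sequences.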

Problems

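1. Let $\Omega = \{1, 2, 3, 4\}$. Check which of the following is a sigma-field of subsets of $\Omega$:

(i) $\mathcal{F}_1 = \{\emptyset, \{1, 2\}, \{3, 4\}\}$;

(ii) $\mathcal{F}_2 = \{\emptyset, \Omega, \{1\}, \{2, 3, 4\}, \{1, 2\}, \{3, 4\}\}$;

(iii) $\mathcal{F}_3 = \{\emptyset, \Omega, \{1\}, \{2\}, \{1, 2\}, \{3, 4\}, \{2, 3, 4\}, \{1, 3, 4\}\}$.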

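2. Show that a class $\mathcal{F}$ of subsets of $\Omega$ is a sigma-field of subsets of $\Omega$ if, and only if, the following three conditions are satisfied: (i) $\Omega \in \mathcal{F}$; (ii) $A \in \mathcal{F} \Rightarrow A^c = \Omega - A \in \mathcal{F}$; (iii) $A_n \in \mathcal{F}$, $n = 1, 2, \ldots \Rightarrow \bigcap_{n=1}^{\infty} A_n \in \mathcal{F}$.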

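3. Let $\{\mathcal{F}_\lambda : \lambda \in \Lambda\}$ be a collection of sigma-fields of subsets of $\Omega$.

(i) Show that $\bigcap_{\lambda \in \Lambda} \mathcal{F}_\lambda$ is a sigma-field;

(ii) Using a counterexample show that $\bigcup_{\lambda \in \Lambda} \mathcal{F}_\lambda$ may not be a sigma-field;

(iii) Let $\mathcal{C}$ be a class of subsets of $\Omega$ and let $\{\mathcal{F}_\lambda : \lambda \in \Lambda\}$ be the collection of all sigma-fields that contain the class $\mathcal{C}$. Show that $\sigma(\mathcal{C}) = \bigcap_{\lambda \in \Lambda} \mathcal{F}_\lambda$, where $\sigma(\mathcal{C})$ denotes the smallest sigma-field containing the class $\mathcal{C}$ (or the sigma-field generated by the class $\mathcal{C}$).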

4. Let $\Omega$ be an infinite set and let $\mathcal{A} = \{A \subseteq \Omega : A \text{ is finite or } A^c \text{ is finite}\}$.

(i) Show that $\mathcal{A}$ is closed under complements and finite unions;

(ii) Using a counterexample show that $\mathcal{A}$ may not be closed under countably infinite unions (and hence $\mathcal{A}$ may not be a sigma-field).

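5. (i) Let $\Omega$ be an uncountable set and let $\mathcal{F} = \{A \subseteq \Omega : A \text{ is countable or } A^c \text{ is countable}\}$.

(a) Show that $\mathcal{F}$ is a sigma-field;

(b) What can you say about $\mathcal{F}$ when $\Omega$ is countable?

(ii) Let $\Omega$ be a countable set and let $\mathcal{C} = \{\{\omega\} : \omega \in \Omega\}$. Show that $\sigma(\mathcal{C}) = \mathcal{P}(\Omega)$.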

6. Let $\mathcal{F} = \mathcal{P}(\Omega)$ = the power set of $\Omega = \{0, 1, 2, \ldots\}$. In each of the following cases, verify if $(\Omega, \mathcal{F}, P)$ is a probability space:

(i) $P(A) = \sum_{x \in A} e^{-\lambda} \lambda^x / x!$, $A \in \mathcal{F}$, $\lambda > 0$;

(ii) $P(A) = \sum_{x \in A} p (1 - p)^x$, $A \in \mathcal{F}$, $0 < p < 1$;

(iii) $P(A) = 0$, if $A$ has a finite number of elements, and $P(A) = 1$, if $A$ has an infinite number of elements, $A \in \mathcal{F}$.

7. Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A, B, C, D \in \mathcal{F}$. Suppose that $P(A) = 0.6$, $P(B) = 0.5$, $P(C) = 0.4$, $P(A \cap B) = 0.3$, $P(A \cap C) = 0.2$, $P(B \cap C) = 0.2$, $P(A \cap B \cap C) = 0.1$, $P(B \cap D) = P(C \cap D) = 0$, $P(A \cap D) = 0.1$ and $P(D) = 0.2$. Find:

(i) $P(A \cup B \cup C)$ and $P(A^c \cap B^c \cap C^c)$;

(ii) $P((A \cup B) \cap C)$ and $P(A \cup (B \cap C))$;

(iii) $P((A^c \cup B^c) \cap C^c)$ and $P((A^c \cap B^c) \cup C^c)$;

(iv) $P(B \cap C \cap D)$ and $P(A \cap C \cap D)$;

(v) $P(A \cup B \cup D)$ and $P(A \cup B \cup C \cup D)$;

(vi) $P((A \cap B) \cup (C \cap D))$.

8. Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A$ and $B$ be two events (i.e., $A, B \in \mathcal{F}$).

(i) Show that the probability that exactly one of the events $A$ or $B$ will occur is given by $P(A) + P(B) - 2P(A \cap B)$;

(ii) Show that $P(A \cap B) - P(A)P(B) = P(A)P(B^c) - P(A \cap B^c) = P(A^c)P(B) - P(A^c \cap B) = P((A \cup B)^c) - P(A^c)P(B^c)$.


9. Suppose that $n\ (\ge 3)$ persons $P_1, \ldots, P_n$ are made to stand in a row at random. Find the probability that there are exactly $r$ persons between $P_1$ and $P_2$; here $r \in \{1, 2, \ldots, n-2\}$.

10. A point $(X, Y)$ is randomly chosen on the unit square $\Omega = \{(x, y) : 0 \le x \le 1,\ 0 \le y \le 1\}$ (i.e., for any region $A \subseteq \Omega$ for which the area is defined, the probability that $(X, Y)$ lies in $A$ is $\frac{\text{area of } A}{\text{area of } \Omega}$). Find the probability that the distance from $(X, Y)$ to the nearest side does not exceed $\frac{1}{3}$ units.

11. Three numbers $a$, $b$ and $c$ are chosen at random and with replacement from the set $\{1, 2, \ldots, 6\}$. Find the probability that the quadratic equation $ax^2 + bx + c = 0$ will have real root(s).

12. Three numbers are chosen at random from the set $\{1, 2, \ldots, 50\}$. Find the probability that

the chosen numbers are in

(i) arithmetic progression;

(ii) geometric progression.

13. Consider an empty box in which four balls are to be placed (one-by-one) according to

the following scheme. A fair die is cast each time and the number of dots on the upper

face is noted. If the upper face shows up 2 or 5 dots then a white ball is placed in the

box. Otherwise a black ball is placed in the box. Given that the first ball placed in the box

was white find the probability that the box will contain exactly two black balls.

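14. Let $((0, 1], \mathcal{F}, P)$ be a probability space such that $\mathcal{F}$ is the smallest sigma-field containing all subintervals of $\Omega = (0, 1]$ and $P((a, b]) = b - a$, where $0 \le a < b \le 1$ (such a probability measure is known to exist).

(i) Show that $\{b\} = \bigcap_{n=1}^{\infty} \big(b - \frac{1}{n+1}, b\big]$, $\forall b \in (0, 1]$;

(ii) Show that $P(\{b\}) = 0$, $\forall b \in (0, 1]$, and $P((0, 1]) = 1$ (note that here $P(\{b\}) = 0$ but $\{b\} \ne \emptyset$, and $P((0, 1)) = 1$ but $(0, 1) \ne \Omega$);

(iii) Show that, for any countable set $A \in \mathcal{F}$, $P(A) = 0$;

(iv) For $n \in \mathbb{N}$, let $A_n = \big(0, \frac{1}{n}\big]$ and $B_n = \big(\frac{1}{2} + \frac{1}{n+2}, 1\big]$. Verify that $A_n \downarrow$, $B_n \uparrow$, $P(\operatorname{Lim}_{n \to \infty} A_n) = \lim_{n \to \infty} P(A_n)$ and $P(\operatorname{Lim}_{n \to \infty} B_n) = \lim_{n \to \infty} P(B_n)$.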

15. Consider four coding machines $M_1$, $M_2$, $M_3$ and $M_4$ producing binary codes 0 and 1. The machine $M_1$ produces codes 0 and 1 with respective probabilities $\frac{1}{4}$ and $\frac{3}{4}$. The code produced by machine $M_k$ is fed into machine $M_{k+1}$ $(k = 1, 2, 3)$, which may either leave the received code unchanged or may change it. Suppose that each of the machines $M_2$, $M_3$ and $M_4$ changes the received code with probability $\frac{3}{4}$. Given that the machine $M_4$ has produced code 1, find the conditional probability that the machine $M_1$ produced code 0.

16. A student appears in the examinations of four subjects: Biology, Chemistry, Physics and Mathematics. Suppose that the probabilities of the student clearing the examinations in these subjects are $\frac{1}{2}$, $\frac{1}{3}$, $\frac{1}{4}$ and $\frac{1}{5}$ respectively. Assuming that the performances of the student in the four subjects are independent, find the probability that the student will clear

examination(s) of

(i) all the subjects; (ii) no subject; (iii) exactly one subject;

(iv) exactly two subjects; (v) at least one subject.

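17. Let $A$ and $B$ be independent events. Show that

$$\max\{P((A \cup B)^c),\ P(A \cap B),\ P(A \Delta B)\} \ge \frac{4}{9},$$

where $A \Delta B = (A - B) \cup (B - A)$.

18. For independent events $A_1, \ldots, A_n$, show that:

$$P\Big(\bigcap_{i=1}^{n} A_i^c\Big) \le e^{-\sum_{i=1}^{n} P(A_i)}.$$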

19. Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A_1, A_2, \ldots$ be a sequence of events (i.e., $A_i \in \mathcal{F}$, $i = 1, 2, \ldots$). Define $B_n = \bigcap_{i=n}^{\infty} A_i$, $C_n = \bigcup_{i=n}^{\infty} A_i$, $n = 1, 2, \ldots$, $D = \bigcup_{n=1}^{\infty} B_n$ and $E = \bigcap_{n=1}^{\infty} C_n$. Show that:

(i) $D$ is the event that all but a finite number of $A_n$'s occur and $E$ is the event that infinitely many $A_n$'s occur;

(ii) $D \subseteq E$;

(iii) $P(E^c) = \lim_{n \to \infty} P(C_n^c) = \lim_{n \to \infty} \lim_{m \to \infty} P\big(\bigcap_{k=n}^{m} A_k^c\big)$ and $P(E) = \lim_{n \to \infty} P(C_n)$;

(iv) if $\sum_{n=1}^{\infty} P(A_n) < \infty$ then, with probability one, only finitely many $A_n$'s will occur;

(v) if $A_1, A_2, \ldots$ are independent and $\sum_{n=1}^{\infty} P(A_n) = \infty$ then, with probability one, infinitely many $A_n$'s will occur.


20. Let $A$, $B$ and $C$ be three events such that $A$ and $B$ are negatively (positively) associated and $B$ and $C$ are negatively (positively) associated. Can we conclude that, in general, $A$ and $C$ are negatively (positively) associated?

21. Let $(\Omega, \mathcal{F}, P)$ be a probability space and let $A$ and $B$ be two events (i.e., $A, B \in \mathcal{F}$). Show that if $A$ and $B$ are positively (negatively) associated then $A$ and $B^c$ are negatively (positively) associated.

22. A locality has $n$ houses numbered $1, \ldots, n$ and a terrorist is hiding in one of these houses. Let $H_j$ denote the event that the terrorist is hiding in the house numbered $j$, $j = 1, \ldots, n$, and let $P(H_j) = p_j \in (0, 1)$, $j = 1, \ldots, n$. During a search operation, let $F_j$ denote the event that the search of house number $j$ will fail to nab the terrorist there and let $P(F_j \mid H_j) = r_j \in (0, 1)$, $j = 1, \ldots, n$. For each $i, j \in \{1, \ldots, n\}$, $i \ne j$, show that $H_j$ and $F_j$ are negatively associated but $H_i$ and $F_j$ are positively associated. Interpret these findings.

23. Let $A$, $B$ and $C$ be three events such that $P(B \cap C) > 0$. Prove or disprove each of the following:

(i) $P(A \cap B \mid C) = P(A \mid B \cap C) P(B \mid C)$;

(ii) $P(A \cap B \mid C) = P(A \mid C) P(B \mid C)$ if $A$ and $B$ are independent events.

24. An $r$-out-of-$n$ system is a system comprising $n$ components that functions if, and only if, at least $r$ $(r \in \{1, 2, \ldots, n\})$ of the components function. A 1-out-of-$n$ system is called a parallel system and an $n$-out-of-$n$ system is called a series system. Consider $n$ components $C_1, \ldots, C_n$ that function independently. At any given time $t$ the probability that the component $C_i$ will be functioning is $p_i(t)$ $(\in (0, 1))$ and the probability that it will not be functioning at time $t$ is $1 - p_i(t)$, $i = 1, \ldots, n$.

(i) Find the probability that a parallel system comprising components $C_1, \ldots, C_n$ will function at time $t$;

(ii) Find the probability that a series system comprising components $C_1, \ldots, C_n$ will function at time $t$;

(iii) If $p_i(t) = p(t)$, $i = 1, \ldots, n$, find the probability that an $r$-out-of-$n$ system comprising components $C_1, \ldots, C_n$ will function at time $t$.