Mini-batch gradient descent (deeplearning.ai) · Batch vs. mini-batch gradient descent · Vectorization...

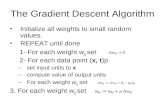

Optimization Algorithms

Mini-batch gradient descent

deeplearning.ai

Andrew Ng

Batch vs. mini-batch gradient descent

Vectorization allows you to efficiently compute on m examples.
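A minimal sketch of how a training set might be split into mini-batches, so that each gradient step processes only part of the m examples. The function name `make_mini_batches`, the `seed` argument, and the batch size of 4 are illustrative assumptions, not taken from the slides.

```python
import random

def make_mini_batches(examples, batch_size, seed=0):
    """Shuffle the examples, then cut them into consecutive mini-batches.

    The last mini-batch may be smaller when batch_size does not divide m.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    return [shuffled[i:i + batch_size]
            for i in range(0, len(shuffled), batch_size)]

# m = 10 examples with batch_size = 4 gives mini-batches of sizes 4, 4, 2.
batches = make_mini_batches(range(10), batch_size=4)
```

With `batch_size` equal to m this reduces to batch gradient descent, and with `batch_size` equal to 1 it reduces to stochastic gradient descent.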

Mini-batch gradient descent

Optimization Algorithms

Understanding mini-batch gradient descent

Training with mini batch gradient descent

[Figure: two cost curves. Batch gradient descent: cost vs. # iterations. Mini-batch gradient descent: cost vs. mini-batch # (t).]

Choosing your mini-batch size

Optimization Algorithms

Understanding exponentially weighted averages

Exponentially weighted averages

[Figure: temperature vs. days]

$$v_t = \beta v_{t-1} + (1 - \beta)\,\theta_t$$

Exponentially weighted averages

$$v_{100} = 0.9\,v_{99} + 0.1\,\theta_{100}$$
$$v_{99} = 0.9\,v_{98} + 0.1\,\theta_{99}$$
$$v_{98} = 0.9\,v_{97} + 0.1\,\theta_{98}$$

…

$$v_t = \beta v_{t-1} + (1 - \beta)\,\theta_t$$

Implementing exponentially weighted averages

$$v_0 = 0$$
$$v_1 = \beta v_0 + (1 - \beta)\,\theta_1$$
$$v_2 = \beta v_1 + (1 - \beta)\,\theta_2$$
$$v_3 = \beta v_2 + (1 - \beta)\,\theta_3$$

…
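The recurrence above can be sketched as a single running variable that is overwritten at each step. The function name and the sample temperatures are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def exponentially_weighted_average(thetas, beta=0.9):
    """Return v_1, v_2, ... where v_t = beta * v_{t-1} + (1 - beta) * theta_t."""
    v = 0.0  # v_0 = 0
    averages = []
    for theta in thetas:
        v = beta * v + (1 - beta) * theta  # one line of work and O(1) memory per step
        averages.append(v)
    return averages

# Hypothetical readings: a constant temperature of 10.
temps = [10.0, 10.0, 10.0]
ewa = exponentially_weighted_average(temps, beta=0.9)
# ewa is approximately [1.0, 1.9, 2.71]
```

Because v starts at 0, the early values sit well below the true temperature of 10; this is the start-up bias that bias correction addresses.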

Optimization Algorithms

Bias correction in exponentially weighted averages

Bias correction

[Figure: temperature vs. days]

$$v_t = \beta v_{t-1} + (1 - \beta)\,\theta_t$$
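The correction formula itself did not survive in this transcript. The sketch below assumes the commonly used form, dividing $v_t$ by $(1 - \beta^t)$; the function name and sample data are hypothetical.

```python
def bias_corrected_average(thetas, beta=0.9):
    """Exponentially weighted average with the start-up bias divided out.

    Assumes the usual correction v_t / (1 - beta**t), with t counted from 1.
    """
    v = 0.0
    corrected = []
    for t, theta in enumerate(thetas, start=1):
        v = beta * v + (1 - beta) * theta
        corrected.append(v / (1 - beta ** t))
    return corrected

# For a constant signal the corrected estimate recovers the constant from step 1.
corrected = bias_corrected_average([10.0, 10.0, 10.0], beta=0.9)
```

As t grows, $\beta^t$ approaches 0 and the correction factor approaches 1, so the correction matters mainly during the first steps.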

Optimization Algorithms

Gradient descent with momentum

Gradient descent example

Implementation details

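On iteration $t$:

Compute $dW$, $db$ on the current mini-batch

$$v_{dW} = \beta v_{dW} + (1 - \beta)\,dW$$
$$v_{db} = \beta v_{db} + (1 - \beta)\,db$$
$$W = W - \alpha v_{dW}, \quad b = b - \alpha v_{db}$$

Hyperparameters: $\alpha$, $\beta$

$\beta = 0.9$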
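A minimal sketch of the update above, using scalars in place of weight matrices to keep it short. The toy cost $J(W, b) = W^2 + b^2$, the starting point, the step count, and $\alpha = 0.1$ are assumptions made only for this illustration.

```python
def momentum_step(W, b, dW, db, v_dW, v_db, alpha=0.1, beta=0.9):
    """One gradient-descent-with-momentum update for scalar W and b."""
    v_dW = beta * v_dW + (1 - beta) * dW
    v_db = beta * v_db + (1 - beta) * db
    W = W - alpha * v_dW
    b = b - alpha * v_db
    return W, b, v_dW, v_db

# Toy cost J(W, b) = W**2 + b**2, so dW = 2*W and db = 2*b.
W, b = 1.0, -1.0
v_dW, v_db = 0.0, 0.0  # velocities start at zero
for _ in range(300):
    dW, db = 2 * W, 2 * b
    W, b, v_dW, v_db = momentum_step(W, b, dW, db, v_dW, v_db)
```

In a real network, `dW` and `db` would come from backpropagation on the current mini-batch rather than from a closed-form gradient.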

Optimization Algorithms

RMSprop

RMSprop
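The body of this slide is missing from the transcript. As a hedged sketch, the commonly described RMSprop update keeps an exponentially weighted average of the squared gradient and divides the step by its square root. The names `s`, `beta2`, and `eps`, and all default values, are assumptions rather than content from the slide.

```python
import math

def rmsprop_step(W, dW, s, alpha=0.01, beta2=0.9, eps=1e-8):
    """One RMSprop update for a scalar parameter W (illustrative form)."""
    s = beta2 * s + (1 - beta2) * dW ** 2      # running average of dW squared
    W = W - alpha * dW / (math.sqrt(s) + eps)  # eps guards against division by zero
    return W, s

# One step from W = 1 with gradient 2 and no gradient history.
W, s = rmsprop_step(W=1.0, dW=2.0, s=0.0)
```

Directions with large recent gradients get a larger divisor and therefore a smaller step, which damps oscillation along steep directions.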

Optimization Algorithms

Adam optimization algorithm

Adam optimization algorithm
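The update equations for this slide are not preserved in the transcript. The sketch below assumes the commonly described form of Adam, which combines a momentum-style average of the gradient, an RMSprop-style average of the squared gradient, and bias correction of both. The function name and the defaults (0.001, 0.9, 0.999, 1e-8) are assumptions based on commonly cited values.

```python
import math

def adam_step(W, dW, v, s, t, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter W; t is the step count from 1."""
    v = beta1 * v + (1 - beta1) * dW       # momentum-style first moment
    s = beta2 * s + (1 - beta2) * dW ** 2  # RMSprop-style second moment
    v_hat = v / (1 - beta1 ** t)           # bias-corrected first moment
    s_hat = s / (1 - beta2 ** t)           # bias-corrected second moment
    W = W - alpha * v_hat / (math.sqrt(s_hat) + eps)
    return W, v, s

# First step from W = 1 with gradient 2: the parameter moves by roughly alpha.
W, v, s = adam_step(W=1.0, dW=2.0, v=0.0, s=0.0, t=1)
```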

yhat = np.array([.9, 0.2, 0.1, .4, .9])

Hyperparameters choice:

Adam Coates

Optimization Algorithms

Learning rate decay

Learning rate decay
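The slide's formula is missing from the transcript. One widely used schedule, assumed here, shrinks the learning rate once per epoch as $\alpha = \alpha_0 / (1 + \text{decay rate} \times \text{epoch number})$. The function name and the example values are hypothetical.

```python
def decayed_learning_rate(alpha0, decay_rate, epoch_num):
    """Inverse-time decay: alpha0 / (1 + decay_rate * epoch_num)."""
    return alpha0 / (1 + decay_rate * epoch_num)

# With alpha0 = 0.2 and decay_rate = 1, epochs 1 to 3 give about 0.1, 0.067, 0.05.
rates = [decayed_learning_rate(0.2, 1.0, epoch) for epoch in range(1, 4)]
```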

Other learning rate decay methods

Optimization Algorithms

The problem of local optima

Local optima in neural networks

Problem of plateaus

• Unlikely to get stuck in a bad local optimum
• Plateaus can make learning slow