Leveraging Dynamic Query Subtopics for Time-aware Search Result Diversification


Tu Ngoc Nguyen and Nattiya Kanhabua

1

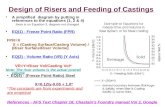

Outline

I. Subtopic Mining

A. From Query Logs

B. From Document Collection

II. Time-aware Diversifying Models

III. Experiment

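System Pipeline

Figure (step 1.a, subtopic mining from the query log): temporally ambiguous, multi-faceted queries → subtopic mining from the query log and the document collection → dynamic subtopics at time t → time-aware diversification → ranked documents d1, d2, …, dn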

Subtopic Mining Approach: Query Log

Figure: related queries q1, q2, …, qn are obtained from a co-click bipartite graph at querying time t and clustered into subtopics; the same steps are shown for querying time t + 1.
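As a rough illustration of the co-click step, the sketch below reads related queries off a query-URL bipartite graph restricted to one querying-time window. It assumes a click log of (query, clicked URL, timestamp) records; the record layout, the window boundaries and the `min_shared` threshold are hypothetical choices for illustration, not the authors' settings.

```python
from collections import defaultdict
from datetime import datetime


def related_queries(click_log, query, start, end, min_shared=1):
    """Return queries sharing clicked URLs with `query` in [start, end).

    click_log: iterable of (query, url, timestamp) tuples (assumed layout).
    Result maps each related query to the number of shared clicked URLs.
    """
    # Build the query-URL bipartite graph for this time window only.
    urls_by_query = defaultdict(set)
    for q, url, ts in click_log:
        if start <= ts < end:
            urls_by_query[q].add(url)
    target_urls = urls_by_query.get(query, set())
    related = {}
    for q, urls in urls_by_query.items():
        if q == query:
            continue
        shared = len(urls & target_urls)
        if shared >= min_shared:
            related[q] = shared
    return related


# Toy click log (hypothetical records).
log = [
    ("ncaa", "ncaa.com", datetime(2006, 3, 14)),
    ("march madness", "ncaa.com", datetime(2006, 3, 15)),
    ("ncaa", "espn.com", datetime(2006, 3, 20)),
    ("ncaa women", "espn.com", datetime(2006, 3, 20)),
    ("final four", "espn.com", datetime(2006, 4, 2)),
]
# Querying time t: 14 to 31 March 2006 (an assumed window).
print(related_queries(log, "ncaa", datetime(2006, 3, 14), datetime(2006, 4, 1)))
# → {'march madness': 1, 'ncaa women': 1}
```

Moving the window forward (querying time t + 1) changes which queries are related, which is exactly the subtopic dynamics the slides illustrate.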

Subtopic Dynamics: Query Log

Figure (query: ncaa): timeline of related events: "march madness began" (14/03/2006), "ncaa women tournament began" (18/03/2006), "final four began" (01/04/2006).

System Pipeline

Figure: the system pipeline, labelled step 1.b (subtopic mining from the document collection).

Subtopic Mining: Document Collection

– Probabilistic subtopic modeling: Latent Dirichlet Allocation (LDA)

Figure (query: apple)
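The slides name LDA but not how it is trained. One common inference choice is collapsed Gibbs sampling, sketched below in pure Python as an illustration only; the hyperparameters `alpha`, `beta` and the iteration count are assumptions, and a real pipeline would more likely call an existing LDA library.

```python
import random
from collections import defaultdict


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of token lists. Returns (theta, phi): per-document topic
    distributions (lists) and per-topic word distributions (word -> prob).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    V = len(vocab)
    n_dk = [[0] * num_topics for _ in docs]                # doc-topic counts
    n_kw = [defaultdict(int) for _ in range(num_topics)]   # topic-word counts
    n_k = [0] * num_topics                                 # tokens per topic
    z = []                                                 # topic of each token
    for d, doc in enumerate(docs):
        zd = []
        for w in doc:
            k = rng.randrange(num_topics)  # random initial assignment
            zd.append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
        z.append(zd)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # Full conditional p(z = j | all other assignments), unnormalized.
                weights = [(n_dk[d][j] + alpha) * (n_kw[j][w] + beta) / (n_k[j] + V * beta)
                           for j in range(num_topics)]
                k = rng.choices(range(num_topics), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1
    theta = [[(n_dk[d][k] + alpha) / (len(doc) + num_topics * alpha)
              for k in range(num_topics)] for d, doc in enumerate(docs)]
    phi = [{w: (n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in vocab}
           for k in range(num_topics)]
    return theta, phi


docs = [["apple", "iphone", "mac"], ["apple", "pie", "fruit"],
        ["iphone", "mac", "store"], ["fruit", "jam", "pie"]]
theta, phi = lda_gibbs(docs, num_topics=2)
for k, topic in enumerate(phi):
    # Each subtopic is summarized by its most probable words.
    print(k, sorted(topic, key=topic.get, reverse=True)[:3])
```

Run per time slice, the top words of each topic give a readable label for a candidate subtopic of the query.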

System Pipeline

Figure: the system pipeline, labelled step 2 (time-aware diversification).

IA-Select Model [Vallet and Castells. 2012]

- Probabilistic model:

- Pr(c|q): weight of subtopic c for query q

- Pr(q|d), Pr(d|q): relation between document and query

- Pr(c|d), Pr(d|c): relation between document and subtopic

- IA-Select objective function, combining document relevance and novelty:
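For reference, the standard IA-Select objective of Agrawal et al. (2009) can be written with the probabilities listed above as below. This is a hedged reconstruction of the textbook form, not necessarily the exact formula on the slide. At each greedy step, with S the set of documents already selected, the document d that maximizes

```latex
f_{\mathrm{IA}}(d \mid q, S) = \sum_{c} \Pr(c \mid q)\, \Pr(d \mid c) \prod_{d' \in S} \bigl(1 - \Pr(d' \mid c)\bigr)
```

is appended to S. Here Pr(d|c) acts as the document relevance term and the product over S as the novelty term: a subtopic already well covered by S contributes little to the next pick.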

Search Result Diversification Models

• MMR [Carbonell and Goldstein. 1998]

- diversifies based on the similarity of document contents

• IA-Select [Agrawal et al. 2009]

- diversifies based on a taxonomy of subtopic categories

• xQuaD [Santos et al. 2010]

- general form of IA-Select

- defines the objective function as a mixture of relevance and diversity probabilities

• Topic richness [Dou et al. 2011]

- general form of the xQuaD and IA-Select models

- accepts subtopics from multiple sources
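MMR's content-based diversification can be sketched as a greedy loop that trades relevance to the query against the maximum similarity to documents already selected. The sketch below assumes bag-of-words cosine similarity for both terms and λ = 0.5; both are illustrative choices, not settings from the slides.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def mmr(query, docs, k, lam=0.5):
    """Greedy Maximal Marginal Relevance re-ranking.

    query: list of terms; docs: dict doc_id -> list of terms.
    Returns up to k doc ids in selection order.
    """
    q = Counter(query)
    vecs = {d: Counter(terms) for d, terms in docs.items()}
    selected, candidates = [], set(vecs)
    while candidates and len(selected) < k:
        def score(d):
            # Redundancy = similarity to the closest already-selected document.
            redundancy = max((cosine(vecs[d], vecs[s]) for s in selected), default=0.0)
            return lam * cosine(vecs[d], q) - (1 - lam) * redundancy
        best = max(sorted(candidates), key=score)  # sorted() makes ties deterministic
        selected.append(best)
        candidates.remove(best)
    return selected


docs = {"d1": ["apple", "iphone", "release"],
        "d2": ["apple", "iphone", "price"],
        "d3": ["apple", "pie", "recipe"]}
print(mmr(["apple"], docs, 2))  # → ['d1', 'd3']
```

With `lam=1.0` the redundancy term vanishes and the same call returns `['d1', 'd2']`, i.e. a pure relevance ranking.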

xQuaD Model [Vallet and Castells. 2012]

- Probabilistic model:

- Pr(c|q): weight of subtopic c for query q

- Pr(q|d), Pr(d|q): relation between document and query

- Pr(c|d), Pr(d|c): relation between document and subtopic

- xQuaD objective function, combining document-query relevance, document-topic relevance and novelty:
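The greedy procedure behind xQuaD can be sketched as below, assuming the probabilities are supplied as plain dicts (a hypothetical input format) and λ = 0.5 as an arbitrary default. Each step picks the d maximizing (1 − λ)·Pr(d|q) + λ·Σ_c Pr(c|q)·Pr(d|c)·Π_{s∈S}(1 − Pr(s|c)); setting `lam=1` drops the document-query term and leaves an IA-Select-style objective.

```python
def xquad(candidates, p_d_q, p_c_q, p_d_c, k, lam=0.5):
    """Greedy xQuaD re-ranking.

    candidates: doc ids (e.g. a baseline top-n); p_d_q[d] = Pr(d|q);
    p_c_q[c] = Pr(c|q); p_d_c[c][d] = Pr(d|c) (missing entries count as 0).
    """
    selected = []
    # novelty[c] = product over selected s of (1 - Pr(s|c)); starts at 1.
    novelty = {c: 1.0 for c in p_c_q}
    remaining = list(candidates)
    while remaining and len(selected) < k:
        def score(d):
            diversity = sum(p_c_q[c] * p_d_c[c].get(d, 0.0) * novelty[c] for c in p_c_q)
            return (1 - lam) * p_d_q.get(d, 0.0) + lam * diversity
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
        # Subtopics covered by `best` become less attractive for later picks.
        for c in novelty:
            novelty[c] *= 1.0 - p_d_c[c].get(best, 0.0)
    return selected


cands = ["d1", "d2", "d3"]
p_d_q = {"d1": 0.5, "d2": 0.3, "d3": 0.2}
p_c_q = {"company": 0.7, "fruit": 0.3}
p_d_c = {"company": {"d1": 0.9, "d2": 0.8}, "fruit": {"d2": 0.1, "d3": 0.9}}
print(xquad(cands, p_d_q, p_c_q, p_d_c, k=2))  # → ['d1', 'd3']
```

With `lam=0.0` only Pr(d|q) counts and the result is `['d1', 'd2']`; the diversity term is what pulls the "fruit" document d3 into the top two.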

Temporal Diversifying Models

• Temp-IASelect

- IA-Select objective function:

- Temp-IASelect objective function:

• Temp-xQuaD

- xQuaD objective function:

- Temp-xQuaD objective function:

• Temp-topic richness

- generalization of Temp-xQuaD and Temp-IASelect

Experiment Settings

• TREC Blogs08 Collection

- crawled from Jan 2008 to Feb 2009

- HTML tags cleaned using the HtmlCleaner and Boilerpipe libraries

- indexed using Lucene Core

- document publication date extracted from:

- blog content

- URL

- retrieval date
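A publication-date extractor over the three sources listed above could look like the sketch below. The order of precedence (content, then URL, then retrieval date) and both regular expressions are assumptions for illustration; the slides do not say how the sources are combined.

```python
import re
from datetime import date

# Assumed patterns: an ISO-style date in the text, /YYYY/MM/ or /YYYY/MM/DD/ in the URL.
CONTENT_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")
URL_DATE = re.compile(r"/(\d{4})/(\d{2})(?:/(\d{2}))?/")


def _safe_date(y, m, d):
    """Build a date, returning None for impossible values such as month 13."""
    try:
        return date(int(y), int(m), int(d))
    except ValueError:
        return None


def publication_date(content, url, retrieval_date):
    """First valid date in the content, else in the URL, else the retrieval date."""
    for match in CONTENT_DATE.finditer(content):
        found = _safe_date(*match.groups())
        if found:
            return found
    match = URL_DATE.search(url)
    if match:
        # A URL with only year and month is mapped to the first day of the month.
        found = _safe_date(match.group(1), match.group(2), match.group(3) or 1)
        if found:
            return found
    return retrieval_date


print(publication_date("no date here", "http://example.com/2007/11/apple-quince-jam/",
                       date(2008, 3, 1)))  # → 2007-11-01
```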

• Retrieval baseline:

- Okapi BM25

• Relevance assessments:

- human assessment

- binary relevance judgments, following the TREC Web Track diversity task (2009 and 2011)

- two-dimensional assessment: relevance and time

- topics mined from the query log are excluded, because of the time gap between the AOL query log and Blogs08

- each topic is represented by its 10 most probable words

• Querying-time points, based on:

- how popular the query is at a particular time t

- how different time t is from the previous time slice t-1
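The BM25 baseline can be illustrated with the common Okapi BM25 formula. The sketch assumes k1 = 1.2 and b = 0.75 (Lucene's defaults; the slides give no parameters) and the non-negative IDF log(1 + (N − n + 0.5)/(n + 0.5)) used by Lucene's BM25Similarity. It is a plain re-implementation for illustration, not Lucene's scoring code.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of every document for a bag-of-words query.

    docs: dict doc_id -> list of terms. Returns dict doc_id -> score.
    """
    N = len(docs)
    avgdl = sum(len(t) for t in docs.values()) / N
    df = Counter(term for terms in docs.values() for term in set(terms))
    scores = {}
    for d, terms in docs.items():
        tf = Counter(terms)
        s = 0.0
        for term in set(query):
            if term not in tf:
                continue
            idf = math.log(1 + (N - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) with document-length normalization (b).
            s += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * (1 - b + b * len(terms) / avgdl))
        scores[d] = s
    return scores


docs = {"d1": ["ncaa", "basketball", "tournament"],
        "d2": ["apple", "jam"],
        "d3": ["ncaa", "rules"]}
scores = bm25_scores(["ncaa", "tournament"], docs)
print(sorted(scores, key=scores.get, reverse=True))  # → ['d1', 'd3', 'd2']
```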

Relevance assessments

| Document | Publication date | Subtopic | Hitting time | Relevance |
|---|---|---|---|---|
| Title: It’s The Most Wonderful Time Of The Year. Content: The greatest sports week of the year is upon us, and Chris’s Sports Blog is ready. Check back daily for coverage of the ACC and NCAA Tournament, tips on how to fill out your bracket … | 2008-03-17 | ncaa basketball tournament | 2008-03 | 1 |
| Title: Is there a bigger joke than the NCAA? Content: Each year the NCAA discovers a new way to make a bigger ass of itself than the previous one. This years specialty is to bar from NCAA playoffs in every sport any school who persists in using "unacceptable" team names and school mascots, by their exclusive definition… | 2005-08-22 | ncaa basketball tournament | 2008-03 | 0 |
| Title: Apple Quince Jam. Content: The apple quince is a fruit that ripens in the period from October to November, it is an apple with a strange shape: it looks like a pear and apple, and it is lumpy… | 2007-11-15 | apple jam | 2008-03 | 1 |

Evaluation Metrics

• α-NDCG

- extends nDCG to reward diversity and novelty

• Intent-Aware Precision (Precision-IA)

- intent-aware version of Precision

- treats each subtopic as a distinct interpretation of the query

• Intent-Aware Expected Reciprocal Rank (ERR-IA)

- based on the cascade model of search
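The three metrics can be sketched for binary subtopic judgments as below. Assumptions: α = 0.5, the ideal ranking for α-nDCG is built greedily (the usual approximation, since the exact ideal is NP-hard to compute), and a binary judgment maps to an ERR stop probability of 0.5, i.e. (2^g − 1)/2^g_max with g = g_max = 1.

```python
import math


def alpha_dcg(ranking, judgments, k, alpha=0.5):
    """alpha-DCG@k; judgments[d] is the set of subtopics d is relevant to."""
    seen, total = {}, 0.0  # seen[c] = relevant docs for c ranked so far
    for r, d in enumerate(ranking[:k], start=1):
        gain = sum((1 - alpha) ** seen.get(c, 0) for c in judgments.get(d, ()))
        total += gain / math.log2(r + 1)
        for c in judgments.get(d, ()):
            seen[c] = seen.get(c, 0) + 1
    return total


def alpha_ndcg(ranking, judgments, k, alpha=0.5):
    """alpha-nDCG@k, normalized by a greedily built ideal ranking."""
    pool, ideal, seen = set(judgments), [], {}
    while pool and len(ideal) < k:
        best = max(sorted(pool), key=lambda d: sum((1 - alpha) ** seen.get(c, 0)
                                                   for c in judgments[d]))
        ideal.append(best)
        pool.remove(best)
        for c in judgments[best]:
            seen[c] = seen.get(c, 0) + 1
    ideal_dcg = alpha_dcg(ideal, judgments, k, alpha)
    return alpha_dcg(ranking, judgments, k, alpha) / ideal_dcg if ideal_dcg else 0.0


def precision_ia(ranking, judgments, p_c_q, k):
    """Precision-IA@k = sum_c Pr(c|q) * (#docs relevant to c in the top k) / k."""
    return sum(w * sum(c in judgments.get(d, ()) for d in ranking[:k]) / k
               for c, w in p_c_q.items())


def err_ia(ranking, judgments, p_c_q, k):
    """ERR-IA@k: Pr(c|q)-weighted ERR under the cascade model, binary grades."""
    total = 0.0
    for c, w in p_c_q.items():
        err, p_continue = 0.0, 1.0
        for r, d in enumerate(ranking[:k], start=1):
            stop = 0.5 if c in judgments.get(d, ()) else 0.0
            err += p_continue * stop / r
            p_continue *= 1 - stop  # the user continues only if not satisfied
        total += w * err
    return total


judgments = {"d1": {"a"}, "d2": {"a"}, "d3": {"b"}}
p_c_q = {"a": 0.5, "b": 0.5}
print(err_ia(["d1", "d3", "d2"], judgments, p_c_q, 3))  # 5/12, about 0.4167
```

Ranking the subtopic-b document second instead of third raises both α-nDCG and ERR-IA, which is the behavior a diversity metric should have.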

Conclusion

• studied temporally ambiguous and multi-faceted queries

- subtopic temporal variability

- subtopic mining from two different sources (query logs, document collection)

• proposed time-aware search result diversification frameworks

Future work

• Model and predict subtopic change

• Combine diversification by subtopics and by time in a unified framework

Settings

• Estimate the natural number of subtopics

– Suresh et al. 2010 view LDA as a matrix factorization mechanism:

– $C_{d \times w} = M1_{d \times t} \times M2_{t \times w}$

• d: number of documents in the corpus

• w: size of the vocabulary

• t: number of topics

– the optimum t is the one with the minimal divergence value:

• $\mathrm{Div}(t) = KL(C_{M1} \,\|\, C_{M2}) + KL(C_{M2} \,\|\, C_{M1})$

• $C_{M2}$ is the distribution of singular values of the topic-word matrix $M2$

• $C_{M1}$ is obtained by normalizing the vector $L \cdot M1$, where $L$ is the $1 \times d$ vector of the lengths of the documents in $C$
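The divergence criterion can be sketched in pure Python for the dimension-consistent reading of the factorization: singular values of the t × w topic-word matrix M2, compared with the normalized vector L · M1 (L of length d, M1 of size d × t). Singular values are taken as square roots of the eigenvalues of M2·M2ᵀ, computed with cyclic Jacobi rotations. Sorting both vectors in decreasing order and the tiny `eps` smoothing are assumptions of this sketch. In practice LDA would be trained for each candidate t and the t with the smallest value kept.

```python
import math


def jacobi_eigenvalues(a, sweeps=100, tol=1e-12):
    """Eigenvalues of a small symmetric matrix via cyclic Jacobi rotations."""
    a = [row[:] for row in a]
    n = len(a)
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A P (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]


def normalise(v):
    total = sum(v)
    return [x / total for x in v]


def kl(p, q, eps=1e-12):
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def arun_divergence(m1, m2, doc_lengths):
    """m1: d x t document-topic matrix, m2: t x w topic-word matrix."""
    gram = [[sum(x * y for x, y in zip(ri, rj)) for rj in m2] for ri in m2]  # M2 M2^T
    sv = sorted((math.sqrt(max(ev, 0.0)) for ev in jacobi_eigenvalues(gram)), reverse=True)
    lm1 = sorted((sum(l * row[k] for l, row in zip(doc_lengths, m1))
                  for k in range(len(m2))), reverse=True)
    c_m2, c_m1 = normalise(sv), normalise(lm1)
    return kl(c_m1, c_m2) + kl(c_m2, c_m1)  # symmetric KL divergence


m1 = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]       # 3 documents, 2 topics
m2 = [[0.5, 0.4, 0.1], [0.1, 0.2, 0.7]]         # 2 topics, 3 words
print(arun_divergence(m1, m2, [10, 20, 5]))
```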

Motivation

• New documents appear all the time

• Document content changes over time

• Queries and query volumes change over time

• Example: [Kulkarni et al. 2011]

Figure (queries: march madness, ncaa)

Cluster Subtopic Candidates

• Clustering approach [Song et al. 2011]:

– step 1: construct a similarity matrix of the related queries

– step 2: cluster them using the Affinity Propagation algorithm

– step 3: extract the set of exemplars as subtopics of the query

• Similarity metrics:

– lexical similarity:

• keywords and cosine similarity

– co-click similarity:

• based on the fraction of common clicks

– semantic similarity:

• WordNet as an external knowledge base
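The three clustering steps can be sketched in pure Python. The sketch assumes co-click similarity is the Jaccard overlap of clicked-URL sets (the slide only says "fraction of common clicks") and uses the standard affinity propagation responsibility/availability updates with damping, a fixed number of iterations and the median similarity as preference; all of these are illustrative choices rather than the settings of Song et al.

```python
def jaccard(clicks_a, clicks_b):
    """Co-click similarity, assumed here to be |A & B| / |A | B| over clicked URLs."""
    union = clicks_a | clicks_b
    return len(clicks_a & clicks_b) / len(union) if union else 0.0


def affinity_propagation(sim, preference=None, damping=0.5, iters=200):
    """Cluster items from a square similarity matrix; returns (exemplars, labels)."""
    n = len(sim)
    s = [list(map(float, row)) for row in sim]
    if preference is None:
        # Median of the off-diagonal similarities (upper median for even counts).
        off = sorted(s[i][k] for i in range(n) for k in range(n) if i != k)
        preference = off[len(off) // 2]
    for i in range(n):
        s[i][i] = preference
    r = [[0.0] * n for _ in range(n)]  # responsibilities r(i, k)
    a = [[0.0] * n for _ in range(n)]  # availabilities a(i, k)
    for _ in range(iters):
        for i in range(n):
            vals = [a[i][k] + s[i][k] for k in range(n)]
            for k in range(n):
                best_other = max(vals[j] for j in range(n) if j != k)
                r[i][k] = damping * r[i][k] + (1 - damping) * (s[i][k] - best_other)
        for k in range(n):
            pos = [max(0.0, r[i][k]) for i in range(n)]
            total = sum(pos) - pos[k]  # positive support from every i != k
            for i in range(n):
                new = total if i == k else min(0.0, r[k][k] + total - pos[i])
                a[i][k] = damping * a[i][k] + (1 - damping) * new
    exemplars = [k for k in range(n) if a[k][k] + r[k][k] > 0]
    # Exemplars label themselves; other items join their most similar exemplar.
    labels = [i if i in exemplars else (max(exemplars, key=lambda k: s[i][k]) if exemplars else i)
              for i in range(n)]
    return exemplars, labels


clicks = {  # hypothetical clicked-URL sets per related query
    "march madness": {"ncaa.example", "brackets.example"},
    "march madness brackets": {"brackets.example"},
    "ncaa tournament": {"ncaa.example", "brackets.example"},
    "final four": {"finalfour.example"},
    "final four tickets": {"finalfour.example", "tickets.example"},
}
names = list(clicks)
sim = [[jaccard(clicks[x], clicks[y]) for y in names] for x in names]
exemplars, labels = affinity_propagation(sim)
print([names[k] for k in exemplars])  # exemplar queries = candidate subtopics
```

Affinity propagation fits this step because it chooses the number of clusters itself and returns actual queries (exemplars) as cluster representatives.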

Topic Similarity Metrics [Kim and Oh. 2011]

• Vector-based:

– Cosine Similarity

• Bag of words-based:

– Jaccard Coefficient

• Ranked list of words-based:

– Kendall τ Coefficient

• Multinomial distribution-based:

– Kullback-Leibler Divergence

– Jensen-Shannon Divergence
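The four families of metrics can be sketched for topics stored as word → probability dicts. Assumptions: Jaccard and Kendall τ operate on each topic's top-n words (n = 10, matching the "top 10 most probable words" setting), Kendall τ is computed over the words the two lists share, and KL uses a tiny `eps` for words missing from one topic. Kim and Oh may use different variants.

```python
import math


def cosine(p, q):
    words = set(p) | set(q)
    dot = sum(p.get(w, 0.0) * q.get(w, 0.0) for w in words)
    norm = math.sqrt(sum(v * v for v in p.values())) * math.sqrt(sum(v * v for v in q.values()))
    return dot / norm if norm else 0.0


def top_words(p, n=10):
    """Top-n words by probability, ties broken alphabetically."""
    return [w for w, _ in sorted(p.items(), key=lambda kv: (-kv[1], kv[0]))[:n]]


def jaccard(p, q, n=10):
    a, b = set(top_words(p, n)), set(top_words(q, n))
    return len(a & b) / len(a | b) if a | b else 0.0


def kendall_tau(p, q, n=10):
    """Kendall tau over the words shared by both top-n lists, compared by rank."""
    rp = {w: i for i, w in enumerate(top_words(p, n))}
    rq = {w: i for i, w in enumerate(top_words(q, n))}
    common = [w for w in rp if w in rq]
    pairs = [(a, b) for i, a in enumerate(common) for b in common[i + 1:]]
    if not pairs:
        return 0.0
    # +1 for each concordant pair, -1 for each discordant pair.
    score = sum(1 if (rp[a] - rp[b]) * (rq[a] - rq[b]) > 0 else -1 for a, b in pairs)
    return score / len(pairs)


def kl(p, q, eps=1e-10):
    words = set(p) | set(q)
    return sum(p.get(w, 0.0) * math.log((p.get(w, 0.0) + eps) / (q.get(w, 0.0) + eps))
               for w in words if p.get(w, 0.0) > 0)


def js(p, q):
    """Jensen-Shannon divergence: average KL to the mixture m = (p + q) / 2."""
    words = set(p) | set(q)
    m = {w: 0.5 * (p.get(w, 0.0) + q.get(w, 0.0)) for w in words}
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


t1 = {"a": 0.5, "b": 0.3, "c": 0.2}
t2 = {"a": 0.2, "b": 0.3, "c": 0.5}
print(jaccard(t1, t2), kendall_tau(t1, t2))  # → 1.0 -1.0
```

The example shows why several metrics are useful: the two topics share exactly the same words (Jaccard 1.0) yet rank them in opposite order (Kendall τ −1.0), and JS divergence captures the difference in probability mass.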

Subtopic Mining Approach

• Dynamic queries: 57 of the 61 queries from the AOL query log were selected, i.e., queries that are yearly recurrent or time-independent.

• Settings

– partition the collection into 14 time slices of one month each

– the training data for time slice t_i is the top 2000 documents D such that pubDate(d) ∈ t_i for every d ∈ D

– the number of subtopics is preset in the range 5 to 20

• Subtopic weight:

– the weight w(c) is the probability that a given query q implies subtopic c
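The time-slicing setting can be sketched as grouping documents by publication month and keeping the highest-scoring ones per slice. The record layout (doc id, publication date, retrieval score) is hypothetical, and ranking by a retrieval score such as BM25 is an assumption about what "top 2000 documents" means.

```python
from collections import defaultdict
from datetime import date


def month_slices(docs, top_n=2000):
    """Group (doc_id, pub_date, score) records into one-month time slices and
    keep the top_n highest-scoring documents of each slice as training data."""
    slices = defaultdict(list)
    for doc_id, pub, score in docs:
        slices[(pub.year, pub.month)].append((score, doc_id))
    return {
        # Sort by descending score; doc_id breaks ties deterministically.
        month: [doc_id for _, doc_id in sorted(items, key=lambda x: (-x[0], x[1]))[:top_n]]
        for month, items in sorted(slices.items())
    }


docs = [("d1", date(2008, 3, 17), 2.5), ("d2", date(2008, 3, 2), 1.0),
        ("d3", date(2008, 4, 1), 3.0), ("d4", date(2008, 3, 20), 4.0)]
print(month_slices(docs, top_n=2))
# → {(2008, 3): ['d4', 'd1'], (2008, 4): ['d3']}
```

Over Blogs08 (Jan 2008 to Feb 2009) this grouping yields the 14 monthly slices, each of which would then get its own LDA run.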


Subtopic Evaluation

• 61 queries: 51 event-related queries and 10 standard ambiguous queries

– aspects removed, e.g., march madness brackets → march madness

• Subtopic evaluation metrics [Radlinski et al. 2010]:

– coherence

– distinctness

– plausibility

– completeness