[Lecture Notes in Computer Science] Simulated Evolution and Learning Volume 6457 || Learning...


Learning Cellular Automata Rules for Pattern Reconstruction Task

Anna Piwonska1,* and Franciszek Seredynski2,3

1 Bialystok University of Technology, Computer Science Faculty

Wiejska 45A, 15-351 Bialystok, [email protected]

2 Institute of Computer Science, Polish Academy of Sciences

Ordona 21, 01-237 Warsaw, Poland

3 Polish-Japanese Institute of Information Technology

Koszykowa 86, 02-008 Warsaw, [email protected]

Abstract. This paper presents results of experiments concerning the scalability of two-dimensional cellular automata rules in the pattern reconstruction task. The proposed cellular automata based algorithm runs in two phases: the learning phase and the normal operating phase. The learning phase is conducted with the use of a genetic algorithm, and its aim is to discover efficient cellular automata rules. The real quality of the discovered rules is tested in the normal operating phase. Experiments show a very good performance of the discovered rules in solving the reconstruction task.

Keywords: cellular automata, pattern reconstruction task, genetic algorithm, scalability of rules.

1 Introduction

Cellular automata (CAs) are discrete, spatially extended dynamical systems that have been studied as models of many physical and biological processes and as computational devices [8,14]. A CA consists of identical cells arranged in a regular grid, in one or more dimensions. Each cell can take one of a finite number of states and has an identical arrangement of local connections with other cells, called a neighborhood. After the initial states of the cells (an initial configuration of the CA) are determined, the states of the cells are updated synchronously according to a local rule defined on the neighborhood. In the case of two-dimensional CAs, two types of neighborhood are commonly used: von Neumann and Moore [8]. When the grid size is finite, boundary conditions must be defined.

One of the most interesting features of CAs is that, in spite of their simple construction and principle of operation, cells acting together can behave in an intricate and unpredictable way. Although each cell has limited knowledge about the system (only its neighbors' states), local information is propagated at each time step, enabling more global behavior.

* This research was supported by S/WI/2/2008.

K. Deb et al. (Eds.): SEAL 2010, LNCS 6457, pp. 240–249, 2010. © Springer-Verlag Berlin Heidelberg 2010

The main bottleneck of CAs is the difficulty of constructing CA rules that produce a desired behavior. In some applications of CAs one can design an appropriate rule by hand, based on partial differential equations describing a given phenomenon. However, this is not always possible. In the 1990s, Mitchell and colleagues proposed using genetic algorithms (GAs) to discover CA rules able to perform the one-dimensional density classification task [7] and the synchronization task [4]. The results produced by Mitchell et al. were interesting and started the development of the concept of automating rule generation using artificial evolution. Breukelaar and Back [3] applied GAs to solve the density classification problem as well as the AND and XOR problems in two-dimensional CAs. Sapin et al. [11] used evolutionary algorithms to find a universal cellular automaton. Swiecicka et al. [13] used GAs to find CA rules able to solve the multiprocessor scheduling problem. Bandini et al. [1] proposed using several machine learning techniques to automatically find CA rules able to generate patterns similar, in some generic sense, to those generated by a given target rule.

In the literature one can find several examples of CA applications in image processing [6,10], as well as of evolving CA rules with GAs for image processing tasks [12]. Some of them deal with image enhancement, edge detection, noise reduction, image compression, etc. The authors have recently proposed using a GA to discover CA rules able to perform the pattern reconstruction task [9].

In this paper we present the results of subsequent experiments concerning evolving CA rules to perform the pattern reconstruction task. The main aim of these experiments was to analyse the ability of the discovered rules to reconstruct the same patterns on larger grids, that is, the scalability of the rules. The paper is organized as follows. Section 2 presents the pattern reconstruction task in the context of CAs. Section 3 describes the two phases of the algorithm: the learning phase and the normal operating phase. Results of computer experiments are reported in Section 4. The last section contains conclusions and some remarks about future work.

2 Cellular Automata and Pattern Reconstruction Task

We assume that a given pattern is defined on a two-dimensional array (grid of cells) of size n × n. Each element of the array can take one of two possible values: 1 or 2. Let us assume that the values of some fraction q of the grid elements are not known. These are the missing parts of the pattern. Based on such an incomplete pattern, it could be difficult to predict the unknown states unless one is able to see some dependencies between the values of cells. Let us further assume that we have a series of such incomplete patterns, created from one given pattern in a random way. These patterns will be treated as initial configurations of two-dimensional CAs.

The pattern reconstruction task is formulated as follows. We want to find a CA rule that is able to transform an initial, incomplete configuration into a final, complete configuration.


Fig. 1. An example of a complete pattern (on the left) and an incomplete one (on the right). The incomplete pattern has 30 states unknown (q = 0.3).

Let us construct a two-dimensional CA of size n × n in order to describe our pattern. Our CA will be a three-state CA: unknown values of grid elements will be represented by state 0. This means that at each time step every cell of our CA can take a value from the set {0, 1, 2}.

In the context of CAs, our task can be described as follows. Let us assume that we have a finite number of random initial configurations, each of which is an incomplete pattern. We want to find a CA rule that is able to converge to a final configuration identical to the complete pattern, that is, a rule that is able to reconstruct the pattern. We also assume that the complete pattern is not known during the searching process. The only data available during the search is a series of incomplete patterns, randomly created from one given pattern. It is worth mentioning that a problem from the data mining field described in [5], where a heuristic CA rule was proposed, is related to our task.

Fig. 1 presents an example of a pattern of size 10 × 10 (on the left). Grid elements with value 1 are represented by grey cells and elements with value 2 are represented by black cells. On the right side of this figure one can see an example of this pattern with 30 states unknown. These are represented by white cells. The indexes of the unknown elements were generated randomly. Such an incomplete pattern is interpreted as an initial configuration of a CA.

When using CAs in the pattern reconstruction task, we must first define a neighborhood and boundary conditions. In our experiments we assume the von Neumann neighborhood, with three possible cell states (Fig. 2). With this neighborhood there are $3^5 = 243$ possible neighborhood states. Thus, the number of possible rules equals $3^{243}$, which means an enormous search space. In our experiments we assume null boundary conditions: the grid is surrounded by dummy cells that are always in state 0. The interpretation of this assumption is that we do not know the states of these cells; in fact, they are not a part of our pattern.
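To make the neighborhood and the null boundary conditions concrete, here is a minimal Python sketch that reads the five von Neumann neighbors of a cell, treating every position outside the n × n grid as a dummy cell in state 0. The function name `von_neumann_neighborhood` and the (N, W, C, E, S) tuple order are our own choices for illustration, not the authors' code.

```python
from typing import List, Tuple

Grid = List[List[int]]

# 5 neighborhood cells with 3 states each: 3**5 = 243 neighborhood states,
# hence 3**243 possible rules (a 116-digit number).
NUM_NEIGHBORHOODS = 3 ** 5
NUM_RULES = 3 ** NUM_NEIGHBORHOODS


def von_neumann_neighborhood(grid: Grid, i: int, j: int) -> Tuple[int, int, int, int, int]:
    """Return the states (N, W, C, E, S) seen by cell (i, j) of an n x n grid.

    Null boundary conditions: a neighbor outside the grid is a dummy cell
    that is always in state 0 (unknown).
    """
    n = len(grid)

    def state(r: int, c: int) -> int:
        return grid[r][c] if 0 <= r < n and 0 <= c < n else 0

    return (state(i - 1, j), state(i, j - 1), state(i, j),
            state(i, j + 1), state(i + 1, j))
```

For the corner cell (0, 0) of the grid `[[1, 2], [2, 1]]`, the north and west neighbors fall outside the grid, so the sketch returns `(0, 0, 1, 2, 2)`.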

3 The GA for Discovering CAs Rules

The proposed CA-based algorithm runs in two phases: the learning phase and the normal operating phase.


3.1 Learning Phase

The purpose of this phase is to search for suitable CA rules with the use of the GA. The GA starts with a population of P randomly generated CA rules of length 243 (one entry, a state from {0, 1, 2}, per neighborhood state). The five cells of the von Neumann neighborhood are usually described by compass directions: North (N), West (W), Central (C), East (E), South (S). Using this convention, the entry at position 0 in the rule (the top entry in the bar in Fig. 2) denotes the state of the central cell of the neighborhood 00000 in the next time step, the entry at position 1 denotes the state of the central cell of the neighborhood 00001 in the next time step, and so on, in lexicographic order of neighborhoods.

Fig. 2. The neighborhood coding (on the left) and a fragment of the rule, i.e. the chromosome of the GA (on the right, in a bar)
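The indexing convention above can be illustrated with a short Python sketch. It assumes that the neighborhood string is read in the order N, W, C, E, S, with N as the most significant base-3 digit; Fig. 2 defines the actual layout, so this digit order is an assumption. A rule is a sequence of 243 states, and `ca_step` (a hypothetical helper name) performs one synchronous update under null boundary conditions.

```python
from typing import List, Sequence

Grid = List[List[int]]


def neighborhood_index(n: int, w: int, c: int, e: int, s: int) -> int:
    """Position in the rule of the neighborhood string N W C E S.

    Assumption: N is the most significant base-3 digit and S the least,
    so 00000 -> 0, 00001 -> 1, ..., 22222 -> 242 (lexicographic order).
    """
    index = 0
    for digit in (n, w, c, e, s):
        index = index * 3 + digit
    return index


def ca_step(grid: Grid, rule: Sequence[int]) -> Grid:
    """One synchronous update of a three-state CA with a 243-entry rule.

    von Neumann neighborhood, null boundary conditions (outside cells = 0).
    Every new state is computed from the old grid, so the update is synchronous.
    """
    if len(rule) != 243:
        raise ValueError("a rule needs one entry per neighborhood state")
    size = len(grid)

    def state(r: int, c: int) -> int:
        return grid[r][c] if 0 <= r < size and 0 <= c < size else 0

    return [
        [rule[neighborhood_index(state(i - 1, j), state(i, j - 1), state(i, j),
                                 state(i, j + 1), state(i + 1, j))]
         for j in range(size)]
        for i in range(size)
    ]
```

As a sanity check under this convention, the rule `[(k // 9) % 3 for k in range(243)]` copies the central digit of each neighborhood and therefore leaves every configuration unchanged.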

The next step is to evaluate the individuals in the initial population for their ability to perform the pattern reconstruction task. For this purpose, at each generation, starting from the complete pattern, we randomly generate an incomplete pattern with a fraction q of states unknown. This process proceeds as follows. Starting from the complete pattern, in a single step we randomly select a cell that has not been previously chosen, and this cell changes its state to 0 (the unknown state). We repeat these steps until $q \cdot n^2$ cells have been chosen. All these cells will be in state 0 (unknown).
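The masking procedure can be sketched in Python as follows. Drawing all q·n² positions at once with `random.Random.sample` (sampling without replacement) is equivalent to repeatedly picking a not-yet-chosen cell; the function name `make_incomplete` and the rounding of q·n² to an integer are our assumptions.

```python
import random
from typing import List, Optional

Grid = List[List[int]]


def make_incomplete(pattern: Grid, q: float,
                    rng: Optional[random.Random] = None) -> Grid:
    """Return a copy of an n x n pattern with round(q * n^2) random cells set to 0."""
    rng = rng if rng is not None else random.Random()
    n = len(pattern)
    num_unknown = round(q * n * n)
    # Sampling without replacement: no cell is chosen twice.
    chosen = rng.sample(range(n * n), num_unknown)
    incomplete = [row[:] for row in pattern]  # leave the complete pattern intact
    for k in chosen:
        incomplete[k // n][k % n] = 0  # 0 = unknown state
    return incomplete
```

A fresh incomplete pattern would be drawn like this once per generation, so every rule in a generation is evaluated on the same initial configuration.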

Then each rule in the population is run on this randomly generated incomplete pattern, considered as an initial configuration of the CA, for t time steps. At the final time step we compute the number of cells in the grid with a state different from 0 that have the correct state. If a given cell is in state 1 in the initial configuration, then the correct state for this cell in the final configuration is 1. Similarly, if a given cell is in state 2 in the initial configuration, then the correct state for this cell in the final configuration is 2. The number of cells in the final configuration with state 1 that are in the correct state is denoted as $n_1$, and the number of cells in the final configuration with state 2 that are in the correct state is denoted as $n_2$. Since we count correct states, we deal with a maximization problem. The fitness of a rule i, denoted as $f_i$, is computed according to the formula:

$$f_i = n_1 + n_2 - n_0, \tag{1}$$


where $n_0$ denotes the number of cells in the final configuration with state 0. Subtracting the number of cells in state 0 is a kind of penalty factor; its task is to prevent evolution toward final configurations with many cells in state 0, which would be an unfavorable situation from the point of view of the pattern reconstruction task. The maximal fitness value equals the number of cells in known states, $n^2 - q \cdot n^2$.
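A minimal Python sketch of Eq. (1) follows, assuming configurations are stored as lists of rows. Because the complete pattern is not used during learning, a cell counts toward $n_1$ or $n_2$ only if it was known in the initial configuration and still holds that state at the end. The function name `fitness` is ours.

```python
from typing import List

Grid = List[List[int]]


def fitness(initial: Grid, final: Grid) -> int:
    """Learning-phase fitness f_i = n_1 + n_2 - n_0 (Eq. 1)."""
    n1 = n2 = n0 = 0
    for init_row, final_row in zip(initial, final):
        for a, b in zip(init_row, final_row):
            if b == 0:
                n0 += 1          # penalty: cell still unknown at the end
            elif a == b == 1:
                n1 += 1          # known cell correctly kept in state 1
            elif a == b == 2:
                n2 += 1          # known cell correctly kept in state 2
            # cells unknown at the start (a == 0) with b != 0 are not scored
    return n1 + n2 - n0
```

For a 2 × 2 initial configuration with one unknown cell, the best achievable value is 4 - 1 = 3, reached when every known cell keeps its state and no cell is left in state 0.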

After creating an initial population, the GA starts to improve it through repeated application of selection, crossover and mutation. In our experiments we used tournament selection: individuals for the next generation are chosen through P tournaments. The size of the tournament group is denoted as tsize.
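Tournament selection as described can be sketched in Python as below. The text does not say whether contestants are drawn with or without replacement, so drawing with replacement is an assumption, as is the function name `tournament_selection`.

```python
import random
from typing import List, Sequence

Rule = List[int]


def tournament_selection(population: Sequence[Rule], fitnesses: Sequence[int],
                         tsize: int, rng: random.Random) -> List[Rule]:
    """Hold P = len(population) tournaments of tsize randomly drawn individuals.

    The fittest contestant of each tournament is copied into the new population.
    Contestants are drawn with replacement (an assumption; the text does not say).
    """
    selected: List[Rule] = []
    for _ in range(len(population)):
        contestants = [rng.randrange(len(population)) for _ in range(tsize)]
        winner = max(contestants, key=lambda k: fitnesses[k])
        selected.append(list(population[winner]))  # copy, so later edits do not alias
    return selected
```

Copying the winners matters because the same rule can win several tournaments, and crossover and mutation should act on independent copies.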

After selection, individuals are randomly coupled and each pair is subjected to one-point crossover with probability pc. If crossover is performed, the offspring replace their parents; otherwise, the parental rules remain unchanged.
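Random coupling followed by one-point crossover can be sketched in Python as follows. The helper name `crossover_population` is hypothetical, and choosing the cut point uniformly strictly inside the rule is our assumption.

```python
import random
from typing import List

Rule = List[int]


def crossover_population(population: List[Rule], pc: float,
                         rng: random.Random) -> List[Rule]:
    """Randomly pair the individuals and apply one-point crossover with probability pc."""
    result = [list(rule) for rule in population]
    order = list(range(len(result)))
    rng.shuffle(order)  # random coupling
    for a, b in zip(order[0::2], order[1::2]):
        if rng.random() < pc:
            point = rng.randrange(1, len(result[a]))  # cut strictly inside the rule
            parent_a, parent_b = result[a], result[b]
            # Offspring replace their parents.
            result[a] = parent_a[:point] + parent_b[point:]
            result[b] = parent_b[:point] + parent_a[point:]
        # Otherwise the parental rules remain unchanged.
    return result
```

With an even population size such as P = 200 every individual gets a partner; with an odd size, this sketch leaves the last unpaired individual untouched.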

The last step is the mutation operator. It can take place for each individual in the population with probability pm. When a given gene is to be mutated, we replace its current value by the value 1 or 2, with equal probability. Omitting the value 0 serves the same purpose as described previously: it prevents the evolution of rules with many 0s. Such rules are more likely to produce configurations containing cells in state 0, which would be an unfavorable situation.
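The mutation operator can be sketched in Python as below. The text leaves open whether pm applies per individual or per gene; this sketch assumes a per-gene probability, which is one plausible reading given the remark in Sect. 4.1 about the long chromosome. The function name `mutate` is ours.

```python
import random
from typing import List

Rule = List[int]


def mutate(rule: Rule, pm: float, rng: random.Random) -> Rule:
    """Return a mutated copy of a rule.

    Assumption: each gene is mutated independently with probability pm.
    A mutated gene becomes 1 or 2 with equal probability; 0 is never introduced.
    """
    return [rng.choice((1, 2)) if rng.random() < pm else gene for gene in rule]
```

Under the per-gene reading, pm = 0.02 on a 243-entry rule changes about 4.9 genes per individual on average.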

These steps are repeated for G generations, and at the end the final population of rules is stored.

3.2 Normal Operating Phase

At the end of the learning phase we have a population of discovered rules that were trained to perform the pattern reconstruction task. Recall that the complete pattern was not presented during this phase.

In our previous paper [9] we investigated the real quality of the discovered rules by running each rule on IC random initial configurations with a fraction q of states unknown. For a given final configuration produced by rule i, we counted the fraction of cells whose states are identical to those in the complete pattern. This value, denoted as $t_i$, was computed according to the formula:

$$t_i = \frac{n_1 + n_2}{n^2}. \tag{2}$$

An ideal rule can evolve an initial configuration to a final configuration with all cells in the correct states. Thus, the maximum value of $t_i$ that such an ideal rule can obtain is 1.0, which means that the final configuration is identical to the complete pattern. Since we tested each rule on IC random initial configurations, the final value for a rule was its average result over the IC initial configurations. We denoted this value as $\bar{t}_i$.
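Eq. (2) and its average over IC runs can be sketched in Python as follows. Here, unlike in Eq. (1), correctness is judged against the complete pattern. The function names `t_score` and `average_t_score` are hypothetical.

```python
from statistics import mean
from typing import List, Sequence

Grid = List[List[int]]


def t_score(final: Grid, complete: Grid) -> float:
    """Eq. (2): t_i = (n_1 + n_2) / n^2, measured against the complete pattern."""
    n = len(complete)
    correct = sum(
        1
        for final_row, target_row in zip(final, complete)
        for got, want in zip(final_row, target_row)
        if got != 0 and got == want  # cells correctly in state 1 or 2
    )
    return correct / (n * n)


def average_t_score(finals: Sequence[Grid], complete: Grid) -> float:
    """Average of t_i over the IC final configurations produced by one rule."""
    return mean(t_score(final, complete) for final in finals)
```

A final configuration equal to the complete pattern scores exactly 1.0, matching the ideal rule described above.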

In the experiments described in this paper we decided to test the population of discovered rules on a larger grid size, but on the same pattern. The aim of these experiments was to examine the scalability of CA rules. Intuitively, one can suppose that testing rules on a larger grid size than the one on which they were learned is a much harder task. Results of these experiments are described in the next section.

4 Experimental Results

In our experiments we used two patterns with n = 10, presented in Fig. 3. They are denoted as pattern 1 and pattern 2. For each pattern, we tested the performance of the GA for three values of q: 0.1, 0.3 and 0.5. The maximal number of time steps t during which a CA has to converge to a desired final configuration was set to 100. Experiments showed that this value is large enough to let good rules converge to a desired final configuration. When a CA converged to a stable configuration earlier, the CA run was stopped.
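The run length and early stopping can be sketched in Python as follows. This sketch interprets a "stable configuration" as a fixed point (the next configuration equals the current one) and does not detect longer cycles; that interpretation, the N, W, C, E, S base-3 digit order and the names `step` and `run_ca` are assumptions.

```python
from typing import List, Sequence

Grid = List[List[int]]


def step(grid: Grid, rule: Sequence[int]) -> Grid:
    """Synchronous update: von Neumann neighborhood, null boundary (outside = 0).

    Neighborhood index assumes digit order N, W, C, E, S (N most significant).
    """
    size = len(grid)

    def s(r: int, c: int) -> int:
        return grid[r][c] if 0 <= r < size and 0 <= c < size else 0

    return [[rule[81 * s(i - 1, j) + 27 * s(i, j - 1) + 9 * s(i, j)
                  + 3 * s(i, j + 1) + s(i + 1, j)]
             for j in range(size)]
            for i in range(size)]


def run_ca(initial: Grid, rule: Sequence[int], t_max: int = 100) -> Grid:
    """Run the CA for at most t_max steps, stopping early at a fixed point."""
    grid = initial
    for _ in range(t_max):
        nxt = step(grid, rule)
        if nxt == grid:  # stable: further steps would change nothing
            break
        grid = nxt
    return grid
```

With `t_max=100` as in the experiments, a good rule that settles after a few steps costs only a few updates, since the loop exits as soon as the configuration stops changing.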

Fig. 3. Patterns used in experiments: pattern 1 (on the left) and pattern 2 (on the right)

4.1 Learning Phase

The parameters of the GA were the following: P = 200, tsize = 3, pc = 0.7 and pm = 0.02. The higher than usual mutation rate results from the rather long chromosome and the enormous search space; experiments show that a slightly greater pm helps the GA in the searching process. The searching phase was conducted through G = 200 generations. Increasing the number of generations did not improve the results.

Figs. 4 and 5 present typical GA runs for the two patterns used in the experiments, for the first 100 generations. Each plot shows the fitness value of the best individual in a given generation, for three values of q.

The maximal fitness value equals 90 for q = 0.1, 70 for q = 0.3 and 50 for q = 0.5. One can see that for q = 0.1 and q = 0.3 the GA is able to find a rule with the maximal fitness value.
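These maxima follow directly from the formula $n^2 - q \cdot n^2$ with n = 10. The short Python check below reproduces them; the function name `max_fitness` is ours.

```python
def max_fitness(n: int, q: float) -> int:
    """Maximal value of Eq. (1): every known cell correct and no cell in state 0."""
    unknown = round(q * n * n)  # number of masked cells, q * n^2
    return n * n - unknown


print([max_fitness(10, q) for q in (0.1, 0.3, 0.5)])  # [90, 70, 50]
```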

The differences between the patterns appear for q = 0.5. In the case of pattern 1, the fitness value of the best individual fluctuates slightly below its maximal value. On the other hand, the rules discovered for pattern 2 seem to be perfect: in this case, the GA quickly discovers rules with the maximal fitness value. We can conclude that, with our method, pattern 2 is easier to reconstruct than pattern 1.


[Plot: fitness (y-axis, 0 to 100) vs. generation (x-axis, 0 to 100) for pattern 1; curves for q = 0.1, q = 0.3 and q = 0.5]

Fig. 4. The GA runs for pattern 1

[Plot: fitness (y-axis, 0 to 100) vs. generation (x-axis, 0 to 100) for pattern 2; curves for q = 0.1, q = 0.3 and q = 0.5]

Fig. 5. The GA runs for pattern 2

4.2 Normal Operating Phase

The aim of the normal operating phase was to examine the scalability of the discovered rules. For this purpose we tested the populations of discovered rules on the same patterns, but on larger grid sizes. In our experiments we used n ∈ {20, 30, 40, 50}. The new patterns for n = 20 are presented in Fig. 6. Patterns for the remaining values of n were created in the same way.

For both patterns, we tested the final population discovered by the GA forq = 0.1 and n = 10 on IC = 100 random initial configurations, created on larger

Learning Cellular Automata Rules for Pattern Reconstruction Task 247

Fig. 6. Patterns for n = 20 used in experiments

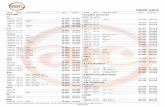

Table 1. ¯tbest values for pattern 1 and different q values

q = 0.1 q = 0.3 q = 0.5

n = 20 0.997 0.959 0.890

n = 30 0.999 0.974 0.930

n = 40 0.999 0.980 0.945

n = 50 0.999 0.984 0.957

Table 2. ¯tbest values for pattern 2 and different q values

q = 0.1 q = 0.3 q = 0.5

n = 20 0.999 0.998 0.998

n = 30 1.0 0.998 0.994

n = 40 1.0 0.999 0.995

n = 50 1.0 0.999 0.996

grid sizes. For each rule, t̄i was computed. Results of the best rules (from thewhole population), denoted as ¯tbest are presented in Tab. 1 and 2.

The results presented in Tab. 1 and 2 show that the discovered rules are able to reconstruct the same pattern on larger grids. Interestingly, this scalability does not degrade when n increases: for a given q, $\bar{t}_{best}$ stays at similar values as n increases (for pattern 1 it even rises slightly). Slight changes are caused by the stochastic way of computing $\bar{t}_{best}$: a rule i might obtain different $\bar{t}_i$ values, depending on the concrete initial configurations.

As q increases, the scalability of the discovered rules slightly decreases, as was to be expected. This is particularly noticeable in the case of pattern 1, which proved to be more difficult in the learning phase. Higher values of q mean more unknown states in an initial configuration (and fewer known states). The more unknown states there are, the more difficult the pattern reconstruction task is.

Let us look closely at the performance of one of the best rules from the normal operating phase. The leftmost picture in Fig. 7 presents the initial configuration of the CA for pattern 1, n = 20 and q = 0.3. We ran the best rule found for n = 10 on this configuration, for the maximal number of time steps t = 100. Further configurations, in time steps 1 and 14, are presented in Fig. 7.

Fig. 7. Configurations of the CA in time steps: 0, 1 and 14 (from the left to the right)

One can see that the rule is able to quickly transform the initial configuration into the desired final configuration. Interestingly, the number of time steps sufficient to do this does not increase when n increases. After just one time step, the configuration of the CA does not contain any cells in the unknown state and has many cells in the correct state.

5 Conclusions

In this paper we have presented the pattern reconstruction task in the context of CAs. One of the aims of the paper was to present the possibilities of the GA in evolving suitable CA rules that are able to transform initial, incomplete configurations into final, complete configurations. The results of the presented experiments show that the GA is able to discover rules appropriate for solving this task for a given instance of the problem. The discovered rules perform well even when the number of unknown cells is relatively high.

The most important subject addressed in this paper is the scalability of discovered CA rules. Experiments show that during the learning phase the rules store some kind of knowledge about the pattern being reconstructed. This knowledge can be successfully reused in the process of reconstructing patterns on larger grid sizes.

References

1. Bandini, S., Vanneschi, L., Wuensche, A., Shehata, A.B.: A Neuro-Genetic Framework for Pattern Recognition in Complex Systems. Fundamenta Informaticae 87(2), 207–226 (2008)

2. Banham, M.R., Katsaggelos, A.K.: Digital image restoration. IEEE Signal Processing Magazine 14, 24–41 (1997)

3. Breukelaar, R., Back, T.: Evolving Transition Rules for Multi Dimensional Cellular Automata. In: Sloot, P.M.A., Chopard, B., Hoekstra, A.G. (eds.) ACRI 2004. LNCS, vol. 3305, pp. 182–191. Springer, Heidelberg (2004)

4. Das, R., Crutchfield, J.P., Mitchell, M.: Evolving globally synchronized cellular automata. In: Eshelman, L.J. (ed.) Proceedings of the Sixth International Conference on Genetic Algorithms, pp. 336–343. Morgan Kaufmann Publishers Inc., San Francisco (1995)

5. Fawcett, T.: Data mining with cellular automata. ACM SIGKDD Explorations Newsletter 10(1), 32–39 (2008)

6. Hernandez, G., Herrmann, H.: Cellular automata for elementary image enhancement. Graphical Models And Image Processing 58(1), 82–89 (1996)

7. Mitchell, M., Hraber, P.T., Crutchfield, J.P.: Revisiting the Edge of Chaos: Evolving Cellular Automata to Perform Computations. Complex Systems 7, 89–130 (1993)

8. Packard, N.H., Wolfram, S.: Two-Dimensional Cellular Automata. Journal of Statistical Physics 38, 901–946 (1985)

9. Piwonska, A., Seredynski, F.: Discovery by Genetic Algorithm of Cellular Automata Rules for Pattern Reconstruction Task. In: Proceedings of ACRI 2010. LNCS. Springer, Heidelberg (to appear, 2010)

10. Rosin, P.L.: Training Cellular Automata for Image Processing. IEEE Transactions on Image Processing 15(7), 2076–2087 (2006)

11. Sapin, E., Bailleux, O., Chabrier, J.-J., Collet, P.: A New Universal Cellular Automaton Discovered by Evolutionary Algorithms. In: Deb, K., et al. (eds.) GECCO 2004. LNCS, vol. 3102, pp. 175–187. Springer, Heidelberg (2004)

12. Slatnia, S., Batouche, M., Melkemi, K.E.: Evolutionary Cellular Automata Based-Approach for Edge Detection. In: Masulli, F., Mitra, S., Pasi, G. (eds.) WILF 2007. LNCS (LNAI), vol. 4578, pp. 404–411. Springer, Heidelberg (2007)

13. Swiecicka, A., Seredynski, F., Zomaya, A.Y.: Multiprocessor scheduling and rescheduling with use of cellular automata and artificial immune system support. IEEE Transactions on Parallel and Distributed Systems 17(3), 253–262 (2006)

14. Wolfram, S.: A New Kind of Science. Wolfram Media (2002)
