Hierarchical maximum likelihood parameter estimation for cumulative prospect theory: Improving the reliability of individual risk parameter estimates

Ryan O. Murphy , Robert H.W. ten Brincke

ETH Risk Center – Working Paper Series

ETH-RC-14-005

The ETH Risk Center, established at ETH Zurich (Switzerland) in 2011, aims to develop cross-disciplinary approaches to integrative risk management. The center combines competences from the natural, engineering, social, economic and political sciences. By integrating modeling and simulation efforts with empirical and experimental methods, the Center helps societies to better manage risk.

More information can be found at: http://www.riskcenter.ethz.ch/.


Abstract

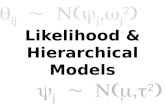

Individual risk preferences can be identified by using decision models with tuned parameters that maximally fit a set of risky choices made by a decision maker. A goal of this model fitting procedure is to isolate parameters that correspond to stable risk preferences. These preferences can be modeled as an individual difference, indicating a particular decision maker's tastes and willingness to tolerate risk. Using hierarchical statistical methods we show significant improvements in the reliability of individual risk preference parameters over other common estimation methods. This hierarchical procedure uses population level information (in addition to an individual's choices) to break ties (or near-ties) in the fit quality for sets of possible risk preference parameters. By breaking these statistical "ties" in a sensible way, researchers can avoid overfitting choice data and thus better measure individual differences in people's risk preferences.

Keywords: Prospect theory, Risk preference, Decision making under risk, Hierarchical parameter estimation, Maximum likelihood

Classifications: JEL Codes: D81, D03

URL: http://web.sg.ethz.ch/ethz risk center wps/ETH-RC-14-005


Ryan O. Murphy∗, Robert H.W. ten Brincke

Chair of Decision Theory and Behavioral Game Theory
Swiss Federal Institute of Technology Zurich (ETHZ)
Clausiusstrasse 50, 8006 Zurich, Switzerland
+41 44 632 02 75


∗Corresponding author. Email addresses: [email protected] (Ryan O. Murphy), [email protected] (Robert H.W. ten Brincke)

Working paper April 16, 2014

1. Introduction

People must often make choices among a number of different options for which the outcomes are not certain. Epistemic limitations can be precisely quantified using probabilities, and given a well-defined set of options, each option with its respective probability of fruition, the elements of risky decision making are established (Knight, 1932; Luce and Raiffa, 1957). Expected value maximization stands as the normative solution to risky choice problems, but people's choices do not always conform to this optimization principle. Rather, decision makers (DMs) reveal different preferences for risk, sometimes forgoing an option with a higher expectation in favor of an option with lower variance (thus indicating risk aversion; in some cases DMs reveal the opposite preference, thus indicating risk seeking). Behavioral theories of risky decision making (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992; Kahneman and Tversky, 2000) have been developed to isolate and highlight the order in these choice patterns and provide psychological insights into the underlying structure of DMs' preferences. This paper is about measuring those subjective preferences, and in particular finding a statistical estimation procedure that can increase the reliability of those measures, thus better capturing risk preferences at the individual level.

1.1. Example of a risky choice

Binary lotteries (i.e., gambles or prospects) are a common tool for studying risky decision making, so common in fact that they have been called the fruit flies of decision research (Lopes, 1983). In these simple risky choices, DMs are presented with monetary outcomes x_i, each associated with an explicit probability p_i. Consider for example the lottery in Table 1, offering a DM the choice between option A, a payoff of $1 with probability .62 or $83 with probability .38; or option B, a payoff of $37 with probability .41 or $24 with probability .59. The decision maker is called upon to choose either option A or option B and then the lottery is played out via a random process for real consequences.

1.2. EV maximization and descriptive individual level modeling

The normative solution to the decision problem in Table 1 is straightforward. The goal of maximizing long-term monetary expectations is satisfied by selecting the option with the highest expected value. Although this decision policy has desirable properties, it is often not an accurate description of what people prefer or what they choose when presented with risky options. As can be anticipated, different people have different tastes, and this heterogeneity includes preferences for risk as well. For example, the majority of incentivized DMs select option B from the lottery in Table 1, indicating, perhaps, that these DMs have some degree of risk aversion. However, the magnitude of this risk aversion is still unknown and it cannot be estimated from only one choice resulting from a binary lottery. This limitation has led researchers to use larger sets of binary lotteries where DMs make many independent choices, and from the overall pattern of choices, researchers can draw inferences about the DM's risk preferences.

| Option | Outcome | Probability |
|--------|---------|-------------|
| A      | $1      | .62         |
| A      | $83     | .38         |
| B      | $37     | .41         |
| B      | $24     | .59         |

Table 1: An example of a simple, one-shot risky decision. The choice is simply whether to select option A or option B. The expected value of option A is higher ($32.16) than that of option B ($29.33), but option A contains the possibility of an outcome with a relatively small value ($1). As it turns out, the majority of DMs prefer option B, even though it has a smaller expected value.
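The expected values quoted for the lottery in Table 1 can be checked with a few lines of code. The following is a minimal sketch; the function name `expected_value` and the data layout are illustrative choices, not part of the original study materials.

```python
def expected_value(outcomes, probabilities):
    """Expected value of one lottery option: sum of outcome times probability."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to one")
    return sum(x * p for x, p in zip(outcomes, probabilities))


# Option A and option B from Table 1 (outcomes in dollars).
ev_a = expected_value([1, 83], [0.62, 0.38])
ev_b = expected_value([37, 24], [0.41, 0.59])

print(f"EV(A) = {ev_a:.2f}, EV(B) = {ev_b:.2f}")
```

An EV maximizer would pick option A here, so a DM who picks option B is giving up expected value, which is the pattern the text describes as suggestive of risk aversion.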

Risk preferences have often been modeled at the aggregate level, by fitting parameters to the most selected option across many individuals, as opposed to modeling preferences at the individual level (see Kahneman and Tversky, 1979; Tversky and Kahneman, 1992). This aggregate approach is a useful first step in that it helps establish stylized facts about human decision making and further facilitates the identification of systematicity in the deviations from EV maximization. However, actual risk preferences exist at the individual level, and thus improving the resolution of measurement and modeling is a natural development in this research field. Substantial work exists along these lines already for both the measurement of risk preferences and their application (e.g., Gonzalez and Wu, 1999; Holt and Laury, 2002; Abdellaoui, 2000; Fehr-Duda et al., 2006). The purpose of this paper is to add to that literature by developing and evaluating a hierarchical estimation method that improves the reliability of parameter estimates of risk preferences at the individual level. Moreover, we want to do so with a measurement tool that is readily usable and broadly applicable as a measure of individual risk preferences.

1.3. Three necessary components for measuring risk preferences

There are three elementary components in measuring risk preferences and these parts serve as the foundation for developing behavioral models of risky choice. These three components are: lotteries, models, and statistical estimation/fitting procedures. We explain each of these components below in general terms, and then provide detailed examples and discussion of the elements in the subsequent sections of the paper.

1.3.1. Lotteries

Choices among options reveal DMs' preferences (Samuelson, 1938; Varian, 2006). Lotteries are used to elicit and record risky decisions and the resulting choice data serve as the input for the decision models and parameter estimation procedures. Here we focus on choices from binary lotteries (such as Stott, 2006; Rieskamp, 2008; Nilsson et al., 2011), but the elicitation of certainty equivalents is another well-established method for quantifying risk preferences (see for example Zeisberger et al., 2010). Binary lotteries are arguably the simplest way for subjects to make choices and thus reveal risk preferences. One reason to use binary lotteries is that people appear to have problems with assessing a lottery's certainty equivalent (Lichtenstein and Slovic, 1971), although this simplicity comes at the cost of requiring a DM to make many binary choices to achieve the same degree of estimation fidelity that other methods purport to achieve (e.g., the three decision risk measure from Tanaka et al., 2010).

1.3.2. Decision model (non-expected utility theory)

Models may reflect the general structure (e.g., stylized facts) of DMs' aggregate preferences by invoking a latent construct like utility. These models also typically have free parameters that can be tuned to improve the accuracy of how they capture different choice patterns. An individual's choices from the lotteries are fit to a model by tuning these parameters and thus identifying differences in revealed preferences and summarizing the pattern of choices (with as much accuracy as possible) by using particular parameter values. These parameters can, for example, establish not just the existence of risk aversion, but can quantify the degree of this particular preference. This is useful in characterizing an individual decision maker and isolating cognitive mechanisms that underlie behavior (e.g., correlating risk preferences with other variables, including measures of physiological processes such as in Figner and Murphy, 2010; Schulte-Mecklenbeck et al., 2014) as well as tuning a model to make predictions about what this DM will choose when presented with different options. Here we focus on the non-expected utility model of cumulative prospect theory (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992; Starmer, 2000) as our decision model. Prospect theory is arguably the most important and influential descriptive model of risky choice to date and it has been used extensively in research related to model fitting and risky decision making.

1.3.3. Parameter estimation procedure

Given a set of risky choices and a particular decision model, the best fitting parameter(s) can be identified via a statistical estimation procedure. A perfectly fitting parameterized model would reproduce all of a DM's choices exactly (i.e., perfect correspondence). Obviously this is a lofty goal and it is almost never observed in practice, as there almost certainly is measurement error in a set of observed choices.

A decision model, with best fitting parameters, can be evaluated in several different ways. One approach to evaluating the goodness of fit would be to determine how many correct predictions the parameterized model makes of a DM's choices. For example, for a set of risky choices from binary lotteries, researchers may find that 60% of the choices are consistent with expected value maximization (EV maximization is the normative decision policy and trivially is a zero parameter decision model). If researchers used a concave power function to transform values into utilities, this single parameter model may increase to 70% consistency with the selected choices. Although this model scoring method is straightforward and intuitive, it has some stark shortcomings (as we will show later in the paper). Other more sophisticated methods, like maximum likelihood estimation, have been developed that allow for a more nuanced approach to evaluating the fit of a choice model (see for example Harless and Camerer, 1994; Hey and Orme, 1994). All of these different evaluation methods can also include both in-sample and out-of-sample tests, which can be useful to diagnose and mitigate the overfitting of choice data.
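The "fraction of correct predictions" score described above can be illustrated for the zero-parameter EV-maximization model. This is a minimal sketch: the four lotteries are the examples listed in Table 2, while the list of observed choices is hypothetical and invented purely for illustration.

```python
def ev(option):
    """Expected value of an option given as a list of (outcome, probability) pairs."""
    return sum(x * p for x, p in option)


def ev_hit_rate(lotteries, choices):
    """Fraction of observed choices that match the option with the higher EV."""
    hits = 0
    for (option_a, option_b), choice in zip(lotteries, choices):
        predicted = "A" if ev(option_a) >= ev(option_b) else "B"
        hits += predicted == choice
    return hits / len(choices)


# Example lotteries from Table 2: (option A, option B).
lotteries = [
    ([(32, 0.78), (99, 0.22)], [(39, 0.32), (56, 0.68)]),      # gain
    ([(-24, 0.99), (-13, 0.01)], [(-15, 0.44), (-62, 0.56)]),  # loss
    ([(58, 0.48), (-5, 0.52)], [(96, 0.60), (-40, 0.40)]),     # mixed
    ([(-30, 0.50), (60, 0.50)], [(0, 1.0)]),                   # mixed-zero
]

# Hypothetical choices of one DM (not data from the study).
observed = ["B", "A", "A", "B"]

rate = ev_hit_rate(lotteries, observed)
print(f"Share of choices consistent with EV maximization: {rate:.2f}")
```

The same scoring function could be applied to any parameterized model by swapping `ev` for that model's valuation of an option.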

The interrelationship between lotteries, a decision model, and an estimation procedure is shown in Figure 1. Lotteries provide stimuli and the resulting choices are the input for the models; a decision model and an estimation procedure tune parameters to maximize correspondence between the model and the actual choices from the decision maker facing the lotteries.

[Figure 1: schematic with the panels Lotteries, Input (choices), Model (prospect theory), Parameter estimation, Week 1 parameters, Week 2 parameters, In-sample (summarization), Out-of-sample (prediction), and Reliability (time 1 vs. time 2).]

Figure 1: On the left we find three choices for each of the binary lotteries that serve as input for the model. Using an estimation method we fit the model's risk preference parameters to the individual risky choices at time 1. These parameters, to some extent, summarize the choices made at time 1 by using the parameters in the model and reproduce the choices (depending on the quality of the fit). The out-of-sample predictive capacity of the model can be evaluated by comparing the predictions against actual choices at time 2. The reliability of the estimates is evaluated by comparing the time 1 parameter estimates to estimates obtained using the same estimation method on the time 2 data. This experimental design holds the lotteries and choices constant, but varies the estimation procedures; moreover this is done in a test-retest design which allows the computation and comparison of both in-sample and out-of-sample statistics.

This process of eliciting choices can be repeated using the same lotteries and the same experimental subjects. This test-retest design allows for both in-sample parameter fitting (i.e., statistical explanation or summarization) and out-of-sample evaluation (i.e., prediction). Moreover, the test-retest reliability of the different parameter estimates can be computed as a correlation, as two sets of parameters exist for each decision maker. This is a powerful experimental design as it can accommodate both fitting and prediction, and can also diagnose instances of overfitting, which can undermine psychological interpretations (see Gigerenzer and Gaissmaier, 2011).
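Computing test-retest reliability as a correlation can be sketched as follows. The sketch assumes a Pearson correlation (the text says only "a correlation"), and the parameter values are hypothetical placeholders rather than estimates from the study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


# Hypothetical estimates of one parameter for five DMs at time 1 and time 2.
alpha_time1 = [0.70, 0.85, 1.00, 0.60, 0.95]
alpha_time2 = [0.75, 0.80, 1.05, 0.65, 0.90]

reliability = pearson_r(alpha_time1, alpha_time2)
print(f"Test-retest reliability: {reliability:.2f}")
```

One such correlation would be computed per parameter and per estimation method, so that the methods can be compared on how stable their estimates are across sessions.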

1.4. Overfitting and bounding parameters

Estimation methods for multi-parameter models may be prone to overfitting and in doing so adjust to noise instead of real risk preferences (Roberts and Pashler, 2000). This can sometimes be observed when parameter values emerge that are highly atypical and extreme. A common solution to this problem is to set boundaries and limit the range of parameter values that are potentially estimated. Boundaries prevent extreme parameter values in the case of weak evidence and thus reduce overfitting, but on the downside they negate the possibility of reflecting extreme preferences altogether, even though these preferences may be real. Boundaries are also defined arbitrarily and may create serious estimation problems due to parameter interdependence. For example, setting a boundary at the lower range on one parameter may radically change the estimate of another parameter (e.g., restricting the range of probability distortion may unduly influence estimates of loss aversion) for one particular subject. The fact that a functional (mis)specification can affect other parameter estimates (see for example Nilsson et al., 2011) and that interaction effects between parameters have been found (Zeisberger et al., 2010) indicates that there are multiple ways in which prospect theory can account for preferences. This in turn may mean that choosing different boundaries for one or more parameters may affect the estimates of other parameters as well if a parameter estimate runs up against an imposed boundary. To circumvent the pitfalls of arbitrary parameter boundaries, we use a hierarchical estimation method based on Farrell and Ludwig (2008) without such boundaries. At its core, this method uses estimates of risk preferences of the whole sample to inform estimates of risk preferences at the individual level. In this paper we address to what degree an estimation method combining group level information with individual level information can more reliably represent individual risk preferences, rather than using either individual or aggregate information exclusively.

1.5. Justification for using hierarchical estimation methods

The ultimate goal of this hierarchical estimation procedure is to obtain reliable estimates of individual risk preferences that can be used to make better predictions about risky choice behavior than other estimation methods. This is not modeling as a means to only maximize in-sample fit, but rather to maximize out-of-sample correspondence; moreover, these methods are a way to gain insights into what people are actually motivated by as they cogitate about risky options and make choices when confronting irreducible uncertainty. Ideally, the parameters should not only summarize choices, but also capture some of the psychological mechanisms that underlie risky decision making.

1.6. Other applications of hierarchical estimation methods

Other researchers have applied hierarchical estimation methods to risky choice modeling. Nilsson et al. (2011) have applied a Bayesian hierarchical parameter estimation model to simple risky choice data. The hierarchical procedure outperformed maximum likelihood estimation in a parameter recovery test. The authors however did not test out-of-sample predictions nor the retest reliability of their parameter estimates. Wetzels et al. (2010) applied Bayesian hierarchical parameter estimation in a learning model and found it was more robust with regard to extreme estimates and misunderstanding of task dynamics. Scheibehenne and Pachur (2013) applied Bayesian hierarchical parameter estimation to risky choice data using a TAX model (Birnbaum and Chavez, 1997) but reported no improvement in parameter stability.

1.7. Other research examining parameter stability

There exists some research on estimated parameter stability. Glöckner and Pachur (2012) tested the reliability of parameter estimates using a non-hierarchical estimation procedure. The results show that individual risk preferences are generally stable and that the individual parameter values outperform aggregate values in terms of prediction. The authors concluded that the reliability of parameters suffered when extra model parameters were added. This is contrasted against the work of Fehr-Duda and Epper (2012), who conclude, based on experimental data and a literature review, that those additional parameters (related to the probability weighting function) are necessary to reliably capture individual risk preferences. Zeisberger et al. (2010) also tested individual parameter reliability using a different type of risky choice (certainty equivalent vs. binary choice). They found significant differences in parameter value estimates over time but did not use a hierarchical estimation procedure.

1.8. Structure of the rest of this paper

In this paper we focus on evaluating different statistical estimation procedures for fitting individual choice data to risky decision models. To this end, we hold constant the set of lotteries from which DMs made choices, and the functional form of the risky choice model. We then fit the same set of choice data using a variety of estimation methods, and then contrast the results to differentiate their efficiency. We also compute the test-retest reliability of the parameter estimates that result from the different estimation procedures. This broad approach allows us to evaluate different estimation methods and draw substantiated conclusions about fit quality as well as diagnose overfitting. We conclude with recommendations for estimating risk preferences at the individual level and briefly describe an online tool for measuring and estimating risk preferences that researchers are invited to use as part of their own research programs.

2. Stimuli and Experimental design

2.1. Participants

One hundred eighty-five participants from the subject pool at the Max Planck Institute for Human Development in Berlin volunteered to participate in two sessions (referred to hereafter as time 1 and time 2) that were administered approximately two weeks apart. After both sessions were conducted, complete data from both sessions were available for 142 participants; these subjects with complete datasets are retained for subsequent analysis. The experiment was incentive compatible and was conducted without deception. The data used here are the choice-only results from the Schulte-Mecklenbeck et al. (2014) experiment and we are indebted to these authors for their generosity in sharing their data.

2.2. Stimuli

In each session of the experiment individuals made choices from a set of 91 simple binary option lotteries. Each option has two possible outcomes between -100 and 100 that occur with known probabilities that sum to one. There were four types of lotteries for which examples are shown in Table 2. The four types are: lotteries with gains only; lotteries with losses only; mixed lotteries with both gains and losses; and mixed-zero lotteries with one gain and one loss and zero (status quo) as the alternative outcome. The first three types were included to cover the spectrum of risky decisions while the mixed-zero type allows for measuring loss aversion separately from risk aversion (Rabin, 2000; Wakker, 2005). The same 91 lotteries were used in both test-retest sessions of this research. The set was compiled of items used by Rieskamp (2008), Gaechter et al. (2007) and Holt and Laury (2002); these items are also used by Schulte-Mecklenbeck et al. (2014). Thirty-five lotteries are gain only, 25 are loss only, 25 are mixed, and 6 are mixed-zero. All of the lotteries are listed in Appendix A.

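| Lottery | Option A | Option B | Type |
|---------|----------|----------|------|
| 1 | 32 with probability .78; 99 with probability .22 | 39 with probability .32; 56 with probability .68 | gain |
| 2 | -24 with probability .99; -13 with probability .01 | -15 with probability .44; -62 with probability .56 | loss |
| 3 | 58 with probability .48; -5 with probability .52 | 96 with probability .60; -40 with probability .40 | mixed |
| 4 | -30 with probability .50; 60 with probability .50 | 0 for sure | mixed-zero |

Table 2: Examples of binary lotteries from each of the four types.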

2.3. Procedure

Participants received extensive instructions regarding the experiment at the beginning of the first session. All participants received 10 EUR as a guaranteed payment for participation in the research, and could earn more based on their choices. Participants then worked through several examples of risky choices to familiarize themselves with the interface of the MouselabWeb software (Willemsen and Johnson, 2011, 2014) that was used to administer the study. While the same 91 lotteries were used for both experimental sessions, the item order was randomized. Additionally, the order of the outcomes (top-bottom), the option order (A or B) and the orientation (A above B, A left of B) were randomized and stored during the first session. The exact opposite spatial representation of this was used in the second session, to mitigate order and presentation effects.

Incentive compatibility was implemented by randomly selecting one lottery at the end of each experimental session and paying the participant according to their choice on the selected item when it was played out with the stated probabilities. An exchange rate of 10:1 was used between experimental values and payments for choices. Thus, participants earned a fixed payment of 10 EUR plus one tenth of the outcome of one randomly selected lottery for each completed experimental session. As a result, participants earned about 30 EUR (approximately 40 USD) on average for participating in both sessions.
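The payment rule can be sketched as below. This is an illustration under stated assumptions, not the study's actual software: the function names are hypothetical, and the sketch assumes the 10 EUR fixed payment is applied per session, which the text does not state unambiguously.

```python
import random


def play_lottery(option, rng):
    """Draw one outcome from an option given as (outcome, probability) pairs."""
    outcomes = [x for x, _ in option]
    probabilities = [p for _, p in option]
    return rng.choices(outcomes, weights=probabilities, k=1)[0]


def session_payment_eur(outcome, fixed_eur=10.0, points_per_eur=10.0):
    """Fixed payment plus one tenth of the realized outcome (10:1 exchange rate).

    Assumption: the fixed payment is counted once per session.
    """
    return fixed_eur + outcome / points_per_eur


# A DM who chose option B of the mixed example lottery in Table 2.
chosen_option = [(96, 0.60), (-40, 0.40)]
rng = random.Random(2014)  # seeded only to make the illustration repeatable
realized = play_lottery(chosen_option, rng)
print(f"Outcome {realized} pays {session_payment_eur(realized):.2f} EUR")
```

Because only one randomly selected lottery is paid out, every choice has the same chance of being the consequential one, which is what makes the design incentive compatible.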

3. Model specification

We use cumulative prospect theory (Tversky and Kahneman, 1992) to model risk preferences. A two-outcome lottery¹ L is valued in utility u(·) as the sum of its components in which the monetary reward x_i is weighted by a value function v(·) and the associated probability p_i by a probability weighting function w(·). This is shown in Equation 1.

$$
u(L) = v(x_1)\,w(p_1) + v(x_2)\,\bigl(1 - w(p_1)\bigr) \tag{1}
$$

Cumulative prospect theory has many possible mathematical specifications. These different functional specifications have been tested and reported in the literature (see for example Stott, 2006) and the functional forms and parameterizations we use here are justifiable given the preponderance of findings.
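Equation 1 can be sketched in code independently of the particular forms chosen for v and w. In the sketch below the function name `cpt_utility` is illustrative, and the default identity functions are an assumption made only to show that the valuation reduces to the expected value when neither outcomes nor probabilities are transformed.

```python
def cpt_utility(x1, p1, x2, v=lambda x: x, w=lambda p: p):
    """Equation 1: u(L) = v(x1) * w(p1) + v(x2) * (1 - w(p1)).

    x1 is the outcome ordered first (see the footnote on outcome ordering),
    p1 its probability, and x2 the remaining outcome. With the default
    identity functions for v and w this is the expected value of the lottery.
    """
    return v(x1) * w(p1) + v(x2) * (1.0 - w(p1))


# Option A of Table 1 is a gain lottery, so the higher outcome is x1.
u_identity = cpt_utility(83, 0.38, 1)

# A hypothetical weighting function that underweights p1, for illustration only.
u_pessimistic = cpt_utility(83, 0.38, 1, w=lambda p: p ** 2)

print(f"{u_identity:.2f} {u_pessimistic:.2f}")
```

Passing v and w as arguments keeps the valuation rule separate from the functional specifications, so alternative specifications can be swapped in without touching Equation 1.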

3.1. Value function

In general, power functions have been empirically shown to fit choice data better than many other functional forms at the individual level (Stott, 2006). Stevens (1957) also cites experimental evidence showing the merit of power functions in general for modeling psychological processes. Here we use a power value function as displayed in Equation 2.

¹ Value x_1, with its associated probability p_1, is the outcome with the highest value for lotteries in the positive domain and the outcome with the lowest value for lotteries in the negative domain. The order is irrelevant when lotteries consist of uniform gains or losses; in mixed lotteries, however, it matters. This ordering ensures that a cumulative distribution function is applied to the options systematically. See Tversky and Kahneman (1992) for details.

[Figure 2, left panel: v(x) (subjective value) plotted against x (objective value), both axes from −25 to 25. Right panel: w(p) (subjective probability) plotted against p (objective probability), both axes from 0 to 1.]

Figure 2: This figure shows a typical value function (left) and probability weighting function (right) from prospect theory. The parameters used to plot the solid lines are from Tversky and Kahneman (1992) and reflect the empirical findings at the aggregate level. The dashed lines represent risk-neutral preferences. The solid line for the value function is concave over gains and convex over losses, and further exhibits a "kink" at the reference point (in this case the origin) consistent with loss aversion. The probability weighting function overweights small probabilities and underweights large probabilities. It is worth noting that at the individual level, the plots can differ significantly from these aggregate level plots.

$$
v(x) =
\begin{cases}
x^{\alpha} & x \ge 0;\ \alpha > 0 \\
-\lambda(-x)^{\alpha} & x < 0;\ \alpha > 0;\ \lambda > 0
\end{cases}
\tag{2}
$$

The α-parameter controls the curvature of the value function. If the value of α is below one, there are diminishing marginal returns in the domain of gains. If the value of α is one, the function is linear and consistent with risk-neutrality (i.e., EV maximizing) in decision making. For α values above one, there are increasing marginal returns for positive values of x. This pattern reverses in the domain of losses (values of x less than 0), which is referred to as the reflection effect.
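The power value function of Equation 2 can be sketched as follows. The function name is illustrative, and the parameter values α = 0.88 and λ = 2.25 are the aggregate estimates commonly attributed to Tversky and Kahneman (1992); they are used here only as an example, not as estimates from this paper.

```python
def value(x, alpha, lam):
    """Equation 2: x**alpha for gains, -lam * (-x)**alpha for losses."""
    if alpha <= 0 or lam <= 0:
        raise ValueError("alpha and lam must be positive")
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** alpha


alpha, lam = 0.88, 2.25  # illustrative aggregate-level values

gain = value(25, alpha, lam)
loss = value(-25, alpha, lam)

# With alpha < 1 a gain is valued below its face amount (diminishing returns),
# and with lam > 1 a loss looms larger than a gain of the same size.
print(f"v(25) = {gain:.2f}, v(-25) = {loss:.2f}")
```

Setting α = 1 and λ = 1 recovers the identity function, which corresponds to the risk-neutral dashed line described in the caption of Figure 2.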

For this paper we use the same value function parameter α for both gains and losses, and our reasoning is as follows. First, this is parsimonious. Second, when using a power function with different parameters for gains and losses, loss aversion can no longer be defined as the magnitude of the kink at the reference point (Köbberling and Wakker, 2005) but would instead need to be defined contingently over the whole curve.² This complicates interpretation and undermines clear separability between utility curvature and loss aversion, and further may cause modeling problems for mixed lotteries that include an outcome of zero. Problems of induced correlation between the loss aversion parameter and the curvature of the value function in the negative domain have furthermore been reported when using α_gain ≠ α_loss in a power utility model (Nilsson et al., 2011).

3.2. Probability weighting function

To capture subjective probability, we use Prelec's functional specification (Prelec, 1998; see Figure 5). Prelec's two-parameter probability weighting function can accommodate both inverse S-shaped as well as S-shaped weightings and has been shown to fit individual data well (Fehr-Duda and Epper, 2012; Gonzalez and Wu, 1999). Its specification can be found in Equation 3.

$$
w(p) = \exp\bigl(-\delta(-\ln p)^{\gamma}\bigr), \qquad \delta > 0;\ \gamma > 0 \tag{3}
$$

The γ parameter controls the curvature of the weighting function. The psychological interpretation of the curvature of the function is a diminishing sensitivity away from the end points: both 0 and 1 serve as necessary boundaries and the further from these edges, the less sensitive individuals are to changes in probability (Tversky and Kahneman, 1992). The δ-parameter controls the general elevation of the probability weighting function. It is an index of how attractive lotteries are considered to be (Gonzalez and Wu, 1999) in general and it corresponds to how optimistic (or pessimistic) an individual decision maker is.
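Equation 3 can be sketched as follows. The function name and the handling of the end points p = 0 and p = 1 are illustrative choices; the parameter values in the example are arbitrary and serve only to show the shape of the function.

```python
from math import e, exp, log


def prelec_weight(p, delta, gamma):
    """Equation 3: w(p) = exp(-delta * (-ln p)**gamma), with w(0) = 0 and w(1) = 1."""
    if delta <= 0 or gamma <= 0:
        raise ValueError("delta and gamma must be positive")
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    return exp(-delta * (-log(p)) ** gamma)


# With delta = 1 the curve crosses the diagonal at p = 1/e for every gamma,
# because -ln(1/e) = 1 and 1 raised to any power is 1.
for gamma in (0.5, 1.0, 2.0):
    print(gamma, round(prelec_weight(1 / e, 1.0, gamma), 4))

# gamma < 1 gives an inverse S-shape: small probabilities are overweighted
# and large probabilities are underweighted.
small = prelec_weight(0.01, 1.0, 0.5)
large = prelec_weight(0.99, 1.0, 0.5)
```

With δ = 1 and γ = 1 the function reduces to w(p) = p, so probabilities are left undistorted.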

The use of Prelec's two-parameter weighting function over the original specification used in cumulative prospect theory (Tversky and Kahneman, 1992) requires additional explanation. In the original specification the point of intersection with the diagonal changes simultaneously with the shape of the weighting function, whereas in Prelec's specification, the line always intersects at the same point if δ is kept constant.³ Changing the point of intersection and the curvature simultaneously, as the original specification does, induces a negative correlation with the value function parameter α because both parameters capture similar characteristics, and it also does not allow for a wide variety of individual preferences (see Fehr-Duda and Epper, 2012). Furthermore, the original specification of the weighting function is not monotonic for γ < 0.279 (Ingersoll, 2008). While that low value is generally not in the range of reported aggregate parameters (Camerer and Ho, 1994), this non-monotonicity may become relevant when estimating individual preferences, where there is considerably more heterogeneity.

² It is possible to use α_gain ≠ α_loss by using an exponential utility function (Köbberling and Wakker, 2005).

³ That point is 1/e ≈ 0.37 for δ = 1, which is consistent with some empirical evidence (Gonzalez and Wu, 1999; Camerer and Ho, 1994). The (aggregate) point of intersection is sometimes also found to be closer to 0.5 (Fehr-Duda and Epper, 2012), which is

4. Estimation methods344

Individual risk preferences are captured by obtaining a set of parameters345

that best fit the observed choices implemented via a particular choice model.346

In this section we explain the workings of the simple direct maximization of347

the explained fraction (MXF) of choices, of standard maximum likelihood es-348

timation (MLE) and of hierarchical maximum likelihood estimation (HML).349

4.1. Direct maximization of explained fraction of choices (MXF)350

Arguably the most straightforward way to capture an individual’s pref-351

erences is by finding the set of parameters that maximizes the fraction of352

explained choices made over all the lotteries considered. By explained choices353

we mean the lotteries for which the utility for the chosen option exceeds that354

of the alternative option, given some decision model and parameter value(s).355

The term “explained” does not imply having established some deeper causal356

insights into the decision making process, but is used here simply to refer to357

statistical explanation (e.g., a summarization or reproducibility) of choices358

given tuned parameters and a decision model. The goal of this MXF method359

is to find the set of parametersM = {α, λ, δ, γ} that maximizes the fraction360

of explained choices from an individual DM.361

Mi = arg maxM

1

N

N∑i=1

c(yi|M) (4)

consistent with Karmarkar’s single-parameter weighting function (Karmarkar, 1979). Atwo-parameter extension of that function is provided by Goldstein and Einhorn’s function(Goldstein and Einhorn, 1987), which appears to fit about equally well as both Prelec’s,and Gonzalez and Wu’s (Gonzalez and Wu, 1999).

14

By c(·) we denote the choice itself as in Equation 5, in which u(·) is given by Equation 1. It returns 1 if the utility given the parameters is consistent with the actual choice of the subject and 0 otherwise.

$$c(y_i \mid M) = \begin{cases} 1 & \text{if A is chosen and } u(A) \geq u(B), \text{ or if B is chosen and } u(A) \leq u(B) \\ 0 & \text{otherwise} \end{cases} \tag{5}$$

This is a discrete problem because we use a finite number of binary lotteries. Solution(s) can be found using a grid search (i.e. parameter sweep) approach, combined with a fine-tuning algorithm that uses the best results of the grid search as a starting point and then refines the estimate as needed, improving the precision of the parameter values. Note that this process can be computationally intense for high-resolution estimates since the full combination of four parameters over their full range has to be taken into consideration.
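The grid search over Equations 4 and 5 can be sketched as follows. This is a deliberately reduced illustration, not the authors' procedure: `toy_utility` is a hypothetical one-parameter stand-in for Equation 1 (a single gain with a power value function and no probability weighting), the lotteries and choices are invented, and the fine-tuning stage is omitted.

```python
def explained_fraction(choices, u_a, u_b):
    """Equations 4 and 5: share of choices consistent with the utility ordering."""
    hits = 0
    for y, ua, ub in zip(choices, u_a, u_b):
        if (y == "A" and ua >= ub) or (y == "B" and ua <= ub):
            hits += 1
    return hits / len(choices)

def toy_utility(option, alpha):
    """Hypothetical stand-in for Equation 1: one gain x received with probability p."""
    x, p = option
    return p * x ** alpha

def grid_search(pairs, choices, alphas):
    """Return the best explained fraction and every grid value that attains it."""
    best_fraction, best_alphas = -1.0, []
    for alpha in alphas:
        u_a = [toy_utility(a, alpha) for a, _ in pairs]
        u_b = [toy_utility(b, alpha) for _, b in pairs]
        fraction = explained_fraction(choices, u_a, u_b)
        if fraction > best_fraction:
            best_fraction, best_alphas = fraction, [alpha]
        elif fraction == best_fraction:
            best_alphas.append(alpha)
    return best_fraction, best_alphas

# Invented data: each pair is (option A, option B); the DM picks the safe option B twice.
pairs = [((100, 0.5), (40, 1.0)), ((200, 0.25), (30, 1.0))]
choices = ["B", "B"]
best_fraction, best_alphas = grid_search(pairs, choices, [0.25, 0.5, 0.75, 1.0, 1.25])
```

Because the objective only counts hits, several grid values can attain the same best fraction; the function returns all of them rather than an arbitrary single winner.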

The MXF estimation method has some advantages. Finding the parameters that maximize the explained fraction of choices is a simple metric that is directly related to the actual choices from a decision maker. It is easy to explain and it is also robust to the presence of a few aberrant choices. This quality prevents the estimates from yielding results that are unduly affected by a single decision, measurement error, or overfitting.

An important shortcoming of MXF as an estimation method is that the characteristics of the explained lotteries are not taken into consideration when fitting the choice data. Consider the lottery at the beginning of the paper (Table 1), in which the expected value for option A is higher than that for B, but just barely. Consider a decision maker that we suspect to be generally risk-neutral, for whom the EV-maximizing policy would therefore dictate selecting option A over option B. But for argument’s sake, suppose we observe the DM select option B; how much evidence does this provide against our conjecture of risk neutrality? Hardly any, one can conclude, given the small difference in utility between the options. Now consider the same lottery, but the payoff for A is $483 instead of $83. Picking B over A is now a huge mistake for a risk-neutral decision maker and such an observation would yield stronger evidence that would force one to revise a conjecture about the decision maker’s risk neutrality. MXF as an estimation method does not distinguish between these two instances, as its simplistic right/wrong criterion lacks the nuance to integrate this additional information about options, choices, and the strength of evidence.

Another limitation of MXF is that it results in numerous ties for best-fitting parameters. In other words, many parameter sets result in exactly the same fraction of choices being explained. For several subjects the same fraction of choices is explained using very different parameters. For one subject just over seventy percent of choices were explained by parameters that included 0.6 to 1.25 for the curvature of the utility function, 1.5 to 6 (and possibly beyond) for loss aversion, 0.44 to 1.3 for the elevation of the weighting function and 0.8 to 2.5 for its curvature. We find such ties for various constellations of parameters (albeit somewhat smaller) even for subjects for whom we can explain up to ninety percent of all choices.

Two other estimation methods (MLE and HML) avoid these problems and we turn our attention to them next.

4.2. Maximum Likelihood Estimation (MLE)

A strict application of cumulative prospect theory dictates that even a trivial difference in utility leads the DM to always choose the option with the highest utility. However, even in early experiments with repeated lotteries, Mosteller and Nogee (1951) found that this is not the case with real decision makers. Choices were instead partially stochastic, with the probability of choosing the generally more favored option increasing as the utility difference between the options increased. This idea of random utility maximization has been developed by Luce (1959) and others (e.g. Harless and Camerer, 1994; Hey and Orme, 1994). In this tradition, we use a logistic function that specifies the probability of picking one option, depending on each option’s utility. This formulation is displayed in Equation 6. A logistic function fits the data from Mosteller and Nogee (1951) well, for example, and has been shown generally to perform well with behavioral data (Stott, 2006).

$$p(A \succ B) = \frac{1}{1 + e^{\phi\,(u(B) - u(A))}}, \qquad \phi \geq 0 \tag{6}$$

The parameter ϕ is an index of the sensitivity to differences in utility. Higher values for ϕ diminish the importance of the difference in utility, as is illustrated in Figure 3. It is important to note that this parameter operates on the absolute difference in utility assigned to both options. Two individuals with different risk attitudes but equal sensitivity will almost certainly end up with different ϕ parameters simply because the differences in the options’ utilities are also determined by the risk attitude. While the parameter is useful to help fit an individual’s choice data, one cannot compare the values of ϕ across individuals unless both the lotteries and the individuals’ risk attitudes are identical. The interpretation of the sensitivity parameter is therefore often ambiguous and it should be considered as simply an aid to the estimation method.

Figure 3: This figure shows two example logistic functions (curves for ϕ = 1 and ϕ = 2; x-axis: utility of A, 0 to 20; y-axis: probability of picking A over B, 0 to 1). Consider a lottery for which option B has a utility of 10 while option A’s utility is plotted on the x-axis. Given the utility of B, the probability of picking A over B is plotted on the y-axis. The option with a higher utility is more likely to be selected in general. In the instance when both options’ utilities are equal, the probability of choosing each option is equivalent. The sensitivity of a DM is reflected in parameter ϕ. A perfectly discriminating (and perfectly consistent) DM would have a step function as ϕ → 0; less perfect discrimination on behalf of DMs is captured by larger ϕ values.

In maximum likelihood estimation the goal is to maximize the likelihood of observing the outcome, which consists of the observed choices, given a set of parameters. The likelihood is expressed using the choice function above, yielding a stochastic specification.

We use the notation M = {α, λ, δ, γ, ϕ}, where $y_i$ denotes the choice for the i-th lottery.

$$M_i = \arg\max_{M} \prod_{i=1}^{N} c(y_i \mid M) \tag{7}$$

By c(·) we denote the choice itself, where p(A ≻ B) is given by the logistic choice function in Equation 6.

$$c(y_i \mid M) = \begin{cases} p(A \succ B) & \text{if A is chosen } (y_i \text{ is } 0) \\ 1 - p(A \succ B) & \text{if B is chosen } (y_i \text{ is } 1) \end{cases} \tag{8}$$
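A minimal sketch of Equations 6 to 8 follows, using the negative log of the product in Equation 7. It implements Equation 6 exactly as written, with ϕ multiplying the utility difference; under this literal form a larger ϕ makes the curve steeper, and if the intended form instead divides the utility difference by ϕ, the exponent would change accordingly. Function names and the numeric utilities are illustrative assumptions.

```python
import math

def p_choose_a(u_a, u_b, phi):
    """Equation 6 as written: logistic probability of picking A over B."""
    return 1.0 / (1.0 + math.exp(phi * (u_b - u_a)))

def neg_log_likelihood(choices, u_a, u_b, phi):
    """Negative log of the product in Equation 7; y = 0 means A, y = 1 means B."""
    total = 0.0
    for y, ua, ub in zip(choices, u_a, u_b):
        p_a = p_choose_a(ua, ub, phi)
        p_observed = p_a if y == 0 else 1.0 - p_a  # Equation 8
        total -= math.log(p_observed)
    return total

# Assumed utilities: the DM picks B although A is better, once barely and once clearly.
near_tie = neg_log_likelihood([1], [10.5], [10.0], phi=0.25)
big_miss = neg_log_likelihood([1], [20.0], [10.0], phi=0.25)
```

Unlike a hit count, this objective penalizes the clear mistake (`big_miss`) more heavily than the near-tie (`near_tie`), so the size of the utility difference enters the fit.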

This approach overcomes some of the major shortcomings of other methods discussed above. MLE takes the utility difference between the two options into account and therefore does not only fit the number of choices correctly predicted, but also measures the quality of the lottery and its fit. Ironically, because it is the utility difference that drives the fit (not a count of correct predictions), the resulting best-fitting parameters may explain fewer choices than the MXF method. This is because MLE tolerates several smaller prediction mistakes instead of fewer, but very large, mistakes. The fact that the quality of the fit drives the parameter estimates may be beneficial for predictions, especially when the lotteries have not been carefully chosen and large mistakes have large consequences. Because the resulting fit (likelihood) has a smoother (but still relatively lumpy) surface, finding robust solutions is computationally less intense than with MXF.

On the downside, MLE is not highly robust to aberrant choices. If by confusion or accident the DM chooses an atypical option for a lottery that is greatly out of line with her other choices, the resulting parameters may be disproportionately affected by this single anomalous choice. While MLE does take into account the quality of the fit with respect to utility discrepancies, the fitting procedure has the simple goal of finding the parameter set that generates the best overall fit (even if the differences in fit among parameter sets are trivial). This approach ignores the fact that there may be different parameter sets that fit choices virtually the same. Consider subject A in Figure 8, which shows the resulting distribution of parameter estimates when we change one single choice and reestimate the MLE parameters. Especially for loss aversion and the elevation of the probability weighting function we find that a single choice can make the difference between very different parameter values.

The MLE method solves the tie-breaking problem of MXF, but does so by ascribing a great deal of importance to potentially trivial differences in fit quality. Radically different combinations of parameters may be identified by the procedure as nearly equivalently good, and the winning parameter set may differ from the other “almost-as-good” sets by only the slightest of margins in fit quality. This process of selecting the winning parameter set from among the space of plausible combinations ignores how realistic or reflective the sets may be of what the DM’s actual preferences are. Moreover, the ill-behaved structure of the parameter space makes it a challenge to find reliable results, as different solutions can be nearly identical in fit quality but be located in radically different regimes of the parameter space. A minor change in the lotteries, or just one different choice, may bounce the resulting parameter estimates substantially. Furthermore, the MLE procedure does not consider the psychological interpretation of the parameters and therefore ignores the fact that it may select a parameter set that attributes preferences that are out of the ordinary with only very little evidence to distinguish it from more typical preferences.

4.3. Hierarchical Maximum Likelihood Estimation (HML)

The HML estimation procedure developed here is based on Farrell and Ludwig (2008). It is a two-step procedure that is explained below.

Step 1. In the first step the likelihood of occurrence for each of the four model parameters in the population as a whole is estimated. These likelihoods of occurrence are captured using probability density distributions and reflect how likely (or conversely, out of the ordinary) a particular parameter value is in the population given everyone’s choices. These density distributions are found by solving the integral in Equation 9. For each of the four cumulative prospect theory parameters we use a lognormal density distribution, as this distribution has only positive values, is positively skewed, has only two distribution parameters, and is not too computationally intense. Because the sensitivity parameter ϕ is not independent of the other parameters, and because it has no clear psychological interpretation, we do not estimate a distribution of occurrence for sensitivity, but instead take the value we find for the aggregate data with classical maximum likelihood estimation for this step.⁴ We use the notation M = {α, λ, δ, γ, ϕ}, $P_\alpha = \{\mu_\alpha, \sigma_\alpha\}$, $P_\lambda = \{\mu_\lambda, \sigma_\lambda\}$, $P_\delta = \{\mu_\delta, \sigma_\delta\}$, $P_\gamma = \{\mu_\gamma, \sigma_\gamma\}$, $P = \{P_\alpha, P_\lambda, P_\delta, P_\gamma\}$; S and N denote the number of participants and the number of lotteries respectively.

$$P = \arg\max_{P} \prod_{s=1}^{S} \iiiint \left[ \prod_{i=1}^{N} c(y_{s,i} \mid M) \right] \ln(\alpha \mid P_\alpha)\, \ln(\lambda \mid P_\lambda)\, \ln(\delta \mid P_\delta)\, \ln(\gamma \mid P_\gamma)\; d\alpha\, d\lambda\, d\delta\, d\gamma \tag{9}$$

⁴ It may be that a different approach with regard to the sensitivity parameter leads to better performance. We cannot judge this without data of a third re-test, as it requires estimation in a first session, calibration of the method using a second session, and true out-of-sample testing of this calibration in a third session. This may be interesting but is beyond the scope of the current paper.

Figure 4: The two steps of the hierarchical maximum likelihood estimation procedure (panels: Step 1 and Step 2; x-axis: parameter value, 0 to 3; y-axis: density, 0 to 4; curves for α (curvature), λ (loss aversion), δ (elevation) and γ (shape)). In the first step we fit the data to four probability density distributions. In the second step we obtain the actual individual estimates. A priori and in the absence of individual choice data the small square has the highest likelihood, which is at the modal value of each parameter distribution. The individual choices are balanced against the likelihood of the occurrence of a parameter to obtain individual parameter estimates, depicted by big squares.

This first step can be explained using a simplified example which is a discrete version of Equation 9. For each of the four risk preference parameters let us pick a set of values that covers a sufficiently large interval, for example {0.5, 1, ..., 5}. We then take all parameter combinations (Cartesian product) of these four sets, i.e. α = 0.5, λ = 0.5, δ = 0.5, γ = 0.5, then α = 0.5, λ = 0.5, δ = 0.5, γ = 1.0, and so forth. Each of these combinations has a certain likelihood of explaining a subject’s observed choices, displayed within the square brackets in Equation 9. However, not all of the parameter values in these combinations are equally supported by the data. It could for example be that the combination shown above that contains γ = 0.5 is 10 times more likely to explain the observed choices than the one that contains γ = 1.0. How likely each parameter value is in relation to another value is precisely what we intend to measure. The fact that one set of parameters is associated with a higher likelihood of explaining observed choices (and therefore is more strongly supported by the data) can be reflected by changing the four lognormal density functions for each of the model parameters. Because we multiply how likely a set of values explains the observed choices by the weight put on this set of parameters through the density functions, Equation 9 achieves this. The density functions, and the product thereof, denoted by ln(·) within the integrals, are therefore ways to measure the relative weight of certain parameter values. The maximization is done over all participants simultaneously, under the condition that each weighting function is a density distribution. Applying this step to our data leads to the density distributions displayed in Figure 4.
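The discrete version of Equation 9 described above can be sketched for a single parameter. Everything specific here is an assumption made for illustration: the per-subject choice likelihoods are invented smooth curves rather than values computed from lottery data, only one parameter is used instead of four, and two candidate population distributions are compared directly instead of running an optimizer.

```python
import math

def lognormal_pdf(x, mu, sigma):
    """Lognormal density with log-scale location mu and log-scale spread sigma."""
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))

def marginal_likelihood(likelihoods, grid, mu, sigma):
    """Discrete stand-in for one subject's integral in Equation 9 (one parameter)."""
    step = grid[1] - grid[0]  # assumes an evenly spaced grid
    return sum(lik * lognormal_pdf(x, mu, sigma) * step
               for lik, x in zip(likelihoods, grid))

def population_log_objective(all_likelihoods, grid, mu, sigma):
    """Log of the product over subjects, computed as a sum of logs."""
    return sum(math.log(marginal_likelihood(liks, grid, mu, sigma))
               for liks in all_likelihoods)

grid = [0.5 * k for k in range(1, 11)]  # the set {0.5, 1, ..., 5}
# Invented choice likelihoods for two subjects, peaking near 0.5 and 1.0.
subject_1 = [math.exp(-(x - 0.5) ** 2) for x in grid]
subject_2 = [math.exp(-(x - 1.0) ** 2) for x in grid]
subjects = [subject_1, subject_2]

near = population_log_objective(subjects, grid, math.log(0.75), 0.5)
far = population_log_objective(subjects, grid, math.log(3.0), 0.5)
```

A population density centered near the values that the subjects' choices support (`near`) scores higher than one centered far away (`far`); maximizing this objective over the distribution parameters is what selects the population densities.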

Step 2. In this step we estimate individual parameters as in standard maximum likelihood estimation, but now weigh parameters by the likelihood of the occurrence of these parameter values as given by the density functions obtained in step 1. Those distributions and both steps are illustrated in Figure 4. This second step of the procedure is described in Equation 10. With $M_i$ we denote M as above for subject i. The resulting parameters are not only driven by individual decision data, but also by how likely it is that such a parameter combination occurs in the population.

$$M_i = \arg\max_{M_i} \left[ \prod_{i=1}^{N} c(y_i \mid M_i) \right] \ln(\alpha \mid P_\alpha)\, \ln(\lambda \mid P_\lambda)\, \ln(\delta \mid P_\delta)\, \ln(\gamma \mid P_\gamma) \tag{10}$$

This HML procedure is motivated by the principle that extreme conclusions require extreme evidence. In the first step of the HML procedure, one simultaneously extracts density distributions of all parameter values using all choice data from the population of DMs. In the second step, the likelihood of a particular parameter value is determined by how well it fits the observed choices from an individual, and at the same time, it is weighted by the likelihood of observing that particular parameter value in the population distribution (defined by the density function obtained in step 1). The parameter set with the most likely combination of both of those elements is selected. The weaker the evidence is, the more parameter estimates are “pulled” to the center of the density distributions. HML therefore counters maximum likelihood estimation’s tendency to fit parameters to extreme choices by requiring stronger evidence to establish extreme parameter estimates. Thus, if a DM makes very consistent choices, the population parameter densities will have very little effect on the estimates. Conversely, if a DM has greater inconsistency in his choices, the population parameters will have more pull on the individual estimates. In the extreme, if a DM has wildly inconsistent choices, the best parameter estimates for the DM will simply be those for the population average.
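The pull toward the population distribution can be illustrated with a one-parameter sketch of the second step. Taking the negative log of Equation 10 turns the product into the individual negative log-likelihood minus the log density of the parameter value. The population parameters (`MU`, `SIGMA`) and the likelihood values below are invented for illustration and are not the estimates reported in the paper.

```python
import math

def lognormal_logpdf(x, mu, sigma):
    """Log of the lognormal density used to weight a parameter value."""
    z = (math.log(x) - mu) / sigma
    return -0.5 * z * z - math.log(x * sigma * math.sqrt(2.0 * math.pi))

def hml_objective(neg_log_lik, value, mu, sigma):
    """Negative log of Equation 10 for a single parameter (lower is better)."""
    return neg_log_lik - lognormal_logpdf(value, mu, sigma)

def best_on_grid(nll_by_value, mu, sigma):
    """Pick the candidate value with the lowest hierarchical objective."""
    return min(nll_by_value,
               key=lambda v: hml_objective(nll_by_value[v], v, mu, sigma))

MU, SIGMA = 0.2, 0.4  # hypothetical population distribution for loss aversion

# Case 1: the individual's choices cannot distinguish the candidates (a tie).
tied = {1.5: 10.0, 2.0: 10.0, 3.0: 10.0, 4.0: 10.0, 6.0: 10.0}
# Case 2: the individual's choices strongly favor an unusual value.
strong = {1.5: 10.0, 2.0: 10.0, 3.0: 10.0, 4.0: 2.0, 6.0: 10.0}

tie_break = best_on_grid(tied, MU, SIGMA)
evidence = best_on_grid(strong, MU, SIGMA)
```

In the tied case the population density alone decides and the most typical candidate is selected; in the second case the individual evidence is strong enough to outweigh the low population density of the unusual value.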

This is illustrated in Figure 5, which compares the estimates for four representative participants by MLE (points) and HML (squares). Subject 1 is the same subject as in Figure 8. We find that HML estimates are different from MLE, but are generally still within the 95% confidence intervals. This is the statistical tie-breaking mechanism we referred to in the previous section. It is also possible that the differences in estimates are large and fall outside the univariate MLE confidence intervals (displayed by lines), such as for subject 2. For the other two participants HML’s estimates are virtually identical to MLE’s, irrespective of the confidence interval. This is largely driven by how consistent a DM’s choices supporting particular parameter values are.

Figure 5: Parameter estimates for four participants, with each row a subject (1 to 4) and each column a parameter (α, λ, δ, γ). The lines are approximate univariate 95% confidence intervals for MLE for each of the four model parameters (sensitivity not included). The round markers indicate the best estimate from the maximum likelihood estimation procedure. The square marker indicates its hierarchical counterpart. The first subject is subject A in Figure 8. For participants one and two, we observe large differences in parameter estimates, partially explained by the large confidence intervals. For participants three and four we find that the results are virtually identical.

The estimation method has been implemented in both MATLAB and C. Parameter estimates in the second step were found using the simplex algorithm by Nelder and Mead (1965), initialized with multiple starting points to avoid local minima. Negative log-likelihood was used for computational stability when possible. For the first step of the hierarchical method one cannot use negative log-likelihood for the whole equation due to the presence of the integral, so negative log-likelihood was only used for the multiplication across participants. Solving the volume integral is computationally intense because of the high number of dimensions and the low probability values. Quadrature methods were found to be prohibitively slow. Because the integral-maximizing parameters are what is of central importance, and not the precise value of the integral, we used Monte Carlo integration with 2,500 (uniform random) values for each dimension as a proxy. We repeated this twenty times and used the average of these estimates as the final distribution parameters. A possible benefit of HML is that the step 1 distribution appears to be fairly stable. With a sufficiently diverse population it may therefore be possible to simply take the distributions found in previous studies, such as this one. The parameter values can be found in the appendix.
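Monte Carlo integration with uniform random draws can be sketched as follows. This is a generic illustration on a two-dimensional test integrand with a known answer, not the authors' MATLAB or C code; the function name, the integrand, and the reading of "2,500 values" as 2,500 sample points per run are assumptions. The twenty repetitions mirror the averaging described above.

```python
import random

def mc_integrate(f, bounds, n_samples, rng):
    """Estimate the integral of f over a box using uniform random samples."""
    volume = 1.0
    for lo, hi in bounds:
        volume *= hi - lo
    total = 0.0
    for _ in range(n_samples):
        point = [rng.uniform(lo, hi) for lo, hi in bounds]
        total += f(*point)
    # Mean function value times the volume of the integration box.
    return volume * total / n_samples

rng = random.Random(0)  # fixed seed so the sketch is reproducible
estimates = [mc_integrate(lambda x, y: x * y, [(0.0, 1.0), (0.0, 1.0)], 2500, rng)
             for _ in range(20)]
average = sum(estimates) / len(estimates)  # exact value of the test integral is 0.25
```

Averaging repeated runs reduces the sampling noise of any single run, which matters when the quantity of interest is the location of the maximum rather than the exact value of the integral.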

5. Results

5.1. Aggregate results

The aggregate data can be analyzed to establish stylized facts and general behavioral tendencies. In this analysis, all 12,922 choices (142 participants × 91 items) are modeled as if they were made by a single DM and one set of risk preference parameters is estimated. This preference set is estimated using each of the three different estimation methods discussed before (MXF, MLE, HML). For MXF, four parameters are estimated that maximize the fraction of explained choices; for the other two methods, five parameters (the fifth being the sensitivity parameter ϕ) are estimated.

The results of the three estimation procedures, including confidence intervals, can be found in Table 3. On the aggregate, we observe behavior consistent with a curvature parameter implying diminishing sensitivity to marginal returns; α values less than 1 are estimated for all of the methods, namely 0.59 for MXF and 0.76 for both MLE and HML. Choice behavior is also consistent with some loss aversion (λ > 1), which reflects the notion that “losses loom larger than gains” (Kahneman and Tversky, 1979). The highest loss-aversion value we find for any of the estimation methods at time 1 is 1.12, which is much lower than the value of 2.25 reported in the seminal cumulative prospect theory paper (Tversky and Kahneman, 1992). Part of the explanation for a lower value (indicating less loss aversion) might be that it is verboten to take money from participants in the laboratory, and thus “losses” were bounded to only being reductions in participants’ show-up payment. The probability weighting function has the characteristic inverse S-shape that intersects the diagonal at around p = 0.44 for MLE and HML (and stays above it for MXF). Similar values for all of the estimation methods are found in the second experimental session. The largest differences between the two experimental test-retest sessions are seen in the probability weighting parameters.

| Session | Method | α | λ | δ | γ | ϕ |
|---|---|---|---|---|---|---|
| Time 1 | MXF | 0.59 | 1.11 | 0.71 | 0.90 | - |
| Time 1 | MLE | 0.76 (±0.03) | 1.12 (±0.04) | 0.93 (±0.03) | 0.63 (±0.02) | 0.25 (±0.04) |
| Time 1 | HML | 0.76 (±0.02) | 1.12 (±0.03) | 0.93 (±0.02) | 0.63 (±0.02) | 0.26 (±0.03) |
| Time 2 | MXF | 0.58 | 1.11 | 0.71 | 0.86 | - |
| Time 2 | MLE | 0.78 (±0.03) | 1.18 (±0.04) | 0.91 (±0.03) | 0.65 (±0.02) | 0.23 (±0.03) |
| Time 2 | HML | 0.78 (±0.02) | 1.18 (±0.03) | 0.91 (±0.02) | 0.65 (±0.02) | 0.23 (±0.03) |

Table 3: Median parameter values for the aggregate data found using all three estimation methods. Approximate univariate 95% confidence intervals (in parentheses) were constructed using log-likelihood ratio statistics for the MLE and HML methods.

With the choice function and the obtained value of ϕ we can convert the parameter estimates of the first experimental session for MLE (and HML, since its aggregate estimates are virtually identical) into a prediction of the probability of picking option B over option A for each lottery in the second experimental session. This is then compared to the fraction of subjects picking option B over option A. On the aggregate, cumulative prospect theory’s predictions appear to perform well. The correlation between the predicted and observed fractions is 0.93.

5.2. Individual risk preference estimates

When benchmarking the explained fraction and fit of individual parameters against their estimated aggregate median counterparts, we find that individual parameters provide clear benefits, especially in terms of the fit. Having established that individual parameters have added value, a more direct comparison between MXF, MLE and HML is warranted. Particularly for HML it is important to determine to what degree its parameter estimates sacrifice in-sample performance, which is the performance of the individual parameters of the first session within that same session. Individual risk preference parameters were estimated for each subject using all three estimation methods. Fits are expressed in negative log-likelihood (lower is better) and are directly comparable because the hierarchical component is excluded in the evaluation of the likelihood value. The mean and standard deviation of the subjects’ fits are displayed in Table 4. As is to be expected, the negative log-likelihood is lowest for MLE because that method attempts to find the set of parameters with the best possible fit. HML is not far behind, however. No likelihood is given for MXF, as that method does not use the sensitivity parameter. We also determined the explained fraction of choices for each individual. The means and standard deviations are also printed in Table 4. At 0.82, the explained fraction of choices is highest for MXF because its goal function is to maximize that fraction. MLE and HML follow at about 0.76. Thus, the percentage of choices at time 1 that can be explained by MLE estimates but not by HML estimates is small to naught. For some participants the HML estimates explain a larger fraction of choices at time 1. While this seems counterintuitive, it is the fit (likelihood) that eventually drives the MLE parameter estimates and not the fraction of explained choices. For most participants the HML estimates are different from the MLE estimates, but the differences are inconsequential for the percentage of explained choices.

Figure 6: The predicted fraction of choices at time 2 when using MLE parameters from time 1 (x-axis: fraction explained by MLE, 0.4 to 1) and HML parameters from time 1 (y-axis: fraction explained by HML, 0.4 to 1). Each point represents one DM. MLE performs better for a subject whose point appears in the lower right region, HML performs better when it appears in the upper left region.

Figure 7: The goodness of fit at time 2 when using MLE parameters from time 1 (x-axis: negative log-likelihood of result with MLE, 20 to 200) and HML parameters from time 1 (y-axis: negative log-likelihood of result with HML, 20 to 200). Each point represents one DM. Because a lower negative log-likelihood is better, HML performs better for a DM whose point appears in the lower right region, and MLE performs better when it appears in the upper left region.

| Measure | Method | Explained (Time 1) | Predicted (Time 2) |
|---|---|---|---|
| Fraction | MXF | 0.82 (0.07) | 0.74 (0.10) |
| Fraction | MLE | 0.76 (0.09) | 0.73 (0.09) |
| Fraction | HML | 0.76 (0.08) | 0.73 (0.10) |
| Fit | MXF | - | - |
| Fit | MLE | 83.73 (21.15) | 103.24 (26.66) |
| Fit | HML | 86.37 (21.81) | 98.97 (23.66) |

Table 4: Mean and standard deviation (in parentheses) for the three estimation methods. Explained refers to the time 1 statistics given that time 1 estimates are used; predicted refers to time 2 statistics using parameters from time 1. Fit is the negative log-likelihood (lower is better). The explained fraction of choices is about the same, while the fit at time 2 is better for HML.

So far we have established that the HML estimates do not reduce the explained fraction of choices, even though HML has a somewhat lower within-sample fit; the next question is whether HML is worth the effort in terms of out-of-sample prediction. The estimates of individual parameters from the first session were used to predict choices in the second session. In Table 4 we have listed simple statistics for the fit (again made comparable by not including the hierarchical component) and the explained fractions of all three estimation methods for the second session. All three methods explain approximately the same fraction of choices. The large advantage MXF had over the other two methods has disappeared. It is interesting to compare this with the prediction that participants make the same choices in both sessions. That method correctly predicts .71 on average, but its application is of course limited to situations in which the lotteries are identical. Figure 6 shows the fraction of correctly predicted lotteries and Figure 7 compares the fit for MLE and HML parameters. Judging by the fraction of lotteries explained, it is not immediately obvious which method is better. A more sensitive measure for comparing the three methods is the goodness-of-fit. When comparing HML to MLE, we find that HML’s out-of-sample fit is better on average and its negative log likelihood is lower for 100 out of 142 participants. Using a paired signed-rank test for medians we find that the negative log likelihood for HML is significantly lower than for MLE (p < 0.001). The results are much clearer when examining the fit in the figure, which shows many points on the diagonal and a large number below it (a lower negative log likelihood is better). We can conclude that HML sacrificed part of the fit at time 1 for an improved fit at time 2.
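As a rough plausibility check on the count reported above (HML has the lower negative log likelihood for 100 of 142 participants), the following sketch computes an exact two-sided sign test. This is a simpler test than the paired signed-rank test used in the analysis, and it assumes that none of the 142 comparisons is a tie, which the text does not state. The function name `sign_test_p` is purely illustrative.

```python
from math import comb

def sign_test_p(wins: int, n: int) -> float:
    """Exact two-sided sign test p-value under H0: P(win) = 0.5."""
    k = max(wins, n - wins)
    # Upper-tail probability P(X >= k) for X ~ Binomial(n, 0.5)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Count taken from the text; the no-ties assumption is ours.
p = sign_test_p(100, 142)
print(f"two-sided sign test p-value: {p:.2e}")
```

Under these assumptions the sign test points in the same direction as the reported result, although it ignores the magnitude of the differences that the signed-rank test takes into account.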


Examination of the differences in parameter estimates for those participants for which HML outperforms MLE reveals higher MLE mean parameter estimates for the individual probability weighting function parameters. To verify that HML did not work merely because MLE returned extremely high parameter estimates for the weighting function, we applied the simple solution of imposing (somewhat arbitrarily chosen) bounds on the MLE estimation method (0.2 ≤ α ≤ 2, 0.2 ≤ λ ≤ 5, 0.2 ≤ δ ≤ 2, 0.2 ≤ γ ≤ 1). This did not change the results, as HML still outperformed MLE. In many instances HML is also an improvement over MLE for seemingly plausible MLE estimates, so HML’s effect is not limited to outliers or extreme parameter values. Interestingly, some of the cases in which MLE outperforms HML are those in which low extreme values emerge that are consistent with choices in the second experimental session, such as loss seeking (less weight on losses) or weighting functions that are nearly binary step functions. This lack of sensitivity to genuinely extreme preferences highlights one of the potential downsides of HML.

5.3. Parameter reliability

A key issue is the reliability of the risk parameter estimates. This viewpoint is consistent with treating risk preferences as a trait, a stable characteristic of an individual decision maker, and amenable to psychological interpretation of the parameters. Reliability can be assessed by applying all three estimation procedures independently to the data from each of the two experimental sessions. Large changes in parameter values are a problem and an indication that we are mostly measuring/modeling noise instead of real individual risk preferences. At the individual level we can examine the test-retest correlations of the parameters from the three estimation methods, displayed in Table 5. The parameter correlations for MXF indicate some reliability with correlations of approximately 0.40, except for δ, for which the correlation approaches zero. MLE provides more reliable estimates for δ than MXF, but performs worse overall. The test-retest reliability for MLE can be improved for three out of four parameters by applying admittedly arbitrary bounds. The highest test-retest correlations are obtained using HML, which yields the most reliable estimates for α, δ and γ and is within 0.01 of bounded MLE for λ (0.53 versus 0.54).
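To make the reliability measure concrete, the sketch below computes a test-retest correlation for one parameter across two sessions. It assumes the reported r values are Pearson correlations, which the text does not state explicitly, and the γ values are invented for illustration rather than taken from the study.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical gamma estimates for five participants (not data from the paper)
gamma_session1 = [0.45, 0.62, 0.71, 0.55, 0.80]
gamma_session2 = [0.50, 0.58, 0.75, 0.49, 0.77]
print(round(pearson_r(gamma_session1, gamma_session2), 2))
```

The same function would be applied separately to each parameter and each estimation method to fill a table of test-retest correlations.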

| Method | r_α | r_λ | r_δ | r_γ |
|---|---|---|---|---|
| MXF | 0.39 | 0.40 | 0.05 | 0.39 |
| MLE | 0.23 | 0.36 | 0.19 | 0.06 |
| MLE (bounded) | 0.28 | 0.54 | 0.10 | 0.45 |
| HML | 0.43 | 0.53 | 0.44 | 0.66 |

Table 5: Test-retest correlation coefficients (r values) for parameter values obtained using the listed estimation procedure for both sessions independently. The use of parameter bounds (0.2 ≤ α ≤ 2, 0.2 ≤ λ ≤ 5, 0.2 ≤ δ ≤ 2, 0.2 ≤ γ ≤ 1), indicated by the bounded condition, improves the test-retest correlation for MLE for three of the four parameters. The highest test-retest correlations are obtained with HML, except for λ, where bounded MLE is marginally higher.

Another form of parameter reliability is the change in parameter estimates resulting from small changes in choice input. One way of establishing this form of reliability is by temporarily changing the choice on one of a subject’s lotteries, after which the parameters are reestimated. This is repeated for each of the 91 lotteries separately. MXF and HML’s estimates are robust, whereas MLE’s estimates are less so. In Figure 8 the results of reestimation after perturbing one choice are plotted for two subjects using both MLE and HML. A single different choice can yield large changes in MLE parameter estimates, and this contributes to unreliable parameter estimates. This is generally an undesirable property of a measurement method, as minor inattention, confusion, or a mistake can lead to very different conclusions about that person’s risk preferences. The high robustness of HML estimates is a general finding among subjects in our sample. Moreover, the fragility of the estimates quickly worsens as the number of aberrant choices increases, even by only a few.
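The single-choice perturbation procedure described above can be sketched as follows. The sketch assumes choices are coded as 0/1 and takes the estimator as an argument; `toy_estimate` is a deliberately trivial stand-in and not the cumulative prospect theory model, so only the looping logic should be read as illustrative of the procedure.

```python
def perturbation_estimates(choices, estimate):
    """Re-estimate after flipping each binary choice in turn.

    choices:  list of 0/1 choices, one per lottery
    estimate: callable mapping a list of choices to a parameter estimate
    """
    results = []
    for i in range(len(choices)):
        perturbed = list(choices)          # copy, so the original is untouched
        perturbed[i] = 1 - perturbed[i]    # flip exactly one choice
        results.append(estimate(perturbed))
    return results

# Toy stand-in estimator (NOT the CPT model): share of option-1 choices
def toy_estimate(choices):
    return sum(choices) / len(choices)

choices = [1, 0, 1, 1, 0, 1, 0, 1]
baseline = toy_estimate(choices)
spread = [abs(e - baseline) for e in perturbation_estimates(choices, toy_estimate)]
print(max(spread))  # largest shift in the estimate caused by one flipped choice
```

With a real estimator in place of the toy one, the list of re-estimates for each parameter is what would be summarized per subject, and a wide or bimodal spread would indicate sensitivity to a single aberrant choice.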


[Figure 8 image omitted: four rows of panels (Subject A − MLE, Subject A − HML, Subject B − MLE, Subject B − HML), each with one panel per parameter (α, λ, δ, γ); vertical axis: number of results.]

Figure 8: The parameter outcomes of MLE parameter estimates for two sample subjects if we change a single choice for each of the 91 items. The black line corresponds to the best MLE estimate of the original choices. The bimodal pattern we observe for loss aversion and the weighting function for subject A is not a desirable feature. A momentary lapse of attention or a minor mistake may result in very different parameter combinations. HML produces more reliable estimates in terms of being less sensitive to one aberrant choice compared to MLE.


6. Discussion

In this paper we illustrated the merits of a hierarchical maximum likelihood (HML) procedure for estimating individual risk parameters. The HML estimates did reduce (albeit trivially) the in-sample performance, while at the same time the out-of-sample fit was significantly better than that of MLE and its explained fraction was as high as that of any of the other methods. The test-retest reliability of the parameter estimates was furthermore higher for HML than for any other method. We conclude that HML offers advantages over the maximization of the explained fraction and maximum likelihood estimation and is the preferred estimation method for this choice model. The use of population information in establishing individual estimates can improve both the out-of-sample fit and the reliability of the parameter estimates.

Some caveats are in order, however. The benefits of hierarchical modeling may, for example, disappear when more choice data are available. In cases where many choices have been observed, the signal can more easily be teased apart from the noise and hierarchical methods may become unwarranted. The benefits may also depend on the set of lotteries used. For lotteries specifically designed for the tested model, which allow only a narrow range of potential parameter values, the choices alone may be sufficient to reliably estimate individual risk preference parameters and hence the MXF estimates may be more reliable than in our current results. As researchers, however, we do not always control the set of lottery items, especially outside of the laboratory. Furthermore, cumulative prospect theory and the parameterization of it we have chosen here is but one model. The possibility exists that other models fit the choice data better and that the resulting parameter estimates are highly robust over time; in this case hierarchical parameter estimation may add no value.

In this paper we have not discussed alternative hierarchical estimation methods. Bayesian models have gained attention in the literature recently (Zyphur and Oswald, 2013). The frequentist model is used here because it requires the addition of only one term in the likelihood function, given that the hierarchical distribution is known. Our data indicate that this distribution appears to be fairly stable in a population. Nevertheless, a Bayesian hierarchical estimation method may outperform the frequentist method outlined here. Additionally, the hierarchical method we have applied here is of a relatively simple nature. It is possible to modify the extent to which the hierarchical method weights the individual risk preference estimates and there may be more sophisticated ways to do so. One way is to fit a parameter that moderates the weight of the hierarchical component by maximizing the fit in the retest. Determining the advantages of such a method requires an experiment with three sessions: one to obtain parameter estimates, another to calibrate the parameters and a third to verify that it outperforms the other methods. This may be of both theoretical and practical interest, but it is beyond the scope of this paper.
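One plausible way to write down the weighted variant suggested above is shown here. The notation is an assumption made for illustration and is not taken from the paper's own equations: $c_{ij}$ denotes participant $i$'s choice on lottery $j$, $\theta = (\alpha, \lambda, \delta, \gamma)$ the parameter vector, $g$ the population-level density of the parameters, and $w \ge 0$ the hypothetical moderating weight.

```latex
\hat{\theta}_i(w) \;=\; \arg\max_{\theta}
  \left[ \sum_{j=1}^{91} \log P\!\left(c_{ij} \mid \theta\right)
         \;+\; w \,\log g(\theta) \right]
```

In this reading, $w = 0$ drops the hierarchical term and reduces to ordinary maximum likelihood, $w = 1$ corresponds to adding the single hierarchical term unweighted, and intermediate or larger values of $w$ would be calibrated on a separate session as described.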

One major take-away point from the current paper is that the estimation method can greatly affect the inferences one makes about individual risk preferences, particularly in multi-parameter choice models. A number of different parameter combinations may produce virtually the same fit result. When the interpretation of individual parameter values is used to make predictions about what an individual is like (e.g., psychological traits) or what she will do in the future (out-of-sample prediction), it is important to realize that other constellations of parameters are virtually equally plausible in terms of fit but may lead to vastly different conclusions about individual differences and predictions about behavior. Moreover, using more sophisticated estimation methods can help us extract more “signal” from a set of noisy choices and thus support better measurement of innate risk preferences.

7. Online implementation

Implementing the measurement and estimation methods delineated in this