HAWK Helicopter Aircraft Wielding Kinect Kevin Conley (EE ’12), Paul Gurniak (EE ’12), Matthew...

- Slide 1

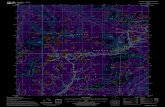

HAWK Helicopter Aircraft Wielding Kinect

Kevin Conley (EE ’12), Paul Gurniak (EE ’12), Matthew Hale (EE ’12), Theodore Zhang (EE ’12)
Team 6, 2012
Advisor: Dr. Rahul Mangharam (ESE)
Department of Electrical and Systems Engineering
http://www.airhacks.org
http://mlab.seas.upenn.edu/

The HAWK System

Motivation: The present state of search and rescue (SAR) requires rescue personnel to divide their attention between maintaining their own safety and attempting to rescue any victims that they find. To help mitigate this risk, we present a quadrotor system capable of mapping an unknown indoor environment for SAR personnel. SAR personnel can use the generated map to plan rescue efforts while minimizing risks to their personal safety. A quadrotor is ideal for this problem because of its excellent mobility in an unknown enclosed environment when compared to other types of robots.

The current state of the art for 3-D mapping technology uses Light Detection and Ranging (LIDAR) systems, which cost upwards of $2000. This project instead uses the Microsoft Kinect, a $150 depth camera, as a cost-effective replacement, allowing us to provide a more affordable system.

[Figure: system block diagram. Blocks: Quadrotor Helicopter, Base Station, RC Receiver, Intel Atom Computer, Microsoft Kinect, ArduPilot Flight Controller, Inertial Measurement Unit, Ping Sensors, Motor Controllers, Motors, Laptop Computer, RC Controller, 802.11n Wi-Fi Router. Links: 2.4 GHz RC, 802.11n Wi-Fi.]

We have successfully demonstrated the efficacy of using a quadrotor helicopter to wirelessly transmit camera data from a Kinect to a remote base station for 3D image processing. The two images below show the output visualization produced by the base station software. Point clouds from separate viewpoints are combined using the motion estimate produced by SLAM, and the resulting map is then rendered using OpenGL. All results are generated and displayed in real time.
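The map-building step described above (combining point clouds from separate viewpoints using a motion estimate) can be illustrated with a minimal Python sketch. It assumes the motion estimate for a view is available as a 3x3 rotation matrix and a translation vector. The function names `transform_cloud` and `yaw_rotation`, the sample points, and the example motion are hypothetical and are not taken from the HAWK software.

```python
from math import cos, sin, radians

def yaw_rotation(deg):
    """Rotation matrix for a turn of `deg` degrees about the vertical (z) axis."""
    a = radians(deg)
    return [[cos(a), -sin(a), 0.0],
            [sin(a),  cos(a), 0.0],
            [0.0,     0.0,    1.0]]

def transform_cloud(points, rotation, translation):
    """Move (x, y, z, color) points from a camera view into the shared map frame."""
    moved = []
    for x, y, z, color in points:
        p = (x, y, z)
        # Apply p' = R * p + t, one output coordinate per matrix row.
        q = tuple(
            sum(rotation[r][c] * p[c] for c in range(3)) + translation[r]
            for r in range(3)
        )
        moved.append((q[0], q[1], q[2], color))
    return moved

# Two one-point clouds standing in for full Kinect frames (made-up data).
cloud_a = [(1.0, 0.0, 0.0, (255, 0, 0))]   # first view, taken as the map frame
cloud_b = [(1.0, 0.0, 0.0, (0, 255, 0))]   # second view, in its own camera frame

# Hypothetical motion estimate for the second view:
# the camera turned 90 degrees and moved 2 units along x.
R = yaw_rotation(90.0)
t = (2.0, 0.0, 0.0)

# The combined map is the first cloud plus the second cloud moved into its frame.
world_map = cloud_a + transform_cloud(cloud_b, R, t)
```

In a full system the rotation and translation would come from the feature-matching stage rather than being hard-coded, and the merged list would be handed to the renderer.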
Conclusions: Based on the 3D maps we have created using HAWK, we have concluded that the system is effective at producing accurate, detailed maps in certain small environments such as cluttered rooms and tight corridors. However, the environments in which the Kinect is successful do not sufficiently cover the areas of interest to search and rescue (SAR) personnel. Our conclusion is that the Kinect has considerable limitations in terms of resolution and range, both of which make mapping hallways or large rooms impossible.

The Microsoft Kinect on the quadrotor uses stereo cameras and an infrared (IR) projector to provide live color video and depth image feeds to an onboard Intel Atom processor. These images are compressed and transmitted via Wi-Fi (802.11n) back to the base station in real time. The color camera feed is shown to the operator at the base station, who uses it to remotely pilot the quadrotor. The onboard flight controls assist with remote operation. The throughput achieved is above 6 frames per second at line-of-sight ranges of at least 60 meters (the length of the Towne Hall first floor hallway). This is sufficient for a human operator to remotely pilot the system.

Creating a 3D Map using the Kinect

[Figure: data flow. Labels: Color (RGB) Image, Depth Image, Intel Atom (Quadrotor), 802.11n Wi-Fi, Laptop PC (Base Station).]

Features, which are landmarks like edges or areas of high contrast, are extracted from each color image. This task is accelerated by an implementation of GPU SURF (Speeded Up Robust Features) that uses NVIDIA's CUDA to parallelize the procedure. The red circles in the image above are the locations of extracted SURF features. GPU SURF is capable of extracting 500 to 700 features per image without placing a heavy load on CPU resources. CPU implementations of SURF (OpenCV) are limited to around 3 frames per second while extracting a comparable number of features, which would limit the system throughput.
The base station overlays the color and depth images from the Kinect to produce a point cloud. This is a collection of (x, y, z) coordinates and associated color values that provides a three-dimensional visualization of the quadrotor's viewpoint.

Features across different images are stored and matched in logarithmic time using a k-d tree. The three-dimensional location of each match is determined using the point cloud.

Matches are compared to determine how the quadrotor has moved between successive images. Making an accurate motion estimate requires the elimination of erroneous matches using RANSAC (RANdom SAmple Consensus). Matches are classified into those that fit the movement model (inliers, shown in green) and those that do not (outliers, shown in red). Using this movement estimate, point clouds from different views are combined to build a larger three-dimensional map.

[Figure panels: Point Cloud (from left), SURF Features, RANSAC Matches, Point Cloud (from right)]

[Photo: Quadrotor with Kinect]

Visualization Results Produced while Mapping the Second Floor of Moore Building (Note: depth was colorized using the provided legend)

Figure 3: High-Detail, Zoomed In
Figure 4: Low-Detail, Zoomed Out

Quadrotor Crash Prevention

[Figure: command path. Labels: RC Transmitter, RC Receiver, ArduPilot, Command Filter, Stabilization System, Motor Controllers, Motors.]

Command filter flow chart:

1. Do the ping sensors detect an obstacle too close in the commanded direction?
   - Yes: the quadrotor is commanded to hover and not move in the commanded direction.
   - No: continue to step 2.
2. Will the next command cause the quadrotor to move violently?
   - Yes: adjust the next command to make subsequent motion less violent.
   - No: the command is passed to the quadrotor.

Figure 2: Full HAWK system with ping sensors outlined in red

The ping sensors mounted on the quadrotor locate nearby obstacles by measuring the time that elapses between emitting a sound pulse and receiving its reflection. These sensors measure distances of 6 to 254 inches and are accurate to within 1 inch.
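The ping-sensor range measurement and the command filter from the flow chart can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the ArduPilot code used on HAWK: the speed of sound (about 343 m/s), the 24-inch clearance threshold, the per-update step limit, the single-axis command in the range -1 to 1, and all function and constant names are hypothetical choices made for the example.

```python
# Assumed speed of sound at room temperature: about 343 m/s, in inches per second.
SPEED_OF_SOUND_IN_PER_S = 13504.0
MIN_CLEARANCE_IN = 24.0   # hypothetical "too close" threshold, in inches
MAX_STEP = 0.2            # hypothetical limit on command change per update

def echo_time_to_inches(round_trip_s):
    """Convert a round-trip echo time to a one-way distance in inches."""
    # The pulse travels to the obstacle and back, so halve the total path.
    return SPEED_OF_SOUND_IN_PER_S * round_trip_s / 2.0

def filter_axis_command(cmd, prev_cmd, range_pos_in, range_neg_in):
    """Filter one axis of pilot input (positive = forward, negative = backward).

    range_pos_in and range_neg_in are the ping ranges, in inches, measured
    in the positive and negative directions of this axis.
    """
    # Step 1: obstacle too close in the commanded direction -> hover on this axis.
    blocking_range = range_pos_in if cmd > 0 else range_neg_in
    if cmd != 0.0 and blocking_range < MIN_CLEARANCE_IN:
        return 0.0
    # Step 2: a large jump in the command is softened to a bounded step.
    step = cmd - prev_cmd
    if abs(step) > MAX_STEP:
        return prev_cmd + (MAX_STEP if step > 0 else -MAX_STEP)
    # Otherwise the command is passed through unchanged.
    return cmd

# Example: an obstacle 10 inches ahead blocks forward motion but not backward motion.
blocked = filter_axis_command(0.5, 0.0, range_pos_in=10.0, range_neg_in=100.0)
softened = filter_axis_command(0.5, 0.0, range_pos_in=100.0, range_neg_in=100.0)
passed = filter_axis_command(0.1, 0.0, range_pos_in=100.0, range_neg_in=100.0)
```

A real filter would run once per control update for each of roll, pitch, yaw, and throttle, and the thresholds would need tuning against the actual vehicle.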
The ping sensors are chained to fire sequentially so that one sensor does not erroneously receive the reflection of sound emitted by another. A chaining diagram is shown in Figure 1, and five chained ping sensors as mounted on the quadrotor are shown in Figure 2.

Source: http://www.maxbotix.com/documents/LV_Chaining_Constantly_Looping_AN_Out.pdf

Figure 1: Ping sensors configured to fire sequentially

Flying a quadrotor takes a significant amount of experience to ensure that flight is both stable and safe. This is because the pilot must simultaneously adjust four different inputs (roll, pitch, yaw, and throttle) in real time. Inexperienced pilots often give disproportionately large inputs to the system while trying to maintain the desired orientation, causing large, dangerous motions. To help prevent crashes in such situations, we have implemented a filter, shown in the flow chart, that censors potentially harmful input commands.

The HAWK system consists of two main components:

1. A quadrotor helicopter equipped with a Microsoft Kinect and an Intel Atom computer for collecting data
2. A base station laptop computer for remotely processing and visualizing the data in real time

The authors would like to acknowledge the contributions of Kirk MacTavish, Sean Anderson, William Etter, Dr. Norm Badler, Karl Li, Dan Knowlton, and the other members of the SIG Lab.