Gradient descent methods in Deep Learning

Transcript of Gradient descent methods in Deep Learning

Gradient descent methods in Deep Learning

Tuyen Trung Truong

Matematikk Institut, Universitetet i Oslo

Workshop on Data Science

Halden, November 2019

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview

Part 1: Applications, limitations and criticism of DeepLearning

Part 2: Foundations on Deep Neural Network.

Historical overview

Theoretical background: Biological neural networksinspiration, Computer science advancement, Approximationtheory, Optimisation, Dynamical systems, Statistics

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview

Part 1: Applications, limitations and criticism of DeepLearning

Part 2: Foundations on Deep Neural Network.

Historical overview

Theoretical background: Biological neural networksinspiration, Computer science advancement, Approximationtheory, Optimisation, Dynamical systems, Statistics

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview (Cont.)

Part 3: Previous work on GD

Theoretical limitations: convergence either not the same asstrict mathematics notation or require di�cult to-check orto-apply conditions

Practical limitations: practice of fine-tuning of learning rates

Part 4: Recent work on Backtracking GD

A heuristic argument

Theoretical and Experimental comparison to previous work

Looking to the future: some ongoing work

Main reference: Tuyen Trung Truong and Tuan Hang Nguyen(AXON AI Research), Backtracking gradient descent methodsfor general C 1 functions, arXiv: 1808.05160v2, with sourcecodes on GitHub:https://github.com/hank-nguyen/MBT-optimizer.

For parts 1 and 2, use also contents from Anders Hansen’stalks at UiO.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview (Cont.)

Part 3: Previous work on GD

Theoretical limitations: convergence either not the same asstrict mathematics notation or require di�cult to-check orto-apply conditions

Practical limitations: practice of fine-tuning of learning rates

Part 4: Recent work on Backtracking GD

A heuristic argument

Theoretical and Experimental comparison to previous work

Looking to the future: some ongoing work

Main reference: Tuyen Trung Truong and Tuan Hang Nguyen(AXON AI Research), Backtracking gradient descent methodsfor general C 1 functions, arXiv: 1808.05160v2, with sourcecodes on GitHub:https://github.com/hank-nguyen/MBT-optimizer.

For parts 1 and 2, use also contents from Anders Hansen’stalks at UiO.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview (Cont.)

Part 3: Previous work on GD

Theoretical limitations: convergence either not the same asstrict mathematics notation or require di�cult to-check orto-apply conditions

Practical limitations: practice of fine-tuning of learning rates

Part 4: Recent work on Backtracking GD

A heuristic argument

Theoretical and Experimental comparison to previous work

Looking to the future: some ongoing work

Main reference: Tuyen Trung Truong and Tuan Hang Nguyen(AXON AI Research), Backtracking gradient descent methodsfor general C 1 functions, arXiv: 1808.05160v2, with sourcecodes on GitHub:https://github.com/hank-nguyen/MBT-optimizer.

For parts 1 and 2, use also contents from Anders Hansen’stalks at UiO.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Overview (Cont.)

Part 3: Previous work on GD

Theoretical limitations: convergence either not the same asstrict mathematics notation or require di�cult to-check orto-apply conditions

Practical limitations: practice of fine-tuning of learning rates

Part 4: Recent work on Backtracking GD

A heuristic argument

Theoretical and Experimental comparison to previous work

Looking to the future: some ongoing work

Main reference: Tuyen Trung Truong and Tuan Hang Nguyen(AXON AI Research), Backtracking gradient descent methodsfor general C 1 functions, arXiv: 1808.05160v2, with sourcecodes on GitHub:https://github.com/hank-nguyen/MBT-optimizer.

For parts 1 and 2, use also contents from Anders Hansen’stalks at UiO.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Part 1: 5. Recognition: Turing award 2018

Part 1: 6.1. Driverless cars accidents

There are reported 6 fatal accidents involving self driving cars(next slides).

Tuyen Trung Truong Gradient descent methods in Deep Learning

26/10/19, 10)08 pmList of self-driving car fatalities - Wikipedia

Page 1 of 3https://en.wikipedia.org/wiki/List_of_self-driving_car_fatalities#cite_note-10

List of self-driving car fatalitiesSince 2013, when self-driving cars first began appearing in large numbers on public roadways,[1] a primary goal of manufacturers has been to create anautonomous car system that is clearly and demonstrably safer than an average human-controlled car. Whether that will be possible in the real world withoutsacrificing even more human lives is a controversial topic, especially in light of accidents and fatalities resulting from system glitches.[2]

There are currently five levels of automated driving, of which two are considered autonomous (or self) driving (Level 4 and Level 5). Tesla Autopilot is a Level 2automated driving system.

One of the key metrics for comparing the safety levels for autonomously controlled car systems versus human controlled car systems is the number of fatalitiesper 100,000,000 miles (160,000,000 kilometres) driven. Cars driven under traditional human control are currently involved in approximately 1.18 fatalities forevery 100,000,000 mi (160,000,000 km) driven.[3] According to many automotive safety experts, much more data is yet required before any such clear anddemonstrably higher levels of safety can be convincingly provided.[3][4]

To demonstrate reliability in terms of fatalities and injuries, autonomous vehicles have to be driven hundreds of millions more miles in full autonomousmode.[5] As of February 2018, autonomous vehicles from Waymo (the Google Self-Driving Car Project) have covered 5 million miles (8 million kilometres) withthe presence of a human driver who monitors and overrides the autonomous mode to improve safety.[6] According to reports, human intervention forautonomous vehicles was needed every 13 to 5,600 miles (21 to 9,000 km) on average.[7]

On December 20, 2018, Uber returned self-driving cars to the roads in public testing in Pittsburgh, Pennsylvania, following the pedestrian fatality on March 18.Uber said that it received authorization from the Pennsylvania Department of Transportation. Uber said that it was also pursuing deploying cars on roads inSan Francisco, California and Toronto, Ontario.[8][9]

The NTSB is currently still investigating accidents caused by Tesla's Autopilot.[10]

A Level 3 autonomous driving system would occasionally expect a driver to take over control.

List of known autonomous car fatalities (occurring while autonomous-system acknowledged to have been engaged)

Date Incidentno. Country City State/county/province No. of

fatalitiesSystem

manufacturerVehicle

Type

Distancedriven by

the systemat time ofincident

Notes

18March2018

1United States

of America(USA)

Tempe Arizona 1 Uber 'RefittedVolvo' [11] — Pedestrian

fatality.[12]

Level 2 is considered automated driving, but not autonomous driving. A Level 2 driving system expects a driver to be fully aware at any time of the driving andtraffic situation and be able to take over any moment.[13] As of August 9th, 2019, there are four confirmed Level 2 fatalities, each of which involvedAutopilot.[14]

Level 3 fatalities

Level 2 fatalities

26/10/19, 10)08 pmList of self-driving car fatalities - Wikipedia

Page 2 of 3https://en.wikipedia.org/wiki/List_of_self-driving_car_fatalities#cite_note-10

List of known automated driving system car fatalities (occurring while automated driving-system acknowledged to have been engaged)

Date Incidentno. Country City State/county/province No. of

fatalitiesSystem

manufacturerVehicle

Type

Distancedrivenby thesystemat time

ofincident

Notes

20January

20161 China Handan Hebei 1 Tesla (Autopilot) Model S

[15] — Driverfatality.[16][17]

7 May2016 2

UnitedStates ofAmerica(USA)

Williston Florida 1 Tesla (Autopilot) ModelS[11] — Driver

fatality.[18][19]

23 March2018 3

UnitedStates ofAmerica(USA)

MountainView California 1 Tesla (Autopilot) Model

X[11] — Driverfatality.[20]

1 March2019 4

UnitedStates ofAmerica(USA)

DelrayBeach Florida 1 Tesla (Autopilot) Model 3 — Driver

fatality.[21]

19September

20195

UnitedStates ofAmerica(USA)

Osceola Florida 1 Tesla (Autopilot) Model 3 — Driverfatality. [22]

1. "Vislab, University of Parma, Italy - Public Road Urban Driverless-Car Test 2013 - World premiere of BRAiVE" (http://vislab.it/proud-en/).2. "Are Uber's autonomous vehicles safe?" (http://faculty.washington.edu/dwhm/2018/03/19/are-ubers-autonomous-vehicles-safe/).3. McArdle, Megan (March 20, 2018). "Opinion | How safe are driverless cars? Unfortunately, it's too soon to tell" (https://www.washingtonpost.com/opinions/

no-driverless-cars-arent-far-safer-than-human-drivers/2018/03/20/5dc77f42-2ba9-11e8-8ad6-fbc50284fce8_story.html). The Washington Post. Fred Ryan.ISSN 0190-8286 (https://www.worldcat.org/issn/0190-8286). OCLC 2269358 (https://www.worldcat.org/oclc/2269358). Retrieved April 3, 2018.

4. Noland, David (October 14, 2016). "How safe is Tesla Autopilot? A look at the statistics" (https://www.csmonitor.com/Business/In-Gear/2016/1014/How-safe-is-Tesla-Autopilot-A-look-at-the-statistics). The Christian Science Monitor. Christian Science Publishing Society. Green Car Reports. ISSN 0882-7729 (https://www.worldcat.org/issn/0882-7729). Retrieved March 3, 2018.

5. Kalra, Nidhi; Paddock, Susan M. (April 12, 2016). "Driving to Safety: How Many Miles of Driving Would It Take to Demonstrate Autonomous VehicleReliability?" (https://orfe.princeton.edu/~alaink/SmartDrivingCars/Papers/RAND_TestingAV_HowManyMiles.pdf) (PDF). Rand Corporation.doi:10.7249/RR1478 (https://doi.org/10.7249%2FRR1478). Retrieved April 1, 2018.

6. Waymo Team (February 28, 2018). "Waymo reaches 5 million self-driven miles" (https://medium.com/waymo/waymo-reaches-5-million-self-driven-miles-61fba590fafe). medium.com. Retrieved February 22, 2019.

7. Bowden, John (March 23, 2018). "Uber's self-driving cars in Arizona averaged only 13 miles without intervention prior to crash" (https://thehill.com/policy/technology/380038-ubers-self-driving-cars-in-arizona-averaged-only-13-miles-without). TheHill. Retrieved February 22, 2019.

8. "Uber Puts First Self-Driving Car Back on the Road Since Death" (https://www.ttnews.com/articles/uber-puts-first-self-driving-car-back-road-death).Transport Topics. December 22, 2018. Retrieved February 22, 2019.

9. Laris, Michael (December 20, 2018). "Nine months after deadly crash, Uber is testing self-driving cars again in Pittsburgh" (https://www.washingtonpost.com/transportation/2018/12/20/nine-months-after-deadly-crash-uber-is-testing-self-driving-cars-again-pittsburgh-starting-today/). The Washington Post.Retrieved May 10, 2019.

10. "Tesla autopilot was engaged for nearly 14 minutes before 2018 California crash, NTSB says" (https://www.cnbc.com/2019/09/03/tesla-autopilot-engaged-before-2018-california-crash-ntsb-says.html). CNBC. 2019-09-03. Retrieved 2019-09-06.

11. Joseph, Yonette (2018-04-29). "Briton Who Drove Tesla on Autopilot From Passenger Seat Is Barred From Road" (https://www.nytimes.com/2018/04/29/world/europe/uk-autopilot-driver-no-hands.html). The New York Times. ISSN 0362-4331 (https://www.worldcat.org/issn/0362-4331). Retrieved 2018-04-29.

12. Lubben, Alex (March 19, 2018). "Self-driving Uber killed a pedestrian as human safety driver watched" (https://news.vice.com/en_us/article/kzxq3y/self-driving-uber-killed-a-pedestrian-as-human-safety-driver-watched). Vice News. Vice Media. Retrieved April 3, 2018.

13. "Automated Vehicles for Safety Overview" (https://www.nhtsa.gov/technology-innovation/automated-vehicles-safety). NHTSA. June 18, 2019. RetrievedAugust 9, 2019.

14. "Tesla Fatalities Dataset" (https://toolbox.google.com/datasetsearch/search?query=Tesla%20Deaths&docid=pBQyihEwQEMIIcWXAAAAAA%3D%3D).Google Dataset Search. August 8, 2019. Retrieved August 9, 2019.

15. Boudette, Neal (2016-09-14). "Autopilot Cited in Death of Chinese Tesla Driver" (https://www.nytimes.com/2016/09/15/business/fatal-tesla-crash-in-china-involved-autopilot-government-tv-says.html). The New York Times. ISSN 0362-4331 (https://www.worldcat.org/issn/0362-4331). Retrieved 2018-06-07.

16. Horwitz, Josh; Timmons, Heather (September 20, 2016). "The scary similarities between Tesla's (TSLA) deadly autopilot crashes" (https://qz.com/783009/the-scary-similarities-between-teslas-tsla-deadly-autopilot-crashes/). Quartz. Atlantic Media. Retrieved April 3, 2018.

References

26/10/19, 10)08 pmList of self-driving car fatalities - Wikipedia

Page 3 of 3https://en.wikipedia.org/wiki/List_of_self-driving_car_fatalities#cite_note-10

Retrieved from "https://en.wikipedia.org/w/index.php?title=List_of_self-driving_car_fatalities&oldid=921322325"

This page was last edited on 15 October 2019, at 03:14 (UTC).

Text is available under the Creative Commons Attribution-ShareAlike License; additional terms may apply. By using this site, you agree to the Terms of Use andPrivacy Policy. Wikipedia® is a registered trademark of the Wikimedia Foundation, Inc., a non-profit organization.

17. Felton, Ryan (February 27, 2018). "Two Years On, A Father Is Still Fighting Tesla Over Autopilot And His Son's Fatal Crash" (https://jalopnik.com/two-years-on-a-father-is-still-fighting-tesla-over-aut-1823189786). Jalopnik. Gizmodo Media Group. Retrieved April 3, 2018.

18. Yadron, Danny; Tynan, Dan (June 30, 2016). "Tesla driver dies in first fatal crash while using autopilot mode" (https://www.theguardian.com/technology/2016/jun/30/tesla-autopilot-death-self-driving-car-elon-musk). The Guardian. San Francisco: Guardian Media Group. ISSN 0261-3077 (https://www.worldcat.org/issn/0261-3077). OCLC 60623878 (https://www.worldcat.org/oclc/60623878). Retrieved April 3, 2018.

19. Vlasic, Bill; Boudette, Neal E. (June 30, 2016). "Self-Driving Tesla Was Involved in Fatal Crash, U.S. Says" (https://www.nytimes.com/2016/07/01/business/self-driving-tesla-fatal-crash-investigation.html). The New York Times. Detroit: A.G. Sulzberger. ISSN 0362-4331 (https://www.worldcat.org/issn/0362-4331). OCLC 1645522 (https://www.worldcat.org/oclc/1645522). Retrieved April 3, 2018.

20. Green, Jason (March 30, 2018). "Tesla: Autopilot was on during deadly Mountain View crash" (https://www.mercurynews.com/2018/03/30/tesla-autopilot-was-on-during-deadly-mountain-view-crash/). The Mercury News. Palo Alto. ISSN 0747-2099 (https://www.worldcat.org/issn/0747-2099). OCLC 145122249 (https://www.worldcat.org/oclc/145122249). Retrieved April 3, 2018.

21. [1] (https://www.ntsb.gov/investigations/AccidentReports/Reports/HWY19FH008-preliminary.pdf)22. [2] (https://www.clickorlando.com/news/apopka-woman-dies-trying-to-pass-traffic-in-osceola-troopers-say)

Part 1: 6.2. Adversarial images: Synthetic

Part 1: 6.3. Adversarial images: Physical

27/10/19, 3*29 amAdversarial attacks on medical machine learning | Science

Page 1 of 3https://science.sciencemag.org/content/363/6433/1287

POLICY FORUM MACHINE LEARNING

Adversarial attacks on medical machinelearningSamuel G. Finlayson , John D. Bowers , Joichi Ito , Jonathan L. Zittrain , Andrew L. Beam , Is…+ See all authors and a8liations

Science 22 Mar 2019:Vol. 363, Issue 6433, pp. 1287-1289DOI: 10.1126/science.aaw4399

Article Figures & Data Info & Metrics eLetters PDF

SummaryWith public and academic attention increasingly focused on the new role of

machine learning in the health information economy, an unusual and no-

longer-esoteric category of vulnerabilities in machine-learning systems

could prove important. These vulnerabilities allow a small, carefully

designed change in how inputs are presented to a system to completely

alter its output, causing it to conVdently arrive at manifestly wrong

conclusions. These advanced techniques to subvert otherwise-reliable

machine-learning systems—so-called adversarial attacks—have, to date,

been of interest primarily to computer science researchers (1). However,

the landscape of often-competing interests within health care, and billions

of dollars at stake in systems' outputs, implies considerable problems. We

outline motivations that various players in the health care system may have

to use adversarial attacks and begin a discussion of what to do about

them. Far from discouraging continued innovation with medical machine

learning, we call for active engagement of medical, technical, legal, and

ethical experts in pursuit of e8cient, broadly available, and effective health

care that machine learning will enable.

http://www.sciencemag.org/about/science-licenses-journal-article-reuse

This is an article distributed under the terms of the Science JournalsDefault License.

View Full Text

ScienceVol 363, Issue 643322 March 2019

Table of Contents

Print Table of Contents

Advertising (PDF)

ClassiVed (PDF)

Masthead (PDF)

ARTICLE TOOLS

Email Download Powerpoint

Print Save to my folders

Request Permissions Alerts

Citation tools Share

RELATED CONTENT

RESEARCH RESOURCES

A machine learning approach for somaticmutation discovery

FOCUS

Big data and black-box medical algorithms

SHARE

1 2 3 2 4

Recommended articles fromTrendMD

Quantum generative adversarial learningin a superconducting quantum circuitLing Hu et al., Sci Adv, 2019

Hackers easily fool artificial intelligencesMatthew Hutson, Science, 2018

Public Health PreparednessMargaret A. Hamburg, Science, 2002

Refractory Outpatient HFrEFIleana L. Piña, myCME, 2019

Case 2—Focus on Irritable BowelSyndrome With Constipation (IBS-C)

Brian E. Lacy et. al., myCME, 2019

Final results of the SENECA (SEcondline NintEdanib in non-small cell lung

Advertisement

Advertisement

Part 1: 6.4. Adversarial images: Medical imaging

Part 1: 6.5. Too much random and heuristics

Too much random and heuristic: e.g. manual fine-tuning oflearning rates (see later)

Not understood yet what computers really learn: Why the pigis recognised as an airplane?

Tuyen Trung Truong Gradient descent methods in Deep Learning

Part 1: 6.5. Too much random and heuristics

Too much random and heuristic: e.g. manual fine-tuning oflearning rates (see later)

Not understood yet what computers really learn: Why the pigis recognised as an airplane?

Tuyen Trung Truong Gradient descent methods in Deep Learning

27/10/19, 3*50 amCan we trust scientific discoveries made using machine learning?

Page 1 of 3https://news.rice.edu/2019/02/18/can-we-trust-scientific-discoveries-made-using-machine-learning/

Tweet

Genevera Allen (Photo by Tommy LaVergne/RiceUniversity)

Can we trust scientific discoveries made using machinelearning?JADE BOYD – FEBRUARY 18, 2019POSTED IN: NEWS RELEASES

Share 3

Jade [email protected]

Can we trust scientific discoveries made using machine learning?

Rice U. expert: Key is creating ML systems that question their own predictions

WASHINGTON — (Feb. 15, 2019) — Rice University statistician Genevera Allen says scientists must keepquestioning the accuracy and reproducibility of scientific discoveries made by machine-learning techniquesuntil researchers develop new computational systems that can critique themselves.

Allen, associate professor of statistics, computer science and electrical and computer engineering at Rice and ofpediatrics-neurology at Baylor College of Medicine, will address the topic in both a press briefing and a generalsession today at the 2019 Annual Meeting of the American Association for the Advancement of Science (AAAS).

“The question is, ‘Can we really trust the discoveriesthat are currently being made using machine-learning techniques applied to large data sets?'”Allen said. “The answer in many situations isprobably, ‘Not without checking,’ but work isunderway on next-generation machine-learningsystems that will assess the uncertainty andreproducibility of their predictions.”

Machine learning (ML) is a branch of statistics andcomputer science concerned with buildingcomputational systems that learn from data ratherthan following explicit instructions. Allen said muchattention in the ML field has focused on developingpredictive models that allow ML to make predictionsabout future data based on its understanding of datait has studied.

“A lot of these techniques are designed to always make a prediction,” she said. “They never come back with ‘Idon’t know,’ or ‘I didn’t discover anything,’ because they aren’t made to.”

She said uncorroborated data-driven discoveries from recently published ML studies of cancer data are a goodexample.

Like 5

ii

Part 1: 7. Criticisms

27/10/19, 4*03 amLeCun vs Rahimi: Has Machine Learning Become Alchemy? | Synced

Page 1 of 9https://syncedreview.com/2017/12/12/lecun-vs-rahimi-has-machine-learning-become-alchemy/

The medieval art of alchemy was once believed capable of creating gold and even humanimmortality. The trial-and-error method was however gradually abandoned after pioneerslike Issac Newton introduced the science of physics and chemistry in the 1700s. But now,some machine learning researchers are wondering aloud whether today’s artificialintelligence research has become a new sort of alchemy.

The debate started with Google’s Ali Rahimi, winner of the Test-of-Time award at the recentConference on Neural Information Processing (NIPS). Rahimi put it bluntly in his NIPSpresentation: “Machine learning has become alchemy.”

BY SYNCED2017-12-12

AI CONFERENCE UNITED STATES

LeCun vs Rahimi: Has Machine Learning Become Alchemy?

COMMENTS 4

Synced

Part 1: 7. Criticisms (cont.)

Part 2: 6. (Random) Dynamical systems

Classical dynamical systems: asymptotic properties of iteratesof self-map : Z ! Z .

Example: if c0 2 Z is a random point, does sequence c0,c1 = (c0), c2 = (c1), . . ., cn+1 = (cn) converge?

If so, what good about the limit point?

Application to DNN: for Standard GD, look at (c) = c � �rh(c) : RN ! RN .

c = variables ck,j and cj , N = the number of ck,j and cj , h(c)cost function for the DNN, � learning rate.

Want the limit point to be (global) minimum.

Application to DNN: for Backtracking GD, look at randomiterates of many maps n(c) = c � �nrh(c) : RN ! RN , notjust one map. Hence, Random Dynamical Systems.

Entropy (notation in Information theory, Dynamical Systems):used in DNN.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Part 3: Previous work on Gradient Descent (GD)

GD = Gradient + Descent (Decreasing in value)

Theoretical justification

f : Rk ! R a C 1 function

Want to find minimum.

Don’t know which point is minimum. Hence, start fromrandom point x0.

Try to choose new point x1 so that f (x1) f (x0).

x1 should be close to x0. x1 = x0 � �v , � > 0 a small number,||v || = ||rf (x0)||.Approximately f (x1) ⇠ f (x0)� � < rf (x0), v >.

Best to have < rf (x0), v > as large as possible. Happen onlywhen v = rf (x0).

Hence, should choose x1 = x0 � �rf (x0), with � small

enough.

Important practical question: How to choose � small enough,e↵ectively and automatically? If � too big, may happenf (x1) > f (x0), and hence not descent!

Tuyen Trung Truong Gradient descent methods in Deep Learning

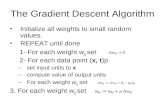

General scheme of GD methods

f : Rk ! R a C 1 function

Solve min f (x)

Choose random x0 2 Rk

Iterate xn = xn�1 � �n�1rf (xn�1)

�n > 0 appropriately chosen

Hope that xn converges to (global) minimum.

Example (Nesterov): f (x) = f (x1, x2) = 2x21+ x4

2� x2

2, and

starting point (1, 0). This function has 3 critical points, and ifxn converges to a critical point, then that point must be(0, 0), which is a saddle point.

Key point: The point (1, 0) is not random!

Tuyen Trung Truong Gradient descent methods in Deep Learning

Standard GD, Convergence

Standard GD: �n = � > 0 is constant

Armijo: first result about convergence in 1966. Veryinfluential paper.

Theorem (Convergence, Most general form of Armijo’smethod, e.g. Prop. 12.6.1 in K. Lange, Optimisation,Springer 2013):Need f 2 C 1,1

L , � < 1/(2L). Moreover, assumef has compact sublevels, and the set of critical points of f isbounded and has at most finitely many elements. ThenStandard GD converges to a critical point.

Remark: Similar results for gradient flows (continuous versionof GD), i.e. solutions to x 0(t) = �rf (x(t)).

Tuyen Trung Truong Gradient descent methods in Deep Learning

Standard GD, Avoidance of saddle points

Theorem (Avoidance of saddle points, Lee et al: Gradientdescent only converges to minimizers, JMRL vol 49 (2016);Panageas and Piliouras, Gradient descent only converges tominimizers ..., ITCS 2017): f 2 C 1,1

L \ C 2, � < 1/L. There isa set E of Lebesgue measure 0 in Rk so that if x0 2 Rk andStandard GD {xn} converges to a point x⇤

0, then x⇤

0is not a

generalised saddle point.

Main idea of proof: The map x 7! x � �rf (x) is adi↵eomorphism of Rk , hence can apply the central - stablemanifold theorem in Dynamical Systems.

Corollary: In most cases, if Standard GD converges (under theabove assumptions), then it converges to a minimum.

Not good (previous slide): the assumptions are di�cult tocheck for realistic cost functions.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Standard GD, Limitations

The assumptions are quite restrictive. E.g. f (t) = 1/(1+ e�t)- popular in DNN - does not have compact sublevels.

Cannot generalise over C 1,1L

Example (T.-Nguyen): f (x) = |x |1+� for 0 < � < 1. f hascompact sublevels, unique critical point at 0 (which is globalminimum), f 0(x) not globally Lipschitz continuous, but Holdercontinuous. If x0 6= 0, then Standard GD xn won’t everconverge to 0 ! Reason?

� must be small enough.

f (x) = |x | for |x | � ✏0 > 0, �0 > 2✏0, start Standard GD with✏0 < x0 < �0 � ✏0. Then the sequence {xn} is periodic{x0, x0 � �0, x0, x0 � �0, . . .}, both x0 and x0 � �0 are notcritical points of f .

Even if f in some C 1,1L , good lower estimates for L di�cult.

Assumption on finitely many critical points almost hopeless tocheck or satisfied practically.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Stochastic GD, limitations

Stochastic version of Standard GD.Allow replace rf (xn) with an approximation. But � isUsually associated to Robbins and Monro, Ann. Math.Statist., 22 (1951), pp 400–407.Overview in Section 4 of Bottou, Curtis and Nocedal, SIAMReview Vol 60, No 2, pp 223–311.Main Theorem 1: If f is C 1,1

L , strongly convex, plus someother assumptions, then Stochastic GD converges inprobability.Limitations: Besides requiring C 1,1

L , the strong convexityimplies f has only one critical point. Most DNN stronglynon-convex.Remark: Also allow varying �n so that

Pn �n = 1 &P

n �2n < 1. Limitation: may slow down convergence speed.

Main Theorem 2: If f is C 1,1L plus other assumptions, then

rf (xn) converges to 0 in probability.Limitations: Not proving convergence of xn!

Tuyen Trung Truong Gradient descent methods in Deep Learning

Why convergence of xn in GD important?

We don’t have infinite time or resources.

So need to stop GD after some finite time n0, when somecriteria satisfied. Remember that then the value xn0 is usedfor new predictions.

If xn converges, then no problem.

If xn not convergent, then problematic!

Another person may use a slightly di↵erent criterion, and thenstop at n1 6= n0. Since no convergence, xn1 can be very

di↵erent from xn0 . Hence, predictions using xn1 can be very

di↵erent from xn0 . Hence no-reproducibility.

Even if you rerun, you may end up at xn1 and hence get verydi↵erent results. One reason is computations have errors.Other reason is you use random shu✏e of mini-batches totrain. Again, no-reproducibility.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Some other GD variants

Reference: Ruder, arXiv: 1609.04747. See also Wikipedia.Momentum: ↵n+1 = ↵n � vn+1, vn+1 = �vn + �rf (↵n).Nesterov accelerated gradient (NAG): ↵n+1 = ↵n � vn+1,vn+1 = �vn + �rf (↵n � �vn�1).Momentum and NAG help to avoid bad minima.Adagrad: Like the general scheme for GD, but �n is now avector (�n,1, . . . , �n,k) and �n,i = �/Gn,i , and Gn,i depends in acomplicated manner on the values of the gradientsrf (x1), . . . ,rf (xn�1).Adadelta, RMSProp, Adam, AdaMax, Nadam: Like Adagrad,but now choose Gn,i di↵erently.Wolfe’s method: Require Backtracking GD plus moreassumptions. Wolfe’s original paper very complicated. SeePart 4.Limitations: Convergence for all methods requires C 1,1

L (notpreserved under small perturbations, such as computationalerrors or regularisation methods) plus other assumptions, inmost cases requiring (strong) convexity.Tuyen Trung Truong Gradient descent methods in Deep Learning

Current practice: 1. Mini-batches

Because of many reasons (space constraint, time constraint,better avoiding saddle points and local minima, better dealwith randomness of data, so on) ) Mini-batches are preferredin Deep Learning.Randomly shu✏e the training data set I , then partition it intosmall subsets Ih. Get instead partial sumsFIh =

Px2Ih N � . . . � 0(x , y(x),↵).

When applying Standard GD, at time t we use the functionFht in computations.Intuition: FIh/Nm should be close to F/N. More technically,FIh/Nm are random and have the same (unknown)distribution. Here N = the size of training set I , Nm = thesize of a mini-batch. Usually want Nm/N small, but Nm is nottoo small.When finishing computing with all N/Nm mini-batches in onsuch partition ) finish an epoch. Usually need to runhundreds of epochs before DNN can learn optimally.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Current practice: 2. Tuning/scaling learning rates

Fine-tuning learning rates: Choose by hand randomly a bunchof values for learning rate, do experiments with them, pick theone that works best among these. Reason?

Take a lot of time and experience, by hand, too personal, toorandom, not rigorously justified, not reproducible.

Warming up and step decay of learning rates: Start from asmall value for learning rate, then increase it by hand until seethat it cannot do better, then decrease it by hand again.

Same issues as before. Compare with Adagrad, Adadelta,RMSProp and so on.

These points can be solved automatically by Backtracking GD.

Scaling of learning rates: If we do not scale learning ratesappropriately, errors may accumulate and explode. E.g. Goyalet al, Accurate, large mini-batch SGD: training ImageNet in 1hour, arXiv: 1706.02677, proposes to divide learning rate byN/Nm. Can be done better (for later).

Tuyen Trung Truong Gradient descent methods in Deep Learning

Current practice: 3. Hyper-parameters

Parameters in the models, other than ↵.

In Standard GD, hyper-parameter is learning rate �.

In Momentum: hyper-parameters are � and �.

Which hyper-parameters are best? ) Big question inOptimisation.

This is again an optimisation problem, but more di�cult.

Current practice is random search. Backtracking GD helps toautomatically find good learning rate.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Part 4: Backtracking GD

Armijo’s condition (Backtracking GD)

Fix 0 < �,� < 1 and � > 0.

In general scheme, choose �n so thatf (xn � �nrf (xn))� f (xn) ���n||rf (xn)||2.�n > 0 is largest in {�, ��, ��2, . . .}Why does such �n exist?

Answer: Taylor’s expansion!

Remark: If � is small (0.01 or 0.05) ) keep �n as big aspossible ) Good (increase training and convergence speed,help avoid bad local minima).

If � = 0 not good (no convergence result known).

Tuyen Trung Truong Gradient descent methods in Deep Learning

Side remark: Wolfe’s condition

(Wolfe, Convergence conditions for ascent methods, SIAMReview 1969) Armijo’s condition + some other conditions.

Including so-called ”su�ciently continuous” which requiresrf to be uniformly continuous at xn. Hard to check, exceptknown already that {xn} is bounded.

Implemented in DNN in Mahsereci and Hennig, Probabilisticline searches for stochastic optimization, JMLR vol 18, 2017.

Compared to Backtracking GD: Need more computations,while convergence not known in general.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Analytic cost functions

Theorem (Absil et al, Convergence of the iterates of thedescent methods for analytic cost functions, SIAM Journal ofOptimization, vol 16, 2005) If f is real analytic (that is, anabsolute convergent power series), then Backtracking GDeither diverges to 1 or converges to a point x⇤.

Remarks:

If the sequence {xn} converges to x⇤, then applying otherresults (such as Armijo’s or Wolfe’s) ) x⇤ is a critical point.

The assumption that f is real analytic is too restrictive. Notpreserved under small perturbations.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Main Theorem

Theorem (T. - Nguyen, arXiv: 1808.05160) f : Rk ! R is inC 1. No additional assumption. {xn} the sequence inBacktracking GD.

1) If a subsequence {xnk} converges to some x⇤ 2 Rk , thenrf (x⇤) = 0.

2) Either limn!1 ||xn|| = 1 or limn!1 ||xn+1 � xn|| = 0.

3) Assume that f has at most countably many critical points.Then either limn!1 ||xn|| = 1 or {xn} converges to a criticalpoint of f .

4) Let x⇤ be a generalised saddle point of f . There exists for✏ > 0 an open set U(✏) ⇢ B(x⇤, ✏) = {x : ||x � x⇤|| < ✏} sothat: i) lim✏!0 Vol(U(✏))/VolB(x⇤, ✏) = 1, and ii) if x0 2 U(✏)then {xn} does not contain any subsequence converging to x⇤.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Main Theorem: comments

Inexact version also true, where updating rule isxn+1 = xn � �nvn, vn close to rf (xn).Morse function: A C 2 function f all of whose critical pointsx⇤ are non-degenerate, that is r2f (x⇤) is invertible.All critical points of a Morse function are isolated ) Morsefunctions have at most countably many critical points.Morse functions are dense: f (x)+ < a, x > is Morse for mosta 2 Rk . E.g. x3 + ax is Morse for a 6= 0. Hence, Morsefunctions are preserved under small perturbations.Part 4 means that even if f has uncountably many criticalpoints, it is very rare that Backtracking GD xn has asubsequence converges to a generalised saddle point of f .Application: functions such asf (x , y) = x3 cos(1/x) + 2y3 sin(1/y). Neither C 1,1

L nor realanalytic.Backtracking versions of Momentum and NAG also defined,and Main Theorem applies.

Tuyen Trung Truong Gradient descent methods in Deep Learning

A heuristic argument

f is C 1. Assume that at every critical point, f is localLipschitz continuous.

Example: f is C 2.

Assume also that f has at most countably many criticalpoints.

Example: f is a Morse function.

Assume that {xn} in Backtracking GD does not diverge toinfinity.

Example: f has compact sublevel.

By Main Theorem ) {xn} converges to a critical point x⇤.

Hence for n big enough, �n belongs to a fixed finite set.Reason?

Hence Backtracking GD becomes a finite union of StandardGD. Can apply Random Dynamical Systems to study.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Two-way Backtracking GD

In the long term, �n belongs to a fixed finite set.

Hence, at iteration n + 1, instead of looking for �n from � anddecrease, we can start from �n�1 to save time.

The Two-way Backtracking GD method is: If � = �n�1 doesnot satisfy Armijo’s condition ) decreases � as in the usualalgorithm.

If, however, � = �n�1 satisfies Armijo’s condition, theninstead of choosing �n = �n�1, we increase � while Armijo’scondition is still satisfied (like warming up), and � is stillsmaller than an upper limit (say 100).

Advantages: Still satisfy the intuition that �n should be closeto �n�1. On the other hand, still keeps �n as large as possible.Convergence is proven as in Main Theorem. As well as savetime and computations.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Experimental setups

Data sets: CIFAR10 & CIFAR 100.

CIFAR 10: 60 000 of 32x32 colour images; 10 classes(airplane, automobile, bird, cat,...), 6000 images per class; 50000 training images, 10 000 test images. Images havestructure (32,32,3) corresponding to (width, height, RGB).

CIFAR 100: Similar, with 100 classes of images.

DNN: Resnet18, PreActResnet18, MobileNetV2, SENet,DenseNet121

Tuyen Trung Truong Gradient descent methods in Deep Learning

32 TUYEN TRUNG TRUONG AND TUAN HANG NGUYEN

(a) Mexican Hat (b) Resnet18

Figure 2. Learning rate attenuation using Two-way Backtracking GD on

(a) Mexican hat function (b) Resnet18 on a dataset contains 500 samples

of CIFAR10 (full batch)

4.2. Experiment 1: Behaviour of learning rates for Full Batch. In this experiment

we check the heuristic argument in Subsection 2.4 for a single cost function. We do

experiments with Two-way Backtracking GD for two cost functions: one is the Mexican

hat in Example 2.12, and the other is the cost function coming from applying Resnet18

on a random set of 500 samples of CIFAR10. See Figure 2. It appears that for the

Mexican hat example, Two-way Backtracking GD stablises to Standard GD in only 6

iterates, while for CIFAR10 stabilising appears after 40 iterates.

BACKTRACKING GRADIENT DESCENT METHOD FOR GENERAL C1 FUNCTIONS 33

(a) CIFAR10 (b) CIFAR100

Figure 3. Learning rate attenuation using Two-way Backtracking GD in

the mini-batch setting on Resnet18 on (a) CIFAR10 (b) CIFAR100

4.3. Experiment 2: Behaviour of learning rates for Mini-Batches. In this exper-

iment we check the heuristic argument in Subsection 2.4 in the mini-batch setting. We

do experiments with MBT-MMT and MBT-NAG for the model Resnet18 on CIFAR10

and CIFAR100. See Figure 3. Obtained learning rates behave similarly to the common

scheme of learning rate warming up and decay in Deep Learning.

34 TUYEN TRUNG TRUONG AND TUAN HANG NGUYEN

LR 100 10 1 10�1 10�2 10�3 10�4 10�5 10�6

12 0.0035 0.0037 0.0037 0.0040 0.0046 0.0040 0.0038 0.0036 0.0038

25 0.0050 0.0051 0.0051 0.0058 0.0049 0.0048 0.0052 0.0057 0.0044

50 0.0067 0.0067 0.0065 0.0060 0.0067 0.0061 0.0066 0.0070 0.0075

100 0.0111 0.0101 0.0093 0.0104 0.0095 0.0098 0.0098 0.0099 0.0085

200 0.0140 0.0143 0.0137 0.0147 0.0125 0.0130 0.0135 0.0122 0.0126

400 0.0159 0.0155 0.0167 0.0153 0.0143 0.0174 0.0164 0.0166 0.0154

800 0.0153 0.0161 0.0181 0.0188 0.0170 0.0190 0.0205 0.0154 0.0167

Table 1. Stability of Averagely-optimal learning rate obtained from

MBT-GD across 9 di↵erent starting learning rates (LR) ranging from 10�6

to 100 and 7 di↵erent batch sizes from 12 to 800. Applied using Resnet18

on CIFAR10. (↵ = 10�4, � = 0.5)

4.4. Experiment 3: Stability of learning rate finding using backtracking line

search. In this experiment, we apply MBT-GD to the network Resnet18 on the dataset

CIFAR10, across 9 di↵erent starting learning rates (from 10�6 to 100) and 7 di↵erent

batch sizes (from 12 to 800), see Table 1. With any batch size in the range, using the

rough grid � = 0.5 and only 20 random mini-batches, despite the huge di↵erences between

starting learning rates, the obtained averagely-optimal learning rates stabilise into very

close values. This demonstrates that our method works robustly to find a good learning

rate representing the whole training data with any applied batch size.

BACKTRACKING GRADIENT DESCENT METHOD FOR GENERAL C1 FUNCTIONS 35

4.5. Experiment 4: Comparison of Optimisers. In this experiment we compare the

performance of our methods (MBT-GD, MBT-MMT and MBT-NAG) with state-of-the-

art methods. See Table 2. We note that MBT-MMT and MBT-NAG usually work much

better than MBT-GD, the explanation may be that MMT and NAG escape bad local

minima better. Since the performance of both MBT-MMT and MBT-NAG are at least

1.4% above the best performance of state-of-the-art methods (in this case achieved by

Adam and Adamax), it can be said that our methods are better than state-of-the-art

methods.

LR 100 10 1 10�1 10�2 10�3 10�4 10�5 10�6

SGD 10.00 89.47 91.14 92.07 89.83 84.70 54.41 28.35 10.00

MMT 10.00 10.00 10.00 92.28 91.43 90.21 85.00 54.12 28.12

NAG 10.00 10.00 10.00 92.41 91.74 89.86 85.03 54.37 28.04

Adagrad 10.01 81.48 90.61 88.68 91.66 86.72 54.66 28.64 10.00

Adadelta 91.07 92.05 92.36 91.83 87.59 73.05 46.46 22.39 10.00

RMSprop 10.19 10.00 10.22 89.95 91.12 91.81 91.47 85.19 65.87

Adam 10.00 10.00 10.00 90.69 90.62 92.29 91.33 85.14 66.26

Adamax 10.01 10.01 91.27 91.81 92.26 91.99 89.23 79.65 55.48

MBT-GD 91.64

MBT-MMT 93.70

MBT-NAG 93.85

Table 2. Best validation accuracy after 200 training epochs (batch size

200) of di↵erent optimisers using di↵erent starting learning rates (MBT

methods which are stable with starting learning rate only use starting

learning rate 10�2 as default). Italic: Best accuracy of the optimiser in

each row. Bold: Best accuracy of all optimisers for all starting learning

rates.

36 TUYEN TRUNG TRUONG AND TUAN HANG NGUYEN

Dataset CIFAR10 CIFAR100

Optimiser MBT-MMT MBT-NAG MBT-MMT MBT-NAG

Resnet18 93.70 93.85 68.82 70.78

PreActResnet18 93.51 93.51 71.98 71.53

MobileNetV2 93.68 91.78 69.89 70.33

SENet 93.15 93.64 69.62 70.61

DenseNet121 94.67 94.54 73.29 74.51

Table 3. (Models and Datasets) Accuracy of state-of-the-art models after

200 training epochs. Bold: Best accuracy on each dataset.

4.6. Experiment 5: Performance on di↵erent datasets and models and opti-

misers. In this experiment, we compare the performance of our new methods on di↵erent

datasets and models. We see from Table 3 that our automatic methods work robustly

with high accuracies across many di↵erent architectures, from light weight models such

as MobileNetV2 to complicated architecture as DenseNet121.

Part 4: Conclusions and Some open questions

Conclusions

Deep Learning has obtained spectacular achievements.

Its limitations are still very huge though.

Approximation theory leads to DNN.

For practical applications must use Numerical Optimisation.

Hence, convergence of the numerical optimisation processmust be established. Otherwise, lead to non-reproducibilityresults, or as Ali Rahimi of Google put it ”Machine Learninghas become Alchemy”.

Many previous work: convergence of GD for C 1,1L cost

functions plus additional assumptions (such as strongconvexity or compact sublevels and finiteness of criticalpoints).

Backtracking GD behaves better.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Conclusions: cont. 1

Absil et al. proved convergence of Backtracking GD for allreal analytic functions. However, real analyticity is veryrestrictive, not preserved under small perturbations.My joint work proved convergence of Backtracking GD for allC 1 functions with at most countably many critical points.This includes Morse functions, which are preserved undersmall perturbations.We proposed Two-way Backtracking GD, and BacktrackingMomentum and Backtracking NAG.We proposed a heuristic argument showing that BacktrackingGD becomes a finite union of Standard GD processes.We also proposed a new rescaling scheme for learning rates,to deal with di↵erences in mini-batch sizes.Experiments vindicate the heuristic argument, also show ournew algorithms are better than state-of-the-art algorithms.The best part of our algorithms is that they are automatic, wedo not need to manually fine-tune.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Conclusions: cont. 2

Concerning the speed: Two-way Backtracking GD does nottake more time than if you work with Standard GD with manydi↵erent learning rates.

In current practices, people need to manually fine-tunelearning rates, hence need a lot of time.

Concerning reproducibility: Yes, our experimental results arereproducible.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Open questions

Prove convergence for Backtracking in general (for allfunctions, and for infinite dimensions): applicable to PDE,and allow more flexible to models in DNN.

(Relevant) No-free-lunch theorem (Wolpert and Macready)

Prove the version of avoidance of saddle points (Lee et al) inthe Backtracking setting.

There is a gap between results proven in the deterministiccase (a single cost function) and the statistic case (StochasticGD). Try to fill it!

Explain rules of thumb in DNN.

Try to resolve the adversarial images problem.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Some work in progress

Backtracking GD can be applied to all currentalgorithms/projects using Standard GD.

Some specific project I am working/discussion on:

Apply to medical image, in particular to skin disease detectionusing AI (with Torus Actions SAS, Deep Learning startup,second prize of the ISIC 2019 competition organised by theInternational Skin Imaging Collaboration).

Apply to 3D reconstruction from 2D images (with Tuan HangNguyen, AXON AI Research).

Happy to collaborate with researchers and/or supervisestudents, in both more theoretical or more applied projects.

Tuyen Trung Truong Gradient descent methods in Deep Learning

Thank you very much for your attention!

![Stochastic Gradient Descent Tricks - bottou.org2.1 Gradient descent It has often been proposed (e.g., [18]) to minimize the empirical risk E n(f w) using gradient descent (GD). Each](https://static.fdocuments.in/doc/165x107/60bec0701f04811115495619/stochastic-gradient-descent-tricks-21-gradient-descent-it-has-often-been-proposed.jpg)