Reconstruction of 3D objects from Images to Render and Print to Scale

Erin Duber Lembke¹ and Jeremy Matthew Bernard Gordet²

¹Havelock High School, Havelock, NC

²Latta High School, Latta, SC

Research Experience for Teachers at Appalachian State University, July 2015

Abstract:

This paper presents a pipeline for 3D reconstruction via photogrammetry. VisualSFM, MeshLab, and Blender were used to match feature points across photographs, build a mesh from the resulting point cloud, and edit the 3D models to prepare them for printing. Pictures were obtained with an iPhone 6 camera and a Nikon Coolpix camera. Nine different objects were photographed and processed as part of this research, and the kind of object best suited to the parameters of our pipeline was determined. This paper discusses the processes and strategies used to recreate a true-to-size, realistic, printable object.

1.0 Introduction:

Three-dimensional (3D) printing has revolutionized the way in which virtual modeling and design apply to the real world. 3D printing, originally called “stereolithography,” was invented by Charles Hull in the early 1980s [1]. Hull used the stereolithography file format (.stl) coupled with a CAD program to prepare objects for printing [1]. Hull founded the company 3D Systems, which later produced the first commercially available 3D printer [1]. Hull’s work, together with advances in printer and software technologies, paved the way for the use of 3D printing in manufacturing, medicine, architecture, and engineering [1].

3D printing is rapidly becoming one of the most user-friendly and inexpensive technologies for consumers. The cost of a small 3D printer ranges from $300 for basic models to upwards of $3000 for more advanced models [1]. In addition to the printer itself, there is a plethora of free or low-cost technologies available for creating 3D objects on the computer. These technologies, such as Computer Aided Design (CAD), allow engineers, artists, architects, and enthusiasts alike to build 3D models for prototyping, manufacturing, and virtual showcases [1]. In addition to using basic geometry to build objects, CAD programs like AutoCAD and 3ds Max allow a designer to input a series of measurements in order to manually reconstruct a real-world object [2]. The reconstructed object often lacks texture, fine detail, and realism [2]. Therefore, although this may be a cost-effective way to acquire data for reconstruction, the limitations of manual reconstruction can be undesirable depending on the user's intended use for the model.

In order to reproduce a more defined, real-world object, researchers are moving toward photogrammetry [2]. In photogrammetry a “camera is used to catch an object or scene” which can then be run through processing software in order to extract the 3D character [2]. A multitude of programs allow even a novice designer to take a series of photos from which the program reconstructs a model. The user then has the option to download and print the model, or the company will print and ship the model to the client. Although programs such as 123D Catch and 3ds Max recreate a model with realistic texture and fine detail, they are limited in the size of the printed model. Most models are only printed to scale rather than at actual size. This is generally due to limitations of the software, cost for the consumer, and limitations of personal 3D printers. The purpose of this research was to recreate a real-world object from a series of photos, retaining all aspects of the object including actual size, by means of a pipeline of reconstruction software consisting of VisualSFM, MeshLab, and Blender.

2.0 Background:

Visual Structure from Motion System (VisualSFM) is a GUI-based software package for 3D reconstruction via Structure from Motion (SfM) created by Changchang Wu [3-6]. The software relies on the same principle that underlies a human's ability to perceive depth. The system integrates several of Wu’s previous projects in order to reconstruct an object via photo (.jpg) analysis [3-6]. The user creates a directory containing images of the object from multiple angles and imports the folder into the program. VisualSFM utilizes SIFT on GPU, Multicore Bundle Adjustment, and Towards Linear-time Incremental SfM [3-6]. VisualSFM uses multicore parallelism for feature detection, feature matching, and bundle adjustment in order to reconstruct a point cloud of the object [3-6]. The user is able to manipulate the point cloud and visualize where around the object each image was taken (Figure 3). A dense reconstruction of the point cloud is then created using Yasutaka Furukawa’s PMVS/CMVS tool chain, which creates a more realistic model of the object [3-6]. The model can be edited to remove unwanted points, such as background points, also known as “noise.”

The SfM output of VisualSFM works with several additional tools, including CMP-MVS by Michal Jancosek, MVE by Michael Goesele's research group, SURE by Mathias Rothermel and Konrad Wenzel, and MeshRecon by Zhuoliang Kang [3-6]. The dense point cloud is saved in the same directory as the images and SIFT files, in the Polygon File Format (.ply) [3-6]. VisualSFM creates a folder within the original directory that contains all the information for the point cloud and dense reconstruction in a list format.
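As a quick sanity check on a dense reconstruction, the number of points can be read from the header of the exported .ply file. The following is a minimal Python sketch and was not part of the original pipeline; the function name `ply_vertex_count` and the example header are illustrative assumptions. It relies only on the fact that a PLY file begins with a plain-text header, even when the point data that follows is binary.

```python
def ply_vertex_count(header_lines):
    """Return the vertex count declared in a PLY header.

    header_lines: iterable of text lines, starting at the top of the file.
    Raises ValueError if the data does not look like a PLY header.
    """
    lines = iter(header_lines)
    first = next(lines, "").strip()
    if first != "ply":
        raise ValueError("not a PLY file: missing 'ply' magic line")
    for line in lines:
        parts = line.split()
        if parts[:2] == ["element", "vertex"]:
            return int(parts[2])
        if parts[:1] == ["end_header"]:
            break
    raise ValueError("no 'element vertex' line found in header")


# Hypothetical header, shaped like one a dense point cloud might carry.
example_header = [
    "ply",
    "format binary_little_endian 1.0",
    "element vertex 184213",
    "property float x",
    "property float y",
    "property float z",
    "end_header",
]
print(ply_vertex_count(example_header))
```

For a file on disk, the header lines could be obtained by opening the file in binary mode and decoding each line as ASCII until `end_header` is reached.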

The directory containing all project files is then imported into MeshLab. “MeshLab is an advanced mesh processing system, for the automatic and user assisted editing, cleaning, filtering, converting and rendering of large unstructured 3D triangular meshes” [7]. MeshLab was developed by a group at the Visual Computing Lab at the ISTI‐CNR institute [7]. MeshLab was created for ease of use by students and enthusiasts alike. The program was specifically tailored to edit and render the noisy meshes output by multi-view stereo reconstruction tools such as VisualSFM [7]. The file is exported as a .ply for processing in Blender.

Ton Roosendaal, co-founder of NeoGeo, wrote the program Blender in 1995. Blender was created to give consumers outside of the NeoGeo company access to a 3D building and editing tool [8]. Roosendaal founded the non-profit Blender Foundation in March 2002 to promote and evolve Blender as an open source project [8]. With the help of its many volunteers, Blender works to be as innovative as possible, releasing updated editions to improve ease of use for consumers [8]. Blender 2.74 was used for the final removal of noise and false points and to export the .stl file required for printing with a MakerGear 2 3D printer.

3.0 Methodology:

360-degree images of all objects were captured. Several strategies, such as camera angle and rotation speed, were varied by case to make the images more effective.

Figure 1: Processing Pipeline

Case 1: Eraser

A standard black whiteboard eraser (Figure 2) was placed on an 80 cm tall stool equipped with a swivel top. The eraser was rotated by hand, and 40 photos were taken with an iPhone 6 camera. The photos were loaded into VisualSFM and a point match was processed. The resulting points were not usable; therefore the point cloud was not exported to MeshLab.

Figure 2: Standard Whiteboard Eraser

Case 2: Vitamin Water bottle

A 12 oz bottle of “Revive” Vitamin Water (Figure 3) was placed on the classroom stool. The stool was rotated as 90 photos were taken with an iPhone 6 camera. The photos were loaded into VisualSFM, where a point match was processed. A dense point cloud was generated from the point matches, and excess and exterior noise points were deleted. The dense point cloud was imported into MeshLab, and a 3D mesh was created via Poisson Surface Reconstruction. The parameters of the surface reconstruction were set to an octree depth of 10, a solver divide of 6, and 4 samples per node. The resultant mesh was incomplete; therefore the trial was terminated.
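To give a sense of what the octree depth parameter controls: in Poisson surface reconstruction, a depth of d corresponds to solving on a voxel grid no finer than 2^d cells along each axis. The short Python sketch below (the function name is illustrative) compares the depth of 10 used for the bottle with the depth of 12 used later for the minion in Case 9.

```python
def max_grid_resolution(octree_depth):
    """Upper bound on voxels per axis for a given Poisson octree depth."""
    if octree_depth < 0:
        raise ValueError("octree depth must be non-negative")
    return 2 ** octree_depth


for depth in (10, 12):
    side = max_grid_resolution(depth)
    print(f"depth {depth}: at most {side} x {side} x {side} voxels")
```

Each additional level of depth doubles the finest resolution along every axis, so higher depths can capture more detail at the cost of longer processing and greater sensitivity to noisy points.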

Figure 3: Vitamin Water Bottle

Figure 4: Point Match

Case 3: Camelbak® water bottle

A 24 oz Camelbak® water bottle was placed on the classroom stool. The stool was spun by hand and 120 total photos were taken. The camera was held parallel to the bottle for the first 60 photos and at an approximate 45 degree angle to the top of the bottle for the last 60 photos. These photos were processed in VisualSFM, and a point match and a dense reconstruction were generated. The dense cloud was imported into MeshLab, where a triangulated mesh was created. The mesh contained large holes and was deemed unsatisfactory; therefore Case 3 was eliminated.

Case 4: Raspberry Pi box

A video of a Raspberry Pi box (Figure 5) was taken with a Nikon Coolpix camera. The box was placed on the classroom stool and spun by hand. The video was converted into still frames at 25 frames per second with ffmpeg from the command line (ffmpeg.exe -i "file name" image%04d.jpg). The extracted frames were processed in VisualSFM. The resulting point match was random and was deemed unsatisfactory; therefore Case 4 was eliminated.
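The frame extraction step can also be scripted. The Python sketch below only builds the argument list for ffmpeg and prints it; it assumes ffmpeg is installed and available on the PATH, and the input file name is hypothetical. The `-vf fps=25` filter is an addition to the command quoted above that makes the rate of 25 frames per second explicit.

```python
import subprocess


def build_ffmpeg_command(video_path, frame_pattern="image%04d.jpg", fps=25):
    """Build an ffmpeg argument list that writes numbered JPEG frames."""
    return [
        "ffmpeg",
        "-i", video_path,       # input video
        "-vf", f"fps={fps}",    # resample to a fixed number of frames per second
        frame_pattern,          # image0001.jpg, image0002.jpg, ...
    ]


def extract_frames(video_path, frame_pattern="image%04d.jpg", fps=25):
    """Run ffmpeg; raises CalledProcessError if ffmpeg reports a failure."""
    subprocess.run(build_ffmpeg_command(video_path, frame_pattern, fps), check=True)


# Hypothetical input file name; printing the command avoids running ffmpeg here.
print(" ".join(build_ffmpeg_command("box_video.mp4")))
```

Lowering the `fps` argument would produce fewer, more widely spaced frames, which may be preferable when consecutive video frames are nearly identical.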

Figure 5: Raspberry Pi box

Case 5: The Improved Raspberry Pi box

The same Raspberry Pi box was taped and painted (Figure 6). The box was placed on an automatic turntable that rotated at 30 RPM. 80 photos were taken with an iPhone 6 using its burst function. The photos were processed through VisualSFM and MeshLab. The mesh created was again inaccurate; therefore the trial was terminated.

The same case was then rotated at 15 RPM and photographed 45 times with a Nikon Coolpix P100 camera in Aperture-Priority mode. These photos were used to create a mesh that was again unsatisfactory; therefore no further steps were taken.
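The effect of turntable speed on the spacing between photos can be estimated with simple arithmetic. The Python sketch below assumes a constant capture rate; the rate of 10 photos per second is a placeholder, since the capture rates of the cameras were not reported.

```python
def degrees_per_photo(rpm, photos_per_second):
    """Angle the turntable sweeps between consecutive photos."""
    if photos_per_second <= 0:
        raise ValueError("photos_per_second must be positive")
    degrees_per_second = rpm * 360.0 / 60.0
    return degrees_per_second / photos_per_second


ASSUMED_RATE = 10.0  # photos per second; an assumption, not a measured value

for rpm in (30, 15):
    step = degrees_per_photo(rpm, ASSUMED_RATE)
    print(f"{rpm} RPM: {step:.1f} degrees between photos")
```

At a fixed capture rate, halving the turntable speed halves the angle between views, which increases the overlap between consecutive photos.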

Figure 6: Raspberry Pi case, taped and painted

Case 6: Tiki model

A 10 cm tall tiki model (Figure 7) was photographed while placed on a stool and spun. The photos were processed, and the dense reconstruction showed large holes and excessive noise. The tiki was then taped on the left leg and around the seat and drum. The model was placed on the turntable in front of a window so that a large amount of natural light fell on it. The turntable was spun at 30 RPM, and 120 photos of the model were captured: 60 with an iPhone 6 and 60 with a Nikon Coolpix camera. These photos were processed through VisualSFM and MeshLab, and a mesh was created (Figure 8). The mesh was scaled in MeshLab, exported, and imported into Blender, which was used to convert the file into a .stl file. The file was loaded into Simplify3D and the scale was visually checked. It was found that the model was not printable, and Case 6 was eliminated.

Figure 7: Tiki Model with tape

Figure 8: Unprintable Mesh

Case 7: NIKE Women’s Size 8 Running Shoe

Trial: 1

A NIKE women’s size 8 running shoe was placed on a turntable rotating at 30 RPM. 80 photos were captured with the burst function of an iPhone 6 camera. The photos were matched in VisualSFM and a rough point reconstruction was created. The dense point cloud rendered was incomplete; therefore this trial was terminated.

Trial: 2

The shoe was taped on the medial side with blue painter's tape. Marks were made on the shoe in order to depict graphics (Figure 9). The shoe was placed on the turntable and 70 photos were captured with the iPhone 6’s burst function. The photos were processed via VisualSFM and MeshLab and a mesh was rendered. The model was scaled to a 1:2 ratio. A bisect tool was used to remove the excess base. This model (Figure 10) was exported as a .stl file and printed.

Figure 9: Taped shoe

Figure 10: Printable mesh in Blender

Case 8: Enhanced model for Raspberry Pi box

An updated model of the Raspberry Pi box (Figure 11) was painted with dark colors on the bottom and lighter colors on top. The case was placed on the turntable bottom down, back down, and front side down, and 80 photos were taken from each position. These photos were processed in VisualSFM, where the background noise was deleted from the point cloud. The dense reconstruction point cloud was imported into MeshLab and was scaled. The model was edited with the smooth brush tool in Blender. The model was saved as a .stl file and printed.
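The background points in this study were deleted by hand in the VisualSFM interface. As an illustration only, a similar clean-up can be approximated in code by keeping just the points that fall inside an axis-aligned box around the object. In the Python sketch below, the function name, the box limits, and the sample points are all hypothetical.

```python
def crop_to_box(points, lower, upper):
    """Keep only the (x, y, z) points inside the box [lower, upper]."""
    return [
        p for p in points
        if all(lo <= c <= hi for c, lo, hi in zip(p, lower, upper))
    ]


# Hypothetical point cloud: three points on the object, two background points.
cloud = [
    (0.1, 0.2, 0.0),
    (-0.3, 0.5, 0.2),
    (0.0, 0.9, -0.1),
    (4.0, 0.1, 0.3),    # far to the side
    (0.2, 0.4, -6.5),   # far behind
]
kept = crop_to_box(cloud, lower=(-1.0, 0.0, -1.0), upper=(1.0, 1.0, 1.0))
print(len(kept), "of", len(cloud), "points kept")
```

A box crop removes distant background but not stray points close to the surface, so some manual editing would likely still be needed.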

Figure 11: Enhanced model Raspberry Pi case, painted

Figure 12: Dense point cloud in VisualSFM

Case 9: Minion

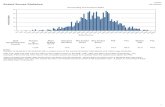

A Universal Studios “Despicable Me 2” Minion (Figure 13) was photographed on the turntable 235 times. 83 photos were captured with the minion flat on its back. The minion was also photographed as it “stood” for 92 photos with the camera parallel to the object, and for 80 photos with the camera at an approximate 45 degree angle above the object. The photos were processed through VisualSFM. The dense point cloud was edited and excess background noise was deleted. The edited model was imported into MeshLab, where a Poisson surface reconstruction was run with the following parameters: octree depth 12, solver divide 6, and samples per node 1. The mesh was deemed unsatisfactory for Blender.

The minion’s hands and feet were painted white (Figure 13). The same process was used to photograph the painted object. These photos were processed in VisualSFM. A point match (Figure 14) and a dense point cloud (Figure 15) were rendered and edited. The mesh built from the dense point cloud was smoothed using the compact faces, compact vertices, and smooth normals functions. The mesh was then scaled, using the minion's height of 13 cm, a pixel height of 3.05743, and a resulting scale factor of 42.51951, and aligned to the appropriate axis. Blender was used to further smooth the model and to remove noise from the mesh. The file was converted to a .stl. The minion was printed successfully.
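The scale factor reported above is consistent with dividing the real height, expressed in millimetres, by the model's height in mesh units. The Python sketch below reproduces that arithmetic and applies a uniform scale to a list of vertices. The function names and the sample vertices are illustrative, and the use of millimetres is inferred from the numbers rather than stated in the text.

```python
def scale_factor(real_height_mm, model_height_units):
    """Uniform factor that maps mesh units to millimetres."""
    if model_height_units <= 0:
        raise ValueError("model height must be positive")
    return real_height_mm / model_height_units


def scale_vertices(vertices, factor):
    """Scale every (x, y, z) vertex uniformly about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


factor = scale_factor(130.0, 3.05743)   # 13 cm expressed in millimetres
print(round(factor, 2))                 # 42.52

# Hypothetical vertices spanning the model's full height along the y-axis.
scaled = scale_vertices([(0.0, 0.0, 0.0), (0.0, 3.05743, 0.0)], factor)
print(round(scaled[1][1], 1))           # 130.0
```

The computed factor agrees with the reported value to three decimal places; the remaining difference may reflect rounding of the pixel height.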

Figure 13: Painted Minion

Figure 14: Point Match from minion

Figure 15: Dense Reconstruction Point Match from Minion

4.0 Results and Discussion:

Case 1 - Eraser: Only 20 matched points were found. Additionally, there was no similarity between the plotted points and the actual eraser. There were holes in the rendered point match, in addition to a large amount of noise. It was determined that more photos were required in order to render a complete model. It was also evident that an object that was monotonous in color, smooth in texture, and lacking dynamic edges would not yield desirable results when a point cloud was processed.

Case 2 - Vitamin Water bottle: A complete dense point cloud was created. When the point cloud was imported into MeshLab, it was evident that the clear portions of the bottle were seen as “holes” by the program. It was determined that clear objects were undesirable for this pipeline, due to a lack of shadows and edges.

Case 3 - Camelbak® water bottle: In order to combat the lack of edges caused by clear surfaces, a solid object was used. The dense reconstruction contained excess points from noise in addition to multiple holes. When the dense point cloud was loaded into MeshLab, it was evident that the bottle was still undesirable. The mesh rendered extra ridges and lacked fine detail and realism. When the mesh was closely observed, it was evident that not only clear objects were undesirable for this pipeline: any object with a reflective surface caused VisualSFM to add extra points, which produced an unrealistic representation of the model.

Case 4 - Raspberry Pi box: When the video was converted into .jpg files, the resulting pictures were too low in quality. The photos loaded in this case seemed to match parts of the case from opposite sides. This result reinforced the finding from Case 1 that a flat object that is monotonous in color and lacks texture does not offer enough edges to produce quality matches.

Case 5 - Raspberry Pi box taped and painted: The mesh created had an unrealistic rough texture and additional edges that were not present on the object. Through close inspection it was evident that the rough texture arose when VisualSFM perceived false depth from the added tape. It was also determined that there was no advantage from the use of a high resolution camera, nor from video capture.

Case 6 - Tiki model: When this model was first processed, it rendered without a leg and the drum. It seemed that without an appropriate amount of light, there would not be enough shadows to depict the fine detail in the model. In an attempt to combat this issue, tape was placed on the left half of the drum, the left leg, and the seat. When the new round of images was processed, all pieces of the object were reconstructed. This led to the conclusion that VisualSFM is not only limited in the reconstruction of reflective objects, but also struggles with symmetric objects. It was evident that the outside of the right leg looked exactly the same as the left leg; therefore the points were matched as one leg. In the final render in Blender, the hair and skirt were combined (Figure 7). This also showed that models containing large gaps would not render realistically, as MeshLab and Blender filled holes to correct for points that were assumed to be missing.

Case 7 - NIKE Women’s Size 8 Running Shoe: This object was photographed and processed multiple times in an attempt to combat the issues with symmetry. Though the shoe was not truly symmetric, the point cloud created rendered only the lateral half of the shoe. This showed that even a slight amount of symmetry caused VisualSFM to match similar images as the same point. Once the medial side of the shoe was taped and photographed in Trial 2, a complete printable model was rendered. The printed model (Figure 16) was only half the size of the original in all dimensions due to limitations on the size of the MakerGear 2 printer. The taped side had a rough surface caused by the extra depth created by the tape.

Figure 16: Printed shoe

Case 8 - Updated model Raspberry Pi box painted: The model produced (Figure 17) was successfully printed. The printed model was slightly larger than the original and had a rough texture. Like the original box, the printed replica was functional as a housing for a Raspberry Pi circuit. The use of different colored paints, with dark colors to depict depth and light colors for lack of depth, confirmed that VisualSFM sees dark colors as shadows or depth. The rough edges were believed to be caused by an uneven paint layer, in addition to fine shadows picked up between the layers of the original printed Raspberry Pi box.

Figure 17: Printed Raspberry Pi case

Case 9 - Minion: The first model processed lacked dimension in the hands. In an attempt to remedy this, the hands and feet were painted white. The second rendered model of the minion was one solid replica, with all parts clearly depicted. This showed that dark color was not only processed as depth but could also be interpreted as a hole. Black surfaces absorb nearly all light, which VisualSFM and MeshLab read as a hole in the mesh. The printed model had slightly rough edges around the goggle, and the arms were not smooth. Additionally, the printed model was larger than the original (Figure 18). The height of the original model from head to toe was 15 cm, compared to the printed model’s height of 17 cm, a 13% error. The circumference of the printed model was 29 cm, compared to the actual circumference of 22 cm, a 32% error. The proportions, however, were within spec. Because the model was scaled in MeshLab, the error was most likely introduced at that step. The model's pixel height along the y-axis was used to compute the scale in MeshLab; however, the model was not aligned directly to the y-axis. It was determined that this error in alignment caused MeshLab to perceive the model as larger than the actual object.
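The error percentages quoted for the minion follow from the usual relative error formula. The Python sketch below recomputes them from the measurements given in the text; the function name is illustrative.

```python
def percent_error(measured, actual):
    """Relative error of a measurement, as a percentage of the actual value."""
    if actual == 0:
        raise ValueError("actual value must be non-zero")
    return abs(measured - actual) / abs(actual) * 100.0


height_error = percent_error(measured=17.0, actual=15.0)
circumference_error = percent_error(measured=29.0, actual=22.0)
print(f"height: {height_error:.1f}%")                # 13.3%
print(f"circumference: {circumference_error:.1f}%")  # 31.8%
```

Rounded to whole numbers, these match the 13% and 32% reported above.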

Figure 18: Printed and original model

5.0 Conclusion:

The purpose of this research was to reconstruct a 3D model of an object via image processing for printing. The goal was to create an exact replica of the original object in which all aspects of the object were retained, including actual size, texture, functionality, and realism. Throughout this process it was determined that, for this processing pipeline of VisualSFM, MeshLab, and Blender, the physical characteristics of the objects photographed must be dynamic. Objects that were monotonous in color and shape and lacked texture and edges rendered incomplete models. The light on the object needed to be sufficient to illuminate all areas of the object. The most accurate models were reconstructed when photos were taken at constant time intervals through use of a turntable and the burst function on the iPhone camera. Best results also required multiple camera angles. A large number of photos, on the order of 100 to 250, should be taken for optimal results in a timely manner.

6.0 Acknowledgements

This work was funded by the US National Science Foundation (NSF) Research Experience for Teachers (RET) 1301089 in the Department of Computer Science at Appalachian State University in Boone, North Carolina. Special thanks to Dr. R. Mitchell Parry, Dr. Rahman Tashakkori and Michael Crawford for their help and guidance. Appreciation is also extended to the Burroughs Wellcome Fund and the North Carolina Science, Mathematics and Technology Center for their funding support for the North Carolina High School Computational Chemistry server.

7.0 References

1. Ventola, C.L. “Medical Applications for 3D Printing: Current and Projected Uses.” P&T, 39 (10). 704-708.

2. Larsen, Christian Lindequist. “3D Reconstruction of Buildings From Images with Automatic Façade Refinement.” Master’s Thesis in Vision, Graphics and Interactive Systems. June 2010.

3. Changchang Wu, "Towards Linear-time Incremental Structure from Motion", 3DV 2013.

4. Changchang Wu, "VisualSFM: A Visual Structure from Motion System", http://ccwu.me/vsfm/, 2011.

5. Changchang Wu, Sameer Agarwal, Brian Curless, and Steven M. Seitz, "Multicore Bundle Adjustment", CVPR 2011.

6. Changchang Wu, "SiftGPU: A GPU implementation of Scale Invariant Feature Transform (SIFT)", http://cs.unc.edu/~ccwu/siftgpu, 2007.

7. Blender Online Community. Blender - a 3D modelling and rendering package. Blender Foundation, Blender Institute, Amsterdam. 2013. http://www.blender.org