Computazione per l’interazione naturale: Richiami di...

Transcript of Computazione per l’interazione naturale: Richiami di...

Corso di Interazione Naturale

Prof. Giuseppe Boccignone

Dipartimento di InformaticaUniversità di Milano

[email protected]/IN_2016.html

Computazione per l’interazione naturale: Richiami di algebra lineare (3)

(e primi esempi di Machine Learning)

• Una matrice quadrata A è diagonalizzabile se esiste una matrice Q invertibile che consente la decomposizione

• Una matrice quadrata A reale e simmetrica è diagonalizzabile e avrà autovalori e autovettori distinti. Li normalizzo e li metto in Q che diventa quindi ortonormale

Teorema spettrale

Un po’ di algebra lineare di base //autovettori e autovalori

Un po’ di algebra lineare di base //Singular Value Decomposition (SVD)

Se la matrice non è quadrata?

Un po’ di algebra lineare di base //Singular Value Decomposition (SVD)

• Vale il seguente teorema

(aligner)(stretcher) x(hanger) x

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

Decomposizione SVD

ROUGH DRAFT - BEWARE suggestions [email protected]

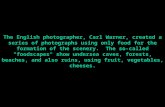

0 2 4 6 8 100

24

68

10

Figure 1: Best-fit regression line reduces data from two dimensions into one.

original data more clearly and orders it from most variation to the least. What makes SVDpractical for NLP applications is that you can simply ignore variation below a particularthreshhold to massively reduce your data but be assured that the main relationships ofinterest have been preserved.

8.1 Example of Full Singular Value Decomposition

SVD is based on a theorem from linear algebra which says that a rectangular matrix A canbe broken down into the product of three matrices - an orthogonal matrix U , a diagonalmatrix S, and the transpose of an orthogonal matrix V . The theorem is usually presentedsomething like this:

Amn = UmmSmnVTnn

where UTU = I, V TV = I; the columns of U are orthonormal eigenvectors of AAT , thecolumns of V are orthonormal eigenvectors of ATA, and S is a diagonal matrix containingthe square roots of eigenvalues from U or V in descending order.

The following example merely applies this definition to a small matrix in order to computeits SVD. In the next section, I attempt to interpret the application of SVD to documentclassification.

Start with the matrix

A =

!3 1 1−1 3 1

"

15

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

Un po’ di algebra lineare di base //Singular Value Decomposition (SVD)

• Vale il seguente teorema

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).The connection between eigenvectors and singular vectors is the following. For an arbitrary

real matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

392 Chapter 12. Latent linear models

12.2.3 Singular value decomposition (SVD)

We have defined the solution to PCA in terms of eigenvectors of the covariance matrix. However,there is another way to obtain the solution, based on the singular value decomposition, orSVD. This basically generalizes the notion of eigenvectors from square matrices to any kind ofmatrix.

In particular, any (real) N × D matrix X can be decomposed as follows

X!"#$N×D

= U!"#$N×N

S!"#$N×D

VT!"#$D×D

(12.46)

where U is an N × N matrix whose columns are orthornormal (so UTU = IN ), V is D × Dmatrix whose rows and columns are orthonormal (so VTV = VVT = ID ), and S is a N × Dmatrix containing the r = min(N,D) singular values σi ≥ 0 on the main diagonal, with 0sfilling the rest of the matrix. The columns of U are the left singular vectors, and the columnsof V are the right singular vectors. See Figure 12.8(a) for an example.Since there are at most D singular values (assuming N > D), the last N − D columns of U

are irrelevant, since they will be multiplied by 0. The economy sized SVD, or thin SVD, avoidscomputing these unnecessary elements. Let us denote this decomposition by USV. If N > D,we have

X!"#$N×D

= U!"#$N×D

S!"#$D×D

VT!"#$D×D

(12.47)

as in Figure 12.8(a). If N < D, we have

X!"#$N×D

= U!"#$N×N

S!"#$N×N

VT!"#$N×D

(12.48)

Computing the economy-sized SVD takes O(NDmin(N,D)) time (Golub and van Loan 1996,p254).

The connection between eigenvectors and singular vectors is the following. For an arbitraryreal matrix X, if X = USVT , we have

XTX = VSTUT USVT = V(STS)VT = VDVT (12.49)

where D = S2 is a diagonal matrix containing the squares singular values. Hence

(XTX)V = VD (12.50)

so the eigenvectors of XTX are equal to V, the right singular vectors of X, and the eigenvaluesof XTX are equal to D, the squared singular values. Similarly

XXT = USVT VSTUT = U(SST )UT (12.51)

(XXT )U = U(SST ) = UD (12.52)

so the eigenvectors of XXT are equal to U, the left singular vectors of X. Also, the eigenvaluesof XXT are equal to the squared singular values. We can summarize all this as follows:

U = evec(XXT ), V = evec(XTX), S2 = eval(XXT ) = eval(XTX) (12.53)

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

5.3 Singular Value Decomposition5 SOLUTIONS AND DECOMPOSITIONS

5.2.3 Symmetric

Assume A is symmetric, then

VVT = I (i.e. V is orthogonal) (260)�i 2 R (i.e. �i is real) (261)

Tr(Ap) =P

i�pi (262)

eig(I + cA) = 1 + c�i (263)eig(A� cI) = �i � c (264)

eig(A�1) = ��1

i (265)

For a symmetric, positive matrix A,

eig(AT A) = eig(AAT ) = eig(A) � eig(A) (266)

5.2.4 Characteristic polynomial

The characteristic polynomial for the matrix A is

0 = det(A� �I) (267)= �n � g

1

�n�1 + g2

�n�2 � ... + (�1)ngn (268)

Note that the coe�cients gj for j = 1, ..., n are the n invariants under rotationof A. Thus, gj is the sum of the determinants of all the sub-matrices of A takenj rows and columns at a time. That is, g

1

is the trace of A, and g2

is the sumof the determinants of the n(n� 1)/2 sub-matrices that can be formed from Aby deleting all but two rows and columns, and so on – see [17].

5.3 Singular Value Decomposition

Any n⇥m matrix A can be written as

A = UDVT , (269)

whereU = eigenvectors of AAT n⇥ n

D =p

diag(eig(AAT )) n⇥mV = eigenvectors of AT A m⇥m

(270)

5.3.1 Symmetric Square decomposed into squares

Assume A to be n⇥ n and symmetric. Then⇥

A⇤

=⇥

V⇤ ⇥

D⇤ ⇥

VT⇤, (271)

where D is diagonal with the eigenvalues of A, and V is orthogonal and theeigenvectors of A.

Petersen & Pedersen, The Matrix Cookbook, Version: November 14, 2008, Page 30

Un po’ di algebra lineare di base //Singular Value Decomposition (SVD)

• Esempio

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 100

24

68

10

Figure 1: Best-fit regression line reduces data from two dimensions into one.

original data more clearly and orders it from most variation to the least. What makes SVDpractical for NLP applications is that you can simply ignore variation below a particularthreshhold to massively reduce your data but be assured that the main relationships ofinterest have been preserved.

8.1 Example of Full Singular Value Decomposition

SVD is based on a theorem from linear algebra which says that a rectangular matrix A canbe broken down into the product of three matrices - an orthogonal matrix U , a diagonalmatrix S, and the transpose of an orthogonal matrix V . The theorem is usually presentedsomething like this:

Amn = UmmSmnVTnn

where UTU = I, V TV = I; the columns of U are orthonormal eigenvectors of AAT , thecolumns of V are orthonormal eigenvectors of ATA, and S is a diagonal matrix containingthe square roots of eigenvalues from U or V in descending order.

The following example merely applies this definition to a small matrix in order to computeits SVD. In the next section, I attempt to interpret the application of SVD to documentclassification.

Start with the matrix

A =

!3 1 1−1 3 1

"

15

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 10

02

46

810

Figure 2: Regression line along second dimension captures less variation in original data.

In order to find U , we have to start with AAT . The transpose of A is

AT =

⎡

⎢⎣3 −11 31 1

⎤

⎥⎦

so

AAT =

[3 1 1−1 3 1

] ⎡

⎢⎣3 −11 31 1

⎤

⎥⎦ =

[11 11 11

]

Next, we have to find the eigenvalues and corresponding eigenvectors of AAT . We know thateigenvectors are defined by the equation Av = λv, and applying this to AAT gives us

[11 11 11

] [x1

x2

]

= λ

[x1

x2

]

We rewrite this as the set of equations

11x1 + x2 = λx1

x1 + 11x2 = λx2

and rearrange to get(11− λ)x1 + x2 = 0

16

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 10

02

46

810

Figure 2: Regression line along second dimension captures less variation in original data.

In order to find U , we have to start with AAT . The transpose of A is

AT =

⎡

⎢⎣3 −11 31 1

⎤

⎥⎦

so

AAT =

[3 1 1−1 3 1

] ⎡

⎢⎣3 −11 31 1

⎤

⎥⎦ =

[11 11 11

]

Next, we have to find the eigenvalues and corresponding eigenvectors of AAT . We know thateigenvectors are defined by the equation Av = λv, and applying this to AAT gives us

[11 11 11

] [x1

x2

]

= λ

[x1

x2

]

We rewrite this as the set of equations

11x1 + x2 = λx1

x1 + 11x2 = λx2

and rearrange to get(11− λ)x1 + x2 = 0

16

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 10

02

46

810

Figure 2: Regression line along second dimension captures less variation in original data.

In order to find U , we have to start with AAT . The transpose of A is

AT =

⎡

⎢⎣3 −11 31 1

⎤

⎥⎦

so

AAT =

[3 1 1−1 3 1

] ⎡

⎢⎣3 −11 31 1

⎤

⎥⎦ =

[11 11 11

]

Next, we have to find the eigenvalues and corresponding eigenvectors of AAT . We know thateigenvectors are defined by the equation Av = λv, and applying this to AAT gives us

[11 11 11

] [x1

x2

]

= λ

[x1

x2

]

We rewrite this as the set of equations

11x1 + x2 = λx1

x1 + 11x2 = λx2

and rearrange to get(11− λ)x1 + x2 = 0

16

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 10

02

46

810

Figure 2: Regression line along second dimension captures less variation in original data.

In order to find U , we have to start with AAT . The transpose of A is

AT =

⎡

⎢⎣3 −11 31 1

⎤

⎥⎦

so

AAT =

[3 1 1−1 3 1

] ⎡

⎢⎣3 −11 31 1

⎤

⎥⎦ =

[11 11 11

]

Next, we have to find the eigenvalues and corresponding eigenvectors of AAT . We know thateigenvectors are defined by the equation Av = λv, and applying this to AAT gives us

[11 11 11

] [x1

x2

]

= λ

[x1

x2

]

We rewrite this as the set of equations

11x1 + x2 = λx1

x1 + 11x2 = λx2

and rearrange to get(11− λ)x1 + x2 = 0

16

ROUGH DRAFT - BEWARE suggestions [email protected]

0 2 4 6 8 10

02

46

810

Figure 2: Regression line along second dimension captures less variation in original data.

In order to find U , we have to start with AAT . The transpose of A is

AT =

⎡

⎢⎣3 −11 31 1

⎤

⎥⎦

so

AAT =

[3 1 1−1 3 1

] ⎡

⎢⎣3 −11 31 1

⎤

⎥⎦ =

[11 11 11

]

Next, we have to find the eigenvalues and corresponding eigenvectors of AAT . We know thateigenvectors are defined by the equation Av = λv, and applying this to AAT gives us

[11 11 11

] [x1

x2

]

= λ

[x1

x2

]

We rewrite this as the set of equations

11x1 + x2 = λx1

x1 + 11x2 = λx2

and rearrange to get(11− λ)x1 + x2 = 0

16

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

Un po’ di algebra lineare di base //Singular Value Decomposition (SVD)

• ortonormalizziamo la matrice

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#

Finally, we have to convert this matrix into an orthogonal matrix which we do by applyingthe Gram-Schmidt orthonormalization process to the column vectors. Begin by normalizingv1.

u1 =v1|v1|

=[1, 1]√12 + 12

=[1, 1]√

2= [

1√2,1√2]

Computew2 = v2 − u1 · v2 ∗ u1 =

17

ROUGH DRAFT - BEWARE suggestions [email protected]

x1 + (11− λ)x2 = 0

Solve for λ by setting the determinant of the coefficient matrix to zero,!!!!!(11− λ) 1

1 (11− λ)

!!!!! = 0

which works out as(11− λ)(11− λ)− 1 · 1 = 0

(λ− 10)(λ− 12) = 0

λ = 10,λ = 12

to give us our two eigenvalues λ = 10,λ = 12. Plugging λ back in to the original equationsgives us our eigenvectors. For λ = 10 we get

(11− 10)x1 + x2 = 0

x1 = −x2

which is true for lots of values, so we’ll pick x1 = 1 and x2 = −1 since those are small andeasier to work with. Thus, we have the eigenvector [1,−1] corresponding to the eigenvalueλ = 10. For λ = 12 we have

(11− 12)x1 + x2 = 0

x1 = x2

and for the same reason as before we’ll take x1 = 1 and x2 = 1. Now, for λ = 12 we have theeigenvector [1, 1]. These eigenvectors become column vectors in a matrix ordered by the sizeof the corresponding eigenvalue. In other words, the eigenvector of the largest eigenvalueis column one, the eigenvector of the next largest eigenvalue is column two, and so forthand so on until we have the eigenvector of the smallest eigenvalue as the last column of ourmatrix. In the matrix below, the eigenvector for λ = 12 is column one, and the eigenvectorfor λ = 10 is column two. "

1 11 −1

#