City University of Hong Kongpersonal.cb.cityu.edu.hk › msawan › grf2018d.pdfOnline Supplementary...


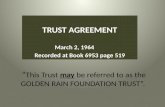

Online Supplementary Material for GRF Proposal 2018:

“New Developments on Frequentist Model Averaging in Statistics”

Alan WAN (P.I.), Xinyu ZHANG (Co-I.),

Guohua ZOU (Co-I.)

Summary

This document provides the proofs of the preliminary theoretical results of the captioned GRF Proposal. Note that the notation used in each part of this document pertains only to that part and may carry a different meaning from the same notation used in other parts of the document.

Contents: Part 1; Part 2 (to be completed); Part 3; Part 4.

1 Part 1: Frequentist model averaging for high-dimensional quantile regression

1.1 Model Framework and Covariate Dimension Reduction

Let $y$ be a scalar dependent variable and $x = (x_1, x_2, \ldots, x_p)^T$ a $p$-dimensional vector of covariates, where $p$ can be infinite. Let $D_n = \{(x_i, y_i), i = 1, \ldots, n\}$ be independent and identically distributed (IID) copies of $(x, y)$, where $x_i = (x_{i1}, x_{i2}, \ldots, x_{ip})^T$. Assume that the $\tau$th ($0 < \tau < 1$) conditional quantile of $y$ is given by

$$
Q_\tau(y \mid x) = \mu_i(\tau) = \sum_{j=1}^{p} \theta_j(\tau)\, x_{ij}, \tag{1.1}
$$

where $\theta_j(\tau)$ is an unknown coefficient. This leads to the following linear QR model:

$$
y_i = \sum_{j=1}^{p} \theta_j(\tau)\, x_{ij} + \varepsilon_i(\tau), \tag{1.2}
$$

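where $\varepsilon_i(\tau) \equiv y_i - \sum_{j=1}^{p} \theta_j(\tau) x_{ij}$ is an unobservable IID error term such that $P(\varepsilon_i(\tau) \le 0 \mid x_i) = \tau$. For simplicity, we will write $\mu_i = \mu_i(\tau)$, $\varepsilon_i = \varepsilon_i(\tau)$ and $\theta_j = \theta_j(\tau)$.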

Clearly, not all of the $x_j$'s are useful for predicting $y$. Model selection attempts to select a subset of covariates in $x$ and use them as the covariates in a single working model for all subsequent analysis and inference. Model averaging, in contrast, attempts to combine results obtained from different models, each based on a distinct subset of covariates. As discussed in the proposal, model averaging has an important advantage over model selection in that it shields against the choice of a very poor model, and there is ample evidence suggesting that model averaging is the preferred approach from a sampling-theoretic point of view. The situation at hand is complicated by the colossal number of plausible models due to the high dimensionality of $x$. When estimating the conditional mean of $y$, Ando & Li (2014) encountered a similar problem and proposed a covariate dimension reduction method whereby the covariates are clustered into $M+1$ groups according to the magnitudes of their marginal correlations with $y$, and the group containing covariates with near-zero correlations with $y$ is dropped. This results in $M$ candidate models, each formed by regressing $y$ on one of the remaining $M$ clusters of covariates that survive the screening step.

When the interest is in regression quantiles, Ando & Li's (2014) ranking by marginal correlation does not provide the flexibility for different covariates to be selected at different quantiles. Here, we adopt an alternative framework based on the marginal quantile utility proposed by He et al. (2013) for covariate ranking and screening. Let $\mathcal{M}_\tau = \{j : Q_\tau(y \mid x) \text{ functionally depends on } x_j\}$ be the set of informative covariates at quantile level $\tau$, $Q_\tau(y \mid x_j)$ the $\tau$th conditional quantile of $y$ given $x_j$, and $Q_\tau(y)$ the $\tau$th unconditional quantile of $y$. He et al.'s (2013) approach is based on the premise that $q_\tau(y \mid x_j) = Q_\tau(y \mid x_j) - Q_\tau(y) = 0$ iff $y$ and $x_j$ are independent. Thus, $q_\tau(y \mid x_j)$ may be taken as a measure of the utility of $x_j$: the closer $q_\tau(y \mid x_j)$ is to zero, the less useful $x_j$ is in explaining $y$ at quantile level $\tau$. Let $F$ be the distribution function of $y$. The $\tau$th unconditional quantile of $y$ is thus $\xi_0(\tau) = \inf\{y : F(y) \ge \tau\}$, or $F^{-1}(\tau)$. Likewise, let $F_n$ be the empirical distribution function of the sample $y_i$, $i = 1, \ldots, n$. Correspondingly, the sample analogue of $F^{-1}(\tau)$ is $F_n^{-1}(\tau)$, the $\tau$th quantile of $F_n$. We use $F_n^{-1}(\tau)$ as the estimator of $\xi_0(\tau)$ and denote it by $\hat\xi(\tau)$.
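As a minimal illustration of the estimator $\hat\xi(\tau) = F_n^{-1}(\tau)$, the Python sketch below computes $\inf\{t : F_n(t) \ge \tau\}$ directly from the sorted sample, together with the check loss $\rho_\tau(r) = r(\tau - 1\{r < 0\})$ used throughout this part. It also lets one verify numerically the standard fact that the sample quantile minimises the empirical check loss. The function names are our own and purely illustrative.

```python
def check_loss(r, tau):
    """Check (pinball) loss rho_tau(r) = r * (tau - 1{r < 0})."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def sample_quantile(y, tau):
    """Return F_n^{-1}(tau) = inf{t : F_n(t) >= tau} for 0 < tau < 1."""
    ys = sorted(y)
    n = len(ys)
    for k, value in enumerate(ys, start=1):
        # F_n(value) >= k / n, while any t below value has F_n(t) <= (k - 1) / n
        if k / n >= tau:
            return value
    return ys[-1]


def empirical_check_loss(y, q, tau):
    """sum_i rho_tau(y_i - q)."""
    return sum(check_loss(yi - q, tau) for yi in y)


if __name__ == "__main__":
    y = [3, 1, 4, 1, 5, 9, 2, 6]
    tau = 0.25
    xi_hat = sample_quantile(y, tau)
    best = min(empirical_check_loss(y, q, tau) for q in y)
    print(xi_hat, empirical_check_loss(y, xi_hat, tau), best)
```

Scanning the sorted sample keeps the code a literal transcription of the definition; ties are handled correctly because the first index $k$ with $k/n \ge \tau$ is returned.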

For the estimation of $Q_\tau(y \mid x_j)$, He et al. (2013) suggested a B-spline approach. To describe it, assume that $x_j$ takes values in the interval $[u, v]$ and that $Q_\tau(y \mid x_j)$ belongs to the class of functions $\mathcal{F}$ defined under Assumption (A.1) in Appendix 2.1. Let $u = s_0 < s_1 < \cdots < s_k = v$ be a partition of $[u, v]$, and use the $s_i$'s as knots for constructing $N = k + l$ normalized B-spline basis functions $B_1(t), \ldots, B_N(t)$ such that $\|B_r(\cdot)\|_\infty \le 1$, $r = 1, \ldots, N$, where $\|\cdot\|_\infty$ denotes the sup norm. Write $\boldsymbol{\pi}(t) = (B_1(t), \ldots, B_N(t))^T$, and assume that $f_j(t) \in \mathcal{F}$. Then $f_j(t)$ can be approximated by a linear combination of the basis functions, $\boldsymbol{\pi}(t)^T\beta$, for some $\beta \in \mathbb{R}^N$. Define $\hat\beta_j = \arg\min_{\beta \in \mathbb{R}^N} \sum_{i=1}^n \rho_\tau\big(y_i - \boldsymbol{\pi}(x_{ij})^T\beta\big)$, where $\rho_\tau(r) = r(\tau - 1\{r < 0\})$ is the check loss, and use

$$
\hat f_j(x_j) = \boldsymbol{\pi}(x_j)^T\hat\beta_j - \hat\xi(\tau) \tag{1.3}
$$

as an estimator of $q_\tau(y \mid x_j)$. We expect $\hat f_j(x_j)$ to be close to zero if $x_j$ is independent of $y$.
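To make the construction of $\boldsymbol{\pi}(t)$ concrete, the sketch below evaluates the $N = k + l$ normalized B-spline basis functions of degree $l$ on $[u, v]$ via the Cox-de Boor recursion. Two choices are our own assumptions rather than details stated in the text: the knots $s_0, \ldots, s_k$ are equally spaced, and each end knot is repeated $l$ extra times (a clamped knot vector). Under these choices the basis functions are nonnegative and sum to one, so $\|B_r(\cdot)\|_\infty \le 1$ as required.

```python
def clamped_knots(u, v, k, degree):
    """Equally spaced knots u = s_0 < ... < s_k = v, with each end knot repeated
    `degree` extra times (an illustrative choice of knot vector)."""
    inner = [u + (v - u) * i / k for i in range(k + 1)]
    return [u] * degree + inner + [v] * degree


def bspline_basis(t, knots, degree):
    """Values (B_1(t), ..., B_N(t)) of the normalized B-splines of the given degree,
    computed by the Cox-de Boor recursion; N = len(knots) - degree - 1."""
    # degree 0: indicators of the half-open knot spans [knots[i], knots[i+1])
    b = [1.0 if knots[i] <= t < knots[i + 1] else 0.0 for i in range(len(knots) - 1)]
    if t == knots[-1]:
        # put the right end point v into the last non-empty span
        last = max(i for i in range(len(knots) - 1) if knots[i] < knots[i + 1])
        b[last] = 1.0
    for p in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - p - 1):
            left = right = 0.0
            den_left = knots[i + p] - knots[i]
            if den_left > 0:
                left = (t - knots[i]) / den_left * b[i]
            den_right = knots[i + p + 1] - knots[i + 1]
            if den_right > 0:
                right = (knots[i + p + 1] - t) / den_right * b[i + 1]
            nxt.append(left + right)
        b = nxt
    return b


if __name__ == "__main__":
    knots = clamped_knots(0.0, 1.0, k=4, degree=3)  # N = k + l = 7 basis functions
    values = bspline_basis(0.37, knots, degree=3)
    print(len(values), sum(values))
```

With $k = 4$ intervals and cubic splines ($l = 3$), the knot vector has $3 + 5 + 3 = 11$ entries and the recursion returns $11 - 3 - 1 = 7 = k + l$ basis values, matching the count $N = k + l$ in the text.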


Thus, it makes sense to use

$$
\|\hat f_j(x_j)\|_{1n} = n^{-1}\sum_{i=1}^n \big|\hat f_j(x_{ij})\big| = n^{-1}\sum_{i=1}^n \big|\boldsymbol{\pi}(x_{ij})^T\hat\beta_j - \hat\xi(\tau)\big|
$$

as the basis for covariate screening and ranking. Hereafter, we refer to $\|\hat f_j(x_j)\|_{1n}$ as the marginal quantile utility of $x_j$.
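Once the fitted values $\boldsymbol{\pi}(x_{ij})^T\hat\beta_j$ and $\hat\xi(\tau)$ are available, the marginal quantile utility is simply a mean absolute deviation. The sketch below takes those fitted values as given inputs (the B-spline quantile regression that produces them is not reproduced here); the function name is ours.

```python
def marginal_quantile_utility(fitted, xi_hat):
    """||f_j(x_j)||_{1n} = n^{-1} sum_i |fitted_i - xi_hat|,
    where fitted_i stands for pi(x_ij)^T beta_hat_j."""
    if not fitted:
        raise ValueError("need at least one observation")
    return sum(abs(f - xi_hat) for f in fitted) / len(fitted)
```

For example, a covariate whose fitted conditional quantiles all equal $\hat\xi(\tau)$ receives utility zero, which is the behaviour expected of a covariate independent of $y$.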

Recall that the informative covariates are those that are useful for explaining $y$. Assume that there are $s$ ($< p$) informative covariates, and let $x_T$ and $x_F$ be $s \times 1$ and $(p - s) \times 1$ vectors containing the informative and non-informative covariates, respectively. Without loss of generality, let $x_T$ correspond to the first $s$ covariates in $x$. Thus, $x = (x_T^T, x_F^T)^T$.

Theorem 1.1. Under Assumptions (A.1)-(A.5) and (B.1)-(B.3) in Appendix 2.1, we have

$$
P\Big(\max_{j \in T^c}\|\hat f_j(x_j)\|_{1n} \ge \min_{j \in T}\|\hat f_j(x_j)\|_{1n}\Big) \to 0 \quad \text{as } n \to \infty,
$$

where $T = \{1, \ldots, s\}$ and $T^c = \{s+1, \ldots, p\}$ are the index sets corresponding to the informative and non-informative covariates, respectively.

Proof: See Subsection 2.2.

Remark 1.1. He et al. (2013) used $\|\hat f_j(x_j)\|_{2n}^2 = n^{-1}\sum_{i=1}^n \hat f_j(x_{ij})^2$ as the basis for covariate screening. Let $\nu_n$ be a predefined threshold value. The covariates that survive He et al.'s (2013) screening procedure are contained in the subset $\hat{\mathcal{M}}_\tau = \{j \ge 1 : \|\hat f_j(x_j)\|_{2n}^2 \ge \nu_n\}$. He et al. (2013) proved that this procedure achieves the sure screening property (Fan & Lv, 2008), i.e., at each $\tau$, $P(T \subset \hat{\mathcal{M}}_\tau) \to 1$ as $n \to \infty$; that is, the informative covariates are retained with probability tending to one as $n$ increases. However, note that $\|\hat f_{j_1}(x_{j_1})\|_{2n}^2 \ge \|\hat f_{j_2}(x_{j_2})\|_{2n}^2$ does not always imply $\|\hat f_{j_1}(x_{j_1})\|_{1n} \ge \|\hat f_{j_2}(x_{j_2})\|_{1n}$. Thus, while He et al.'s (2013) procedure is useful for screening the covariates, the same cannot be said when the objective is to rank the covariates.
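A small numerical example, with made-up values of $\hat f_j(x_{ij})$ chosen only for illustration, shows why the ordering under $\|\cdot\|_{2n}^2$ need not match the ordering under $\|\cdot\|_{1n}$: a covariate with one large deviation can dominate in mean square while another with uniformly moderate deviations dominates in mean absolute value.

```python
def utility_l1(values):
    """||f||_{1n}: mean absolute value."""
    return sum(abs(v) for v in values) / len(values)


def utility_l2(values):
    """||f||_{2n}^2: mean squared value."""
    return sum(v * v for v in values) / len(values)


# Hypothetical values of f_hat_j(x_ij), i = 1, ..., 4, for two covariates j1 and j2
f_j1 = [2.0, 0.0, 0.0, 0.0]
f_j2 = [0.8, 0.8, 0.8, 0.8]

print(utility_l2(f_j1), utility_l2(f_j2))  # j1 ranked higher under the squared criterion
print(utility_l1(f_j1), utility_l1(f_j2))  # j2 ranked higher under the absolute criterion
```

Here $\|\hat f_{j_1}\|_{2n}^2 = 1 > 0.64 = \|\hat f_{j_2}\|_{2n}^2$, whereas $\|\hat f_{j_1}\|_{1n} = 0.5 < 0.8 = \|\hat f_{j_2}\|_{1n}$.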

Remark 1.2. Theorem 1.1 shows that, under our procedure, every informative covariate is ranked above every non-informative covariate with probability tending to one. When the procedure is used for covariate screening, Theorem 1.1 implies that an informative covariate cannot be dropped while a non-informative covariate is retained.

He et al. (2013) recommend keeping the covariates corresponding to the top $[n/\log(n)]$ values of $\|\hat f_j(x_j)\|_{2n}^2$ and dropping the rest, where $[a]$ rounds $a$ down to the nearest integer. We adopt an analogous approach here by retaining only the covariates with the $[n/\log(n)]$ highest values of $\|\hat f_j(x_j)\|_{1n}$.
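The retention rule can be sketched as follows, assuming the utilities $\|\hat f_j(x_j)\|_{1n}$ have already been computed for $j = 1, \ldots, p$. We take $\log$ to be the natural logarithm, which the text does not state explicitly, and use 0-based covariate indices; the function name is hypothetical.

```python
import math


def screen_by_utility(utilities, n):
    """Indices of the [n / log(n)] covariates with the largest marginal quantile
    utilities, ordered from most to least useful (requires n >= 2)."""
    keep = math.floor(n / math.log(n))  # [a] rounds a down
    order = sorted(range(len(utilities)), key=lambda j: utilities[j], reverse=True)
    return order[:keep]


if __name__ == "__main__":
    # n = 10 observations gives [10 / log(10)] = [4.34...] = 4 retained covariates
    print(screen_by_utility([0.1, 0.9, 0.3, 0.7, 0.5, 0.2], n=10))
```

Returning the survivors already sorted by utility is convenient for the next step, where they are grouped into clusters by rank.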

1.2 Model averaging and a delete-one CV criterion

Having reduced the dimension of the covariates, our next step entails constructing the candidate models. For each quantile level $\tau$, we divide the $[n/\log(n)]$ covariates that survive the above screening step into $M$ clusters of approximately equal size based on their estimated marginal quantile utilities, placing the most influential covariates in the first cluster, the next most influential in the second cluster, and so on, with the least influential in the $M$th cluster. This results in $M$ candidate models, where the $m$th model includes the $m$th cluster of covariates, $m = 1, \ldots, M$. This idea of grouping covariates follows that of Ando & Li (2014), the only major differences being that Ando & Li (2014) focused on mean estimation and ranked the covariates by their marginal correlations.
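A sketch of the clustering step, assuming the surviving covariates are supplied already ranked from most to least useful. How to distribute the remainder when $[n/\log(n)]$ is not a multiple of $M$ is not specified in the text; here, as an illustrative choice, the first clusters receive one extra covariate each.

```python
def split_into_clusters(ranked, M):
    """Split a ranked list of covariate indices into M contiguous clusters whose
    sizes differ by at most one; cluster 1 holds the most useful covariates."""
    if not 1 <= M <= len(ranked):
        raise ValueError("need 1 <= M <= number of retained covariates")
    q, r = divmod(len(ranked), M)
    clusters, start = [], 0
    for m in range(M):
        size = q + (1 if m < r else 0)
        clusters.append(ranked[start:start + size])
        start += size
    return clusters
```

For instance, five ranked survivors `[1, 3, 4, 2, 0]` split into $M = 2$ clusters give `[[1, 3, 4], [2, 0]]`; candidate model $m$ then uses the covariates in the $m$th list.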

Write the $m$th candidate model as

$$
y_i = x_{i(m)}^T\Theta_{(m)} + \varepsilon_i = \sum_{j=1}^{k_m}\theta_{j(m)}x_{ij(m)} + \varepsilon_i, \tag{1.4}
$$

where $k_m$ denotes the number of covariates in the $m$th model, $\Theta_{(m)} = (\theta_{1(m)}, \ldots, \theta_{k_m(m)})^T$, $x_{i(m)} = (x_{i1(m)}, \ldots, x_{ik_m(m)})^T$, $x_{ij(m)}$ is a covariate and $\theta_{j(m)}$ is the corresponding coefficient, $j = 1, \ldots, k_m$. The $\tau$th QR estimator of $\Theta_{(m)}$ in the above model is

$$
\hat\Theta_{(m)} \equiv \arg\min_{\Theta_{(m)} \in \mathbb{R}^{k_m}} \sum_{i=1}^n \rho_\tau\big(y_i - x_{i(m)}^T\Theta_{(m)}\big). \tag{1.5}
$$

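Let $\hat\varepsilon_{i(m)} \equiv y_i - \hat\Theta_{(m)}^T x_{i(m)}$, and let $w \equiv (w_1, \ldots, w_M)^T$ be a weight vector in the unit hypercube $\mathcal{W} \equiv [0, 1]^M = \{w : 0 \le w_m \le 1,\ m = 1, \ldots, M\}$. The model averaging estimator of $\mu_i$ is thus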

$$
\hat\mu_i(w) = \sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{(m)}. \tag{1.6}
$$

Unlike the weights in Lu & Su (2015), our weights are not subject to the conventional restriction $\sum_{m=1}^M w_m = 1$. As in Ando & Li (2014), removing this restriction is justified in our context by the fact that our $M$ models are not equally competitive, given the manner in which the covariates are allocated to the different models.

We propose selecting $w$ by the jackknife, or leave-one-out cross-validation, criterion described as follows.

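For $m = 1, \ldots, M$, let $\hat\Theta_{i(m)}$ be the jackknife estimator of $\Theta_{(m)}$ in model $m$, computed with the $i$th observation deleted. Consider the leave-one-out CV criterion

$$
CV_n(w) = \frac{1}{n}\sum_{i=1}^n \rho_\tau\Big(y_i - \sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}\Big). \tag{1.7}
$$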

The jackknife weight vector $\hat w = (\hat w_1, \ldots, \hat w_M)^T$ is obtained by choosing $w \in \mathcal{W}$ such that

$$
\hat w = \arg\min_{w \in \mathcal{W}} CV_n(w). \tag{1.8}
$$
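Criterion (1.7) is cheap to evaluate once the leave-one-out fits $x_{i(m)}^T\hat\Theta_{i(m)}$ are stored. The sketch below evaluates $CV_n(w)$ from such stored fits and, purely for illustration with small $M$, minimises it over a regular grid on $\mathcal{W} = [0,1]^M$. The grid search is our own crude stand-in: the text solves (1.8) as a linear program, and the grid's cost grows exponentially in $M$. Function names are hypothetical.

```python
import itertools


def check_loss(r, tau):
    """rho_tau(r) = r * (tau - 1{r < 0})."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def cv_criterion(w, y, loo_fits, tau):
    """CV_n(w) in (1.7); loo_fits[m][i] holds x_{i(m)}^T Theta_hat_{i(m)}."""
    n = len(y)
    total = 0.0
    for i in range(n):
        pred = sum(w_m * fits[i] for w_m, fits in zip(w, loo_fits))
        total += check_loss(y[i] - pred, tau)
    return total / n


def grid_search_weights(y, loo_fits, tau, steps=20):
    """Crude minimiser of CV_n over a grid on [0, 1]^M (illustration only)."""
    grid = [s / steps for s in range(steps + 1)]
    best_w, best_val = None, float("inf")
    for w in itertools.product(grid, repeat=len(loo_fits)):
        val = cv_criterion(w, y, loo_fits, tau)
        if val < best_val:
            best_w, best_val = w, val
    return best_w, best_val
```

Note that the weights are not forced to sum to one, in line with the choice of $\mathcal{W}$ discussed above.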

Substituting $\hat w$ for $w$ in (1.6) results in the following jackknife model averaging (JMA) estimator of $\mu_i$ for high-dimensional quantile regression:

$$
\hat\mu_i(\hat w) = \sum_{m=1}^M \hat w_m x_{i(m)}^T\hat\Theta_{(m)}. \tag{1.9}
$$

The calculation of the weight vector $\hat w$ is a straightforward linear programming problem.
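For concreteness, one standard way to write (1.8) as a linear program (our own rendering, not a formulation given in the text) splits each cross-validation residual into positive and negative parts $u_i^+, u_i^- \ge 0$, so that the check loss is replaced by the linear term $\tau u_i^+ + (1-\tau)u_i^-$:

$$
\begin{aligned}
\min_{w,\,u^+,\,u^-}\quad & \frac1n\sum_{i=1}^n\big[\tau u_i^+ + (1-\tau)u_i^-\big]\\
\text{subject to}\quad & y_i - \sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)} = u_i^+ - u_i^-,\quad i = 1,\ldots,n,\\
& u_i^+ \ge 0,\ u_i^- \ge 0,\quad 0 \le w_m \le 1,\ m = 1,\ldots,M.
\end{aligned}
$$

At an optimum, at most one of $u_i^+$ and $u_i^-$ is positive for each $i$ (otherwise both could be reduced, lowering the objective), so the objective equals $CV_n(w)$ and any LP solver yields $\hat w$.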


1.3 Asymptotic property of estimator

This section is devoted to an investigation of the theoretical properties of the JMA estimator. Specifically, we show that the jackknife weight vector $\hat w$ is asymptotically optimal in the sense of minimizing the following out-of-sample final prediction error:

$$
FPE_n(w) = E\Big[\rho_\tau\Big(y^* - \sum_{m=1}^M w_m x_{(m)}^{*T}\hat\Theta_{(m)}\Big) \,\Big|\, D_n\Big], \tag{1.10}
$$

where $(y^*, x^*)$ is an independent copy of $(y, x)$, $D_n = \{(x_i, y_i), i = 1, \ldots, n\}$, and $x_{(m)}^* = (x_{1(m)}^*, \ldots, x_{k_m(m)}^*)^T$, with $x_{1(m)}^*, \ldots, x_{k_m(m)}^*$ the variables in $x^*$ that correspond to the $k_m$ regressors in the $m$th model.

Following the notation of Lu & Su (2015), let $f(\cdot \mid x_i)$ and $F(\cdot \mid x_i)$ denote the conditional probability density function (PDF) and cumulative distribution function (CDF) of $\varepsilon_i$ given $x_i$, respectively, and $f_{y|x}(\cdot \mid x_i)$ the conditional PDF of $y_i$ given $x_i$. Consider the following pseudo-true parameter:

$$
\Theta_{(m)}^* = \arg\min_{\Theta_{(m)} \in \mathbb{R}^{k_m}} E\big[\rho_\tau\big(y_i - x_{i(m)}^T\Theta_{(m)}\big)\big]. \tag{1.11}
$$

Under Assumptions (C.1)-(C.3) in Subsection 2.1, we can show that $\Theta_{(m)}^*$ exists and is unique due to the convexity of the objective function. For $m = 1, \ldots, M$, define

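$$
A_{(m)} = E\big[f(-u_{i(m)} \mid x_i)\,x_{i(m)}x_{i(m)}^T\big] = E\big[f_{y|x}(x_{i(m)}^T\Theta_{(m)}^* \mid x_i)\,x_{i(m)}x_{i(m)}^T\big], \tag{1.12}
$$

$$
B_{(m)} = E\big[\psi_\tau(\varepsilon_i + u_{i(m)})^2\,x_{i(m)}x_{i(m)}^T\big], \tag{1.13}
$$

and

$$
V_{(m)} = A_{(m)}^{-1}B_{(m)}A_{(m)}^{-1}, \tag{1.14}
$$

where $u_{i(m)} = \mu_i - x_{i(m)}^T\Theta_{(m)}^*$ is the approximation bias of the $m$th candidate model and $\psi_\tau(\varepsilon_i) = \tau - 1\{\varepsilon_i \le 0\}$. Let $k = \max_{1 \le m \le M} k_m$.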

Theorem 1.2. Suppose Assumptions (C.1)-(C.3) in Appendix 2.1 hold. Then $\hat w$ is asymptotically optimal in the sense that

$$
\frac{FPE_n(\hat w)}{\inf_{w \in \mathcal{W}} FPE_n(w)} = 1 + o_p(1). \tag{1.15}
$$

Proof See Subsection 2.3.

Remark 1.3. Theorem 1.2 shows that the FMA estimator based on the jackknife weight vector yields an out-of-sample final prediction error that is asymptotically identical to that of the FMA estimator using the infeasible optimal weight vector. This is similar to the result of Lu & Su (2015), who considered a low-dimensional covariate setup. Note that Assumption (C.3) in the Appendix allows $\sum_{m=1}^M k_m$ to go to infinity.


2 Proofs of Theorems 1.1 and 1.2

2.1 Assumptions and Lemmas useful for proving Theorems 1.1 and 1.2

Our derivation of results requires the following regularity conditions from He et al. (2013).

Condition (A.1). Let $\mathcal{F}$ be the class of functions defined on $[0, 1]$ whose $l$th derivative satisfies the following Lipschitz condition of order $c^*$: $|f^{(l)}(s) - f^{(l)}(t)| \le c_0|s - t|^{c^*}$ for some positive constant $c_0$ and all $s, t \in [u, v]$, where $l$ is a nonnegative integer and $c^* \in [0, 1]$ satisfies $d = l + c^* > 1/2$.

Condition (A.2). $\min_{j \in \mathcal{M}_\tau} E\big(Q_\tau(y \mid x_j) - Q_\tau(y)\big)^2 \ge c_1 n^{-\tau}$ for some $0 \le \tau < \frac{2d}{2d+1}$ and some positive constant $c_1$.

Condition (A.3). The conditional density $f_{y|x_j}(\cdot)$ is bounded away from 0 and $\infty$ on $[Q_\tau(y \mid x_j) - \xi, Q_\tau(y \mid x_j) + \xi]$ for some $\xi > 0$, uniformly in $x_j$.

Condition (A.4). The marginal density functions $g_j$ of $x_j$, $1 \le j \le p$, are uniformly bounded away from 0 and $\infty$.

Condition (A.5). The number of basis functions $N$ satisfies $N^{-d}n^{\tau} = o(1)$ and $Nn^{2\tau-1} = o(1)$ as $n \to \infty$.

See He et al. (2013) for an explanation of these assumptions. In addition, we need the following assumptions.

Condition (B.1).

$$
\min_{j\in T}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)\big| - \max_{j\in T^c}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)\big| \ge c > 0,
$$

where $c$ is a constant, and $T = \{1,\ldots,s\}$ and $T^c = \{s+1,\ldots,p\}$ are the signal and non-signal index sets, respectively.

Condition (B.2). (i) $\log p = o(n^{1-4\tau})$. (ii) $0 < \tau < 1/4$. (iii) $N = o(n^{\tau})$.

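Condition (B.3). (i) $E\big[\max_{1\le j\le p}\|\hat\beta_j - \beta_{0j}\|^4\big] < \infty$.

(ii) $E\Big[\max_{1\le j\le p}\lambda_{\max}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big)\Big] < \infty$.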

Assumption (B.1) facilitates the construction of the approximate true structures of $T$ and $T^c$ based on marginal quantile utilities. It is similar to the assumption that underlies Lemma 3.2 of Ando & Li (2014). Assumption (B.2) imposes constraints on the sample size, the dimension of the models and the number of B-spline basis functions. Part (i) of Assumption (B.2) requires $\log p$ to grow more slowly than $n^{1-4\tau}$. Part (ii) of Assumption (B.2) can be found in Theorem 3.3 of He et al. (2013), which allows the handling of ultra-high dimensionality; that is, $p$ is allowed to grow exponentially with $n^{1-4\tau}$. Assumption (B.3) places restrictions on $\hat\beta_j$ and the B-spline basis.

We also require the following moment and regularity conditions:

Condition (C.1). (i) $(y_i, x_i)$, $i = 1, \ldots, n$, are IID such that (1.2) holds.

(ii) $P(\varepsilon_i(\tau) \le 0 \mid x_i) = \tau$ a.s.

(iii) $E(\mu_i^4) < \infty$ and $\sup_{j\ge1}E(x_{ij}^8) \le c_x$ for some $c_x < \infty$.


Condition (C.2). (i) $f_{y|x}(\cdot \mid x_i)$ is bounded above by a finite constant $c_f$ and continuous over its support a.s.

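(ii) There exist constants $\underline{c}_{A(m)}$ and $\bar c_{A(m)}$, which may depend on $k_m$, such that $0 < \underline{c}_{A(m)} \le \lambda_{\min}(A_{(m)}) \le \lambda_{\max}(A_{(m)}) \le c_f\lambda_{\max}(E[x_{i(m)}x_{i(m)}^T]) \le \bar c_{A(m)} < \infty$.

(iii) There exist constants $\underline{c}_{B(m)}$ and $\bar c_{B(m)}$, which may depend on $k_m$, such that $0 < \underline{c}_{B(m)} \le \lambda_{\min}(B_{(m)}) \le \lambda_{\max}(B_{(m)}) \le \bar c_{B(m)} < \infty$.

(iv) $(\bar c_{A(m)} + \bar c_{B(m)})/k_m = O(\underline{c}_{A(m)}^2)$.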

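Condition (C.3). Let $\underline{c}_A = \min_{1\le m\le M}\underline{c}_{A(m)}$, $\underline{c}_B = \min_{1\le m\le M}\underline{c}_{B(m)}$, $\bar c_A = \max_{1\le m\le M}\bar c_{A(m)}$ and $\bar c_B = \max_{1\le m\le M}\bar c_{B(m)}$.

(i) As $n \to \infty$, $M^2k^2\log n/(n^{0.5}\underline{c}_B) \to 0$ and $k^4(\log n)^4/(n\underline{c}_B)^2 \to 0$.

(ii) $nMn^{-0.5}L^2k\bar c_A^3/(\underline{c}_A\underline{c}_B) = o(1)$ for a sufficiently large constant $L$.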

Assumptions (C.1)-(C.3) are regularity conditions on quantile regression taken from Lu & Su (2015), except for the assumption $M^2k^2\log n/(n^{0.5}\underline{c}_B) \to 0$ as $n \to \infty$, which is similar to condition (6) in Ando & Li (2014) and can be reduced to $Mk\log n/n^{0.25} \to 0$ as $n \to \infty$. It allows $M$ to go to infinity.

A main difficulty in proving the theorems is that $\hat\beta_j$, being the minimizer of a non-smooth objective function, has no closed-form expression. We make use of the following lemmas to overcome this difficulty.

Following He et al. (2013), we define, for a given $(y_0, x_0)$, where $x_0 = (x_{01}, \ldots, x_{0p})$,

$$
\beta_{0j} = \arg\min_{\beta \in \mathbb{R}^N} E\big[\rho_\tau\big(y_0 - \boldsymbol\pi(x_{0j})^T\beta\big) - \rho_\tau(y_0)\big].
$$

Lemma A.1. This lemma is the same as Lemma 3.2 of He et al. (2013). Let Assumptions (A.1)-(A.5) in Appendix 2.1 be satisfied. For any $C > 0$, there exist positive constants $c_2$ and $c_3$ such that

$$
P\Big(\max_{1\le j\le p}\|\hat\beta_j - \beta_{0j}\| \ge CN^{1/2}n^{-\tau}\Big) \le 2p\exp(-c_2n^{1-4\tau}) + p\exp(-c_3N^{-2}n^{1-2\tau}).
$$

Proof See the proof of Lemma 3.2 in He et al. (2013).

Lemma A.2. This lemma is taken from Section 2.3.1 of Serfling (1980). Let $0 < \tau < 1$. If $\xi_0(\tau)$ is the unique solution $y$ of $F(y-) \le \tau \le F(y)$, then

$$
\hat\xi(\tau) \to \xi_0(\tau) \quad \text{with probability 1.}
$$

Proof See the proof of the theorem in Section 2.3.1 of Serfling (1980).

Remark A.1. The above result asserts that $\hat\xi(\tau)$ is a strongly consistent estimator of $\xi_0(\tau)$ under mild restrictions on $F$ in the neighborhood of $\xi_0(\tau)$.

Lemma A.3. This lemma is taken from Lu & Su (2015). Suppose Assumptions (C.1)-(C.3) hold. Let $C_{(m)}$ denote an $l_m \times k_m$ matrix such that $C_0 = \lim_{n\to\infty}C_{(m)}C_{(m)}^T$ exists and is positive definite, where $l_m \in [1, k_m]$ is a fixed integer. Then


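(i) $\|\hat\Theta_{(m)} - \Theta_{(m)}^*\| = O_p\big(\sqrt{k_m/n}\big)$;

(ii) $\sqrt{n}\,C_{(m)}V_{(m)}^{-1/2}\big[\hat\Theta_{(m)} - \Theta_{(m)}^*\big] \xrightarrow{d} N(0, C_0)$.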

Proof See the proof of Theorem 3.1 in Lu & Su (2015).

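Lemma A.4. This lemma is taken from Lu & Su (2015). Suppose that Assumptions (C.1)-(C.3) hold. Then

(i) $\max_{1\le i\le n}\max_{1\le m\le M}\|\hat\Theta_{i(m)} - \Theta_{(m)}^*\| = O_p\big(\sqrt{n^{-1}k\log n}\big)$;

(ii) $\max_{1\le m\le M}\|\hat\Theta_{(m)} - \Theta_{(m)}^*\| = O_p\big(\sqrt{n^{-1}k\log n}\big)$.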

Proof See the proof of Theorem 3.2 in Lu & Su (2015).

2.2 Proof of Theorem 1.1

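By Lemma A.1 and Assumption (B.2), for any $\varepsilon > 0$, we have

$$
N^{-2}n^{4\tau}P\Big(\max_{1\le j\le p}\|\hat\beta_j - \beta_{0j}\|N^{-1/2}n^{\tau} \ge \varepsilon\Big) \le N^{-2}n^{4\tau}p\big(2\exp(-c_2n^{1-4\tau}) + \exp(-c_3N^{-2}n^{1-2\tau})\big) \to 0 \quad \text{as } n \to \infty. \tag{A.1}
$$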

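Also, by the Cauchy-Schwarz inequality,

$$
\begin{aligned}
E\Big[\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\Big)^2\Big]
&= E\Big[\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\Big)^2 1\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\ge\varepsilon\Big)\Big]\\
&\quad + E\Big[\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\Big)^2 1\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}<\varepsilon\Big)\Big]\\
&\le \Big[E\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|\Big)^4\Big]^{1/2}\Big[N^{-2}n^{4\tau}P\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\ge\varepsilon\Big)\Big]^{1/2}\\
&\quad + \varepsilon^2P\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}<\varepsilon\Big).
\end{aligned}
$$

By Assumption (B.3)(i) and (A.1), the first term on the right-hand side tends to zero, while the second term is at most $\varepsilon^2$. Since $\varepsilon > 0$ is arbitrary, it follows that

$$
E\Big[\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|N^{-1/2}n^{\tau}\Big)^2\Big] \to 0 \quad \text{as } n \to \infty. \tag{A.2}
$$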


Furthermore, recognising that $\|\hat f_j(x_j)\|_{1n} = n^{-1}\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\hat\beta_j - \hat\xi(\tau)\big|$ and writing, for brevity,

$$
\Delta_j = \frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T(\hat\beta_j-\beta_{0j})\big|, \qquad \Gamma_j = \frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)\big|,
$$

we have

$$
\begin{aligned}
P\Big(\max_{j\in T^c}\|\hat f_j(x_j)\|_{1n}\ge\min_{j\in T}\|\hat f_j(x_j)\|_{1n}\Big)
&= P\Big(\max_{j\in T^c}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\hat\beta_j-\hat\xi(\tau)\big| \ge \min_{j\in T}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T\hat\beta_j-\hat\xi(\tau)\big|\Big)\\
&= P\Big(\max_{j\in T^c}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T(\hat\beta_j-\beta_{0j})+\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)+\xi_0(\tau)-\hat\xi(\tau)\big|\\
&\qquad\quad \ge \min_{j\in T}\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T(\hat\beta_j-\beta_{0j})+\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)+\xi_0(\tau)-\hat\xi(\tau)\big|\Big)\\
&\le P\Big(\max_{j\in T^c}\Delta_j+\max_{j\in T^c}\Gamma_j+\big|\xi_0(\tau)-\hat\xi(\tau)\big| \ge -\max_{j\in T}\Delta_j+\min_{j\in T}\Gamma_j-\big|\xi_0(\tau)-\hat\xi(\tau)\big|\Big),
\end{aligned}
$$

where the last step applies the triangle inequality on the left-hand side and the reverse triangle inequality on the right-hand side. Rearranging and applying Markov's inequality, we have

$$
\begin{aligned}
P\Big(\max_{j\in T^c}\|\hat f_j(x_j)\|_{1n}\ge\min_{j\in T}\|\hat f_j(x_j)\|_{1n}\Big)
&\le P\Big(\max_{j\in T^c}\Delta_j+\max_{j\in T}\Delta_j+2\big|\xi_0(\tau)-\hat\xi(\tau)\big| \ge \min_{j\in T}\Gamma_j-\max_{j\in T^c}\Gamma_j\Big)\\
&\le \frac{E\big[\max_{j\in T^c}\Delta_j+\max_{j\in T}\Delta_j+2|\xi_0(\tau)-\hat\xi(\tau)|\big]}{\min_{j\in T}\Gamma_j-\max_{j\in T^c}\Gamma_j}\\
&\le \frac{E\big[2\max_{1\le j\le p}\Delta_j+2|\xi_0(\tau)-\hat\xi(\tau)|\big]}{\min_{j\in T}\Gamma_j-\max_{j\in T^c}\Gamma_j}.
\end{aligned}
$$

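By Lemma A.2 and Theorem 2(c) of Ferguson (1996),

$$
E\big|\xi_0(\tau)-\hat\xi(\tau)\big| = o(1) \quad \text{as } n \to \infty.
$$

Also, noting that, by the Cauchy-Schwarz inequality and the fact that $a^TBa \le \lambda_{\max}(B)a^Ta$ for any symmetric matrix $B$,

$$
\frac1n\sum_{i=1}^n\big|\boldsymbol\pi(x_{ij})^T(\hat\beta_j-\beta_{0j})\big| \le \Big[\frac1n\sum_{i=1}^n\big\{\boldsymbol\pi(x_{ij})^T(\hat\beta_j-\beta_{0j})\big\}^2\Big]^{1/2} \le \|\hat\beta_j-\beta_{0j}\|\,\lambda_{\max}^{1/2}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big),
$$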


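then we obtain

$$
\begin{aligned}
P\Big(\max_{j\in T^c}\|\hat f_j(x_j)\|_{1n}\ge\min_{j\in T}\|\hat f_j(x_j)\|_{1n}\Big)
&\le \frac{E\Big[2\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|\cdot\max_{1\le j\le p}\lambda_{\max}^{1/2}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big)+2\big|\xi_0(\tau)-\hat\xi(\tau)\big|\Big]}{\min_{j\in T}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|-\max_{j\in T^c}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|}\\
&= \frac{E\Big[2\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|\cdot\max_{1\le j\le p}\lambda_{\max}^{1/2}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big)\Big]+o(1)}{\min_{j\in T}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|-\max_{j\in T^c}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|}\\
&\le \frac{2\Big[E\Big(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|\Big)^2\Big]^{1/2}\Big[E\Big(\max_{1\le j\le p}\lambda_{\max}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big)\Big)\Big]^{1/2}+o(1)}{\min_{j\in T}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|-\max_{j\in T^c}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|},
\end{aligned}
$$

where the last step uses the Cauchy-Schwarz inequality.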

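Finally, by (A.2), $\big[E(\max_{1\le j\le p}\|\hat\beta_j-\beta_{0j}\|)^2\big]^{1/2} = o(N^{1/2}n^{-\tau})$, and $N^{1/2}n^{-\tau}\to 0$ by Assumption (B.2)(iii). Combining this with Assumption (B.3)(ii), which keeps the second expectation bounded, and Assumption (B.1), which bounds the denominator below by $c > 0$, we have

$$
P\Big(\max_{j\in T^c}\|\hat f_j(x_j)\|_{1n}\ge\min_{j\in T}\|\hat f_j(x_j)\|_{1n}\Big) \le \frac{o(N^{1/2}n^{-\tau})\cdot\Big[E\Big(\max_{1\le j\le p}\lambda_{\max}\Big(\frac1n\sum_{i=1}^n\boldsymbol\pi(x_{ij})\boldsymbol\pi(x_{ij})^T\Big)\Big)\Big]^{1/2}+o(1)}{\min_{j\in T}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|-\max_{j\in T^c}\frac1n\sum_{i=1}^n|\boldsymbol\pi(x_{ij})^T\beta_{0j}-\xi_0(\tau)|} \to 0 \quad \text{as } n\to\infty.
$$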

This completes the proof of Theorem 1.1.

2.3 Proof of Theorem 1.2

Similar to the proof of Theorem 3.3 in Lu & Su (2015), we have to show that

$$
\sup_{w\in\mathcal W}\Big|\frac{CV_n(w)-FPE_n(w)}{FPE_n(w)}\Big| = o_p(1). \tag{A.3}
$$

Based on the proof of Theorem 3.3 in Lu & Su (2015), we have

$$
CV_n(w)-FPE_n(w) = CV_{1n}(w)+CV_{2n}(w)+CV_{3n}(w)+CV_{4n}(w)+CV_{5n},
$$


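where

$$
\begin{aligned}
CV_{1n}(w) &= \frac1n\sum_{i=1}^n\Big[\mu_i-\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}\Big]\psi_\tau(\varepsilon_i),\\
CV_{2n}(w) &= \frac1n\sum_{i=1}^n\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[1\{\varepsilon_i\le s\}-1\{\varepsilon_i\le 0\}-F(s\mid x_i)+F(0\mid x_i)\big]\,ds,\\
CV_{3n}(w) &= \frac1n\sum_{i=1}^n\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds - E_{x_i}\Big[\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds\Big],\\
CV_{4n}(w) &= \frac1n\sum_{i=1}^n E_{x_i}\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds - E_{x_i}\Big[\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds\Big],
\end{aligned}
$$

and

$$
CV_{5n} = \frac1n\sum_{i=1}^n\rho_\tau(\varepsilon_i)-E[\rho_\tau(\varepsilon_i)].
$$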
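The decomposition above appears to rest on Knight's identity for the check loss, $\rho_\tau(u - v) - \rho_\tau(u) = -v\psi_\tau(u) + \int_0^v[1\{u \le s\} - 1\{u \le 0\}]\,ds$, applied with $u = \varepsilon_i$ and $v = \sum_m w_m x_{i(m)}^T\hat\Theta_{i(m)} - \mu_i$; this is our reading, since the text defers the details to Lu & Su (2015). The sketch below checks the identity numerically, approximating the integral by the midpoint rule. Points with $u = 0$ are avoided because conventions for $1\{u \le 0\}$ versus $1\{u < 0\}$ differ there.

```python
def check_loss(r, tau):
    """rho_tau(r) = r * (tau - 1{r < 0})."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def psi(u, tau):
    """psi_tau(u) = tau - 1{u <= 0}, as defined after (1.14)."""
    return tau - (1.0 if u <= 0 else 0.0)


def knight_rhs(u, v, tau, steps=100_000):
    """-v * psi_tau(u) + int_0^v [1{u <= s} - 1{u <= 0}] ds (midpoint rule)."""
    h = v / steps  # negative when v < 0, which gives int_0^v = -int_v^0
    integral = 0.0
    for j in range(steps):
        s = (j + 0.5) * h
        integral += ((1.0 if u <= s else 0.0) - (1.0 if u <= 0 else 0.0)) * h
    return -v * psi(u, tau) + integral


if __name__ == "__main__":
    tau = 0.3
    for u, v in [(1.0, 2.0), (-1.0, -3.0), (1.0, -2.0), (-1.0, 2.0), (0.5, 0.3)]:
        lhs = check_loss(u - v, tau) - check_loss(u, tau)
        print(u, v, lhs, knight_rhs(u, v, tau))
```

The integrand is a step function with a single jump at $s = u$, so the midpoint rule is accurate to within one step length $|v|/\text{steps}$.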

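To prove (A.3), we need to prove

(i) $\min_{w\in\mathcal W}FPE_n(w) \ge E[\rho_\tau(\varepsilon)] - o_p(1)$;

(ii) $\sup_{w\in\mathcal W}|CV_{1n}(w)| = o_p(1)$;

(iii) $\sup_{w\in\mathcal W}|CV_{2n}(w)| = o_p(1)$;

(iv) $\sup_{w\in\mathcal W}|CV_{3n}(w)| = o_p(1)$;

(v) $\sup_{w\in\mathcal W}|CV_{4n}(w)| = o_p(1)$;

(vi) $CV_{5n} = o_p(1)$.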

The proofs of (i) and (vi) can be found in Lu & Su (2015). In the following, we prove (ii)-(v). Lu & Su (2015) show that

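$$
CV_{1n}(w) = CV_{1n,1}(w) - CV_{1n,2}(w),
$$

where

$$
CV_{1n,1}(w) = \frac1n\sum_{i=1}^n\Big[\mu_i-\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*\Big]\psi_\tau(\varepsilon_i)
$$

and

$$
CV_{1n,2}(w) = \frac1n\sum_{i=1}^n\sum_{m=1}^M w_m x_{i(m)}^T\big[\hat\Theta_{i(m)}-\Theta_{(m)}^*\big]\psi_\tau(\varepsilon_i).
$$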

To prove (ii), it suffices to show that $\sup_{w\in\mathcal W}|CV_{1n,1}(w)| = o_p(1)$ and $\sup_{w\in\mathcal W}|CV_{1n,2}(w)| = o_p(1)$. Recognising that $E[CV_{1n,1}(w)] = 0$ (since $E[\psi_\tau(\varepsilon_i)\mid x_i] = 0$ by Assumption (C.1)(ii)) and $\mathrm{Var}(CV_{1n,1}(w)) = O(k/n)$, we have $CV_{1n,1}(w) = o_p(1)$ for each $w \in \mathcal W$. When both $M$ and $k = \max_{1\le m\le M}k_m$ are finite, based on the proof of Theorem 3.3 in Lu & Su (2015), we can apply the Glivenko-Cantelli theorem (e.g., Theorem 2.4.1 in van der Vaart & Wellner (1996)) to show that $\sup_{w\in\mathcal W}|CV_{1n,1}(w)| = o_p(1)$. Following Lu & Su (2015), define the class of functions

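$$
\mathcal G = \{g(\cdot,\cdot;w) : w\in\mathcal W\},
$$

where $g(\cdot,\cdot;w) : \mathbb R\times\mathbb R^{d_x}\to\mathbb R$ is

$$
g(\varepsilon_i, x_i; w) = \Big[\mu_i-\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*\Big]\psi_\tau(\varepsilon_i).
$$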

Define the metric $|\cdot|_1$ on $\mathcal W$ by $|w-\tilde w|_1 = \sum_{m=1}^M|w_m-\tilde w_m|$ for any $w = (w_1,\ldots,w_M)\in\mathcal W$ and $\tilde w = (\tilde w_1,\ldots,\tilde w_M)\in\mathcal W$. The $\varepsilon$-covering number of $\mathcal W$ is $N(\varepsilon,\mathcal W,|\cdot|_1) = O(1/\varepsilon^M)$. Lu & Su (2015) have proved that $|g(\varepsilon_i,x_i;w)-g(\varepsilon_i,x_i;\tilde w)| \le c_\Theta|w-\tilde w|_1\max_{1\le m\le M}\|x_{i(m)}\|$, where $c_\Theta = \max_{1\le m\le M}\|\Theta_{(m)}^*\| = O(k^{1/2})$, and that $E\max_{1\le m\le M}\|x_{i(m)}\| < \infty$ when $M$ and $k$ are finite, which implies that the $\varepsilon$-bracketing number of $\mathcal G$ with respect to the $L_1(P)$-norm satisfies $N_{[\,]}(\varepsilon,\mathcal G,L_1(P)) \le C/\varepsilon^M$ for some finite $C$. Thus, applying Theorem 2.4.1 in van der Vaart & Wellner (1996), we can conclude that $\mathcal G$ is Glivenko-Cantelli.
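The covering bound $N(\varepsilon,\mathcal W,|\cdot|_1) = O(1/\varepsilon^M)$ can be made concrete with a regular grid: if each coordinate of $[0,1]^M$ is discretised with spacing $2\varepsilon/M$, every $w$ lies within $|\cdot|_1$-distance $\varepsilon$ of a grid point, and the grid has about $(M/(2\varepsilon)+1)^M$ points, which is $O(1/\varepsilon^M)$ for fixed $M$. The sketch below builds such a grid; it is our illustration, not a construction taken from Lu & Su (2015).

```python
import itertools
import math


def l1_cover(M, eps):
    """Grid points of [0, 1]^M such that every w in [0, 1]^M is within
    L1-distance eps of at least one of them."""
    delta = 2.0 * eps / M  # per-coordinate spacing: each coordinate is off by <= delta / 2
    steps = math.ceil(1.0 / delta)
    axis = [min(1.0, s * delta) for s in range(steps + 1)]
    return list(itertools.product(axis, repeat=M))


def l1_distance(a, b):
    """|a - b|_1."""
    return sum(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    grid = l1_cover(M=2, eps=0.1)
    w = (0.37, 0.91)
    print(len(grid), min(l1_distance(w, g) for g in grid))
```

Summing the per-coordinate error of at most $\delta/2$ over $M$ coordinates gives the $L_1$ radius $M\delta/2 = \varepsilon$.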

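When either $M\to\infty$ or $k\to\infty$ as $n\to\infty$, let $h_n = 1/(k\log n)$ and introduce grids using regions of the form $\mathcal W_j = \{w : |w-w_j|_1 \le h_n\}$ such that $\mathcal W$ is covered by $N = O(1/h_n^M)$ regions $\mathcal W_j$, $j = 1,\ldots,N$. Lu & Su (2015) proved that

$$
\sup_{w\in\mathcal W}|CV_{1n,1}(w)| \le \max_{1\le j\le N}|CV_{1n,1}(w_j)| + o_p(1)
$$

and, for any $\varepsilon > 0$,

$$
\begin{aligned}
P\Big(\max_{1\le j\le N}CV_{1n,1}(w_j)\ge 2\varepsilon\Big)
&\le P\Big(\max_{1\le m\le M}\frac1n\sum_{i=1}^n|b_{i(m)}|\cdot 1\{b_{i(m)}\le e_n\}\ge\varepsilon\Big) + P\Big(\max_{1\le m\le M}\frac1n\sum_{i=1}^n|b_{i(m)}|\cdot 1\{|b_{i(m)}|\ge e_n\}\ge\varepsilon\Big)\\
&= T_{n1}+T_{n2}\ \text{(say)},
\end{aligned}
$$

where $e_n = (Mnk^2)^{1/4}$ and $b_{i(m)} = \mu_i - x_{i(m)}^T\Theta_{(m)}^*$.

From Lu & Su (2015), $T_{n2} = o(1)$ and

$$
T_{n1} \le 2\exp\Big(-\frac{n\varepsilon^2}{2k\sigma^2+2\varepsilon(Mnk^2)^{1/4}/3}+\log M\Big),
$$

where $\sigma^2 < \infty$ is a constant.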


Thus, we only have to establish

$$
\exp\Big(-\frac{n\varepsilon^2}{2k\sigma^2+2\varepsilon(Mnk^2)^{1/4}/3}+\log M\Big) = o(1). \tag{A.4}
$$

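Now, using Part (i) of Assumption (C.3), we can obtain $n^3/[k^2M(\log M)^4]\to\infty$. In the following, we let $c$ and $c'$ be constants. Since, for large $n$,

$$
\begin{aligned}
&6k\sigma^2 \le 2\varepsilon(Mnk^2)^{1/4},\\
&\Big[\frac{3n\varepsilon^2}{4\varepsilon(Mnk^2)^{1/4}}\Big]^4 = \frac{3^4n^3\varepsilon^4}{4^4Mk^2} \ge \frac{cn^3}{k^2M(\log M)^4}\to\infty,\\
&\frac{6k\sigma^2\log M}{4\varepsilon(Mnk^2)^{1/4}} \le \Big[\frac{c'(kM)^{1/2}}{(Mn)^{1/4}}\Big]^2 = \frac{c'^2kM^{1/2}}{n^{1/2}} \le \frac{c'^2M^2k^2\log n}{n^{1/2}}\to 0,\\
&\log M < \log n \ll n^{1/2}/(M^2k^2) \ll n^3/(Mk^2)\to\infty,
\end{aligned}
$$

we have

$$
\begin{aligned}
\exp\Big(-\frac{n\varepsilon^2}{2k\sigma^2+2\varepsilon(Mnk^2)^{1/4}/3}+\log M\Big)
&= \exp\Big(-\frac{3n\varepsilon^2-6k\sigma^2\log M-2\varepsilon(Mnk^2)^{1/4}\log M}{6k\sigma^2+2\varepsilon(Mnk^2)^{1/4}}\Big)\\
&\le \exp\Big(-\Big[\frac{3n\varepsilon^2}{4\varepsilon(Mnk^2)^{1/4}}-\frac{6k\sigma^2\log M}{4\varepsilon(Mnk^2)^{1/4}}-\frac{2\varepsilon(Mnk^2)^{1/4}\log M}{4\varepsilon(Mnk^2)^{1/4}}\Big]\Big)\to 0.
\end{aligned} \tag{A.5}
$$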

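By Part (i) of Assumption (C.3), Lemma A.4 in Appendix 2.1, the bound $|\psi_\tau(\cdot)| \le 1$, and the triangle and Cauchy-Schwarz inequalities,

$$
\begin{aligned}
\sup_{w\in\mathcal W}|CV_{1n,2}(w)| &\le \sup_{w\in\mathcal W}\sum_{m=1}^M w_m\frac1n\sum_{i=1}^n\big|x_{i(m)}^T[\hat\Theta_{i(m)}-\Theta_{(m)}^*]\psi_\tau(\varepsilon_i)\big|\\
&\le M\max_{1\le i\le n}\max_{1\le m\le M}\|\hat\Theta_{i(m)}-\Theta_{(m)}^*\|\max_{1\le m\le M}\frac1n\sum_{i=1}^n\|x_{i(m)}\|\\
&\le O_p\big(n^{-1/2}Mk^{1/2}(\log n)^{1/2}\big)O_p(k^{1/2}) = o_p(1).
\end{aligned} \tag{A.6}
$$

Hence $\sup_{w\in\mathcal W}|CV_{1n,2}(w)| = o_p(1)$.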

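Next, we prove (iii). Lu & Su (2015) have proved that

$$
CV_{2n}(w) = CV_{2n,1}(w)+CV_{2n,2}(w),
$$

where

$$
CV_{2n,1}(w) = \frac1n\sum_{i=1}^n\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}\big[1\{\varepsilon_i\le s\}-1\{\varepsilon_i\le0\}-F(s\mid x_i)+F(0\mid x_i)\big]\,ds
$$

and

$$
CV_{2n,2}(w) = \frac1n\sum_{i=1}^n\int_{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[1\{\varepsilon_i\le s\}-1\{\varepsilon_i\le0\}-F(s\mid x_i)+F(0\mid x_i)\big]\,ds.
$$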

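We have to show that $\sup_{w\in\mathcal W}|CV_{2n,1}(w)| = o_p(1)$ and $\sup_{w\in\mathcal W}|CV_{2n,2}(w)| = o_p(1)$. Recognising that $\big|1\{\varepsilon_i\le s\}-1\{\varepsilon_i\le0\}-F(s\mid x_i)+F(0\mid x_i)\big| \le 2$, and using Lemma A.4 and Part (i) of Assumption (C.3), we have, uniformly in $w\in\mathcal W$,

$$
\begin{aligned}
|CV_{2n,2}(w)| &\le \frac2n\sum_{i=1}^n\Big|\sum_{m=1}^M w_m x_{i(m)}^T[\hat\Theta_{i(m)}-\Theta_{(m)}^*]\Big|\\
&\le 2M\max_{1\le i\le n}\max_{1\le m\le M}\|\hat\Theta_{i(m)}-\Theta_{(m)}^*\|\max_{1\le m\le M}\frac1n\sum_{i=1}^n\|x_{i(m)}\|\\
&= O_p\big(Mn^{-1/2}k^{1/2}(\log n)^{1/2}\big)O_p(k^{1/2}) = o_p(1).
\end{aligned} \tag{A.7}
$$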

The proof of $\sup_{w\in\mathcal W}|CV_{2n,1}(w)| = o_p(1)$ is analogous to that of $\sup_{w\in\mathcal W}|CV_{1n,1}(w)| = o_p(1)$ and is thus omitted.

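Now let us establish (iv). Lu & Su (2015) have proved that

$$
CV_{3n}(w) = CV_{3n,1}(w)+CV_{3n,2}(w) \quad\text{and}\quad |CV_{3n,2}(w)| \le CV_{3n,21}(w)+CV_{3n,22}(w),
$$

where

$$
\begin{aligned}
CV_{3n,1}(w) &= \frac1n\sum_{i=1}^n\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds - E\Big[\int_0^{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds\Big],\\
CV_{3n,2}(w) &= \frac1n\sum_{i=1}^n\int_{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds - E\Big[\int_{\sum_{m=1}^M w_m x_{i(m)}^T\Theta_{(m)}^*-\mu_i}^{\sum_{m=1}^M w_m x_{i(m)}^T\hat\Theta_{i(m)}-\mu_i}\big[F(s\mid x_i)-F(0\mid x_i)\big]\,ds\Big],\\
CV_{3n,21}(w) &= \frac1n\sum_{i=1}^n\Big|\sum_{m=1}^M w_m x_{i(m)}^T\big[\hat\Theta_{i(m)}-\Theta_{(m)}^*\big]\Big|,\\
CV_{3n,22}(w) &= \frac1n\sum_{i=1}^n E_{x_i}\Big|\sum_{m=1}^M w_m x_{i(m)}^T\big[\hat\Theta_{i(m)}-\Theta_{(m)}^*\big]\Big|.
\end{aligned}
$$

We have to show that

$$
\sup_{w\in\mathcal W}|CV_{3n,1}(w)| = o_p(1),\qquad \sup_{w\in\mathcal W}|CV_{3n,21}(w)| = o_p(1)\qquad\text{and}\qquad \sup_{w\in\mathcal W}|CV_{3n,22}(w)| = o_p(1).
$$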

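We can prove that $\sup_{w\in\mathcal W}|CV_{3n,1}(w)| = o_p(1)$ analogously to the proof of $\sup_{w\in\mathcal W}|CV_{1n,1}(w)| = o_p(1)$. Also, from (A.7), $\sup_{w\in\mathcal W}|CV_{3n,21}(w)| = o_p(1)$. By the triangle and Cauchy-Schwarz inequalities, the fact that $a^TBa \le \lambda_{\max}(B)a^Ta$ for any symmetric matrix $B$, Lemma A.4 and Assumption (C.3)(i), we have

$$
\begin{aligned}
\sup_{w\in\mathcal W}CV_{3n,22}(w) &\le \sup_{w\in\mathcal W}\frac1n\sum_{i=1}^n\sum_{m=1}^M w_mE_{x_i}\big|x_{i(m)}^T[\hat\Theta_{i(m)}-\Theta_{(m)}^*]\big|\\
&\le \sup_{w\in\mathcal W}\frac1n\sum_{i=1}^n\sum_{m=1}^M w_m\Big\{[\hat\Theta_{i(m)}-\Theta_{(m)}^*]^TE[x_{i(m)}x_{i(m)}^T][\hat\Theta_{i(m)}-\Theta_{(m)}^*]\Big\}^{1/2}\\
&\le M\max_{1\le m\le M}\big[\lambda_{\max}(E[x_{i(m)}x_{i(m)}^T])\big]^{1/2}\max_{1\le i\le n}\max_{1\le m\le M}\|\hat\Theta_{i(m)}-\Theta_{(m)}^*\|\\
&= o_p(1).
\end{aligned} \tag{A.8}
$$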

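To prove (v), noting that $|F(s\mid x_i)-F(0\mid x_i)| \le 1$, and using (A.8) together with Lemma A.4(ii) and the triangle inequality $\|\hat\Theta_{i(m)}-\hat\Theta_{(m)}\| \le \|\hat\Theta_{i(m)}-\Theta_{(m)}^*\|+\|\hat\Theta_{(m)}-\Theta_{(m)}^*\|$, we can show that

$$
\sup_{w\in\mathcal W}|CV_{4n}(w)| \le \sup_{w\in\mathcal W}\frac1n\sum_{i=1}^n E_{x_i}\Big|\sum_{m=1}^M w_m x_{i(m)}^T\big[\hat\Theta_{i(m)}-\hat\Theta_{(m)}\big]\Big| = o_p(1).
$$

This completes the proof.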

3 Part 2: Statistical inference after model averaging

To be completed.

4 Part 3: Frequentist model averaging under inequality constraints

4.1 Model framework and notations

This subproject extends the application of frequentist model averaging to models that contain inequality constraints on parameters. Let $y_i$ be generated by the following data generating process:

$$
y_i = \mu_i + \varepsilon_i = x_{i0}^T\theta_0 + \varepsilon_i, \quad i = 1, 2, \ldots, n,
$$

where $\mu_i = x_{i0}^T\theta_0$ is the conditional expectation of $y_i$, $x_{i0} = (x_{i1}, x_{i2}, \ldots, x_{i\infty})^T$ is an infinite-dimensional vector of regressors, $\theta_0 = (\theta_1, \theta_2, \ldots, \theta_\infty)^T$ is the vector of unknown coefficients, and the $\varepsilon_i$'s are independently distributed errors with $E(\varepsilon_i) = 0$ and $E(\varepsilon_i^2) = \sigma_i^2$ for all $i$. In practice, it is likely that some regressors in $x_{i0}$ will be omitted, resulting in the working model

$$
y_i = x_i^T\theta + e_i, \quad i = 1, 2, \ldots, n, \tag{4.1}
$$

where $x_i = (x_{i1}, x_{i2}, \ldots, x_{ip})^T$, $\theta = (\theta_1, \theta_2, \ldots, \theta_p)^T$ and $p$ is fixed. The misspecification error associated with (4.1) is thus $\mu_i - x_i^T\theta$. We can write (4.1) as

$$
Y = X\theta + e, \tag{4.2}
$$

where $Y = (y_1, \ldots, y_n)^T$, $X = (x_1, \ldots, x_n)^T$ and $e = (e_1, \ldots, e_n)^T$. In addition, we let $\mu = (\mu_1, \ldots, \mu_n)^T$ and $\Lambda = \mathrm{diag}(\sigma_1^2, \sigma_2^2, \ldots, \sigma_n^2)$.

Suppose that the investigator's interests center around $M$ distinct sets of constraints, all embedded within

$$
R\theta \ge 0, \tag{4.3}
$$

which can accommodate virtually all linear equality and inequality constraints encountered in practice, where $\theta$ is a parameter vector and $R$ is a known matrix. Now, let $M$ be fixed and $R_m$ be an $r_m \times p$ known matrix. Write $R = R_m$ for the $m$th set of constraints on $\theta$, $m = 1, 2, \ldots, M$. Thus, there are $M$ candidate models, each subject to a distinct, competing set of constraints on $\theta$ given by $R_m\theta \ge 0$, $m = 1, 2, \ldots, M$. We refer to the model subject to the $m$th set of inequality constraints $R_m\theta \ge 0$ as the $m$th candidate model.

Let $\hat\theta_{(m)}$ be the least squares (LS) estimator of $\theta$ subject to $R_m\theta \ge 0$. This estimator is obtained by quadratic programming, and many software routines, such as solve.QP in the R package quadprog, can be used to solve this problem. Write $\hat\mu_{(m)} = X\hat\theta_{(m)}$. The model average estimator of $\mu$ is thus

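$$
\hat\mu(w) = \sum_{m=1}^M w_m\hat\mu_{(m)} = \sum_{m=1}^M w_mX\hat\theta_{(m)}, \tag{4.4}
$$

where $w = (w_1, w_2, \ldots, w_M)^T$ is a weight vector in the following unit simplex of $\mathbb R^M$:

$$
\mathcal H = \Big\{w\in[0,1]^M : \sum_{m=1}^M w_m = 1\Big\}.
$$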
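The text computes $\hat\theta_{(m)}$ by quadratic programming (e.g. solve.QP in the R package quadprog). As a self-contained stand-in, and only for the special case $R_m = I$ (the constraint $\theta \ge 0$), the Python sketch below minimises $\frac12\|Y - X\theta\|^2$ by projected gradient descent: each gradient step is followed by clipping negative coordinates to zero, which is the exact projection onto $\{\theta \ge 0\}$. A general $R_m$ would require a proper QP solver; this is not the authors' implementation.

```python
def nonneg_least_squares(X, y, iters=2000):
    """Least squares subject to theta >= 0 (the case R_m = I) via projected gradient.

    X is a list of n rows, each a list of p floats; y is a list of n floats.
    """
    n, p = len(X), len(X[0])
    # Step 1/L with L = trace(X^T X) >= lambda_max(X^T X) guarantees descent.
    L = sum(x * x for row in X for x in row)
    theta = [0.0] * p
    for _ in range(iters):
        resid = [sum(X[i][j] * theta[j] for j in range(p)) - y[i] for i in range(n)]
        grad = [sum(X[i][j] * resid[i] for i in range(n)) for j in range(p)]
        # gradient step, then projection onto the nonnegative orthant
        theta = [max(0.0, theta[j] - grad[j] / L) for j in range(p)]
    return theta


if __name__ == "__main__":
    X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    y = [1.0, -2.0, 0.0]
    print(nonneg_least_squares(X, y))  # unconstrained LS would give a negative theta_2
```

In the example, unconstrained LS gives $(4/3, -5/3)$, whereas the constrained solution is $(1/2, 0)$: the second constraint binds and the first coefficient is re-fitted accordingly.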

The model average estimator $\hat\mu(w)$ smooths across the estimators $\hat\mu_{(m)}$, $m = 1, \ldots, M$, each of which corresponds to a distinct set of inequality constraints on $\theta$. This is unlike Kuiper et al.'s (2011) GORIC-based selection approach, which uses only one of the $\hat\mu_{(m)}$'s. A description of the latter approach is in order. The GORIC-based model selection estimator chooses the model with the smallest

$$
\mathrm{GORIC}_m = -2\log f\big(Y\mid X\bar\theta_{(m)}\big) + 2\,PT_m,
$$

where $\bar\theta_{(m)}$ is the order-restricted maximum likelihood estimator of $\theta$, $PT_m = 1 + \sum_{l=1}^p w_l(p, W, C_m)\,l$ is the infimum of a bias term, $p$ is the dimension of $\theta$, $W = X^T\Lambda^{-1}X$, $C_m = \{\theta\in\mathbb R^p \mid R_m\theta\ge 0\}$ is the set of $\theta$ that satisfies the $m$th set of inequality constraints, and $w_l(p, W, C_m)$ is the level probability for the hypothesis $\bar\theta_{(m)}\in C_m$ when $\theta\in\{y = (y_1, \ldots, y_k) \mid y_1 = \cdots = y_k\}$. While Kuiper et al. (2011) considered a $t$-variate normal linear model with a finite-dimensional vector of regressors, we consider a univariate linear model with an infinite-dimensional vector of regressors without assuming normality of the errors.


The biggest challenge confronting the model averaging approach is choosing the model weights. Here, we consider a J-fold cross-validation approach, which extends the jackknife model averaging (JMA) approach proposed by Hansen & Racine (2012). The next subsection introduces the method and develops an asymptotic theory for the resultant J-fold cross-validation model average (JCVMA) estimator.

4.2 A cross-validation weight choice method

The idea of cross-validation is to minimise the squared prediction error of the final estimator by comparing the out-of-sample fit of parameter estimates obtained from training data sets against holdout samples. Cross-validation is a technique routinely applied when over-fitting may be an issue. To implement the method, we divide the data into $J$ groups such that each group contains $n_0 = n/J$ observations. Let $\tilde\theta_{(m)}^{(-j)}$ be the estimator of $\theta$ under $R_m\theta \ge 0$ with the $j$th group removed from the sample. Write $\tilde\mu_{(m)}^{(-j)} = X_{(j)}\tilde\theta_{(m)}^{(-j)}$, where $X_{(j)}$ is an $n_0 \times p$ matrix containing the observations in rows $1+(j-1)n_0, \ldots, jn_0$ of $X$. That is, we delete the $j$th group of observations from the sample and use the remaining $n - n_0$ observations to estimate the coefficients $\theta$ subject to $R_m\theta \ge 0$. Then, based on the estimated coefficients, we predict the $n_0$ observations that are excluded. We repeat this process $J$ times until each observation in the sample has been held out once. This leads to the vector of estimators

µ(m) =

µ

(−1)(m)

µ(−2)(m)...

µ(−J)(m)

=

X(1)θ

(−1)(m)

X(2)θ(−2)(m)

...X(J)θ

(−J)(m)

=

X(1)

X(2)

. . .X(J)

θ

(−1)(m)

θ(−2)(m)...

θ(−J)(m)

=Aθ(m),

whereA is a block diagonal matrix containing observations ofX and θ(m) = (θ(−1)T(m) , ..., θ

(−J)T(m) )T

is a Jp×1 vector. Substituting θ for θ and µ for µ in the model average results in the followingjackknife model average estimator of µ:

$$\tilde\mu(w) = \sum_{m=1}^{M} w_m\tilde\mu_{(m)} = \sum_{m=1}^{M} w_mA\tilde\theta_{(m)}.$$
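The following minimal sketch makes the stacking $\tilde\mu_{(m)} = A\tilde\theta_{(m)}$ concrete. It makes three simplifying assumptions: there is a single regressor ($p = 1$), $J$ divides $n$, and ordinary least squares stands in for the constrained estimator. With one coefficient, the constraint $R_m\theta \ge 0$ reduces to $\theta \ge 0$, and the constrained least-squares solution is $\max(0, \hat\theta)$. The helper names are hypothetical. A general $R_m$ would require a quadratic-programming solver.

```python
def fold_blocks(n, J):
    """0-based row indices of the J consecutive groups (assumes J divides n)."""
    if n % J != 0:
        raise ValueError("n must be a multiple of J")
    n0 = n // J
    return [list(range(j * n0, (j + 1) * n0)) for j in range(J)]


def ls_slope(x, y, nonneg=False):
    """Least-squares slope of y on x (no intercept), optionally under theta >= 0."""
    sxx = sum(xi * xi for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    theta = sxy / sxx
    # With one coefficient the constrained LS solution is the projection max(0, theta).
    return max(0.0, theta) if nonneg else theta


def jfold_predictions(x, y, J, nonneg=False):
    """Stack X^(j) theta^(-j) over the J held-out groups, i.e. the vector A theta_tilde."""
    n = len(y)
    pred = [0.0] * n
    for block in fold_blocks(n, J):
        held = set(block)
        x_tr = [x[i] for i in range(n) if i not in held]
        y_tr = [y[i] for i in range(n) if i not in held]
        theta = ls_slope(x_tr, y_tr, nonneg)  # fitted without group j
        for i in block:
            pred[i] = x[i] * theta  # predict only the held-out rows
    return pred
```

Calling `jfold_predictions(x, y, J)` and `jfold_predictions(x, y, J, nonneg=True)` yields the held-out prediction vectors of an unconstrained and a sign-constrained candidate model, respectively.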

Now, consider the following measure based on squared cross-validation errors:

$$CV_J(w) = \|\tilde\mu(w) - Y\|^2,$$

where $\|a\|^2 = a^Ta$. The weight vector chosen by cross-validation is

$$\hat w = \arg\min_{w\in\mathcal{H}} CV_J(w). \qquad (4.5)$$

Substituting $\hat w$ for $w$ in (4.4) results in $\hat\mu(\hat w)$, the JCVMA estimator of $\mu$.
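With $M = 2$ candidate models, $\mathcal{H}$ is the segment $\{(w_1, 1 - w_1) : 0 \le w_1 \le 1\}$. On this segment $CV_J(w)$ is a convex quadratic in $w_1$, so the minimiser in (4.5) has a closed form: the unconstrained minimiser clipped to $[0, 1]$. The sketch below assumes that the two stacked held-out prediction vectors $\tilde\mu_{(1)}$ and $\tilde\mu_{(2)}$ are already available. The function names are hypothetical. For $M > 2$, a quadratic-programming solver over the simplex would be needed instead.

```python
def jcvma_weights_two_models(pred1, pred2, y):
    """Minimise CV_J(w) = ||w1*pred1 + (1 - w1)*pred2 - y||^2 over 0 <= w1 <= 1.

    pred1, pred2: stacked held-out predictions of the two candidate models.
    Returns (w1, w2) with w1 + w2 = 1.
    """
    d = [a - b for a, b in zip(pred1, pred2)]  # w1*pred1 + (1-w1)*pred2 - y = w1*d - r
    r = [yi - b for yi, b in zip(y, pred2)]
    dd = sum(di * di for di in d)
    if dd == 0.0:
        return 0.5, 0.5  # identical predictions: CV_J is flat in w1, any weight is optimal
    w1 = sum(di * ri for di, ri in zip(d, r)) / dd  # unconstrained minimiser <d, r> / ||d||^2
    w1 = min(1.0, max(0.0, w1))  # convexity: clipping gives the constrained minimiser
    return w1, 1.0 - w1


def cv_criterion(w1, pred1, pred2, y):
    """CV_J evaluated at the weight vector (w1, 1 - w1)."""
    return sum((w1 * a + (1 - w1) * b - yi) ** 2 for a, b, yi in zip(pred1, pred2, y))
```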

The efficiency of the JCVMA estimator $\hat\mu(\hat w)$ is evaluated in terms of the following squared error loss function:

$$L(w) = \|\hat\mu(w) - \mu\|^2.$$

To prove the asymptotic optimality of $\hat\mu(\hat w)$, we need the following conditions:


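Condition (C.1). For each $m$, there exists a limit $\theta^*_{(m)}$ such that

$$\hat\theta_{(m)} - \theta^*_{(m)} = O_p(n^{-1/2}).$$

Condition (C.2). $\xi_n^{-1}n^{1/2} = o(1)$ and $\xi_n^{-2}\bar\sigma^2\sum_{m=1}^{M}\|\mu^*_{(m)} - \mu\|^2 = o(1)$, where $\mu^*_{(m)} = X\theta^*_{(m)}$, $\xi_n = \inf_{w\in\mathcal{H}} L^*(w)$, $\mu^*(w) = \sum_{m=1}^{M} w_m\mu^*_{(m)}$, $L^*(w) = \|\mu^*(w) - \mu\|^2$, and $\bar\sigma^2 = \max_{1\le i\le n}\sigma_i^2$.

Condition (C.3). $\max_{1\le j\le J}\lambda\bigl(n_0^{-1}X^{(j)T}X^{(j)}\bigr) = O_p(1)$, where $\lambda(\cdot)$ is the maximum singular value of a matrix.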

Remark 4.1. Condition (C.1) restricts the rate at which $\hat\theta_{(m)}$ converges to its limit $\theta^*_{(m)}$. This condition is derived from results by White (1982), who proved the consistency and asymptotic normality of maximum likelihood estimators in misspecified models with the parameters restricted to a compact subset. The first part of Condition (C.2) implies that $\xi_n \to \infty$, which in turn implies that all candidate models are misspecified. It also requires $\xi_n$ to diverge faster than $n^{1/2}$. The second part of Condition (C.2) means that $\bar\sigma^2\sum_{m=1}^{M}\|\mu^*_{(m)} - \mu\|^2$ diverges more slowly than $\xi_n^2$. This condition is commonly used in other model averaging studies; see Wan et al. (2010), Liu & Okui (2013) and Ando & Li (2014). Condition (C.3) bounds the maximum singular value of $n_0^{-1}X^{(j)T}X^{(j)}$ for each block $X^{(j)}$ of $X$.

The following theorem gives the asymptotic optimality of the JCVMA estimator:

Theorem 4.1. Suppose Conditions (C.1)-(C.3) hold. Then

$$\frac{L(\hat w)}{\inf_{w\in\mathcal{H}} L(w)} \xrightarrow{p} 1. \qquad (4.6)$$

Theorem 4.1 shows that model averaging with $\hat w$ as the weight vector is asymptotically optimal, in the sense that the resulting squared error loss is asymptotically identical to that of the infeasible best possible model average estimator. The next subsection gives the proof of Theorem 4.1.

4.3 Proof of Theorem 4.1

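Note that

$$\begin{aligned}
CV_J(w) &= \|\tilde\mu(w) - Y\|^2 \\
&= \|\hat\mu(w) - \mu + \tilde\mu(w) - \mu^*(w) - (\hat\mu(w) - \mu^*(w)) + \mu - Y\|^2 \\
&\le \|\hat\mu(w) - \mu\|^2 + \|\tilde\mu(w) - \mu^*(w)\|^2 + \|\hat\mu(w) - \mu^*(w)\|^2 + \|\mu - Y\|^2 \\
&\quad + 2\|\hat\mu(w) - \mu\|\|\tilde\mu(w) - \mu^*(w)\| + 2\|\hat\mu(w) - \mu\|\|\hat\mu(w) - \mu^*(w)\| + 2|(\hat\mu(w) - \mu)^T\varepsilon| \\
&\quad + 2\|\tilde\mu(w) - \mu^*(w)\|\|\hat\mu(w) - \mu^*(w)\| + 2\|\tilde\mu(w) - \mu^*(w)\|\|\varepsilon\| + 2\|\hat\mu(w) - \mu^*(w)\|\|\varepsilon\| \\
&\le \|\hat\mu(w) - \mu\|^2 + \|\tilde\mu(w) - \mu^*(w)\|^2 + \|\hat\mu(w) - \mu^*(w)\|^2 \\
&\quad + 2\|\hat\mu(w) - \mu^*(w)\|\|\tilde\mu(w) - \mu^*(w)\| + 2\|\mu^*(w) - \mu\|\|\tilde\mu(w) - \mu^*(w)\| \\
&\quad + 2\|\hat\mu(w) - \mu^*(w)\|\|\hat\mu(w) - \mu^*(w)\| + 2\|\mu^*(w) - \mu\|\|\hat\mu(w) - \mu^*(w)\| \\
&\quad + 2\|\hat\mu(w) - \mu^*(w)\|\|\varepsilon\| + 2|(\mu^*(w) - \mu)^T\varepsilon| + 2\|\tilde\mu(w) - \mu^*(w)\|\|\hat\mu(w) - \mu^*(w)\| \\
&\quad + 2\|\tilde\mu(w) - \mu^*(w)\|\|\varepsilon\| + 2\|\hat\mu(w) - \mu^*(w)\|\|\varepsilon\| + \|\varepsilon\|^2 \\
&= L(w) + \Pi_n(w) + \|\varepsilon\|^2,
\end{aligned}$$

where $\Pi_n(w)$ denotes the sum of all terms in the last inequality other than $L(w) = \|\hat\mu(w) - \mu\|^2$ and $\|\varepsilon\|^2$,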

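and

$$\begin{aligned}
L(w) &= \|\hat\mu(w) - \mu\|^2 \\
&= \|\hat\mu(w) - \mu^*(w) + \mu^*(w) - \mu\|^2 \\
&= \|\mu^*(w) - \mu\|^2 + \|\hat\mu(w) - \mu^*(w)\|^2 + 2(\hat\mu(w) - \mu^*(w))^T(\mu^*(w) - \mu) \\
&= L^*(w) + \Xi_n(w).
\end{aligned}$$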

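Hence, to prove (4.6), it suffices to prove

$$\sup_{w\in\mathcal{H}}|\Pi_n(w)/L^*(w)| = o_p(1) \qquad (4.7)$$

and

$$\sup_{w\in\mathcal{H}}|\Xi_n(w)/L^*(w)| = o_p(1). \qquad (4.8)$$

The proofs of (4.7) and (4.8) entail proving

$$\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|^2 = O_p(1), \qquad (4.9)$$

$$\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|^2 = O_p(1) \qquad (4.10)$$

and

$$\sup_{w\in\mathcal{H}}\bigl|(\mu^*(w) - \mu)^T\varepsilon\bigr|/L^*(w) = o_p(1). \qquad (4.11)$$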

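Now, by Conditions (C.1) and (C.3) and the assumption that both $J$ and $M$ are fixed, we have

$$\begin{aligned}
\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|^2
&\le \max_{1\le m\le M}\|\hat\mu_{(m)} - \mu^*_{(m)}\|^2 \\
&= \max_{1\le m\le M}\|X(\hat\theta_{(m)} - \theta^*_{(m)})\|^2 \\
&\le \max_{1\le m\le M}\lambda\bigl(n^{-1}X^TX\bigr)\,n\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2 \\
&\le \max_{1\le m\le M}\lambda\Bigl(n^{-1}\sum_{j=1}^{J}X^{(j)T}X^{(j)}\Bigr)\,n\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2 \\
&\le \max_{1\le m\le M}\sum_{j=1}^{J}\lambda\bigl(n^{-1}X^{(j)T}X^{(j)}\bigr)\,n\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2 \\
&\le \max_{1\le m\le M}J\max_{1\le j\le J}\lambda\bigl(J^{-1}n_0^{-1}X^{(j)T}X^{(j)}\bigr)\,n\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2 \\
&\le \max_{1\le m\le M}\max_{1\le j\le J}\lambda\bigl(n_0^{-1}X^{(j)T}X^{(j)}\bigr)\,n\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2 \\
&= O_p(1)\,n\,O_p(n^{-1}) \\
&= O_p(1),
\end{aligned}$$

which is (4.9). Here, the first inequality uses the convexity of $\|\cdot\|^2$ together with $\sum_{m=1}^{M}w_m = 1$, and the last two inequalities use $n = Jn_0$. Next, we prove (4.10). By Conditions (C.1) and (C.3) and the assumption that both $J$ and $M$ are fixed, we have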

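$$\begin{aligned}
\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|^2
&\le \max_{1\le m\le M}\|\tilde\mu_{(m)} - \mu^*_{(m)}\|^2 \\
&= \max_{1\le m\le M}\|A\tilde\theta_{(m)} - X\theta^*_{(m)}\|^2 \\
&= \max_{1\le m\le M}\left\| A\begin{pmatrix}\tilde\theta^{(-1)}_{(m)} \\ \tilde\theta^{(-2)}_{(m)} \\ \vdots \\ \tilde\theta^{(-J)}_{(m)}\end{pmatrix} - A\left(\begin{pmatrix}1 \\ 1 \\ \vdots \\ 1\end{pmatrix}\otimes\theta^*_{(m)}\right)\right\|^2 \\
&= \max_{1\le m\le M}\begin{pmatrix}\tilde\theta^{(-1)}_{(m)} - \theta^*_{(m)} \\ \tilde\theta^{(-2)}_{(m)} - \theta^*_{(m)} \\ \vdots \\ \tilde\theta^{(-J)}_{(m)} - \theta^*_{(m)}\end{pmatrix}^T A^TA\begin{pmatrix}\tilde\theta^{(-1)}_{(m)} - \theta^*_{(m)} \\ \tilde\theta^{(-2)}_{(m)} - \theta^*_{(m)} \\ \vdots \\ \tilde\theta^{(-J)}_{(m)} - \theta^*_{(m)}\end{pmatrix} \\
&\le \max_{1\le m\le M}\lambda\bigl(n_0^{-1}A^TA\bigr)\,n_0\sum_{j=1}^{J}\|\tilde\theta^{(-j)}_{(m)} - \theta^*_{(m)}\|^2 \\
&= O_p(1)\,n_0J\,O_p(n^{-1}) \\
&= O_p(1).
\end{aligned}$$

Here, $\lambda(n_0^{-1}A^TA) = \max_{1\le j\le J}\lambda(n_0^{-1}X^{(j)T}X^{(j)})$ because $A^TA$ is block diagonal. In addition, $\|\tilde\theta^{(-j)}_{(m)} - \theta^*_{(m)}\|^2$ has the same convergence rate as $\|\hat\theta_{(m)} - \theta^*_{(m)}\|^2$, because the observations are independent and the sample sizes associated with $\tilde\theta^{(-j)}_{(m)}$ and $\hat\theta_{(m)}$ are of the same order.

Hence (4.10) holds. In addition, for any $\delta > 0$, by Conditions (C.1) and (C.2), we obtain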

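$$\begin{aligned}
\Pr\Bigl\{\sup_{w\in\mathcal{H}}\xi_n^{-1}\bigl|(\mu^*(w) - \mu)^T\varepsilon\bigr| > \delta\Bigr\}
&\le \Pr\Bigl\{\sup_{w\in\mathcal{H}}\xi_n^{-1}\sum_{m=1}^{M}w_m\bigl|(\mu^*_{(m)} - \mu)^T\varepsilon\bigr| > \delta\Bigr\} \\
&= \Pr\Bigl\{\max_{1\le m\le M}\bigl|(\mu^*_{(m)} - \mu)^T\varepsilon\bigr| > \xi_n\delta\Bigr\} \\
&\le \sum_{m=1}^{M}\Pr\bigl\{\bigl|(\mu^*_{(m)} - \mu)^T\varepsilon\bigr| > \xi_n\delta\bigr\} \\
&\le \xi_n^{-2}\delta^{-2}\sum_{m=1}^{M}E\bigl\{(\mu^*_{(m)} - \mu)^T\varepsilon\bigr\}^2 \\
&\le \xi_n^{-2}\delta^{-2}\bar\sigma^2\sum_{m=1}^{M}\|\mu^*_{(m)} - \mu\|^2 \\
&= o(1).
\end{aligned}$$

Since $L^*(w) \ge \xi_n$ for all $w \in \mathcal{H}$, this implies (4.11).

It is straightforward to show that

$$\|\varepsilon\|^2 = O_p(n). \qquad (4.12)$$

By (4.9), (4.10), (4.12) and Condition (C.2), it can be shown that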

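$$\sup_{w\in\mathcal{H}}\frac{\|\mu^*(w) - \mu\|\,\|\hat\mu(w) - \mu^*(w)\|}{L^*(w)} = \sup_{w\in\mathcal{H}}\frac{\|\hat\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)} \le \xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\| = o_p(1), \qquad (4.13)$$

$$\sup_{w\in\mathcal{H}}\frac{\|\mu^*(w) - \mu\|\,\|\tilde\mu(w) - \mu^*(w)\|}{L^*(w)} = \sup_{w\in\mathcal{H}}\frac{\|\tilde\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)} \le \xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\| = o_p(1), \qquad (4.14)$$

$$\begin{aligned}
\sup_{w\in\mathcal{H}}\frac{\|\tilde\mu(w) - \mu^*(w)\|\,\|\hat\mu(w) - \mu^*(w)\|}{L^*(w)}
&\le \sup_{w\in\mathcal{H}}\frac{\|\tilde\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)}\,\sup_{w\in\mathcal{H}}\frac{\|\hat\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)} \\
&\le \xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|\;\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\| = o_p(1),
\end{aligned} \qquad (4.15)$$

$$\begin{aligned}
\sup_{w\in\mathcal{H}}\frac{\|\hat\mu(w) - \mu^*(w)\|\,\|\varepsilon\|}{L^*(w)}
&\le \sup_{w\in\mathcal{H}}\frac{\|\hat\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)}\,\sup_{w\in\mathcal{H}}\frac{\|\varepsilon\|}{L^{*1/2}(w)} \\
&\le \xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|\;\xi_n^{-1/2}\|\varepsilon\| = o_p(1)
\end{aligned} \qquad (4.16)$$

and

$$\begin{aligned}
\sup_{w\in\mathcal{H}}\frac{\|\tilde\mu(w) - \mu^*(w)\|\,\|\varepsilon\|}{L^*(w)}
&\le \sup_{w\in\mathcal{H}}\frac{\|\tilde\mu(w) - \mu^*(w)\|}{L^{*1/2}(w)}\,\sup_{w\in\mathcal{H}}\frac{\|\varepsilon\|}{L^{*1/2}(w)} \\
&\le \xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|\;\xi_n^{-1/2}\|\varepsilon\| = o_p(1).
\end{aligned} \qquad (4.17)$$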

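Combining (4.11)-(4.17), we obtain

$$\begin{aligned}
\sup_{w\in\mathcal{H}}|\Pi_n(w)/L^*(w)|
&= \sup_{w\in\mathcal{H}}\Bigl|\|\tilde\mu(w) - \mu^*(w)\|^2 + \|\hat\mu(w) - \mu^*(w)\|^2 + 2\|\hat\mu(w) - \mu^*(w)\|\|\tilde\mu(w) - \mu^*(w)\| \\
&\qquad + 2\|\mu^*(w) - \mu\|\|\tilde\mu(w) - \mu^*(w)\| + 2\|\hat\mu(w) - \mu^*(w)\|\|\hat\mu(w) - \mu^*(w)\| + 2\|\mu^*(w) - \mu\|\|\hat\mu(w) - \mu^*(w)\| \\
&\qquad + 2\|\hat\mu(w) - \mu^*(w)\|\|\varepsilon\| + 2|(\mu^*(w) - \mu)^T\varepsilon| + 2\|\tilde\mu(w) - \mu^*(w)\|\|\hat\mu(w) - \mu^*(w)\| \\
&\qquad + 2\|\tilde\mu(w) - \mu^*(w)\|\|\varepsilon\| + 2\|\hat\mu(w) - \mu^*(w)\|\|\varepsilon\|\Bigr| \Big/ L^*(w) \\
&\le \xi_n^{-1}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|^2 + \xi_n^{-1}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|^2 \\
&\quad + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|\,\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\| + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\| \\
&\quad + 2\xi_n^{-1}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|^2 + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\| \\
&\quad + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|\,\xi_n^{-1/2}\|\varepsilon\| + 2\sup_{w\in\mathcal{H}}|(\mu^*(w) - \mu)^T\varepsilon|/L^*(w) \\
&\quad + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|\,\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\| \\
&\quad + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\tilde\mu(w) - \mu^*(w)\|\,\xi_n^{-1/2}\|\varepsilon\| + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|\,\xi_n^{-1/2}\|\varepsilon\| \\
&= o_p(1)
\end{aligned}$$

and

$$\begin{aligned}
\sup_{w\in\mathcal{H}}|\Xi_n(w)/L^*(w)|
&= \sup_{w\in\mathcal{H}}\bigl|\|\hat\mu(w) - \mu^*(w)\|^2 + 2(\hat\mu(w) - \mu^*(w))^T(\mu^*(w) - \mu)\bigr| \big/ L^*(w) \\
&\le \xi_n^{-1}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\|^2 + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}}\|\hat\mu(w) - \mu^*(w)\| = o_p(1),
\end{aligned}$$

which are (4.7) and (4.8), respectively. Then we have (4.6). This completes the proof of Theorem 4.1.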

5 Part 4: Frequentist model averaging over kernel regression estimators

5.1 Model framework and notations

We argue in the proposal that bandwidth selection in kernel regression is fundamentally a model selection problem, stemming from uncertainty about the smoothness of the regression function. If the goal is to derive a good estimator of the regression function, then deriving it as a weighted average of estimators based on different smoothness parameters enlarges the space of possible estimators and may ultimately lead to a more accurate estimator.

Let us consider the following nonparametric regression model:

$$y_i = f(x_i) + e_i, \quad i = 1, 2, \ldots, n, \qquad (5.1)$$

where $y_i$ is the response, $x_i = (x_{i(1)}, x_{i(2)}, \ldots, x_{i(d)})'$ contains $d$ covariates, $f(x_i) = \mu_{(i)}$ is an unknown function of $x_i$, and $e_i$ is an independently distributed error term with $E(e_i) = 0$ and $E(e_i^2) = \sigma_i^2$. Equation (5.1) may be expressed in matrix form as

$$y = \mu + e,$$

where $y = (y_1, y_2, \ldots, y_n)'$, $\mu = (\mu_{(1)}, \mu_{(2)}, \ldots, \mu_{(n)})'$, $X = (x_1, x_2, \ldots, x_n)'$, and $e = (e_1, e_2, \ldots, e_n)'$. Clearly, $E(e) = 0$ and $E(ee') = \Omega = \mathrm{diag}(\sigma_1^2, \sigma_2^2, \ldots, \sigma_n^2)$.

Let $k_{(j)}(\cdot)$ be the kernel function corresponding to the $j$th element of $x_i$. We assume that $k_{(j)}(\cdot)$ is a density function that is unimodal, compact and symmetric about 0 and has finite variance. Let $h_{(j)} \in \mathbb{R}^+$ be the bandwidth or smoothing parameter for $x_{i(j)}$, for $j = 1, 2, \ldots, d$, and let $h = \{h_{(1)}, h_{(2)}, \ldots, h_{(d)}\}$. Write $k_{(j)}(\cdot/h_{(j)})/h_{(j)} = k_{h(j)}(\cdot)$. When the dimension $d$ is larger than one, the product kernel function

$$k^P_h(z) = \prod_{j=1}^{d} k_{h(j)}(z_{(j)})$$

may be used, where $z = (z_{(1)}, z_{(2)}, \ldots, z_{(d)})'$ is a $d$-dimensional vector. Alternatively, one may use the following spherical kernel function, which has a common bandwidth across the different dimensions:

$$k^S_h(z) = \frac{k_h(\sqrt{z'z})}{\int k_h(\sqrt{z'z})\,dz},$$

where $k_h(u) = h^{-d}k(u/h)$. A popular choice for $k$ is the standard $d$-variate Normal density

$$k(u) = (2\pi)^{-d/2}\exp(-u^2/2).$$
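The kernel definitions above can be sketched as follows. The sketch takes $k$ to be the standard normal density suggested in the text and uses the same univariate kernel in every coordinate. It is an illustration only, and the function names are hypothetical.

```python
import math


def gauss(u):
    """Univariate standard normal density k(u)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def scaled_kernel(u, h, k=gauss):
    """k_h(u) = k(u / h) / h for a scalar bandwidth h > 0."""
    return k(u / h) / h


def product_kernel(z, h, k=gauss):
    """Product kernel k^P_h(z) = prod_j k_{h(j)}(z_(j)) for d-vectors z and h."""
    if len(z) != len(h):
        raise ValueError("z and h must both have length d")
    value = 1.0
    for zj, hj in zip(z, h):
        value *= scaled_kernel(zj, hj, k)
    return value
```

Rescaling by $h$ keeps each $k_{h(j)}$ a density: it still integrates to one, which a fine Riemann sum confirms numerically.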

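The most popular estimator of $\mu$ is the Nadaraya-Watson (Nadaraya, 1964; Watson, 1964), or local constant, kernel estimator, defined as

$$\hat\mu = Ky = \begin{pmatrix}
\frac{k_h(x_1-x_1)}{\sum_{j=1}^{n}k_h(x_j-x_1)} & \frac{k_h(x_2-x_1)}{\sum_{j=1}^{n}k_h(x_j-x_1)} & \cdots & \frac{k_h(x_n-x_1)}{\sum_{j=1}^{n}k_h(x_j-x_1)} \\
\frac{k_h(x_1-x_2)}{\sum_{j=1}^{n}k_h(x_j-x_2)} & \frac{k_h(x_2-x_2)}{\sum_{j=1}^{n}k_h(x_j-x_2)} & \cdots & \frac{k_h(x_n-x_2)}{\sum_{j=1}^{n}k_h(x_j-x_2)} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{k_h(x_1-x_n)}{\sum_{j=1}^{n}k_h(x_j-x_n)} & \frac{k_h(x_2-x_n)}{\sum_{j=1}^{n}k_h(x_j-x_n)} & \cdots & \frac{k_h(x_n-x_n)}{\sum_{j=1}^{n}k_h(x_j-x_n)}
\end{pmatrix} y,$$

where $K$ is an $n \times n$ weight matrix comprising the kernel weights, with $ij$th element $K_{ij} = k_h(x_j - x_i)/\sum_{l=1}^{n}k_h(x_l - x_i)$.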
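For scalar covariates ($d = 1$), the weight matrix $K$ can be built row by row, as in the minimal sketch below. The sketch assumes a Gaussian kernel. Because the factor $1/h$ in $k_h$ appears in both the numerator and the denominator of $K_{ij}$, it is dropped. Each row of $K$ sums to one, so the estimator reproduces a constant response exactly. The function names are hypothetical.

```python
import math


def gauss(u):
    """Standard normal density, used here as the kernel k (an illustrative choice)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def nw_matrix(x, h, k=gauss):
    """n x n Nadaraya-Watson matrix with K[i][j] = k_h(x_j - x_i) / sum_l k_h(x_l - x_i).

    x holds scalar covariates (d = 1); the 1/h factor of k_h cancels in the ratio.
    """
    n = len(x)
    K = []
    for i in range(n):
        row = [k((x[j] - x[i]) / h) for j in range(n)]
        s = sum(row)
        K.append([v / s for v in row])
    return K


def smooth(K, y):
    """Return mu_hat = K y."""
    return [sum(kij * yj for kij, yj in zip(row, y)) for row in K]
```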

An appropriate choice of $h$ is essential to achieving a good estimator of $\mu$. Bandwidth selection has long been a subject of intense debate, despite an extensive body of literature on the subject. The goal here is to consider not one bandwidth but a combination of bandwidths, and to develop an estimator of $\mu$ on that basis. Let $h_1, h_2, \ldots, h_{M_n}$ each be a $d$-dimensional vector of bandwidths, with $h_m = \{h_{m(1)}, h_{m(2)}, \ldots, h_{m(d)}\}$ for $m = 1, 2, \ldots, M_n$. We allow $M_n$, the size of the bandwidth candidate set, to increase to infinity as $n \to \infty$. It is well known that if $h_{(1)}, h_{(2)}, \ldots, h_{(d)}$ all have the same order of magnitude, then the choice of $h_{(1)}, h_{(2)}, \ldots, h_{(d)}$ that minimises the mean squared error satisfies $h_{(j)} \sim n^{-1/(d+4)}$ for $j = 1, 2, \ldots, d$; see Li & Racine (2007).
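The rate $h_{(j)} \sim n^{-1/(d+4)}$ suggests one way to build a candidate set in which every bandwidth has the optimal order: scale $n^{-1/(d+4)}$ by a few constants. The constants below are hypothetical tuning choices, since the rate alone does not determine them. The same bandwidth is used in every coordinate, and the function name is illustrative.

```python
def bandwidth_candidates(n, d, constants):
    """Return one d-vector h_m = (c n^{-1/(d+4)}, ..., c n^{-1/(d+4)}) per constant c."""
    if n < 1 or d < 1:
        raise ValueError("n and d must be positive integers")
    rate = n ** (-1.0 / (d + 4))  # optimal order of each bandwidth
    return [[c * rate] * d for c in constants]


# Hypothetical constants spanning under- to over-smoothing; here M_n = len(constants).
grid = bandwidth_candidates(32, 1, [0.5, 1.0, 2.0])
```

With $n = 32$ and $d = 1$, the rate is $32^{-1/5} = 1/2$, so the three candidates are $0.25$, $0.5$ and $1$.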

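Now, based on the $m$th candidate bandwidth, the kernel estimator of $\mu$ is

$$\hat\mu_m = \begin{pmatrix}
\frac{k_{h_m}(0)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_1)} & \frac{k_{h_m}(x_2-x_1)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_1)} & \cdots & \frac{k_{h_m}(x_n-x_1)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_1)} \\
\frac{k_{h_m}(x_1-x_2)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_2)} & \frac{k_{h_m}(0)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_2)} & \cdots & \frac{k_{h_m}(x_n-x_2)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_2)} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{k_{h_m}(x_1-x_n)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_n)} & \frac{k_{h_m}(x_2-x_n)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_n)} & \cdots & \frac{k_{h_m}(0)}{\sum_{j=1}^{n}k_{h_m}(x_j-x_n)}
\end{pmatrix} y \equiv K_my.$$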

An alternative to using a single estimator of $\mu$, based on one of $h_1, h_2, \ldots, h_{M_n}$ as the bandwidth, is to form a weighted average of the $\hat\mu_m$'s, $m = 1, \ldots, M_n$, each based on a different bandwidth. Now, let $w = (w_1, w_2, \ldots, w_{M_n})'$ be a weight vector in the following unit simplex of $\mathbb{R}^{M_n}$:

$$\mathcal{H}_n = \Bigl\{w \in [0, 1]^{M_n} : \sum_{m=1}^{M_n} w_m = 1\Bigr\}.$$

We are interested in the following average, or combined, estimator of $\mu$:

$$\hat\mu(w) = \sum_{m=1}^{M_n} w_m\hat\mu_m = \sum_{m=1}^{M_n} w_mK_my \equiv K(w)y.$$

By enlarging the space of possible estimators, $\hat\mu(w)$ allows us to gain information from all estimators obtained from the bandwidth candidate set. The major challenge in implementing $\hat\mu(w)$ is choosing appropriate weights for the individual estimators. The weights should reflect how much the data support the different estimates based on different bandwidths. Clearly, when $w$ has a one in one entry and zeros everywhere else, $\hat\mu(w)$ reduces to the kernel estimator obtained using a single bandwidth. We propose a leave-one-out cross-validation method to choose the weights in $\hat\mu(w)$. As will be shown, this weight choice results in an estimator of $\mu$ with an optimal asymptotic property.

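Now, let

$$\tilde\mu_m = \begin{pmatrix}
0 & \frac{k_{h_m}(x_2-x_1)}{\sum_{j\ne1}k_{h_m}(x_j-x_1)} & \cdots & \frac{k_{h_m}(x_n-x_1)}{\sum_{j\ne1}k_{h_m}(x_j-x_1)} \\
\frac{k_{h_m}(x_1-x_2)}{\sum_{j\ne2}k_{h_m}(x_j-x_2)} & 0 & \cdots & \frac{k_{h_m}(x_n-x_2)}{\sum_{j\ne2}k_{h_m}(x_j-x_2)} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{k_{h_m}(x_1-x_n)}{\sum_{j\ne n}k_{h_m}(x_j-x_n)} & \frac{k_{h_m}(x_2-x_n)}{\sum_{j\ne n}k_{h_m}(x_j-x_n)} & \cdots & 0
\end{pmatrix} y \equiv \tilde K_my$$

be the leave-one-out kernel estimator of $\mu$ based on a given bandwidth $h_m$. Correspondingly, the leave-one-out model averaging estimator of $\mu$ is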
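The leave-one-out matrix $\tilde K_m$ differs from $K_m$ only in that observation $i$ is dropped from its own fit: the diagonal is zero, and row $i$ is renormalised over $l \ne i$. Below is a minimal sketch for scalar covariates with a Gaussian kernel (the helper names are hypothetical). It also computes the averaged leave-one-out fit $\tilde K(w)y$.

```python
import math


def gauss(u):
    """Standard normal density used as the kernel k (an illustrative choice)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def loo_nw_matrix(x, h, k=gauss):
    """Leave-one-out NW matrix for scalar covariates x (needs at least two points).

    Entry (i, j) is k_h(x_j - x_i) / sum_{l != i} k_h(x_l - x_i) for j != i, and 0 for j == i.
    """
    n = len(x)
    mat = []
    for i in range(n):
        row = [0.0 if j == i else k((x[j] - x[i]) / h) for j in range(n)]
        s = sum(row)  # observation i is excluded from its own denominator
        mat.append([v / s for v in row])
    return mat


def mat_vec(mat, y):
    """Matrix-vector product with plain lists."""
    return [sum(a * b for a, b in zip(row, y)) for row in mat]


def loo_average(mats, w, y):
    """tilde mu(w) = sum_m w_m tilde K_m y for a weight vector w on the unit simplex."""
    fits = [mat_vec(m, y) for m in mats]
    return [sum(wm * fit[i] for wm, fit in zip(w, fits)) for i in range(len(y))]
```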

$$\tilde\mu(w) = \sum_{m=1}^{M_n} w_m\tilde\mu_m = \sum_{m=1}^{M_n} w_m\tilde K_my \equiv \tilde K(w)y.$$

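Write $\tilde e_m = y - \tilde\mu_m$ and $\tilde e = (\tilde e_1, \ldots, \tilde e_{M_n})$. Our choice of $w$ is based on minimising the quadratic form

$$CV_n(w) = \|y - \tilde\mu(w)\|^2 = w'\tilde e'\tilde ew.$$

This yields $\hat w = \arg\min_{w\in\mathcal{H}_n} CV_n(w)$. Substituting $\hat w$ for $w$ in $\hat\mu(w)$ leads to the estimator $\hat\mu(\hat w)$, which we shall refer to as the kernel averaging estimator (KAE). As $CV_n(w)$ is a quadratic function of $w$, computing $\hat w$ is a quadratic programming problem over $\mathcal{H}_n$ and is simple and straightforward.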
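Because $CV_n(w) = w'\tilde e'\tilde ew$ is a convex quadratic on the simplex $\mathcal{H}_n$, $\hat w$ can be computed with any quadratic-programming routine. The dependency-free sketch below uses projected gradient descent instead, with a sort-based Euclidean projection onto the simplex. The solver, the step size, the iteration count and the function names are all assumptions made for illustration; they are not part of the proposal. The inputs are the residual vectors $\tilde e_m = y - \tilde K_my$.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_m >= 0, sum_m w_m = 1} (sort-based method)."""
    u = sorted(v, reverse=True)
    css = 0.0
    theta = 0.0
    for idx, ui in enumerate(u, start=1):
        css += ui
        t = (css - 1.0) / idx
        if ui - t > 0:  # indices passing this test form a prefix; keep the last threshold
            theta = t
    return [max(vi - theta, 0.0) for vi in v]


def cv_weights(residuals, steps=2000):
    """Approximately minimise CV_n(w) = w' E'E w over the unit simplex.

    residuals: list of M vectors, the m-th being e_tilde_m = y - mu_tilde_m.
    Projected gradient descent with step 1/L, where L = 2 * trace(E'E) bounds the
    Lipschitz constant 2 * lambda_max(E'E) of the gradient 2 E'E w.
    """
    M = len(residuals)
    # Gram matrix G = E'E with G[m][t] = <e_tilde_m, e_tilde_t>.
    G = [[sum(a * b for a, b in zip(residuals[m], residuals[t])) for t in range(M)]
         for m in range(M)]
    L = 2.0 * sum(G[m][m] for m in range(M))
    w = [1.0 / M] * M
    if L == 0.0:
        return w  # all residuals are zero, so every w attains CV_n(w) = 0
    for _ in range(steps):
        grad = [2.0 * sum(G[m][t] * w[t] for t in range(M)) for m in range(M)]
        w = project_to_simplex([w[m] - grad[m] / L for m in range(M)])
    return w
```

In the second test case, the residuals are proportional with $\|\tilde e_2\| > \|\tilde e_1\|$, so all weight goes to the first bandwidth.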

5.2 Preliminary results on asymptotic optimality

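We have developed some preliminary results on the asymptotic properties of $\hat\mu(\hat w)$. Our development is based on the squared error loss function

$$L_n(w) = \|\hat\mu(w) - \mu\|^2.$$

We will show that $L_n(\hat w)$, the squared error loss of $\hat\mu(\hat w)$, is asymptotically equivalent to $\inf_{w\in\mathcal{H}_n}L_n(w)$, the infimum of the squared error loss defined above, in the sense that their ratio converges in probability to one as $n \to \infty$.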

To develop our theory, let $A(w) = I_n - K(w)$ and define the conditional risk function

$$R_n(w) = E\{L_n(w) \mid X\} = \|A(w)\mu\|^2 + \mathrm{tr}\{K'(w)K(w)\Omega\}. \qquad (5.2)$$

Analogously to the definitions of $L_n(w)$ and $R_n(w)$, the leave-one-out squared error loss and risk functions may be written as

$$\tilde L_n(w) = \|\tilde\mu(w) - \mu\|^2$$

and

$$\tilde R_n(w) = E\{\tilde L_n(w) \mid X\} = \|\tilde A(w)\mu\|^2 + \mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\}, \qquad (5.3)$$

respectively, where $\tilde A(w) = I_n - \tilde K(w)$. Let $K_{m,ij}$ be the $ij$th element of the matrix $K_m$, let $\xi_n = \inf_{w\in\mathcal{H}_n}R_n(w)$, let $w_m^0$ be an $M_n \times 1$ vector with a 1 in the $m$th entry and 0 everywhere else, and let $\lambda(\cdot)$ be the maximum singular value of a matrix.

The KAE has the following asymptotic property:

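Theorem 5.1. As $n \to \infty$, if

$$E(e_i^{4G}) \le \kappa < \infty \quad \text{for all } i = 1, 2, \ldots, n, \qquad (5.4)$$

$$M_n\xi_n^{-2G}\sum_{m=1}^{M_n}R_n(w_m^0)^G \xrightarrow{\text{a.s.}} 0 \qquad (5.5)$$

for some constant $\kappa$ and fixed integer $G$ ($1 \le G < \infty$),

$$\max_{1\le m\le M_n}\max_{1\le i\le n}\sum_{j=1}^{n}K_{m,ij} = O(1), \qquad \max_{1\le m\le M_n}\max_{1\le j\le n}\sum_{i=1}^{n}K_{m,ij} = O(1), \quad \text{a.s.}, \qquad (5.6)$$

$$\frac{\mu'\mu}{n} = O(1), \quad \text{a.s.}, \qquad (5.7)$$

and

$$\max_{1\le m\le M_n}\max_{1\le i,j\le n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)} = O(b_n), \quad \text{a.s.}, \qquad (5.8)$$

where $b_n$ is required to satisfy

$$\limsup_{n\to\infty} nb_n^2\log^4(n) < \infty, \quad \text{a.s.}, \qquad (5.9)$$

and

$$\xi_n^{-1}nb_n^2 = o(1), \quad \text{a.s.}, \qquad (5.10)$$

then

$$\frac{L_n(\hat w)}{\inf_{w\in\mathcal{H}_n}L_n(w)} \xrightarrow{p} 1. \qquad (5.11)$$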

By Theorem 5.1, the KAE $\hat\mu(\hat w)$ is asymptotically optimal, in the sense that its squared error loss is asymptotically identical to that of the infeasible best possible averaging estimator. The proof of Theorem 5.1 is given in Subsection 5.3 below. The following remarks may be made about the theorem and the conditions that underpin it.

Remark A.1. Condition (5.4) imposes a restriction on the moments of the error term. Condition (5.5) has been widely used in studies of model averaging in linear regressions, including papers by Wan et al. (2010), Liu & Okui (2013) and Ando & Li (2014). As this condition has not previously been applied to nonparametric regression, we discuss its implications for our analysis in detail in Subsection 5.3. Condition (5.6) limits the 1- and maximum-norms of the kernel function matrix $K_m$ and is almost the same as Assumption (i) of Speckman (1988). Condition (5.7) imposes a restriction on the average value of $\mu_{(i)}^2$, and is similar to Condition (11) of Wan et al. (2010). Since the kernel function cannot be negative and (5.9) forces $b_n \to 0$, Condition (5.8) implies that all elements of the kernel function matrix go to zero. Conditions (5.8) and (5.9) are the same as Assumption 1.3.3 (ii) of Hardle et al. (2000). If we assume $\xi_n \sim n^{d/(d+4)}$, then (5.10) follows directly from (5.9), which suggests that (5.10) is reasonable. Indeed,

$$\xi_n^{-1}nb_n^2 = \xi_n^{-1}\log^{-4}(n) \times nb_n^2\log^4(n) \sim n^{-d/(d+4)}\log^{-4}(n) \times nb_n^2\log^4(n) = o(1) \times O(1) = o(1),$$

where the $O(1)$ factor follows from (5.9). The reasonableness of assuming $\xi_n \sim n^{d/(d+4)}$ is discussed in Subsection 5.3.

Remark A.2. Suppose that we assume

$$\sup_{w\in\mathcal{H}_n}\left|\frac{\tilde R_n(w)}{R_n(w)} - 1\right| \xrightarrow{\text{a.s.}} 0 \qquad (5.12)$$

and

$$\lim_{n\to\infty}\max_{1\le m\le M_n}\lambda(\tilde K_m) \le \kappa_1 < \infty, \quad \text{a.s.}, \qquad (5.13)$$

for some constant $\kappa_1$. The proof of Theorem 5.1 in Subsection 5.3 shows that (5.12) and (5.13) can be used in lieu of (5.7)-(5.10) as the conditions for the asymptotic optimality (5.11).

Condition (5.12) requires the ratio of the leave-one-out risk function to the ordinary risk function to converge to one, uniformly in $w$, as $n$ increases. It is almost identical to Condition (A.5) of Hansen & Racine (2012) and Condition (10) of Zhang et al. (2013). Condition (5.13) bears a strong similarity to Condition (A.4) of Hansen & Racine (2012) and Condition (9) of Zhang et al. (2013). This condition places an upper bound on the maximum singular value of the leave-one-out kernel function matrix $\tilde K_m$.

Even though (5.12) and (5.13) are more interpretable, we prefer (5.7)-(5.10) and consider them the formal conditions for Theorem 5.1, because they are less stringent than (5.12) and (5.13). That said, Conditions (5.12) and (5.13) are still significant for two reasons. First, as discussed above, they are analogous to the conditions used in several other model averaging studies. Second, and more important, Theorem 5.1 is actually a direct result of (5.12) and (5.13): as shown in Subsection 5.3, we use Conditions (5.7)-(5.10) to prove (5.12) and (5.13), which in turn lead to Theorem 5.1.

Thus, we have the following theorem as a supplement to Theorem 5.1:

Theorem 5.2. Other things being equal, the asymptotic optimality of $\hat\mu(\hat w)$ described in (5.11) still holds if Conditions (5.7)-(5.10) are replaced by the stronger but more interpretable Conditions (5.12) and (5.13).

The proof of Theorem 5.2 is contained in Subsection 5.3.

5.3 Further technical details and proofs of Theorems 5.1 and 5.2

5.3.1 An Elaboration of Condition (5.5)

In this subsection, we explain the rationale for Condition (5.5) in a nonparametric setting. For simplicity, consider the situation where the bandwidths $h_{m(j)}$, $j = 1, 2, \ldots, d$, all have the same order of magnitude. From Li & Racine (2007), the mean squared error of the kernel estimator of the regression function is

$$MSE_m(x) = E\{\hat f_m(x) - f(x)\}^2 \sim \Bigl(\sum_{j=1}^{d}h_{m(j)}^2\Bigr)^2 + \frac{1}{nh_{m(1)}h_{m(2)}\cdots h_{m(d)}}, \qquad (5.14)$$

where $\hat f_m(x) = \sum_{i=1}^{n}\frac{k_{h_m}(x_i - x)}{\sum_{j=1}^{n}k_{h_m}(x_j - x)}y_i$ is the kernel estimator with the $d$-dimensional bandwidth $h_m = \{h_{m(1)}, h_{m(2)}, \ldots, h_{m(d)}\}$. As $n \to \infty$, $h_{m(j)} \to 0$, and the choice of $h_{m(j)}$ that minimises $MSE_m(x)$ is $h_{m(j),\mathrm{opt}} \sim n^{-1/(d+4)}$. With this optimal choice of $h_{m(j)}$, we have $MSE_m(x) \sim (\sum_{j=1}^{d}h_{m(j),\mathrm{opt}}^2)^2 \sim n^{-4/(d+4)}$. Summing over the $n$ observations gives $R_n(w_m^0) \sim n \cdot n^{-4/(d+4)} = n^{d/(d+4)} \to \infty$. As $\xi_n = \inf_{w\in\mathcal{H}_n}R_n(w)$, we have

$$\xi_n = O(n^{d/(d+4)}). \qquad (5.15)$$

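Thus, it is reasonable to assume that $\xi_n \sim n^{d/(d+4)}$. If we apply the optimal choice of bandwidth, i.e., $h_{m(j)} \sim n^{-1/(d+4)}$, to all candidate models, then by (5.14) and $\xi_n \sim n^{d/(d+4)}$, we have

$$\begin{aligned}
M_n\xi_n^{-2G}\sum_{m=1}^{M_n}R_n(w_m^0)^G
&\sim M_n^2n^{-\frac{2dG}{d+4}}\left\{n^G\Bigl(\sum_{j=1}^{d}h_{m(j)}^2\Bigr)^{2G} + \Bigl(\frac{1}{h_{m(1)}h_{m(2)}\cdots h_{m(d)}}\Bigr)^G\right\} \\
&\sim \max\left\{M_n^2n^{-\frac{2dG}{d+4}}n^G\Bigl(\sum_{j=1}^{d}h_{m(j)}^2\Bigr)^{2G},\; M_n^2\frac{n^{-\frac{2dG}{d+4}}}{(h_{m(1)}h_{m(2)}\cdots h_{m(d)})^G}\right\}.
\end{aligned} \qquad (5.16)$$

As $h_{m(j)} \sim n^{-1/(d+4)}$, the two quantities inside the braces in (5.16) have the same order. Thus, if

$$M_n = o\bigl(n^{\frac{dG}{2(d+4)}}\bigr),$$

then

$$M_n\xi_n^{-2G}\sum_{m=1}^{M_n}R_n(w_m^0)^G \sim M_n^2\Bigl(n^{-\frac{2dG}{d+4}}\frac{1}{h_{m(j)}^{dG}}\Bigr) \sim M_n^2\bigl(n^{-\frac{2dG}{d+4}}n^{\frac{dG}{d+4}}\bigr) \xrightarrow{\text{a.s.}} 0,$$

which is Condition (5.5).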
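The rate condition on $M_n$ follows from a short exponent count. With $h_{m(j)} \sim n^{-1/(d+4)}$, both terms inside the braces of (5.16) are of order $n^{dG/(d+4)}$, because

$$n^G\Bigl(\sum_{j=1}^{d}h_{m(j)}^2\Bigr)^{2G} \sim n^Gn^{-\frac{4G}{d+4}} = n^{\frac{dG}{d+4}}, \qquad \Bigl(\frac{1}{h_{m(1)}\cdots h_{m(d)}}\Bigr)^G \sim \bigl(n^{\frac{d}{d+4}}\bigr)^G = n^{\frac{dG}{d+4}},$$

and hence

$$M_n^2\,n^{-\frac{2dG}{d+4}}\,n^{\frac{dG}{d+4}} = \Bigl(M_n\,n^{-\frac{dG}{2(d+4)}}\Bigr)^2 \to 0 \quad\Longleftrightarrow\quad M_n = o\bigl(n^{\frac{dG}{2(d+4)}}\bigr).$$

For instance, with $d = 1$ and $G = 1$ the candidate set may grow like $o(n^{1/10})$, and with $d = 2$ and $G = 2$ like $o(n^{1/3})$.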

5.3.2 Useful Preliminary Results

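In this subsection, we provide a lemma that is useful for proving Theorem 5.1. Let

$$F_m = \mathrm{diag}\left(\frac{k_{h_m}(0)}{\sum_{j\ne1}k_{h_m}(x_j-x_1)}, \frac{k_{h_m}(0)}{\sum_{j\ne2}k_{h_m}(x_j-x_2)}, \ldots, \frac{k_{h_m}(0)}{\sum_{j\ne n}k_{h_m}(x_j-x_n)}\right)$$

and

$$B_m = I_n + F_m = \mathrm{diag}\left(\frac{\sum_{j=1}^{n}k_{h_m}(x_j-x_1)}{\sum_{j\ne1}k_{h_m}(x_j-x_1)}, \frac{\sum_{j=1}^{n}k_{h_m}(x_j-x_2)}{\sum_{j\ne2}k_{h_m}(x_j-x_2)}, \ldots, \frac{\sum_{j=1}^{n}k_{h_m}(x_j-x_n)}{\sum_{j\ne n}k_{h_m}(x_j-x_n)}\right).$$

Then $\tilde K_m$ and $K_m$ satisfy the following relationship:

$$\tilde K_m - I_n = B_m(K_m - I_n) = (I_n + F_m)(K_m - I_n). \qquad (5.17)$$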
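Because $B_m = I_n + F_m$ is diagonal, (5.17) states that row $i$ of $\tilde K_m - I_n$ equals row $i$ of $K_m - I_n$ multiplied by one plus the $i$th diagonal element of $F_m$. The sketch below checks this entrywise for scalar covariates and a Gaussian kernel. Both are illustrative assumptions, and the function name is hypothetical. The function returns the largest absolute discrepancy, which should be at rounding-error level.

```python
import math


def gauss(u):
    """Standard normal density used as the kernel (illustrative choice)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def check_relation_517(x, h, k=gauss):
    """Largest entrywise gap between Ktilde - I and (I + F)(K - I) for scalar covariates x.

    K is the NW matrix, Ktilde the leave-one-out NW matrix and
    F = diag(k(0) / sum_{l != i} k(x_l - x_i)); the 1/h factor cancels in every ratio.
    """
    n = len(x)
    kern = [[k((x[j] - x[i]) / h) for j in range(n)] for i in range(n)]
    full = [sum(row) for row in kern]               # sum over all l
    loo = [full[i] - kern[i][i] for i in range(n)]  # sum over l != i
    worst = 0.0
    for i in range(n):
        f_ii = kern[i][i] / loo[i]                  # i-th diagonal element of F
        for j in range(n):
            eye = 1.0 if i == j else 0.0
            k_ij = kern[i][j] / full[i]
            kt_ij = 0.0 if i == j else kern[i][j] / loo[i]
            # B = I + F is diagonal, so row i of B(K - I) is row i of (K - I) times (1 + F_ii).
            gap = abs((kt_ij - eye) - (1.0 + f_ii) * (k_ij - eye))
            worst = max(worst, gap)
    return worst
```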

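Lemma 5.1. If Conditions (5.4), (5.6) and (5.8)-(5.10) hold, we obtain

$$\max_{1\le m\le M_n}\lambda(K_m) = O(1), \quad \text{a.s.}, \qquad (5.18)$$

$$\lambda(\Omega) = O(1), \quad \text{a.s.}, \qquad (5.19)$$

$$\max_{1\le m\le M_n}\lambda(B_m) = 1 + O(b_n), \quad \text{a.s.}, \qquad (5.20)$$

$$\max_{1\le m\le M_n}\lambda(F_m) = O(b_n), \quad \text{a.s.}, \qquad (5.21)$$

$$\max_{1\le m\le M_n}\mathrm{tr}(F_m) = O(nb_n), \quad \text{a.s.}, \qquad (5.22)$$

$$\max_{1\le m\le M_n}\mathrm{tr}(K_m) = O(nb_n), \quad \text{a.s.}, \qquad (5.23)$$

and

$$\max_{1\le m,t\le M_n}\mathrm{tr}(K_m'K_t) = O(nb_n), \quad \text{a.s.}, \qquad (5.24)$$

where $\lambda(\cdot)$ denotes the maximum singular value of a matrix.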

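Proof. By Riesz's inequality (Hardy et al., 1952; Speckman, 1988) and Condition (5.6), it can be shown that

$$\max_{1\le m\le M_n}\lambda(K_m) \le \max_{1\le m\le M_n}\Bigl(\max_{1\le i\le n}\sum_{j=1}^{n}|K_{m,ij}|\,\max_{1\le j\le n}\sum_{i=1}^{n}|K_{m,ij}|\Bigr)^{1/2} = O(1), \quad \text{a.s.},$$

which is (5.18). Condition (5.4) bounds the error variances uniformly: by Lyapunov's inequality, $\sigma_i^2 \le \{E(e_i^{4G})\}^{1/(2G)} \le \kappa^{1/(2G)}$ for all $i$. Since $\Omega$ is diagonal, $\lambda(\Omega) = \max_{1\le i\le n}\sigma_i^2$, so (5.19) is true. By Condition (5.8), we have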

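$$\begin{aligned}
\max_{1\le m\le M_n}\lambda(B_m)
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} \\
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{1}{1 - \frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}} \\
&\le \frac{1}{1 - \max_{1\le m\le M_n}\max_{1\le i,j\le n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}} \\
&= 1 + O(b_n), \quad \text{a.s.},
\end{aligned}$$

which is (5.20). Similarly, by Conditions (5.6) and (5.8), we have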

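$$\begin{aligned}
\max_{1\le m\le M_n}\lambda(F_m)
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\left\{\frac{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} - 1\right\} \\
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} \\
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\,\frac{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} \\
&= \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\,\frac{1}{1 - \frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}} \\
&\le \max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\,\frac{1}{1 - \max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}} \\
&\le \max_{1\le m\le M_n}\max_{1\le i,j\le n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\,\frac{1}{1 - \max_{1\le m\le M_n}\max_{1\le i,j\le n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}} \\
&= O(b_n)\{1 + O(b_n)\} \\
&= O(b_n), \quad \text{a.s.},
\end{aligned}$$

$$\max_{1\le m\le M_n}\mathrm{tr}(F_m) = \max_{1\le m\le M_n}\sum_{i=1}^{n}\frac{k_{h_m}(0)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} \le n\max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l\ne i}k_{h_m}(x_l - x_i)} = O(nb_n), \quad \text{a.s.},$$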

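$$\max_{1\le m\le M_n}\mathrm{tr}(K_m) = \max_{1\le m\le M_n}\sum_{i=1}^{n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)} \le n\max_{1\le m\le M_n}\max_{1\le i\le n}\frac{k_{h_m}(0)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)} = O(nb_n), \quad \text{a.s.},$$

and

$$\begin{aligned}
\max_{1\le m,t\le M_n}\mathrm{tr}(K_m'K_t)
&= \max_{1\le m,t\le M_n}\sum_{i=1}^{n}\sum_{j=1}^{n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\,\frac{k_{h_t}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_t}(x_l - x_i)} \\
&\le \max_{1\le m,t\le M_n}\sum_{i=1}^{n}\Bigl(\max_{1\le i\le n}\sum_{j=1}^{n}\frac{k_{h_m}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_m}(x_l - x_i)}\Bigr)\Bigl(\max_{1\le i,j\le n}\frac{k_{h_t}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_t}(x_l - x_i)}\Bigr) \\
&= n\Bigl(\max_{1\le m\le M_n}\max_{1\le i\le n}\sum_{j=1}^{n}K_{m,ij}\Bigr)\Bigl(\max_{1\le t\le M_n}\max_{1\le i,j\le n}\frac{k_{h_t}(x_j - x_i)}{\sum_{l=1}^{n}k_{h_t}(x_l - x_i)}\Bigr) \\
&= nO(1)O(b_n) \\
&= O(nb_n), \quad \text{a.s.},
\end{aligned}$$

which are equations (5.21)-(5.24), respectively.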
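The bound used for (5.18), $\lambda(K_m) \le (\max_i\sum_j|K_{m,ij}| \cdot \max_j\sum_i|K_{m,ij}|)^{1/2}$, can be checked numerically on a small example. The sketch below uses a Gaussian kernel, scalar covariates and hypothetical function names. It estimates the largest singular value by power iteration on $K_m'K_m$; this estimate approaches the true value from below, and the sketch compares it with the bound. Every row of a Nadaraya-Watson matrix sums to one, so $K_m$ maps the vector of ones to itself and its largest singular value is at least one.

```python
import math


def gauss(u):
    """Standard normal density used as the kernel (illustrative choice)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def nw_matrix(x, h, k=gauss):
    """Nadaraya-Watson matrix for scalar covariates: kernel weights normalised row by row."""
    rows = [[k((xj - xi) / h) for xj in x] for xi in x]
    return [[v / sum(r) for v in r] for r in rows]


def max_singular_value(A, iters=500):
    """Estimate the largest singular value of A by power iteration on A'A (a lower bound)."""
    n_rows, n_cols = len(A), len(A[0])
    v = [1.0 / math.sqrt(n_cols)] * n_cols
    for _ in range(iters):
        Av = [sum(a * b for a, b in zip(row, v)) for row in A]
        AtAv = [sum(A[i][j] * Av[i] for i in range(n_rows)) for j in range(n_cols)]
        norm = math.sqrt(sum(t * t for t in AtAv))
        v = [t / norm for t in AtAv]
    Av = [sum(a * b for a, b in zip(row, v)) for row in A]
    return math.sqrt(sum(t * t for t in Av))


def schur_bound(A):
    """sqrt(max absolute row sum * max absolute column sum), the Riesz-type bound behind (5.18)."""
    row_max = max(sum(abs(a) for a in row) for row in A)
    col_max = max(sum(abs(A[i][j]) for i in range(len(A))) for j in range(len(A[0])))
    return math.sqrt(row_max * col_max)
```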

5.3.3 Proofs of Theorems 5.1 and 5.2

We begin our proof by assuming that Conditions (5.12) and (5.13) hold, i.e.,

$$\sup_{w\in\mathcal{H}_n}\left|\frac{\tilde R_n(w)}{R_n(w)} - 1\right| \xrightarrow{\text{a.s.}} 0 \qquad (5.25)$$

and

$$\lim_{n\to\infty}\max_{1\le m\le M_n}\lambda(\tilde K_m) \le \kappa_1 < \infty, \quad \text{a.s.} \qquad (5.26)$$

Part 1 provides the proof of Theorem 5.2, which states that $\hat\mu(\hat w)$ is asymptotically optimal in the sense of (5.11) when (5.12) and (5.13) are fulfilled. In Part 2, we show that Conditions (5.12) and (5.13) are implied by the weaker Conditions (5.7)-(5.10).

Part 1.

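Let $\tilde\xi_n = \inf_{w\in\mathcal{H}_n}\tilde R_n(w)$. Using Conditions (5.12) and (5.5) together with equation (A.1) in Zhang et al. (2013), we obtain

$$M_n\tilde\xi_n^{-2G}\sum_{m=1}^{M_n}\tilde R_n(w_m^0)^G \xrightarrow{\text{a.s.}} 0. \qquad (5.27)$$

Our next task is to verify that

$$\frac{\tilde L_n(\hat w)}{\inf_{w\in\mathcal{H}_n}\tilde L_n(w)} \xrightarrow{p} 1. \qquad (5.28)$$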

Now, observe that

$$CV_n(w) = \tilde L_n(w) + \|e\|^2 + 2\mu'\tilde A'(w)e - 2e'\tilde K(w)e.$$
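The decomposition of $CV_n(w)$ follows from $y = \mu + e$ and the definitions of $\tilde A(w)$ and $\tilde L_n(w)$, a step that may be worth spelling out:

$$y - \tilde\mu(w) = \{I_n - \tilde K(w)\}(\mu + e) = \tilde A(w)\mu + e - \tilde K(w)e, \qquad \tilde\mu(w) - \mu = -\tilde A(w)\mu + \tilde K(w)e,$$

so that

$$CV_n(w) = \|e - \{\tilde\mu(w) - \mu\}\|^2 = \|e\|^2 + \tilde L_n(w) - 2e'\{\tilde\mu(w) - \mu\} = \|e\|^2 + \tilde L_n(w) + 2\mu'\tilde A'(w)e - 2e'\tilde K(w)e.$$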

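As $\|e\|^2$ does not depend on $w$, to prove (5.28) it suffices to show that

$$\sup_{w\in\mathcal{H}_n}\bigl|\mu'\tilde A'(w)e\bigr|/\tilde R_n(w) \xrightarrow{p} 0, \qquad (5.29)$$

$$\sup_{w\in\mathcal{H}_n}\bigl|e'\tilde K(w)e\bigr|/\tilde R_n(w) \xrightarrow{p} 0, \qquad (5.30)$$

and

$$\sup_{w\in\mathcal{H}_n}\bigl|\tilde L_n(w)/\tilde R_n(w) - 1\bigr| \xrightarrow{p} 0. \qquad (5.31)$$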

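For convenience, we assume that $X$ is non-random. This does not invalidate our proof, because all our technical conditions except (5.4) hold almost surely. Following the proof of (A.5) in Zhang et al. (2013), we can obtain (5.29) directly. Using (5.27), Chebyshev's inequality and Theorem 2 of Whittle (1960), it can be shown that for any $\delta > 0$,

$$\begin{aligned}
\Pr\Bigl\{\sup_{w\in\mathcal{H}_n}\bigl|e'\tilde K(w)e\bigr|/\tilde R_n(w) > \delta\Bigr\}
&\le \sum_{m=1}^{M_n}\Pr\bigl\{\bigl|e'\tilde K(w_m^0)e\bigr| > \delta\tilde\xi_n\bigr\} \\
&\le \sum_{m=1}^{M_n}\delta^{-2G}\tilde\xi_n^{-2G}E\bigl\{(\Omega^{-1/2}e)'\Omega^{1/2}\tilde K(w_m^0)\Omega^{1/2}(\Omega^{-1/2}e)\bigr\}^{2G} \\
&\le C_1\delta^{-2G}\tilde\xi_n^{-2G}\sum_{m=1}^{M_n}\bigl[\mathrm{trace}\{\Omega^{1/2}\tilde K(w_m^0)\Omega\tilde K'(w_m^0)\Omega^{1/2}\}\bigr]^G \\
&\le C_1\delta^{-2G}\tilde\xi_n^{-2G}\sum_{m=1}^{M_n}\lambda(\Omega)^G\bigl[\mathrm{trace}\{\tilde K(w_m^0)\tilde K'(w_m^0)\Omega\}\bigr]^G \\
&\le C_2\delta^{-2G}\tilde\xi_n^{-2G}\sum_{m=1}^{M_n}\tilde R_n(w_m^0)^G \to 0,
\end{aligned} \qquad (5.32)$$

where $C_1$ and $C_2$ are constants. Expression (5.30) is implied by (5.32). Note that

$$\bigl|\tilde L_n(w) - \tilde R_n(w)\bigr| = \bigl|\|\tilde K(w)e\|^2 - \mathrm{trace}\{\tilde K'(w)\tilde K(w)\Omega\} - 2\mu'\tilde A'(w)\tilde K(w)e\bigr|.$$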

Hence, to prove (5.31) it suffices to show that

$$\sup_{w\in\mathcal{H}_n}\bigl|\mu'\tilde A'(w)\tilde K(w)e\bigr|/\tilde R_n(w) \xrightarrow{p} 0 \qquad (5.33)$$

and

$$\sup_{w\in\mathcal{H}_n}\bigl|\|\tilde K(w)e\|^2 - \mathrm{trace}\{\tilde K'(w)\tilde K(w)\Omega\}\bigr|/\tilde R_n(w) \xrightarrow{p} 0. \qquad (5.34)$$

By (5.19), (5.27), (5.4) and (5.13), (5.33) and (5.34) can be proved along the lines of the proofs of (A.4) and (A.5) in Wan et al. (2010). This completes the proof of (5.28). Likewise, using (5.18) and the technique for deriving (5.31), we have

$$\sup_{w\in\mathcal{H}_n}|L_n(w)/R_n(w) - 1| \xrightarrow{p} 0. \qquad (5.35)$$

Now, by (5.28), (5.31), (5.35) and (5.12), and following the proof of (A.16) in Zhang et al. (2013), we can obtain (5.11).

Part 2.

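Given the proof of Theorem 5.2 shown in Part 1, to prove Theorem 5.1 we only need to prove that Conditions (5.12) and (5.13) hold when Conditions (5.7)-(5.10) are satisfied.

From (5.18) and (5.20), there exists a fixed number $\kappa_1 < \infty$ such that

$$\begin{aligned}
\lim_{n\to\infty}\max_{1\le m\le M_n}\lambda(\tilde K_m)
&= \lim_{n\to\infty}\max_{1\le m\le M_n}\lambda\{B_m(K_m - I_n) + I_n\} \\
&\le \lim_{n\to\infty}\max_{1\le m\le M_n}\bigl[\lambda(B_m)\{\lambda(K_m) + \lambda(I_n)\} + \lambda(I_n)\bigr] \\
&\le \kappa_1 < \infty, \quad \text{a.s.}
\end{aligned}$$

This leads to Condition (5.13). By (5.17), we obtain

$$\begin{aligned}
I_n - \tilde K(w) &= \sum_{m=1}^{M_n}w_m(I_n - \tilde K_m) \\
&= \sum_{m=1}^{M_n}w_m(I_n + F_m)(I_n - K_m) \\
&= I_n - K(w) + \sum_{m=1}^{M_n}w_mF_m(I_n - K_m),
\end{aligned} \qquad (5.36)$$

and

$$\tilde K_m = (I_n + F_m)(K_m - I_n) + I_n = K_m + F_mK_m - F_m. \qquad (5.37)$$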

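Also, by (5.18), (5.21), (5.7) and (5.10), we have

$$\begin{aligned}
\xi_n^{-1}\sup_{w\in\mathcal{H}_n}\Bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\Bigr\|^2
&= \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\Bigl|\sum_{m=1}^{M_n}\sum_{t=1}^{M_n}w_mw_t\mu'(I_n - K_m)'F_m'F_t(I_n - K_t)\mu\Bigr| \\
&\le \xi_n^{-1}\max_{1\le m,t\le M_n}\bigl|\mu'(I_n - K_m)'F_m'F_t(I_n - K_t)\mu\bigr| \\
&= \xi_n^{-1}\max_{1\le m,t\le M_n}\left|\mu'\left\{\frac{(I_n - K_m)'F_m'F_t(I_n - K_t) + (I_n - K_t)'F_t'F_m(I_n - K_m)}{2}\right\}\mu\right| \\
&\le \xi_n^{-1}\max_{1\le m,t\le M_n}\lambda\{(I_n - K_m)'F_m'F_t(I_n - K_t)\}\,|\mu'\mu| \\
&\le \xi_n^{-1}\max_{1\le m\le M_n}\lambda^2(I_n - K_m)\max_{1\le m\le M_n}\lambda^2(F_m)\,|\mu'\mu| \\
&\le \xi_n^{-1}\max_{1\le m\le M_n}\{\lambda(I_n) + \lambda(K_m)\}^2\max_{1\le m\le M_n}\lambda^2(F_m)\,|\mu'\mu| \\
&= \xi_n^{-1}\{1 + O(1)\}^2O(b_n^2)O(n) \\
&= \xi_n^{-1}O(nb_n^2) \\
&= o(1), \quad \text{a.s.}
\end{aligned} \qquad (5.38)$$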

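Furthermore, by (5.19), (5.21) and (5.24), and recognising that $F_t$ and $\Omega$ are diagonal matrices and that the diagonal elements of $K_m'F_tK_t$ are non-negative, we obtain

$$\begin{aligned}
\max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_tK_t\Omega)|
&= \max_{1\le m,t\le M_n}|\mathrm{tr}\{\mathrm{diag}(K_m'F_tK_t)\Omega\}| \\
&\le \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_m'F_tK_t) \\
&= \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m'F_t) \\
&\le \lambda(\Omega)\max_{1\le t\le M_n}\lambda(F_t)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m') \\
&= O(1)O(b_n)O(nb_n) \\
&= O(nb_n^2), \quad \text{a.s.}
\end{aligned} \qquad (5.39)$$

Similarly, by (5.19) and (5.21)-(5.24), we obtain

$$\max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_t\Omega)| \le \lambda(\Omega)\max_{1\le t\le M_n}\lambda(F_t)\max_{1\le m\le M_n}\mathrm{tr}(K_m) = O(1)O(b_n)O(nb_n) = O(nb_n^2), \quad \text{a.s.}, \qquad (5.40)$$

$$\begin{aligned}
\max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mK_t\Omega)|
&= \max_{1\le m,t\le M_n}|\mathrm{tr}\{\mathrm{diag}(K_m'F_mK_t)\Omega\}| \\
&\le \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_m'F_mK_t) \\
&= \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m'F_m) \\
&\le \lambda(\Omega)\max_{1\le m\le M_n}\lambda(F_m)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m') \\
&= O(1)O(b_n)O(nb_n) \\
&= O(nb_n^2), \quad \text{a.s.},
\end{aligned} \qquad (5.41)$$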

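$$\begin{aligned}
\max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mF_tK_t\Omega)|
&= \max_{1\le m,t\le M_n}|\mathrm{tr}\{\mathrm{diag}(K_m'F_mF_tK_t)\Omega\}| \\
&\le \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_m'F_mF_tK_t) \\
&= \lambda(\Omega)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m'F_mF_t) \\
&\le \lambda(\Omega)\max_{1\le m\le M_n}\lambda^2(F_m)\max_{1\le m,t\le M_n}\mathrm{tr}(K_tK_m') \\
&= O(1)O(b_n^2)O(nb_n) \\
&= O(nb_n^3), \quad \text{a.s.},
\end{aligned} \qquad (5.42)$$

$$\max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mF_t\Omega)| \le \lambda(\Omega)\max_{1\le m\le M_n}\lambda^2(F_m)\max_{1\le m\le M_n}\mathrm{tr}(K_m) = O(1)O(b_n^2)O(nb_n) = O(nb_n^3), \quad \text{a.s.}, \qquad (5.43)$$

$$\max_{1\le m,t\le M_n}|\mathrm{tr}(F_mK_t\Omega)| \le \lambda(\Omega)\max_{1\le m\le M_n}\lambda(F_m)\max_{1\le t\le M_n}\mathrm{tr}(K_t) = O(1)O(b_n)O(nb_n) = O(nb_n^2), \quad \text{a.s.}, \qquad (5.44)$$

$$\max_{1\le m,t\le M_n}|\mathrm{tr}(F_mF_tK_t\Omega)| \le \lambda(\Omega)\max_{1\le m\le M_n}\lambda^2(F_m)\max_{1\le t\le M_n}\mathrm{tr}(K_t) = O(1)O(b_n^2)O(nb_n) = O(nb_n^3), \quad \text{a.s.}, \qquad (5.45)$$

and

$$\max_{1\le m,t\le M_n}|\mathrm{tr}(F_mF_t\Omega)| \le \lambda(\Omega)\max_{1\le m\le M_n}\lambda(F_m)\max_{1\le t\le M_n}\mathrm{tr}(F_t) = O(1)O(b_n)O(nb_n) = O(nb_n^2), \quad \text{a.s.} \qquad (5.46)$$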

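By (5.37) and (5.39)-(5.46), we have

$$\begin{aligned}
&\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&= \sup_{w\in\mathcal{H}_n}\Bigl|\sum_{m=1}^{M_n}\sum_{t=1}^{M_n}w_mw_t\bigl\{\mathrm{tr}(\tilde K_m'\tilde K_t\Omega) - \mathrm{tr}(K_m'K_t\Omega)\bigr\}\Bigr| \\
&\le \sup_{w\in\mathcal{H}_n}\sum_{m=1}^{M_n}\sum_{t=1}^{M_n}w_mw_t\bigl|\mathrm{tr}(\tilde K_m'\tilde K_t\Omega) - \mathrm{tr}(K_m'K_t\Omega)\bigr| \\
&\le \max_{1\le m,t\le M_n}\bigl|\mathrm{tr}(\tilde K_m'\tilde K_t\Omega) - \mathrm{tr}(K_m'K_t\Omega)\bigr| \\
&= \max_{1\le m,t\le M_n}\bigl|\mathrm{tr}\{(\tilde K_m'\tilde K_t - K_m'K_t)\Omega\}\bigr| \\
&= \max_{1\le m,t\le M_n}\bigl|\mathrm{tr}[\{(K_m' + K_m'F_m - F_m)(K_t + F_tK_t - F_t) - K_m'K_t\}\Omega]\bigr| \\
&= \max_{1\le m,t\le M_n}\bigl|\mathrm{tr}(K_m'F_tK_t\Omega - K_m'F_t\Omega + K_m'F_mK_t\Omega + K_m'F_mF_tK_t\Omega \\
&\qquad - K_m'F_mF_t\Omega - F_mK_t\Omega - F_mF_tK_t\Omega + F_mF_t\Omega)\bigr| \\
&\le \max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_tK_t\Omega)| + \max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_t\Omega)| + \max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mK_t\Omega)| \\
&\quad + \max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mF_tK_t\Omega)| + \max_{1\le m,t\le M_n}|\mathrm{tr}(K_m'F_mF_t\Omega)| + \max_{1\le m,t\le M_n}|\mathrm{tr}(F_mK_t\Omega)| \\
&\quad + \max_{1\le m,t\le M_n}|\mathrm{tr}(F_mF_tK_t\Omega)| + \max_{1\le m,t\le M_n}|\mathrm{tr}(F_mF_t\Omega)| \\
&= O(nb_n^2) + O(nb_n^2) + O(nb_n^2) + O(nb_n^3) + O(nb_n^3) + O(nb_n^2) + O(nb_n^3) + O(nb_n^2) \\
&= O(nb_n^2), \quad \text{a.s.}
\end{aligned} \qquad (5.47)$$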

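Finally, by (5.2), (5.36), (5.38), (5.47) and Condition (5.10), we obtain

$$\begin{aligned}
\sup_{w\in\mathcal{H}_n}\left|\frac{\tilde R_n(w)}{R_n(w)} - 1\right|
&= \sup_{w\in\mathcal{H}_n}\left|\frac{\tilde R_n(w) - R_n(w)}{R_n(w)}\right| \\
&\le \sup_{w\in\mathcal{H}_n}\left|\frac{\|(I_n - \tilde K(w))\mu\|^2 - \|(I_n - K(w))\mu\|^2}{R_n(w)}\right| + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&= \sup_{w\in\mathcal{H}_n}\left|\frac{\bigl\|\{I_n - K(w) + \sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\}\mu\bigr\|^2 - \|(I_n - K(w))\mu\|^2}{R_n(w)}\right| \\
&\quad + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&\le \sup_{w\in\mathcal{H}_n}\frac{\bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\bigr\|^2}{R_n(w)} + 2\sup_{w\in\mathcal{H}_n}\left|\frac{\mu'(I_n - K(w))'\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu}{R_n(w)}\right| \\
&\quad + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&\le \sup_{w\in\mathcal{H}_n}\frac{\bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\bigr\|^2}{R_n(w)} + 2\sup_{w\in\mathcal{H}_n}\frac{\|(I_n - K(w))\mu\|\,\bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\bigr\|}{R_n(w)} \\
&\quad + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&\le \sup_{w\in\mathcal{H}_n}\frac{\bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\bigr\|^2}{R_n(w)} + 2\sup_{w\in\mathcal{H}_n}\frac{\bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\bigr\|}{R_n^{1/2}(w)} \\
&\quad + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&\le \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\Bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\Bigr\|^2 + 2\xi_n^{-1/2}\sup_{w\in\mathcal{H}_n}\Bigl\|\sum_{m=1}^{M_n}w_mF_m(I_n - K_m)\mu\Bigr\| \\
&\quad + \xi_n^{-1}\sup_{w\in\mathcal{H}_n}\bigl|\mathrm{tr}\{\tilde K'(w)\tilde K(w)\Omega\} - \mathrm{tr}\{K'(w)K(w)\Omega\}\bigr| \\
&= \xi_n^{-1}O(nb_n^2) + 2\{\xi_n^{-1}O(nb_n^2)\}^{1/2} + \xi_n^{-1}O(nb_n^2) \\
&= o(1), \quad \text{a.s.},
\end{aligned}$$

which is Condition (5.12).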

This completes the proof of Theorem 5.1.


References

ANDO, T. & LI, K. C. (2014). A model-averaging approach for high-dimensional regression. Journal of the American Statistical Association 109, 254–265.

FAN, J. & LV, J. (2008). Sure independence screening for ultrahigh dimensional feature space. Journal of the Royal Statistical Society (Series B) 70, 849–911.

FERGUSON, T. S. (1996). A Course in Large Sample Theory, vol. 49. Chapman & Hall: London.

HANSEN, B. E. & RACINE, J. S. (2012). Jackknife model averaging. Journal of Econometrics 167, 38–46.

HARDLE, W., LIANG, H. & GAO, J. (2000). Partially Linear Models. Springer: Berlin.

HARDY, G. H., LITTLEWOOD, J. E. & POLYA, G. (1952). Inequalities. Cambridge University Press: New York.

HE, X., WANG, L. & HONG, H. G. (2013). Quantile-adaptive model-free variable screening for high-dimensional heterogeneous data. The Annals of Statistics 41, 342–369.

KUIPER, R. M., HOIJTINK, H. & SILVAPULLE, M. (2011). An Akaike-type information criterion for model selection under inequality constraints. Biometrika 98, 495–501.

LI, Q. & RACINE, J. S. (2007). Nonparametric Econometrics: Theory and Practice. Princeton University Press: Princeton.

LIU, Q. & OKUI, R. (2013). Heteroskedasticity-robust Cp model averaging. Econometrics Journal, 463–472.

LU, X. & SU, L. (2015). Jackknife model averaging for quantile regressions. Journal of Econometrics 188, 40–58.

NADARAYA, E. A. (1964). On estimating regression. Theory of Probability and Its Applications 9, 141–142.

SERFLING, R. J. (1980). Approximation Theorems of Mathematical Statistics, vol. 162. John Wiley & Sons.

SPECKMAN, P. (1988). Kernel smoothing in partially linear models. Journal of the Royal Statistical Society (Series B) 50, 413–436.

VAN DER VAART, A. W. & WELLNER, J. A. (1996). Weak Convergence and Empirical Processes with Application to Statistics. Springer: Berlin.

WAN, A. T. K., ZHANG, X. & ZOU, G. (2010). Least squares model averaging by Mallows criterion. Journal of Econometrics 156, 277–283.

WATSON, G. S. (1964). Smooth regression analysis. Sankhya: The Indian Journal of Statistics (Series A) 26, 359–372.

WHITE, H. (1982). Maximum likelihood estimation of misspecified models. Econometrica 50, 1–25.

WHITTLE, P. (1960). Bounds for the moments of linear and quadratic forms in independent variables. Theory of Probability and Its Applications 5, 302–305.

ZHANG, X., WAN, A. T. K. & ZOU, G. (2013). Model averaging by jackknife criterion in models with dependent data. Journal of Econometrics 174, 82–94.
