Chapter 5: Continuous Random Variables


CIS 2033 based on Dekking et al. A Modern Introduction to Probability and Statistics. 2007

Instructor Longin Jan Latecki


Probability Density Function of X

A random variable (RV) X is continuous if for some function ƒ: R → R and for any numbers a and b with a ≤ b,

$$P(a \le X \le b) = \int_a^b f(x)\,dx$$

The function ƒ has to satisfy ƒ(x) ≥ 0 for all x and $\int_{-\infty}^{\infty} f(x)\,dx = 1$.

For both continuous and discrete RVs, probabilities take values in [0,1]. The pmf of a discrete RV is a map p_X: R → [0,1] with P(X = a) = p_X(a), while for a continuous RV P(X = a) = P(a ≤ X ≤ a) = 0. The density at a instead describes the probability near a, as the following approximation shows.

To interpret the probability density function at a point a, take a small ε > 0 and consider the interval [a − ε, a + ε]. The probability of this interval is approximately the area of a box under the curve with width 2ε and height ƒ(a), that is, 2ε · ƒ(a). The approximation becomes more precise as ε approaches zero. At ε = 0 the box has no width, which is why P(X = a) = 0. This is shown through the following equation.

$$P(a - \varepsilon \le X \le a + \varepsilon) = \int_{a-\varepsilon}^{a+\varepsilon} f(x)\,dx \approx 2\varepsilon\, f(a)$$
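The approximation can be checked numerically. The sketch below assumes a hypothetical density ƒ(x) = 3x² on [0, 1] (chosen for illustration, not taken from the slides), whose distribution function is F(x) = x³. It compares the exact interval probability with the box area 2ε · ƒ(a) as ε shrinks.

```python
def f(x):
    """Hypothetical density f(x) = 3x^2 on [0, 1] (an assumption for illustration)."""
    return 3 * x**2 if 0 <= x <= 1 else 0.0


def F(x):
    """Distribution function of the density above: F(x) = x^3 on [0, 1]."""
    if x < 0:
        return 0.0
    if x > 1:
        return 1.0
    return x**3


def box_ratio(a, eps):
    """Ratio of the exact P(a - eps <= X <= a + eps) to the box area 2*eps*f(a)."""
    exact = F(a + eps) - F(a - eps)
    return exact / (2 * eps * f(a))


# The ratio should approach 1 as eps shrinks.
for eps in (0.1, 0.01, 0.001):
    print(eps, box_ratio(0.5, eps))
```

For this particular density the ratio equals 1 + ε²/(3a²), so the error of the box approximation vanishes quadratically in ε.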

Discrete: no density. Continuous: no mass.

Both: cumulative distribution function Ƒ(a) = P(X ≤ a)

P(a < X ≤ b) = P(X ≤ b) – P(X ≤ a) = Ƒ(b) – Ƒ(a)

How the Distribution Function relates to the Density Function:
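For a continuous random variable with density ƒ, the standard relation between the two functions can be written as the following pair of equations (a sketch in LaTeX notation, with the derivative holding wherever ƒ is continuous):

```latex
F(b) = \int_{-\infty}^{b} f(x)\,dx
\qquad\text{and}\qquad
f(x) = \frac{d}{dx} F(x)
```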

Important facts:

Uniform Distribution U(α,β)

• A continuous RV has a uniform distribution on the interval [α,β] if its pdf ƒ is given by

ƒ(x) = 0 if x is not in [α,β], and ƒ(x) = 1/(β − α) for α ≤ x ≤ β

This means that the density is constant on the interval from α to β, so subintervals of equal length are equally likely, and the density is zero outside the interval.

• The cumulative distribution is given by F(x) = 0 if x < α, F(x) = 1 if x > β, and F(x) = (x − α)/(β − α) for α ≤ x ≤ β

• If U is standard uniform, i.e., U(0,1), then F(x) = P(U ≤ x) = x for every x in [0,1].
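The pdf and distribution function of U(α,β) translate directly into code. The following is a minimal sketch. The function names `uniform_pdf` and `uniform_cdf` are illustrative choices, not from the slides.

```python
def uniform_pdf(x, alpha, beta):
    """Density of U(alpha, beta): constant 1/(beta - alpha) on [alpha, beta], 0 elsewhere."""
    return 1.0 / (beta - alpha) if alpha <= x <= beta else 0.0


def uniform_cdf(x, alpha, beta):
    """Distribution function of U(alpha, beta)."""
    if x < alpha:
        return 0.0
    if x > beta:
        return 1.0
    return (x - alpha) / (beta - alpha)


# For U(0, 1) the distribution function is the identity on [0, 1].
print(uniform_cdf(0.3, 0, 1))

# P(3 < X <= 5) for X ~ U(2, 7), computed as F(5) - F(3).
print(uniform_cdf(5, 2, 7) - uniform_cdf(3, 2, 7))
```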

Exponential Distribution Exp(λ)

Intuitively: a continuous version of the geometric distribution.

A continuous random variable has an exponential distribution with parameter λ if its probability density function ƒ is given by

$$f(x) = \lambda e^{-\lambda x} \quad \text{for } x \ge 0$$

The distribution function F of an Exp(λ) distribution is given by

$$F(a) = 1 - e^{-\lambda a} \quad \text{for } a \ge 0$$

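$$P(X > s + t \mid X > s) = \frac{P(X > s + t)}{P(X > s)} = \frac{e^{-\lambda(s+t)}}{e^{-\lambda s}} = e^{-\lambda t} = P(X > t)$$

This is the memoryless property: given that we have already waited s time units, the probability of waiting at least t more is the same as it was at the start. In effect, s becomes the new origin from which t is measured.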
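The identity P(X > s + t | X > s) = P(X > t) can be verified numerically. This sketch uses arbitrary illustrative values for λ, s, and t. The helper names are assumptions made for the example.

```python
import math


def exp_survival(t, lam):
    """P(X > t) = e^(-lam * t) for X ~ Exp(lam) and t >= 0."""
    return math.exp(-lam * t)


def conditional_survival(s, t, lam):
    """P(X > s + t | X > s), computed from the definition of conditional probability."""
    return exp_survival(s + t, lam) / exp_survival(s, lam)


lam, s, t = 2.0, 1.5, 0.75  # arbitrary illustrative values
print(conditional_survival(s, t, lam))  # conditional probability after waiting s
print(exp_survival(t, lam))             # unconditional P(X > t); the two agree
```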

Exponential Distribution Exp(λ)

An RV X has an exponential distribution if its pdf is $f(x) = \lambda e^{-\lambda x}$ for x ≥ 0 and its CDF is $F(a) = 1 - e^{-\lambda a}$ for a ≥ 0.

The parameter λ is the inverse of the expected value of X, i.e., E(X) = 1/λ or λ = 1/E(X). If X is time in minutes, then λ is a frequency measured in min⁻¹.

For example, if arrivals occur every half a minute on average, then E(X) = 0.5 and λ = 2, meaning that they occur with a frequency (arrival rate) of 2 arrivals per minute. This λ has the same meaning as the parameter of the Poisson distribution.
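The arrival example can be worked through in a few lines. This sketch takes the mean waiting time of 0.5 minutes from the example and, as an added illustration, computes the probability that the next arrival occurs within one minute.

```python
import math

mean_wait = 0.5        # E(X) in minutes, from the example above
lam = 1.0 / mean_wait  # arrival rate in arrivals per minute


def exp_cdf(a, lam):
    """F(a) = 1 - e^(-lam * a) for a >= 0, and 0 for a < 0."""
    return 1.0 - math.exp(-lam * a) if a >= 0 else 0.0


p_within_one_minute = exp_cdf(1.0, lam)          # P(X <= 1) = 1 - e^(-2)
p_longer_than_one = 1.0 - p_within_one_minute    # P(X > 1) = e^(-2)
print(lam, p_within_one_minute, p_longer_than_one)
```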


How much time will elapse before an earthquake occurs in a given region? How long do we need to wait before a customer enters our shop? How long will it take before a call center receives the next phone call? How long will a piece of machinery work without breaking down? Questions such as these are often answered in probabilistic terms using the exponential distribution. All these questions concern the time we need to wait before a certain event occurs. If this waiting time is unknown, it is often appropriate to think of it as a random variable having an exponential distribution.

Roughly speaking, the time we need to wait before an event occurs has an exponential distribution if the probability that the event occurs during a certain time interval is proportional to the length of that time interval. More precisely, X has an exponential distribution if the conditional probability

$$P(t < X \le t + \Delta t \mid X > t)$$

is approximately proportional to the length Δt of the time interval [t, t + Δt] for any time instant t. In most practical situations this property is very realistic and this is the reason why the exponential distribution is so widely used to model waiting times.

From: http://www.statlect.com/ucdexp1.htm
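For the Exp(λ) distribution, the conditional probability described above can be worked out directly from the distribution function F(a) = 1 − e^(−λa). The derivation below is a sketch that uses the first-order approximation e^(−λΔt) ≈ 1 − λΔt for small Δt:

```latex
P(t < X \le t + \Delta t \mid X > t)
  = \frac{F(t + \Delta t) - F(t)}{1 - F(t)}
  = \frac{e^{-\lambda t} - e^{-\lambda (t + \Delta t)}}{e^{-\lambda t}}
  = 1 - e^{-\lambda \Delta t}
  \approx \lambda\, \Delta t
```

The result does not depend on t and is proportional to Δt, with proportionality constant λ.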

Pareto Distribution Par(α)

A continuous random variable has a Pareto distribution with parameter α > 0 if its probability density function ƒ is given by ƒ(x) = 0 if x < 1 and

$$f(x) = \frac{\alpha}{x^{\alpha+1}} \quad \text{for } x \ge 1$$

Used for estimating real-life situations such as
• the number of people whose income exceeded level x,
• city sizes, earthquake rupture areas, insurance claims, and sizes of commercial companies.

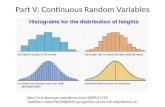
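Integrating the Pareto density α/x^(α+1) from 1 to x gives the distribution function F(x) = 1 − x^(−α) for x ≥ 1. The sketch below checks this closed form against a simple midpoint-rule integration. The value α = 2 and the helper `midpoint_integral` are illustrative assumptions.

```python
def pareto_pdf(x, alpha):
    """f(x) = alpha / x^(alpha + 1) for x >= 1, and 0 for x < 1."""
    return alpha / x ** (alpha + 1) if x >= 1 else 0.0


def pareto_cdf(x, alpha):
    """F(x) = 1 - x^(-alpha) for x >= 1, obtained by integrating the pdf from 1 to x."""
    return 1.0 - x ** (-alpha) if x >= 1 else 0.0


def midpoint_integral(g, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(g(lo + (i + 0.5) * h) for i in range(n))


alpha = 2.0  # illustrative parameter value
approx = midpoint_integral(lambda x: pareto_pdf(x, alpha), 1.0, 5.0)
print(approx, pareto_cdf(5.0, alpha))  # numerical integral vs. closed form
```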

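Normal Distribution N(μ,σ²)

A continuous random variable has a normal distribution (Gaussian distribution) with parameters μ and σ² > 0 if its probability density function ƒ is given by

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} \quad \text{for } -\infty < x < \infty$$

where μ is the mean and σ² is the variance (σ is the standard deviation). The distribution function is given by

$$F(a) = \int_{-\infty}^{a} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}\,dx \quad \text{for } -\infty < a < \infty$$

However, since ƒ does not have an elementary antiderivative, there is no explicit expression for F. The standard normal distribution N(0,1) has the density φ given below, and its distribution function, obtained similarly, is denoted by Φ (capital phi).

$$\phi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x^2} \quad \text{for } -\infty < x < \infty$$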

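Although the normal distribution function has no closed form, it can be evaluated numerically through the error function, using Φ(z) = ½(1 + erf(z/√2)). The sketch below assumes Python 3.8+ for `statistics.NormalDist`, which is used only as a cross-check. Note that `NormalDist` takes the standard deviation σ, not the variance σ².

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2); sigma is the standard deviation."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


def normal_cdf(a, mu=0.0, sigma=1.0):
    """F(a) written with the error function: 0.5 * (1 + erf((a - mu) / (sigma * sqrt(2))))."""
    return 0.5 * (1.0 + math.erf((a - mu) / (sigma * math.sqrt(2))))


print(normal_pdf(0.0))            # height of the standard normal density at 0
print(normal_cdf(1.0))            # Phi(1)
print(NormalDist(0, 1).cdf(1.0))  # library cross-check of Phi(1)
```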

Quantiles

Quantiles divide a distribution into portions that increase from left to right: the 0th percentile is at the far left and the 100th percentile is at the far right.

Let X be a continuous random variable and let p be a number between 0 and 1. The pth quantile or 100pth percentile of the distribution of X is the smallest number $q_p$ such that

$$F(q_p) = P(X \le q_p) = p$$

The median is the 50th percentile, $q_{0.5}$.

For continuous random variables $q_p$ is often easy to determine. If F is strictly increasing from 0 to 1 on some interval (which may be infinite on one or both sides), then

$$q_p = F^{\mathrm{inv}}(p),$$

where $F^{\mathrm{inv}}$ is the inverse of F.

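As an illustration of inverting F, consider the Exp(λ) distribution, whose distribution function is strictly increasing on [0, ∞). Solving 1 − e^(−λq) = p for q gives q_p = −ln(1 − p)/λ. The sketch below implements this inverse and checks that F(q_p) = p. The function names and the value λ = 2 are illustrative assumptions.

```python
import math


def exp_cdf(a, lam):
    """F(a) = 1 - e^(-lam * a) for a >= 0, and 0 for a < 0."""
    return 1.0 - math.exp(-lam * a) if a >= 0 else 0.0


def exp_quantile(p, lam):
    """Inverse of the Exp(lam) distribution function: q_p = -ln(1 - p) / lam for 0 <= p < 1."""
    if not 0 <= p < 1:
        raise ValueError("p must satisfy 0 <= p < 1")
    return -math.log(1.0 - p) / lam


lam = 2.0  # illustrative rate
median = exp_quantile(0.5, lam)  # equals ln(2) / lam
print(median, exp_cdf(median, lam))  # applying F to the median recovers 0.5
```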

What is the median of the U(2, 7) distribution?

The median is the number $q_{0.5} = F^{\mathrm{inv}}(0.5)$. You can either see directly that half of the mass lies on each side of the middle of the interval, hence $q_{0.5} = (2+7)/2 = 4.5$, or solve with the distribution function F(x) = (x − α)/(β − α):

$$F(q_{0.5}) = P(X \le q_{0.5}) = 0.5 \;\Longrightarrow\; \frac{q_{0.5} - 2}{7 - 2} = 0.5 \;\Longrightarrow\; q_{0.5} = 4.5$$
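The same calculation works for any p: inverting F(x) = (x − α)/(β − α) gives q_p = α + p(β − α). A minimal sketch follows, with `uniform_quantile` as an illustrative name:

```python
def uniform_quantile(p, alpha, beta):
    """q_p = alpha + p * (beta - alpha), the inverse of F(x) = (x - alpha) / (beta - alpha)."""
    if not 0 <= p <= 1:
        raise ValueError("p must lie in [0, 1]")
    return alpha + p * (beta - alpha)


median = uniform_quantile(0.5, 2, 7)
print(median)  # 4.5, matching the midpoint (2 + 7) / 2
```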

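For the 90th percentile of the standard normal distribution, we need the number $a = q_{0.9}$ with

$$F(q_p) = P(X \le q_p) = p = 0.9$$

Here the density is

$$f(x) = \phi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x^2}$$

so a must satisfy

$$0.9 = F(a) = \Phi(a) = \int_{-\infty}^{a} \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x^2}\,dx$$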

Matlab: a = norminv(0.9,0,1) => a =1.2816
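The same quantile can be computed without Matlab. This sketch assumes Python 3.8 or later, where the standard library provides `statistics.NormalDist` with an `inv_cdf` method:

```python
from statistics import NormalDist

# 90th percentile of the standard normal distribution N(0, 1).
a = NormalDist(mu=0, sigma=1).inv_cdf(0.9)
print(round(a, 4))  # 1.2816
```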