Backpropagation - RUB


Backpropagation

— Lecture Notes —

Laurenz Wiskott

Institut für Neuroinformatik

Ruhr-Universität Bochum, Germany, EU

28 January 2017

Contents

1 Supervised learning
1.1 Introduction
1.2 Error function
1.3 Gradient descent
1.4 Online learning rule
1.5 Examples
1.5.1 Nonlinear regression in x
1.5.2 Linear regression in x

2 Supervised learning in multilayer networks
2.1 Multilayer networks
2.2 Error backpropagation

3 Sample applications (→ slides)

© 2006-2008, 2017 Laurenz Wiskott (ORCID http://orcid.org/0000-0001-6237-740X, homepage https://www.ini.rub.de/PEOPLE/wiskott/). This work (except for all figures from other sources, if present) is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License, see http://creativecommons.org/licenses/by-sa/4.0/. If figures are not included for copyright reasons, they are uni colored, but the word 'Figure', 'Image', or the like in the reference is often linked to a freely available copy.

Core text and formulas are set in dark red, one can repeat the lecture notes quickly by just reading these; � marks important formulas or items worth remembering and learning for an exam; ♦ marks less important formulas or items that I would usually also present in a lecture; + marks sections that I would usually skip in a lecture.

More teaching material is available at https://www.ini.rub.de/PEOPLE/wiskott/Teaching/Material/.

1 Supervised learning

1.1 Introduction

In supervised learning (D: überwachtes Lernen) the learning is done based on training data (D: Trainingsdaten) that consist of input data (D: Eingangs-, Eingabedaten) or patterns (D: Muster) $\mathbf{x}^\mu$ and required output data (D: vorgegebene Ausgangs-, Ausgabedaten) or target values (D: Zielwerte) $s^\mu$ (note that $\mu$ is an index here and not an exponent). Both may be vectors, but we assume for simplicity that the output data are scalar values. Generalization to output vectors is straightforward. The individual input-output data pairs are indexed by $\mu = 1, ..., M$.

The goal of supervised learning is to find an input-output function $y(\mathbf{x})$ that generates an output

� $$y^\mu := y(\mathbf{x}^\mu) \tag{1.1}$$

that is as close as possible to the required output data $s^\mu$ for the given input data $\mathbf{x}^\mu$.

To quantify the performance one defines an error function. A simple method to optimize the input-output function to minimize the error is gradient descent. This can be done on all data simultaneously or, which is biologically more plausible, on the individual data points separately, which is then referred to as an online learning rule.

1.2 Error function

An error function (D: Fehlerfunktion) serves to quantify the performance of the system. If $E^\mu$ is some measure of the error made for a single data point, the total error is typically the mean over all data points,

♦ $$E := \frac{1}{M} \sum_\mu E^\mu . \tag{1.2}$$

A common measure of error for a single data point is half the squared distance between output value and target value, i.e.

♦ $$E^\mu := \frac{1}{2} (y^\mu - s^\mu)^2 , \tag{1.3}$$

so that the total error is often measured by the mean squared error (D: mittlerer quadratischer Fehler)

� $$E = \frac{1}{M} \sum_\mu \frac{1}{2} (y^\mu - s^\mu)^2 , \tag{1.4}$$

which has to be minimized.

As will become clear later, the factor 1/2 has been introduced for mathematical convenience only and disappears in derivatives.
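As a minimal sketch of (1.3) and (1.4), assuming outputs and targets are given as plain Python lists (the function names and the sample values are illustrative and not part of the lecture notes):

```python
def pattern_error(y, s):
    """Error for a single data point, eq. (1.3): half the squared distance."""
    return 0.5 * (y - s) ** 2


def total_error(outputs, targets):
    """Total error, eq. (1.4): mean of the per-pattern errors over all M patterns."""
    M = len(outputs)
    return sum(pattern_error(y, s) for y, s in zip(outputs, targets)) / M


# Hypothetical outputs y^mu and targets s^mu for M = 3 patterns.
outputs = [1.0, 2.0, 4.0]
targets = [1.0, 0.0, 3.0]
E = total_error(outputs, targets)  # (0 + 2 + 0.5) / 3
```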

1.3 Gradient descent

The input-output function is usually parameterized in some way. For instance, we could define $y(\mathbf{x}) := w_1 x_1 + w_2 x_2$. In this case, $w_1$ and $w_2$ would be the parameters of the function. To emphasize this we can write $y_{\mathbf{w}}(\mathbf{x})$.

Learning means adapting the parameters somehow to optimize the function. If an error function is available, a standard method for this is gradient descent, see figure 1.1. The idea of gradient descent (D: Gradientenabstieg) is to start somewhere in parameter space and then walk downhill on the error function until one ends up at the bottom of a valley.

[Figure 1.1 (plot): error E(w) over the parameter w, showing a starting point, the gradient descent path, the final point in a local minimum, and the global minimum.]

Figure 1.1: The idea of gradient descent is to start somewhere and then simply walk downhill. In this example there are two minima and the process ends up in the wrong one. If it had started a bit more to the left, it would have found the global one. © CC BY-SA 4.0

This is a convenient method, because it is often easy to determine the gradient of the error function with respect to the parameters. Formally, the gradient is simply the vector of the derivatives of the error function with respect to the parameters,

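♦ $$\mathrm{grad}_{\mathbf{w}} E = \left( \frac{\partial E}{\partial w_1}, \frac{\partial E}{\partial w_2}, \ldots, \frac{\partial E}{\partial w_I} \right)^T . \tag{1.5}$$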

The change of the weights has to go in the opposite direction of the gradient to go downhill, and the steps should be of a reasonable size, so that one moves forward but does not jump around too much. This motivates a negative sign and the introduction of a learning rate (D: Lernrate) $\eta$, so that the learning rule becomes

♦ $$\Delta w_i = -\eta \frac{\partial E}{\partial w_i} \quad \forall i , \tag{1.6}$$

or in vector notation

♦ $$\Delta \mathbf{w} = -\eta \, \mathrm{grad}_{\mathbf{w}} E . \tag{1.7}$$

The main problem with gradient descent is that, depending on the shape of the error function, it can be sensitive to the starting point and is then not guaranteed to find the global minimum, cf. figure 1.1.
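The sensitivity to the starting point can be reproduced with a small sketch. The error function below is an assumption chosen only because it has one local and one global minimum, similar in spirit to figure 1.1; it is not taken from the lecture notes, and the learning rate and step count are arbitrary illustrative values.

```python
def E(w):
    """Illustrative error function with one local and one global minimum."""
    return (w * w - 1.0) ** 2 + 0.3 * w


def dE_dw(w):
    """Derivative of E with respect to the single parameter w."""
    return 4.0 * w * (w * w - 1.0) + 0.3


def gradient_descent(w_start, eta=0.05, steps=500):
    """Apply the learning rule (1.6), w <- w - eta * dE/dw, a fixed number of times."""
    w = w_start
    for _ in range(steps):
        w -= eta * dE_dw(w)
    return w


w_right = gradient_descent(1.5)   # starts on the right, ends in the local minimum (w > 0)
w_left = gradient_descent(-1.5)   # starts on the left, ends in the global minimum (w < 0)
```

Both runs stop where the derivative vanishes, but only the run started on the left reaches the lower of the two valleys.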

1.4 Online learning rule

From a biological point of view it is not particularly plausible to assume that first all training patterns are collected and then training is applied to all data simultaneously. It is more reasonable to learn on each training pattern separately,

� $$\Delta w_i^\mu := -\eta \frac{\partial E^\mu}{\partial w_i} , \tag{1.8}$$

which is referred to as online or incremental learning (D: (inkrementelles) Lernen) in contrast to the batch learning seen above. The two methods are nearly equivalent, since

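\begin{align}
\text{♦}\quad \Delta w_i &\overset{(1.6)}{=} -\eta \frac{\partial E}{\partial w_i} \tag{1.9} \\
&\overset{(1.2)}{=} -\eta \frac{\partial}{\partial w_i} \left( \frac{1}{M} \sum_\mu E^\mu \right) \tag{1.10} \\
&= \frac{1}{M} \sum_\mu \left( -\eta \frac{\partial E^\mu}{\partial w_i} \right) \tag{1.11} \\
\text{♦}\quad &\overset{(1.8)}{=} \frac{1}{M} \sum_\mu \Delta w_i^\mu . \tag{1.12}
\end{align}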

Thus, it does not matter if we first calculate the mean error and then the gradient (weight change) of the total error or first the gradients (weight changes) for the individual errors and then take the average.

However, it is not quite the same, because when calculating the gradient for a new pattern, the weights have changed already on the previous pattern. Only if the learning rate is small is the change of the weights between two patterns so small that the equivalence between batch and online learning holds.
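Both statements can be checked numerically. The following sketch assumes the linear function $y = w_1 x_1 + w_2 x_2$ from section 1.3 and a made-up data set of three patterns; all names and values are illustrative. It first averages the per-pattern changes (1.8) at fixed weights and compares them with the batch change (1.6), and then applies the online rule sequentially for one pass to show the small deviation.

```python
def output(w, x):
    """Linear input-output function y_w(x) = w1*x1 + w2*x2 from section 1.3."""
    return sum(wi * xi for wi, xi in zip(w, x))


def pattern_gradient(w, x, s):
    """Gradient of E^mu = 1/2 (y - s)^2 with respect to w for one pattern."""
    err = output(w, x) - s
    return [err * xi for xi in x]


def batch_change(w, data, eta):
    """Weight change (1.6) from the gradient of the total error (1.2)."""
    grad = [0.0] * len(w)
    for x, s in data:
        for i, g in enumerate(pattern_gradient(w, x, s)):
            grad[i] += g / len(data)
    return [-eta * g for g in grad]


def sequential_pass(w, data, eta):
    """One pass of online learning (1.8); the weights change after every pattern."""
    w = list(w)
    for x, s in data:
        g = pattern_gradient(w, x, s)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w


# Hypothetical training data (x^mu, s^mu), weights, and learning rate.
data = [((1.0, 2.0), 1.0), ((0.5, -1.0), 0.0), ((-2.0, 0.3), 2.0)]
w = [0.1, -0.4]
eta = 0.01
M = len(data)

# Per-pattern changes, all evaluated at the same weights, then averaged (1.12).
per_pattern = [[-eta * g for g in pattern_gradient(w, x, s)] for x, s in data]
mean_online = [sum(dw[i] for dw in per_pattern) / M for i in range(len(w))]
batch = batch_change(w, data, eta)

# Sequential online learning: total change over one pass versus M batch steps.
w_seq = sequential_pass(w, data, eta)
deviation = max(abs((w_seq[i] - w[i]) - M * batch[i]) for i in range(len(w)))
```

With the values assumed here the deviation is of the order of 1e-4: nonzero, but small compared with the weight changes themselves.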

1.5 Examples

For illustration purposes consider two simple examples, nonlinear regression in one dimension and linear regression in many dimensions.

1.5.1 Nonlinear regression in x

If we choose a polynomial for the input-output function for one-dimensional input x, we can write

♦ $$y(x) := \sum_{i=0}^{I} w_i x^i , \tag{1.13}$$

with wi indicating the weights of the monomials.

Given some training data xµ and sµ with µ = 1, ...,M the error

♦ $$E \overset{(1.4,\,1.13)}{=} \frac{1}{M} \sum_\mu \underbrace{\frac{1}{2} \left( \sum_i w_i (x^\mu)^i - s^\mu \right)^2}_{=:\, E^\mu} \tag{1.14}$$

can be minimized by standard gradient descent on E with respect to the weights wi.

By dropping the averaging over µ we get the online learning rule

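\begin{align}
\text{♦}\quad \Delta w_i^\mu &:= -\eta \frac{\partial E^\mu}{\partial w_i} \tag{1.15} \\
&\overset{(1.14)}{=} -\eta \, \frac{\partial \left( \sum_k w_k (x^\mu)^k \right)}{\partial w_i} \left( \sum_j w_j (x^\mu)^j - s^\mu \right) \tag{1.16} \\
&= -\eta \, (x^\mu)^i \left( \sum_j w_j (x^\mu)^j - s^\mu \right) \tag{1.17} \\
\text{♦}\quad &\overset{(1.13)}{=} -\eta \, (x^\mu)^i \, (y^\mu - s^\mu) . \tag{1.18}
\end{align}

Iterating this rule several times over all $\mu$ should lead to (locally) optimal weights $w_i$. Altogether this becomes a gradient descent version of nonlinear regression in $x$.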
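The online rule (1.18) translates almost literally into code. The sketch below assumes noise-free training data generated from a quadratic polynomial, so that the correct weights are known; the data, the learning rate, and the number of passes are illustrative choices, not values from the lecture notes.

```python
def predict(w, x):
    """Polynomial input-output function (1.13): y(x) = sum_i w_i * x**i."""
    return sum(wi * x ** i for i, wi in enumerate(w))


def mean_error(w, data):
    """Total error (1.14) over all training pairs."""
    return sum(0.5 * (predict(w, x) - s) ** 2 for x, s in data) / len(data)


def train_online(data, degree, eta=0.1, epochs=500):
    """Iterate the online rule (1.18) over all patterns for several passes."""
    w = [0.0] * (degree + 1)
    for _ in range(epochs):
        for x, s in data:
            err = predict(w, x) - s            # y^mu - s^mu, computed before the update
            for i in range(degree + 1):
                w[i] -= eta * x ** i * err     # Delta w_i = -eta (x^mu)^i (y^mu - s^mu)
    return w


# Hypothetical noise-free training data from s = 1 - 2x + 0.5x^2 on [-1, 1].
xs = [k / 5.0 for k in range(-5, 6)]
data = [(x, 1.0 - 2.0 * x + 0.5 * x * x) for x in xs]

error_before = mean_error([0.0, 0.0, 0.0], data)
w = train_online(data, degree=2)
error_after = mean_error(w, data)
```

Because the data are noise free and the model can represent them exactly, the learned weights approach (1, -2, 0.5) in this setting.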

1.5.2 Linear regression in x

For illustration purposes consider linear regression as an example and let us phrase it in the jargon of neural networks. Linear regression can be done with a simple neural network that has just one linear unit. It realizes the function

♦ $$y(\mathbf{x}) := \sum_i w_i x_i = \mathbf{w}^T \mathbf{x} , \tag{1.19}$$

with $w_i$ indicating the (synaptic) weights (D: (synaptischen) Gewichte).

Given some training data xµ and sµ with µ = 1, ...,M the error

♦ $$E \overset{(1.4,\,1.19)}{=} \frac{1}{M} \sum_\mu \underbrace{\frac{1}{2} \left( \sum_i w_i x_i^\mu - s^\mu \right)^2}_{=:\, E^\mu} \tag{1.20}$$

can be minimized by standard gradient descent on $E$ with respect to the weights $w_i$. Since (1.20) is a quadratic function in the weights, there is only one minimum and gradient descent is guaranteed to find it, see figure 1.2.

[Figure 1.2 (plot): error function over the weights $w_i$ and $w_j$, with marks numbered 1 to 5.]

Figure 1.2: In linear regression the error function is a quadratic function and has only one minimum, and gradient descent is guaranteed to find it. © CC BY-SA 4.0

By dropping the averaging over µ we get the online learning rule

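\begin{align}
\text{♦}\quad \Delta w_i^\mu &:= -\eta \frac{\partial E^\mu}{\partial w_i} \tag{1.21} \\
&\overset{(1.20)}{=} -\eta \, \frac{\partial \left( \sum_k w_k x_k^\mu \right)}{\partial w_i} \left( \sum_j w_j x_j^\mu - s^\mu \right) \tag{1.22} \\
&= -\eta \, x_i^\mu \left( \sum_j w_j x_j^\mu - s^\mu \right) \tag{1.23} \\
\text{♦}\quad &\overset{(1.19)}{=} -\eta \, x_i^\mu \, (y^\mu - s^\mu) . \tag{1.24}
\end{align}

Iterating this rule several times over all $\mu$ should lead to (locally) optimal weights $w_i$. Altogether this becomes a gradient descent version of linear regression.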
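That the quadratic error has a single minimum can be illustrated by starting the online rule (1.24) from two different weight vectors. This is only a sketch under assumed conditions: the five input vectors, the target weights (2, -3), the learning rate, and the number of passes are all made up for the example.

```python
def train_linear_unit(data, w_start, eta=0.1, epochs=200):
    """Online rule (1.24): Delta w_i = -eta * x_i^mu * (y^mu - s^mu)."""
    w = list(w_start)
    for _ in range(epochs):
        for x, s in data:
            y = sum(wi * xi for wi, xi in zip(w, x))        # y = w^T x, eq. (1.19)
            w = [wi - eta * xi * (y - s) for wi, xi in zip(w, x)]
    return w


# Hypothetical training data generated by the target weights (2, -3).
inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-1.0, 1.0), (0.5, -0.5)]
data = [(x, 2.0 * x[0] - 3.0 * x[1]) for x in inputs]

w_a = train_linear_unit(data, w_start=[0.0, 0.0])
w_b = train_linear_unit(data, w_start=[5.0, -7.0])
```

In this example both runs end at the same weights, in contrast to the two-minima case of figure 1.1.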

2 Supervised learning in multilayer networks

A neural network with just one linear unit is not particularly powerful. For any serious processing we need a multilayer network. However, with a multilayer network calculating the gradient can quickly become very involved. This makes the strategy sketched above problematic. Fortunately, some clever people came up with a nice algorithm that takes care of these complications: the error backpropagation algorithm (Wikipedia, 2017).

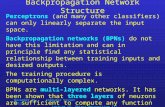

2.1 Multilayer networks

A single unit network is obviously very limited. To realize more complex input-output functions one has to combine several layers of units resulting in a multilayer network (D: mehrschichtiges Netz(werk)), see figure 2.1. To keep notation simple we drop the pattern index $\mu$ altogether. Instead, we use a superscript index $\nu$ to indicate the layers, with $\nu = 1$ for the input layer and $\nu = N$ for the output layer. Each layer can have several units indicated by $e, ..., i, j, k, ..., n$, with $e$ for the input layer and $n$ for the output layer. In summary we have the following computation in the network.

� $$x_j^{\nu+1} := y_j^\nu , \tag{2.1}$$

� $$y_j^\nu := \sigma(z_j^\nu) , \tag{2.2}$$

� $$z_j^\nu := \sum_i w_{ji}^\nu x_i^\nu . \tag{2.3}$$

The outputs of one layer $y_j^\nu$ serve as the input $x_j^{\nu+1}$ to the next layer. $\sigma$ is a sigmoidal nonlinearity, such as

$$\sigma(z) := \tanh(z) \tag{2.4}$$

with

$$\sigma'(z) = 1 - \sigma(z)^2 = 1 - y^2 , \tag{2.5}$$

$$\sigma(-z) = -\sigma(z) . \tag{2.6}$$
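The forward computation (2.1) to (2.3) can be sketched in a few lines. The sketch assumes that each layer is stored as a nested list `W` with `W[j][i]` playing the role of $w_{ji}^\nu$; the network size and the weight values are made up for illustration.

```python
import math


def forward_layer(W, x):
    """Compute z_j = sum_i W[j][i] * x[i] (2.3) and y_j = tanh(z_j) (2.2, 2.4)."""
    z = [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) for row in W]
    y = [math.tanh(z_j) for z_j in z]
    return z, y


def forward(weights, x):
    """Propagate x through all layers; each output is the next layer's input (2.1)."""
    for W in weights:
        _, x = forward_layer(W, x)
    return x


# Hypothetical network: 2 inputs -> 3 units -> 1 unit.
weights = [
    [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]],
    [[0.7, -0.6, 0.2]],
]
y = forward(weights, [1.0, -1.0])
y_neg = forward(weights, [-1.0, 1.0])   # sign-flipped input
```

Since the units have no bias term and tanh is odd (2.6), flipping the sign of the input flips the sign of the output, which is what `y_neg` shows.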

2.2 Error backpropagation

Computing the gradient for a multilayer network can quickly become cumbersome and computationally very expensive. However, by backpropagating an error signal, computation can be drastically simplified.

[Figure 2.1 (network diagram): five layers drawn from bottom to top, layer 1 (input, units e), layer ν − 1 (hidden, units i), layer ν (hidden, units j), layer ν + 1 (hidden, units k), and layer N (output, units n), each with units 1 to 4. Annotations: $x_i^\nu = y_i^{\nu-1}$ (input into layer ν), $z_j^\nu = \sum_i w_{ji}^\nu x_i^\nu$ (membrane potential in layer ν), $y_j^\nu = \sigma(z_j^\nu)$ (output from layer ν), $x_j^{\nu+1} = y_j^\nu$ (input into layer ν + 1).]

Figure 2.1: A multilayer network and its notation. Processing goes from bottom to top. Full connectivity is only shown between layers ν and ν + 1, but is present between all successive layers. © CC BY-SA 4.0

Since the output layer can now have several units, we extend the error function for a single input-output data pair by summing over all output units,

� $$E^\mu := \sum_n \frac{1}{2} (y_n^\mu - s_n^\mu)^2 , \tag{2.7}$$

but remember that in the following we drop the index $\mu$ for notational simplicity and use a superscript index $\nu$ to indicate the layer instead.

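The partial derivative of $E$ (2.7) with respect to any weight $w_{ji}^\nu$ is

\begin{align}
\text{♦}\quad \frac{\partial E}{\partial w_{ji}^\nu} &\overset{(2.3)}{=} \frac{\partial E}{\partial z_j^\nu} \frac{\partial z_j^\nu}{\partial w_{ji}^\nu} \quad \text{(chain rule)} \tag{2.8} \\
&\overset{(2.3)}{=} \frac{\partial E}{\partial z_j^\nu} \, x_i^\nu \tag{2.9} \\
\text{♦}\quad &\overset{(2.11)}{=} \delta_j^\nu \, x_i^\nu \tag{2.10} \\
\text{♦}\quad \text{with} \quad \delta_j^\nu &:= \frac{\partial E}{\partial z_j^\nu} . \tag{2.11}
\end{align}

The $x_i^\nu$ can be easily computed with (2.1–2.3) iteratively from the input layer to the output layer. This leaves the $\delta_j^\nu$ to be determined.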

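For the output layer we have

\begin{align}
\text{♦}\quad \delta_n^N &\overset{(2.11)}{=} \frac{\partial E}{\partial z_n^N} \tag{2.12} \\
&\overset{(2.2)}{=} \frac{\partial E}{\partial y_n^N} \frac{d y_n^N}{d z_n^N} \quad \text{(chain rule)} \tag{2.13} \\
\text{♦}\quad &\overset{(2.2,\,1.4)}{=} (y_n^N - s_n) \, \sigma'(z_n^N) . \tag{2.14}
\end{align}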

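For the hidden layers we get

\begin{align}
\text{♦}\quad \delta_j^\nu &\overset{(2.11)}{=} \frac{\partial E}{\partial z_j^\nu} \tag{2.15} \\
&\overset{(2.1\text{–}2.3)}{=} \sum_k \frac{\partial E}{\partial z_k^{\nu+1}} \frac{\partial z_k^{\nu+1}}{\partial z_j^\nu} \quad \text{(chain rule)} \tag{2.16} \\
&\overset{(2.11)}{=} \sum_k \delta_k^{\nu+1} \frac{\partial z_k^{\nu+1}}{\partial z_j^\nu} \tag{2.17} \\
\text{♦}\quad &\overset{(2.20)}{=} \sigma'(z_j^\nu) \sum_k \delta_k^{\nu+1} w_{kj}^{\nu+1} , \tag{2.18}
\end{align}

because

\begin{align}
z_k^{\nu+1} &\overset{(2.1\text{–}2.3)}{=} \sum_j w_{kj}^{\nu+1} \sigma(z_j^\nu) \tag{2.19} \\
\Longrightarrow \quad \frac{\partial z_k^{\nu+1}}{\partial z_j^\nu} &= w_{kj}^{\nu+1} \sigma'(z_j^\nu) . \tag{2.20}
\end{align}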

Now, the $\delta_j^\nu$ can be easily computed with the equations (2.14, 2.18). This process is referred to as error backpropagation (D: Fehlerrückführung) because $\delta_j^\nu$ is related to the error, as can be seen from (2.14), and it gets computed from the output layer to the input layer (2.18).

If the $x_i^\nu$ and $\delta_j^\nu$ are known, the gradient can be computed with (2.10) and the weight changes with (1.15). The different phases of the backpropagation algorithm are summarized in figure 2.2.

The change of weight is computed for each training data pair anew. Several iterations over all training data should lead to (locally) optimal weights. Unfortunately there is no guarantee of finding the globally optimal weights.
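A common way to convince oneself that (2.10), (2.14), and (2.18) give the correct gradient is to compare them with finite differences of the error (2.7). The following sketch does this for a small tanh network; the nested-list weight layout `W[j][i]`, the network size, and all numerical values are assumptions made for the example, and the finite-difference check is an added verification step, not part of the algorithm in the lecture notes.

```python
import math


def forward(weights, x):
    """Forward computation (2.1-2.3); returns inputs x^nu and outputs y^nu of every layer."""
    xs, ys = [], []
    for W in weights:
        z = [sum(w * xi for w, xi in zip(row, x)) for row in W]
        y = [math.tanh(zj) for zj in z]
        xs.append(x)
        ys.append(y)
        x = y                                   # x^{nu+1} = y^nu
    return xs, ys


def error(weights, x, s):
    """Error (2.7) for a single input-output pair."""
    _, ys = forward(weights, x)
    return sum(0.5 * (yn - sn) ** 2 for yn, sn in zip(ys[-1], s))


def backprop_gradient(weights, x, s):
    """Gradient dE/dw_ji = delta_j * x_i (2.10) for all layers."""
    xs, ys = forward(weights, x)
    # Output layer (2.14), using sigma'(z) = 1 - y^2 from (2.5).
    delta = [(yn - sn) * (1.0 - yn ** 2) for yn, sn in zip(ys[-1], s)]
    grads = [None] * len(weights)
    for nu in reversed(range(len(weights))):
        grads[nu] = [[dj * xi for xi in xs[nu]] for dj in delta]
        if nu > 0:
            # Layer below (2.18): sigma'(z_i) * sum_j delta_j * w_ji.
            W = weights[nu]
            delta = [
                (1.0 - ys[nu - 1][i] ** 2) * sum(delta[j] * W[j][i] for j in range(len(W)))
                for i in range(len(ys[nu - 1]))
            ]
    return grads


def numerical_gradient(weights, x, s, h=1e-6):
    """Central finite differences, used only to check the backpropagated gradient."""
    grads = []
    for W in weights:
        g = [[0.0] * len(row) for row in W]
        for j, row in enumerate(W):
            for i in range(len(row)):
                old = row[i]
                row[i] = old + h
                e_plus = error(weights, x, s)
                row[i] = old - h
                e_minus = error(weights, x, s)
                row[i] = old                    # restore the weight
                g[j][i] = (e_plus - e_minus) / (2.0 * h)
        grads.append(g)
    return grads


# Hypothetical network (2 inputs -> 3 units -> 2 units) and training pair.
weights = [
    [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]],
    [[0.7, -0.6, 0.2], [-0.1, 0.3, 0.5]],
]
x, s = [1.0, -0.5], [0.5, -0.5]
g_bp = backprop_gradient(weights, x, s)
g_num = numerical_gradient(weights, x, s)
max_diff = max(
    abs(a - b)
    for G1, G2 in zip(g_bp, g_num)
    for r1, r2 in zip(G1, G2)
    for a, b in zip(r1, r2)
)
```

The finite-difference version needs two forward passes per weight, whereas backpropagation obtains all derivatives from one forward and one backward pass, which is the simplification the section refers to.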

[Figure 2.2 (diagram): three panels, each listing what "we have" and what "we get".
Phase 1, forward computation, eqs. (2.1, 2.3): $x_i^\nu = y_i^{\nu-1}$, $z_j^\nu = \sum_i w_{ji}^\nu x_i^\nu$, $y_j^\nu = \sigma(z_j^\nu)$, $x_j^{\nu+1} = y_j^\nu$.
Phase 2, error backpropagation, eqs. (2.14, 2.18): $\delta_j^\nu = \sigma'(z_j^\nu) \sum_k \delta_k^{\nu+1} w_{kj}^{\nu+1}$, $\delta_i^{\nu-1} = \sigma'(z_i^{\nu-1}) \sum_j \delta_j^\nu w_{ji}^\nu$.
Phase 3, weight change, eqs. (1.6, 2.10): $\Delta w_{ji}^\nu = -\eta \, \delta_j^\nu x_i^\nu$, $\Delta w_{kj}^{\nu+1} = -\eta \, \delta_k^{\nu+1} x_j^{\nu+1}$.]

Figure 2.2: The phases of the backpropagation algorithm. © CC BY-SA 4.0
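The three phases can be written as three separate functions. To keep the sketch short it assumes a chain with only one unit per layer, so that every weight, input, and delta is a scalar; the weights, the training pair, the learning rate, and the number of iterations are illustrative values only.

```python
import math


def phase1_forward(w, x_in):
    """Phase 1: forward computation; returns inputs x^nu and outputs y^nu of every layer."""
    xs, ys = [], []
    x = x_in
    for w_nu in w:
        y = math.tanh(w_nu * x)       # z = w * x (2.3), y = sigma(z) (2.2)
        xs.append(x)
        ys.append(y)
        x = y                         # x^{nu+1} = y^nu (2.1)
    return xs, ys


def phase2_backward(w, ys, s):
    """Phase 2: error backpropagation; returns delta^nu for every layer."""
    deltas = [0.0] * len(w)
    deltas[-1] = (ys[-1] - s) * (1.0 - ys[-1] ** 2)                     # (2.14)
    for nu in range(len(w) - 2, -1, -1):
        deltas[nu] = (1.0 - ys[nu] ** 2) * deltas[nu + 1] * w[nu + 1]   # (2.18)
    return deltas


def phase3_update(w, xs, deltas, eta):
    """Phase 3: weight change, Delta w^nu = -eta * delta^nu * x^nu."""
    return [w_nu - eta * d * x for w_nu, d, x in zip(w, deltas, xs)]


def pattern_error(w, x_in, s):
    """Error 1/2 (y - s)^2 of the chain for one training pair."""
    _, ys = phase1_forward(w, x_in)
    return 0.5 * (ys[-1] - s) ** 2


# Hypothetical chain of three layers with one unit each, and one training pair.
w = [0.5, -0.4, 0.3]
x_in, s, eta = 1.0, 0.5, 0.1
error_before = pattern_error(w, x_in, s)
for _ in range(100):
    xs, ys = phase1_forward(w, x_in)
    deltas = phase2_backward(w, ys, s)
    w = phase3_update(w, xs, deltas, eta)
error_after = pattern_error(w, x_in, s)
```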

3 Sample applications (→ slides)

Learning Semantics

Figure: (adapted from https://www.flickr.com/photos/listingslab/9056502486 2016-12-09, © CC BY 2.0; see note 3.1)

Learning a semantic representation with a backpropagation network is an example where, in some sense, a rather high-level cognitive task is solved with a rather simple network. It illustrates that a clever representation can make a complicated task simple.

Hierarchical Propositional Tree

Figure: (McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 1; see note 3.2)

Quillian (1968, Semantic Information Processing, MIT Press) proposed that semantic knowledge is represented in a hierarchical propositional tree. The more abstract concepts are at the root and the more specific concepts are at the leaves. Properties can be stored at different locations depending on how general they are. Rumelhart & Todd (1993, Attention and Performance XIV, MIT Press) applied this to a simple example of living things to create a structured training set for training a backpropagation network to learn semantics.

Network Architecture

Figure: (McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 3; see note 3.3)

A network considered by Rumelhart and Todd (1993) for semantic learning consists of one input layer indicating a specific plant or animal, one input layer indicating a propositional relationship, such as 'is a' or 'can', two hidden layers, and one output layer indicating higher order concepts, such as 'tree' or 'living thing', or properties, such as 'big' or 'feathers'. The highlighted units are active and indicate a training example teaching the network that a robin can grow, move, and fly. The network is trained with many such examples using the backpropagation algorithm.

Hidden Layer Representation

Figure: (McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 4a; see note 3.4)

This graph shows how the first hidden layer representation develops with training. Each graph shows the activity of the eight units of the first hidden layer when one of the units of the first input layer is active. After 25 epochs the representation is still fairly unstructured and undifferentiated. One epoch corresponds to the presentation of all training examples. After 500 epochs the representation is much more structured, i.e. has more strongly active or inactive units, and is more differentiated, i.e. different inputs lead to different hidden layer activities. It is interesting that similar input classes, such as salmon and sunfish, lead to similar hidden layer activities. This is the key to the generalization capability of the network.

Discovered Similarities

Figure: (McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 4b; see note 3.5)

This graph illustrates the similarities between the hidden layer activities for different inputs more clearly. The Euclidean distance between the hidden layer activity vectors becomes large for dissimilar input classes, such as 'canary' and 'oak', but remains small for similar ones, such as 'canary' and 'robin'. For instance, the network makes a natural distinction between plants and animals because of their different properties.

Generalization

Figure: (McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 3; see note 3.6)

Trained only with the 'sparrow ISA animal & bird' input-output pair, the network learns the correct response also to the 'sparrow is / can / has' inputs.

This does not work for a penguin.

The structured hidden layer representation allows the network to generalize to new input classes, such as 'sparrow'. If one continues to train the network with 'sparrow ISA animal & bird', the network generalizes the correct answers also for the 'sparrow is / can / has' questions. It is obvious that this cannot work for penguins. The network will not know that a penguin cannot fly until it is explicitly taught so.

NETtalk

Image: (http://www.cartoonstock.com/lowres/hkh0046l.jpg 2005-10-27; see note 3.7)

Although quite old, the NETtalk example is still a very popular application where a backpropagation network was trained to read text and actually produce speech through a speech synthesizer.

NETtalk Architecture

Figure: (Sejnowski & Rosenberg, 1987, Complex Systems 1:145-168, Fig. 1; see note 3.8)

203 input units: 7 positions × (26 letters + 3 punctuations).

80 hidden units.

26 output units: 21 articulatory features + 5 stress and syllable boundary features.

(Demo: 0:00–0:35 Intro, 0:36–3:15 de novo learning, 3:20–5:00 after 10,000 training words (training set), 5:05–7:20 new corpus (test set))

The network receives text as input and produces phonemes as output. The phonemes can then be fed into a speech synthesizer to produce sound. The input layer represents seven consecutive characters of the text. For each character position there are 26 units for the letters plus 3 units for punctuation. One of these 29 units is active and indicates the character at hand. This makes 203 = 7 × (26 + 3) input units. Text is shifted through the character positions from right to left, which corresponds to reading from left to right. The hidden layer has 80 units. The output layer has 26 units, 21 of which represent phonemes (articulatory features) and 5 represent stress and syllable boundaries.

The whole network has been trained with the backpropagation algorithm on a large corpus of hand-labeled text. Creating this training corpus was actually the main work.

The audio example shows how the reading performance improves over time and that the network is able to generalize to new text. It is interesting that in some cases the network makes errors similar to those children make when they learn to read, for example in the way it over-generalizes. However, one should keep in mind that children learn reading very differently. For instance, they first learn talking and then reading, while the network learns both simultaneously.
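The input coding described above (7 positions × 29 units, one active unit per position) can be sketched as follows. The notes do not say which three punctuation symbols were used, so the choice of space, comma, and period below is purely an assumption, as are the function names and the order of the units.

```python
import string

LETTERS = string.ascii_lowercase        # 26 letters
PUNCTUATION = " ,."                     # assumed choice of the 3 punctuation symbols
ALPHABET = LETTERS + PUNCTUATION        # 29 symbols per character position
WINDOW = 7                              # seven consecutive characters


def encode_window(text):
    """One-hot encode a 7-character window into 7 * 29 = 203 input values."""
    if len(text) != WINDOW:
        raise ValueError("expected exactly 7 characters")
    units = []
    for ch in text.lower():
        position = [0] * len(ALPHABET)
        position[ALPHABET.index(ch)] = 1    # exactly one active unit per position
        units.extend(position)
    return units


def windows(text):
    """Shift the 7-character window over the text one character at a time."""
    return [text[k:k + WINDOW] for k in range(len(text) - WINDOW + 1)]


x = encode_window("a cat, ")
```

Characters outside the assumed 29-symbol alphabet raise a `ValueError` in this sketch; a fuller version would have to decide how to map them.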

NETtalk Learning Curves

Figure: (Sejnowski & Rosenberg, 1987, Complex Systems 1:145-168, Fig. 4; see note 3.9)

This graph shows how the performance improves with training. It is no surprise that the network performs better on the stress features than on the phonemes, because there are fewer of the former (5, chance level 1/5) than of the latter (21, chance level 1/21). The exponential learning curve is also rather typical, with fast learning in the beginning and much slower learning in the end.

References

McClelland, J. L. and Rogers, T. T. (2003). The parallel distributed processing approach to semantic cognition. Nat. Rev. Neurosci., 4(4):310–322.

Rumelhart, D. E. and Todd, P. M. (1993). Learning and connectionist representations. In Meyer, D. E. and Kornblum, S., editors, Attention and performance XIV: Synergies in experimental psychology, artificial intelligence, and cognitive neuroscience, pages 3–30. MIT Press, Cambridge, Massachusetts.

Sejnowski, T. J. and Rosenberg, C. R. (1987). Parallel networks that learn to pronounce English text. Complex Systems, 1(1):145–168.

Wikipedia (2017). Backpropagation. Wikipedia, The Free Encyclopedia. https://en.wikipedia.org/wiki/Backpropagation accessed 28-January-2017.

Notes

3.1 adapted from https://www.flickr.com/photos/listingslab/9056502486 2016-12-09, © CC BY 2.0, https://www.flickr.com/photos/listingslab/9056502486

3.2 McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 1, http://web.stanford.edu/~jlmcc/papers/McCRogers03.pdf

3.3 McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 3, http://web.stanford.edu/~jlmcc/papers/McCRogers03.pdf

3.4 McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 4a, http://web.stanford.edu/~jlmcc/papers/McCRogers03.pdf

3.5 McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 4b, http://web.stanford.edu/~jlmcc/papers/McCRogers03.pdf

3.6 McClelland & Rogers, 2003, Nat. Rev. Neurosci.; from Rumelhart & Todd, 1993, Fig. 3, http://web.stanford.edu/~jlmcc/papers/McCRogers03.pdf

3.7 http://www.cartoonstock.com/lowres/hkh0046l.jpg 2005-10-27, http://www.cartoonstock.com/lowres/hkh0046l.jpg

3.8 Sejnowski & Rosenberg, 1987, Complex Systems 1:145-168, Fig. 1, https://www.researchgate.net/profile/Terrence_Sejnowski/publication/237111620_Parallel_Networks_that_Learn_to_Pronounce/links/54a4b0150cf257a63607270d.pdf

3.9 Sejnowski & Rosenberg, 1987, Complex Systems 1:145-168, Fig. 4, https://www.researchgate.net/profile/Terrence_Sejnowski/publication/237111620_Parallel_Networks_that_Learn_to_Pronounce/links/54a4b0150cf257a63607270d.pdf
