An Adaptive Checkpoint Model For Large-Scale HPC Systems

Subhendu Behera
North Carolina State University, Raleigh, North Carolina

Lipeng Wan
Oak Ridge National Laboratory, Oak Ridge, Tennessee

Frank Mueller
North Carolina State University, Raleigh, North Carolina

Matthew Wolf
Oak Ridge National Laboratory, Oak Ridge, Tennessee

Scott Klasky
Oak Ridge National Laboratory, Oak Ridge, Tennessee

ABSTRACT
Checkpoint/Restart is a widely used fault tolerance technique for application resilience. However, failures and the overhead of saving application state for future recovery significantly reduce application efficiency. This work contributes a failure analysis and prediction model that guides decisions on checkpoint data placement and recovery, along with techniques for reducing checkpoint frequency. We also demonstrate a reduction in application overhead by taking proactive measures guided by failure prediction.

KEYWORDS
High Performance Computing, Failure Prediction, I/O subsystem, Checkpoint/Restart, Burst Buffers

ACM Reference Format:
Subhendu Behera, Lipeng Wan, Frank Mueller, Matthew Wolf, and Scott Klasky. 2019. An Adaptive Checkpoint Model For Large-Scale HPC Systems. In Proceedings of ACM Conference (Conference’17). ACM, New York, NY, USA, 2 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn

1 INTRODUCTION
Given the scale of modern High-Performance Computing (HPC) systems, failures are inevitable and frequent. Recent investigations [2, 3] develop failure prediction models to improve HPC resiliency. We aim to use the DESH failure prediction model [2] to predict failures in advance, together with a predicted lead time. The idea is to raise a failure alarm whenever a recognized pattern of log messages is found on a node. With sufficient lead time, a proactive measure can be taken to avoid the failure and the resulting waste of computation, for example by migrating the application from the node at risk of failure to a new, healthy node.
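As a rough illustration of the alarm idea only, the sketch below checks whether a known chain of log phrases appears, in order, in a node's log stream. It is not the DESH model of [2], which is a deep-learning predictor; plain in-order phrase matching is swapped in here for brevity. The function name and the log phrases are hypothetical.

```python
from typing import Iterable, Sequence


def matches_failure_chain(node_log: Iterable[str], chain: Sequence[str]) -> bool:
    """Return True if every phrase in `chain` occurs in `node_log`, in order.

    Other log lines may appear between the matched ones. A single iterator is
    shared across phrases, so each phrase is searched for only in the lines
    that follow the match of the previous phrase.
    """
    lines = iter(node_log)
    return all(any(phrase in line for line in lines) for phrase in chain)


# Hypothetical log lines and failure chain, for illustration only.
example_log = [
    "boot ok",
    "hypothetical: link degraded",
    "unrelated message",
    "hypothetical: heartbeat lost",
]
example_chain = ["link degraded", "heartbeat lost"]
raise_alarm = matches_failure_chain(example_log, example_chain)
```

In a real deployment the alarm would also need a predicted lead time, which this sketch does not attempt to estimate.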

Apart from proactive live migration, log-based failure analysis can also help in making decisions about checkpoint data placement. An application’s efficiency on an HPC system is severely impacted by I/O performance, which often presents a bottleneck. To alleviate this problem, Burst Buffers (BBs, fast NVMe storage devices) are now used in HPC I/O subsystems as an intermediate fast storage medium for checkpoint data, enabling faster Checkpoint/Restart. BB architectures differ. For example, each of Summit’s compute nodes has 1.6 TB of local NVMe storage attached, whereas Cori’s compute nodes share BBs that are clustered on special nodes. With clustered BB nodes, the checkpoint data can be retrieved even if a compute node fails. The same is not true for local BBs, which become inaccessible upon node failure; hence the data stored on local BBs is asynchronously transferred to the Parallel File System (PFS). Classifying failures as node failures or soft failures therefore helps in making an appropriate decision about the placement of checkpoint data and its recovery. If the failure is a catastrophic node failure, the checkpoint data should be written to the PFS. Otherwise, it can be written to local BBs.

Unpublished working draft. Not for distribution. Conference’17, July 2017, Washington, DC, USA. © 2019 Association for Computing Machinery.

We propose a checkpoint model that takes advantage of modern BB-based I/O subsystems and a failure prediction/analysis model. We also use the adaptive checkpoint model derived from [4] to determine an optimal checkpoint interval that considers proactive fault mitigation rates and makes efficient use of both BBs and the PFS.

2 DESIGN
Our model is derived from the checkpoint model of [4], which decides the optimal checkpoint interval while considering the daily write limit of BBs so that both BBs and the PFS are used optimally. We use this model as our default when no failure is predicted. Our new checkpoint model makes decisions based on the following questions.

• Is a failure predicted? During periods when no failure is predicted, we use the adaptive checkpoint model [4]. This ensures that the application state is saved efficiently using both BBs and the PFS. Checkpoint data is saved to the BBs or to the PFS depending on the BB write limit (see [4]). If BBs are local, checkpoints bleed off to the PFS asynchronously and slowly while computation continues.

• Does the predicted failure have enough lead time? When a failure is predicted, we need enough lead time to perform either a proactive live migration or a safeguard checkpoint.

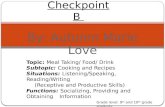

Figure 1: Decision Tree of the Checkpoint Model

• Is the Burst Buffer is local or clustered ? If theHPC system has a clustered Burst Buffer then the checkpointdata can be stored and retrieved from it. In the case of a localBurst Buffer, checkpoint data storage location and recoverystrategy depend on the failure type.

• Is the failure a Node Failure ? With sufficient leadtime for safeguard checkpoint, we need to make sure thatcheckpoint data is stored in BBs first, then bled off to the PFS.Post-failure, the checkpoint data can be recovered from localBB devices on healthy nodes while the new, replacementnode can recover the data from the PFS. However, in the caseof soft failures, the checkpoint data can be stored in BBs andrecovered later from the same.

The above checkpoint model is demonstrated in figure 1 as adecision tree with leaf nodes representing actions taken based onconditions, represented as intermediate nodes, that hold.
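The four questions above can be sketched as a small decision function. This is one possible reading of the text, not the authors' implementation: all names are hypothetical, preferring live migration over a safeguard checkpoint when both fit in the lead time is an assumption, and so is falling back to the default model when the lead time is too short for either.

```python
from enum import Enum


class Action(Enum):
    ADAPTIVE_CHECKPOINT = "default adaptive checkpoint model of [4]"
    LIVE_MIGRATE = "proactive live migration to a healthy node"
    CKPT_CLUSTERED_BB = "safeguard checkpoint to the clustered BB"
    CKPT_LOCAL_BB_THEN_PFS = "safeguard checkpoint to local BB, then bleed off to the PFS"
    CKPT_LOCAL_BB = "safeguard checkpoint to local BB only"


def decide(failure_predicted: bool, lead_time_s: float, migrate_time_s: float,
           checkpoint_time_s: float, bb_is_local: bool, is_node_failure: bool) -> Action:
    """Walk the four questions of the checkpoint model in order."""
    # 1. Is a failure predicted?
    if not failure_predicted:
        return Action.ADAPTIVE_CHECKPOINT
    # 2. Does the predicted failure have enough lead time?
    if lead_time_s >= migrate_time_s:
        return Action.LIVE_MIGRATE          # assumption: migration is preferred
    if lead_time_s < checkpoint_time_s:
        return Action.ADAPTIVE_CHECKPOINT   # assumption: no time to act proactively
    # 3. Is the Burst Buffer local or clustered?
    if not bb_is_local:
        return Action.CKPT_CLUSTERED_BB
    # 4. Is the failure a node failure?
    if is_node_failure:
        return Action.CKPT_LOCAL_BB_THEN_PFS
    return Action.CKPT_LOCAL_BB
```

For instance, a predicted node failure on a system with local BBs, with enough lead time for a checkpoint but not for a migration, yields `Action.CKPT_LOCAL_BB_THEN_PFS`.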

3 EVALUATION
We evaluate our model by the percentage reduction in checkpoint frequency and in wasted computation on a Summit-like HPC system. First, we analyzed six months of logs from modern HPC systems (Cray XC30 and Cray XC40) to find instances of common sequences of log phrases that may lead to failure, categorized them as soft or node failures, and measured their mean lead time. We used the failure sequences from [1, 2] to extract the possible failure instances and treat them as failures in our simulation. We found that failures whose lead time exceeds the time required for live migration on Summit account for close to 44% of all failure instances. We make two assumptions. First, the DRAM size is the maximum amount of data that must be transferred to migrate an application. Second, even though the analyzed logs come from only a few HPC systems, we expect failures on other HPC systems to have a similar lead time distribution.
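The lead-time check described above can be sketched as follows, under the stated assumption that a migration moves at most one node's DRAM. The function names and the numeric values in the usage lines are hypothetical placeholders, not measurements from the analyzed logs.

```python
from typing import Sequence


def migration_time_s(dram_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on migration time: the whole DRAM is transferred once."""
    return dram_bytes / bandwidth_bytes_per_s


def migratable_fraction(lead_times_s: Sequence[float], required_s: float) -> float:
    """Fraction of failure instances whose lead time exceeds the required time."""
    if not lead_times_s:
        return 0.0
    return sum(t > required_s for t in lead_times_s) / len(lead_times_s)


# Illustrative numbers only: 100 units of DRAM over a link moving 10 units/s.
required = migration_time_s(dram_bytes=100.0, bandwidth_bytes_per_s=10.0)
fraction = migratable_fraction([5.0, 10.0, 20.0, 40.0], required)
```

Applied to the measured lead times and a Summit-like node configuration, a computation of this kind is what would produce a figure such as the 44% reported above.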

Our simulation runs real-world scientific applications such as CHIMERA, XGC, S3D, and VULCAN on a Summit-like supercomputer together with our Checkpoint/Restart solution. During the simulation, we measure the percentage of computation hours saved due to the failure prediction and analysis model. The gains from avoiding wasted computation and reducing the checkpoint effort range between 22% and 97%. With 44% of the failures avoidable through proactive live migration, the checkpoint interval in [4] can be increased by 33% as per [5]. This reduces the checkpoint writes to BBs by ≈ 29% and increases the durability of BBs.
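One way to see where an increase of about 33% can come from is Young's first-order approximation [5], which sets the checkpoint interval to sqrt(2 · C · M) for checkpoint cost C and mean time between failures M. The sketch below assumes that avoiding a fraction p of failures stretches the effective M by a factor of 1/(1 − p); that assumption and the function names are ours, and the sketch does not reproduce the ≈ 29% write reduction, which depends on the model of [4].

```python
import math


def young_interval(checkpoint_cost_s: float, mtbf_s: float) -> float:
    """Young's first-order optimum checkpoint interval: sqrt(2 * C * M)."""
    return math.sqrt(2.0 * checkpoint_cost_s * mtbf_s)


def interval_growth(avoided_fraction: float) -> float:
    """Factor by which the interval grows if a fraction of failures is avoided.

    Assumes the effective MTBF scales by 1 / (1 - avoided_fraction), so the
    interval scales by the square root of that factor.
    """
    return 1.0 / math.sqrt(1.0 - avoided_fraction)


growth = interval_growth(0.44)  # roughly 1.336, i.e. an increase of about 33%
```

The growth factor is independent of the checkpoint cost and of the baseline MTBF, since both cancel in the ratio of the two intervals.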

4 FUTURE WORK
In the future, we aim to integrate I/O performance prediction models into the checkpoint model to improve application efficiency for failures that cannot be predicted with sufficient lead time.

5 CONCLUSION
In this work, we build a checkpoint model that takes into account the modern design of the I/O subsystem of large-scale HPC systems while being driven by a failure prediction model. This ensures that checkpoint data placement is efficient and that the data is available for recovery upon failure. Failure prediction with sufficient lead time for live migration can reduce checkpoint writes by ≈ 29%. We also demonstrate a 22% to 97% decrease in computation wastage due to failure prediction and analysis.

ACKNOWLEDGMENTS
This research was supported in part by NSF grants 1525609, 1813004, and an appointment to the Oak Ridge National Laboratory ASTRO Program, sponsored by the U.S. Department of Energy and administered by the Oak Ridge Institute for Science and Education.

This research used resources of the Oak Ridge Leadership Computing Facility at the Oak Ridge National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC05-00OR22725.

REFERENCES
[1] Anwesha Das, Frank Mueller, Paul Hargrove, Eric Roman, and Scott Baden. 2018. Doomsday: Predicting Which Node Will Fail when on Supercomputers. In Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC ’18). IEEE Press, Piscataway, NJ, USA, Article 9, 14 pages. https://doi.org/10.1109/SC.2018.00012

[2] Anwesha Das, Frank Mueller, Charles Siegel, and Abhinav Vishnu. 2018. Desh: Deep Learning for System Health Prediction of Lead Times to Failure in HPC. In Proceedings of the 27th International Symposium on High-Performance Parallel and Distributed Computing (HPDC ’18). ACM, New York, NY, USA, 40–51. https://doi.org/10.1145/3208040.3208051

[3] Ana Gainaru, Franck Cappello, Marc Snir, and William T Kramer. 2013. Failure prediction for HPC systems and applications: Current situation and open issues. International Journal of High Performance Computing Applications 27, 3 (Aug. 2013), 273–282. https://doi.org/10.1177/1094342013488258

[4] Lipeng Wan, Qing Cao, Feiyi Wang, and Sarp Oral. 2017. Optimizing Checkpoint Data Placement with Guaranteed Burst Buffer Endurance in Large-scale Hierarchical Storage Systems. J. Parallel Distrib. Comput. 100, C (Feb. 2017), 16–29. https://doi.org/10.1016/j.jpdc.2016.10.002

[5] John W. Young. 1974. A First Order Approximation to the Optimum Checkpoint Interval. Commun. ACM 17, 9 (Sept. 1974), 530–531. https://doi.org/10.1145/361147.361115

2019-10-16 19:05. Page 2 of 1–2.