A Non-Parametric Test to Detect Data-Copying in Generative Models

Casey Meehan, Kamalika Chaudhuri, Sanjoy Dasgupta

University of California, San Diego

Abstract

Detecting overfitting in generative models is an important challenge in machine learning. In this work, we formalize a form of overfitting that we call data-copying, where the generative model memorizes and outputs training samples or small variations thereof. We provide a three-sample non-parametric test for detecting data-copying that uses the training set, a separate sample from the target distribution, and a generated sample from the model, and study the performance of our test on several canonical models and datasets.

1 Introduction

Overfitting is a basic stumbling block of any learning process. While it has been studied in great detail in the context of supervised learning, it has received much less attention in the unsupervised setting, despite being just as much of a problem.

To start with a simple example, consider a classical kernel density estimator (KDE), which, given data $x_1, \ldots, x_n \in \mathbb{R}^d$, constructs a distribution over $\mathbb{R}^d$ by placing a Gaussian of width $\sigma > 0$ at each of these points, yielding the density

$$q_\sigma(x) = \frac{1}{(2\pi)^{d/2} \sigma^d n} \sum_{i=1}^{n} \exp\left(-\frac{\|x - x_i\|^2}{2\sigma^2}\right). \tag{1}$$

The only parameter is the scalar $\sigma$. Setting it too small makes $q_\sigma(x)$ too concentrated around the given points: a clear case of overfitting (see Appendix Figure 6). This cannot be avoided by choosing the $\sigma$ that maximizes the log-likelihood on the training data, since in the limit $\sigma \to 0$ this likelihood goes to $\infty$.
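The divergence of the training likelihood can be seen numerically. The following is a minimal sketch, assuming a toy two-dimensional standard normal as the data distribution and hypothetical helper names (`kde_log_density`, `mean_log_likelihood`); it evaluates the log of the density in Equation (1) on the training points themselves and on a held-out sample for shrinking $\sigma$.

```python
import math
import random

def kde_log_density(x, train, sigma):
    """Log of the Gaussian KDE density q_sigma(x) from Equation (1)."""
    d, n = len(x), len(train)
    # Exponents -||x - x_i||^2 / (2 sigma^2), one per training point.
    exps = [-sum((a - b) ** 2 for a, b in zip(x, xi)) / (2 * sigma ** 2)
            for xi in train]
    # log-sum-exp keeps the computation stable for very small sigma.
    top = max(exps)
    log_sum = top + math.log(sum(math.exp(e - top) for e in exps))
    log_norm = (d / 2) * math.log(2 * math.pi) + d * math.log(sigma) + math.log(n)
    return log_sum - log_norm

def mean_log_likelihood(points, train, sigma):
    """Average log-likelihood of `points` under the KDE built on `train`."""
    return sum(kde_log_density(x, train, sigma) for x in points) / len(points)

rng = random.Random(0)
# Assumed toy data: 2-D standard normal samples (not from the paper).
train = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
held_out = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]

for sigma in (1.0, 0.1, 0.01, 0.001):
    print(sigma,
          mean_log_likelihood(train, train, sigma),
          mean_log_likelihood(held_out, train, sigma))
```

In this sketch the training log-likelihood keeps growing as $\sigma$ shrinks, because each training point sits exactly on one kernel centre, while the held-out log-likelihood eventually falls sharply.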

Proceedings of the 23rd International Conference on Artificial Intelligence and Statistics (AISTATS) 2020, Palermo, Italy. PMLR: Volume 108. Copyright 2020 by the author(s).

The classical solution is to find a parameter $\sigma$ that has a low generalization gap, that is, a low gap between the training log-likelihood and the log-likelihood on a held-out validation set. This method, however, often does not apply to the more complex generative models that have emerged over the past decade or so, such as Variational Autoencoders (VAEs) (Kingma and Welling, 2013) and Generative Adversarial Networks (GANs) (Goodfellow et al., 2014). These models easily involve millions of parameters, and hence overfitting is a serious concern. Yet a major challenge in evaluating overfitting is that these models do not offer exact, tractable likelihoods. VAEs can tractably provide a log-likelihood lower bound, while GANs have no accompanying density estimate at all. Thus any method that can assess these generative models must be based only on the samples produced.

A body of prior work has provided tests for evaluating generative models based on samples drawn from them (Salimans et al., 2016; Sajjadi et al., 2018; Wu et al., 2017; Heusel et al., 2017); however, the vast majority of these tests focus on ‘mode dropping’ and ‘mode collapse’: the tendency for a generative model to either merge or delete high-density modes of the true distribution. A generative model that simply reproduces the training set or minor variations thereof will pass most of these tests.

In contrast, this work formalizes and investigates a type of overfitting that we call ‘data-copying’: the propensity of a generative model to recreate minute variations of a subset of training examples it has seen, rather than represent the true diversity of the data distribution. An example is shown in Figure 1b; in the top region of the instance space, the generative model data-copies, or creates samples that are very close to the training samples; meanwhile, in the bottom region, it underfits. To detect this, we introduce a test that relies on three independent samples: the original training sample used to produce the generative model; a separate (held-out) test sample from the underlying distribution; and a synthetic sample drawn from the generator.

Our key insight is that an overfit generative modelwould produce samples that are too close to the train-

arX

iv:2

004.

0567

5v1

[cs

.LG

] 1

2 A

pr 2

020

A Non-Parametric Test to Detect Data-Copying in Generative Models

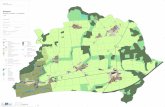

(a) Illustration ofover-/under-representation

Training sample: ×, Generatedsample: •

(b) Illustration ofdata-copying/underfitting

Training sample: ×, Generatedsample: •

(c) VAE copying/underfitting onMNIST

top: ZU = −8.54, bottom:ZU = +3.30

Figure 1: Comparison of data-copying with over/under representation. Each image depicts a single instance space partitioned into tworegions. Illustration (a) depicts an over-represented region (top) and under-represented region (bottom). This is the kind of overfittingevaluated by methods like FID score and Precision and Recall. Illustration (b) depicts a data-copied region (top) and underfit region(bottom). This is the type of overfitting focused on in this work. Figure (c) shows VAE-generated and training samples from a data-copied(top) and underfit (bottom) region of the MNIST instance space. In each 10-image strip, the bottom row provides random generated samplesfrom the region and the top row shows their training nearest neighbors. Samples in the bottom region are on average further to theirtraining nearest neighbor than held-out test samples in the region, and samples in the top region are closer, and thus ‘copying’ (computedin embedded space, see Experiments section).

ing samples – closer on average than an independentlydrawn test sample from the same distribution. Thus,if a suitable distance function is available, then we cantest for data-copying by testing whether the distancesto the closest point in the training sample are on aver-age smaller for the generated sample than for the testsample.

A further complication is that modern generative models tend to behave differently in different regions of space; a configuration as in Figure 1b, for example, could cause a global test to fail. To address this, we use ideas from the design of non-parametric methods. We divide the instance space into cells, conduct our test separately in each cell, and then combine the results to get a sense of the average degree of data-copying.

Finally, we explore our test experimentally on a variety of illustrative data sets and generative models. Our results demonstrate that, given enough samples, our test can successfully detect data-copying in a broad range of settings.

1.1 Related work

There has been a large body of prior work on the evaluation of generative models (Salimans et al., 2016; Lopez-Paz and Oquab, 2016; Richardson and Weiss, 2018; Sajjadi et al., 2018; Xu et al., 2018; Wu et al., 2017). Most are geared to detect some form of mode-collapse or mode-dropping: the tendency to either merge or delete high-density regions of the training data. Consequently, they fail to detect even the simplest case of extreme data-copying, where a generative model memorizes and exactly reproduces a bootstrap sample from the training set. We discuss below a few such canonical tests.

To date there is a wealth of techniques for evaluating whether a model mode-drops or mode-collapses. Tests like the popular Inception Score (IS), Fréchet Inception Distance (FID) (Heusel et al., 2017), Precision and Recall test (Sajjadi et al., 2018), and extensions thereof (Kynkäänniemi et al., 2019; Che et al., 2016) all work by embedding samples using the features of a discriminative network such as ‘InceptionV3’ and checking whether the training and generated samples are similar in aggregate. The hypothesis-testing binning method proposed by Richardson and Weiss (2018) also compares aggregate training and generated samples, but without the embedding step. The parametric Kernel MMD method proposed by Sutherland et al. (2016) uses a carefully selected kernel to estimate the distribution of both the generated and training samples and reports the maximum mean discrepancy between the two. All these tests, however, reward a generative model that only produces slight variations of the training set, and do not successfully detect even the most egregious forms of data-copying.

A test that can detect some forms of data-copying is the Two-Sample Nearest Neighbor, a non-parametric test proposed by Lopez-Paz and Oquab (2016). Their method groups a training and generated sample of equal cardinality together, with training points labeled ‘1’ and generated points labeled ‘0’, and then reports the Leave-One-Out (LOO) Nearest-Neighbor (NN) accuracy of predicting ‘1’s and ‘0’s. Two values are then reported, as discussed by Xu et al. (2018): the leave-one-out accuracy of the training points, and the leave-one-out accuracy of the generated points. An ideal generative model should produce an accuracy of 0.5 for each. More often, a mode-collapsing generative model will leave the training accuracy low and generated accuracy high, while a generative model that exactly reproduces the entire training set should produce zero accuracy for both. Unlike this method, our test not only detects exact data-copying, which is unlikely, but estimates whether a given model generates samples closer to the training set than it should, as determined by a held-out test set.
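The two leave-one-out accuracies described above can be sketched with a brute-force nearest-neighbor search. This is an illustrative sketch only, assuming squared Euclidean distance and a hypothetical function name `loo_nn_accuracies`; it is not the implementation used by Lopez-Paz and Oquab (2016) or Xu et al. (2018).

```python
def sq_dist(x, y):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(x, y))

def loo_nn_accuracies(train, generated):
    """Return (training accuracy, generated accuracy) of a leave-one-out 1-NN classifier.

    Training points carry label 1 and generated points label 0. A point is
    classified correctly when its nearest neighbor among all *other* pooled
    points has the same label.
    """
    pooled = [(x, 1) for x in train] + [(x, 0) for x in generated]
    correct = {1: 0, 0: 0}
    for i, (x, label) in enumerate(pooled):
        best_j, best_d = None, float("inf")
        for j, (y, _) in enumerate(pooled):
            if j == i:
                continue  # leave the query point itself out
            d = sq_dist(x, y)
            if d < best_d:
                best_j, best_d = j, d
        if pooled[best_j][1] == label:
            correct[label] += 1
    return correct[1] / len(train), correct[0] / len(generated)

# Toy check: well-separated training points and an exact copy of them.
train = [(10.0 * i, 0.0) for i in range(20)]
print(loo_nn_accuracies(train, list(train)))
```

With an exact copy, every point's nearest neighbor is its duplicate from the other sample, so both accuracies are zero, matching the behavior described in the text.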

The concept of data-copying has also been explored by Xu et al. (2018) (where it is called ‘memorization’) for a variety of generative models and several of the above two-sample evaluation tests. Their results indicate that, out of a variety of popular tests, only the two-sample nearest neighbor test is able to capture instances of extreme data-copying.

Bounliphone et al. (2016) explore three-sample testing, but for comparing the performance of different models, not for detecting overfitting. Esteban et al. (2017) use the three-sample test proposed by Bounliphone et al. (2016) for detecting data-copying; unlike ours, their test is global in nature.

Finally, other works concurrent with ours have explored parametric approaches to rooting out data-copying. A recent work by Gulrajani et al. (2020) suggests that, given a large enough sample from the model, Neural Network Divergences are sensitive to data-copying. In a slightly different vein, a recent work by Webster et al. (2019) investigates whether latent-parameter models memorize training data by learning the reverse mapping from image to latent code. The present work departs from those by offering a probabilistically motivated non-parametric test that is entirely model agnostic.

2 Preliminaries

We begin by introducing some notation and formalizing the definitions of overfitting. Let $\mathcal{X}$ denote an instance space in which data points lie, and $P$ an unknown underlying distribution on this space. A training set $T$ is drawn from $P$ and is used to build a generative model $Q$. We then wish to assess whether $Q$ is the result of overfitting: that is, whether $Q$ produces samples that are too close to the training data. To help ascertain this, we are able to draw two additional samples:

• A fresh sample of $n$ points from $P$; call this $P_n$.

• A sample of $m$ points from $Q$; call this $Q_m$.

As illustrated in Figures 1a and 1b, a generative model can overfit locally in a region $C \subseteq \mathcal{X}$. To characterize this, for any distribution $D$ on $\mathcal{X}$, we use $D|_C$ to denote its restriction to the region $C$, that is,

$$D|_C(A) = \frac{D(A \cap C)}{D(C)} \quad \text{for any } A \subseteq \mathcal{X}.$$

2.1 Definitions of Overfitting

We now formalize the notion of data-copying, and illustrate its distinction from other types of overfitting.

Intuitively, data-copying refers to situations where $Q$ is “too close” to the training set $T$; that is, closer to $T$ than the target distribution $P$ happens to be. We make this quantitative by choosing a distance function $d : \mathcal{X} \to \mathbb{R}$ from points in $\mathcal{X}$ to the training set, for instance, $d(x) = \min_{t \in T} \|x - t\|^2$, if $\mathcal{X}$ is a subset of Euclidean space.

Ideally, we desire that $Q$’s expected distance to the training set is the same as that of $P$’s, namely $\mathbb{E}_{X \sim P}[d(X)] = \mathbb{E}_{Y \sim Q}[d(Y)]$. We may rewrite this as follows: given any distribution $D$ over $\mathcal{X}$, define $L(D)$ to be the one-dimensional distribution of $d(X)$ for $X \sim D$. We consider data-copying to have occurred if random draws from $L(P)$ are systematically larger than from $L(Q)$. The above equalized expected distance condition can be rewritten as

$$\mathbb{E}_{Y \sim Q}[d(Y)] - \mathbb{E}_{X \sim P}[d(X)] = \mathbb{E}_{A \sim L(P),\, B \sim L(Q)}[B - A] = 0 \tag{2}$$

However, we are less interested in how large the difference is, and more in how often $B$ is larger than $A$. Let

$$\Delta_T(P, Q) = \Pr\left(B > A \mid B \sim L(Q),\ A \sim L(P)\right)$$

where $0 \le \Delta_T(P, Q) \le 1$ represents how ‘far’ $Q$ is from training sample $T$ as compared to true distribution $P$. A more interpretable yet equally meaningful condition is

$$\Delta_T(P, Q) = \mathbb{E}_{A \sim L(P),\, B \sim L(Q)}\left[\mathbb{1}_{B > A}\right] \approx \frac{1}{2}$$

which guarantees (2) if densities $L(P)$ and $L(Q)$ have the same shape, but could plausibly be mean-shifted.
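As an illustration of the quantity $\Delta_T(P, Q)$, the sketch below estimates it empirically by counting pairwise comparisons between distances-to-training-set. The data distribution (a 2-D standard normal), the two toy "models", and the names `nn_sq_dist` and `delta_hat` are assumptions made for this example only.

```python
import random

def nn_sq_dist(x, train):
    """d(x): squared Euclidean distance from x to its nearest training point."""
    return min(sum((a - b) ** 2 for a, b in zip(x, t)) for t in train)

def delta_hat(test_dists, gen_dists):
    """Fraction of (A_i, B_j) pairs with B_j > A_i: an empirical Delta_T(P, Q)."""
    wins = sum(1 for a in test_dists for b in gen_dists if b > a)
    return wins / (len(test_dists) * len(gen_dists))

rng = random.Random(0)

def draw(k):
    # Assumed target distribution P: a 2-D standard normal.
    return [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(k)]

train, test = draw(200), draw(200)
fresh = draw(200)  # a "model" that samples P exactly
# A "model" that emits near-copies of the training points.
copied = [(t[0] + rng.gauss(0, 1e-3), t[1] + rng.gauss(0, 1e-3)) for t in train]

A = [nn_sq_dist(x, train) for x in test]
delta_fresh = delta_hat(A, [nn_sq_dist(x, train) for x in fresh])
delta_copied = delta_hat(A, [nn_sq_dist(x, train) for x in copied])
print(delta_fresh, delta_copied)
```

Under these assumptions the sampler that matches $P$ gives an estimate near one half, while the near-copying sampler gives an estimate close to zero.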

If $\Delta_T(P, Q) \ll \frac{1}{2}$, $Q$ is data-copying training set $T$, since samples from $Q$ are systematically closer to $T$ than are samples from $P$. However, even if $\Delta_T(P, Q) \ge \frac{1}{2}$, $Q$ may still be data-copying. As exhibited in Figures 1b and 1c, a model $Q$ may data-copy in one region and underfit in others. In this case, $Q$ may be further from $T$ than is $P$ globally, but much closer to $T$ locally. As such, we consider $Q$ to be data-copying if it is overfit in a subset $C \subseteq \mathcal{X}$:


Definition 2.1 (Data-Copying). A generative model $Q$ is data-copying training set $T$ if, in some region $C \subseteq \mathcal{X}$, it is systematically closer to $T$ by distance metric $d : \mathcal{X} \to \mathbb{R}$ than are samples from $P$. Specifically, if

$$\Delta_T(P|_C, Q|_C) < \frac{1}{2}$$

Observe that data-copying is orthogonal to the type of overfitting addressed by many previous works (Heusel et al., 2017; Sajjadi et al., 2018), which we call ‘over-representation’. There, $Q$ overemphasizes some region of the instance space $C \subseteq \mathcal{X}$, often a region of high density in the training set $T$. For the sake of completeness, we provide a formal definition below.

Definition 2.2 (Over-Representation). A generative model $Q$ is over-representing $P$ in some region $C \subseteq \mathcal{X}$, if the probability of drawing $Y \sim Q$ is much greater than it is of drawing $X \sim P$. Specifically, if

$$Q(C) - P(C) \gg 0$$

Observe that it is possible to over-represent without data-copying and vice versa. For example, if $P$ is an equally weighted mixture of two Gaussians, and $Q$ perfectly models one of them, then $Q$ is over-representing without data-copying. On the other hand, if $Q$ outputs a bootstrap sample of the training set $T$, then it is data-copying without over-representing. The focus of the rest of this work is on data-copying.

3 A Test For Data-Copying

Having provided a formal definition, we next propose a hypothesis test to detect data-copying.

3.1 A Global Test

We introduce our data-copying test in the global setting, when $C = \mathcal{X}$. Our null hypothesis $H_0$ suggests that $Q$ may equal $P$:

$$H_0 : \Delta_T(P, Q) = \frac{1}{2} \tag{3}$$

There are well-established non-parametric tests for this hypothesis, such as the Mann-Whitney U test (Mann and Whitney, 1947). Let $A_i \sim L(P_n)$, $B_j \sim L(Q_m)$ be samples of $L(P)$, $L(Q)$ given by $P_n$, $Q_m$ and their distances $d(X)$ to training set $T$. The $U$ statistic estimates the probability in Equation 3 by measuring the number of all $mn$ pairwise comparisons in which $B_j > A_i$. An efficient and simple method to gather and interpret this test is as follows:

1. Sort the $n + m$ values $L(P_n) \cup L(Q_m)$ such that each instance $l_i \in L(P_n)$, $l_j \in L(Q_m)$ has rank $R(l_i)$, $R(l_j)$, starting from rank 1, and ending with rank $n + m$. $L(P_n)$, $L(Q_m)$ have no tied ranks with probability 1 assuming their distributions are continuous.

2. Calculate the rank-sum for $L(Q_m)$, denoted $R_{Q_m}$, and its $U$ score, denoted $U_{Q_m}$:

$$R_{Q_m} = \sum_{l_j \in L(Q_m)} R(l_j), \qquad U_{Q_m} = R_{Q_m} - \frac{m(m+1)}{2}$$

Consequently, $U_{Q_m} = \sum_{ij} \mathbb{1}_{B_j > A_i}$.

3. Under $H_0$, $U_{Q_m}$ is approximately normally distributed with $> 20$ samples in both $L(Q_m)$ and $L(P_n)$, allowing for the following z-scored statistic

$$Z_U\left(L(P_n), L(Q_m); T\right) = \frac{U_{Q_m} - \mu_U}{\sigma_U}, \qquad \mu_U = \frac{mn}{2}, \qquad \sigma_U = \sqrt{\frac{mn(m+n+1)}{12}}$$

$Z_U$ provides us a data-copying statistic with normalized expectation and variance under $H_0$. $Z_U \ll 0$ implies data-copying, $Z_U \gg 0$ implies underfitting. $Z_U < -5$ implies that, if $H_0$ holds, the observed value is as unlikely as sampling a value below $-5$ from a standard normal.
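The three steps above can be sketched in a few lines. This is a minimal illustration, assuming the inputs are already the distances-to-training-set for the test and generated samples and that there are no ties; the function name `z_u` is hypothetical and not taken from the authors' code.

```python
import math
import random

def z_u(test_dists, gen_dists):
    """Z-scored Mann-Whitney U statistic of L(Q_m) against L(P_n).

    test_dists: distances d(x) for the n held-out test points (the A_i).
    gen_dists:  distances d(x) for the m generated points (the B_j).
    Assumes no ties, as in step 1.
    """
    n, m = len(test_dists), len(gen_dists)
    # Step 1: pool and sort, remembering which sample each value came from.
    pooled = sorted([(v, 0) for v in test_dists] + [(v, 1) for v in gen_dists])
    # Step 2: rank-sum of the generated sample (ranks start at 1), then U.
    rank_sum_q = sum(rank for rank, (_, is_gen) in enumerate(pooled, start=1) if is_gen)
    u_q = rank_sum_q - m * (m + 1) / 2
    # Step 3: z-score with the null mean and standard deviation.
    mu_u = m * n / 2
    sigma_u = math.sqrt(m * n * (m + n + 1) / 12)
    return (u_q - mu_u) / sigma_u

rng = random.Random(0)
a = [rng.random() for _ in range(30)]       # stand-in test distances
b = [0.1 * rng.random() for _ in range(25)]  # stand-in generated distances, mostly smaller
print(z_u(a, b))
```

Because the generated distances in this toy input are mostly smaller than the test distances, the printed statistic is negative, which is the direction the text associates with data-copying.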

Observe that this test is completely model agnostic and uses no estimate of likelihood. It only requires a meaningful distance metric, which is becoming common practice in the evaluation of mode-collapse and -dropping (Heusel et al., 2017; Sajjadi et al., 2018) as well.

3.2 Handling Heterogeneity

As described in Section 2.1, the above global test can be fooled by generators $Q$ which are very close to the training data in some regions of the instance space (overfitting) but very far from the training data in others (poor modeling).

We handle this by introducing a local version of our test. Let $\Pi$ denote any partition of the instance space $\mathcal{X}$, which can be constructed in any manner. In our experiments, for instance, we run the k-means algorithm on $T$, so that $|\Pi| = k$. As the number of training and test samples grows, we may increase $k$ and thus the instance-space resolution of our test. Letting $L_\pi(D) = L(D|_\pi)$ be the distribution of distances-to-training-set within cell $\pi \in \Pi$, we probe each cell of the partition $\Pi$ individually.

Data Copying. To offer a summary statistic for data copying, we collect the z-scored Mann-Whitney U statistic, $Z_U$, described in Section 3.1 in each cell $\pi$. Let $P_n(\pi) = |\{x : x \in P_n, x \in \pi\}|/n$ denote the fraction of $P_n$ points lying in cell $\pi$, and similarly for $Q_m(\pi)$. The $Z_U$ test for cell $\pi$ and training set $T$ will then be denoted as $Z_U\left(L_\pi(P_n), L_\pi(Q_m); T\right)$, where $L_\pi(P_n) = \{d(x) : x \in P_n, x \in \pi\}$ and similarly for $L_\pi(Q_m)$. See Figure 1c for examples of these in-cell scores. For stability, we only measure data-copying for those cells significantly represented by $Q$, as determined by a threshold $\tau$. Let $\Pi_\tau$ be the set of all cells in the partition $\Pi$ for which $Q_m(\pi) \ge \tau$. Then, our summary statistic for data copying averages across all cells represented by $Q$:

$$C_T(P_n, Q_m) := \frac{\sum_{\pi \in \Pi_\tau} P_n(\pi)\, Z_U\left(L_\pi(P_n), L_\pi(Q_m); T\right)}{\sum_{\pi \in \Pi_\tau} P_n(\pi)}$$
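The cell-wise average can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: it assumes each point already carries a cell label (for example, from k-means on $T$), uses hypothetical names `z_u` and `c_t`, and skips cells with no test points, which carry zero weight $P_n(\pi)$ in the formula anyway.

```python
import math
from collections import defaultdict

def z_u(test_dists, gen_dists):
    """Z-scored Mann-Whitney U statistic (no ties assumed)."""
    n, m = len(test_dists), len(gen_dists)
    pooled = sorted([(v, 0) for v in test_dists] + [(v, 1) for v in gen_dists])
    rank_sum_q = sum(r for r, (_, g) in enumerate(pooled, start=1) if g)
    u_q = rank_sum_q - m * (m + 1) / 2
    return (u_q - m * n / 2) / math.sqrt(m * n * (m + n + 1) / 12)

def c_t(test_dists, test_cells, gen_dists, gen_cells, tau):
    """Weighted average of per-cell Z_U over cells with Q_m(cell) >= tau.

    *_dists: distances to the training set; *_cells: cell label of each point.
    Returns NaN when no cell qualifies.
    """
    n, m = len(test_dists), len(gen_dists)
    test_by_cell, gen_by_cell = defaultdict(list), defaultdict(list)
    for d, c in zip(test_dists, test_cells):
        test_by_cell[c].append(d)
    for d, c in zip(gen_dists, gen_cells):
        gen_by_cell[c].append(d)
    num = den = 0.0
    for cell, gen_d in gen_by_cell.items():
        test_d = test_by_cell.get(cell, [])
        if len(gen_d) / m < tau or not test_d:
            continue  # under-represented by Q, or zero weight P_n(cell)
        weight = len(test_d) / n  # P_n(cell)
        num += weight * z_u(test_d, gen_d)
        den += weight
    return num / den if den > 0 else float("nan")

# Toy input: cell 0 is "copied" (generated distances tiny), cell 1 is "underfit".
test_d = [10.0 + i for i in range(30)] + [0.5 + i for i in range(30)]
gen_d = [0.01 * i for i in range(30)] + [100.0 + i for i in range(30)]
cells = [0] * 30 + [1] * 30
print(c_t(test_d, cells, gen_d, cells, tau=0.2))
print(c_t(test_d[:30], cells[:30], gen_d[:30], cells[:30], tau=0.2))
```

The toy input is built so that the two cells have equal weight and opposite per-cell scores; it shows that $C_T$ is an average, so inspecting the per-cell $Z_U$ values alongside it can be informative.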

Over-Representation. The above test will not catch a model that heavily over- or under-represents cells. For completeness, we next provide a simple representation test that is essentially used by Richardson and Weiss (2018), now with an independent test set instead of the training set.

With $n, m \ge 20$ in cell $\pi$, we may treat $Q_m(\pi)$, $P_n(\pi)$ as Gaussian random variables. We then check the null hypothesis $H_0 : 0 = P(\pi) - Q(\pi)$. Assuming this null hypothesis, a simple z-test is:

$$Z_\pi = \frac{Q_m(\pi) - P_n(\pi)}{\sqrt{\hat{p}(1 - \hat{p})\left(\frac{1}{n} + \frac{1}{m}\right)}}$$

where $\hat{p} = \frac{n P_n(\pi) + m Q_m(\pi)}{n + m}$. We then report two values for a significance level $s = 0.05$: the number of significantly different cells (‘bins’) with $Z_\pi > s$ (NDB over-representing), and the number with $Z_\pi < -s$ (NDB under-representing).
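The per-cell statistic $Z_\pi$ is a pooled two-proportion z-score and can be computed directly from counts. The sketch below is illustrative; the function name `z_pi` and the example counts are assumptions, not values from the paper.

```python
import math

def z_pi(test_in_cell, n, gen_in_cell, m):
    """Two-proportion z-score for one cell.

    test_in_cell / n is P_n(pi); gen_in_cell / m is Q_m(pi).
    Positive values indicate the model places more mass in the cell
    than the held-out test sample does.
    """
    p_n = test_in_cell / n
    q_m = gen_in_cell / m
    # Pooled proportion p_hat = (n P_n(pi) + m Q_m(pi)) / (n + m).
    p_hat = (n * p_n + m * q_m) / (n + m)
    std_err = math.sqrt(p_hat * (1 - p_hat) * (1 / n + 1 / m))
    return (q_m - p_n) / std_err

# Hypothetical counts: 100 of 1000 test points and 150 of 1000 generated points in the cell.
print(z_pi(100, 1000, 150, 1000))
```

Swapping the two samples flips the sign of the score, so over- and under-representation of a cell are treated symmetrically.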

Together, these summary statistics ($C_T$, NDB-over, NDB-under) detect the ways in which $Q$ broadly represents $P$ without directly copying the training set $T$.

3.3 Performance Guarantees

We next provide some simple guarantees on the performance of the global test statistic $U_{Q_m}$. Guarantees for the average test are more complicated, and are left as a direction for future work.

We begin by showing that when the null hypothesis $H_0$ does not hold, $U_{Q_m}$ has some desirable properties: $\frac{1}{mn} U_{Q_m}$ is a consistent estimator of the quantity of interest, $\Delta_T(P, Q)$:

Theorem 1. For true distribution $P$, model distribution $Q$, and distance metric $d : \mathcal{X} \to \mathbb{R}$, the estimator $\frac{1}{mn} U_{Q_m} \to_P \Delta(P, Q)$ according to the concentration inequality

$$\Pr\left(\left|\frac{1}{mn} U_{Q_m} - \Delta(P, Q)\right| \ge t\right) \le \exp\left(-\frac{2 t^2 mn}{m + n}\right)$$

Furthermore, when the model distribution $Q$ actually matches the true distribution $P$, under modest assumptions we can expect $\frac{1}{mn} U_{Q_m}$ to be near $\frac{1}{2}$:

Theorem 2. If $Q = P$, and the corresponding distance distribution $L(Q) = L(P)$ is non-atomic, then

$$\mathbb{E}\left[\frac{1}{mn} U_{Q_m}\right] = \frac{1}{2} \quad \text{and} \quad \mathbb{E}[Z_U] = 0$$

Proofs are provided in Appendices 7.1 and 7.2.
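To give a feel for the rate in Theorem 1, here is a worked evaluation of the right-hand side of its concentration inequality. The sample sizes and tolerance below are illustrative choices, not values used in the paper.

```latex
% Right-hand side of Theorem 1 with t = 0.05.
% Case m = n = 1000:
\exp\!\left(-\frac{2 t^2 mn}{m+n}\right)
  = \exp\!\left(-\frac{2 \cdot 0.0025 \cdot 10^{6}}{2000}\right)
  = e^{-2.5} \approx 0.082
% Case m = n = 10000:
\exp\!\left(-\frac{2 \cdot 0.0025 \cdot 10^{8}}{20000}\right)
  = e^{-25} \approx 1.4 \times 10^{-11}
```

With equal sample sizes the exponent reduces to $-t^2 n$, so the bound tightens exponentially as both samples grow.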

Additionally, we show that for a Gaussian Kernel Density Estimator, the parameter $\sigma$ that satisfies the condition in Equation 2 is the $\sigma$ corresponding to a maximum likelihood Gaussian KDE model. Recall that a KDE model is described by

$$q_\sigma(x) = \frac{1}{(2\pi)^{k/2} |T| \sigma^k} \sum_{t \in T} \exp\left(-\frac{\|x - t\|^2}{2\sigma^2}\right), \tag{4}$$

where the posterior probability that a random draw $x \sim q_\sigma(x)$ comes from the Gaussian component centered at training point $t$ is

$$Q_\sigma(t \mid x) = \frac{\exp\left(-\|x - t\|^2 / (2\sigma^2)\right)}{\sum_{t' \in T} \exp\left(-\|x - t'\|^2 / (2\sigma^2)\right)}$$

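Lemma 3. For the kernel density estimator (4), the maximum-likelihood choice of $\sigma$, namely the maximizer of $\mathbb{E}_{X \sim P}[\log q_\sigma(X)]$, satisfies

$$\mathbb{E}_{X \sim P}\left[\sum_{t \in T} Q_\sigma(t \mid X) \|X - t\|^2\right] = \mathbb{E}_{Y \sim Q_\sigma}\left[\sum_{t \in T} Q_\sigma(t \mid Y) \|Y - t\|^2\right]$$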

See Appendix 7.3 for proof. Unless $\sigma$ is large, we know that for any given $x \in \mathcal{X}$, $\sum_{t \in T} Q_\sigma(t \mid x) \|x - t\|^2 \approx d(x) = \min_{t \in T} \|x - t\|^2$. So, enforcing that $\mathbb{E}_{X \sim P}[d(X)] = \mathbb{E}_{Y \sim Q}[d(Y)]$, and more loosely that $\mathbb{E}_{A \sim L(P),\, B \sim L(Q)}[\mathbb{1}_{B > A}] = \frac{1}{2}$, provides an excellent non-parametric approach to selecting a Gaussian KDE, and ought to be enforced for any $Q$ attempting to emulate $P$; after all, Theorem 2 points out that effectively any model with $Q = P$ also yields this condition.
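The approximation between the posterior-weighted squared distance and the nearest-neighbor distance can be checked numerically. The sketch below assumes a toy three-point training set and hypothetical function names; it only illustrates how the two quantities agree for small $\sigma$ and separate for large $\sigma$.

```python
import math

def sq_dists(x, train):
    """Squared Euclidean distances from x to every training point."""
    return [sum((a - b) ** 2 for a, b in zip(x, t)) for t in train]

def posterior_weighted_sq_dist(x, train, sigma):
    """sum_t Q_sigma(t | x) * ||x - t||^2 for the Gaussian KDE in Equation (4)."""
    sq = sq_dists(x, train)
    lo = min(sq)
    # Posterior weights are a softmax over -||x - t||^2 / (2 sigma^2);
    # subtracting the minimum keeps the exponentials well scaled.
    w = [math.exp(-(s - lo) / (2 * sigma ** 2)) for s in sq]
    return sum(wi * s for wi, s in zip(w, sq)) / sum(w)

def nn_sq_dist(x, train):
    """d(x) = min_t ||x - t||^2."""
    return min(sq_dists(x, train))

# Assumed toy training set and query point.
train = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
x = (0.1, 0.0)
for sigma in (0.1, 1.0, 10.0):
    print(sigma, posterior_weighted_sq_dist(x, train, sigma), nn_sq_dist(x, train))
```

For small $\sigma$ nearly all posterior mass sits on the closest training point, so the weighted sum collapses to $d(x)$; for large $\sigma$ the weights become nearly uniform and the sum approaches the plain average of squared distances.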

4 Experiments

Having clarified what we mean by data-copying in theory, we turn our attention to data copying by generative models in practice¹. We leave representation test results for the appendix, since this behavior has been well studied in previous works. Specifically, we aim to answer the two following questions:

1. Are the existing tests that measure generative model overfitting able to capture data-copying?

2. As popular generative models range from over- to underfitting, does our test indicate data-copying, and if so, to what degree?

¹ https://github.com/casey-meehan/data-copying

Figure 2 (panels a, b, c, d): Response of four baseline test methods to data-copying of a Gaussian KDE on the ‘moons’ dataset. Only the two-sample NN test (c) is able to detect data-copying KDE models as $\sigma$ moves below $\sigma_{\mathrm{MLE}}$ (depicted as a red dot). The gray trace is proportional to the KDE’s log-likelihood measured on a held-out validation set.

Training, Generated and Test Sets. In all of the following experiments, we select a training dataset $T$ with test split $P_n$, and a generative model $Q$ producing a sample $Q_m$. We perform k-means on $T$ to determine partition $\Pi$, with the objective of having a reasonable population of both $T$ and $P_n$ in each $\pi \in \Pi$. We set threshold $\tau$ such that we are guaranteed to have at least 20 samples in each cell in order to validate the Gaussian assumption of $Z_\pi$, $Z_U$.

4.1 Detecting data-copying

First, we investigate which of the existing generative model tests can detect explicit data-copying.

Dataset and Baselines. For this experiment, we use the simple two-dimensional ‘moons’ dataset, as it affords us limitless training and test samples and requires no feature embedding (see Appendix 7.4.1 for an example).

As baselines, we probe four of the methods described in our Related Work section to see how they react to data-copying: two-sample NN (Lopez-Paz and Oquab, 2016), FID (Heusel et al., 2017), Binning-Based Evaluation (Richardson and Weiss, 2018), and Precision & Recall (Sajjadi et al., 2018). A detailed description of the methods is provided in Appendix 7.4.2. Note that, without an embedding, FID is simply the Fréchet distance between two maximum likelihood normal distributions fit to $T$ and $Q_m$. We use the same size generated and training sample for all methods. Note that the two-sample NN test requires the generated sample size $m$ to be equal to the training sample size $|T|$. When $m < |T|$ (especially for large datasets and computationally burdensome samplers) we use an $m$-size training subsample $\tilde{T}$.

Experimental Methodology. We choose as our generative model $Q$ a Gaussian KDE, as it allows us to force explicit data-copying by setting $\sigma$ very low. As $\sigma \to 0$, $Q$ becomes a bootstrap sampler of the original training set. If a given test method can detect the level of data-copying by $Q$ on $T$, it will provide a different response to a heavily over-fit KDE $Q$ ($\sigma \ll \sigma_{\mathrm{MLE}}$), a well-fit KDE $Q$ ($\sigma \approx \sigma_{\mathrm{MLE}}$), and an underfit KDE $Q$ ($\sigma \gg \sigma_{\mathrm{MLE}}$).

Results. Figure 2 depicts how each baseline method responds to KDE $Q$ models of varying degrees of data-copying, as $Q$ ranges from data-copying ($\sigma = 0.001$) up to heavily underfit ($\sigma = 10$). The Fréchet and Binning methods report effectively the same value for all $\sigma \le \sigma_{\mathrm{MLE}}$, indicating inability to detect data-copying. Similarly, the Precision-Recall curves for different $\sigma$ values are nearly identical for all $\sigma \le \sigma_{\mathrm{MLE}}$, and only change for large $\sigma$.

The two-sample NN test does show a mild change in response as $\sigma$ decreases below $\sigma_{\mathrm{MLE}}$. This makes sense; as points in $Q_m$ become closer to points in $T$, the two-sample NN accuracy should steadily decline. The primary reason it does not drop to zero is the $m$ subsampled training points, $\tilde{T} \subset T$, needed to perform this test. As such, each training point $t \in T$ being copied by generated point $q \in Q_m$ is unlikely to be present in $\tilde{T}$ during the test. This phenomenon is especially pronounced in some of the following settings. However, even when $m = |T|$, this test will not reduce to zero as $\sigma \to 0$, due to the well-known result that a bootstrap sample of $T$ will only include $\approx 1 - 1/e$ of the samples in $T$. Consequently, several training samples will not have a generated sample as nearest neighbor. The $C_T$ test avoids this by specifically finding the training nearest neighbor of each generated sample.
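The bootstrap coverage figure quoted above is easy to reproduce by simulation. This is a small sketch with a hypothetical helper name and an arbitrary sample size, shown only to make the $1 - 1/e$ fraction concrete.

```python
import math
import random

def bootstrap_coverage(n, rng):
    """Fraction of n items that appear at least once in n draws with replacement."""
    return len({rng.randrange(n) for _ in range(n)}) / n

coverage = bootstrap_coverage(20000, random.Random(0))
# Compare the simulated fraction with the limiting value 1 - 1/e.
print(coverage, 1 - 1 / math.e)
```

The remaining fraction of roughly $1/e$ corresponds to training points that are never drawn, which is why some training samples have no generated copy as nearest neighbor even for an exact bootstrap sampler.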

The reason most of these tests fail to detect data-copying is because most existing methods focus on another type of overfitting: mode-collapse and -dropping, wherein entire modes of $P$ are either forgotten or averaged together. However, if a model begins to data-copy, it is definitively overfitting without mode-collapsing.

Figure 3 (panels a, b, c, d, e, f): $C_T(P_n, Q_m)$ vs. NN baseline and generalization gap on moons and MNIST digits datasets. (a,b,c) compare the three methods on the moons dataset. (d,e,f) compare the three methods on MNIST. In both data settings, the $C_T$ statistic is far more sensitive to the data-copying regime $\sigma \ll \sigma_{\mathrm{MLE}}$ than the NN baseline. It is more sensitive to underfitting $\sigma \gg \sigma_{\mathrm{MLE}}$ than the generalization gap test. The red dot denotes $\sigma_{\mathrm{MLE}}$, and the gray trace is proportional to the KDE’s log-likelihood measured on a held-out validation set.

Note that the above four baselines are all two-sample tests that do not use $P_n$ as $C_T$ does. For completeness, we present experiments with an additional, three-sample baseline in Appendix 7.5. Here, we repeat the ‘moons’ dataset experiment with the three-sample kernel MMD test originally proposed by Bounliphone et al. (2016) for generative model selection and later adapted by Esteban et al. (2017) for testing model over-fitting. We observe in Figure 11b that the three-sample kMMD test does not detect data-copying, treating the MLE model similarly to overfit models with $\sigma \ll \sigma_{\mathrm{MLE}}$. See Appendix 7.5 for experimental details.

4.2 Measuring degree of data-copying

We now aim to answer the second question raised at the beginning of this section: does our test statistic $C_T(P_n, Q_m)$ detect and quantify data-copying?

We focus on three generative models: Gaussian KDEs, Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). For these experiments, we consider two baselines in addition to our method: the two-sample NN test, and the likelihood generalization gap where it can be computed or approximated.

4.2.1 KDE-based tests

First we consider Gaussian KDEs. While KDEs do not provide a reliable likelihood in high dimension (Theis et al., 2016), they do have several advantages as a preliminary benchmark: they allow us to directly force data-copying, and can help investigate the practical implications of the theoretical connection between the maximum likelihood KDE and $C_T \approx 0$ as described in Lemma 3. We explore two datasets with Gaussian KDE: the ‘moons’ dataset, and MNIST.

KDEs: ‘moons’ dataset. Here, we repeat the experiment performed in Section 4.1, now including the $C_T$ statistic for comparison. Appendix 7.4.1 provides more experimental details, and examples of the dataset.

Results. Figure 3a depicts how the generalization gap dwindles as KDE $\sigma$ increases. While this test is capable of capturing data-copying, it is insensitive to underfitting and relies on a tractable likelihood. Figures 3b and 3c give a side-by-side depiction of $C_T$ and the two-sample NN test accuracies across a range of KDE $\sigma$ values. Think of $C_T$ values as z-score standard deviations. We see that the $C_T$ statistic in Figure 3b precisely identifies the MLE model when $C_T \approx 0$, and responds sharply to $\sigma$ values above and below $\sigma_{\mathrm{MLE}}$. The baseline in Figure 3c similarly identifies the MLE $Q$ model when training accuracy $\approx 0.5$, but has higher variance and is less sensitive to changes in $\sigma$, especially for over-fit $\sigma \ll \sigma_{\mathrm{MLE}}$. We will see in the next experiment that this test breaks down for more complex datasets when $m \ll |T|$.

Figure 4 (panels a, b, c, d, e): Neural model data-copying: figures (b) and (d) demonstrate the $C_T$ statistic identifying data-copying in an MNIST VAE and ImageNet GAN as they range from heavily over-fit to underfit. (c) and (e) demonstrate the relative insensitivity of the NN baseline to this overfitting, as does figure (a) of the generalization (ELBO) gap method for VAEs. (Note, the markers for (d) apply to the traces of (e).)

KDEs: MNIST Handwritten Digits. We now extend the KDE test performed on the moons dataset to the significantly more complex MNIST handwritten digit dataset (LeCun and Cortes, 2010).

While it would be convenient to directly apply the KDE $\sigma$-sweeping tests discussed in the previous section, there are two primary barriers. The first is that the KDE model relies on $L_2$ norms being perceptually meaningful, which is well understood not to be true in pixel space. The second problem is that of dimensionality: the 784-dimensional space of digits is far too high for a KDE to be even remotely efficient at interpolating the space.

To handle these issues, we first embed each image, $x \in \mathcal{X}$, to a perceptually meaningful 64-dimensional latent code, $z \in \mathcal{Z}$. We achieve this by training a convolutional autoencoder with a VGGnet perceptual loss produced by Zhang et al. (2018) (see Appendix 7.4.3 for more detail). Surely, even in the lower 64-dimensional space, the KDE will suffer somewhat from the curse of dimensionality. We are not promoting this method as a powerful generative model, but rather as an instructive tool for probing a test’s response to data-copying in the image domain. All tests are run in the compressed latent space; Appendix 7.4.3 provides more experimental details.

As discussed briefly in Section 4.1, a limitation of the two-sample NN test is that it requires $m = |T|$. For a large training set like MNIST, it is computationally challenging to generate $|T|$ samples, even with a 64-dimensional KDE. We therefore use a subsampled training set $\tilde{T}$ of size $10{,}000 = m < |T|$ when running the two-sample NN test. The proposed $C_T(P_n, Q_m)$ test has no such restriction on the size of $T$ and $Q_m$.

Results. The likelihood generalization gap is depicted in Figure 3d, repeating the trend seen with the ‘moons’ dataset.

Figure 3e shows how $C_T(P_n, Q_m)$ reacts decisively to over- and underfitting. It falsely determines the MLE $\sigma$ value as slightly over-fit. However, the region where $C_T$ transitions from over- to underfit (say $-13 \le C_T \le 13$) is relatively tight and includes the MLE $\sigma$.

Meanwhile, Figure 3f shows how, with the generated sample smaller than the training sample ($m \ll |T|$), the two-sample NN baseline provides no meaningful estimate of data-copying. In fact, the most data-copying models with low $\sigma$ achieve the best scores, closest to 0.5. Again, we are forced to use the $m$-subsampled $\tilde{T} \subset T$, and most instances of data copying are completely missed.

These results are promising, and demonstrate the reliability of this hypothesis testing approach to probing for data-copying across different data domains. In the next section, we explore how these tests perform on more sophisticated, non-KDE models.

4.2.2 Variational Autoencoders

Gaussian KDEs may have nice theoretical properties, but are relatively ineffective in high-dimensional settings, precluding domains like images. As such, we also run our experiments on more practical neural models trained on higher-dimensional image datasets (MNIST and ImageNet), with the goal of observing whether the $C_T$ statistic indicates data-copying as these models range from over- to underfit. The first neural model we consider is a Variational Autoencoder (VAE) trained on the MNIST handwritten images dataset.

Experimental Methodology. Unlike KDEs, VAEs do not have a single parameter that controls the degree of overfitting. Instead, similar to Bozkurt et al. (2018), we vary model complexity by increasing the width (neurons per layer) in a three-layer VAE (see Appendix 7.4.3 for details), where higher width means a model of higher complexity. As an embedding, we pass all samples through the convolutional autoencoder of Section 4.2.1, and collect statistics in this 64-dimensional space. Likelihood is not available for VAEs; instead we compute each model's ELBO on a 10,000-sample held-out validation set, and use the ELBO approximation to the generalization gap.

We note again that for the NN accuracy baseline, we use a subsampled training set $\tilde{T}$, as with the KDE-based MNIST tests, where $m = |\tilde{T}| = 10{,}000$.

Results. Figures 4b and 4c compare the $C_T$ statistic to the NN accuracy baseline. $C_T$ behaves as it did in the previous sections: more complex models overfit, forcing $C_T \ll 0$, and less complex models underfit, forcing $C_T \gg 0$. We note that the range of $C_T$ values is far less dramatic, which is to be expected since the KDEs were forced to explicitly data-copy. We observe that the ELBO spikes for models with $C_T$ near 0. Figure 4a shows the ELBO approximation of the generalization gap as the latent dimension (and number of units in each layer) is decreased. This method is entirely insensitive to over- and underfit models. This may be because the ELBO is only a lower bound, not the actual likelihood.

The NN baseline in Figure 4c is less interpretable, and fails to capture the overfitting trend as $C_T$ does. While all three test accuracies still follow the upward-sloping trend of Figure 3c, they do not indicate where the highest validation set ELBO is. Furthermore, the NN accuracy statistics are shifted upward when compared to the results of the previous section: all NN accuracies are above 0.5 for all latent dimensions. This is problematic. A test statistic's absolute score ought to bear significance across very different data and model domains like KDEs and VAEs.

4.2.3 ImageNet GAN

Finally, we scale our experiments up to a larger image domain.

Experimental Methodology. We gather our test statistics on a state-of-the-art conditional GAN, ‘BigGan’ (Brock et al., 2018), trained on the ImageNet 12 dataset (Russakovsky et al., 2015). Conditioning on an input code, this GAN will generate images from one of 1000 different ImageNet classes. We run our experiments separately on three classes: ‘coffee’, ‘soap bubble’, and ‘schooner’. All generated, test, and training images are embedded to a 64-dimensional space by first gathering the 2048-dimensional features of an InceptionV3 network ‘Pool3’ layer, and then projecting them onto the 64 principal components of the training embeddings. Appendix 7.4.4 has more details.

Being limited to one pre-trained model, we increase model variance (‘truncation threshold’) instead of decreasing model complexity. As proposed by BigGan's authors, all standard normal input samples outside of this truncation threshold are resampled. The authors suggest that lower truncation thresholds, by only producing samples at the mode of the input, output higher quality samples at the cost of variety, as determined by Inception Score (IS). Similarly, the FID score finds suitable variety until truncation approaches zero.
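The resampling rule described above can be sketched as follows. This is a minimal illustration and not BigGan's actual sampling code: the function name `truncated_normal`, the latent shape `(4, 128)`, and the threshold value are hypothetical choices made only for the example.

```python
import numpy as np

def truncated_normal(shape, threshold, rng):
    """Draw standard normal values, resampling every entry with |z| > threshold."""
    z = rng.standard_normal(shape)
    mask = np.abs(z) > threshold
    while mask.any():
        # Redraw only the entries that fell outside the truncation threshold.
        z[mask] = rng.standard_normal(int(mask.sum()))
        mask = np.abs(z) > threshold
    return z

rng = np.random.default_rng(0)
# Hypothetical batch of 4 latent codes of dimension 128.
z = truncated_normal((4, 128), threshold=0.5, rng=rng)
```

A smaller threshold keeps the inputs closer to the mode of the standard normal, which is the sense in which lower truncation reduces the variance of the model's outputs.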

Results. The results for the $C_T$ score are depicted in Figure 4d; the statistic remains well below zero until the truncation threshold is nearly maximized, indicating that $Q$ produces samples closer to the training set than real samples tend to be. While FID finds that in aggregate the distributions are roughly similar, a closer look suggests that $Q$ allocates too much probability mass near the training samples.

Meanwhile, the two-sample NN baseline in Figure 4e hardly reacts to changes in truncation, even though the generated and training sets are the same size, $m = |T|$. Across all truncation values, the training sample NN accuracy remains around 0.5, not quite implying over- or underfitting.

A useful feature of the $C_T$ statistic is that one can examine the $Z_U$ scores it is composed of to see which of the cells $\pi \in \Pi_\tau$ are or are not copying. Figure 5 shows the samples of over- and underfit clusters for two of the three classes. For both the ‘coffee’ and ‘bubble’ classes, the underfit cells are more diverse than the data-copied cells. While it might seem reasonable that these generated samples are further from nearest neighbors in more diverse clusters, keep in mind that the $Z_U > 0$ statistic indicates that they are further from training neighbors than test set samples are. For instance, the people depicted in the underfit ‘bubble’ cell are highly distorted.

4.3 Discussion

We now reflect on the two questions recited at the beginning of Section 4. Firstly, it appears that many existing generative model tests do not detect data-copying. The findings of Section 4.1 demonstrate that many popular generative model tests like FID, Precision and Recall, and Binning-Based Evaluation are wholly insensitive to explicit data-copying even in low-dimensional settings. We suggest that this is because these tests are geared to detect over- and underrepresentation more than data-copying.

Figure 5: Data-copied and underfit cells of the ImageNet12 ‘coffee’ and ‘soap bubble’ instance spaces (trunc. threshold = 2). In each 14-figure strip, the top row provides a random series of training samples from the cell, and the bottom row provides a random series of generated samples from the cell. (a) Data-copied cells. Top: random training and generated samples from a $Z_U = -1.46$ cell of the coffee instance space. Bottom: random training and generated samples from a $Z_U = -1.00$ cell of the bubble instance space. (b) Underfit cells. Top: random training and generated samples from a $Z_U = +1.40$ cell of the coffee instance space. Bottom: random training and generated samples from a $Z_U = +0.71$ cell of the bubble instance space.

Secondly, the experiments of Section 4.2 indicate that the proposed $C_T(P_n, Q_m)$ test statistic not only detects explicitly forced data-copying (as in the KDE experiments), but also detects data-copying in complex, overfit generative models like VAEs and GANs. In these settings, we observe that as models overfit more, $C_T$ drops below 0 and significantly below $-1$.

A limitation of the proposed $C_T$ test is the number of test samples $n$. Without sufficient test samples, not only does the statistic have higher variance, but the instance space partition $\Pi$ cannot be very fine-grained. Consequently, we are limited in our ability to manage the heterogeneity of $d(X)$ across the instance space, and some cells may be mischaracterized. For example, in the BigGan experiment of Section 4.2.3, we are provided only 50 test samples per image class (e.g. ‘soap bubble’), limiting us to an instance space partition of only three cells. Developing more data-efficient methods to handle heterogeneity may be a promising area of future work.

5 Conclusion

In this work, we have formalized data-copying: an under-explored failure mode of generative model overfitting. We have provided preliminary tests for measuring data-copying and experiments indicating its presence in a broad class of generative models. In future work, we plan to establish more theoretical properties of data-copying, convergence guarantees of these tests, and experiments with different model parameters.

6 Acknowledgements

We thank Rich Zemel for pointing us to Xu et al. (2018), which was the starting point of this work. Thanks to Arthur Gretton and Ruslan Salakhutdinov for pointers to prior work, and Phillip Isola and Christian Szegedy for helpful advice. Finally, KC and CM would like to thank ONR under N00014-16-1-2614, UC Lab Fees under LFR 18-548554 and NSF IIS 1617157 for research support.

References

Wacha Bounliphone, Eugene Belilovsky, Matthew B. Blaschko, Ioannis Antonoglou, and Arthur Gretton. A test of relative similarity for model selection in generative models. In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016. URL http://arxiv.org/abs/1511.04581.

Alican Bozkurt, Babak Esmaeili, Dana H Brooks, Jennifer G Dy, and Jan-Willem van de Meent. Can VAEs generate novel examples? 2018.

Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale GAN training for high fidelity natural image synthesis, 2018.

Tong Che, Yanran Li, Athul Paul Jacob, Yoshua Bengio, and Wenjie Li. Mode regularized generative adversarial networks. CoRR, abs/1612.02136, 2016. URL http://arxiv.org/abs/1612.02136.

Cristóbal Esteban, Stephanie L Hyland, and Gunnar Rätsch. Real-valued (medical) time series generation with recurrent conditional GANs. arXiv preprint arXiv:1706.02633, 2017.

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

Ishaan Gulrajani, Colin Raffel, and Luke Metz. Towards GAN benchmarks which require generalization. CoRR, abs/2001.03653, 2020. URL https://arxiv.org/abs/2001.03653.

Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. 2017.

Diederik P Kingma and Max Welling. Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114, 2013.

Tuomas Kynkäänniemi, Tero Karras, Samuli Laine, Jaakko Lehtinen, and Timo Aila. Improved precision and recall metric for assessing generative models. Advances in Neural Information Processing Systems 33 (NIPS 2019), 2019.

Yann LeCun and Corinna Cortes. MNIST handwritten digit database. 2010. URL http://yann.lecun.com/exdb/mnist/.

David Lopez-Paz and Maxime Oquab. Revisiting classifier two-sample tests. arXiv preprint arXiv:1610.06545, 2016.

Henry B Mann and Donald R Whitney. On a test of whether one of two random variables is stochastically larger than the other. The Annals of Mathematical Statistics, pages 50–60, 1947.

Eitan Richardson and Yair Weiss. On GANs and GMMs. In Advances in Neural Information Processing Systems, pages 5847–5858, 2018.

Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV), 115(3):211–252, 2015. doi: 10.1007/s11263-015-0816-y.

Mehdi S. M. Sajjadi, Olivier Bachem, Mario Lucic, Olivier Bousquet, and Sylvain Gelly. Assessing generative models via precision and recall. Advances in Neural Information Processing Systems 31 (NIPS 2017), 2018.

Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. Improved techniques for training GANs. In Advances in Neural Information Processing Systems, pages 2234–2242, 2016.

Dougal J. Sutherland, Hsiao-Yu Fish Tung, Heiko Strathmann, Soumyajit De, Aaditya Ramdas, Alexander J. Smola, and Arthur Gretton. Generative models and model criticism via optimized maximum mean discrepancy. CoRR, abs/1611.04488, 2016. URL http://arxiv.org/abs/1611.04488.

Lucas Theis, Aäron van den Oord, and Matthias Bethge. A note on the evaluation of generative models. In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016. URL http://arxiv.org/abs/1511.01844.

Ryan Webster, Julien Rabin, Loïc Simon, and Frédéric Jurie. Detecting overfitting of deep generative networks via latent recovery. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pages 11273–11282. Computer Vision Foundation / IEEE, 2019. doi: 10.1109/CVPR.2019.01153. URL http://openaccess.thecvf.com/content_CVPR_2019/html/Webster_Detecting_Overfitting_of_Deep_Generative_Networks_via_Latent_Recovery_CVPR_2019_paper.html.

Yuhuai Wu, Yuri Burda, Ruslan Salakhutdinov, and Roger B. Grosse. On the quantitative analysis of decoder-based generative models. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings, 2017. URL https://openreview.net/forum?id=B1M8JF9xx.

Qiantong Xu, Gao Huang, Yang Yuan, Chuan Guo, Yu Sun, Felix Wu, and Kilian Weinberger. An empirical study on evaluation metrics of generative adversarial networks. arXiv preprint arXiv:1806.07755, 2018.

Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR, 2018.


7 Appendix

7.1 Proof of Theorem 1

A restatement of the theorem:

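For true distribution $P$, model distribution $Q$, and distance metric $d : \mathcal{X} \to \mathbb{R}$, the estimator $\frac{1}{mn} U_{Q_m} \to_P \Delta(P, Q)$ according to the concentration inequality

$$\Pr\left( \left| \frac{1}{mn} U_{Q_m} - \Delta(P, Q) \right| \ge t \right) \le \exp\left( -\frac{2 t^2 mn}{m + n} \right)$$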

Proof. We establish consistency using the following lemma.

Lemma 4. (Bounded Differences Inequality) Suppose $X_1, \ldots, X_n \in \mathcal{X}$ are independent, and $f : \mathcal{X}^n \to \mathbb{R}$. Let $c_1, \ldots, c_n$ satisfy

$$\sup_{x_1, \ldots, x_n, x_i'} \left| f(x_1, \ldots, x_i, \ldots, x_n) - f(x_1, \ldots, x_i', \ldots, x_n) \right| \le c_i$$

for $i = 1, \ldots, n$. Then we have for any $t > 0$

$$\Pr\left( \left| f - \mathbb{E}[f] \right| \ge t \right) \le \exp\left( \frac{-2 t^2}{\sum_{i=1}^{n} c_i^2} \right) \qquad (5)$$

This directly equips us to prove the Theorem.

It is relatively straightforward to apply Lemma 4 to the normalized $U = \frac{1}{mn} U_{Q_m}$. First, think of it as a function of $m$ independent samples of $X \sim Q$ and $n$ independent samples of $Y \sim P$:

$$U(X_1, \ldots, X_m, Y_1, \ldots, Y_n) = \frac{1}{mn} \sum_{i,j} \mathbb{1}_{d(X_i) > d(Y_j)}, \qquad U : (\mathbb{R}^d)^{m+n} \to \mathbb{R}$$

Let $b_i$ bound the change in $U$ after substituting any $X_i$ with $X_i'$, and $c_j$ bound the change in $U$ after substituting any $Y_j$ with $Y_j'$. Specifically

$$\sup_{x_1, \ldots, x_m, y_1, \ldots, y_n, x_i'} \left| U(x_1, \ldots, x_i, \ldots, x_m, y_1, \ldots, y_n) - U(x_1, \ldots, x_i', \ldots, x_m, y_1, \ldots, y_n) \right| \le b_i$$

$$\sup_{x_1, \ldots, x_m, y_1, \ldots, y_n, y_j'} \left| U(x_1, \ldots, x_m, y_1, \ldots, y_j, \ldots, y_n) - U(x_1, \ldots, x_m, y_1, \ldots, y_j', \ldots, y_n) \right| \le c_j$$

We then know that $b_i = \frac{n}{mn} = \frac{1}{m}$ for all $i$, with equality when $d(x_i') < d(y_j) < d(x_i)$ for all $j \in [n]$. In this case, substituting $x_i$ with $x_i'$ flips $n$ of the indicator comparisons in $U$ from 1 to 0, and the change is then normalized by $mn$. By a similar argument, $c_j = \frac{m}{nm} = \frac{1}{n}$ for all $j$.

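Equipped with $b_i$ and $c_j$, we may simply substitute into Equation 5 of the Bounded Differences Inequality, giving us

$$\begin{aligned}
\Pr\left( \left| U - \mathbb{E}[U] \right| \ge t \right) &= \Pr\left( \left| \frac{1}{mn} U_{Q_m} - \Delta(P, Q) \right| \ge t \right) \\
&\le \exp\left( \frac{-2 t^2}{\sum_{i=1}^{m} b_i^2 + \sum_{j=1}^{n} c_j^2} \right) \\
&= \exp\left( \frac{-2 t^2}{\sum_{i=1}^{m} \frac{1}{m^2} + \sum_{j=1}^{n} \frac{1}{n^2}} \right) \\
&= \exp\left( \frac{-2 t^2}{\frac{1}{m} + \frac{1}{n}} \right) \\
&= \exp\left( -\frac{2 t^2 mn}{m + n} \right)
\end{aligned}$$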
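To make the quantities in this proof concrete, the following sketch computes the normalized statistic from two arrays of distances and evaluates the right-hand side of the concentration inequality. The function names and toy inputs are illustrative assumptions: `dist_gen` stands for the values $d(X_i)$ of the generated sample and `dist_test` for the values $d(Y_j)$ of the test sample.

```python
import numpy as np

def normalized_u(dist_gen, dist_test):
    """Fraction of pairs (i, j) with d(X_i) > d(Y_j), i.e. U / (m n)."""
    dist_gen = np.asarray(dist_gen, dtype=float)
    dist_test = np.asarray(dist_test, dtype=float)
    # Broadcasting builds the full m-by-n table of indicator comparisons.
    return float(np.mean(dist_gen[:, None] > dist_test[None, :]))

def deviation_bound(t, m, n):
    """Right-hand side exp(-2 t^2 m n / (m + n)) of the inequality."""
    return float(np.exp(-2.0 * t**2 * m * n / (m + n)))

u = normalized_u([0.2, 0.9, 0.4], [0.3, 0.5])   # 3 of the 6 comparisons hold
bound = deviation_bound(t=0.1, m=1000, n=1000)  # equals exp(-10)
```

With $m = n = 1000$ and $t = 0.1$ the exponent is $-2 \cdot 0.01 \cdot 10^6 / 2000 = -10$, so the bound is already below $10^{-4}$.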

7.2 Proof of Theorem 2

A restatement of the theorem:

When $Q = P$, and the corresponding distance distribution $L(Q) = L(P)$ is non-atomic,

$$\mathbb{E}\left[ \frac{1}{mn} U \right] = \frac{1}{2}$$

Proof. For random variables $A \sim L(P)$ and $B \sim L(P)$, we can partition the event space of $A \times B$ into three disjoint events:

$$\Pr(A > B) + \Pr(A < B) + \Pr(A = B) = 1$$

Since $Q = P$, the first two events have equal probability, $\Pr(A > B) = \Pr(A < B)$, so

$$2 \Pr(A > B) + \Pr(A = B) = 1$$

And since the distributions of $A$ and $B$ are non-atomic (i.e. $\Pr(B = b) = 0, \ \forall\, b \in \mathbb{R}$) we have that $\Pr(A = B) = 0$, and thus

$$2 \Pr(A > B) = 1$$

$$\Pr(A > B) = \Delta(P, Q) = \frac{1}{2}$$
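The result can be checked numerically with a small Monte Carlo simulation. The sketch below is only a sanity check under stated assumptions: the exponential distribution is an arbitrary non-atomic stand-in for the distance distribution $L(P)$, and the sample sizes and number of trials are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, trials = 200, 300, 200

values = []
for _ in range(trials):
    a = rng.exponential(size=m)  # stand-in for d(X_i) with X_i ~ Q = P
    b = rng.exponential(size=n)  # stand-in for d(Y_j) with Y_j ~ P
    # Normalized U statistic: fraction of pairs with a_i > b_j.
    values.append(np.mean(a[:, None] > b[None, :]))

mean_u = float(np.mean(values))  # should be close to 1/2
```

Because both arrays are drawn from the same continuous distribution, ties have probability zero and the average of the normalized statistic settles near one half, as the theorem states.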

7.3 Proof of Lemma 3

Lemma 3. For the kernel density estimator (1), the maximum-likelihood choice of $\sigma$, namely the maximizer of $\mathbb{E}_{X \sim P}[\log q_\sigma(X)]$, satisfies

$$\mathbb{E}_{X \sim P}\left[ \sum_{t \in T} Q_\sigma(t \mid X) \, \|X - t\|^2 \right] = \mathbb{E}_{Y \sim Q_\sigma}\left[ \sum_{t \in T} Q_\sigma(t \mid Y) \, \|Y - t\|^2 \right]$$

Proof. We have

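$$\begin{aligned}
\mathbb{E}_{X \sim P}[\ln q_\sigma(X)] &= \mathbb{E}_{X \sim P}\left[ -\ln\left( (2\pi)^{k/2} |T| \sigma^k \right) + \ln \sum_{t \in T} \exp\left( -\frac{\|X - t\|^2}{2\sigma^2} \right) \right] \\
&= \text{constant} - k \ln \sigma + \mathbb{E}_{X \sim P}\left[ \ln \sum_{t \in T} \exp\left( -\frac{\|X - t\|^2}{2\sigma^2} \right) \right]
\end{aligned}$$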

Setting the derivative of this to zero and simplifying, we find that the maximum-likelihood $\sigma$ satisfies

$$\sigma^2 = \frac{1}{k} \, \mathbb{E}_{X \sim P}\left[ \sum_{t \in T} Q_\sigma(t \mid X) \, \|X - t\|^2 \right]. \qquad (6)$$
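For completeness, the differentiation step can be spelled out as follows. We read $Q_\sigma(t \mid X)$ as the posterior weight of the mixture component centered at $t$ given the point $X$; this reading is an assumption on our part, since the definition sits outside this appendix.

```latex
\begin{aligned}
\frac{\partial}{\partial \sigma}
\left( -k \ln \sigma
  + \mathbb{E}_{X \sim P}\left[ \ln \sum_{t \in T}
      e^{-\|X - t\|^2 / 2\sigma^2} \right] \right)
&= -\frac{k}{\sigma}
  + \mathbb{E}_{X \sim P}\left[
      \frac{\sum_{t \in T} e^{-\|X - t\|^2 / 2\sigma^2}\,
            \|X - t\|^2 / \sigma^3}
           {\sum_{t' \in T} e^{-\|X - t'\|^2 / 2\sigma^2}} \right] \\
&= -\frac{k}{\sigma}
  + \frac{1}{\sigma^3}\,
    \mathbb{E}_{X \sim P}\left[ \sum_{t \in T}
      Q_\sigma(t \mid X)\, \|X - t\|^2 \right],
\qquad
Q_\sigma(t \mid X) =
  \frac{e^{-\|X - t\|^2 / 2\sigma^2}}
       {\sum_{t' \in T} e^{-\|X - t'\|^2 / 2\sigma^2}}.
\end{aligned}
```

Setting this expression to zero and multiplying through by $\sigma^3 / k$ gives the stated condition on $\sigma^2$.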

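Now, interpreting $Q_\sigma$ as a mixture of $|T|$ Gaussians, and using the notation $t \in_R T$ to mean that $t$ is chosen uniformly at random from $T$, we have

$$\mathbb{E}_{Y \sim Q_\sigma}\left[ \sum_{t \in T} Q_\sigma(t \mid Y) \, \|Y - t\|^2 \right] = \mathbb{E}_{t \in_R T} \, \mathbb{E}_{Y \sim N(t, \sigma^2 I_k)}\left[ \|Y - t\|^2 \right] = k \sigma^2.$$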

Combining this with (6) yields the lemma.

7.4 Procedural Details of Experiments

7.4.1 Moons Dataset, and Gaussian KDE

Moons dataset. ‘Moons’ is a synthetic dataset consisting of two curved interlocking manifolds with added configurable noise. We chose to use this dataset as a proof of concept because it is low dimensional, and thus KDE friendly and easy to visualize, and because we may have unlimited train, test, and validation samples.

Gaussian KDE. We use a Gaussian KDE as our preliminary generative model $Q$ because its likelihood is theoretically related to our non-parametric test. Perhaps more importantly, it is trivial to control the degree of data-copying with the bandwidth parameter $\sigma$. Figures 6b, 6c, 6d provide contour plots of a Gaussian KDE $Q$ trained on the moons dataset with progressively larger $\sigma$. With $\sigma = 0.01$, $Q$ will effectively resample the training set. $\sigma = 0.13$ is nearly the MLE model. With $\sigma = 0.5$, the KDE struggles to capture the unique definition of $T$.
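Sampling from such a KDE amounts to picking a training point uniformly at random and adding isotropic Gaussian noise of scale $\sigma$. The sketch below illustrates how the bandwidth controls data-copying; the helper names are hypothetical, and a standard normal cloud is used as a placeholder for the moons training sample, which is not reproduced here.

```python
import numpy as np

def sample_gaussian_kde(train, sigma, m, rng):
    """Draw m points: a uniformly chosen training point plus N(0, sigma^2 I) noise."""
    train = np.asarray(train, dtype=float)
    idx = rng.integers(0, len(train), size=m)
    return train[idx] + sigma * rng.standard_normal((m, train.shape[1]))

def mean_nn_distance(samples, train):
    """Average Euclidean distance from each sample to its nearest training point."""
    d2 = ((samples[:, None, :] - train[None, :, :]) ** 2).sum(axis=-1)
    return float(np.sqrt(d2.min(axis=1)).mean())

rng = np.random.default_rng(0)
train = rng.standard_normal((2000, 2))  # placeholder for the training sample T
copied = sample_gaussian_kde(train, sigma=0.01, m=1000, rng=rng)
smooth = sample_gaussian_kde(train, sigma=0.5, m=1000, rng=rng)
```

With the small bandwidth, generated points sit almost on top of training points, so `mean_nn_distance(copied, train)` comes out much smaller than `mean_nn_distance(smooth, train)`.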

7.4.2 Moons Experiments

Our experiments that examined whether several baseline tests could detect data-copying (Section 4.1), and our first test of our own metric (Section 4.2.1), use the moons dataset. In both of these, we fix a training sample $T$ of 2000 points, a test sample $P_n$ of 1000 points, and a generated sample $Q_m$ of 1000 points. We regenerate $Q_m$ 10 times, and report the average statistic across these trials along with a single standard deviation. If the standard deviation buffer along the line is not visible, it is because the standard deviation is relatively small. We artificially set the constraint that $m, n \ll |T|$, as is true for big natural datasets and for more elaborate models that are computationally burdensome to sample from.

Section 4.1 Methods. Here are the routines we used for the four baseline tests:

• Fréchet Inception Distance (FID) (Heusel et al., 2017): Normally, this test is run on two samples of images ($T$ and $Q_m$) that are first embedded into a perceptually meaningful latent space using a discriminative neural net, like the Inception Network. By ‘meaningful’ we mean that points that are closer together are more perceptually alike to the human eye. Unlike images in pixel space, the samples of the moons dataset require no embedding, so we run the Fréchet test directly on the samples.

First, we fit two MLE Gaussians: $\mathcal{N}(\mu_T, \Sigma_T)$ to $T$, and $\mathcal{N}(\mu_Q, \Sigma_Q)$ to $Q_m$, by collecting their respective MLE mean and covariance parameters. The statistic reported is the Fréchet distance between these two Gaussians, denoted $\mathrm{Fr}(\cdot, \cdot)$, which for Gaussians has a closed form:

$$\mathrm{Fr}\left( \mathcal{N}(\mu_T, \Sigma_T), \mathcal{N}(\mu_Q, \Sigma_Q) \right) = \|\mu_T - \mu_Q\|^2 + \mathrm{Tr}\left( \Sigma_T + \Sigma_Q - 2 (\Sigma_T \Sigma_Q)^{1/2} \right)$$

Naturally, if $Q$ is data-copying $T$, its MLE mean and covariance will be nearly identical to those of $T$, rendering this test ineffective for capturing this kind of overfitting.

• Binning Based Evaluation (Richardson and Weiss, 2018): This test takes a hypothesis testing approach for evaluating mode collapse and deletion. The test bears much similarity to the test described in Section 3.2. The basic idea is as follows. Split the training set into partition $\Pi$ using k-means; the number of samples falling into each bin is approximately normally distributed if it has more than 20 samples. Check the null hypothesis that the normal distribution of the fraction of the training set in bin $\pi$, $T(\pi)$, equals the normal distribution of the fraction of the generated set in bin $\pi$, $Q_m(\pi)$. Specifically:

$$Z_\pi = \frac{Q_m(\pi) - T(\pi)}{\sqrt{\hat{p}(1 - \hat{p}) \left( \frac{1}{|T|} + \frac{1}{m} \right)}}, \qquad \text{where } \hat{p} = \frac{|T| \, T(\pi) + m \, Q_m(\pi)}{|T| + m}.$$

We then perform a one-sided hypothesis test, and count the positive $Z_\pi$ values that are significant at the 0.05 level. We call this the number of statistically different bins, or NDB. The NDB/k ought to equal the significance level if $P = Q$.

Figure 6: Contour plots of KDE fit on $T$: a) training $T$ sample, b) overfit ‘data-copying’ KDE, c) max likelihood KDE, d) underfit KDE

• Two-Sample Nearest-Neighbor (Lopez-Paz and Oquab, 2016): In this test, our primary baseline, we report the three LOO NN values discussed in Xu et al. (2018). The generated sample $Q_m$ and training sample (subsampled to have equal size, $m$), $\tilde{T} \subseteq T$, are joined together to create sample $S = \tilde{T} \cup Q_m$ of size $2m$, with training samples labeled ‘1’ and generated samples labeled ‘0’. One then fits a 1-Nearest-Neighbor classifier to $S$, and reports the accuracy in predicting the training samples (‘1’s), the accuracy in predicting the generated samples (‘0’s), and the average.

One can expect that, when $Q$ collapses to a few mode centers of $T$, the training accuracy is low and the generated accuracy is high, thus indicating over-representation. Additionally, one could imagine that when the training and generated accuracies are near 0, we have extreme data-copying. However, as explained in the Experiments section, when we are forced to subsample $T$, it is unlikely that a given copied training point $t \in T$ is used in the test, thus making the test result unclear.

• Precision and Recall (Sajjadi et al., 2018): This method offers a clever technique for scaling classical precision and recall statistics to high-dimensional, complex spaces. First, all samples are embedded to Inception Network Pool3 features. Then, the authors use the following insight: for distributions $Q$ and $P$, the precision and recall curve is approximately given by the set of points:

$$\widehat{\mathrm{PRD}}(Q, P) = \{ (\alpha(\lambda), \beta(\lambda)) \mid \lambda \in \Lambda \}$$

where

$$\Lambda = \left\{ \tan\left( \frac{i}{r + 1} \cdot \frac{\pi}{2} \right) \,\middle|\, i \in [r] \right\}$$

$$\alpha(\lambda) = \sum_{\pi \in \Pi} \min\left( \lambda P(\pi), Q(\pi) \right), \qquad \beta(\lambda) = \sum_{\pi \in \Pi} \min\left( P(\pi), \frac{Q(\pi)}{\lambda} \right)$$

and where $r$ is the ‘resolution’ of the curve, the set $\Pi$ is a partition of the instance space, and $P(\pi), Q(\pi)$ are the fractions of samples falling in cell $\pi$. $\Pi$ is determined by running k-means on the combination of the training and generated sets. In our tests here, we set $k = 5$, and report the average PRD curve measured over 10 k-means clusterings (and then re-run 10 times for 10 separate trials of $Q_m$).
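The precision and recall curve in the last item of the list above can be computed directly from the two vectors of cell masses. The following is a minimal sketch under stated assumptions: the function name `prd_curve` and the toy histograms are hypothetical, $[r]$ is read as $\{1, \ldots, r\}$, and the k-means partitioning step is assumed to have been done already.

```python
import numpy as np

def prd_curve(p_hist, q_hist, resolution=5):
    """Return (lambdas, alpha, beta) for cell-mass vectors P(pi) and Q(pi)."""
    p = np.asarray(p_hist, dtype=float)
    q = np.asarray(q_hist, dtype=float)
    i = np.arange(1, resolution + 1)
    # Slopes lambda = tan(i / (r + 1) * pi / 2) for i = 1, ..., r.
    lambdas = np.tan(i / (resolution + 1) * np.pi / 2)
    alpha = np.array([np.minimum(lam * p, q).sum() for lam in lambdas])
    beta = np.array([np.minimum(p, q / lam).sum() for lam in lambdas])
    return lambdas, alpha, beta

# Toy example with k = 5 cells: P is uniform, Q covers only two of the cells.
p = np.array([0.2, 0.2, 0.2, 0.2, 0.2])
q = np.array([0.5, 0.5, 0.0, 0.0, 0.0])
lambdas, alpha, beta = prd_curve(p, q, resolution=5)
```

Note that $\beta(\lambda) = \alpha(\lambda) / \lambda$ for every $\lambda$, so the curve is traced by a single pass over the slopes. In the toy example $Q$ ignores three of the five cells, and $\beta$ never exceeds 0.4 because only that much of $P$'s mass is covered.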

7.4.3 MNIST Experiments

The experiments of Sections 4.2.1 and 4.2.2 use the MNIST digit dataset (LeCun and Cortes, 2010). We use a training sample, $T$, of size $|T| = 50{,}000$, a test sample $P_n$ of size $n = 10{,}000$, a validation sample $V_l$ of size $l = 10{,}000$, and create generated samples of size $m = 10{,}000$.

Figure 7: Interpolating between two points in the latent space to demonstrate L2 perceptual significance

Figure 8: Number of statistically different bins, both those over and under the significance level of 0.05. The black dotted line indicates the total number of cells or ‘bins’. (a,b) KDEs tend to start misrepresenting with $\sigma \gg \sigma_{\mathrm{MLE}}$, which makes sense as they become less and less dependent on the training set. (c) It makes sense that the VAE over- and under-represents across all latent dimensions due to its reverse KL loss. There is slightly worse over- and under-representation for simple models with low latent dimension.

Here, for a meaningful distance metric, we create a custom embedding using a convolutional autoencoder trained using a VGG perceptual loss proposed by Zhang et al. (2018). The encoder and decoder each have four convolutional layers using batch normalization, two linear layers using dropout, and two max pool layers. The autoencoder is trained for 100 epochs with a batch size of 128 and the Adam optimizer with learning rate 0.001. For each training sample $t \in \mathbb{R}^{784}$, the encoder compresses to $z \in \mathbb{R}^{64}$, and the decoder expands back up to $\hat{t} \in \mathbb{R}^{784}$. Our loss is then

$$\mathcal{L}(t, \hat{t}) = \gamma(t, \hat{t}) + \lambda \max\{ \|z\|_2^2 - 1, 0 \}$$

where $\gamma(\cdot, \cdot)$ is the VGG perceptual loss, and $\lambda \max\{ \|z\|_2^2 - 1, 0 \}$ provides a linear hinge loss outside of a unit L2 ball. The hinge loss encourages the encoder to learn a latent representation within a bounded domain, hopefully augmenting its ability to interpolate between samples. It is worth noting that the perceptual loss is not trained on MNIST, and hopefully uses agnostic features that help keep us from overfitting. We opt to use a standard autoencoder instead of a stochastic autoencoder like a VAE, because we want to be able to exactly data-copy the training set $T$. Thus, we want the encoder to create a near-exact encoding and decoding of the training samples specifically. Figure 7 provides an example of linearly spaced steps between two training samples. While not perfect, we observe that half-way between the ‘2’ and the ‘0’ is a sample that appears perceptually to be almost a ‘2’ and almost a ‘0’. As such, we consider the distance metric $d(x)$ on this space used in our experiments to be meaningful.
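The latent penalty term of this loss is simple to state in code. The sketch below covers only the hinge term $\lambda \max\{\|z\|_2^2 - 1, 0\}$; the perceptual term $\gamma$ is deliberately left out, and the function name and the default `lam=1.0` are hypothetical, since the value of $\lambda$ is not given here.

```python
import numpy as np

def latent_hinge_penalty(z, lam=1.0):
    """Per-row penalty lam * max(||z||_2^2 - 1, 0); zero inside the unit L2 ball."""
    z = np.atleast_2d(np.asarray(z, dtype=float))
    return lam * np.maximum((z ** 2).sum(axis=1) - 1.0, 0.0)

inside = latent_hinge_penalty([0.6, 0.0])   # squared norm 0.36, no penalty
outside = latent_hinge_penalty([2.0, 0.0])  # squared norm 4, penalty 3 * lam
```

Codes inside the unit ball incur no penalty, so the term only acts on latent codes that drift outside the bounded region.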

KDE tests: In the MNIST KDE experiments, we fit each KDE $Q$ on the 64-d latent representations of the training set $T$ for several values of $\sigma$; we gather all statistical tests in this space, and effectively only decode to visually inspect samples. We gather the average and standard deviation of each data point across 5 trials of generating $Q_m$. For the Two-Sample Nearest-Neighbor test, it is computationally intense to compute the nearest neighbor in a 64-dimensional dataset of 20,000 points $\tilde{T} \cup Q_m$ 20,000 times. To limit this, we average each of the training and generated NN accuracies over 500 training and generated samples. We find this acceptable, since the test results depicted in Figure 3f are relatively low variance.
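For reference, the leave-one-out 1-NN accuracies used by this baseline can be sketched as below. This is an illustrative brute-force version under stated assumptions, not the code used for the experiments: it scores every point rather than a 500-point subset, the function name is hypothetical, and the toy data are random placeholders for the 64-d latent codes.

```python
import numpy as np

def loo_1nn_accuracies(train, generated):
    """Leave-one-out 1-NN accuracy on S = train + generated (labels 1 and 0)."""
    s = np.vstack([train, generated])
    labels = np.concatenate([np.ones(len(train), dtype=int),
                             np.zeros(len(generated), dtype=int)])
    sq = (s ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (s @ s.T)  # pairwise squared distances
    np.fill_diagonal(d2, np.inf)  # leave each point out of its own neighbor search
    correct = labels[d2.argmin(axis=1)] == labels
    train_acc = float(correct[labels == 1].mean())
    gen_acc = float(correct[labels == 0].mean())
    return train_acc, gen_acc, 0.5 * (train_acc + gen_acc)

rng = np.random.default_rng(0)
train = rng.standard_normal((300, 8))
copies = train + 1e-3 * rng.standard_normal(train.shape)  # extreme data-copying
far = train + 100.0                                       # clearly separated sample
acc_copy = loo_1nn_accuracies(train, copies)
acc_far = loo_1nn_accuracies(train, far)
```

When every generated point is a near-duplicate of a training point that is present in $S$, each point's nearest neighbor carries the opposite label and both accuracies fall to 0. If the copied training points are missing from the subsample $\tilde{T}$, this signal disappears.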

VAE experiments: In the MNIST VAE experiments, we only use the 64-d autoencoder latent representation in computing the $C_T$ and 1-NN test scores, and not at all in training. Here, we experiment with twenty standard, fully connected VAEs using binary cross entropy reconstruction loss. The twenty models have three hidden layers and latent dimensions ranging from $d = 5$ to $d = 100$ in steps of 5. The number of neurons in intermediate layers is approximately twice the number of the layer beneath it, so for a latent space of 50-d, the encoder architecture is $784 \to 400 \to 200 \to 100 \to 50$, and the decoder architecture is the opposite.
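The width schedule described above can be written as a small helper. This is a sketch that assumes an exact doubling rule, whereas the text says only ‘approximately twice’; the function name is hypothetical, and only the 50-d case is confirmed by the architecture quoted above.

```python
def vae_encoder_widths(latent_dim, input_dim=784, hidden_layers=3):
    """Layer sizes where each hidden layer has twice the units of the layer beneath it."""
    hidden = [latent_dim * 2 ** k for k in range(hidden_layers, 0, -1)]
    return [input_dim] + hidden + [latent_dim]

encoder = vae_encoder_widths(50)  # [784, 400, 200, 100, 50]
decoder = encoder[::-1]           # the decoder mirrors the encoder
# One architecture per latent dimension d = 5, 10, ..., 100.
all_models = [vae_encoder_widths(d) for d in range(5, 101, 5)]
```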

To sample from a trained VAE, we sample from a standard normal with dimensionality equivalent to the VAE's latent dimension, and pass the samples through the VAE decoder to the 784-d image space. We then encode these generated images to the agnostic 64-d latent space of the perceptual autoencoder described at the beginning of the section, where L2 distance is meaningful. We also encode the training sample $T$ and test sample $P_n$ to this space, and then run the $C_T$ and two-sample NN tests. We again compute the nearest neighbor accuracies for 10,000 of the training and generated samples (the 1-NN classifier is fit on the 20,000-sample set $\tilde{T} \cup Q_m$), which appears to be acceptable due to low test variance.

7.4.4 ImageNet Experiments

Here, we have chosen three of the one thousand ImageNet12 classes that ‘BigGan’ produces. To reiterate, a conditional GAN can output samples from a specific class by conditioning on a class code input. We acknowledge that conditional GANs combine features from many classes in ways not yet well understood, but treat the GAN of each class as a uniquely different generative model trained on the training samples from that class. So, for the ‘coffee’ class, we treat the GAN as a coffee generator $Q$, trained on the 1300 ‘coffee’ class samples. For each class, we have $|T| = 1300$ training samples, $m = 2000$ generated samples, and $n = 50$ test samples. Being atypically training sample starved ($m > |T|$), we subsample $Q_m$ (not $T$!) to produce equal size samples for the two-sample NN test. As such, all training samples used are in the combined set $S$. We also note that the 50 test samples provided in each class are highly limiting, only allowing us to split the instance space into about three cells and keep a reasonable number of test samples in each cell. As the number of test samples grows, so can the number of cells and the resolution of the partition. Figure 10 provides an example of where this clustering might be limited; the generated samples of the underfit cell seem hardly any different from those of the overfit cell. A finer-grained partition is likely needed here. However, the data-copied cell to the left does appear to be very close to the training set, potentially too close according to $Z_U$.

Figure 9: This GAN model produces relatively equal representation according to our clustering for all three classes. It makes sense that a low truncation level tends to over-represent for one class, as a lower truncation threshold causes less variance. Even though it places samples into all cells, some cells are data-copying much more aggressively than others.

Figure 10: Example of data-copied and underfit cells of the ImageNet ‘schooner’ instance space, from ‘BigGan’ with trunc. threshold = 2. (a) $Z_U = -1.05$. (b) $Z_U = +1.32$. We note here that, limited to only 50 test samples, the insufficient $k = 3$ clustering is perhaps not fine-grained enough for this class. Notice that the generated samples falling into the underfit cell (mostly training images of either masts or fronts of boats) are hardly any different from those of the overfit cell. They are likely on the boundary of the two cells. With that said, the samples of the data-copied cell (a) are certainly close to the training samples in this region.

In performing these experiments, we gather the $C_T(P_n, Q_m)$ statistic for a given class of images. In an attempt to embed the images into a lower dimensional latent space with L2 significance, we pass each image through an InceptionV3 network and gather the 2048-dimension feature embeddings after the final average pooling layer (Pool3). We then project all inception-space images ($T$, $P_n$, $Q_m$) onto the 64 principal components of the training set embeddings. Finally, we use k-means to partition the points of each sample into one of $k = 3$ cells. The number of cells is limited by the 50 test images available per class. Any more cells would strain the Central Limit Theorem assumption in computing $Z_U$. We then gather the $C_T$ and two-sample NN baseline statistics on this 64-d space.
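The projection and partitioning steps can be sketched in plain NumPy as follows. Several details are assumptions made for illustration: the helper names are hypothetical, the random arrays are placeholders for real Pool3 features, the principal components are fit on mean-centered training features, and the k-means centers are fit on the training embeddings with a basic Lloyd iteration (the text does not say which sample the clustering is fit on).

```python
import numpy as np

def fit_pca(train_feats, n_components=64):
    """Mean and top principal directions of the training features (via SVD)."""
    mean = train_feats.mean(axis=0)
    _, _, vt = np.linalg.svd(train_feats - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(feats, mean, components):
    return (feats - mean) @ components.T

def assign_cells(points, centers):
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

def kmeans(points, k, rng, iters=50):
    """Basic Lloyd iterations started from k randomly chosen points."""
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iters):
        assign = assign_cells(points, centers)
        for c in range(k):
            if np.any(assign == c):  # keep the old center if a cell empties
                centers[c] = points[assign == c].mean(axis=0)
    return centers

rng = np.random.default_rng(0)
train_feats = rng.standard_normal((1300, 2048))  # placeholder for T features
test_feats = rng.standard_normal((50, 2048))     # placeholder for P_n features
gen_feats = rng.standard_normal((2000, 2048))    # placeholder for Q_m features

mean, components = fit_pca(train_feats)
z_train, z_test, z_gen = (project(f, mean, components)
                          for f in (train_feats, test_feats, gen_feats))
centers = kmeans(z_train, k=3, rng=rng)
cells_test = assign_cells(z_test, centers)
cells_gen = assign_cells(z_gen, centers)
```

Fitting both the projection and the cell centers on the training embeddings keeps the instance space partition independent of the test and generated samples being compared.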

7.5 Comparison with three-sample Kernel-MMD

Another three-sample test not shown in the main body of this work is the three-sample kernel MMD test introduced by Bounliphone et al. (2016), intended more for model comparison than for checking model overfitting. For samples $X \sim P$ and $Y \sim Q$, we can estimate the squared kernel MMD between $P$ and $Q$ under kernel $k$ by empirically estimating

$$\mathrm{MMD}^2(P, Q) = \mathbb{E}_{x, x' \sim P}[k(x, x')] - 2\, \mathbb{E}_{x \sim P, y \sim Q}[k(x, y)] + \mathbb{E}_{y, y' \sim Q}[k(y, y')]$$
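A plug-in estimate of this quantity replaces each expectation with a sample average. The sketch below uses an RBF kernel and the simple biased (V-statistic) estimator; it illustrates the formula only and is not the three-sample test of Bounliphone et al. (2016). The function names and the bandwidth value are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, bandwidth):
    """Gram matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 bandwidth^2))."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * bandwidth ** 2))

def mmd2_biased(x, y, bandwidth=1.0):
    """Plug-in estimate of squared MMD: mean k(x,x') - 2 mean k(x,y) + mean k(y,y')."""
    return float(rbf_kernel(x, x, bandwidth).mean()
                 - 2.0 * rbf_kernel(x, y, bandwidth).mean()
                 + rbf_kernel(y, y, bandwidth).mean())

rng = np.random.default_rng(0)
x = rng.standard_normal((200, 2))
y = rng.standard_normal((200, 2))        # same distribution as x
z = rng.standard_normal((200, 2)) + 3.0  # shifted distribution
same = mmd2_biased(x, y)
shifted = mmd2_biased(x, z)
```

The estimate is exactly zero when a sample is compared with itself and grows as the two samples separate, which is the gap the three-sample test compares between the generated and test sets.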

Figure 11: Comparison of the $C_T(P_n, Q_m)$ test presented in this paper alongside the three-sample kMMD test. (a) and (b) compare the two tests on the simple moons dataset where $Q$ is a Gaussian KDE (repeating the experiments of Figure 2). (c) and (d) compare the two tests for the MNIST dataset where $Q$ is a VAE (repeating the experiments of Figure 4).

More recent works such as Esteban et al. (2017) have repurposed this test for measuring generative model overfitting. Intuitively, if the model is overfitting its training set, the empirical kMMD between training and generated data may be smaller than that between training and test sets. This may be triggered by the data-copying variety of overfitting.

This test provides an interesting benchmark to consider in addition to those in the main body. Figure 11 demonstrates some preliminary experimental results repeating both the ‘moons’ KDE experiment of Figure 2 and the MNIST VAE experiment of Figure 4b. To implement the kMMD test, we used code posted by Bounliphone et al. (2016) at https://github.com/eugenium/MMD, specifically the three-sample RBF-kMMD test.

In Figures 11a, 11b, and 11c we compare $C_T(P_n, Q_m)$ and the kMMD gap for 50 values of KDE σ. We observe that the kMMD between the test and training set (blue) and between the generated and training set (orange) both remain near zero for all σ values less than the MLE σ, indicated by the red circle. This suggests that the three-sample kMMD is not a particularly strong test for data-copying, since low σ values are effectively bootstrapping the original training set. The kMMD gap does diverge, however, for σ values much larger than the MLE σ, indicating that it can detect underfitting by Q.
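To see why a KDE with small σ behaves like a bootstrap of the training set, recall that sampling from a Gaussian KDE amounts to picking a training point uniformly at random and adding N(0, σ²I) noise. The sketch below is an illustration under assumptions: 2-d Gaussian data stands in for the 'moons' training set, and the sample sizes and σ grid are arbitrary.

```python
import numpy as np

def sample_gaussian_kde(train, sigma, m, rng):
    """Draw m points from a Gaussian KDE: pick a training point, add N(0, sigma^2 I) noise."""
    centers = train[rng.integers(len(train), size=m)]
    return centers + sigma * rng.normal(size=centers.shape)

def mean_nn_distance(samples, train):
    """Average distance from each sample to its nearest training point."""
    d2 = ((samples[:, None, :] - train[None, :, :]) ** 2).sum(axis=2)
    return np.sqrt(d2.min(axis=1)).mean()

rng = np.random.default_rng(0)
train = rng.normal(size=(500, 2))  # hypothetical stand-in for the 'moons' training set

nn_by_sigma = {}
for sigma in (1e-3, 1e-2, 1e-1, 1.0):
    q = sample_gaussian_kde(train, sigma, m=500, rng=rng)
    nn_by_sigma[sigma] = mean_nn_distance(q, train)
    print(f"sigma={sigma:g}  mean NN distance to T = {nn_by_sigma[sigma]:.4f}")
```

As σ shrinks, every generated point sits essentially on top of a training point, so the generated sample is nearly a resampling of T with replacement.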

This is corroborated by Figure 11c, which displays the p-value of the kMMD hypothesis test used by Esteban et al. (2017). This checks the null hypothesis that the kMMD between $T$ and $Q_m$ is greater than that between $T$ and $P_n$. A high p-value supports this null hypothesis (as seen for all σ > σ_MLE). A p-value near 0.5 suggests that the kMMDs are approximately equal. A p-value near zero rejects the null hypothesis, suggesting that the kMMD between $Q_m$ and $T$ is much smaller than that between $P_n$ and $T$. We see that the p-value remains well above 0.5 for all σ values and treats the MLE σ just as it does the overfit σ values.

Figures 11d and 11e compare the $C_T$ and kMMD tests for twenty MNIST VAEs with decreasing complexity (latent dimension). Figure 11e again depicts the kMMD distance to the training set for both the generated (orange) and test samples (blue). We observe that this test does not appear sensitive to over-parametrized VAEs (d > 50) in the same way our proposed test (Figure 11d) is. As in the ‘moons’ case above, it appears sensitive to underfitting (d ≪ 50). Here, the corresponding kMMD p-values are effectively 1 for all latent dimension values, and thus are omitted.

We suspect that this insensitivity to data-copying is due to the fact that, for a large number of samples m, n, the kMMDs between $T$ and $P_n$, and between $T$ and $Q_m$, are both likely to be near zero when Q data-copies. Consider the case of extreme data-copying, when $Q_m$ is simply a bootstrap sample from the training set $T$.


The kMMD estimate will be

$$\widehat{\mathrm{MMD}}^2(T, Q_m) = \sum_{x, x' \in T} k(x, x') - 2 \sum_{x \in T,\, y \in Q_m} k(x, y) + \sum_{y, y' \in Q_m} k(y, y')$$

Informally speaking, the second and third summations of this expression behave almost identically on average to the first, since $Q_m$ is a bootstrap sample. They only behave differently for summation terms that are collisions: k(x, y) with x = y in the second summation, and k(y, y′) with y = y′ in the third summation. The fraction of these collision terms decreases rapidly with m, n. Consequently, the kMMD between the training set and this bootstrap sample is very near zero. It is easy to see that the same goes for the kMMD between the training and test samples, since they are independent samples from the same distribution.
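This argument can be checked numerically. The sketch below is an illustration under assumptions, not a reproduction of the paper's experiment: it uses 2-d Gaussian data, an RBF kernel with unit bandwidth, m = n, and sample means rather than raw sums for each term. It compares the kMMD from T to a bootstrap sample of T against the kMMD from T to an independent test sample, and reports the fraction of collision terms in the third summation.

```python
import numpy as np

def rbf_kernel(a, b, bandwidth=1.0):
    """Kernel matrix with entries exp(-||a_i - b_j||^2 / (2 * bandwidth^2))."""
    d2 = (a ** 2).sum(axis=1)[:, None] + (b ** 2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * bandwidth ** 2))

def mmd2(x, y, bandwidth=1.0):
    """Plug-in MMD^2 estimate with each summation normalized by its number of terms."""
    return (rbf_kernel(x, x, bandwidth).mean()
            - 2.0 * rbf_kernel(x, y, bandwidth).mean()
            + rbf_kernel(y, y, bandwidth).mean())

rng = np.random.default_rng(0)
boot_mmd, test_mmd, collision_frac = {}, {}, {}
for n in (100, 400, 1600):
    T = rng.normal(size=(n, 2))        # training sample
    test = rng.normal(size=(n, 2))     # independent sample from the same distribution
    idx = rng.integers(n, size=n)      # extreme data-copying: bootstrap indices into T
    boot = T[idx]

    boot_mmd[n] = mmd2(T, boot)
    test_mmd[n] = mmd2(T, test)
    # Pairs (y, y') in the bootstrap sample that are copies of the same training point.
    counts = np.bincount(idx, minlength=n)
    collision_frac[n] = (counts ** 2).sum() / n ** 2
    print(f"n={n:5d}  kMMD^2(T, boot)={boot_mmd[n]:.5f}  "
          f"kMMD^2(T, test)={test_mmd[n]:.5f}  collisions={collision_frac[n]:.4f}")
```

In the second summation exactly one training point matches each bootstrap point, so the collision fraction there is 1/n; the third summation adds coincidences among resampled points, which also vanish as n grows. Both kMMD estimates shrink toward zero together, so the gap between them carries little signal about copying.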

The experiment in Figure 11e does not test for extreme data-copying since the model is a VAE, not a KDE. Consequently, there is still an appreciable gap between the test and generated kMMD to the training set. Regardless, this kMMD is not sensitive enough to differentiate between the well-fit model of dimension ≈ 50 and the overfit models of dimension ≈ 100.
