
A Discriminative Topic Model using Document Network Structure

Weiwei Yang¹, Jordan Boyd-Graber², and Philip Resnik¹

¹University of Maryland, College Park and ²University of Colorado, Boulder

August 8, 2016


Paper and Slides

Paper: http://ter.ps/bv5

Slides: http://ter.ps/bv7


Documents are Linked

A Discriminative Topic Model using Document Network Structure

Weiwei Yang, Computer Science, University of Maryland, College Park, MD

Jordan Boyd-Graber, Computer Science, University of Colorado, Boulder, CO

Philip Resnik, Linguistics and UMIACS, University of Maryland, College Park, MD

[email protected]

Abstract

Document collections often have links between documents (citations, hyperlinks, or revisions), and which links are added is often based on topical similarity. To model these intuitions, we introduce a new topic model for documents situated within a network structure, integrating latent blocks of documents with a max-margin learning criterion for link prediction using topic- and word-level features. Experiments on a scientific paper dataset and collection of webpages show that, by more robustly exploiting the rich link structure within a document network, our model improves link prediction, topic quality, and block distributions.

1 Introduction

Documents often appear within a network structure: social media mentions, retweets, and follower relationships; Web pages by hyperlinks; scientific papers by citations. Network structure interacts with the topics in the text, in that documents linked in a network are more likely to have similar topic distributions. For instance, a citation link between two papers suggests that they are about a similar field, and a mentioning link between two social media users often indicates common interests. Conversely, documents' similar topic distributions can suggest links between them. For example, topic model (Blei et al., 2003, LDA) and block detection papers (Holland et al., 1983) are relevant to our research, so we cite them. Similarly, if a social media user A finds another user B with shared interests, then A is more likely to follow B.

Our approach is part of a natural progression of network modeling in which models integrate more information in more sophisticated ways. Some past methods only consider the network itself (Kim and Leskovec, 2012; Liben-Nowell and Kleinberg, 2007), which loses the rich information in text. In other cases, methods take both links and text into account (Chaturvedi et al., 2012), but they are modeled separately, not jointly, limiting the model's ability to capture interactions between the two. The relational topic model (Chang and Blei, 2010, RTM) goes further, jointly modeling topics and links, but it considers only pairwise document relationships, failing to capture network structure at the level of groups or blocks of documents.

We propose a new joint model that makes fuller use of the rich link structure within a document network. Specifically, our model embeds the weighted stochastic block model (Aicher et al., 2014, WSBM) to identify blocks in which documents are densely connected. WSBM basically categorizes each item in a network probabilistically as belonging to one of L blocks, by reviewing its connections with each block. Our model can be viewed as a principled probabilistic extension of Yang et al. (2015), who identify blocks in a document network deterministically as strongly connected components (SCC). Like them, we assign a distinct Dirichlet prior to each block to capture its topical commonalities. Jointly, a linear regression model with a discriminative, max-margin objective function (Zhu et al., 2012; Zhu et al., 2014) is trained to reconstruct the links, taking into account the features of documents' topic and word distributions (Nguyen et al., 2013), block assignments, and inter-block link rates.
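The max-margin link criterion described in this paragraph can be illustrated with a hinge loss on a linear score. This is only a minimal sketch: the feature set (element-wise topic products plus a single inter-block link-rate feature), the weights, and the function names `link_features` and `hinge_loss` are assumptions made for illustration, not the paper's actual objective or features.

```python
def link_features(theta_d, theta_e, block_rate):
    """Pair features: element-wise topic products plus one inter-block
    link-rate feature (a simplified, assumed feature set)."""
    return [a * b for a, b in zip(theta_d, theta_e)] + [block_rate]


def hinge_loss(weights, features, label):
    """Max-margin loss for one document pair.
    label is +1 for a linked pair and -1 for an unlinked pair."""
    score = sum(w * f for w, f in zip(weights, features))
    return max(0.0, 1.0 - label * score)


# Hypothetical topic proportions for three documents.
theta_a = [0.8, 0.1, 0.1]
theta_b = [0.7, 0.2, 0.1]
theta_c = [0.1, 0.1, 0.8]
weights = [4.0, 4.0, 4.0, 1.0]  # made-up regression weights

loss_linked = hinge_loss(weights, link_features(theta_a, theta_b, 0.5), +1)
loss_unlinked = hinge_loss(weights, link_features(theta_a, theta_c, 0.05), -1)
```

With these made-up numbers the linked pair clears the margin (zero loss), while the unlinked pair still receives a positive score and so incurs a loss; training would adjust the weights to reduce such violations.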

We validate our approach on a scientific paper abstract dataset and collection of webpages, with citation links and hyperlinks respectively, to predict links among previously unseen documents and from those new documents to training documents. Embedding the WSBM in a network/topic

Our Paper: Topic Model, Document Network, Block Detection

Journal of Machine Learning Research 3 (2003) 993-1022 Submitted 2/02; Published 1/03

Latent Dirichlet Allocation

David M. Blei [email protected]

Computer Science Division, University of California, Berkeley, CA 94720, USA

Andrew Y. Ng [email protected]

Computer Science Department, Stanford University, Stanford, CA 94305, USA

Michael I. Jordan [email protected]

Computer Science Division and Department of Statistics, University of California, Berkeley, CA 94720, USA

Editor: John Lafferty

Abstract

We describe latent Dirichlet allocation (LDA), a generative probabilistic model for collections of discrete data such as text corpora. LDA is a three-level hierarchical Bayesian model, in which each item of a collection is modeled as a finite mixture over an underlying set of topics. Each topic is, in turn, modeled as an infinite mixture over an underlying set of topic probabilities. In the context of text modeling, the topic probabilities provide an explicit representation of a document. We present efficient approximate inference techniques based on variational methods and an EM algorithm for empirical Bayes parameter estimation. We report results in document modeling, text classification, and collaborative filtering, comparing to a mixture of unigrams model and the probabilistic LSI model.
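The "finite mixture over an underlying set of topics" can be made concrete with a small forward-sampling sketch of a document. The topics, vocabulary, and hyperparameter values below are hypothetical, the topics are held fixed rather than learned, and no inference is performed; the sketch only shows the generative direction.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw from a Dirichlet by normalizing independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(topics, alpha, length, rng):
    """Sample topic proportions, then a topic and a word for each token."""
    theta = sample_dirichlet(alpha, rng)
    words = []
    for _ in range(length):
        k = rng.choices(range(len(topics)), weights=theta)[0]
        vocab, probs = zip(*topics[k].items())
        words.append(rng.choices(vocab, weights=probs)[0])
    return theta, words


# Two made-up topics, each a distribution over a tiny vocabulary.
topics = [
    {"topic": 0.5, "word": 0.3, "model": 0.2},
    {"network": 0.5, "link": 0.3, "block": 0.2},
]
theta, words = generate_document(topics, alpha=[0.5, 0.5], length=8,
                                 rng=random.Random(0))
```

Here `theta` plays the role of the per-document topic proportions that the abstract calls an explicit representation of a document.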

1. Introduction

In this paper we consider the problem of modeling text corpora and other collections of discrete data. The goal is to find short descriptions of the members of a collection that enable efficient processing of large collections while preserving the essential statistical relationships that are useful for basic tasks such as classification, novelty detection, summarization, and similarity and relevance judgments.

Significant progress has been made on this problem by researchers in the field of information retrieval (IR) (Baeza-Yates and Ribeiro-Neto, 1999). The basic methodology proposed by IR researchers for text corpora (a methodology successfully deployed in modern Internet search engines) reduces each document in the corpus to a vector of real numbers, each of which represents ratios of counts. In the popular tf-idf scheme (Salton and McGill, 1983), a basic vocabulary of “words” or “terms” is chosen, and, for each document in the corpus, a count is formed of the number of occurrences of each word. After suitable normalization, this term frequency count is compared to an inverse document frequency count, which measures the number of occurrences of a

©2003 David M. Blei, Andrew Y. Ng and Michael I. Jordan.

LDA [Blei et al., 2003]: Topic Model

The Annals of Applied Statistics, 2010, Vol. 4, No. 1, 124–150. DOI: 10.1214/09-AOAS309. © Institute of Mathematical Statistics, 2010

HIERARCHICAL RELATIONAL MODELS FOR DOCUMENT NETWORKS

BY JONATHAN CHANG¹ AND DAVID M. BLEI²

Facebook and Princeton University

We develop the relational topic model (RTM), a hierarchical model of both network structure and node attributes. We focus on document networks, where the attributes of each document are its words, that is, discrete observations taken from a fixed vocabulary. For each pair of documents, the RTM models their link as a binary random variable that is conditioned on their contents. The model can be used to summarize a network of documents, predict links between them, and predict words within them. We derive efficient inference and estimation algorithms based on variational methods that take advantage of sparsity and scale with the number of links. We evaluate the predictive performance of the RTM for large networks of scientific abstracts, web documents, and geographically tagged news.
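A link modeled as "a binary random variable that is conditioned on their contents" can be sketched as a Bernoulli probability computed from two documents' topic proportions. The sketch below assumes a logistic link function over the element-wise product of the proportions; the coefficients `eta`, the intercept `nu`, and the example proportions are made-up values, and the abstract above does not itself specify this form.

```python
import math


def link_probability(zbar_d, zbar_e, eta, nu):
    """P(link = 1) as a logistic function of weighted topic overlap."""
    score = sum(h * a * b for h, a, b in zip(eta, zbar_d, zbar_e)) + nu
    return 1.0 / (1.0 + math.exp(-score))


eta = [6.0, 6.0, 6.0]  # hypothetical per-topic coefficients
nu = -2.0              # hypothetical intercept

p_similar = link_probability([0.8, 0.1, 0.1], [0.7, 0.2, 0.1], eta, nu)
p_different = link_probability([0.8, 0.1, 0.1], [0.1, 0.1, 0.8], eta, nu)
```

Because the score depends only on one pair of documents at a time, this kind of link function captures pairwise relationships but nothing about groups of documents.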

1. Introduction. Network data, such as citation networks of documents, hyperlinked networks of web pages, and social networks of friends, are pervasive in applied statistics and machine learning. The statistical analysis of network data can provide both useful predictive models and descriptive statistics. Predictive models can point social network members toward new friends, scientific papers toward relevant citations, and web pages toward other related pages. Descriptive statistics can uncover the hidden community structure underlying a network data set.

Recent research in this field has focused on latent variable models of link structure, models that decompose a network according to hidden patterns of connections between its nodes [Kemp, Griffiths and Tenenbaum (2004); Hofman and Wiggins (2007); Airoldi et al. (2008)]. These models represent a significant departure from statistical models of networks, which explain network data in terms of observed sufficient statistics [Fienberg, Meyer and Wasserman (1985); Wasserman and Pattison (1996); Getoor et al. (2001); Newman (2002); Taskar et al. (2004)].

While powerful, current latent variable models account only for the structure of the network, ignoring additional attributes of the nodes that might be available. For example, a citation network of articles also contains text and abstracts of the documents, a linked set of web-pages also contains the text for those pages, and an on-line social network also contains profile descriptions and other information

Received January 2009; revised November 2009.

¹Work done while at Princeton University.

²Supported by ONR 175-6343, NSF CAREER 0745520 and grants from Google and Microsoft.

Key words and phrases. Mixed-membership models, variational methods, text analysis, network models.

Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document Network

Learning Latent Block Structure in Weighted Networks

Christopher Aicher, Department of Applied Mathematics, University of Colorado, Boulder, CO, 80309

Abigail Z. Jacobs, Department of Computer Science, University of Colorado, Boulder, CO, 80309

Aaron Clauset, Department of Computer Science, University of Colorado, Boulder, CO, 80309

BioFrontiers Institute, University of Colorado, Boulder, CO 80303

Santa Fe Institute, Santa Fe, NM 87501

Abstract

Community detection is an important task in network analysis, in which we aim to learn a network partition that groups together vertices with similar community-level connectivity patterns. By finding such groups of vertices with similar structural roles, we extract a compact representation of the network's large-scale structure, which can facilitate its scientific interpretation and the prediction of unknown or future interactions. Popular approaches, including the stochastic block model, assume edges are unweighted, which limits their utility by discarding potentially useful information. We introduce the weighted stochastic block model (WSBM), which generalizes the stochastic block model to networks with edge weights drawn from any exponential family distribution. This model learns from both the presence and weight of edges, allowing it to discover structure that would otherwise be hidden when weights are discarded or thresholded. We describe a Bayesian variational algorithm for efficiently approximating this model's posterior distribution over latent block structures. We then evaluate the WSBM's performance on both edge-existence and edge-weight prediction tasks for a set of real-world weighted networks. In all cases, the WSBM performs as well or better than the best alternatives on these tasks.

Keywords: community detection, weighted relational data, block models, exponential family, variational Bayes.
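To make the idea of block-dependent edge presence and edge weight concrete, the sketch below samples a small weighted network forward from fixed block labels. It is an illustration under assumptions: the block labels, edge probabilities, mean weights, the choice of exponential weights, and the function name `sample_weighted_sbm` are all hypothetical, and the sketch does not perform the variational inference of block structure that the abstract describes.

```python
import random


def sample_weighted_sbm(block_of, edge_prob, mean_weight, rng):
    """Sample weighted, undirected edges given block labels.

    block_of[i] is node i's block; edge_prob[a][b] is the probability of an
    edge between blocks a and b; mean_weight[a][b] is the mean edge weight
    for that block pair.
    """
    edges = {}
    for i in range(len(block_of)):
        for j in range(i + 1, len(block_of)):
            a, b = block_of[i], block_of[j]
            if rng.random() < edge_prob[a][b]:
                # Exponential weights: one member of the exponential family.
                edges[(i, j)] = rng.expovariate(1.0 / mean_weight[a][b])
    return edges


block_of = [0, 0, 0, 1, 1, 1]
edge_prob = [[0.9, 0.1], [0.1, 0.9]]      # dense within blocks, sparse across
mean_weight = [[5.0, 1.0], [1.0, 5.0]]    # heavier edges within blocks
edges = sample_weighted_sbm(block_of, edge_prob, mean_weight, random.Random(0))
```

Inference runs in the opposite direction: given observed edges and weights, it estimates which block each node belongs to.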

1 Introduction

Networks are an increasingly important form of structured data consisting of interactions between pairs of individuals in large social and biological data sets. Unlike attribute data where each observation is associated with an individual, network data is represented by graphs, where individuals are vertices and interactions are edges. Because vertices are pairwise related, network data violates traditional assumptions of attribute data, such as independence. This intrinsic difference in structure prompts the development of new tools for handling network data.

In social and biological networks, vertices often play distinct structural roles in generating the network's large-scale structure. To identify such latent structural roles, we aim to identify a network partition that groups together vertices with similar group-level connectivity patterns. We call these groups “communities,” and their inference produces a compact description of the large-scale

arXiv:1404.0431v2 [stat.ML] 3 Jun 2014

Weighted Stochastic Block Model [Aicher et al., 2014]: Block Detection


Links Indicate Topic Similarity


Our Paper: Topic Model, Document Network, Block Detection


LDA [Blei et al., 2003] : Topic Model

The Annals of Applied Statistics, 2010, Vol. 4, No. 1, 124–150. DOI: 10.1214/09-AOAS309. © Institute of Mathematical Statistics, 2010

HIERARCHICAL RELATIONAL MODELS FOR DOCUMENT NETWORKS

BY JONATHAN CHANG¹ AND DAVID M. BLEI²

Facebook and Princeton University

We develop the relational topic model (RTM), a hierarchical model of both network structure and node attributes. We focus on document networks, where the attributes of each document are its words, that is, discrete observations taken from a fixed vocabulary. For each pair of documents, the RTM models their link as a binary random variable that is conditioned on their contents. The model can be used to summarize a network of documents, predict links between them, and predict words within them. We derive efficient inference and estimation algorithms based on variational methods that take advantage of sparsity and scale with the number of links. We evaluate the predictive performance of the RTM for large networks of scientific abstracts, web documents, and geographically tagged news.

1. Introduction. Network data, such as citation networks of documents, hyperlinked networks of web pages, and social networks of friends, are pervasive in applied statistics and machine learning. The statistical analysis of network data can provide both useful predictive models and descriptive statistics. Predictive models can point social network members toward new friends, scientific papers toward relevant citations, and web pages toward other related pages. Descriptive statistics can uncover the hidden community structure underlying a network data set.

Recent research in this field has focused on latent variable models of link structure, models that decompose a network according to hidden patterns of connections between its nodes [Kemp, Griffiths and Tenenbaum (2004); Hofman and Wiggins (2007); Airoldi et al. (2008)]. These models represent a significant departure from statistical models of networks, which explain network data in terms of observed sufficient statistics [Fienberg, Meyer and Wasserman (1985); Wasserman and Pattison (1996); Getoor et al. (2001); Newman (2002); Taskar et al. (2004)].

While powerful, current latent variable models account only for the structure of the network, ignoring additional attributes of the nodes that might be available. For example, a citation network of articles also contains text and abstracts of the documents, a linked set of web-pages also contains the text for those pages, and an on-line social network also contains profile descriptions and other information

Received January 2009; revised November 2009.
¹Work done while at Princeton University.
²Supported by ONR 175-6343, NSF CAREER 0745520 and grants from Google and Microsoft.
Key words and phrases. Mixed-membership models, variational methods, text analysis, network models.

Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document Network

Learning Latent Block Structure in Weighted Networks

Christopher Aicher, Department of Applied Mathematics, University of Colorado, Boulder, CO, 80309

Abigail Z. Jacobs, Department of Computer Science, University of Colorado, Boulder, CO, 80309

Aaron Clauset, Department of Computer Science, University of Colorado, Boulder, CO, 80309; BioFrontiers Institute, University of Colorado, Boulder, CO 80303; Santa Fe Institute, Santa Fe, NM 87501

Abstract

Community detection is an important task in network analysis, in which we aim to learn a network partition that groups together vertices with similar community-level connectivity patterns. By finding such groups of vertices with similar structural roles, we extract a compact representation of the network’s large-scale structure, which can facilitate its scientific interpretation and the prediction of unknown or future interactions. Popular approaches, including the stochastic block model, assume edges are unweighted, which limits their utility by discarding potentially useful information. We introduce the weighted stochastic block model (WSBM), which generalizes the stochastic block model to networks with edge weights drawn from any exponential family distribution. This model learns from both the presence and weight of edges, allowing it to discover structure that would otherwise be hidden when weights are discarded or thresholded. We describe a Bayesian variational algorithm for efficiently approximating this model’s posterior distribution over latent block structures. We then evaluate the WSBM’s performance on both edge-existence and edge-weight prediction tasks for a set of real-world weighted networks. In all cases, the WSBM performs as well or better than the best alternatives on these tasks.

Keywords: community detection, weighted relational data, block models, exponential family, variational Bayes.

1 Introduction

Networks are an increasingly important form of structured data consisting of interactions between pairs of individuals in large social and biological data sets. Unlike attribute data where each observation is associated with an individual, network data is represented by graphs, where individuals are vertices and interactions are edges. Because vertices are pairwise related, network data violates traditional assumptions of attribute data, such as independence. This intrinsic difference in structure prompts the development of new tools for handling network data.

In social and biological networks, vertices often play distinct structural roles in generating the network’s large-scale structure. To identify such latent structural roles, we aim to identify a network partition that groups together vertices with similar group-level connectivity patterns. We call these groups “communities,” and their inference produces a compact description of the large-scale

arXiv:1404.0431v2 [stat.ML] 3 Jun 2014

Weighted Stochastic Block Model [Aicher et al., 2014]: Block Detection

3/22

![Page 6: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/6.jpg)

Links Indicate Topic Similarity


1 Introduction

Documents often appear within a network structure: social media mentions, retweets, and follower relationships; Web pages by hyperlinks; scientific papers by citations. Network structure interacts with the topics in the text, in that documents linked in a network are more likely to have similar topic distributions. For instance, a citation link between two papers suggests that they are about a similar field, and a mentioning link between two social media users often indicates common interests. Conversely, documents’ similar topic distributions can suggest links between them. For example, topic model (Blei et al., 2003, LDA) and block detection papers (Holland et al., 1983) are relevant to our research, so we cite them. Similarly, if a social media user A finds another user B with shared interests, then A is more likely to follow B.

Our approach is part of a natural progression of network modeling in which models integrate more information in more sophisticated ways. Some past methods only consider the network itself (Kim and Leskovec, 2012; Liben-Nowell and Kleinberg, 2007), which loses the rich information in text. In other cases, methods take both links and text into account (Chaturvedi et al., 2012), but they are modeled separately, not jointly, limiting the model’s ability to capture interactions between the two. The relational topic model (Chang and Blei, 2010, RTM) goes further, jointly modeling topics and links, but it considers only pairwise document relationships, failing to capture network structure at the level of groups or blocks of documents.

We propose a new joint model that makes fuller use of the rich link structure within a document network. Specifically, our model embeds the weighted stochastic block model (Aicher et al., 2014, WSBM) to identify blocks in which documents are densely connected. WSBM basically categorizes each item in a network probabilistically as belonging to one of L blocks, by reviewing its connections with each block. Our model can be viewed as a principled probabilistic extension of Yang et al. (2015), who identify blocks in a document network deterministically as strongly connected components (SCC). Like them, we assign a distinct Dirichlet prior to each block to capture its topical commonalities. Jointly, a linear regression model with a discriminative, max-margin objective function (Zhu et al., 2012; Zhu et al., 2014) is trained to reconstruct the links, taking into account the features of documents’ topic and word distributions (Nguyen et al., 2013), block assignments, and inter-block link rates.

We validate our approach on a scientific paper abstract dataset and collection of webpages, with citation links and hyperlinks respectively, to predict links among previously unseen documents and from those new documents to training documents. Embedding the WSBM in a network/topic

Our Paper: Topic Model, Document Network, Block Detection


3/22


![Page 8: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/8.jpg)

Goal

Make use of the rich information in document links

Improve topic modeling

Replicate existing links

Predict held-out links

4/22

![Page 9: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/9.jpg)

Outline

1 Block Detection and RTM

2 LBH-RTM

3 Link Prediction Results

4 Conclusions

Paper

http://ter.ps/bv5

Slides

http://ter.ps/bv7

5/22

![Page 10: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/10.jpg)

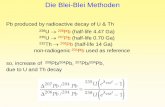

Block Detection

Find densely-connected blocks in a graph

Deterministic: Strongly connected components (SCC)

Puts any linked nodes into the same component

Does not consider link density

Probabilistic: Weighted stochastic block model (WSBM)
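The limitation of the deterministic approach can be seen in a small sketch. It assumes links are treated as undirected, so that strongly connected components reduce to ordinary connected components; the function name `connected_components` and the toy graph are made up for this example.

```python
from collections import defaultdict


def connected_components(num_nodes, links):
    """Group nodes 0..num_nodes-1 into components, treating links as undirected."""
    adj = defaultdict(set)
    for u, v in links:
        adj[u].add(v)
        adj[v].add(u)
    seen, components = set(), []
    for start in range(num_nodes):
        if start in seen:
            continue
        # Depth-first search from each unvisited node.
        stack, comp = [start], []
        seen.add(start)
        while stack:
            u = stack.pop()
            comp.append(u)
            for v in adj[u]:
                if v not in seen:
                    seen.add(v)
                    stack.append(v)
        components.append(sorted(comp))
    return components


# Two densely linked triangles.
triangles = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]
separate = connected_components(6, triangles)

# A single extra link between the triangles merges them into one
# component, even though the link density between them is very low.
merged = connected_components(6, triangles + [(2, 3)])
```

A probabilistic block model can instead keep the two triangles in separate blocks, because it looks at how dense the links are within and between groups.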

6/22


![Page 14: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/14.jpg)

Relational Topic Model [Chang and Blei, 2010]

A topic model for link prediction

Jointly models topics and links

Each topic is assigned a weight

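Indicates the correlations between topics and links

Composes a regression value $R_{d,d'} = \eta^{\mathsf{T}} (z_d \circ z_{d'})$, where $z_d = (z_{d,1}, z_{d,2}, \ldots, z_{d,K})$

$\Pr(B_{d,d'} = 1) = \sigma(R_{d,d'})$

[Figure: RTM plate diagram with plates $K$, $N_d$, and $N_{d'}$; topic assignments $z_d$, $z_{d'}$; words $w_d$, $w_{d'}$; and link indicator $B_{d,d'}$.]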
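A minimal sketch of the link probability on this slide, assuming $z_d$ is a document's topic proportion vector and the topic weights $\eta$ are already known. The function names and all numeric values are illustrative, not taken from the paper.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rtm_link_probability(eta, z_d, z_dp):
    """Return (R, Pr(B = 1)) for a document pair.

    R is the weighted sum of the element-wise product of the two
    documents' topic vectors; eta holds one weight per topic.
    """
    r = sum(e * a * b for e, a, b in zip(eta, z_d, z_dp))
    return r, sigmoid(r)


eta = [3.0, 3.0, 3.0]   # hypothetical topic weights
z_a = [0.8, 0.1, 0.1]   # documents a and b share a dominant topic
z_b = [0.7, 0.2, 0.1]
z_c = [0.1, 0.1, 0.8]   # document c is about a different topic

r_ab, p_ab = rtm_link_probability(eta, z_a, z_b)
r_ac, p_ac = rtm_link_probability(eta, z_a, z_c)
```

With positive weights, the topically similar pair (a, b) gets a larger regression value, and so a higher link probability, than the dissimilar pair (a, c).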

7/22


![Page 18: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/18.jpg)

Outline

1 Block Detection and RTM

2 LBH-RTM

3 Link Prediction Results

4 Conclusions

Paper

http://ter.ps/bv5

Slides

http://ter.ps/bv7

8/22

![Page 19: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/19.jpg)

Relational Topic Model

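[Figure: RTM plate diagram with plates $K$, $N_d$, and $N_{d'}$; topic assignments $z_d$, $z_{d'}$; words $w_d$, $w_{d'}$; and link indicator $B_{d,d'}$.]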

9/22

![Page 20: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/20.jpg)

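Relational Topic Model with Weighted Stochastic Block Model

[Figure: plate diagram of the RTM extended with a WSBM component; in addition to the RTM variables it shows plates $L$ and $L \times L$ and the variables $a$, $b$, $y_d$, $y_{d'}$, and $A_{d,d'}$ next to $B_{d,d'}$.]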

9/22

![Page 21: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/21.jpg)

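Relational Topic Model with Weighted Stochastic Block Model, Block Priors

[Figure: the same plate diagram with block priors added; it shows plates $K$, $L$, $L \times L$, $N_d$, and $N_{d'}$ and the variables $a$, $b$, $y_d$, $y_{d'}$, $A_{d,d'}$, $B_{d,d'}$, $z_d$, $w_d$, $z_{d'}$, and $w_{d'}$.]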

9/22


![Page 23: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/23.jpg)

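Relational Topic Model with Weighted Stochastic Block Model, Block Priors, Various Features

$$R_{d,d'} = \underbrace{\eta^{\mathsf{T}} (z_d \circ z_{d'})}_{\text{Topical Feature}} + \underbrace{\tau^{\mathsf{T}} (w_d \circ w_{d'})}_{\text{Lexical Feature}} + \underbrace{\rho_{l,l'} \Omega_{l,l'}}_{\text{Block Feature}}$$

where $z_d = (z_{d,1}, z_{d,2}, \ldots, z_{d,K})$ and $w_d = (w_{d,1}, w_{d,2}, \ldots, w_{d,V})$

$\Pr(B_{d,d'} = 1) = \sigma(R_{d,d'})$ (Sigmoid Loss)

[Figure: plate diagram with plates $K$, $L$, $L \times L$, $N_d$, and $N_{d'}$ and the variables $a$, $b$, $y_d$, $y_{d'}$, $A_{d,d'}$, $B_{d,d'}$, $z_d$, $w_d$, $z_{d'}$, and $w_{d'}$.]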
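The three-part regression value can be sketched as follows. This assumes $l$ and $l'$ are the blocks assigned to documents $d$ and $d'$, that $z_d$ and $w_d$ are per-document topic and word proportion vectors, and that $\rho$ and $\Omega$ are $L \times L$ tables; the function name and all numbers are hypothetical.

```python
def regression_value(eta, z_d, z_dp, tau, w_d, w_dp, rho, omega, l, lp):
    """Sum the topical, lexical, and block features for a document pair."""
    # Topical feature: topic weights applied to the element-wise product
    # of the two documents' topic vectors.
    topical = sum(e * a * b for e, a, b in zip(eta, z_d, z_dp))
    # Lexical feature: word weights applied to the element-wise product
    # of the two documents' word vectors.
    lexical = sum(t * a * b for t, a, b in zip(tau, w_d, w_dp))
    # Block feature: a weight times the link strength for the block pair.
    block = rho[l][lp] * omega[l][lp]
    return topical + lexical + block


eta = [2.0, 2.0]                  # K = 2 topic weights (illustrative)
tau = [1.0, 1.0, 1.0]             # V = 3 word weights (illustrative)
z_d, z_dp = [0.5, 0.5], [0.5, 0.5]
w_d, w_dp = [0.5, 0.5, 0.0], [0.5, 0.0, 0.5]
rho = [[1.0, 0.5], [0.5, 1.0]]    # L = 2 blocks
omega = [[0.8, 0.1], [0.1, 0.6]]

r_same_block = regression_value(eta, z_d, z_dp, tau, w_d, w_dp, rho, omega, 0, 0)
r_diff_block = regression_value(eta, z_d, z_dp, tau, w_d, w_dp, rho, omega, 0, 1)
```

With identical topical and lexical features, the pair in the same block scores higher here only because the block term is larger for that block pair.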

9/22


![Page 27: A Discriminative Topic Model using Document Network Structurewwyang/files/2016_acl_docblock_slides.pdf · models. 124 Relational Topic Model [Chang and Blei, 2010]: Topic Model, Document](https://reader034.fdocuments.in/reader034/viewer/2022042323/5f0d304c7e708231d4391b99/html5/thumbnails/27.jpg)

Relational Topic Model with Weighted Stochastic Block Model, Block Priors, Various Features, and Hinge Loss

$\Pr(B_{d,d'} = 1) = \sigma(R_{d,d'})$ (Sigmoid Loss)

→ $\Pr(B_{d,d'}) = \exp(-2 \max(0, 1 - B_{d,d'} R_{d,d'}))$ (Hinge Loss)

Make more effective use of side information when inferring topics.
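The two link likelihoods on this slide can be compared numerically. The sketch assumes the sigmoid form uses labels in {0, 1} and the hinge form uses labels in {-1, +1}; the slide does not state the label encoding, so treat both as assumptions. Function names are made up for this example.

```python
import math


def sigmoid_likelihood(b, r):
    """Likelihood of label b in {0, 1} under the sigmoid link."""
    p = 1.0 / (1.0 + math.exp(-r))
    return p if b == 1 else 1.0 - p


def hinge_pseudo_likelihood(b, r):
    """Pseudo-likelihood exp(-2 * max(0, 1 - b * r)) for b in {-1, +1}."""
    return math.exp(-2.0 * max(0.0, 1.0 - b * r))


# A linked pair scored beyond the margin incurs no hinge penalty.
confident_link = hinge_pseudo_likelihood(+1, 2.0)
# A linked pair scored at zero sits inside the margin and is penalized.
uncertain_link = hinge_pseudo_likelihood(+1, 0.0)
# An unlinked pair given a high score is penalized heavily.
wrong_side = hinge_pseudo_likelihood(-1, 2.0)
```

Under the hinge form, pairs scored beyond the margin all receive the same value, so only pairs inside the margin or on the wrong side of it are penalized.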

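[Figure: plate diagram with plates $K$, $L$, $L \times L$, $N_d$, and $N_{d'}$ and the variables $a$, $b$, $y_d$, $y_{d'}$, $A_{d,d'}$, $B_{d,d'}$, $z_d$, $w_d$, $z_{d'}$, and $w_{d'}$.]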