WiML Poster

Named Entity Annotation and Tagging in the Domain of Epizootics

Svitlana Volkova, William Hsu, Doina Caragea

Kansas State University, Department of Computing and Information Sciences, Manhattan, KS 66506

ACKNOWLEDGEMENTS

This work is supported through a grant from the U.S. Department of Defense. A collaborative program on IE with faculty at the University of Illinois at Urbana-Champaign (ChengXiang Zhai, Dan Roth, Jiawei Han, and Kevin Chang), the 2009 Data Sciences Summer Institute (DSSI) on Multimodal Information Access and Synthesis (MIAS), was made possible through the support of DHS/ONR.

We appreciate helpful discussions with Dr. Chris Callison-Burch, Dr. Mark Dredze, and Dr. Jason Eisner from the Center for Language and Speech Processing, Johns Hopkins University, and with Tim Weninger, Research Fellow, UIUC. We thank John Drouhard and Landon Fowles (KDD Lab, IE Team) for assistance with experiments.

KANSAS STATE UNIVERSITY

KNOWLEDGE DISCOVERY IN DATABASES LABORATORY

NATIONAL AGRICULTURAL BIOSECURITY CENTER @ K-STATE

K-State Laboratory for Knowledge Discovery in Databases (KDD)

OVERVIEW

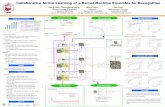

We present an information extraction (IE) application in the domain of animal diseases. Previously, such tasks were performed only on data related to human diseases. In contrast, our task is tied directly to crawling the web to retrieve information about infectious animal diseases.

THE EFFECT OF THE ONTOLOGY SIZE AND QUALITY ON THE ACCURACY OF DISEASE EXTRACTION

The data set is sampled from crawled animal disease sources in which the number of occurrences of disease named entities is above a predefined threshold. All animal disease names in the data set were manually annotated for later cross-validation.

In the first experiment, the baseline runs use dictionary look-up without and with the capitalization feature (1a and 1b, respectively). The next runs add only synonyms (2a, 2b) or only abbreviations (3a, 3b). The last runs (4a, 4b) combine all of the above features.
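A minimal Python sketch of the eight look-up runs follows. It rests on two assumptions the poster does not spell out: the capitalization feature is modeled as case-sensitive whole-word matching, and `GAZETTEER` is a hypothetical two-entry stand-in for the real gazetteer. Runs 1 to 4 differ only in which term lists are added; the a/b suffix toggles capitalization.

```python
import re
from typing import Dict, List

# Hypothetical toy gazetteer: canonical name -> synonym and abbreviation lists.
GAZETTEER = {
    "Foot and mouth disease": {
        "synonyms": ["Hoof-and-mouth disease"],
        "abbreviations": ["FMD"],
    },
    "Rift Valley fever": {"synonyms": [], "abbreviations": ["RVF"]},
}

# Run number -> (add synonyms?, add abbreviations?)
RUNS = {"1": (False, False), "2": (True, False), "3": (False, True), "4": (True, True)}


def surface_forms(use_synonyms: bool, use_abbreviations: bool) -> List[str]:
    """Collect the strings a given run is allowed to match."""
    forms = []
    for name, entry in GAZETTEER.items():
        forms.append(name)
        if use_synonyms:
            forms.extend(entry["synonyms"])
        if use_abbreviations:
            forms.extend(entry["abbreviations"])
    return forms


def count_matches(text: str, forms: List[str], case_sensitive: bool) -> int:
    """Count whole-word occurrences of any surface form in the text."""
    flags = 0 if case_sensitive else re.IGNORECASE
    return sum(
        len(re.findall(r"\b" + re.escape(form) + r"\b", text, flags))
        for form in forms
    )


def run_all(text: str) -> Dict[str, int]:
    """Match counts for runs 1a..4b ('a' = no capitalization, 'b' = with it)."""
    results = {}
    for run, (syn, abbr) in RUNS.items():
        forms = surface_forms(syn, abbr)
        results[run + "a"] = count_matches(text, forms, case_sensitive=False)
        results[run + "b"] = count_matches(text, forms, case_sensitive=True)
    return results


TEXT = ("Foot and mouth disease (FMD) hit the farm. Hoof-and-mouth disease is "
        "another name for it. The file fmd.log is unrelated.")
print(run_all(TEXT))
```

On this toy text, runs 3a and 4a also count the lowercase "fmd" in a file name, while 3b and 4b do not. This is one way case-sensitive matching can raise precision for short abbreviations.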

In the second experiment, we divide the data into training and test sets. From the training examples, we learn a model for animal disease name extraction by discovering relations between concepts, and we report accuracy on the test set.

In the third experiment, we compare our approach of learning relations between concepts with the Google Sets method. We report results in terms of precision, recall, and F-measure, and we build learning curves for both methods to show how the size and quality of the ontology influence the accuracy of the extracted results.
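For reference, the three reported metrics can be computed from gold and predicted entity spans as sketched below. This assumes exact span matching and the balanced F-measure (F1). The poster does not say how partial matches were scored, and the `(document id, start, end)` span format is hypothetical.

```python
from typing import Iterable, Tuple

# Hypothetical span format: (document id, start offset, end offset).
Span = Tuple[int, int, int]


def precision_recall_f1(gold: Iterable[Span],
                        predicted: Iterable[Span]) -> Tuple[float, float, float]:
    """Exact-match precision, recall, and balanced F-measure over entity spans."""
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


gold = {(0, 0, 22), (0, 24, 27), (1, 10, 27)}
predicted = {(0, 0, 22), (0, 24, 27), (1, 40, 43), (1, 50, 53)}
print(precision_recall_f1(gold, predicted))
```

Here two of four predictions are correct and two of three gold spans are found, so precision is 1/2, recall is 2/3, and F1 is 4/7.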

INFORMATION EXTRACTION IN THE DOMAIN OF EPIZOOTICS

The IE task in the domain of epizootics can be defined as the automatic extraction of structured information related to animal diseases from unstructured web documents with varied content. The task involves developing several modules for document-level tagging of specific entities, such as animal disease names, species, vaccines, and serotypes, within a crawled document collection.

GAZETTEER COLLECTION AND ONTOLOGY CONSTRUCTION

The main purpose of IE using a gazetteer is to retrieve tokens that match at least one known animal disease name or one of its synonyms or abbreviations. We collect prior domain-specific knowledge and use it to construct an ontology of animal disease concepts. The extraction technique is based on pattern matching. The gazetteer is collected semi-automatically from official web portals. Starting from the initial gazetteer, we enriched the ontology with latent synonymy and causal relations between related concepts.

[Figure: document collection components: Email, Literature, Crawler, Document Collection, Query, DB]
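The ontology described above can be sketched as a list of concept records that carry synonym, abbreviation, and causal links, from which a flat gazetteer is derived. This is a minimal sketch under assumptions: the `DiseaseConcept` class, its fields, and the entries are illustrative, not the poster's actual ontology. The causal links shown (Q fever and *Coxiella burnetii*, baylisascariasis and *Baylisascaris procyonis*, ovine epididymitis and *Brucella ovis*) are the ones the poster itself mentions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DiseaseConcept:
    """One ontology node: a canonical disease name plus its relations."""
    canonical: str
    synonyms: List[str] = field(default_factory=list)
    abbreviations: List[str] = field(default_factory=list)
    caused_by: List[str] = field(default_factory=list)  # causal links to agents


# Illustrative entries only; not the poster's actual ontology.
ONTOLOGY = [
    DiseaseConcept("Foot and mouth disease",
                   synonyms=["Foot-and-mouth disease"], abbreviations=["FMD"]),
    DiseaseConcept("Q fever", caused_by=["Coxiella burnetii"]),
    DiseaseConcept("Baylisascariasis", caused_by=["Baylisascaris procyonis"]),
    DiseaseConcept("Ovine epididymitis", caused_by=["Brucella ovis"]),
]


def build_gazetteer(concepts: List[DiseaseConcept]) -> Dict[str, str]:
    """Map every lower-cased surface form to its canonical disease name."""
    gazetteer = {}
    for concept in concepts:
        for form in [concept.canonical, *concept.synonyms, *concept.abbreviations]:
            gazetteer[form.lower()] = concept.canonical
    return gazetteer


def diseases_caused_by(concepts: List[DiseaseConcept], agent: str) -> List[str]:
    """Follow causal links backwards: which diseases does this agent cause?"""
    return [c.canonical for c in concepts if agent in c.caused_by]


gazetteer = build_gazetteer(ONTOLOGY)
print(gazetteer["fmd"])
print(diseases_caused_by(ONTOLOGY, "Coxiella burnetii"))
```

Keeping relations on the concept rather than in the flat gazetteer lets the same structure serve both look-up and relation queries.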

CLASSIFICATION-BASED NAMED ENTITY RECOGNITION

Named Entity Recognition (NER) is a subtask of IE that seeks to locate atomic elements in text and classify them into predefined categories, such as:

disease names (e.g., “foot and mouth disease”);

viruses (e.g., “picornavirus”) and serotypes (e.g., “Asia-1”);

species and their quantities (e.g., “sheep”, “pigs”);

locations where an outbreak happened, at different levels of granularity (e.g., “United Kingdom”, “eastern provinces of Shandong and Jiangsu, China”);

dates in different formats, including special cases (e.g., “last Tuesday”, “two months ago”);

organizations that report an outbreak (e.g., “DEFRA”, “CDC”).
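Classification-based NER is commonly cast as per-token classification, with entity spans encoded as BIO tags (B = beginning, I = inside, O = outside). The poster does not state its tagging scheme, so this encoding is an assumption. The labels `DIS` and `LOC` come from the poster's example sentence, while `QTY` and `SPE` are hypothetical.

```python
from typing import List, Tuple

# (start token, end token exclusive, label); QTY and SPE are hypothetical labels.
Span = Tuple[int, int, str]


def to_bio(tokens: List[str], spans: List[Span]) -> List[str]:
    """Encode entity spans as per-token B-/I-/O tags for a token classifier."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = "B-" + label
        for i in range(start + 1, end):
            tags[i] = "I-" + label
    return tags


tokens = "Foot-and-mouth disease killed 15 hog on farm in Taiwan".split()
spans = [(0, 2, "DIS"), (3, 4, "QTY"), (4, 5, "SPE"), (8, 9, "LOC")]
for token, tag in zip(tokens, to_bio(tokens, spans)):
    print(f"{token}\t{tag}")
```

A classifier trained on such tags predicts one label per token, and contiguous B-/I- runs are decoded back into entity mentions.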

[Figure: ANIMAL DISEASE ontology fragment with concepts Dipylidium infection, Tapeworm, Q fever, Coxiella burnetii, C. burnetii, Baylisascariasis, Baylisascaris procyonis, B. melis, B. procyonis, B. transfuga]

DOMAIN INDEPENDENT KNOWLEDGE

Location hierarchy, containing names of countries, states or provinces, cities, etc.; canonical date/time representation.

DOMAIN SPECIFIC KNOWLEDGE

Medical ontology, containing names of diseases, viruses, animal species, etc., organized in a conceptual hierarchy.

Example: The US saw its latest FMD outbreak in Montebello, California, in 1929, when 3,600 animals were slaughtered.

Animal Disease Names, Locations, Dates/Times, Quantities

DOCUMENT COLLECTION

Goal: to extract structured information with facts and entities related to events from unstructured or semistructured sources.

FUTURE WORK

The animal disease extraction task is a prerequisite for more advanced content analysis of the unstructured documents within a corpus. Accordingly, we plan to design an NER-driven system for extracting structured tuples that describe animal disease-related events.

The approach extends the shared NER task of identifying persons, organizations, and locations to cover not only disease names but also the constituent entities and attributes of these event tuples. These include dates and times, quantities with relevant units, and geo-referenced locations. A primary overall objective of the IE task is to support timeline and map-based visualization of events.
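The event tuples described above can be sketched as a simple record filled from tagged entities, using the poster's example "Foot-and-mouth disease killed 15 hog on farm in Taiwan. Outbreak was reported on 9 June." The `OutbreakEvent` class, the label-to-slot mapping, and the first-mention-wins rule are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class OutbreakEvent:
    """Hypothetical event tuple with the attributes listed above."""
    disease: Optional[str] = None
    species: Optional[str] = None
    quantity: Optional[int] = None
    location: Optional[str] = None
    date: Optional[str] = None


# Hypothetical mapping from NER labels to template slots.
SLOT_FOR_LABEL = {"DIS": "disease", "SPE": "species", "QTY": "quantity",
                  "LOC": "location", "DATE": "date"}


def fill_template(entities: Iterable[Tuple[str, str]]) -> OutbreakEvent:
    """Fill each slot with the first entity of the matching type; ignore the rest."""
    event = OutbreakEvent()
    for label, text in entities:
        slot = SLOT_FOR_LABEL.get(label)
        if slot is None or getattr(event, slot) is not None:
            continue
        # Assumes quantities arrive as plain integer strings such as "15".
        setattr(event, slot, int(text) if slot == "quantity" else text)
    return event


entities = [("DIS", "foot-and-mouth disease"), ("QTY", "15"), ("SPE", "hog"),
            ("LOC", "Taiwan"), ("DATE", "9 June")]
print(fill_template(entities))
```

A real system would also need co-reference resolution to attach a date stated in a later sentence ("Outbreak was reported on 9 June") to the same event.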

1. Disease names and fact sheets from the Iowa State University Center for Food Security and Public Health (CFSPH): http://www.cfsph.iastate.edu/diseaseinfo/animaldiseaseindex.htm

2. World Organisation for Animal Health (OIE), Animal Disease Data: http://www.oie.int/eng/maladies/en_alpha.htm

3. Department for Environment, Food and Rural Affairs, UK (DEFRA): http://www.defra.gov.uk/animalh/diseases/vetsurveillance/az_index.htm

4. United States Department of Agriculture (USDA), Animal and Plant Health Inspection Service: http://www.aphis.usda.gov/animal_health/animal_diseases/

5. MedlinePlus, a service of the National Library of Medicine and the National Institutes of Health: http://www.nlm.nih.gov/medlineplus/animaldiseasesandyourhealth.html

6. Wikipedia: http://en.wikipedia.org/wiki/Animal_diseases

RELATION DISCOVERY BETWEEN CONCEPTS

Synonymy (“is a kind of” relation, e.g. “Swine influenza” is a kind of “Swine fever”);

Example A: “Diseases such as Foot and Mouth Disease, Bovine TB or Johne’s Disease have far-reaching potential for major economic impact on cattle producers”.
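One simple way to read "is a kind of" relations off sentences like Example A is a Hearst-style lexical pattern ("X such as A, B or C"). This technique is swapped in purely for illustration. The poster's own relation discovery uses training data (Method A) or Google Sets (Method B), and neither is reproduced here. The regex only handles capitalized names, and because it allows "and" inside a name, a list joined with "and" would be merged into one item.

```python
import re
from typing import List, Tuple

# A capitalized multi-word name, allowing an inner "and" (e.g. "Foot and Mouth Disease").
NAME = r"[A-Z][\w'’-]*(?:\s+(?:and\s+)?[A-Z][\w'’-]*)*"
# "<hypernym> such as <NAME>, <NAME> ... or <NAME>"
SUCH_AS = re.compile(
    rf"(\w+)\s+such\s+as\s+({NAME}(?:\s*,\s*{NAME})*(?:\s*,?\s+or\s+{NAME})?)"
)


def such_as_pairs(sentence: str) -> List[Tuple[str, str]]:
    """Return (hyponym, hypernym) pairs read off a 'such as' enumeration."""
    pairs = []
    for match in SUCH_AS.finditer(sentence):
        hypernym, items = match.group(1), match.group(2)
        for item in re.split(r"\s*,\s*|\s+or\s+", items):
            pairs.append((item, hypernym))
    return pairs


EXAMPLE_A = ("Diseases such as Foot and Mouth Disease, Bovine TB or Johne’s Disease "
             "have far-reaching potential for major economic impact on cattle producers")
print(such_as_pairs(EXAMPLE_A))
```

Each extracted pair could then be proposed as a candidate relation for the ontology, subject to review.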

Disease Extractor Module

Input: text from a file.

Output: index of the first/last character; matched text and its length; canonical disease names; associated synonyms/abbreviations; non-unique/unique diseases.
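The extractor's outputs listed above map naturally onto a per-match record. The sketch below assumes a gazetteer that maps lower-cased surface forms to canonical names; `DiseaseMatch`, `extract_diseases`, and the sample data are hypothetical. It reports character offsets, matched text and length, the canonical name, and non-unique versus unique disease counts.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class DiseaseMatch:
    start: int       # index of the first character
    end: int         # index one past the last character (Python slice convention)
    text: str        # matched text; its length is end - start
    canonical: str   # canonical disease name from the gazetteer


def extract_diseases(text: str,
                     gazetteer: Dict[str, str]) -> Tuple[List[DiseaseMatch], Counter]:
    """Return every match plus a count of mentions per canonical disease.

    `gazetteer` maps lower-cased surface forms to canonical names (hypothetical format).
    """
    # Try longer forms first so a full name wins over a shorter overlapping entry.
    forms = sorted(gazetteer, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(form) for form in forms) + r")\b", re.IGNORECASE
    )
    matches = [
        DiseaseMatch(m.start(), m.end(), m.group(0), gazetteer[m.group(0).lower()])
        for m in pattern.finditer(text)
    ]
    return matches, Counter(match.canonical for match in matches)


GAZETTEER = {
    "foot and mouth disease": "Foot and mouth disease",
    "foot-and-mouth disease": "Foot and mouth disease",
    "fmd": "Foot and mouth disease",
    "q fever": "Q fever",
}
TEXT = ("FMD was confirmed in Taiwan. Foot-and-mouth disease killed 15 hogs; "
        "foot and mouth disease vaccines are scarce.")
matches, mentions = extract_diseases(TEXT, GAZETTEER)
for match in matches:
    # Print first index, last index, text, length, and canonical name.
    print(match.start, match.end - 1, match.text, len(match.text), match.canonical)
print("non-unique mentions:", sum(mentions.values()), "unique diseases:", len(mentions))
```

Building a single alternation regex keeps the scan to one pass over the text, at the cost of recompiling whenever the gazetteer changes.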

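Method A (relation discovery within training data): accuracy by number of training instances

| Training instances | 429 | 773 | 955 | 1159 | 1287 | 1442 | 1561 | 1590 | 1619 | 1682 |
|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.964 | 0.929 | 0.927 | 0.925 | 0.964 | 0.929 | 0.927 | 0.925 | 0.964 | 0.929 |

Method B (relation discovery using Google Sets): accuracy by number of training instances

| Training instances | 429 | 754 | 925 | 1118 | 1238 | 1385 | 1497 | 1524 | 1552 | 1611 |
|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.962 | 0.961 | 0.864 | 0.862 | 0.962 | 0.961 | 0.864 | 0.862 | 0.962 | 0.961 |

Dictionary look-up (number of instances: max. 429): accuracy by run

| Run | 1a | 1b | 2a | 2b | 3a | 3b | 4a | 4b |
|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.885 | 0.920 | 0.886 | 0.896 | 0.887 | 0.922 | 0.889 | 0.933 |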

[Figure: F-Measure of Method A, Method B, and Gazetteer]

DICTIONARY LOOKUP METHOD FOR DISEASE EXTRACTION

List look-up features: flexible pattern matching.

Document-level features: keyword appearance within a predefined window.

Word-level morphological features.
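The document-level feature "keyword appearance within a predefined window" could be used, for example, to accept an ambiguous abbreviation only when disease-related context appears nearby. The sketch below assumes exactly that use, a window of five tokens on each side, and a hypothetical keyword list; the poster specifies none of these.

```python
import re
from typing import List, Set

# Hypothetical context keywords; the poster does not list the ones it used.
CONTEXT_KEYWORDS = {"outbreak", "virus", "disease", "cases", "vaccine", "infected"}


def keyword_in_window(tokens: List[str], index: int, keywords: Set[str],
                      window: int = 5) -> bool:
    """True if any keyword occurs within `window` tokens on either side of tokens[index]."""
    lo = max(0, index - window)
    hi = min(len(tokens), index + window + 1)
    return any(tokens[i].lower() in keywords for i in range(lo, hi) if i != index)


def accept_abbreviation(text: str, abbreviation: str, window: int = 5) -> List[bool]:
    """For each occurrence of `abbreviation`, decide whether its context looks epizootic."""
    tokens = re.findall(r"\w+", text)
    return [keyword_in_window(tokens, i, CONTEXT_KEYWORDS, window)
            for i, token in enumerate(tokens) if token == abbreviation]


print(accept_abbreviation("A new RVF outbreak was confirmed in Kenya.", "RVF"))
print(accept_abbreviation("The RVF team meeting is scheduled for Monday.", "RVF"))
```

The window size trades recall for precision: a wider window accepts more true mentions but also more accidental keyword co-occurrences.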

[Figure: Precision, Recall, and F-Measure of dictionary look-up runs 1a to 4b]

[Figure: Recall Range of dictionary look-up runs 1a to 4b]

Averaged extraction performance over 50 web sites:

| Run | "FMD" extraction | "RVF" extraction |
|---|---|---|
| Run 1 | 3.60% | 0.20% |
| Run 2 (only capitalization) | 14.00% | 38.02% |
| Run 3 (only abbreviations + synonyms) | 84.36% | 57.52% |

[Figure: Learning Curve for Method A (Relation Discovery within Training Data) and Learning Curve for Method B (Relation Discovery using Google Sets); accuracy vs. number of ontology concepts]

[Figure: Precision/Recall of Method A, Method B, and Dictionary Look-Up]

[Figure: NLP TASKS (Syntactic Analysis, Extraction, Co-reference Resolution, Template Generation), illustrated on the text “Foot-and-mouth disease killed 15 hog on farm in Taiwan. Outbreak was reported on 9 June.”]

Foot-and-mouth disease [SUBJ] killed [VP] 15 hog on farm in Taiwan [PP]

Foot-and-mouth disease [DIS] killed 15 hog on farm in Taiwan [LOC]; Fact: killed; Disease: foot-and-mouth disease; Location: Taiwan; Species: hog; Quantity: 15

Event: outbreak; Species: 15 hog; Disease: foot-and-mouth disease; Location: Taiwan; Date/Time: 9 June


Causal links (“is caused by”, e.g. “Ovine epididymitis is caused by Brucella ovis”).

Example F: “Bluetongue virus (BTV), a member of Orbivirus genus within the Reoviridae family causes Bluetongue disease in livestock (sheep, goat, cattle)”.
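The two causal phrasings in these examples ("X is caused by Y" and "Y, ..., causes X") can be captured by hand-written surface patterns. This regex approach is swapped in for illustration and is not the poster's relation-discovery method. The `TERM` pattern is an assumption that only covers short names of one capitalized word plus an optional lower-case word.

```python
import re
from typing import List, Tuple

# Short organism/disease names: a capitalized word plus an optional lower-case word,
# e.g. "Brucella ovis", "C. burnetii", "Bluetongue disease".
TERM = r"[A-Z][\w.-]*(?:\s+[a-z][\w-]*)?"

# "<effect> is caused by <cause>"
PASSIVE = re.compile(rf"(?P<effect>{TERM})\s+is\s+caused\s+by\s+(?P<cause>{TERM})")
# "<cause> (ABBR), <appositive> causes <effect>"
ACTIVE = re.compile(
    rf"(?P<cause>{TERM})\s*(?:\([^)]*\))?(?:,[^,]*?)?\s+causes\s+(?P<effect>{TERM})"
)


def causal_links(sentence: str) -> List[Tuple[str, str]]:
    """Return (cause, effect) pairs found by either surface pattern."""
    links = []
    for pattern in (PASSIVE, ACTIVE):
        for m in pattern.finditer(sentence):
            links.append((m.group("cause"), m.group("effect")))
    return links


EXAMPLE_F = ("Bluetongue virus (BTV), a member of Orbivirus genus within the Reoviridae "
             "family causes Bluetongue disease in livestock (sheep, goat, cattle)")
print(causal_links("Ovine epididymitis is caused by Brucella ovis."))
print(causal_links(EXAMPLE_F))
```

Patterns like these are brittle (an extra comma before "causes" breaks the active form), which is one reason to learn relations from data instead.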

1a: initial gazetteer only, without capitalization

1b: initial gazetteer only, with capitalization

2a: initial gazetteer + synonyms only, without capitalization

2b: initial gazetteer + synonyms only, with capitalization

3a: initial gazetteer + abbreviations only, without capitalization

3b: initial gazetteer + abbreviations only, with capitalization

4a: initial gazetteer + synonyms + abbreviations, without capitalization

4b: initial gazetteer + synonyms + abbreviations, with capitalization

WWW (official reports about animal disease outbreaks, disease descriptions from surveillance networks, fact sheets, etc.)
