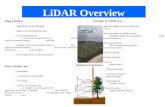

What is an MMS? A vehicle-based imaging and LiDAR data collection system.

What is LiDAR?

Uploaded by justin-farrow. Category: Education.

Transcript of What is LiDAR?

- 1. What is Lidar? A Resource Curated by AirTopo Group. PDF generated using the open source mwlib toolkit (see http://code.pediapress.com/ for more information) at Sun, 26 May 2013 00:10:46 UTC.