Weighted Substructure Mining for Image Analysis¹

Sebastian Nowozin, Max Planck Institute for Biological Cybernetics, Tubingen, Germany
Koji Tsuda, Max Planck Institute for Biological Cybernetics, Tubingen, Germany
Takeaki Uno, National Institute of Informatics, Tokyo, Japan
Taku Kudo, Google Japan Inc., Tokyo, Japan
Gokhan Bakır, Google GmbH, Zurich, Switzerland

Abstract

In web-related applications of image categorization, it is desirable to derive an interpretable classification rule with high accuracy. Using the bag-of-words representation and the linear support vector machine, one can partly fulfill the goal, but the accuracy of linear classifiers is not high and the obtained features are not informative for users. We propose to combine item set mining and large margin classifiers to select features from the power set of all visual words. Our resulting classification rule is easier to browse and simpler to understand, because each feature has richer information. As a next step, each image is represented as a graph where nodes correspond to local image features and edges encode geometric relations between features. Combining graph mining and boosting, we can obtain a classification rule based on subgraph features that contain more information than the set features. We evaluate our algorithm in a web-retrieval ranking task where the goal is to reject outliers from a set of images returned for a keyword query. Furthermore, it is evaluated on the supervised classification tasks with the challenging VOC2005 data set. Our approach yields excellent accuracy in the unsupervised ranking task and competitive results in the supervised classification task.

1. Introduction

Despite some research progress, the ability of programs to automatically interpret the content of images, or even just to assist a human user in sorting or grouping images, is still rather limited, both in terms of accuracy and scalability. For example, thousands of images are easily retrieved by keyword search using, e.g., Google Image. Typically they contain outlier images that do not semantically match what the user really wanted. Therefore it would be helpful if a machine learning algorithm could identify those outliers automatically [3]. Another attractive task would be to classify retrieved images automatically into folders. This is basically a supervised multi-class image classification problem.

Images can be modeled as collections of parts, as is done e.g. in the popular bag-of-words model. Given such a representation we can try to identify patterns that frequently appear among the images. We propose to use frequent item set mining on the bag-of-words representation in order to identify likely discriminative patterns in a collection of images. By combining this mining step with a 1-class linear ν-SVM we obtain a function to rank each image by its similarity to the overall collection of images. We apply the algorithm to detect outlier images in a web-retrieval task where images are the result of a keyword query.

¹ A longer version of this paper will appear in the CVPR 2007 proceedings.

In supervised classification, where we have known training class labels, we can directly search for the most discriminative patterns instead of assuming frequent patterns to be the most discriminative ones. By using weighted substructure mining algorithms, the patterns maximizing a score function can be sought efficiently. We obtain a classification function using these patterns by combining the weighted mining with linear programming boosting [1] into a new classifier termed item set boosting. This new classifier allows us to iteratively build an optimal classification rule as a linear combination of simple and interpretable hypothesis functions, where each hypothesis function checks for the presence of a combination of visual codewords.

The proposed approach is flexible and we demonstrate this by replacing the bag-of-words representation with one based on labeled connected graphs. In the graph each vertex represents a local image feature and the geometric relationships between the features are encoded as edge attributes. Instead of item sets, the discriminative patterns are subgraphs, which capture co-occurrence of multiple features as well as their geometric relationships.

Literature Review. Quack et al. [7] use frequent item set mining on interest points extracted for each video frame in a video sequence. Each frame becomes a set of interest points, and frequent spatial configurations corresponding to individual objects are found from these sets. An earlier similar work is Sivic and Zisserman [9].

2. Unsupervised image ranking

Consider the task of keyword based image retrieval, where for a given keyword a set of images $x_1, x_2, \dots, x_N$ is retrieved. Most of the images will be related to the keyword, but there will be a small fraction of images that do not relate to the keyword. Patterns which consistently appear in the samples are likely to be discriminative for sorting out the outliers.


For this ranking task we use the bag-of-words representation commonly used in computer vision based on local image features. Local image features are a sparse representation for natural images. Modern local feature extraction methods work in two steps. First, an interest point operator defined on the image domain extracts a set of interest points [5]. Second, the image information in the neighborhood around each interest point is used to build a fixed-length descriptor such that invariance and robustness against common image transformations is obtained [6]. We assume that each extracted interest point $p_i$ has image coordinates $p_i.\mathrm{coords} \in \mathbb{R}^2$ and relative scale information $p_i.\mathrm{scale} \in \mathbb{R}^+$. For each image, a set of local image features is extracted and the feature descriptors are projected onto a set of discrete “visual words” from a codebook. The codebook is created a priori by k-means clustering. The discretization is carried out using nearest-neighbor matching to the codebook vectors.
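The discretization step can be illustrated with a minimal sketch. It assumes the codebook is already available (the k-means step is not shown), uses toy 2-D vectors in place of real descriptors, and the function names `quantize` and `to_word_set` are hypothetical, not taken from the paper.

```python
from math import dist

def quantize(descriptors, codebook):
    """Map each descriptor to the index of its nearest codebook vector."""
    return [min(range(len(codebook)), key=lambda j: dist(desc, codebook[j]))
            for desc in descriptors]

def to_word_set(descriptors, codebook):
    """Binary bag-of-words: the set of visual-word indices present in one image."""
    return set(quantize(descriptors, codebook))

# Toy 2-D "descriptors" and a 3-word codebook (illustrative values only).
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descriptors = [(0.1, -0.2), (0.9, 1.2), (4.0, 6.0), (1.1, 0.8)]
words = quantize(descriptors, codebook)
word_set = to_word_set(descriptors, codebook)
```

The set form is what the item set mining in Section 2.1 operates on; word counts are deliberately discarded in this sketch.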

2.1. Method

Denote by $d$ the number of visual words in the codebook. An image is represented as a set of visual words, or equivalently as a $d$-dimensional binary vector $x \in \{0, 1\}^d$. In item set mining, a pattern $t \in \{0, 1\}^d$ is also defined as a set of visual words. A pattern $t$ is included in $x$, i.e., $t \subseteq x$, if for all $t_i = 1$ we also have $x_i = 1$. Denote by $\mathcal{T}$ the set of all possible patterns whose number of non-zero elements is between $t_{\min}$ and $t_{\max}$.

We set the frequency threshold τ such that the $k$ most frequent patterns $T = \{t_1, \dots, t_k\}$ are extracted. For each image $x_i$, a binary vector is built as $V_i = [I(t_1 \subseteq x_i), \dots, I(t_k \subseteq x_i)]$, $V_i \in \{0, 1\}^k$, which is the new representation for each image. On this new representation we train a 1-class ν-SVM classifier with a linear kernel [8]. The ν-SVM has a high response for “prototypical” samples. Thus, we establish a ranking by ordering the samples according to the output of the trained classifier. Samples at the top of the list are more likely to contain what the user is looking for.
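As a rough illustration of the representation described above, the sketch below enumerates item sets by brute force and builds the binary vectors $V_i$. It is an assumption-laden toy: a real system would use a dedicated frequent item set miner rather than exhaustive enumeration, the helper names are hypothetical, and the 1-class ν-SVM training on the resulting vectors is left out.

```python
from itertools import combinations

def frequent_item_sets(images, t_min, t_max, k):
    """Return the k most frequent patterns with t_min..t_max words (brute force)."""
    vocabulary = sorted(set().union(*images))
    counts = {}
    for size in range(t_min, t_max + 1):
        for pattern in combinations(vocabulary, size):
            support = sum(1 for x in images if set(pattern) <= x)
            if support > 0:
                counts[pattern] = support
    # Sort by decreasing support; ties are broken by the pattern for determinism.
    ranked = sorted(counts, key=lambda p: (-counts[p], p))
    return [(p, counts[p]) for p in ranked[:k]]

def pattern_features(image, patterns):
    """V_i = [I(t_1 <= x_i), ..., I(t_k <= x_i)] as a list of 0/1 values."""
    return [1 if set(p) <= image else 0 for p in patterns]

# Four toy images given as sets of visual-word indices; the last one is an outlier.
images = [{0, 1, 2}, {0, 1, 3}, {0, 1, 2, 4}, {5, 6}]
top = frequent_item_sets(images, t_min=2, t_max=3, k=3)
patterns = [p for p, _ in top]
V = [pattern_features(x, patterns) for x in images]
```

In this toy data the outlier image contains none of the frequent patterns, so its feature vector is all zeros, which is the kind of signal the ranking step relies on.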

2.2. Experiments and Results

To evaluate the performance of our ranking approach we use the dataset of 5245 images from Fritz and Schiele [3]. The images were obtained by keyword searches for “motorbikes” and vary widely in shape, orientation, type and background scene. All images were assigned labels based on whether they actually show a motorbike or not; the labels are only used to measure the performance of the method and are never used in the training phase. Of all downloaded images, 194 images do not contain motorbikes and our task is to identify these outliers.

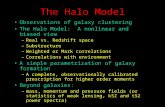

Here, we fix k = 128 and the 1-class SVM parameter ν = 0.1; the results were stable over a large range of values of k and ν. The results are shown in Table 1. The subjective interpretability of the most influential patterns is shown in Figure 1. The “baseline histogram” results are obtained by normalizing the bag-of-words vector and applying a linear 1-class ν-SVM. For the 80 Harris-Laplace and the dense 300 Hessian-Laplace bags we used a codebook size of 64 and 192 words, respectively. This choice is rather arbitrary, but the performance is comparable over large variations of the codebook sizes. The baseline results clearly demonstrate that a combination of individual features alone is not informative enough for ranking, whereas the item set representation, with its use of combinations of features, achieves state-of-the-art results.

| Method | EER |
|---|---|
| Fritz and Schiele [3], appearance | 0.66 |
| Fritz and Schiele [3], appearance+struct. | 0.71 |
| Item Set based, appearance, 80 HarLap | 0.6701 |
| Item Set based, appearance, 300 HesLap | 0.7268 |
| baseline histogram, 80 HarLap | 0.5514 |
| baseline histogram, 300 HesLap | 0.5914 |

Table 1. Results for the unsupervised image ranking experiment. The scores are ROC equal error rates (EER).

3. Supervised object classification

For supervised two-class object classification we are given a set of images with known class labels. For the training phase we assume each image is a member of only one class.

3.1. Classification rule

Our classification function is a linear combination of simple classification stumps $h(x; t, \omega)$ and has the form

$$f(x) = \sum_{(t,\omega) \in \mathcal{T} \times \Omega} \alpha_{t,\omega}\, h(x; t, \omega).$$

The individual stumps $h(\cdot; t, \omega)$ are parametrized by the pattern $t$ and additional parameters $\omega \in \Omega$. We will use $\Omega = \{-1, 1\}$ and $h(x; t, \omega) = \omega\,(2 I(t \subseteq x) - 1)$. Also, $\alpha_{t,\omega}$ is a weight for pattern $t$ and parameters $\omega$ such that $\sum_{(t,\omega) \in \mathcal{T} \times \Omega} \alpha_{t,\omega} = 1$ and $\alpha_{t,\omega} \ge 0$. This is a linear discriminant function in an intractably high-dimensional space. To obtain an interpretable rule, we need a sparse weight vector α in which only a few weights are nonzero. In the following, we present a linear programming approach for efficiently capturing patterns with non-zero weights.
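To make the form of the rule concrete, here is a small sketch of the stump $h$ and the weighted sum $f$ for item set patterns. The dictionary layout and the example weights are hypothetical and chosen only for illustration; only stumps with nonzero weight are stored, which mirrors the sparsity the text asks for.

```python
def stump(x, t, omega):
    """h(x; t, omega) = omega * (2 * I(t <= x) - 1), with omega in {-1, +1}."""
    return omega * (2 * int(t <= x) - 1)

def classify(x, weighted_stumps):
    """f(x): sum of alpha * h(x; t, omega) over the stumps with nonzero weight."""
    return sum(alpha * stump(x, t, omega)
               for (t, omega), alpha in weighted_stumps.items())

# Hypothetical sparse rule: two patterns whose weights sum to 1.
rule = {
    (frozenset({3, 7}), +1): 0.75,  # votes +1 when words 3 and 7 co-occur
    (frozenset({2}), -1): 0.25,     # votes -1 when word 2 is present
}
score_a = classify({1, 3, 7}, rule)  # contains {3, 7}, lacks word 2
score_b = classify({2, 5}, rule)     # lacks {3, 7}, contains word 2
```

Because the weights are non-negative and sum to one, $f(x)$ always lies in $[-1, 1]$, and each stored pattern can be inspected directly as a set of codewords.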

Figure 1. Top five most influential patterns. Each row shows the response of the global top five and global bottom five samples for one item set pattern, where each pattern carries a positive weight. In each image the features responding to the pattern are drawn in blue. As in the experiment, k = 128 has been used, and the top five images all have the same total sum as they all contain all frequent patterns. The obvious mistake in image 5243 is due to the particularly low resolution of the image (77x54 pixels). The patterns are interpretable; for example, pattern #19 (second row) captures a wheel and handlebar in the shown images, and pattern #100 (third row) captures features on the wheels.

3.2. LPBoost 2-class formulation

To obtain a sparse weight vector, we use the formulation of LPBoost [1]. Given the training images $\{(x_n, y_n)\}_{n=1}^{\ell}$, $y_n \in \{-1, 1\}$, the training problem is formulated as a linear program (LP) with a large number of variables. By dualizing the LP, we obtain an equivalent problem with a small number of variables but a huge number of constraints. Such a linear program can be efficiently solved by column generation techniques: starting with an empty pattern set, the pattern whose corresponding constraint is violated the most is identified and added iteratively. Each time a pattern is added, the optimal solution is updated by solving the reduced dual problem. This process ensures convergence to the globally optimal solution. While the original primal variables define a weight distribution over classification stumps, the dual variables λ correspond to a weight distribution over the input samples [1].
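For orientation, the soft-margin linear program of LPBoost from Demiriz et al. [1] can be written in this paper's notation roughly as follows. The margin variable $\rho$, the slack variables $\xi_n$, the dual bound $\gamma$ and the constant $D = 1/(\nu \ell)$ follow [1]; the text above does not spell them out, so treating this as the exact variant used here is an assumption.

```latex
% Primal: many variables (one weight per stump), few constraints.
\begin{aligned}
\max_{\rho,\,\alpha,\,\xi}\quad & \rho - D \sum_{n=1}^{\ell} \xi_n \\
\text{s.t.}\quad & y_n \sum_{(t,\omega) \in \mathcal{T} \times \Omega}
      \alpha_{t,\omega}\, h(x_n; t, \omega) + \xi_n \ge \rho,
      \quad n = 1, \dots, \ell, \\
& \sum_{(t,\omega)} \alpha_{t,\omega} = 1, \quad \alpha_{t,\omega} \ge 0,
      \quad \xi_n \ge 0.
\end{aligned}

% Dual: few variables (one weight per sample), one constraint per stump.
\begin{aligned}
\min_{\lambda,\,\gamma}\quad & \gamma \\
\text{s.t.}\quad & \sum_{n=1}^{\ell} \lambda_n y_n h(x_n; t, \omega) \le \gamma
      \quad \text{for all } (t,\omega) \in \mathcal{T} \times \Omega, \\
& \sum_{n=1}^{\ell} \lambda_n = 1, \quad 0 \le \lambda_n \le D.
\end{aligned}
```

Under this reading, column generation keeps only the dual constraints of the patterns found so far, and a new pattern is worth adding exactly when its left-hand side exceeds the current $\gamma$.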

In each iteration of LPBoost we add one constraint to the linear program. Selecting the constraint to add corresponds to a weighted substructure mining problem and is the computationally most expensive step. Therefore the efficiency of LPBoost depends on how efficiently the pattern corresponding to the most violated constraint of the dual LP can be found. The search problem is formulated as

$$(\hat{t}, \hat{\omega}) = \operatorname*{argmax}_{(t,\omega) \in \mathcal{T} \times \Omega} \mathrm{gain}(t, \omega),$$

where

$$\mathrm{gain}(t, \omega) = \sum_{n=1}^{\ell} \lambda_n y_n h(x_n; t, \omega). \qquad (1)$$

Problem (1) can be solved using the following generalization of frequent substructure mining in which each sample $x_n$ is assigned a weight $\lambda_n \in \mathbb{R}$.

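Problem 1 (Weighted substructure mining) Given a set $X = \{x_1, \dots, x_N\}$, $x_i \in \mathcal{T}$, associated weights $L = \{\lambda_1, \dots, \lambda_N\}$, $\lambda_i \in \mathbb{R}$, and a threshold τ, find the complete set of patterns and parameters $T_w = \{(t_1, \omega_1), \dots, (t_{|T_w|}, \omega_{|T_w|})\}$, $t_i \in \mathcal{T}$, $\omega_i \in \Omega$, such that for all $t_i$ it holds that

$$\sum_{n} \lambda_n h(x_n; t_i, \omega_i) \ge \tau.$$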

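This problem is more difficult than frequent substructure mining, because we need to search with respect to a non-monotonic score function (1). So we use the following monotonic bound, $\mathrm{gain}(t, \omega) \le \mu(t)$, with

$$\mu(t) = \max\left\{\, 2 \sum_{n \,:\, y_n = +1,\ t \subseteq x_n} \lambda_n - \sum_{n=1}^{\ell} y_n \lambda_n,\ \ 2 \sum_{n \,:\, y_n = -1,\ t \subseteq x_n} \lambda_n + \sum_{n=1}^{\ell} y_n \lambda_n \right\}.$$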

The pattern set $T_w$ is enumerated by a branch-and-bound procedure based on this bound. To obtain only the best pattern, the algorithm is slightly modified such that τ is always updated to the current best value [4].
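The branch-and-bound idea can be sketched for item sets as follows. This is a simplified illustration under stated assumptions, not the implementation of [4]: the weights are assumed non-negative (as the LPBoost dual variables are), the enumeration is a plain depth-first search over sorted word indices, and all function names are hypothetical.

```python
def gain(t, omega, X, y, lam):
    """Eq. (1): sum_n lam_n * y_n * h(x_n; t, omega)."""
    return sum(l * yn * omega * (2 * int(t <= x) - 1)
               for x, yn, l in zip(X, y, lam))

def bound(t, X, y, lam):
    """mu(t): upper bound on the gain of t and all its supersets (lam_n >= 0)."""
    total = sum(l * yn for yn, l in zip(y, lam))
    pos = sum(l for x, yn, l in zip(X, y, lam) if yn == +1 and t <= x)
    neg = sum(l for x, yn, l in zip(X, y, lam) if yn == -1 and t <= x)
    return max(2 * pos - total, 2 * neg + total)

def best_pattern(X, y, lam, max_size):
    """Depth-first search over item sets, pruned by mu(t) against the best gain."""
    vocabulary = sorted(set().union(*X))
    best = (float("-inf"), None, None)  # (gain, pattern, omega)

    def search(t, start):
        nonlocal best
        for i in range(start, len(vocabulary)):
            child = t | {vocabulary[i]}
            for omega in (+1, -1):
                g = gain(child, omega, X, y, lam)
                if g > best[0]:
                    best = (g, child, omega)  # tau is raised to the current best
            # No superset of child can exceed mu(child); prune if it is too small.
            if len(child) < max_size and bound(child, X, y, lam) > best[0]:
                search(child, i + 1)

    search(frozenset(), 0)
    return best

# Toy data: word sets, labels, and uniform sample weights.
X = [{0, 1, 2}, {0, 1}, {1, 2}, {0, 3}, {2, 3}]
y = [+1, +1, -1, -1, -1]
lam = [0.2] * 5
best_gain, best_t, best_omega = best_pattern(X, y, lam, max_size=3)
```

In the toy data the pattern {0, 1} occurs in exactly the two positive samples, so it separates the classes perfectly and is returned with ω = +1.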

3.3. Graph Boosting

The bag-of-words model used in item set boosting discards all information about geometric relationships between the individual local features. Using graphs of local image features we can additionally model the geometry. The weighted substructure mining problem is tractable using graph mining algorithms; therefore our framework stays the same and we only replace item sets with graphs and weighted item set mining with weighted graph mining. We define graphs on images as follows. Each interest point is represented by one vertex and its descriptor becomes the corresponding vertex label. We connect all vertices by undirected edges to obtain a completely connected graph. For each edge a temporary continuous-valued edge label vector $A = [a_1, a_2, a_3]$ is derived from its adjacent vertices' interest points $p_1, p_2$, where

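$$a_1 = \left| \log\left( \frac{p_1.\mathrm{scale}}{p_2.\mathrm{scale}} \right) \right|,$$

$$a_2 = \frac{\| p_1.\mathrm{coords} - p_2.\mathrm{coords} \|}{\min\{ p_1.\mathrm{scale},\ p_2.\mathrm{scale} \}},$$

$$a_3 = \left| \sin\left( \max\{ \mathrm{atan2}(V, W),\ \mathrm{atan2}(-V, -W) \} \right) \right|,$$

$$V = p_1.\mathrm{coords}.y - p_2.\mathrm{coords}.y, \qquad W = p_1.\mathrm{coords}.x - p_2.\mathrm{coords}.x.$$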

These attributes are used to encode the ratio of scales, a normalized distance and a horizontal orientation measure, respectively. The continuous attributes are normalized and discretized using nearest-neighbor vector quantization on a codebook created by k-means clustering on the training set.
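The three continuous edge attributes can be computed directly from the formulas above. The sketch below assumes each interest point is given as a hypothetical `(x, y, scale)` tuple; the subsequent normalization and vector quantization are not shown.

```python
from math import atan2, hypot, log, sin

def edge_attributes(p1, p2):
    """Return (a1, a2, a3) for two interest points given as (x, y, scale)."""
    x1, y1, s1 = p1
    x2, y2, s2 = p2
    a1 = abs(log(s1 / s2))                      # ratio of scales
    a2 = hypot(x1 - x2, y1 - y2) / min(s1, s2)  # distance over the smaller scale
    v, w = y1 - y2, x1 - x2
    # Taking the max over both edge directions makes a3 independent of point order.
    a3 = abs(sin(max(atan2(v, w), atan2(-v, -w))))
    return a1, a2, a3

# Two toy interest points: offset (3, 4) pixels, scales 2 and 1.
a1, a2, a3 = edge_attributes((0.0, 0.0, 2.0), (3.0, 4.0, 1.0))
```

All three values are symmetric in the two points, which is what an undirected edge label requires; $a_3$ is close to 0 for horizontal edges and 1 for vertical ones.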

Problem (1) can be solved for graphs by extending gSpan of Yan and Han [10]. gSpan originally mines frequent subgraphs from a given set of labeled graphs; here we use the extended gSpan version of Kudo et al. [4].

3.4. Experiments and Results

We evaluate our supervised classification approach using the VOC2005 dataset [2]. We use the same experimental procedure as used in the official VOC challenge report [2] and evaluate the classifier on the two test sets (“test1” and “test2”). To extract local image features we use the Harris-Laplace interest point operator and the SIFT feature descriptor [5, 6], extracted by the binaries provided by Mikolajczyk². The interest point operator threshold is adjusted for each image such that 80 features are extracted.

| Test set | Method | Class 1 | Class 2 | Class 3 | Class 4 |
|---|---|---|---|---|---|
| 1 | χ² SVM | 0.829 | 0.728 | 0.738 | 0.858 |
| 1 | Graph Boosting | 0.764 | 0.663 | 0.679 | 0.775 |
| 1 | Item Set Boosting | 0.745 | 0.647 | 0.679 | 0.812 |
| 2 | χ² SVM | 0.665 | 0.631 | 0.599 | 0.687 |
| 2 | Graph Boosting | 0.643 | 0.564 | 0.596 | 0.649 |
| 2 | Item Set Boosting | 0.615 | 0.574 | 0.601 | 0.667 |

Table 2. VOC2005 ROC Equal Error Rate results. The classes are motorbike (1), bike (2), person (3) and car (4). Test set 1 is from the same distribution as the training set, test set 2 is much more difficult. Here we used 80 Harris-Laplace features per image.

To compare the relative performance of our method with another approach we also implemented the method of Zhang et al. [11], which performed best in the VOC2005 object classification challenge. In Zhang's approach each image is represented by a histogram of local image feature descriptors. One SVM is trained per class in a one-vs-rest fashion. The kernel function used is the χ²-kernel³ between two N-element normalized histograms h, h′. In this kernel, A is a normalization constant set to the mean of the χ²-distances between all training samples.
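The χ²-kernel of footnote 3, $K(h, h') = \exp(-\frac{1}{A} \cdot \frac{1}{2} \sum_{n: h_n + h'_n > 0} (h_n - h'_n)^2 / (h_n + h'_n))$, can be sketched in a few lines. The function names are hypothetical, and computing $A$ as the mean over all unordered pairs of distinct training histograms is one possible reading of the text, stated here as an assumption.

```python
from math import exp

def chi2_distance(h, g):
    """0.5 * sum over bins with h_n + g_n > 0 of (h_n - g_n)^2 / (h_n + g_n)."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(h, g) if a + b > 0)

def chi2_kernel(h, g, A):
    """K(h, g) = exp(-chi2_distance(h, g) / A)."""
    return exp(-chi2_distance(h, g) / A)

def mean_pairwise_distance(histograms):
    """Assumed definition of A: mean chi2-distance over all unordered pairs."""
    pairs = [(i, j) for i in range(len(histograms))
             for j in range(i + 1, len(histograms))]
    return sum(chi2_distance(histograms[i], histograms[j])
               for i, j in pairs) / len(pairs)

# Three toy normalized histograms standing in for training images.
train = [[0.5, 0.5, 0.0], [0.25, 0.25, 0.5], [0.0, 0.5, 0.5]]
A = mean_pairwise_distance(train)
K01 = chi2_kernel(train[0], train[1], A)
```

Skipping bins where both histograms are zero avoids a division by zero and matches the summation condition in the footnote.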

Results. The results for the different methods on the supervised classification experiment are shown in Table 2. The first method, the χ²-SVM, uses histogram features and has a codebook size of 1024 words. The graph boosting method has ν = 0.15 and codebook sizes of 48 and 96. The two item set boosting results use codebooks of size 512 and 384 and ν = 0.4 and ν = 0.2, respectively.

The results in the supervised object classification task are competitive with the χ²-SVM approach. Generally, and as expected, graph boosting does better than the item set boosting approach. For the easier test set (“test1”), our boosting approach is consistently a bit worse than the χ²-SVM one.

Discussion. Considering the results of our supervised approach, it is particularly interesting that we achieve respectable results using only a linear combination of a small number of simple features. This is the case because our formulation is able to explicitly select discriminative features from a very large feature space. The theoretical number of possible item sets is $O(2^n)$, where $n$ is the number of codewords, whereas the limit for the theoretical number of possible subgraphs is even larger.

² http://www.robots.ox.ac.uk/~vgg/research/affine/

³ $K(h, h') = \exp\left( -\frac{1}{A} \left[ \frac{1}{2} \sum_{n \,:\, h_n + h'_n > 0} \frac{(h_n - h'_n)^2}{h_n + h'_n} \right] \right)$

The absolute EER results of the χ²-SVM approach are below the ones reported in the VOC report [2], but we use a sparser representation of only 80 features per image in order to make a relative comparison of the approaches; in comparison, for the results reported in [2] Zhang et al. [11] used an average of over 3000 features per image. For such a large number of features, the resulting completely connected graphs are too large to be mined exhaustively with current graph mining techniques.

4. Conclusion

Object classification in natural images, supervised or unsupervised, is a remarkably difficult task. The best performing approaches from the literature based on non-linear SVMs do not offer an accessible interpretation of the model parameters.

The contribution of this paper is twofold. First, we have shown that weighted pattern mining algorithms are well suited for cluttered image data because they are able to ignore non-discriminative or non-frequent parts. For suitable patterns they are both efficient and allow interpretability. Second, we derived and validated two practical methods for unsupervised ranking and supervised object classification based on the LPBoost formulation.

References

[1] A. Demiriz, K. P. Bennett, and J. Shawe-Taylor. Linear programming boosting via column generation. Machine Learning, 46:225–254, 2002.

[2] M. Everingham, A. Zisserman, C. K. I. Williams, and L. J. V. G. et al. The 2005 PASCAL visual object classes challenge. In J. Q. Candela, I. Dagan, B. Magnini, and F. d’Alche Buc, editors, MLCW, volume 3944 of Lecture Notes in Computer Science, pages 117–176. Springer, 2005.

[3] M. Fritz and B. Schiele. Towards unsupervised discovery of visual categories. 2006. DAGM, Berlin.

[4] T. Kudo, E. Maeda, and Y. Matsumoto. An application of boosting to graph classification. In NIPS, 2004.

[5] K. Mikolajczyk and C. Schmid. Scale & affine invariant interest point detectors. IJCV, 60(1):63–86, Oct. 2004.

[6] K. Mikolajczyk and C. Schmid. A performance evaluation of local descriptors. PAMI, 27(10):1615–1630, Oct. 2005.

[7] T. Quack, V. Ferrari, and L. J. V. Gool. Video mining with frequent itemset configurations. In H. Sundaram, M. R. Naphade, J. R. Smith, and Y. Rui, editors, CIVR, volume 4071 of Lecture Notes in Computer Science, pages 360–369. Springer, 2006.

[8] B. Scholkopf and A. J. Smola. Learning with Kernels, 2nd Edition. MIT Press, 2002. ISBN 0-262-19475-9.

[9] J. Sivic and A. Zisserman. Video data mining using configurations of viewpoint invariant regions. In CVPR, pages 488–495, 2004.

[10] X. Yan and J. Han. gSpan: Graph-based substructure pattern mining. In ICDM, pages 721–724, 2002.

[11] J. Zhang, M. Marszalek, S. Lazebnik, and C. Schmid. Local features and kernels for classification of texture and object categories: An in-depth study. In INRIA, 2005.