Wavelet-packet based Geodesic Active Regions (WB-GARM)


A Wavelet-packet based Geodesic Active Region

Model (WB-GARM) for Glandular segmentation

of histopathology images

Adnan Osmani B.Sc.

Supervisor: Dr. Nasir M. Rajpoot

Department of Computer Science, University of Warwick,

Coventry CV4 7AL, UK

A thesis submitted for the award of a Master's degree by Research in Computer Science

July 2009


Abstract

This thesis discusses wavelet-based boundary enhancement techniques for improving the

segmentation quality of contour-based texture segmentation algorithms. With a focus on

improving the glandular segmentations of clinical histopathology images, a number of issues

with existing approaches are investigated before arriving at the conclusion that image and

boundary enhancement techniques play a significant role in improving image segmentation

quality.

We present a method for enhancing the visibility of region-of-interest (ROI) boundaries in

Chapter 3. This method takes advantage of the information in wavelet-packet sub-bands,

overlaying wavelet feature information over a group of selected texture samples as part of a

supervised segmentation approach. This builds on the existing Geodesic Active Region model

and aims to improve the probability that a more accurate segmentation may be achieved post-

enhancement. Further insight into our algorithmic design process is also provided.

In Chapter 4, the proposed technique is validated against sets of both real world and medical

images. Experiments demonstrate the improvement in segmentation quality

achieved, with encouraging results observed on both sets. Simple further adjustments are

also made to the algorithm providing additional benefits in the quality of results for the

application of glandular segmentation. The method proposed in this thesis is flexible enough to

be used in other segmentation problems, offering a computationally cheap qualitative

enhancement to their existing capabilities. It may also be powerful enough to offer real-world

solutions in the area of glandular segmentation.


Contents

Abstract

List of Figures

List of Tables

Acknowledgements

Chapter 1 – Introduction and Objectives

1.1 Problem Description

1.2 Main Contributions

1.3 Thesis Organization

Chapter 2 – Literature Review

2.1 Edge-based segmentation

2.2 Region-based segmentation

2.3 Texture-based segmentation

2.4 Hybrid segmentation methods

2.5 Contour-based segmentation

2.6 Snakes: Active Contour Models

2.7 Level-set methods

2.8 Chan-Vese Active Contour Model

2.9 Geodesic Active Region Model (GARM)

2.10 Summary

Chapter 3 – Wavelet-based Geodesic Active Region Model

3.1 Texture descriptors

3.2 The Wavelet transform

3.3 The Inverse Wavelet transform

3.4 Wavelet Packets

3.5 The Forward Wavelet-packet transform

3.6 Cost functions

3.7 Weaknesses of the GARM

3.8 Improving the GARM

3.9 A Wavelet-packet texture descriptor

3.10 A Pseudo-code description of the WB-GARM texture descriptor enhancement technique

3.11 Generating Multi-Scale Wavelet Packet Texture Features

3.12 Preparing WPF feature images for usage

3.14 Rescaling pixel values

3.15 Pixel Addition

3.16 Adjustments for improved results in Medical Applications

3.17 Summary

Chapter 4

iii

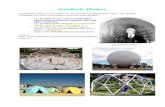

4.1 Results on a real-world data set

4.1.1 Data

4.1.2 Analysis of real-world results

4.2 Glandular segmentation of histology images

4.2.1 Background information on Colon Cancer

4.2.2 Prior work in Glandular Segmentation

4.2.3 Application of WB-GARM to Glandular segmentation

4.2.4 Results on Glandular Segmentation

4.3 Segmentation Setup

4.3.1 Data

4.3.2 Texture

4.3.3 Ground truth generation

4.4 Results on images without the thresholding of lymphocytes

4.5 Results on images using lymphocyte thresholding

4.5.1 Specimen 1

4.5.2 Specimen 2

4.5.3 Specimen 3

4.5.4 Specimen 4

4.5.5 Specimen 5

4.6 Overview and discussion of results

4.6.1 Summary of the algorithm’s performance

4.6.2 Areas for improvement

4.6.3 Summary

Chapter 5 – Thesis Summary & Conclusions

5.1 Summary

5.2 Conclusions

Bibliography


List of Figures

Figure 2.1: An Example of two different Kernels

Figure 2.2: A typical example of pixel aggregation

Figure 2.3: An Active Contour attracted to edges

Figure 2.4: An example of the Level set evolution of a circle

Figure 2.5: An example of ACM segmentation

Figure 2.6: An example of more difficult ACM texture segmentation

Figure 3.1: Wavelet transform of the well-known ‘Lena’ image

Figure 3.2: Daubechies reconstruction of the ‘Nat 2B’ image

Figure 3.3: The Wavelet-packet tree

Figure 3.4: A cost function applied to a Wavelet-packet transform

Figure 3.5: Examples of GARM Segmentation

Figure 3.6: Example of a Wavelet-packet decomposition

Figure 3.7: FWPT Decomposition

Figure 3.8: IWPT Recomposition

Figure 3.9: FWPT of the ‘Lena’ image

Figure 3.10: Perfect reconstruction of the ‘Lena’ image

Figure 3.11: Generating IWPF feature data

Figure 3.12: IWPT of Scale 2, subband 2 with greater detail

Figure 3.13: Creating Feature Images

Figure 3.14: (a) A synthetic Brodatz image

(b) A selection of Wavelet packet subbands

(c) A WP feature (WPF) version of the Brodatz image

Figure 3.15: An analysis of pixel value ranges

Figure 3.16: Equation for threshold-based pixel rescaling

Figure 3.17: Example of applied pixel rescaling

Figure 3.18: The effect of contrast-adjustment on WPF samples

Figure 3.19: Visual walkthrough of proposed algorithm

Figure 4.1: GARM segmentation results

Figure 4.2: WB-GARM segmentation results

Figure 4.3: Ground truth images featuring points of curvature

Figure 4.4: Colon biopsy samples featuring variations in glands, size and intensity

Figure 4.5: Regions of interest in colon biopsy samples

Figure 4.6: Visual analysis of difficulties in glandular segmentation

Figure 4.7: Artefacts surrounding the glands

Figure 4.8: Glandular segmentation with lymphocyte-thresholding

Figure 4.9: Specimen 1 – Hand labelling

Figure 4.10: Specimen 1 – Segmentation comparison

Figure 4.11: Specimen 1 – Boundary point comparison

Figure 4.12: Specimen 1 – Comparison of results after contrast adjustment

Figure 4.13: Specimen 2 – Segmentation comparison

Figure 4.14: Specimen 2 – Boundary point comparison

Figure 4.15: Specimen 2 – Comparison of results after contrast adjustment

Figure 4.16: Specimen 3 – Segmentation comparison

Figure 4.17: Specimen 3 – Boundary point comparison

Figure 4.18: Specimen 3 – Comparison of results after contrast adjustment

Figure 4.19: Specimen 4 – Segmentation comparison

Figure 4.20: Specimen 4 – Boundary point comparison

Figure 4.21: Specimen 4 – Comparison of results after contrast adjustment

Figure 4.22: Specimen 5 – Segmentation comparison

Figure 4.23: Specimen 5 – Boundary point comparison

Figure 4.24: Specimen 5 – Comparison of results after contrast adjustment

Figure 4.25: Percentage of correctly segmented boundary points – a distribution comparison


List of Tables

Table 4.1: Comparison of segmentation qualities

Table 4.2: Table of Algorithmic comparisons

Table 4.3: Comparison table for segmentation results after contrast adjustment

Table 4.4: Table of Algorithmic comparisons

Table 4.5: Comparison table for segmentation results after contrast adjustment

Table 4.6: Table of Algorithmic comparisons

Table 4.7: Comparison table for segmentation results after contrast adjustment

Table 4.8: Table of Algorithmic comparisons

Table 4.9: Comparison table for segmentation results after contrast adjustment

Table 4.10: Table of Algorithmic comparisons

Table 4.11: Comparison table for segmentation results after contrast adjustment


Acknowledgements

My gratitude goes to my thesis supervisor, Dr. Nasir Rajpoot, for sharing his guidance and

insightful thoughts during the development of this thesis. His kindness, devotion, encouragement

but most of all his patience were a great asset during my write-up and will always be appreciated.

I am also indebted to my mother, father and sister for being a constant source of love and a

continuous inspiration throughout my life - they have always supported my aspirations and I

would not be the man I am today without them. I thank them for all they have done for me. I

thank my graduate school for being so understanding during the course of this thesis and for the

additional time provided for getting the concepts down right. Dr. Daniel Heesch, formerly of

Imperial College, has my thanks for his research papers and humour which assisted me during

some of the more difficult moments in finishing this thesis. Finally I would like to thank

Danielle for her love and the happiness and joy she brings into my life and for always

encouraging me. The support of my family and friends has helped make this thesis possible and

I would like to extend my thanks to them all.


Chapter 1

Introduction

Medical imaging methods generate images which contain a broad range of

information about the anatomical structures being studied. This information can be used to assist

in disease diagnosis and the selection of adequate therapies and treatments. A difficulty

arises when physicians perform a first-hand visual analysis of medical images.

Here, there is a potential for observer bias and error, as one physician’s visual perception of

an image may differ from another’s [1]. Recent developments in medical image processing

have made automated methods both highly sophisticated and, in many

cases, accurate. This contrasts with human diagnosis, where such a level of certainty is not always

present. In addition, the presence of noise, the variability of biological cells and

tissues, and anisotropy issues with imaging systems make the automated analysis of medical images

(using both supervised and unsupervised approaches) a very difficult and time-consuming

task.

Simplifying the ultimate goal of the analysis often restricts it to a single anatomical area (e.g. the

head), a single structure inside that area (e.g. the brain), a single image modality (e.g. echo) and a

single type of view. Information which may be extracted about the areas to be computationally

analyzed may fall into several different categories: colour, shape, texture, position and structure

[2]. The knowledge which can be integrated into a system or method for automated analysis

typically represents a highly simplified model of the real world. This can result in certain

applications of automated methods being unreliable, slow or impractical for use under lab

conditions in hospitals. To combat this, the development of a technique should ideally be both

robust and capable of factoring in complications, artefacts and issues found in real-world image

data.


The computational analysis of medical images often revolves around the prior task of

segmentation and classification of specific areas inside the body [1]. It is these techniques that

allow a computer to simulate a physician with high powers of discrimination without the

downside of single-observer bias. Segmentation (and in particular texture segmentation) is of

particular interest as it is a growing field with many implications for laser-assisted surgery [3]. The

ability to provide an accurate segmentation of an area of interest would mean that a surgeon or

surgical technician could greatly reduce the risk of burning more tissue than necessary,

potentially lowering the risk of complications and pain for a patient.

Image segmentation has been an ongoing challenge in the area of computer vision for several

years now and is also a fundamental step in medical image analysis. It is believed that texture is

one of the primary visual features required for segmentation as it is one of the main properties

the human visual system uses to distinguish everyday objects from each other. Although a

number of different definitions for texture exist, none of these have been proven to be adequate

and complete for all applications where it may apply [4][5].

Texture segmentation is typically composed of two primary steps: the extraction of texture

features from an image and the clustering of these features in an area to achieve a segmentation.

The extraction step is present here to map the differences in the spatially varying intensity

structures into the differences in the texture feature space. Homogeneous regions are obtained by

using a clustering method to analyze the feature space. The quality of a classification (and

segmentation) depends strongly on the quality of the texture features used. The quality of these

features, however, is reliant on the spatial extent of the image data from which the features are

extracted. Were one able to increase the quality of the texture data extracted from an image or

enhance its boundaries, this may lead to a segmentation algorithm being better able to determine

where an object’s borders end.
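
The two steps described above can be illustrated with a minimal sketch. It is not the descriptor proposed in this thesis: the local-variance feature, the two-cluster k-means loop, the function names and the toy one-dimensional signal are all assumptions chosen only to keep the example short.

```python
def local_variance(signal, radius):
    """Step 1 (feature extraction): variance of the intensities in a
    window of up to 2 * radius + 1 samples around each position."""
    features = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        mean = sum(window) / len(window)
        features.append(sum((v - mean) ** 2 for v in window) / len(window))
    return features


def two_means(values, iterations=20):
    """Step 2 (clustering): group scalar features into two clusters
    with a basic k-means loop; returns a 0/1 label per feature."""
    low, high = min(values), max(values)  # initial centroids
    labels = [0] * len(values)
    for _ in range(iterations):
        labels = [0 if abs(v - low) <= abs(v - high) else 1 for v in values]
        group0 = [v for v, lab in zip(values, labels) if lab == 0]
        group1 = [v for v, lab in zip(values, labels) if lab == 1]
        if group0:
            low = sum(group0) / len(group0)
        if group1:
            high = sum(group1) / len(group1)
    return labels


# Toy 1-D "image": a smooth texture followed by a rough texture.
smooth = [10, 11, 10, 11, 10, 11, 10, 11]
rough = [0, 20, 0, 20, 0, 20, 0, 20]
labels = two_means(local_variance(smooth + rough, radius=2))
print(labels)
```

Note that both textures have similar mean intensity, so it is the feature (here, local variance) rather than the raw gray level that separates them; a window that is too small or too large would blur the boundary between the two regions.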

Various methods exist for extracting textural features. These fall under the categories of

statistical, geometrical, signal-processing and model-based approaches. While geometrical approaches also

cover structural methods, other paradigms such as autocorrelation features belong to the statistical

methods, which make use of the spatial distribution of gray-level values in an image [6]. Looking

further at the range of methods available, wavelet transforms and spatial-domain filtering

have also been widely studied.


1.1 Problem Description

Inaccurate segmentation is a problem that affects many areas of image processing such as

medical imaging and in particular, glandular segmentation (GS). GS is an ongoing challenge

which spans across many areas of medical histopathology including the study of prostate images

[7]. In several cases, the isolation of a particular area of a slide for further study is of critical

importance in making an early prognosis of the disease – such as in the diagnosis of colon

cancer. In clinical histopathology, a significant quantity of inter- and intra-observer variation

in the judgement of specimens can lead to inaccurate or inconsistent manual segmentations of

regions of interest. This lack of a single accurate observation for pathologists highlights an

area where computational analysis can aid in providing a reliable segmentation.

Whilst many studies have looked at the problem of segmenting the histopathological images

used in the diagnosis of colorectal cancer [8][9], few have been able to adequately address the

issue of segmentation accuracy. One of the main challenges to address in GS is boundary

segmentation, where the accurate segmentation of the lumen (the interior part of the gland) from the

darker nuclei on its boundaries is the primary task any computational solution must address

effectively. Computational estimation of lumen boundaries can at times be a difficult task due to

the low contrast between the lumen and the material which surrounds the outer walls of

the gland. This closeness in intensity values makes accurate segmentation of lumen a far greater

challenge, but does highlight that GS is an area where improvements in the quality of a final

segmentation could be critical to aiding a pathologist or laser-guided surgeon in saving a

patient’s life.

Examining signal processing in greater detail, feature extraction can be viewed as a problem

composed of two key stages: a signal decorrelation step and a computation of the feature metric

which is often a probability measure [10]. Wavelet Analysis of an image can be viewed in the

frequency domain as partitioning it into a set of sub-bands. The Discrete Wavelet Transform

(DWT) offers a multi-resolution representation of an image. Transient events in the data are also

preserved by this analysis. Whilst the DWT applies a wavelet transform step to just the low-pass

result, the Wavelet Packet Transform [11] applies this step to both the low-pass and high-pass

results, which paves the way for obtaining a wider range of texture features from an image


than are currently being harnessed or utilized as part of segmentation approaches such as the

Geodesic Active Region model (GARM) – a framework designed to work with frame partition

problems based on curve-propagation under the influence of boundary and region based forces.
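
The difference between the DWT and the Wavelet Packet Transform described above can be sketched on a one-dimensional signal. This is an illustration only: the Haar filter pair, the function names and the sample signal are assumptions made for brevity, and an image transform would apply the same step along rows and columns.

```python
import math


def haar_step(signal):
    """One analysis step: split an even-length signal into a low-pass
    (scaled sums) half and a high-pass (scaled differences) half."""
    s = 1 / math.sqrt(2)
    low = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    high = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return low, high


def dwt(signal, levels):
    """DWT: only the low-pass result is decomposed again at each level."""
    bands = []
    current = signal
    for _ in range(levels):
        current, high = haar_step(current)
        bands.append(high)
    bands.append(current)
    return bands  # levels + 1 sub-bands


def wavelet_packets(signal, levels):
    """WPT: both the low-pass and high-pass results are decomposed."""
    nodes = [signal]
    for _ in range(levels):
        nodes = [band for node in nodes for band in haar_step(node)]
    return nodes  # 2 ** levels sub-bands


signal = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
dwt_bands = dwt(signal, levels=2)
wpt_bands = wavelet_packets(signal, levels=2)
print(len(dwt_bands), len(wpt_bands))
```

After two levels the DWT yields three sub-bands while the packet decomposition yields four, all drawn from the same signal; the extra bands come from splitting the high-pass branch, which is where the additional texture detail is found.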

1.2 Main Contributions

This thesis proposes a new texture descriptor for texture segmentation models which utilizes

wavelet packet texture features (WPF) with combined pixel-addition of the source as part of an

ROI boundary enhancement routine. The primary enhancements made in this routine are an

increase in the visible edge and boundary artefacts which surround the main objects in an input

image, allowing segmentation approaches to have a clearer understanding of where the true

boundaries of an ROI lie. The result of these enhancements is a contour-based texture

segmentation algorithm which may offer improved segmentation results on both real-world and

medical images, as will be discussed in Chapters 3 and 4. For the purposes of performance

evaluation and demonstration, the proposed multi-scale enhancement routine is directly

integrated into the Geodesic Active Region model (GARM) [49] such that the input to the

GARM spectrum analyzers is the Gabor response to a WPF texture sample summed with a

source texture sample. For the particular application of glandular segmentation, the proposed method is found to

be useful. The proposed solution is capable of enhancing the clarity of boundaries surrounding

the lumen in glands such that a texture descriptor can represent these boundaries more

accurately. This results in a segmentation model being better able to correctly

segment the objects that lie inside them, and in a significantly more accurate final segmentation.

1.3 Thesis Organization

Chapter 1 is the introduction to this thesis and provides a summary of the background information to

it. The problem description and the thesis organization are also provided here.

Chapter 2 examines current and past literature in the field of texture segmentation with references to

some of the popular models that have consistently provided a certain level of accuracy in this field.

Chapter 3 introduces the newly proposed texture descriptor with specific references to wavelet packet

texture features and pixel addition for improved ROI boundaries during segmentation. The

methodology behind this method is discussed here as well.


Chapter 4 provides the results of evaluating the proposed texture descriptor against real world and

medical images with specific focus on its application to glandular segmentation in histopathology.

Chapter 5 states the conclusions drawn from this thesis and suggests possible directions for future

research.


Chapter 2

Literature review

Introduction

Two fundamental approaches that have been employed in image segmentation for many years

are edge-based and region-based. Edge-based segmentation partitions images by locating

discontinuities or breaks in consistency among regions inside the image area. In contrast,

region-based methods apply a similar function which is based on the uniformity of a particular

property within a sub-window. Sections 2.1 and 2.2 present a brief introduction to these two types of

segmentation methods.

2.1 Edge-based segmentation

Edge-based segmentation, one of the oldest forms of segmentation, accounts for a large group of

techniques based on information about the edges in an image. This approach searches for

discontinuities in intensity which assists in highlighting object boundaries. Some researchers

may argue that rather than following the conventional meaning of the term "segmentation", this

particular approach may be more appropriately considered a form of boundary detection [12].

The Oxford English Dictionary defines an edge as the line along which two surfaces meet. For

the purposes of our problem, this can be considered as a distinct boundary between two regions,

each with its own discrete characteristics. Traditional edge-based segmentation takes an overly

simplistic view of image homogeneity: it assumes that every region is

adequately uniform such that the borders that separate them may be determined using

discontinuity metrics alone. The shortcomings of this view have motivated many improved models

over time, including some of the algorithms that will be discussed in the next section.

Many edge-based segmentation approaches rely upon the concept of a convolution filter. Image


convolution is an image processing operation where each destination pixel is calculated based on

a weighted sum of a set of nearby source pixels. As an example, one may label the pixels in an

image as a one-dimensional array. Let the n-th destination pixel have the value $b_n$, the

p-th source pixel have the value $a_p$ and the digital filter F have a set of non-zero values $F_m$

for a set of indices m, where the filter is typically normalized such that $\sum_m F_m = 1$. The filter

works as follows:

$$b_n = \sum_m F_m \, a_{m-n} \quad \text{for each destination pixel } n. \tag{2.1}$$

The sum over the m terms in the convolution is the inner loop of the computation. The order of

the indices on the right hand side of this equation is a convention for the convolution. If the

images are of the form NxM pixels and the number of non-zero elements in the filter F is s, then

the convolution needs NMs multiplications and additions to be calculated. Local derivative

operations can then be performed by convolving the image with a variety of different kernels.

Both Sobel and Canny edge detectors are both widely used kernels in Computer Vision.
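As a minimal sketch of the one-dimensional convolution described above, the following Python fragment assumes the index convention $b_n = \sum_m F_m a_{n-m}$ and simply ignores filter taps that fall outside the image. The function name `convolve_1d`, the dictionary representation of the filter and the boundary handling are illustrative assumptions rather than part of the original text.

```python
def convolve_1d(a, F):
    """Return b where b[n] = sum over m of F[m] * a[n - m].

    `a` is the list of source pixel values; `F` maps filter offsets m to
    weights F_m (an assumed representation chosen for illustration).
    """
    N = len(a)
    b = []
    for n in range(N):                 # one pass per destination pixel
        total = 0.0
        for m, weight in F.items():    # inner loop: the sum over the filter terms
            p = n - m                  # index of the contributing source pixel
            if 0 <= p < N:             # assumption: taps outside the image are skipped
                total += weight * a[p]
        b.append(total)
    return b

# A normalised three-tap smoothing filter (the weights sum to 1).
box = {-1: 1 / 3, 0: 1 / 3, 1: 1 / 3}
signal = [0, 0, 3, 0, 0]
smoothed = convolve_1d(signal, box)
print(smoothed)
```

The isolated bright pixel is spread evenly over its three nearest positions, which is the smoothing behaviour one would expect from a normalised averaging filter.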

The Sobel operator [13][14] is an edge detection operation which calculates the gradient of an
image's intensity at each point, providing the direction of the largest potential increase from
light to dark and the rate of change in that direction. The result shows how abruptly or smoothly
the image changes at that point and thus how likely it is that that part of the image
represents an edge. It also shows how that edge is likely to be oriented. The operator consists
of a pair of 3x3 convolution kernels (one is effectively the other rotated by 90 degrees). These
kernels can be applied separately to an input image in order to produce separate measurements of
the gradient component in each direction.
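The pair of Sobel kernels can be illustrated with a short Python sketch. It uses the commonly published 3x3 Sobel coefficients and applies them as a plain weighted sum over each interior pixel's neighbourhood (without flipping the kernel); the helper names `apply_kernel` and `sobel` and the tiny test image are assumptions made for illustration.

```python
import math

# The two 3x3 Sobel kernels; GY is GX rotated by 90 degrees.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]

def apply_kernel(image, kernel, row, col):
    """Weighted sum of the 3x3 neighbourhood centred on (row, col)."""
    return sum(kernel[i][j] * image[row + i - 1][col + j - 1]
               for i in range(3) for j in range(3))

def sobel(image):
    """Return (magnitude, angle) maps; border pixels are left at zero."""
    rows, cols = len(image), len(image[0])
    magnitude = [[0.0] * cols for _ in range(rows)]
    angle = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(image, GX, r, c)   # horizontal gradient component
            gy = apply_kernel(image, GY, r, c)   # vertical gradient component
            magnitude[r][c] = math.hypot(gx, gy)
            angle[r][c] = math.atan2(gy, gx)
    return magnitude, angle

# A vertical step edge between columns 1 and 2.
step = [[0, 0, 9, 9],
        [0, 0, 9, 9],
        [0, 0, 9, 9],
        [0, 0, 9, 9]]
mag, ang = sobel(step)
print(mag[1][1], ang[1][1])
```

The two components are computed separately and then combined, which mirrors the way the kernels are described as giving one gradient measurement per direction.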

Figure 2.1: An example of two different kernels: (a) a vertical gradient kernel; (b) a vertical
Sobel kernel.

Although Sobel is very useful for simple thresholding, Canny combines thresholding with
contour following to reduce the probability of false contours, and is discussed next.

The Canny edge detection algorithm is considered by many to be the most rigorous edge detector.
Canny intended his approach to improve on several of the well established methods of edge
detection available when he began his work, and set out three criteria. The first criterion is a
low error rate. The second is that the distance between edge pixels as discovered by the detector
and the actual edge is to be at a minimum. The third is that the detector should have only one
response to a single edge; this was added as a requirement because the first two criteria were
not substantial enough to fully eliminate the possibility of multiple responses to an edge. Using
these criteria, the Canny edge detector first smooths the image being processed to suppress
noise. It then computes the image gradient to highlight regions which have high spatial
derivatives. The algorithm tracks along these regions, suppressing any pixel which is not at the
maximum. The gradient array is then further reduced through the process of hysteresis, which
tracks along the pixels which have not yet been suppressed. Hysteresis uses two separate
thresholds: if a magnitude is below the low threshold, it is set to zero; if it is above the high
threshold, it is made an edge; and if it lies between the two, it is kept only if there is a path
from that pixel to a pixel above the high threshold.
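The two-threshold hysteresis step can be sketched in Python as follows. This is only an illustration of the thresholding and tracking logic, not a full Canny implementation (smoothing, gradient computation and non-maximum suppression are omitted); the function name `hysteresis`, the 8-connected neighbourhood and the example values are assumptions.

```python
from collections import deque

def hysteresis(magnitude, low, high):
    """Return a 0/1 edge map from a gradient-magnitude grid using two thresholds."""
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[0] * cols for _ in range(rows)]
    # Pixels at or above the high threshold are accepted immediately.
    queue = deque((r, c) for r in range(rows) for c in range(cols)
                  if magnitude[r][c] >= high)
    for r, c in queue:
        edges[r][c] = 1
    # Track outwards from accepted pixels through pixels at or above the low threshold.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc]
                        and magnitude[nr][nc] >= low):
                    edges[nr][nc] = 1
                    queue.append((nr, nc))
    return edges

grad = [[0, 2, 0, 0, 0],
        [0, 5, 3, 0, 3],
        [0, 0, 0, 0, 0]]
edge_map = hysteresis(grad, low=2, high=4)
for row in edge_map:
    print(row)
```

In this example the isolated value 3 in the last column is discarded because it is not connected to any pixel above the high threshold, whereas the 3 and the 2 adjacent to the strong pixel are retained.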

Of the two approaches above, one would generally opt for Canny, as its additional processing
and non-maximum suppression can eliminate the wide ridges often seen with Sobel. Edge-based
segmentation by gradient operations achieves reasonably good results in images which have
clearly defined borders, homogeneous intensity profiles and low noise. As a result of the latter
limitation, pre-processing operations such as smoothing would have been considered a
pre-requisite to using this method were they not destructive to the sensitive edge information.
This point aside, there are certain benefits to using edge-based segmentation. For instance, as
it is not a computationally expensive operation, it can be completed much faster than most
modern approaches. It can also be implemented as a local convolution filter, making it
relatively easy to integrate into other applications.

2.2 Region-based segmentation

Region-based segmentation aims to partition regions or sub-windows based on common image
properties such as: intensity (either original or post-processed), colour, textures unique to each
region and spectral profiles which provide additional multi-dimensional image data [15]. These
may sound familiar as they are also encountered in texture classification approaches, a subject
area which is parallel to image segmentation. Region growing is an aggregation concept which
exploits the fact that pixels which lie closely packed together have similar pixel intensity and
grayscale values [16]. A demonstration of this can be seen in Figure 2.2. Region growing works
as follows:

1. An initial group of small areas is iteratively merged based on a loosely defined set of
similarity criteria.

2. A set of seed points or seed pixels is then selected and used for comparison to other
neighbouring pixels.

3. Regions grow from these seeds by appending neighbouring pixels which are considered
similar.

4. If a single region stops growing, another seed is chosen which has not yet been assigned
ownership to any other region and the process is started again.

Figure 2.2: A typical example of pixel aggregation. In (a) we can see a set of seeds underlined
and in (b) the resulting segmentation.

Although region growing is a simple concept, there are a number of significant problems which
arise when integrating it for use in applications. Allowing a particular seed to grow in its entirety
before allowing other seeds to proceed creates a biased and inaccurate segmentation in favour of
the earliest regions that are segmented. The disadvantages of this may include ambiguities at the
edges of neighbouring regions which may not be possible to resolve correctly. Another issue that
is encountered when incorrectly using this approach is that different selections of seed pixels
may give rise to very different segmentation results [17].
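The seeded growth steps of region growing described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: it covers only seed selection and growth (not the initial merging of small areas), uses 4-connectivity, and treats a neighbour as similar when its intensity lies within a fixed `tolerance` of the seed's intensity. The names `region_grow` and `tolerance` are hypothetical.

```python
from collections import deque

def region_grow(image, seeds, tolerance):
    """Label pixels by growing 4-connected regions outwards from seed pixels.

    A neighbour joins a region when its intensity differs from the seed's
    intensity by at most `tolerance`. Unreached pixels keep the label 0.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    for label, (sr, sc) in enumerate(seeds, start=1):
        if labels[sr][sc]:              # seed already owned by an earlier region
            continue
        seed_value = image[sr][sc]
        labels[sr][sc] = label
        queue = deque([(sr, sc)])
        while queue:                    # grow until no similar neighbour remains
            r, c = queue.popleft()
            for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if (0 <= nr < rows and 0 <= nc < cols
                        and labels[nr][nc] == 0
                        and abs(image[nr][nc] - seed_value) <= tolerance):
                    labels[nr][nc] = label
                    queue.append((nr, nc))
    return labels

image = [[10, 11, 50, 52],
         [12, 10, 51, 50],
         [11, 12, 49, 90]]
labels = region_grow(image, seeds=[(0, 0), (0, 2)], tolerance=5)
for row in labels:
    print(row)
```

Because each seed is grown to completion before the next one starts, this sketch also exhibits the ordering bias discussed in the text: a pixel that could belong to two regions is always claimed by the earlier seed.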

Further research into region growing has led to the creation of more sophisticated
segmentation methods which utilize additional information to increase the accuracy of the
approach. Region competition [30] is one of them. This algorithm minimises a strong Bayes
criterion using a variational principle and brings together the best features of both snake models
and region growing. When nearby regions are merged under a criterion of region uniformity, too
strict a criterion tends to produce over-segmented results, whilst too loose a criterion leads to
quite poor segmentations.

Parametric models are yet another region-based segmentation method based on the paradigm of
uniformity. Here, if two regions contain similar values within a threshold they may be
considered uniform. It is common for such parameter values to be obtained from image analysis,
observation data or knowledge of the imaging process. Such deductions are often made by use of
conditional probability density functions (PDFs) and Bayes' rule [18].
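To make the role of conditional PDFs and Bayes' rule concrete, the sketch below assigns a pixel intensity to the more probable of two regions. The Gaussian intensity model, the region names and all parameter values are hypothetical and chosen purely for illustration; they are not taken from the thesis.

```python
import math

def gaussian_pdf(x, mean, std):
    """Conditional density p(x | region) under an assumed Gaussian intensity model."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def posteriors(x, models, priors):
    """Bayes' rule: P(region | x) = p(x | region) P(region) / p(x)."""
    joint = {name: gaussian_pdf(x, mean, std) * priors[name]
             for name, (mean, std) in models.items()}
    evidence = sum(joint.values())      # p(x), the normalising constant
    return {name: value / evidence for name, value in joint.items()}

# Hypothetical (mean, standard deviation) parameters for two regions.
models = {"gland": (60.0, 10.0), "stroma": (140.0, 20.0)}
priors = {"gland": 0.5, "stroma": 0.5}

post = posteriors(70.0, models, priors)
best = max(post, key=post.get)
print(best, post)
```

If the assumed parameters are poorly estimated, the posterior can be confidently wrong, which is the weakness of estimation-based approaches.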

One of the constraints of estimation-based segmentation is the lack of explicit representation
when dealing with the obvious uncertainty of parameter values. This makes it prone to errors
if the estimation of parameters is poor. Returning to Bayes, the probability of region
homogeneity exploits the complete set of information extracted from the statistical image models
rather than relying on an estimation of parameter values. Today there exist statistical
segmentation methods based on both estimation and Bayesian approaches, which have been extended
to several models including the Active Contour Model and the Active Region Model. Both of these
approaches will be discussed further in Chapter 3.

2.3 Texture-based segmentation

Whilst no mathematical definition exists for texture, it is often attributed to human perception as
the appearance or feel of a particular material or fabric, for example the arrangement of threads
in a textile. If this concept of "threads" is applied to "pixels", a similar definition could be
considered for the pixels in an image. In image processing, groups of pixels may be labelled
according to a particular application; for example, a group of pixels exhibiting green colours
which appear in a column structure could be labelled as exhibiting a "grass" texture. Human
perception also offers an analogy for the segmentation of textures. When one views an object, a
type of local spatial frequency analysis is performed on the image observed by the retina, and
this analysis is carried out by a bank of band-pass filters which allows one to distinguish
characteristics of the image such as its different textures [21].

The segmentation of textures has long been an important task in image processing. Texture
segmentation techniques aim to segment an image into homogeneous regions and identify the
boundaries which separate regions with different textures. Efficient texture segmentation
methods can be very useful in computer vision applications such as the analysis of biomedical
and aerial images. Several texture segmentation schemes are based on filter bank models, where a
collection of filters, known as Gabor filters, is derived from Gabor functions.

The goal of employing a filter bank is to transform differences in texture into filter-output
discontinuities at texture boundaries which can be detected. By locating such discontinuities, one
may segment the image into differently textured regions. Distinct discontinuities, however, only
occur if the Gabor filter parameters are well chosen. Segmenting an image containing textures is
typically completed in two core stages. The first stage involves decomposing an image into a
spatial-frequency representation (using a bank of digital band-pass filters such as Gabor filters).
The second stage is analysing this data to find regions of similar local spatial frequency. This
makes it possible for an algorithm to find multiple textures in a digital image. There have been
many studies in the area of multi-channel filtering, particularly in the wavelet domain [22-25].

One of the most essential choices to be made when exploring this problem domain is between
supervised texture segmentation and unsupervised texture segmentation. The main difference
between the two is in the prior knowledge available about the specific problem being addressed.
If one can establish that the image contains only a small set of distinct textures, then one
may delineate small regions of homogeneous texture, extract feature vectors from them using a
chosen segmentation algorithm and utilize these vectors as fixed points in the feature space. The
remaining vectors can then be labelled by assigning them the label of their closest neighbour
which is a fixed point.
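The labelling rule described above, in which each vector takes the label of its closest fixed point, can be sketched as a nearest-neighbour lookup. The two-dimensional feature vectors, the texture names and the use of Euclidean distance are assumptions made for illustration only.

```python
import math

def nearest_label(vector, fixed_points):
    """Return the label of the fixed point closest (Euclidean) to `vector`."""
    return min(fixed_points,
               key=lambda label: math.dist(vector, fixed_points[label]))

# Hypothetical feature vectors extracted from hand-delineated texture samples.
fixed_points = {
    "smooth": (0.1, 0.2),
    "striped": (0.8, 0.3),
    "speckled": (0.4, 0.9),
}

# Feature vectors from unlabelled parts of the image.
unknown = [(0.15, 0.25), (0.7, 0.4), (0.5, 0.8)]
assigned = [nearest_label(v, fixed_points) for v in unknown]
print(assigned)
```

In practice the feature vectors would have many more dimensions, but the assignment rule is unchanged.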

Through neural networks or some other machine learning approach, the system may then be told
when it makes mistakes so that it can adjust its segmentation model accordingly. If, however, the
number of potential textures is deemed to be too large, or if no information about the type of
texture to be presented to the system is available, then an unsupervised method must be used.
With this method, before each feature vector may be assigned to a class, statistical analysis
must be performed on the entire distribution of vectors. The goal of this is to recognize
clusters in the distribution and assign the same label to all members of each cluster. This is
usually a much harder task to accomplish.

In this thesis, we will be using supervised segmentation for our chosen approach as a portion of

the work presented builds upon the Geodesic Active Region model (a supervised segmentation

algorithm).

2.4 Hybrid segmentation methods

Some approaches have attempted to integrate both region and edge based segmentation
[26-28], whilst others have tried fusing region and contour segmentation using
watersheds [29]. The combination of two or more different algorithms has also produced some
interesting results [30][31]. This is an encouraging development that I will be exploiting in my
own proposed method, to be introduced in a later chapter.

2.5 Contour-based segmentation

Over the past decade, extensive studies have been conducted on the curve evolution of snakes
and their applications to computer vision. In comparison to some of the other methods available
today, such as region-growing and edge-flow methods, the active contour model has remained in
favour due to its lack of strong sensitivity to smoothed edges and discontinuities in contour
lines. The original concept of a snake was first introduced in early 1988 [32] and was later
advanced by a number of researchers. It can be described as the deformation of an initial contour
towards an object's boundary by minimization of a function R, defined such that the minimum of R
is achieved at the object's boundary. This minimization, in which the overall smoothness of the
curve is controlled by one set of components and the attraction force pulling the curve towards
the boundary is controlled by another, is quite atypical of the approaches researched. There are
two primary types of active contour model: geometric [33][34][35] and parametric [36].

The parametric active contour models [36][38] are part of a class of conventional snake models

where a curve is explicitly represented by a group of curve points which are moved by an energy

function. This approach is often considered the more ingenious of the two, as its mathematical
formulation enables it to be a more powerful image segmentation paradigm than its implicit
alternative. The parametric active contour model offers simpler integration of image data, desired
curve properties and domain-related constraints within a single process. Although this places it at
an advantage, the parametric model does suffer from limitations, such as not being able to handle
complex topologies. This limits its effective usage; however, work has been done on easing
these restrictions to make it more broadly applicable [38].

The geometric models embed a snake as the zero level set of a higher dimensional
function and solve the related equation of motion rather than computing curves explicitly.
Methodologies such as this are best suited to the segmentation of objects with complex shapes
and unknown topologies [37]. Unfortunately, as a result of their higher dimensional formulation,
geometric contour models are not as convenient as parametric models in applications such as
shape analysis and user interaction.

2.6 Snakes: Active Contour Models

Active Contour Models (snakes) have been used in computer vision problems related
to image segmentation and understanding. They are a special case of deformable model
theory [39], which is analogous to mechanical systems where a force of influence may be
measured using potential and kinetic energy. An active contour model is defined as an energy
minimizing spline where the snake's energy is dependent upon its shape and location within the
image. Local minima of this energy then correspond to desired image properties. Snakes
do not by themselves solve the problem of discovering contours inside images but instead depend
on other mechanisms, such as interaction with a user or information from image data, to assist
them in achieving a segmentation. The user interaction must state some approximate shape and
starting position for the snake (ideally somewhere near the desired contour). Prior knowledge is
then employed to push the snake towards an acceptable solution. In Figures 2.3 and 2.4 one may
see examples of one method which may be used to provide a good starting point (a prior) for the
active contour model: a binarization of the original source image. This method is very useful for
images containing a small set of textures but does not perform as well on those containing many
(an example of this may be viewed in Figure 2.4 (a)).

Figure 2.3: An example of Active Contour Model segmentation. (a), (c) Two sets of ACM
segmentation results; (b), (d) the binary mask initializers used to achieve these outputs.

Figure 2.4: An example of more difficult texture segmentation using the ACM. (a) ACM
segmentation of an image with a wide variety of individually distinct textures, and (b) its binary
mask. (c) The ACM results on a synthetically generated image.

From a geometric perspective, a snake is a parametric contour which is assumed to be closed
and embedded in a domain. With this in mind, a snake may be represented as a time varying
parametric contour. Parametrically, a simplified non-time varying ACM snake may be defined
by:

$$v(s) = (x(s), y(s)) \qquad (2.2)$$

where $s \in [0,1]$ is the arc length and x(s), y(s) are the x and y co-ordinates along the
contour. Next, the energy for the contour may be expressed by:

$$\int E_{snake}\,ds = \int E_{internal}(v)\,ds + \int E_{external}(v)\,ds + \int E_{image}(v)\,ds \qquad (2.3)$$

Here, $E_{internal}$ corresponds to the internal energy of the contour and $E_{external}$ to the
external energy. The internal forces arise from the shape and discontinuities in the snake whilst
the external forces are based on the image interface or higher level understanding process.
$E_{image}$ is the image's energy, which represents lines, edges and termination terms.

The internal energy of the contour is composed of the first order differential $v_s = dv/ds$, which
is controlled by $\alpha(s)$, and the second order differential $v_{ss} = d^2v/ds^2$, which is
controlled by $\beta(s)$. In extended form, this is expressed as follows:

$$E_{internal} = \int \left( \alpha(s)\left|\frac{dv}{ds}\right|^2 + \beta(s)\left|\frac{d^2v}{ds^2}\right|^2 \right) ds \qquad (2.4)$$

where $\alpha(s)$ and $\beta(s)$ specify the elasticity and stiffness of the contour snake.

The purpose of the internal energy $E_{internal}$ is to force a shape on the deformable snake and
ensure that a constant distance is maintained between nodes in the contour. With this in mind,
the first order term adjusts the elasticity of the snake and the second order (curvature) term is
responsible for making an active contour shrink or grow. Visually, if there are no other
influences acting, the continuity energy term pushes an open contour into a straight line and a
closed contour into a circle.
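As a rough numerical illustration of the internal energy in equation (2.4), the sketch below approximates the first and second derivatives of a closed contour with finite differences between neighbouring nodes. It assumes constant values for alpha and beta and unit spacing between nodes, both of which are simplifications; the function names are hypothetical.

```python
import math

def internal_energy(points, alpha, beta):
    """Discrete approximation of the internal energy of a closed contour.

    `points` is a list of (x, y) nodes; `alpha` and `beta` are taken as
    constants here rather than functions of s (an assumption).
    """
    n = len(points)
    energy = 0.0
    for i in range(n):
        x_prev, y_prev = points[i - 1]          # index -1 wraps to the last node
        x, y = points[i]
        x_next, y_next = points[(i + 1) % n]
        # First order term: squared length of the forward difference.
        first = (x_next - x) ** 2 + (y_next - y) ** 2
        # Second order term: squared length of the central second difference.
        second = (x_next - 2 * x + x_prev) ** 2 + (y_next - 2 * y + y_prev) ** 2
        energy += alpha * first + beta * second
    return energy

def circle(radius, n=40):
    """Sample n evenly spaced nodes on a circle about the origin."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]

smooth = circle(10.0)
# The same circle with every other node pushed outwards to make it jagged.
jagged = [(x * (1.2 if k % 2 else 1.0), y * (1.2 if k % 2 else 1.0))
          for k, (x, y) in enumerate(smooth)]

e_smooth = internal_energy(smooth, alpha=1.0, beta=1.0)
e_jagged = internal_energy(jagged, alpha=1.0, beta=1.0)
print(e_smooth, e_jagged)
```

The jagged contour has a higher internal energy than the smooth circle, and a smaller circle has a lower energy than a larger one, which is consistent with the tendency of an unconstrained closed snake to smooth out and shrink.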

A variety of functionals (or metrics) can be used to attract the snake to different artefacts in the
image. Take, for example, a line functional and an edge functional. As described by Kass et
al. in [32], a line functional can be expressed as simply as:

$$E_{line} = f(x,y) \qquad (2.5)$$

where x, y are coordinates in an image I and f(x,y) is a function which denotes the gray levels at
the location (x,y). The simplest useful image functional based on this is image intensity,
where I is substituted for f. In this case, the snake will attempt to align itself with either the
lightest or the darkest nearby contour.

An edge-based functional attracts the contour to areas with strong edges and can be
expressed as:

$$E_{edge} = -\left|\nabla f(x,y)\right|^2 \qquad (2.6)$$

The negative sign means that minimising the energy draws the contour towards large gradient
magnitudes.
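The edge functional can be evaluated numerically with a few lines of Python. The sketch assumes the sign convention of Kass et al., in which the energy is the negative squared gradient magnitude, and approximates the gradient with central differences; the function name `edge_energy` and the test image are illustrative assumptions.

```python
def edge_energy(f, row, col):
    """Negative squared gradient magnitude at an interior pixel (central differences)."""
    dfdx = (f[row][col + 1] - f[row][col - 1]) / 2.0
    dfdy = (f[row + 1][col] - f[row - 1][col]) / 2.0
    return -(dfdx ** 2 + dfdy ** 2)

# A vertical step from dark (0) to bright (10).
f = [[0, 0, 10, 10, 10],
     [0, 0, 10, 10, 10],
     [0, 0, 10, 10, 10]]

flat = edge_energy(f, 1, 3)   # inside the uniform bright region
edge = edge_energy(f, 1, 2)   # adjacent to the step
print(flat, edge)
```

The energy is lowest next to the step, so a contour that minimises this term is pulled towards the edge.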

Figure 2.5: An Active Contour attracted to edges. (a) An illustration of the target area. Here the
shape of the snake contour between the edges in the illusion is completely determined by a spline
smoothness term [32]. (b) A termination snake attracted to the edges and lines in equilibrium on
the subjective contour (extended from Kass et al.) [32][24]. (c) An initialization of the ACM.

The Active Contour Model uses minimisation of the energy function as a means of achieving
edge detection of objects. The final snake (a contour of the object of interest) is, however,
highly dependent on its initial starting position: the snake starts from a path close to the
solution and converges to a local minimum of the energy, ideally as close to the expected object
boundaries as possible. There are several possibilities for where convergence may occur, as can
be seen above in Figure 2.5 (c). Here, the curve a is outside the object, the curve b overlaps it
and the curve c is perpendicular to it.

2.7 Level-set methods

Osher and Sethian [33] proposed a new concept for implementing active contours known as
level set theory. Level set methods, rather than following an interface directly, take an original
curve and build it into an isosurface of a function. The resulting evolution is then mapped into
an evolution of the level set function itself. In [33], Osher and Sethian use a two-dimensional
Lipschitz function, $\phi(x,y) : \Omega \to \mathbb{R}$, to represent a contour implicitly.
The function ɸ(x,y) is referred to as a level set function. The contour C is defined as the zero
level of this function, such that:

$$C = \{(x,y) : \phi(x,y) = 0\}, \quad \forall (x,y) \in \Omega \qquad (2.7)$$

where Ω denotes the complete image plane. As the level set function increases from its initial
stage, the correlated set of contours C propagates towards the outside. Based on this definition,
the contour evolution is equal to the evolution of the level set function:

$$\frac{\partial C}{\partial t} = \frac{d\phi(x,y)}{dt} \qquad (2.8)$$

The primary advantage of using the zero level is that a contour may be expressed as the

boundary or border that lies between a positive area and a negative area, such that the contours

may be explicitly identified by simply checking the sign value of the level set function ɸ(x,y).
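The sign test described above can be illustrated with a simple level set function for a circle. The choice of a circle, the convention that the function is positive inside and negative outside, and the names `phi` and `classify` are assumptions made for this sketch.

```python
import math

def phi(x, y, radius=2.0):
    """Level set function of a circle about the origin: positive inside, negative outside."""
    return radius - math.hypot(x, y)

def classify(x, y, radius=2.0, eps=1e-9):
    """Locate a point relative to the zero level set by checking the sign of phi."""
    value = phi(x, y, radius)
    if abs(value) <= eps:
        return "contour"
    return "inside" if value > 0 else "outside"

for point in [(0.0, 0.0), (2.0, 0.0), (3.0, 4.0)]:
    print(point, classify(*point))
```

Under this convention, increasing the level set function everywhere (here, by enlarging `radius`) moves the zero level outwards, so a point that was outside the contour can end up inside it.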

Contour deformation is typically represented in the form of a PDE. Osher and Sethian
originally proposed a formulation of contour evolution which used the magnitude of the level set
gradient given by:

dɸ(x,y)/dt = [...]

Here, v signifies a constant speed to deform the contour and [...] the level set function ɸ(x,y).

Figure 2.6: An example of the level set [...]

For a contour C0, the initial level set function ɸ0(x,y) [...] (x,y) = 0, (x,y) = C0.

When applying level sets to image segmentation, we seek [...] the image we wish to segment.
This is achieved by initializing an interface at a position in the image and then changing it by
allowing appropriate forces to act on it until the correct boundaries in the image are found.
Level set methods differ from other front [...] as they make use of an implicit representation of
the interface. [...] because many complications such as breaking and merging are easily handled
by the method, with support for both two and three dimensional surfaces.

Contour deformation is typically represented in the form of a PDE. Osher and Sethian

originally proposed a formulation of contour evolution which used the magnitude of the level set

( , )d x y

dtφ κ φ= ∇ +

signifies a constant speed to deform the contour and

level set evolution of a circle (hard line)

, the initial level set function ɸ is zero at the initial contour points given by

When applying level sets to image segmentation, we seek

the image we wish to segment. This is achieved by initializing an interface at a position in the

image and then changing it by allowing appropriate forces to act on it until the correct

ound. Level set methods differ from other front

as they make use of an implicit representation of the interface.

because many complications such as breaking and merging are easily handled by the method

and three dimensional surfaces.

18

Contour deformation is typically represented in the form of a PDE. Osher and Sethian

originally proposed a formulation of contour evolution which used the magnitude of the level set

( )v cφ κ φ= ∇ +

signifies a constant speed to deform the contour and

evolution of a circle (hard line)

is zero at the initial contour points given by

When applying level sets to image segmentation, we seek to detect the boundaries of an object in

the image we wish to segment. This is achieved by initializing an interface at a position in the

image and then changing it by allowing appropriate forces to act on it until the correct

ound. Level set methods differ from other front

as they make use of an implicit representation of the interface.

because many complications such as breaking and merging are easily handled by the method

and three dimensional surfaces.

Contour deformation is typically represented in the form of a PDE. Osher and Sethian

originally proposed a formulation of contour evolution which used the magnitude of the level set

( )φ κ φ

signifies a constant speed to deform the contour and κ expresses the mean curvature of

evolution of a circle (hard line)

is zero at the initial contour points given by

to detect the boundaries of an object in

the image we wish to segment. This is achieved by initializing an interface at a position in the

image and then changing it by allowing appropriate forces to act on it until the correct

ound. Level set methods differ from other front

as they make use of an implicit representation of the interface. Level sets are an intuitive idea

because many complications such as breaking and merging are easily handled by the method

Contour deformation is typically represented in the form of a PDE. Osher and Sethian

originally proposed a formulation of contour evolution which used the magnitude of the level set

expresses the mean curvature of

evolution of a circle (hard line) with normal speed F.

is zero at the initial contour points given by

to detect the boundaries of an object in

the image we wish to segment. This is achieved by initializing an interface at a position in the

image and then changing it by allowing appropriate forces to act on it until the correct

ound. Level set methods differ from other front-tracking techniques

Level sets are an intuitive idea

because many complications such as breaking and merging are easily handled by the method

Contour deformation is typically represented in the form of a PDE. Osher and Sethian [33]

originally proposed a formulation of contour evolution which used the magnitude of the level set

(2.

expresses the mean curvature of

with normal speed F.

is zero at the initial contour points given by

to detect the boundaries of an object in

the image we wish to segment. This is achieved by initializing an interface at a position in the

image and then changing it by allowing appropriate forces to act on it until the correct

tracking techniques

Level sets are an intuitive idea

because many complications such as breaking and merging are easily handled by the method

originally proposed a formulation of contour evolution which used the magnitude of the level set

(2.9)

expresses the mean curvature of

with normal speed F.

to detect the boundaries of an object in

the image we wish to segment. This is achieved by initializing an interface at a position in the

tracking techniques

Level sets are an intuitive idea

because many complications such as breaking and merging are easily handled by the method

19
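
As a rough illustration of the level set evolution discussed above, the following sketch advances ɸ with explicit Euler steps, assuming the evolution law reads dɸ/dt = |∇ɸ|(v + κ) with κ computed as the divergence of the unit gradient field. The function names, the time step `dt`, the step count and the use of central differences are all assumptions made for this example; a practical implementation would normally use an upwind scheme for stability.

```python
import numpy as np

def curvature(phi, eps=1e-8):
    """Curvature of the level sets of phi: divergence of the normalised gradient."""
    gy, gx = np.gradient(phi)               # derivatives along rows (y) and columns (x)
    norm = np.sqrt(gx ** 2 + gy ** 2) + eps  # eps avoids division by zero
    nx, ny = gx / norm, gy / norm
    return np.gradient(nx, axis=1) + np.gradient(ny, axis=0)

def evolve(phi, v=1.0, dt=0.2, steps=20):
    """Explicit Euler integration of d(phi)/dt = |grad phi| * (v + kappa)."""
    phi = phi.copy()
    for _ in range(steps):
        gy, gx = np.gradient(phi)
        grad_mag = np.sqrt(gx ** 2 + gy ** 2)
        phi += dt * grad_mag * (v + curvature(phi))
    return phi

# Initial contour C0: a circle of radius 10, with phi > 0 inside (assumed convention).
rows, cols = np.mgrid[:64, :64]
phi0 = 10.0 - np.sqrt((rows - 32) ** 2 + (cols - 32) ** 2)
phi1 = evolve(phi0)
print("area before:", int((phi0 > 0).sum()), "area after:", int((phi1 > 0).sum()))
```

With this sign convention a positive v increases ɸ everywhere near the contour, so the enclosed region grows, which matches the outward propagation described for an increasing level set function.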

We now return to the paradigm of edge-based segmentation. Contour based methods which make

use of edge information typically involve two key parts: regularity, which determines a contour's

shape, and the edge detection factor, which attracts contours towards the edges.

Solving the classical problem of edge-based segmentation using snakes amounts to finding, for a

given set of constants, a curve C that minimizes the energy associated with this curve. For an

image which contains multiple objects, it is not possible to detect all of the objects present

because these approaches cannot directly deal with changes in topology. Topology-handling

routines must thus be incorporated to make this possible. Classic energy-based models

also require the selection of parameters which control the trade-off between smoothness and

proximity to the object.

Caselles et al. addressed both these issues in [35] using their Geodesic Active Contour Model

(GACM). The Geodesic Active Contour Model is based on active contours evolving in time

according to intrinsic geometric measures of the input image. Evolving contours split and

merge naturally, which allows the simultaneous detection of multiple objects and of both interior

and exterior boundaries. In addition to this, the GACM applies a regularization effect from

curvature based curve flows which allows it to achieve smooth curves without a need for the high

order smoothness found in energy-based approaches.

The GACM was one of the first active contour approaches to utilize level-sets to approach the

problem of image segmentation. By embedding the evolution of the curve C inside that of a

level-set formulation, topological changes are handled automatically, while accuracy and

stability are obtained by using an appropriate numerical algorithm.

A stopping function g(I) is also employed by the GACM for the purpose of stopping an evolving

curve when it arrives at the object's boundaries (as can be seen in Equation 2.14).

$$g(I) = \frac{1}{1 + |\nabla \hat{I}|^{p}} \tag{2.10}$$


where $\hat{I}$ is a smoothed version of the image I computed using Gaussian filtering and p = 1 or 2.

For an ideal edge, $\nabla \hat{I} = \delta$, $g = 0$ and the curve stops at $u_t = 0$. One can then find the boundary

given by the set $u = 0$.
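
The behaviour of the stopping function can be checked numerically. The sketch below assumes the form g(I) = 1 / (1 + |∇Î|^p) with Î a Gaussian-smoothed image; the helper names, the value of `sigma`, the edge-replicating padding and the synthetic step image are choices made for this example only.

```python
import numpy as np

def gaussian_smooth(image, sigma=1.0):
    """Separable Gaussian smoothing with edge-replicated borders (illustrative helper)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image.astype(float), radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def stopping_function(image, sigma=1.0, p=2):
    """g = 1 / (1 + |grad(smoothed image)|^p); close to 1 in flat areas, close to 0 at edges."""
    smoothed = gaussian_smooth(image, sigma)
    gy, gx = np.gradient(smoothed)
    grad_mag = np.sqrt(gx ** 2 + gy ** 2)
    return 1.0 / (1.0 + grad_mag ** p)

# Synthetic image with a single vertical step edge.
image = np.zeros((32, 32))
image[:, 16:] = 100.0
g = stopping_function(image)
print("g in flat region:", g[16, 2], "g at the edge:", g[16, 15])
```

On real images the gradient magnitude is bounded, so g becomes small at edges but never exactly zero.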

Although edge-based approaches such as the GACM work acceptably on simple segmentation

problems, their lack of support for topological changes makes them inadequate for images which

contain more than one object. Edge-based models are also prone to missing smooth

or poorly defined boundaries and are sensitive to noisy data. Region-based approaches,

however, have specific advantages over edge-based techniques; these include the ability to

produce coherent regions which link together edges and gaps produced by missing pixels, and

much better topological handling for images containing noise.

2.8 Chan-Vese Active Contour Model

Chan and Vese proposed a piecewise-constant active contour model that employs the Mumford-Shah

segmentation model to extend the original algorithm [46][47]. Rather than searching for edges,

piecewise-constant ACMs deform a contour based on the minimization of an energy function.

Constants approximate statistics of the image intensity within a particular subset whilst

piecewise-constants approximate similar measures across the entire area of an image.

As many classical snakes and active contour models rely on an edge function g, depending on the

image gradient $\nabla u_0$, to stop the curve evolution, these models can detect only objects with

edges defined by gradient. In practice, the discrete gradients are bounded, so the stopping

function g is never zero on the edges and the curve may pass through the boundary.

The Chan-Vese approach uses the minimization of an energy-based segmentation which employs

a stopping term based on the Mumford-Shah segmentation techniques. By doing this, they obtain

a model which may detect contours both with and without gradient, for instance objects with very

smooth boundaries or even discontinuous boundaries.

Assume the image $u_0$ is formed by two regions of approximately piecewise-constant intensities

of distinct values $u_0^i$ and $u_0^o$, and that the object to be detected is represented by the region with

the value $u_0^i$. Denote its boundary by $C_0$, so that $u_0 \approx u_0^i$ inside the object and

$u_0 \approx u_0^o$ outside it. Consider the following fitting term:


$$F_1(C) + F_2(C) = \int_{inside(C)} |u_0(x,y) - c_1|^2 \, dx\, dy + \int_{outside(C)} |u_0(x,y) - c_2|^2 \, dx\, dy \tag{2.11}$$

where C is any other variable curve and the constants $c_1$, $c_2$, depending on C, are the averages of

$u_0$ inside C and outside C respectively. In this simple case, it is obvious that the boundary of the

object, $C_0$, is the minimizer of the fitting term:

$$\inf_{C} \{F_1(C) + F_2(C)\} \approx 0 \approx F_1(C_0) + F_2(C_0) \tag{2.12}$$
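
The minimizing property of the object boundary can be illustrated on a synthetic two-region image. In the sketch below the curve C is represented by a boolean mask of the pixels it encloses, which is an assumption made for simplicity; `fitting_energy` is a hypothetical helper that evaluates the discrete counterpart of the fitting term F1(C) + F2(C), with c1 and c2 taken as the mean intensities inside and outside the mask.

```python
import numpy as np

def fitting_energy(image, inside):
    """Discrete F1(C) + F2(C) for a curve C given as a boolean 'inside' mask."""
    c1 = image[inside].mean()    # average of u0 inside C
    c2 = image[~inside].mean()   # average of u0 outside C
    f1 = ((image[inside] - c1) ** 2).sum()
    f2 = ((image[~inside] - c2) ** 2).sum()
    return f1 + f2

# Two-region image: a bright square object on a dark background.
image = np.zeros((20, 20))
image[5:15, 5:15] = 1.0

true_mask = image > 0.5                         # curve placed exactly on the object boundary
shifted_mask = np.roll(true_mask, 3, axis=1)    # same curve displaced by three pixels

print("energy on the object boundary:", fitting_energy(image, true_mask))
print("energy for the shifted curve:", fitting_energy(image, shifted_mask))
```

The energy vanishes when the curve coincides with the object boundary and is strictly positive for the displaced curve, since each side then mixes the two intensity values.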