
(IJCNS) International Journal of Computer and Network Security, Vol. 2, No. 2, February 2010


Multiple region Multiple Quality ROI Encoding using Wavelet Transform

P.Rajkumar1 and Madhu Shandilya2

1,2Department of Electronics, Maulana Azad National Institute of Technology, Bhopal, India

Abstract: The wavelet transform, which was developed over the last two decades, provides a better time-frequency representation of a signal than other existing transforms. It also supports region of interest (ROI) encoding, which allows different regions of interest to be encoded at different bit rates with different quality constraints, rather than encoding the entire image with a single quality constraint. This feature is highly desirable in medical image processing. With this in mind, we propose a multiple-region multiple-quality (MRMQ) encoding technique that enhances different ROIs according to their priority. The paper applies adaptive biorthogonal wavelets to preserve quality in the highest-priority ROI, as they possess excellent reconstruction properties. The paper also proposes saving bits by pruning the detail coefficients of the wavelet decomposition and truncating the approximation coefficients outside the ROI using the space-frequency quantization method. Simulation results show that, with the proposed method, image quality can be kept high, up to lossless, in the ROI while the quality outside the ROIs is limited.
Keywords: Wavelet Transform, region of interest.

1. Introduction to medical imaging
Medical images are now almost always gathered and stored in digital format for easy archiving, storage and transmission, and to allow digital processing to improve diagnostic interpretation. Recently, the volume of medical diagnostic data produced by hospitals has been increasing exponentially. In an average-sized hospital, many terabytes of digital data are generated each year, almost all of which have to be kept and archived. Furthermore, for telemedicine or tele-browsing applications, transmitting a large amount of digital data through a bandwidth-limited channel becomes a heavy burden [1]. Three-dimensional data sets, such as medical volumetric data generated by computed tomography (CT) or magnetic resonance (MR) imaging, typically contain many image slices that require huge amounts of storage. A typical mammogram must be digitized at a resolution of about 4000 x 5000 pixels with a 50 µm spot size and 12 bits per pixel, resulting in approximately 40 MB of digital data. Such high resolution is required to detect isolated clusters of microcalcifications that herald early-stage cancer. The processing or transmission time of such digital images can be quite long. Also, archiving the amount of data generated in any screening mammography program becomes an expensive and difficult challenge [2]. The storage requirement for digital coronary angiogram video is huge: a typical 5-minute procedure captured at 30 frames per second with 512x512 pixel images results in approximately 2.5 GB of raw data [3].
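The raw sizes quoted above follow from simple arithmetic. The sketch below reproduces them under two assumptions that the text does not state: each 12-bit mammogram sample is stored in 2 bytes, and each angiogram pixel is stored in 1 byte (8 bits).

```python
def raw_size_bytes(width, height, bits_per_sample, frames=1):
    """Uncompressed size in bytes, storing each sample in whole bytes."""
    bytes_per_sample = -(-bits_per_sample // 8)  # ceiling division: 12 bits -> 2 bytes
    return width * height * bytes_per_sample * frames

# Mammogram: 4000 x 5000 pixels, 12-bit samples (assumed stored in 2 bytes each).
mammogram = raw_size_bytes(4000, 5000, 12)

# Angiogram: 5 min at 30 frames/s, 512 x 512 pixels (assumed 8 bits per pixel).
angiogram = raw_size_bytes(512, 512, 8, frames=5 * 60 * 30)

print(mammogram, angiogram)  # 40000000 2359296000
```

This gives 40,000,000 bytes for the mammogram and 2,359,296,000 bytes (about 2.4 GB) for the angiogram, consistent with the approximate figures in the text.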

2. Need for image compression
Digital compression can solve both the storage and the transmission problems. An efficient lossy compression scheme is needed that reduces the amount of digital data without significant degradation of medical image quality.

Compression methods are important in many medical applications to ensure fast interactivity during browsing through large sets of images (e.g. volumetric data sets, time sequences of images, image databases). In medical imaging, it is not acceptable to lose any information when storing or transmitting an image. There is a broad range of medical image sources, and for most of them discarding small image details might alter a diagnosis and cause severe human and legal consequences. [1]

In addition to high compression efficiency, future moving-image coding systems will require many other features, including fidelity and resolution scalability, region-of-interest enhancement, random-access decoding, resilience to errors due to channel noise or packet loss, fast encoding/decoding, and low computational and hardware complexity.

Recent developments in pattern recognition (region-of-interest segmentation) and image processing have advanced the state of the art. During the past few years, we have applied some of these latest image-processing techniques to image enhancement, classification and compression.

3. Region of interest (ROI) encoding
Support for region-of-interest (ROI) access is a very useful feature of image compression: an image sequence can be encoded only once, and the decoder can then directly extract a subset of the bit stream to reconstruct a chosen ROI at the required quality [4]. Arbitrarily shaped regions inside an image are encoded at different quality levels according to their importance or diagnostic relevance. The whole image is transformed, and the coefficients associated with the ROI are coded at higher precision (up to lossless) than the background. Especially in medical imaging, ROI coding helps compression methods focus on the regions that are important for diagnosis.


For some applications, only a subsection of the image sequence is selected for analysis or diagnosis. Therefore, ROI retrievability is very important, as it can greatly save decoding time and transmission bandwidth. An important part of genetic analysis starts with researchers breaking down the nucleus of a human cell into a jumbled cluster of chromosomes that are then stained with dye so that they can be studied under a microscope. This jumble of stained chromosomes, which carry the genetic code of the host individual, is then photographed, creating a chromosome spread image (see Fig. 1(a)). This image is subsequently subjected to a procedure called chromosome karyotyping analysis. The result of this procedure is a karyotype image (see Fig. 1(b)), the standard form used to display chromosomes. In this configuration, the chromosomes are ordered by length from the largest (chromosome 1) to the smallest (chromosome 22 in humans), followed by the sex chromosomes. Karyotype images are used in clinical tests, such as amniocentesis, to determine whether all the chromosomes appear normal and are present in the correct number [5]. Unlike some other types of medical imagery, chromosome images (see Fig. 1) have an important common characteristic: the regions of interest (ROIs) for cytogeneticists' evaluation and diagnosis are all well determined and segmented prior to image storage. The remaining background, which may contain cell nuclei and stain debris, is also kept in routine cytogenetics lab procedures, but for specimen reference rather than for diagnostic purposes. Since the chromosome ROIs are much more important than the rest of the image for karyotyping analysis, lossless compression is required for the former, while lossy compression is acceptable for the latter. This calls for combined lossy and lossless ROI coding.
In contrast, commercial chromosome karyotyping systems fail to utilize the ROI information, compressing entire chromosome spread or karyotype images.

Figure 1(a). A metaphase cell spread image [5]

Figure 1(b). A metaphase cell's karyotype [5]

In the karyotype, all chromosomes in the spread are rotated and copied onto an image with constant background and positioned according to their classes. The label annotation is drawn separately.

4. Wavelet transform
The discrete cosine transform (DCT) and the wavelet transform are the transforms most commonly used for compression. The popular JPEG and MPEG standards use DCT-based compression, while JPEG 2000 uses wavelet-based compression. In DCT-based compression, the algorithm breaks the image into 8x8 pixel blocks and performs a DCT on each block. The result is an 8x8 block of spectral coefficients in which most of the information is concentrated in relatively few coefficients. Quantization then approximates the larger coefficients and sets the smaller ones to zero. The quantized coefficients are reordered in a zigzag manner to group the largest values first, leaving long strings of zeros at the end that can be represented efficiently.
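The three DCT-based steps just described (8x8 transform, quantization, zigzag reordering) can be sketched in plain Python. This is an illustration of the idea, not JPEG itself: a single uniform step size stands in for JPEG's per-frequency quantization table, and no entropy coding is performed.

```python
import math

N = 8  # block size used by JPEG

def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block given as a list of rows."""
    def c(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = c(u) * c(v) * s
    return out

def quantize(coeffs, step):
    """Uniform quantization: coefficients much smaller than `step` become 0."""
    return [[int(round(c / step)) for c in row] for row in coeffs]

def zigzag_indices():
    """(row, col) pairs in JPEG zigzag order, lowest frequencies first."""
    cells = [(i, j) for i in range(N) for j in range(N)]
    # Walk the anti-diagonals; odd diagonals run top-right to bottom-left, even ones the reverse.
    return sorted(cells, key=lambda p: (p[0] + p[1], p[0] if (p[0] + p[1]) % 2 else p[1]))

def zigzag(block):
    return [block[i][j] for i, j in zigzag_indices()]

flat = [[100] * N for _ in range(N)]        # a perfectly smooth block
zz = zigzag(quantize(dct2(flat), step=16))
print(zz[:4], zz.count(0))  # [50, 0, 0, 0] 63
```

For a smooth block all the energy lands in the DC coefficient, so the zigzag scan ends in a long run of zeros, which is exactly what makes the final run-length stage efficient.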

While this algorithm is very good for general purposes, it has some drawbacks when applied to medical images. It degrades ungracefully at high compression ratios, producing prominent artifacts at block boundaries, and it cannot take advantage of patterns larger than the 8x8 blocks. Such artifacts could potentially be mistaken as being diagnostically significant. A wavelet approach was also suggested by Li, who considered the problem of accessing still medical image data remotely over low-bandwidth networks. This was accomplished using an ROI-based approach that allocated additional bandwidth within a dynamically allocated ROI, while at the same time providing an embedded bit stream, which is useful for progressive image encoding [3]. Some of the key features of the wavelet transform that make it such a useful tool are:

• Spatial-frequency localization,
• Energy compaction,
• Decaying magnitude of wavelet coefficients across sub-bands.

Wavelet-based compression schemes generally outperform JPEG compression in terms of image quality at a given compression ratio, and the improvement can be dramatic at high compression ratios [9]. The wavelet transform, developed over the last two decades, provides a better time-frequency representation of a signal than other existing transforms, and it supports ROI encoding. Multiple-region multiple-quality (MRMQ) ROI encoding allows different ROIs to be enhanced with different qualities according to their priority (i.e., at different compression ratios, using both lossless and lossy methods), while limiting the bit size outside the ROIs by compressing that area heavily (lossy). This paper proposes adaptive biorthogonal wavelets to preserve quality in the highest-priority ROI, as they possess excellent reconstruction properties.

4.1 Classification of wavelets
Wavelets can be classified into two classes [7]: (a) orthogonal and (b) biorthogonal. Either can be used, depending on the application.

4.1.1 Features of orthogonal wavelet filter banks
The coefficients of orthogonal filters are real numbers. The filters are of the same length and are not symmetric. The low-pass filter $G_0$ and the high-pass filter $H_0$ are related to each other by

$$H_0(z) = z^{-N}\,G_0\!\left(-z^{-1}\right) \qquad (1)$$

where N is the filter length minus one.
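Equation (1) and the double-shift orthogonality it implies can be checked numerically. In the time domain, Eq. (1) reads h0[n] = (-1)^(N-n) g0[N-n]. The sketch below assumes the Daubechies length-4 (db2) low-pass filter as an example G0 (the paper does not name a specific orthogonal filter) and uses periodic signal extension for a one-level analysis/synthesis round trip; the helper names are illustrative.

```python
import math

SQ3, NORM = math.sqrt(3), 4 * math.sqrt(2)
# Example orthonormal low-pass filter G0: Daubechies length 4 (db2).
G0 = [(1 + SQ3) / NORM, (3 + SQ3) / NORM, (3 - SQ3) / NORM, (1 - SQ3) / NORM]

def alternating_flip(g):
    """High-pass filter from Eq. (1): h0[n] = (-1)**(N - n) * g0[N - n], N = len(g) - 1."""
    N = len(g) - 1
    return [(-1) ** (N - n) * g[N - n] for n in range(N + 1)]

H0 = alternating_flip(G0)

def shifted_dot(a, b, shift):
    """sum_k a[k] * b[k - shift], treating out-of-range taps as zero."""
    return sum(a[k] * b[k - shift] for k in range(len(a)) if 0 <= k - shift < len(b))

def analysis(x, g, h):
    """One periodic analysis level: a[n] = sum_k g[k] x[2n+k], d[n] likewise with h."""
    L = len(x)
    a = [sum(g[k] * x[(2 * n + k) % L] for k in range(len(g))) for n in range(L // 2)]
    d = [sum(h[k] * x[(2 * n + k) % L] for k in range(len(h))) for n in range(L // 2)]
    return a, d

def synthesis(a, d, g, h):
    """Transpose of `analysis`, i.e. filtering with the time-reversed analysis filters."""
    L = 2 * len(a)
    x = [0.0] * L
    for n in range(len(a)):
        for k in range(len(g)):
            x[(2 * n + k) % L] += g[k] * a[n] + h[k] * d[n]
    return x

x = [1.0, 4.0, 2.0, 8.0, 5.0, 7.0, 3.0, 6.0]
a, d = analysis(x, G0, H0)
x_rec = synthesis(a, d, G0, H0)
print(max(abs(p - q) for p, q in zip(x, x_rec)))  # tiny: only floating-point rounding remains
```

Because the low-pass and high-pass filters are double-shift orthogonal, the analysis operator is an orthogonal matrix, so applying its transpose (time-reversed filters) reconstructs the input exactly.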

The two filters are alternating flips of each other. The alternating flip automatically gives double-shift orthogonality between the low-pass and high-pass filters, i.e., the inner product of the filters for any shift by two is zero: $\sum_k G_0[k]\,H_0[k-2l] = 0$ for all $l \in \mathbb{Z}$. Perfect reconstruction is possible with the alternating flip.

Also, for perfect reconstruction, the synthesis filters are identical to the analysis filters except for a time reversal. Orthogonal filters offer a high number of vanishing moments, a property that is useful in many signal and image processing applications. They have a regular structure, which leads to easy implementation and a scalable architecture.

4.1.2 Features of biorthogonal wavelet filter banks
In the case of biorthogonal wavelet filters, the low-pass and high-pass filters do not have the same length. The low-pass filter is always symmetric, while the high-pass filter can be either symmetric or antisymmetric. The filter coefficients are either real numbers or integers.

For perfect reconstruction, a biorthogonal filter bank has either all odd-length or all even-length filters. The two analysis filters can be symmetric with odd length, or one symmetric and the other antisymmetric with even length. Also, the two sets of analysis and synthesis filters must be dual. Linear-phase biorthogonal filters are the most popular filters for data compression applications.

The analysis and synthesis equations for the biorthogonal DWT of any $x(t) \in L^2(\mathbb{R})$ are

Analysis:
$$\tilde{a}_{j,k} = \int x(t)\, 2^{j/2}\, \tilde{\phi}(2^{j}t - k)\, dt \qquad (2)$$
$$\tilde{b}_{j,k} = \int x(t)\, 2^{j/2}\, \tilde{\psi}(2^{j}t - k)\, dt \qquad (3)$$

Synthesis:
$$x(t) = 2^{N/2} \sum_k \tilde{a}_{N,k}\, \phi(2^{N}t - k) + \sum_{j=N}^{M-1} 2^{j/2} \sum_k \tilde{b}_{j,k}\, \psi(2^{j}t - k) \qquad (4)$$

Here $\{\phi_{j,k}(t), \psi_{j,k}(t)\}$ and $\{\tilde{\phi}_{j,k}(t), \tilde{\psi}_{j,k}(t)\}$ are the biorthogonal basis functions [8]. $\tilde{a}_{j,k}$ and $\tilde{b}_{j,k}$ are the scaling and wavelet coefficients, respectively; together they form the biorthogonal DWT coefficients of x(t). The DWT starts at some finest scale j = M and stops at some coarsest scale j = N; M - N is the number of decomposition levels in the biorthogonal DWT of x(t).

4.1.3 Selection of wavelet from the biorthogonal family
Different wavelets suit different types of images. A wavelet basis that produces the highest coefficients for one image does not necessarily produce the highest coefficients for all images when they are decomposed using the wavelet transform.

Keeping this in mind, an adaptive wavelet basis function is additionally proposed. Among the important biorthogonal wavelet basis functions, a particular one is chosen by comparing the highest coefficients produced by all the candidate basis functions at the first level of decomposition. The proposed MRMQ encoding uses the discrete wavelet transform and chooses the biorthogonal basis function that produces the highest coefficient in the highest-priority ROI among all the ROIs.

5. Multiple Regions Multiple Quality ROI Encoding
For certain applications, only specific regions in volumetric data or video are of interest. For example, in MR imaging of the skull, the physician is mostly concerned with the features in the brain region. In video conferencing, the speaker's head and shoulders are of main interest and need to be coded at higher quality, whereas the background can be either discarded or encoded at a much lower rate. High compression ratios can be achieved by allocating more bit rate to the region(s) of interest (ROI) and less bit rate to the remaining regions, i.e., the background.

Region-based image coding schemes using heterogeneous (multiple) quality constraints are especially attractive because they not only preserve the diagnostic features in the region(s) of interest well, but also meet the requirements of less storage and shorter transmission time for medical imaging applications and video transmission.

A main advantage of the proposed technique is that it supports multiple-region multiple-quality (MRMQ) coding. With this method, the total bit budget can be allocated among multiple ROIs and the background depending on the quality constraints. Experimental results show that this technique performs reasonably well for coding multiple ROIs.

To support ROI coding, it is necessary to identify the wavelet transform coefficients associated with the ROI. We have to keep track of the coefficients that are involved in the reconstruction of the ROI through each stage of decomposition.

5.1 ROI Coding

We use region mapping, which traces the positions of pixels in an ROI in the image domain back into the transform domain by inverse DWT. The coefficients of greater importance are called ROI coefficients; the rest are called background coefficients.

The coding algorithm (Fig. 2) needs to keep track of the locations of wavelet coefficients according to the shape of the ROI. To obtain the information about ROI coefficients, a mask image, which specifies the ROI, is decomposed with the same wavelet decomposition as the image. In each decomposition stage, each subband of the decomposed mask specifies the ROI in that subband. By successively decomposing the approximation coefficients (LL subband) for a number of decomposition levels, information about the ROI coefficients is obtained.
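The mask bookkeeping can be sketched without the wavelet filters themselves. This is a conservative stand-in for decomposing the mask with the actual biorthogonal filters: it assumes a coefficient at the next level belongs to the ROI if any pixel of the 2x2 block it summarizes is in the ROI, optionally enlarged by `margin` samples (an assumed parameter) to account for longer filter supports. Function names are illustrative.

```python
def next_level_mask(mask, margin=0):
    """Mask for the next decomposition level (shared by its LL, LH, HL and HH bands)."""
    h, w = len(mask), len(mask[0])
    out = []
    for i in range((h + 1) // 2):
        row = []
        for j in range((w + 1) // 2):
            # The 2x2 block this coefficient summarizes, widened by `margin` and clipped.
            y0, y1 = max(0, 2 * i - margin), min(h, 2 * i + 2 + margin)
            x0, x1 = max(0, 2 * j - margin), min(w, 2 * j + 2 + margin)
            row.append(any(mask[y][x] for y in range(y0, y1) for x in range(x0, x1)))
        out.append(row)
    return out

def roi_masks(mask, levels, margin=0):
    """Successively 'decompose' the mask, as the LL band is decomposed, one mask per level."""
    masks = []
    for _ in range(levels):
        mask = next_level_mask(mask, margin)
        masks.append(mask)
    return masks

# 8x8 image whose ROI is the 2x2 square at rows 2-3, columns 2-3.
mask = [[2 <= y <= 3 and 2 <= x <= 3 for x in range(8)] for y in range(8)]
level1, level2 = roi_masks(mask, levels=2)
```

With `margin=0` this is exact for the Haar filter; longer biorthogonal filters touch more neighbouring samples, which is why a positive margin marks a larger set of coefficients as ROI.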

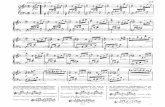

Figure 2. ROI Encoding using Wavelet Transform
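The adaptive basis choice of Section 4.1.3, which the encoder applies to the highest-priority ROI, can be sketched as follows. Several assumptions are made: a 1-D signal (for example, one image row) is used instead of a 2-D image for brevity; only two candidates are compared, Haar (the same filters as bior1.1) and the reversible LeGall 5/3 lifting transform of JPEG 2000 (bior2.2 up to scaling); and "highest coefficient" is taken to mean the largest-magnitude first-level detail coefficient inside the ROI, which the text does not specify.

```python
import math

def haar_level(x):
    """One level of the orthonormal Haar DWT (same filters as bior1.1)."""
    half = len(x) // 2
    a = [(x[2 * n] + x[2 * n + 1]) / math.sqrt(2) for n in range(half)]
    d = [(x[2 * n] - x[2 * n + 1]) / math.sqrt(2) for n in range(half)]
    return a, d

def legall53_level(x):
    """One level of the reversible LeGall 5/3 lifting transform (JPEG 2000).
    Integer input of even length, symmetric extension at the edges."""
    half = len(x) // 2

    def xe(i):  # mirror past the right edge: x[L] -> x[L - 2]
        return x[i] if i < len(x) else x[2 * (len(x) - 1) - i]

    d = [x[2 * n + 1] - (x[2 * n] + xe(2 * n + 2)) // 2 for n in range(half)]
    s = [x[2 * n] + (d[max(n - 1, 0)] + d[n] + 2) // 4 for n in range(half)]
    return s, d

def choose_basis(signal, roi, candidates):
    """Return (name, peak) for the candidate whose largest first-level detail
    coefficient inside the ROI has the greatest magnitude.
    Detail coefficient n covers samples 2n and 2n+1."""
    best_name, best_peak = None, -1.0
    for name, transform in candidates.items():
        _, d = transform(signal)
        peak = max((abs(c) for n, c in enumerate(d) if roi[2 * n] or roi[2 * n + 1]),
                   default=0.0)
        if peak > best_peak:
            best_name, best_peak = name, peak
    return best_name, best_peak

signal = [0, 0, 0, 10, 10, 10, 10, 10]
roi = [True] * len(signal)
result = choose_basis(signal, roi, {"haar": haar_level, "legall53": legall53_level})
print(result)
```

Because the Haar filters here are orthonormal while the 5/3 transform uses integer scaling, the raw magnitudes are not normalized identically; a fair comparison would use consistently normalized filters. The sketch only shows the shape of the selection rule.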

5.2 Outside the ROI
The space-frequency quantization (SFQ) technique exploits both the spatial and the frequency compaction properties of the wavelet transform through the use of two simple quantization modes.

To exploit the spatial compaction property, a symbol is defined that indicates that a spatial region of high-frequency coefficients is zero. Applying this symbol is referred to as zerotree quantization. This is done in the first phase, called the tree-pruning algorithm. In the next phase, called tree prediction, the relation between a spatial region in the image and the tree-structured set of coefficients is exploited. Zerotree quantization can be viewed as a mechanism for pointing to the locations where high-frequency coefficients are clustered; thus, this quantization mode directly exploits the predicted spatial clustering of high-frequency coefficients.

For coefficients that are not set to zero by zerotree quantization, a common uniform scalar quantizer, independent of the coefficient's frequency band, is applied. Uniform quantization followed by entropy coding provides nearly optimal coding efficiency.
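The two SFQ modes can be illustrated on a one-dimensional dyadic coefficient tree. This is a simplified sketch, not the rate-distortion-optimized pruning of the original SFQ algorithm: a subtree is replaced by a single zerotree symbol when every coefficient in it falls below a fixed `threshold` (an assumed parameter), and the surviving coefficients are uniformly quantized with step `step`.

```python
def prune_and_quantize(levels, threshold, step):
    """levels[0] is the coarsest detail band; node i in levels[l] has children
    2*i and 2*i+1 in levels[l+1]. Returns (quantized levels, zerotree roots)."""
    depth = len(levels)

    def subtree_insignificant(l, i):
        if abs(levels[l][i]) >= threshold:
            return False
        if l + 1 == depth:
            return True
        return all(subtree_insignificant(l + 1, c) for c in (2 * i, 2 * i + 1))

    out = [[0] * len(band) for band in levels]
    roots = []

    def visit(l, i):
        if subtree_insignificant(l, i):
            roots.append((l, i))  # mode 1: one symbol zeroes the whole subtree
            return
        out[l][i] = int(round(levels[l][i] / step))  # mode 2: uniform scalar quantization
        if l + 1 < depth:
            for c in (2 * i, 2 * i + 1):
                visit(l + 1, c)

    for i in range(len(levels[0])):
        visit(0, i)
    return out, roots

levels = [[12.0, 0.5],
          [3.2, 0.2, 0.1, 0.3],
          [0.1, 9.4, 0.0, 0.2, 0.1, 0.0, 0.0, 0.1]]
q, roots = prune_and_quantize(levels, threshold=1.0, step=2.0)
print(q)      # [[6, 0], [2, 0, 0, 0], [0, 5, 0, 0, 0, 0, 0, 0]]
print(roots)  # [(2, 0), (1, 1), (0, 1)]
```

The whole right-hand subtree under the coefficient 0.5 costs a single symbol, which is where the bit saving outside the ROI comes from.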

6. Results

Fig. 3 shows an image of an abdominal operation, to which MRMQ ROI encoding is applied; the result is shown in Fig. 4. Here the number of ROIs is 2. As shown, the area outside the ROIs is heavily degraded: the PSNR of the whole image is about 25 dB, while the quality inside the ROIs is lossless. The PSNR vs. bits per pixel of the ROI-enhanced image at various compression points, obtained by Matlab® simulation, is plotted in Fig. 5. It shows that the PSNR changes only slightly while the bit rate is reduced from 1 bpp to 0.5 bpp.
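The two figures of merit used in the results, PSNR and bits per pixel, can be computed as sketched below. The `peak=255` default assumes 8-bit images, which the text does not state, and the optional mask lets PSNR be evaluated over the ROI alone.

```python
import math

def psnr(original, decoded, peak=255, mask=None):
    """PSNR in dB over all pixels, or only where mask[y][x] is True."""
    se, n = 0.0, 0
    for y, (row_o, row_d) in enumerate(zip(original, decoded)):
        for x, (a, b) in enumerate(zip(row_o, row_d)):
            if mask is None or mask[y][x]:
                se += (a - b) ** 2
                n += 1
    if n == 0:
        raise ValueError("empty region")
    mse = se / n
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

def bits_per_pixel(total_bits, width, height):
    return total_bits / (width * height)

orig = [[10, 10], [10, 10]]
dec = [[10, 10], [11, 9]]
roi = [[True, True], [False, False]]
print(psnr(orig, dec, mask=roi))  # inf: the ROI pixels are reproduced exactly
print(psnr(orig, dec))            # whole image: MSE = 0.5, all of it from the background
```

For the 448x336 abdomen image (150,528 pixels), 0.92 bpp corresponds to roughly 138,500 bits, and going from 2.32 bpp to 0.92 bpp is a compression ratio of about 2.5. With a lossless ROI, the ROI PSNR is infinite, so a single whole-image PSNR such as 25.2 dB reflects only the background distortion.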

Fig. 6 shows an image of a newborn baby affected by sacrococcygeal teratoma (before surgery). In Fig. 7 the area outside the ROI is heavily degraded. In Fig. 8 the area outside the ROI is excluded to reduce the bit requirement further. The PSNR vs. bits per pixel at various compression points is plotted in Fig. 9.

Figure 3. Original medical image of abdominal operation (2.32 bpp)

Figure 4. ROI-enhanced medical image of Fig. 3; number of ROIs = 2 (0.92 bpp, PSNR = 25.2 dB)

Figure 5. PSNR vs. bits per pixel of the ROI-enhanced image "abdomen"

Note: the ROI area is 17230 pixels and the image is 448x336 pixels.

Figure 6. Teratoma-affected baby

Figure 7. ROI-enhanced teratoma-affected baby (background degraded)

Figure 8. ROI-enhanced teratoma-affected baby (background excluded)

Figure 9. PSNR vs. bpp of the ROI-enhanced teratoma-affected baby

7. Conclusions
The simulation results give bits per pixel with the corresponding PSNR values for the proposed MRMQ ROI encoding. For still images, the bit requirement was reduced by degrading the image quality outside the ROI, while keeping the image quality in the ROI uncompromised.

In the present paper, still-image results are simulated with the biorthogonal wavelet family for multiple-region multiple-quality (MRMQ) ROI coding; further analysis can extend this work to video processing. Also, the present analysis is carried out on medical still images and can be extended to graphical and natural images as well as to video.

References

[1] Shaou-Gang Miaou, Shih-Tse Chen, Shu-Nien Chao, "Wavelet-Based Lossy-to-Lossless Medical Image Compression Using Dynamic VQ and SPIHT Coding," Biomedical Engineering: Applications, Basis & Communications, vol. 15, no. 6, pp. 235-242, December 2003.

[2] Mónica Penedo, William A. Pearlman, Pablo G. Tahoces, Miguel Souto, Juan J. Vidal, "Embedded Wavelet Region-Based Coding Methods Applied to Digital Mammography," IEEE International Conference on Image Processing, vol. 3, Barcelona, Spain, 2003.

[3] David Gibson, Michael Spann, Sandra I. Woolley, "A Wavelet-Based Region of Interest Encoder for the Compression of Angiogram Video Sequences," IEEE Transactions on Information Technology in Biomedicine, vol. 8, no. 2, pp. 103-113, 2004.

[4] Kaibin Wang, "Region-Based Three-Dimensional Wavelet Transform Coding," M.Eng. thesis, Carleton University, Ottawa, Canada, May 2005.

[5] Zixiang Xiong, Qiang Wu, Kenneth R. Castleman, "Enhancement, Classification and Compression of Chromosome Images," Workshop on Genomic Signal Processing, 2002 (CiteSeer).

[6] Charilaos Christopoulos, Athanassios Skodras, Touradj Ebrahimi, "The JPEG2000 Still Image Coding System: An Overview," IEEE Transactions on Consumer Electronics, vol. 46, no. 4, pp. 1103-1127, November 2000.

[7] K. P. Soman, K. I. Ramachandran, Insight into Wavelets: From Theory to Practice, PHI Publications.

[8] Sonja Grgic, Mislav Grgic, Branka Zovko-Cihlar, "Performance Analysis of Image Compression Using Wavelets," IEEE Transactions on Industrial Electronics, vol. 48, no. 3, June 2001.

[9] Michael W. Marcellin, Margaret A. Lepley, Ali Bilgin, Thomas J. Flohr, Troy T. Chinen, James H. Kasner, "An Overview of Quantization in JPEG 2000," Signal Processing: Image Communication, vol. 17, pp. 73-84, 2002.

Authors Profile
P. Rajkumar received the M.Tech. degree in Electronics Engineering from Maulana Azad National Institute of Technology, Bhopal, India, in 2006. He is doing his research in the area of medical image processing. Madhu Shandilya received the M.Tech. and Ph.D. degrees in Electronics Engineering from Maulana Azad National Institute of Technology, Bhopal, India, in 1994 and 2005, respectively. For the last 22 years she has been associated with the Department of Electronics Engineering at Maulana Azad National Institute of Technology, Bhopal. She has published or presented about 20 papers in various national and international journals and conferences. Her area of specialization is digital image processing.


2-Dimension Automatic Microscope Moving Stage

Ovasit P.1, Adsavakulchai S.1,*, Srinonghang W.1, and Ueatrongchit P.1

1School of Engineering, University of the Thai Chamber of Commerce

126/1 Vibhavadi Rangsit Rd., Bangkok 10400 *Corresponding author, e-mail: [email protected]

Abstract: Currently, microscope stages are controlled manually. In the medical laboratory, specimen slides are processed one at a time for microscopic examination. The main objective of this study is to develop a two-dimensional automatic microscope moving stage. The equipment is designed around a PIC 16F874 microcontroller, using a stepping motor as the horizontal feed mechanism. There are three function modes: the first is manual; the second is automatic specimen scanning, which moves the specimen slide on a microscope stage with controllable X and Y axis positioning into the optical viewing field of the microscope and examines the desired area of the specimen; and the last automatically returns examined specimen slides to the microscope stage for reexamination. The results show that the accuracy of this equipment in returning a specimen slide for reexamination is 86.03%.

Keywords: Microscope Automatic Moving Stage, microcontroller PIC16F874, stepping motor

1. Introduction
The microscope is a conventional laboratory microscope with a stage mechanism attached; the stage is actuated and controlled manually [1], as shown in Figure 1.

Figure 1. Microscope moving stage

The basic principle of red blood cell diagnosis is manual examination under the microscope. In such cases, electronic systems may be used to automatically examine and analyze the optical images of the microscope [2]. Where electronic systems are used for rapid analysis of microscope specimen images, it becomes desirable to feed the specimens to the microscope optics automatically, regularly and rapidly. After analysis, a specimen would be removed to make room for the next specimen and collected for further examination, reference, record keeping or disposal.

An automated slide system has been disclosed that organizes microscope slides in cassettes, automatically positions each slide under the microscope as specified by the protocol, and after examination returns the slide to its proper cassette [3]. Slides are held in slots in a spaced parallel configuration, with a mechanism for removing and replacing a housed slide. A feed arm contains a longitudinal channel holding a draw-out spring wire that extends along an imaginary longitudinal axis from a first end to a second end. The two ends are bent orthogonally to one another and to the longitudinal axis, so that the bent ends protrude from the channel, and the wire can rotate within the channel, allowing each bent end to change orientation with respect to the feed arm [1].

The main objective of this study is to develop a moving stage to which specimen slides can be automatically returned for reexamination. Such automation technologies have dramatically increased the accuracy of measurement results and contributed greatly to the modernization of medical testing and care.

2. Materials and Methods
Sample preparation:
2.1 A blood smear sample is prepared, and the microscope working area is set up at 1000x magnification with a 0.2 mm dimension [2], as shown in Figure 2, so that this area of the specimen slide can be viewed during examination without sliding the slide.

Figure 2. Microscope working area

2.2 The scanning area of the microscope moving stage is set to 40 x 26 mm, as shown in Figure 3. The opening in the specimen stage is made as large as possible so that it exposes the full width of the specimen slide.

Figure 3. Scanning area
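With the 40 x 26 mm scanning area of Section 2.2 and the 0.2 mm working-area dimension of Section 2.1, covering the whole area takes 200 x 130 = 26,000 fields of view. The paper does not describe its scan path; the sketch below assumes a serpentine raster that reverses direction on every row, so the stage never makes a long return move. Dimensions are in integer micrometres to avoid floating-point rounding, and the function name is illustrative.

```python
def scan_fields(width_um, height_um, field_um):
    """Serpentine (boustrophedon) order of (column, row) field indices covering the area."""
    nx = -(-width_um // field_um)   # ceiling division
    ny = -(-height_um // field_um)
    order = []
    for row in range(ny):
        cols = range(nx) if row % 2 == 0 else range(nx - 1, -1, -1)
        order.extend((col, row) for col in cols)
    return order

path = scan_fields(40_000, 26_000, 200)  # 40 x 26 mm area, 0.2 mm fields
print(len(path), path[:3], path[198:202])
```

Each step along the path moves the stage by one field in X, or by one field in Y at the end of a row, which maps directly onto a fixed number of stepping-motor steps per field.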

2.3 Automatic sequential examination of a group of microscope specimen slides comprises the following:
2.3.1 Moving stage design: The apparatus comprises a substage drive that moves the stage in a horizontal plane, and further positioning means that support the translation means and can move the stage, with a specimen slide supported on it, vertically, as shown in Figure 4.

Figure 4. Moving stage design

2.3.2 Digital Electronics Design:

The digital electronics architecture has two main functional blocks: a Master Board and a Slave Board. The ICP (Instrument Control Processor) uses a PIC 16F873 processor to perform all instrument control and event processing functions, as shown in Figure 5. The ICP is responsible for the following tasks: processing commands; monitoring the source and adjusting the LCD readout mode as required; and calculating and transmitting centroid positions [4],[5],[6].

Figure 5. Digital Electronics design

Figure 5 illustrates the overall digital electronics, which use two microcontrollers communicating over a synchronous Serial Peripheral Interface (SPI).

2.3.3 Microcontroller in Slave Board:

2.3.3.1 Encoder: sequential logic is used to control the moving stage. The encoder signals, whose characteristics are shown in Figure 6, are read by the PIC 16F873 microcontroller via port RA0-RA3. From these signals, the stage movement is classified into three states, as shown in Figure 7: (1) no movement, (2) increasing distance, and (3) decreasing distance.

Figure 6. The characteristic of encoder 2 signal

Figure 7. Logical control
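The text gives the three movement states but not the decoding logic of Figures 6 and 7. Assuming the encoder delivers two quadrature channels A and B that follow a 2-bit Gray code, one common way to classify each sampled transition is sketched below; the channel order and which direction counts as "increase" are assumptions.

```python
# Assumed Gray-code order of the (A, B) channel pair when the distance increases.
SEQUENCE = [(0, 0), (0, 1), (1, 1), (1, 0)]

NO_MOVEMENT, INCREASE, REDUCE = 0, +1, -1

def classify(prev, curr):
    """Map one sampled transition of the two encoder channels to a movement state."""
    step = (SEQUENCE.index(curr) - SEQUENCE.index(prev)) % 4
    if step == 0:
        return NO_MOVEMENT
    if step == 1:
        return INCREASE
    if step == 3:
        return REDUCE
    raise ValueError("both channels changed at once; the inputs must be sampled faster")

def position(samples):
    """Accumulate the movement states over a sequence of (A, B) samples."""
    return sum(classify(p, c) for p, c in zip(samples, samples[1:]))

print(position([(0, 0), (0, 1), (1, 1), (1, 0), (1, 1)]))  # 2
```

A Gray code changes only one channel per step, so a transition in which both channels change at once can only mean a missed sample, which the sketch reports as an error instead of guessing a direction.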

2.3.3.2 Stepping motor: the stepping motor that drives the moving stage is controlled by the PIC 16F873 microcontroller via port RB0-RB7, working together with a ULN2803 driver IC, as shown in Figure 8.


Figure 8. Characteristics of stepping motor control
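The ULN2803 provides eight Darlington sink drivers, matching the eight RB0-RB7 lines. The sketch below assumes two 4-phase unipolar motors with a hypothetical pin mapping (X coils on RB0-RB3, Y coils on RB4-RB7) driven with the standard full-step, two-phases-on sequence. It only computes the byte pattern; real firmware would write each byte to PORTB with a delay between steps.

```python
# Full-step, two-phases-on sequence for a 4-phase unipolar motor (one bit per coil).
FULL_STEP = [0b0011, 0b0110, 0b1100, 0b1001]

def portb_value(x_phase, y_phase):
    """Hypothetical PORTB byte: X motor coils on RB0-RB3, Y motor coils on RB4-RB7."""
    return (FULL_STEP[y_phase % 4] << 4) | FULL_STEP[x_phase % 4]

def step_sequence(x_phase, y_phase, dx, dy):
    """PORTB bytes that move X by dx and then Y by dy full steps
    (negative values reverse the direction), plus the final phases."""
    out = []
    for _ in range(abs(dx)):
        x_phase = (x_phase + (1 if dx > 0 else -1)) % 4
        out.append(portb_value(x_phase, y_phase))
    for _ in range(abs(dy)):
        y_phase = (y_phase + (1 if dy > 0 else -1)) % 4
        out.append(portb_value(x_phase, y_phase))
    return out, x_phase, y_phase

bytes_out, xp, yp = step_sequence(0, 0, 2, 0)
print([hex(b) for b in bytes_out], xp, yp)  # ['0x36', '0x3c'] 2 0
```

Keeping the current phase of each motor lets consecutive moves continue the sequence without a jump, which matters for returning a slide to a stored position.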

2.3.3.3 Serial Peripheral Interface: the slave-board microcontroller (PIC 16F873) communicates through its Master Synchronous Serial Port (MSSP) module in SPI mode, as shown in Figure 9.

Figure 9. Serial Peripheral Interface (SPI)
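In SPI, the master's and the slave's 8-bit shift registers (SSPSR in the PIC MSSP module, loaded from and unloaded into SSPBUF) are connected in a ring through MOSI and MISO, so every transfer exchanges one byte in each direction. A minimal bit-level simulation, assuming MSB-first transfer:

```python
def spi_exchange(master_byte, slave_byte, bits=8):
    """Simulate one SPI transfer: the two shift registers swap contents after 8 clocks."""
    m, s = master_byte & 0xFF, slave_byte & 0xFF
    for _ in range(bits):
        mosi = (m >> 7) & 1              # master drives MOSI with its MSB
        miso = (s >> 7) & 1              # slave drives MISO with its MSB
        m = ((m << 1) & 0xFF) | miso     # master shifts in the slave's bit
        s = ((s << 1) & 0xFF) | mosi     # slave shifts in the master's bit
    return m, s

print([hex(v) for v in spi_exchange(0xA5, 0x3C)])  # ['0x3c', '0xa5']
```

Because the exchange is symmetric, the master must clock out a byte (even a dummy one) to read a byte from the slave board.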

2.3.4 Microcontroller in Master Board
2.3.4.1 Keypad input via RB1-RB7, arranged as a 4 x 3 matrix.
2.3.4.2 Result display on a liquid crystal display (LCD) via RA0-RA5.
2.3.4.3 Serial Peripheral Interface via the SCK port.
2.3.4.5 Data communication using SPI, as shown in Table 1.

Table 1: Data communication using SPI

The main menu of the control program, used to control the microscope stage, is designed as shown in Figure 10.

Figure 10. Main menu of control program (LCD screens include the start-up screen "Microscope Scan. Version 1.00", the main menu "1:Home 2:Manual 3:Scan 4:Config", X/Y position displays, scan start and final positions, and time and space options under Config.)

3. RESULTS AND DISCUSSION
The test points and their results are shown in Table 2.

The microscopic examination of the specimen slide can take place either visually or automatically. Motorized microscope components and accessories enable the investigator to automate live-cell image acquisition and are particularly useful for time-lapse experiments (about 20 milliseconds) [7],[8]. For this purpose, the X and Y positioning systems can be controlled manually or automatically. Thus, the specimen slide carried by the stage may be moved to any desired location relative to the optical axis by actuating the Y-axis and X-axis drives. For automatic examination, the drives are energized under scan or other program control. Finally, the specimen slide can be automatically returned to the microscope stage for reexamination with 86.03% accuracy.

Table 2: Sample testing results

Status: 1a, 1b, 2a, 2b | Master: SSPBUF, SSPSR | Slave: SSPBUF, SSPSR


4. Conclusion

After the examination of a particular series of specimen slides has been completed, any individual specimen slide that requires re-examination can be fed automatically back into the microscope viewing optics for further examination, either by operator signals or by predetermined control signals [9].

Upon completion of the examination of a slide, the horizontal positioning Y-axis drive returns the specimen slide on the stage to its original position. The accuracy of this equipment in returning a specimen slide for reexamination is 86.03%.

Acknowledgements

This project is supported by University of the Thai Chamber of Commerce grant.

References
[1] C. R. David, Microscopy and related methods, http://www.ruf.rice.edu/~bioslabs/methods/microscopy/microscopy.html

[2] Robert H. et al., Handbook of Hematologic Pathology, Marcel Dekker, Inc., 2000.

[3] Qin Zhang et al., "A Prototype Neural Network Supervised Control System for Bacillus thuringiensis Fermentations," Biotechnology and Bioengineering, vol. 43, pp. 483-489, 1994.

[4] N. Armenise et al., "High-speed particle tracking in nuclear emulsion by last generation automatic microscopes," accepted for publication in Nucl. Instr. Meth. A, 2005.

[5] Powell, Fowler and Perkins, The Study of Elementary Particles by the Photographic Method, Pergamon Press, 1959.

[6] W. H. Barkas, Nuclear Research Emulsion, Academic Press Inc. (London) Ltd., 1963.

[7] John F. Reid et al., "A Vision-based System for Computer Control and Data Acquisition in Fermentation Processes," 38th Food Technology Conference, 1992.

[8] J. M. Shine, Jr., et al., "Digital Image Analysis System for Determining Tissue-Blot Immunoassay Results for Ratoon Stunting Disease of Sugarcane," Plant Disease, vol. 77, no. 5, pp. 511-513, 1993.

[9] Jinlian Ren et al., "Knowledge-based Supervision and Control of Bioprocess with a Machine Vision-based Sensing System," Journal of Biotechnology, vol. 36, pp. 25-34, 1994.

Authors Profile Suwannee Adsavakulchai received the M.S. degree in Computer Information Systems from Assumption University in 1994 and the Doctor of Technical Science degree from the Asian Institute of Technology in 2000. She now works as a lecturer in the Department of Computer Engineering, School of Engineering, University of the Thai Chamber of Commerce.


A Large Block Cipher Involving a Key Applied on Both the Sides of the Plain Text

V. U. K. Sastry1, D. S. R. Murthy2, S. Durga Bhavani3

1Dept. of Computer Science & Engg., SNIST, Hyderabad, India

2Dept. of Information Technology, SNIST, Hyderabad, India

3School of Information Technology, JNTUH, Hyderabad, India

Abstract: In this paper, we develop a block cipher by modifying the Hill cipher. In this cipher, the plain text matrix P is multiplied on both sides by the key matrix. Here, the size of the key is 512 bits and the size of the plain text block is 2048 bits. As the procedure adopted here is iterative, and as no direct linear relation between the cipher text C and the plain text P can be obtained, the cipher cannot be broken by any cryptanalytic attack.

Keywords: Block Cipher, Modular arithmetic inverse, Plain text, Cipher text, Key.

1. Introduction

The study of block ciphers, which was initiated several centuries back, gained considerable impetus in the last quarter of the last century. Noting that diffusion and confusion play a vital role in a block cipher, Feistel et al. [1], [2] developed a block cipher called the Feistel cipher. In his analysis, he pointed out that the strength of the cipher increases with the block size, the key size, and the number of rounds in the iteration. The popular cipher DES [3], developed in 1977, has a 56-bit key and a 64-bit plain text block. The variants of DES are double DES and triple DES. In double DES, the plain text block is 64 bits and the key is 112 bits. In triple DES, the key is 168 bits long and the plain text block is 64 bits. At the beginning of this century, noting that the 64-bit block size is a drawback of DES, Joan Daemen and Vincent Rijmen developed a new block cipher called AES [4], wherein the plain text block is 128 bits and the key is 128, 192, or 256 bits long. Subsequently, several researchers [5]–[9] modified the Hill cipher to develop various cryptographic algorithms wherein the key length and the plain text block size are quite significant. In the present paper, our objective is to develop a block cipher wherein the key size and the block size are significantly large. Here, we use the Gauss reduction method to obtain the modular arithmetic inverse of a matrix. The plan of the paper is as follows. In section 2, we discuss the development of the cipher. In section 3, we illustrate the cipher by considering an example. In section 4, we deal with the cryptanalysis of the cipher. Finally, in section 5, we present the computations and arrive at the conclusions.

2. Development of the cipher

Consider a plain text P which can be represented in the form of a square matrix given by

$$P = [P_{ij}], \quad i = 1 \text{ to } n, \; j = 1 \text{ to } n, \tag{2.1}$$

where each Pij is a decimal number which lies between 0 and 255. Let us choose a key k consisting of a set of integers which lie between 0 and 255. Let us generate a key matrix, denoted as K, given by

$$K = [K_{ij}], \quad i = 1 \text{ to } n, \; j = 1 \text{ to } n, \tag{2.2}$$

where each Kij is also an integer in the interval [0, 255]. Let

$$C = [C_{ij}], \quad i = 1 \text{ to } n, \; j = 1 \text{ to } n \tag{2.3}$$

be the corresponding cipher text matrix. The process of encryption and the process of decryption applied in this analysis are given in Fig. 1.

Figure 1. Schematic Diagram of the cipher

Here r denotes the number of rounds. In the process of encryption, we use an iterative procedure which includes the relations

$$P = (K P K) \bmod 256, \tag{2.4}$$

$$P = \mathrm{Mix}(P), \tag{2.5}$$

and

$$P = P \oplus K. \tag{2.6}$$

The relation (2.4) causes diffusion, while (2.5) and (2.6) lead to confusion. Thus, these three relations enhance the strength of the cipher. Let us now consider Mix(P). Here, the decimal numbers in P are converted into their binary form, which gives a matrix of size n x 8n:

$$
\begin{bmatrix}
P_{111} & P_{112} & \cdots & P_{118} & \cdots & P_{1n1} & \cdots & P_{1n8} \\
P_{211} & P_{212} & \cdots & P_{218} & \cdots & P_{2n1} & \cdots & P_{2n8} \\
\vdots  &         &        &         &        &         &        & \vdots  \\
P_{n11} & P_{n12} & \cdots & P_{n18} & \cdots & P_{nn1} & \cdots & P_{nn8}
\end{bmatrix}
$$

Here, P111, P112, …, P118 are the binary bits corresponding to P11; similarly, Pij1, Pij2, …, Pij8 are the binary bits representing Pij. The above matrix can be read as a single string in a row-wise manner. As the length of this string is 8n², it is divided into n² substrings, each 8 bits long. If n² is divisible by 8, we focus our attention on the first 8 substrings. We place the first bits of these 8 binary substrings, in order, at one place and form a new binary substring. Similarly, we assemble the second bits of the 8 substrings and form the second new binary substring. Following the same procedure, we obtain six more binary substrings. Continuing in the same way with the next group of 8 substrings, we exhaust all the binary substrings obtained from the plain text. However, if n² is not divisible by 8, then we consider the remnant of the string and divide it into two halves. We then mix these two halves by placing the first bit of the second half just after the first bit of the first half, the second bit of the second half next to the second bit of the first half, and so on. Thus we get a new binary substring corresponding to the remaining string. This completes the process of mixing. To perform the exclusive-or operation in P = P ⊕ K, we write both matrices, P and K, in binary form and carry out the XOR operation between the corresponding binary bits. In the process of decryption, the function IMix represents the reverse process of Mix. In what follows, we present the algorithms for encryption and decryption. We also provide an algorithm for finding the modular arithmetic inverse of a square matrix.

Algorithm for Encryption

1. Read n, P, K, r
2. for i = 1 to r
   {
     P = (K P K) mod 256
     P = Mix (P)
     P = P ⊕ K
   }
3. C = P
4. Write (C)

Algorithm for Decryption

1. Read n, C, K, r
2. K⁻¹ = Inverse (K)
3. for i = 1 to r
   {
     C = C ⊕ K
     C = IMix (C)
     C = (K⁻¹ C K⁻¹) mod 256
   }
4. P = C
5. Write (P)

Algorithm for Inverse (K)

// The arithmetic inverse (A⁻¹) and the determinant of the matrix (∆) are obtained by the Gauss reduction method.
1. A = K, N = 256
2. A⁻¹ = [Aji] / ∆, i = 1 to n, j = 1 to n
   // Aji are the cofactors of aij, where aij are the elements of A, and ∆ is the determinant of A
3. for i = 1 to N – 1
   {
     if ((i ∆) mod N = 1) { d = i; break; }
   }
   // d exists only when ∆ and N are coprime, i.e. when ∆ is odd for N = 256
4. B = [d Aji] mod N
   // B is the modular arithmetic inverse of A

3. Illustration of the cipher

Let us consider the following plain text.

No country wants to bring in calamities to its own people. If the people do not have any respect for the country, then the Government has to take appropriate measures and take necessary action to keep the people in order. No country can excuse the erratic behaviour of the people, even though something undue happened to them in the past. Take the appropriate action in the light of this fact. Invite all the people to come into the fold of the Government. Try to persuade them as far as possible. Let us see!! (3.1)

Let us focus our attention on the first 256 characters of the above plain text, which are given by

No country wants to bring in calamities to its own people. If the people do not have any respect for the country, then the Government has to take appropriate measures and take necessary action to keep the people in order. No country can excuse the erratic (3.2)

On using the EBCDIC code, we get 256 numbers corresponding to the 256 characters. Now, on placing 16 numbers in each row, we get the plain text matrix P in the decimal form

Obviously, here the length of the plain text block is 16 x 16 x 8 (2048) bits.
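The following minimal sketch shows how a 256-character block can be turned into the 16 × 16 matrix P. It assumes EBCDIC code page 037, which is Python's built-in `cp037` codec. The paper does not name the code page, but in this one 'y' and 'z' map to 168 and 169, as stated later in this section. The function name `text_to_matrix` is our own.

```python
def text_to_matrix(text, n=16, codepage="cp037"):
    """Encode `text` with an EBCDIC code page and place n codes in each row."""
    codes = list(text.encode(codepage))  # one byte per character
    if len(codes) != n * n:
        raise ValueError(f"expected {n * n} characters, got {len(codes)}")
    return [codes[r * n:(r + 1) * n] for r in range(n)]
```

For the block (3.2), `text_to_matrix(block)` returns 16 rows of 16 integers, i.e. 16 × 16 × 8 = 2048 bits.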


Let us choose a key k consisting of 64 numbers. This can be written in the form of a matrix given by

As each of the 64 numbers occupies 8 bits, the length of the secret key (which is to be transmitted) is 512 bits. On using this key, we can generate a new key K in the form

where U = Qᵀ, in which ᵀ denotes the transpose of a matrix, and R and S are obtained from Q and U as follows. On interchanging the 1st row and the 8th row of Q, the 2nd row and the 7th row of Q, and so on (that is, on reversing the order of the rows of Q), we get R. Similarly, we obtain S from U. Thus, we have

whose size is 16 x 16. On using the algorithm for the modular arithmetic inverse (see Section 2), we get

On using (3.6) and (3.7), it can be readily shown that

$$K K^{-1} \bmod 256 = K^{-1} K \bmod 256 = I. \tag{3.8}$$

On applying the encryption algorithm described in Section 2, we get the cipher text C in the form

On using (3.7) and (3.9) and applying the decryption algorithm presented in Section 2, we get back the plain text P, which is the same as (3.3). Let us now examine the avalanche effect. To this end, we focus our attention on the plain text (3.2) and change the 88th character 'y' to 'z'. The plain text then changes in only one binary bit, as the EBCDIC code of y is 168 and that of z is 169.

On using the encryption algorithm, we get the cipher text C corresponding to the modified plain text (wherein y is replaced by z) in the form

On comparing (3.9) and (3.10), we find that the two cipher texts differ in 898 bits out of 2048 bits, which is quite considerable. However, it may be mentioned here that the impact of changing 1 bit is not that pronounced, as the plain text is very large; even so, it is remarkable. Now let us change the key K given in (3.6) by one binary bit. To this end, we replace the 60th element, 5, by 4. Then, on using the original plain text given by (3.3), we get C in the form

On comparing (3.9) and (3.11), we find that the cipher texts differ in 915 bits out of 2048 bits. From the above analysis, we find that the avalanche effect is quite pronounced and shows clearly that the cipher is a strong one.
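Bit counts like those reported above (898 and 915 differing bits out of 2048, i.e. about 44% and 45%) can be computed with a helper like the following. `bit_difference` and `avalanche_ratio` are hypothetical names, and the matrices are assumed to hold integers in [0, 255].

```python
def bit_difference(A, B):
    """Number of bit positions in which two equal-sized byte matrices differ."""
    return sum(bin(a ^ b).count("1")
               for row_a, row_b in zip(A, B)
               for a, b in zip(row_a, row_b))

def avalanche_ratio(A, B):
    """Fraction of all bits that differ (8 bits per matrix entry)."""
    total_bits = 8 * sum(len(row) for row in A)
    return bit_difference(A, B) / total_bits
```

For two 16 × 16 cipher text matrices, a ratio close to 0.5 indicates a strong avalanche effect.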

4. Cryptanalysis In the literature of cryptography, it is well known that the different types of attacks for breaking a cipher are:

(1) Cipher text only attack, (2) Known plain text attack, (3) Chosen plain text attack, (4) Chosen cipher text attack.

In the first attack, the cipher text is known to us together with the algorithm. In this case, we can determine the plain text only if the key can be found. As the key contains 64 decimal numbers of 8 bits each, the key space is of size $2^{512} \approx (10^3)^{51.2} = 10^{153.6}$ (using $2^{10} \approx 10^3$; more precisely, $2^{512} \approx 1.34 \times 10^{154}$), which is very large. Hence, the cipher cannot be broken by the brute force approach. We know that the Hill cipher [1] can be broken by the known plain text attack, as there is a direct linear relation between C and P. But in the present modification, as the iterative scheme involves nonlinear relations, C can never be expressed directly in terms of P, and thus P cannot be determined by any means in terms of the other quantities. Hence, this cipher cannot be broken by the known plain text attack.
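The following sketch illustrates why a single linear relation is weak. It recovers a classical 2 × 2 Hill cipher key modulo 26 from one known plain text/cipher text pair, using K = C P⁻¹ (mod 26). The key [[3, 3], [2, 5]] and the message "HELP" (letters mapped A = 0, …, Z = 25, taken as column pairs) are hypothetical examples. The code needs Python 3.8 or later for `pow(x, -1, m)`.

```python
def inv2x2_mod(M, m):
    """Inverse of a 2x2 integer matrix modulo m (raises ValueError if none exists)."""
    (a, b), (c, d) = M
    det_inv = pow((a * d - b * c) % m, -1, m)
    return [[(d * det_inv) % m, (-b * det_inv) % m],
            [(-c * det_inv) % m, (a * det_inv) % m]]

def matmul_mod(A, B, m):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) % m
             for j in range(len(B[0]))] for i in range(len(A))]

def recover_hill_key(P, C, m=26):
    """Known plain text attack: C = K P (mod m) gives K = C P^-1 (mod m)."""
    return matmul_mod(C, inv2x2_mod(P, m), m)

K = [[3, 3], [2, 5]]           # hypothetical secret key
P = [[7, 11], [4, 15]]         # columns (H, E) and (L, P)
C = matmul_mod(K, P, 26)       # intercepted cipher text
```

`recover_hill_key(P, C)` returns the secret key exactly. The iterative scheme (2.4) to (2.6) is designed to prevent this one-step inversion.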


As there are three relations, which are typical in nature, in the iterative process for finding C, no special choice of either the plain text or the cipher text or both can be conceived to break the cipher.

5. Conclusions

In the present paper, we have developed a large block cipher by modifying the Hill cipher. The Hill cipher is governed by the single linear relation

$$C = (K P) \bmod 26, \tag{5.1}$$

while the present cipher is governed by an iterative scheme which includes the relations

$$P = (K P K) \bmod 256, \tag{5.2}$$

$$P = \mathrm{Mix}(P), \tag{5.3}$$

and

$$P = P \oplus K, \tag{5.4}$$

followed by

$$C = P. \tag{5.5}$$

In the case of the Hill cipher, we are able to break the cipher, as there is a direct linear relation between C and P. On the other hand, as we cannot obtain a direct relation between C and P in the present cipher, it cannot be broken by the known plain text attack. By decomposing the entire plain text given by (3.1) into blocks of 256 characters each, the corresponding cipher text can be obtained in decimal form. The first block is already presented in (3.9), and the rest of the cipher text is given by

In this analysis, the length of the plain text block is 2048 bits and the length of the key is 512 bits. As the cryptanalysis clearly indicates, this cipher is a strong one and it cannot be broken by any cryptanalytic attack. This analysis can be extended to a block of any size by using the concept of interlacing [5].

References

[1] Feistel H, "Cryptography and Computer Privacy", Scientific American, May 1973.

[2] Feistel H, Notz W, Smith J, "Some Cryptographic Techniques for Machine-to-Machine Data Communications", Proceedings of the IEEE, Nov. 1975.

[3] William Stallings, Cryptography and Network Security: Principles and Practice, Third Edition, Pearson, 2003.

[4] Daemen J, Rijmen V, "Rijndael: The Advanced Encryption Standard", Dr. Dobb's Journal, March 2001.

[5] V. U. K. Sastry, V. Janaki, "On the Modular Arithmetic Inverse in the Cryptology of Hill Cipher", Proceedings of North American Technology and Business Conference, Sep. 2005, Canada.

[6] V. U. K. Sastry, S. Udaya Kumar, A. Vinaya Babu, "A Large Block Cipher using Modular Arithmetic Inverse of a Key Matrix and Mixing of the Key Matrix and the Plaintext", Journal of Computer Science 2 (9), 698 – 703, 2006.

[7] V. U. K. Sastry, V. Janaki, "A Block Cipher Using Linear Congruences", Journal of Computer Science 3 (7), 556 – 561, 2007.

[8] V. U. K. Sastry, V. Janaki, "A Modified Hill Cipher with Multiple Keys", International Journal of Computational Science, Vol. 2, No. 6, 815 – 826, Dec. 2008.

[9] V. U. K. Sastry, D. S. R. Murthy, S. Durga Bhavani, "A Block Cipher Involving a Key Applied on Both the Sides of the Plain Text", International Journal of Computer and Network Security (IJCNS), Vol. 1, No. 1, pp. 27 – 30, Oct. 2009.

Authors Profile Dr. V. U. K. Sastry is presently working as Professor in the Dept. of Computer Science and Engineering (CSE), Director (SCSI), and Dean (R & D), SreeNidhi Institute of Science and Technology (SNIST), Hyderabad, India. He was formerly a Professor at IIT Kharagpur, India, where he worked during 1963 – 1998. He has guided 12 PhDs and published more than 40 research papers in various international journals. His research interests are Network Security & Cryptography, Image Processing, Data Mining and Genetic Algorithms. Dr. S. Durga Bhavani is presently working as Professor in the School of Information Technology (SIT), JNTUH, Hyderabad, India. Her research interest is Image Processing. Mr. D. S. R. Murthy obtained his B. E. (Electronics) from Bangalore University in 1982 and his M. Tech. (CSE) from Osmania University in 1985, and has been pursuing a Ph.D. at JNTUH, Hyderabad, since 2007. He has been working as Professor in the Dept. of Information Technology (IT), SNIST, since Oct. 2004. He earlier worked as Lecturer in CSE, NIT (formerly REC), Warangal, India during Sep. 1985 – Feb. 1993, as Assistant Professor in CSE, JNTUCE, Anantapur, India during Feb. 1993 – May 1998, as Academic Coordinator, ISM, Icfaian Foundation, Hyderabad, India during May 1998 – May 2001, and as Associate Professor in CSE, SNIST during May 2001 – Sept. 2004. He worked as Head of the Dept. of CSE, JNTUCE, Anantapur during Jan. 1996 – Jan. 1998, and of the Dept. of IT, SNIST during Apr. 2005 – May 2006 and Oct. 2007 – Feb. 2009. He is a Fellow of IE(I), Fellow of IETE, Senior Life Member of CSI, Life Member of ISTE, Life Member of SSI, DOEACC Expert member, and Chartered Engineer (IE(I) & IETE). He has published a textbook on C Programming & Data Structures. His research interests are Image Processing and Image Cryptography.


Electrocardiogram Prediction Using Error Convergence-type Neuron Network System

Shunsuke Kobayakawa1 and Hirokazu Yokoi1

1Graduate School of Life Science and Systems Engineering, Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu-shi, Fukuoka 808-0196, Japan {kobayakawa-shunsuke@edu., yokoi@}life.kyutech.ac.jp

Abstract: The output error of a neuron network cannot converge to zero, even if training of the neuron network is iterated many times. The “Error Convergence-type Neuron Network System” has been proposed to overcome this problem. The output error of the proposed neuron network system converges to zero as the number of neuron networks in the system increases without bound. A predictor was constructed using the system and applied to electrocardiogram prediction. The purpose of this paper is to verify the validity of this predictor for the electrocardiogram. The neuron networks in the system were trained for 30,000 cycles. As a result, the average root mean square error of the first neuron network was 2.60 × 10⁻², and that of the second neuron network was 1.17 × 10⁻⁵. Prediction without error was attained by this predictor, confirming its validity.

Keywords: Volterra neuron network, Predictor, Error free, Electrocardiogram.

1. Introduction

A neuron network (NN) cannot learn if there is no correlation between the input signals to each layer and its teacher signals. Therefore, when correlated and uncorrelated components are intermingled between them, the uncorrelated components cannot be learned. Moreover, an NN whose learning capability is low cannot completely learn even the correlated components. Raising the learning capability of the NN could be a means of learning these correlated components completely; however, no such NN exists. Various studies have been actively carried out to raise the output accuracy of NNs. Improvements to the I/O characteristics of a neuron [1-11,17], the structure of the NN [13-23] and the NN system [24] are effective means of raising output accuracy. However, the output errors of these NNs cannot converge to zero, even if their training is iterated many times. Therefore, NNs are usually used with an output error within a tolerance. This is insufficient, however, when applying NNs to predictive coding [10-12] and to flight control of aircraft [18,19], spacecraft and the like, where a smaller output error is desirable.

An NN system that may solve this problem by using plural NNs, even when each NN has a low learning capability, has therefore been proposed. S. Kobayakawa [25] proposed the “Error Convergence Method for Neuron Network System (ECMNNS)”, by which the output error of a single-output NN system using multi-step NNs converges, and the “Error Convergence Neuron Network System (ECNNS)”, which is designed using this method. The output error of ECNNS theoretically converges to zero as the number of NNs in it increases infinitely. However, as ECNNS has a single output, it must be adapted for use with plural outputs. The highest output accuracy of an NN system cannot be expected even if ECMNNS is simply applied to a BP network (BPN) [26], because a BPN has the problem of mutual interference in learning between outputs [18-22]. Therefore, the “Error Convergence Parallel-type Neuron Network System (ECPNNS)” has been designed to deal with plural outputs; it applies ECNNS to a parallel-type NN, which does not have the above-mentioned problem and whose output accuracies are high. Furthermore, the “Error Convergence-type Recurrent Neuron Network System (ECRNNS)”, which applies ECNNS, and the “Error Convergence Parallel-type Recurrent Neuron Network System (ECPRNNS)”, which applies ECPNNS, have also been designed. It has been shown theoretically that they raise the output accuracy of NN systems.

In general, when a BPN learns a time-series signal from a teacher signal and input signals, two or more teacher signal values occasionally correspond to the same values of the input signals. In such a case, the BPN cannot learn and therefore cannot be used for ECNNS. Input-delay NNs and Volterra NNs (VNNs) are means of eliminating this problem, and they are used in conventional research on the compression of nonlinear signals by predictive coding [10,27]. Learning for a nonlinear predictor using NNs becomes easier as the causality between the signals from past to present and the prediction signal is strengthened. Therefore, learning for the NN at the first step in ECNNS is comparatively easy. However, learning for NNs at higher steps in ECNNS is difficult, because the causality weakens as the step number rises. ECNNS was therefore redesigned to improve its learning capability: the NN at each step in ECNNS is used as a predictor, which strengthens the causality between its input signal and its teacher signal. The resulting predictor using ECNNS [28] is called the “Error Convergence-type Predictor (ECP)”.

The purpose of this paper is to verify the validity of an ECP with rounding, constructed of two steps of 2nd-order VNNs (2VNNs), for a normal sinus rhythm electrocardiogram (ECG). This ECP is called the “Error Convergence-type 2nd-order Volterra Predictor (EC2VP)”. As a result, prediction without error was attained by this predictor, confirming its validity.

2. Single Output Error Convergence-type Neuron Network System

2.1 Principle

Consider a single-output NN whose output error with respect to the teacher signal does not converge to zero, although it becomes smaller than before training, even when training is executed until it converges for all input signals correlated with the teacher signal.

Training is first executed by this NN. Next, another NN is trained using, as its teacher signal, the error signal obtained from the output signal and the teacher signal of the first NN. Even if the error signal obtained from training the previous NN is learned by another NN in this way, one after another, the error of the output signal with respect to the teacher signal is not expected to converge to zero. That is, the difference between the sum of the output signals of all NNs and the teacher signal of the first NN does not converge to zero. This is shown in expressions (1) to (4).

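$$y_i = z_i + \varepsilon_i \quad (i = 1, 2, \dots, n) \tag{1}$$

$$\varepsilon_i = y_{i+1} \quad (i = 1, 2, \dots, n-1) \tag{2}$$

$$y_1 = \sum_{i=1}^{n} z_i + \varepsilon_n \tag{3}$$

$$\lim_{n \to \infty} \varepsilon_n \neq 0 \tag{4}$$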

where y is the teacher signal, z is the output signal, ε is the output error, and the subscript of each symbol is the step number of the NN.

Moreover, training on the error signals used as teacher signals for the NNs at the second and later steps is expected to become difficult, because the error signals become smaller as the number of steps increases. To overcome this problem, amplified error signals are used as the teacher signals for the NNs at the second and later steps, which makes this training easier. If the amplification factor is larger than 1, the output error of an NN after training is reduced when its output is restored to the signal level before amplification, and this error approaches zero when the amplification factor is very large.

Here, let ε be the output error obtained from training the NN at the nth step, whose teacher signal is the error signal amplified by the factor $A_n$. The output error $\varepsilon_n$ with respect to the teacher signal given to the whole NN system is then given by expressions (5) and (6). When the number of NN steps increases infinitely, expressions (7) and (8) hold, and the output error converges to zero. Therefore, from expressions (3) and (8), the difference between the sum of the restored output signals of all NNs and the teacher signal given to the whole NN system converges to zero as the number of steps increases infinitely, i.e. the two become equal, as shown in expression (9). This means of overcoming the above-mentioned problem is thus effective and necessary to obtain a highly accurate output. It is called ECMNNS, and an NN system that applies it is called ECNNS.

$$\varepsilon_n = \frac{\varepsilon}{A_n} \tag{5}$$

$$\varepsilon_n < \varepsilon \tag{6}$$

$$\lim_{n \to \infty} A_n = \infty \tag{7}$$

$$\lim_{n \to \infty} \varepsilon_n = \lim_{n \to \infty} \frac{\varepsilon}{A_n} = 0 \tag{8}$$

$$y_1 = \lim_{n \to \infty} \sum_{i=1}^{n} z_i \tag{9}$$

Figure 1. Concept of processing for error convergence-type neuron network system (stages: training, extracting the error, gain tuning to the teacher signal, spreading the error, restoration to an output signal, sum of restored output signals, iteration until the error converges to zero)

2.2 Single Output Neuron Network System

Figure 1 shows the concept of processing for ECNNS, and Figure 2 shows the ECNNS designed on the basis of this concept. The symbol NN in this figure also includes the state of a neuron. Any NN, together with any learning rule capable of achieving the I/O relation required of each internal NN, can be built into this ECNNS. Here, the general I/O characteristics of ECNNS are discussed without reference to the I/O characteristics of the specific NN applied to it.

ECNNS is equipped with amplifiers that tune the amplitudes of the input signals and the teacher signal for the NN at each step, so that appropriate training can be executed. ECNNS is also equipped, at the output of each NN, with a restoration amplifier whose amplification factor is the reciprocal of the factor used to amplify that NN's teacher signal, to restore its output signal level. The I/O characteristics of ECNNS are shown in expressions (10) to (16), and the relations among the teacher signals are shown in expressions (17) to (20). The amplification factors used in expressions (16), (18) and (19) must satisfy conditions (21) and (22).

$$\mathbf{x} = (x_1, x_2, \dots, x_{n_{\mathrm{in}}}) \tag{10}$$

$$\mathbf{A}_{\mathrm{in}\,i} = (a_{i1}, a_{i2}, \dots, a_{i n_{\mathrm{in}}}) \quad (i = 1, 2, \dots, n) \tag{11}$$

$$x_{Aij} = a_{ij} x_j \quad (i = 1, 2, \dots, n;\ j = 1, 2, \dots, n_{\mathrm{in}}) \tag{12}$$

$$\mathbf{x}_i = (x_{Ai1}, x_{Ai2}, \dots, x_{Ai n_{\mathrm{in}}}) \quad (i = 1, 2, \dots, n) \tag{13}$$

$$z_{Ai} = f_i(\mathbf{x}_i) \quad (i = 1, 2, \dots, n) \tag{14}$$

Figure 2. Error convergence-type neuron network system (block diagram: NN1, NN2, …, NNn, each with input amplifiers Ain i, a teacher-signal amplifier Ai and a restoration amplifier 1/Ai; the restored outputs z1, …, zn are summed to give z)

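$$z = \sum_{i=1}^{n} z_i \tag{15}$$

$$z_i = \frac{z_{Ai}}{A_i} \quad (i = 1, 2, \dots, n) \tag{16}$$

$$y_1 = y \tag{17}$$

$$y_{A1} = A_1 y_1 \tag{18}$$

$$y_{Ai} = A_i \Bigl( y - \sum_{j=1}^{i-1} z_j \Bigr) \quad (i = 2, 3, \dots, n) \tag{19}$$

$$\varepsilon_i = y_{Ai} - z_{Ai} \quad (i = 1, 2, \dots, n) \tag{20}$$

$$A_i \neq 0 \quad (i = 1, 2, \dots, n) \tag{21}$$

$$\lim_{n \to \infty} A_n = \infty \tag{22}$$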

where $\mathbf{x}$ is the input signal vector, $x_j$ is the $j$th input signal, $x_{Aij}$ is the $j$th input signal after amplification at the $i$th step, $\mathbf{x}_i$ is the input signal vector after amplification at the $i$th step, $\mathbf{A}_{\mathrm{in}\,i}$ is the input signal amplification factor vector at the $i$th step, $a_{ij}$ is the amplification factor of the $j$th input signal at the $i$th step, $z_{Ai}$ is the output signal of the NN at the $i$th step, $f_i$ is a function of $n_{\mathrm{in}}$ variables that expresses the I/O relation of the NN at the $i$th step, $y$ is the teacher signal to ECNNS, $z$ is the output signal of ECNNS, $y_{Ai}$ is the teacher signal to the NN at the $i$th step after amplification, $z_i$ is the output signal of the NN at the $i$th step after restoration, $A_i$ is the teacher signal amplification factor for the NN at the $i$th step, and $\varepsilon_i$ is the output error of the NN at the $i$th step.
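The error-convergence principle of expressions (14) to (22) can be checked numerically with a toy model. In this sketch, each NN is replaced by a hypothetical stand-in learner that reproduces its amplified teacher signal only to within ±q/2 (a quantizer with step q). The gain schedule A_i = 10^(i−1) is also an assumption; neither choice comes from the paper. Under these assumptions, the error after n steps is bounded by q/(2A_n), so it shrinks as steps are added. Exact `Fraction` arithmetic keeps floating-point error from blurring the result.

```python
from fractions import Fraction

def ecnns(y, gains, learner):
    """Restored step outputs z_i and final error y - sum(z_i) for one teacher value y."""
    z = []
    for A in gains:
        if A == 0:
            raise ValueError("amplification factors must be nonzero, cf. (21)")
        y_A = A * (y - sum(z))   # (18)/(19): amplified residual teacher signal
        z_A = learner(y_A)       # (14): output of the NN at this step
        z.append(z_A / A)        # (16): restoration by the factor 1/A_i
    return z, y - sum(z)

def coarse_learner(target, q=Fraction(1, 10)):
    """Hypothetical stand-in for a trained NN: accurate only to within q/2."""
    return q * round(target / q)
```

With y = 3.14159 and gains 1, 10, 100, 1000, the error after 1, 2, 3 and 4 steps is 0.04159, 0.00159, −0.00041 and −0.00001, each within its bound q/(2A_n).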

Figure 3. Error convergence parallel-type neuron network system (parallel units ECNNS1, ECNNS2, …, ECNNSm receive the input x; unit i has teacher signal yi and output zi)

3. Plural Outputs Error Convergence-type Neuron Network System

3.1 Principle

Because ECNNS has a single output, ECNNSs are applied to a parallel-type NN when ECNNS is used for an NN system with plural outputs. An NN system of this type is called ECPNNS; it can be regarded as a general form of ECNNS. Figure 3 shows ECPNNS. It consists of as many parallel units applying ECNNS as there are outputs. Because training for each output is executed independently by its own ECNNS, the mutual interference in learning between outputs that occurs in a BPN does not arise, and high output accuracy is therefore expected.

3.2 Plural Outputs Neuron Network System

The I/O characteristics of ECPNNS are shown in expressions (23) to (28), and the relations among the teacher signals are shown in expressions (29) to (32). The amplification factors used in expressions (28), (30) and (31) must satisfy conditions (33) and (34).

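$$\mathbf{A}_{\mathrm{in}\,ij} = (a_{ij1}, a_{ij2}, \dots, a_{ij n_{\mathrm{in}}}) \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{23}$$

$$x_{Aijk} = a_{ijk} x_k \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i;\ k = 1, 2, \dots, n_{\mathrm{in}}) \tag{24}$$

$$\mathbf{x}_{ij} = (x_{Aij1}, x_{Aij2}, \dots, x_{Aij n_{\mathrm{in}}}) \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{25}$$

$$z_{Aij} = f_{ij}(\mathbf{x}_{ij}) \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{26}$$

$$z_i = \sum_{j=1}^{n_i} z_{ij} \quad (i = 1, 2, \dots, m) \tag{27}$$

$$z_{ij} = \frac{z_{Aij}}{A_{ij}} \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{28}$$

$$y_{i1} = y_i \quad (i = 1, 2, \dots, m) \tag{29}$$

$$y_{Ai1} = A_{i1} y_{i1} \quad (i = 1, 2, \dots, m) \tag{30}$$

$$y_{Aij} = A_{ij} \Bigl( y_i - \sum_{k=1}^{j-1} z_{ik} \Bigr) \quad (i = 1, 2, \dots, m;\ j = 2, 3, \dots, n_i) \tag{31}$$

$$\varepsilon_{ij} = y_{Aij} - z_{Aij} \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{32}$$

$$A_{ij} \neq 0 \quad (i = 1, 2, \dots, m;\ j = 1, 2, \dots, n_i) \tag{33}$$

$$\lim_{n_i \to \infty} A_{i n_i} = \infty \quad (i = 1, 2, \dots, m) \tag{34}$$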

where the first subscript of each symbol is the parallel unit number, the second is the step number within the ECNNS, and the third is the input signal number; $x_k$ is the $k$th input signal to ECNNS.

4. Applications

4.1 Simulator

Two application network systems using ECNNS and ECPNNS are presented here. For example, when a simulator for a nonlinear plant is built, control signals and state signals are needed as inputs. Furthermore, the state signals output by the simulator at every moment, which depend on the input signals, are fed back recurrently as the state input signals. For a highly accurate simulator, it is desirable that the simulator output contain no errors. Therefore, a simulator working to a specified number of significant figures is expected to obtain highly accurate output by using a plant model based on ECNNS together with rounding to eliminate the output error. Concretely, for a single state signal, the network system shown in Figure 4, in which the output signal of ECNNS recurs to the input through rounding, is considered as the simulator. This is called ECRNNS. Furthermore, for plural state signals, the network system shown in Figure 5, in which the output signal vector of ECPNNS recurs to the input through rounding, is considered. This is called ECPRNNS. In these figures, y is the teacher signal vector, and z is the output signal vector.
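The role of rounding in ECRNNS can be illustrated with a toy recurrence. The plant `true_plant` and the model `imperfect_model` below are hypothetical. The plant has integer states, and the model has a constant output error of 0.3, which is less than half of the last significant figure. When the model output is rounded before being fed back, the simulated trajectory coincides exactly with the plant. Without rounding, the error is fed back and grows.

```python
def true_plant(x):
    """Hypothetical integer-valued plant: next state from the current state."""
    return (2 * x) % 1009

def imperfect_model(x):
    """Hypothetical trained model whose output error (0.3) is below 0.5."""
    return true_plant(x) + 0.3

def simulate(model, x0, steps, use_rounding):
    """Feed the model output back as the next state input (recurrent use)."""
    states = [x0]
    for _ in range(steps):
        z = model(states[-1])
        states.append(round(z) if use_rounding else z)
    return states
```

Rounding can remove the error only because the model error is kept below half a unit of the specified significant figure. This is exactly the condition that ECNNS is meant to reach.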

4.2 Nonlinear Predictor

A nonlinear predictor used for predictive coding, and its principle, must be improved to obtain high accuracy. Here we describe a means of improving the learning capability of the NN at each step in ECNNS when ECNNS is used as a nonlinear predictor for predictive coding; the result is called ECP. Learning for a nonlinear predictor using NNs becomes easier as the causality between the signals from past to present and the prediction signal is strengthened. Therefore, learning for the NN at the first step in ECNNS is comparatively easy. However, learning for the NNs at higher steps is difficult, because the causality is presumed to weaken as the step number rises. Therefore, the NN at each step in ECNNS is used as a predictor, to strengthen the causality between its input signals and its teacher signal.

Moreover, the learning capability of an NN can be raised by increasing the number of its input signals [29]. Figure 6 shows ECNNS of Figure 2 redesigned to realize this. Accordingly, expression (13) is replaced by expressions (35) to (38); expression (37) gives the initial conditions for the NNs from the second step onward. The I/O relations of the NNs in ECP are given by expression (39), the output signal of ECP by expression (40), and the teacher signal to ECP by expression (41).

$$\mathbf{x}_{A1}(\tau) = \left( x_{A11}(\tau),\, x_{A12}(\tau),\, \ldots,\, x_{A1n_{\mathrm{in}}}(\tau) \right) \tag{35}$$

$$\mathbf{x}_{Ai}(\tau) = \left( A_{\mathrm{ein}\,i}\, x_{ij}(\tau),\, x_{Ai1}(\tau),\, x_{Ai2}(\tau),\, \ldots,\, x_{Ain_{\mathrm{in}}}(\tau) \right), \quad i = 2,3,\ldots,n;\; j = 1,2,\ldots,n_{\mathrm{in}} \tag{36}$$

$$x_{ij}(\tau) = 0, \quad \tau \le 0;\; i = 2,3,\ldots,n;\; j = 1,2,\ldots,n_{\mathrm{in}} \tag{37}$$

$$x_{ij}(\tau) = x_j(\tau) - \sum_{k=1}^{i-1} z_k(\tau-1), \quad \tau > 0;\; i = 2,3,\ldots,n;\; j = 1,2,\ldots,n_{\mathrm{in}} \tag{38}$$

$$z_{Ai}(\tau) = f_i\left( \mathbf{x}_{Ai}(\tau) \right), \quad i = 1,2,\ldots,n \tag{39}$$

$$z(\tau) = \hat{x}_j(\tau+1), \quad j = 1,2,\ldots,n_{\mathrm{in}} \tag{40}$$

$$y(\tau) = x_j(\tau+1), \quad j = 1,2,\ldots,n_{\mathrm{in}} \tag{41}$$

The symbols are as follows:
- x_ij is the input error signal at the ith step for input signal x_j of ECP.
- A_ein i is the amplification factor of the input error signal at the ith step.
- A_i is the amplification factor of the teacher signal at the ith step.
- f_i is the function expressing the I/O relation of the NN at the ith step. It is a function of n_in variables when i = 1 and of n_in + 1 variables when i ≥ 2.
- x̂ is the prediction.
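The forward pass defined by expressions (35) to (40) can be sketched for a single input signal (n_in = 1). This is a minimal sketch under several assumptions:
- Each trained step network is any Python callable (`StepNet`, a hypothetical stand-in for a trained NN).
- The input amplification factors `a_in` are read from the A_in labels in Figure 6.
- Each step output z_Ai is divided by A_i before summation, following the 1/A_i blocks in Figure 6.
- Python index 0 stands for the first sample, so "τ ≤ 0" in (37) becomes "no earlier output yet".

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for a trained step network: maps the amplified input
# vector x_Ai(τ) to z_Ai(τ), as in expression (39).
StepNet = Callable[[Tuple[float, ...]], float]


def ecp_predict(x: Sequence[float], nets: Sequence[StepNet], A: Sequence[float],
                a_in: Sequence[float], A_ein: Sequence[float]) -> List[float]:
    """Return z(τ), the ECP prediction of x(τ+1), for one input signal (n_in = 1)."""
    n = len(nets)
    z_prev = [0.0] * n                # z_k(τ−1) on the signal scale, one per step
    predictions: List[float] = []
    for tau, x_tau in enumerate(x):
        z_now: List[float] = []
        for i in range(n):
            if i == 0:
                inputs: Tuple[float, ...] = (a_in[0] * x_tau,)      # expression (35)
            else:
                # Input error signal, expressions (37)-(38): zero at the first sample,
                # otherwise x(τ) minus the earlier steps' predictions made at τ−1.
                err = x_tau - sum(z_prev[:i]) if tau > 0 else 0.0
                inputs = (A_ein[i] * err, a_in[i] * x_tau)          # expression (36)
            z_now.append(nets[i](inputs) / A[i])   # assumed 1/A_i rescaling (Figure 6)
        predictions.append(sum(z_now))             # z(τ): sum of all step outputs
        z_prev = z_now
    return predictions
```

In a toy check, step 1 simply repeats x(τ) and step 2 repeats its (rescaled) error input. The summed prediction then equals x(τ) plus the last observed error, e.g. `[0.0, 2.0, 3.0, 4.0]` for the ramp `[0, 1, 2, 3]`.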

5. Computer simulations

5.1 2nd-order Volterra Neuron Network

Figure 7 shows the 2nd-order Volterra neuron (2VN) in discrete time. The I/O characteristics of the 2VN are given by expressions (42) to (44).

$$u(\tau) = \sum_{i=1}^{n} w_i(\tau)\, x_i(\tau) \tag{42}$$

$$s(\tau) = \sum_{p=0}^{Q} \sigma_1(p,\tau)\, u(\tau-p) + \sum_{p=0}^{Q} \sum_{q=p}^{Q} \sigma_2(p,q,\tau)\, u(\tau-p)\, u(\tau-q) - h(\tau) \tag{43}$$

$$z(\tau) = f\left( s(\tau) \right) = A \tan^{-1} s(\tau) \tag{44}$$

The symbols are as follows:
- u is the input weighted sum.
- x_i is the ith input signal and w_i is the ith connection weight.
- s is the input sum, D is the delay, and Q is the prediction order.
- σ1 is the prediction coefficient of the 1st-order term. It applies to each signal tapped between the input of the 1st delay and the output of the Qth delay.
- σ2 is the prediction coefficient of the 2nd-order term. It applies to the product of every combination of two of those signals, including a signal combined with itself.
- h is the threshold, z is the output signal, f is the output function, and A is the output coefficient.

The parameters w_i, h, σ1 and σ2 are changed by training.

Figure 4. Error convergence-type recurrent neuron network system (block diagram: the output z of ECNNS is fed back to the input x through a rounding; y is the teacher signal)

Figure 5. Error convergence parallel-type recurrent neuron network system (block diagram: the output z of ECPNNS is fed back to the input x through roundings; y is the teacher signal)

Figure 6. Predictor using error convergence-type neuron network system (block diagram: NN1 to NNn with input amplification factors A_in1 to A_inn, input error amplification factors A_ein2 to A_einn, teacher signal amplification factors A1 to An and their inverses 1/A1 to 1/An, delayed step outputs z_k(τ−1), step errors ε1(τ) to εn(τ), and the summed output z(τ) compared with the teacher signal y(τ))
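The neuron defined by expressions (42) to (44) can be sketched as a small class. This is an inference-only sketch, not the authors' code. It holds w_i, h, σ1 and σ2 fixed instead of updating them by training. It also assumes that u(τ−p) is 0 before the first sample. The class and argument names are illustrative.

```python
import math
from collections import deque
from typing import Deque, Sequence


class SecondOrderVolterraNeuron:
    """Forward pass of a discrete-time 2nd-order Volterra neuron, (42)-(44).

    Parameters are fixed here; in training, w_i, h, sigma1 and sigma2 change
    over time. Values of u before the first sample are taken as 0.
    """

    def __init__(self, w: Sequence[float], sigma1: Sequence[float],
                 sigma2: Sequence[Sequence[float]], h: float, A: float) -> None:
        self.w = list(w)                              # connection weights w_i
        self.Q = len(sigma1) - 1                      # prediction order Q
        self.sigma1 = list(sigma1)                    # sigma1[p], p = 0..Q
        self.sigma2 = [list(row) for row in sigma2]   # sigma2[p][q], read for q >= p
        self.h = h                                    # threshold
        self.A = A                                    # output coefficient
        # Delay line: after each update, u_hist[p] holds u(τ−p).
        self.u_hist: Deque[float] = deque([0.0] * (self.Q + 1), maxlen=self.Q + 1)

    def step(self, x: Sequence[float]) -> float:
        u = sum(wi * xi for wi, xi in zip(self.w, x))            # (42)
        self.u_hist.appendleft(u)                                 # shift the delays
        s = sum(self.sigma1[p] * self.u_hist[p] for p in range(self.Q + 1))
        s += sum(self.sigma2[p][q] * self.u_hist[p] * self.u_hist[q]
                 for p in range(self.Q + 1) for q in range(p, self.Q + 1))
        s -= self.h                                               # (43)
        return self.A * math.atan(s)                              # (44)
```

The double sum with q ≥ p visits each unordered pair of delayed signals once, including squares. This matches the σ2(p, q), q ≥ p, labels in Figure 7.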

Figure 8 shows a three-layer, one-input, one-output 2VNN constructed of 2VNs. This 2VNN is used in ECP.

5.2 Method


Figure 7. 2nd-order Volterra neuron (block diagram: inputs x_1(τ) to x_n(τ), weighted by w_1(τ) to w_n(τ), form u(τ), which passes through delays D giving u(τ−1) to u(τ−Q); the 1st-order coefficients σ1(0) to σ1(Q), the 2nd-order coefficients σ2(p, q) with q ≥ p, and the threshold h(τ) form s(τ), and f(・) gives z(τ))

Figure 8. 2nd-order Volterra neuron network (diagram: input x_Ai(τ), layers of 2VNs, output z_Ai(τ))

In the computer simulations, EC2VP is constructed of the two-step 2VNNs shown in Figure 9. It is trained using combinations of an input signal x(τ) and a teacher signal y(τ) = x(τ+1), each a time series pattern of one dimension in the spatial direction. The teacher signal, shown in Figure 10, is the normal sinus rhythm ECG signal of MIT-BIH No. 16786. This ECG signal has a sampling frequency of 128 Hz, four significant digits, and a quantization step size of 0.005 mV. The input signal (excluding the initial inputs) and the teacher signal each consist of 640 samples. The 2VNNs of EC2VP are trained one step at a time, starting from the first step.

First, the 2VNN at the first step (2VNN1) is trained using combinations of an input signal x(τ) and a teacher signal y1(τ) = x(τ+1), each a time series pattern of one dimension in the spatial direction. The computer simulations for this training are executed with A1 = a11 = 1/3.275, following procedure 1) to 4) below.
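Forming the first-step training pairs can be sketched as follows. This is a minimal sketch under stated assumptions:
- The input is amplified by a11 and the teacher by A1, both 1/3.275 as stated above.
- The input-side factor a11 is read from the labels in Figure 9.
- The function name is illustrative.

Since 640 samples at 128 Hz span 640/128 = 5 s, this matches the time axis of Figure 10.

```python
from typing import List, Sequence, Tuple

SAMPLING_HZ = 128        # sampling frequency of the ECG record (from the text)
SCALE = 1 / 3.275        # A1 = a11 = 1/3.275 (from the text)


def first_step_pairs(x: Sequence[float], a11: float = SCALE,
                     A1: float = SCALE) -> List[Tuple[float, float]]:
    """Pair the amplified input a11*x(τ) with the amplified teacher A1*y1(τ),
    where y1(τ) = x(τ+1); the last sample has no successor and is dropped."""
    return [(a11 * x[t], A1 * x[t + 1]) for t in range(len(x) - 1)]
```

For example, a record of 641 consecutive samples yields the 640 input/teacher pairs used here.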

1) During training, 1,580 initial data are first input into the 2VNN, and then each pair of input signal(s) and teacher signal is given once. This process is defined as one training cycle.

2) Table 1 shows the conditions of the computer simulations used to evaluate the prediction accuracy of the 2VNN. The initial values of the prediction coefficients of the 2VNN are determined by exponential smoothing. The other initial values are drawn from pseudo-random numbers for each training run. The gradient descent method is used as the learning rule for the 2VNN. The number of middle-layer elements and the filter length of the 2VNN were chosen based on experience with ECG prediction using 2VNNs.

Figure 9. Error convergence-type 2nd-order Volterra predictor (block diagram: 2VNN1 and 2VNN2 with input amplification factors a11 and a21, input error amplification factor A_ein2, teacher signal amplification factors A1 and A2 and their inverses, delayed output z1(τ−1), and a rounding on the summed output z(τ))

Figure 10. Teacher signal used for training the error convergence-type 2nd-order Volterra predictor (plot: voltage [mV], −1.0 to 3.0, versus time [s], 0 to 5)

3) Training runs are executed as searches over the learning reinforcement coefficient, which is the parameter that sets the condition of the computer simulations.

4) The averages of the root mean square errors (RMSEs) obtained from three runs of each search are compared.
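Steps 3) and 4) amount to a grid search with repeated runs, which can be sketched as follows. This is a sketch under stated assumptions:
- The grid 10^-5 to 1 at ×10 intervals and the three runs per setting are taken from Table 1.
- `train_once(eta, seed)` is a hypothetical callable. It should train a freshly initialized 2VNN (30,000 training cycles, each preceded by the 1,580 initial data) and return its RMSE.

```python
import math
from statistics import mean
from typing import Callable, Dict, Iterable, Sequence, Tuple


def rmse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Root mean square error between two equal-length signals."""
    return math.sqrt(mean((p - t) ** 2 for p, t in zip(pred, target)))


def search_learning_coefficient(train_once: Callable[[float, int], float],
                                exponents: Iterable[int] = range(-5, 1),
                                runs: int = 3) -> Tuple[float, Dict[float, float]]:
    """Average the RMSE of `runs` independent trainings for each coefficient
    10**e and return the coefficient with the smallest average."""
    averages: Dict[float, float] = {}
    for e in exponents:
        eta = 10.0 ** e                    # 1e-5, 1e-4, ..., 1 (interval x10)
        averages[eta] = mean(train_once(eta, seed) for seed in range(runs))
    best = min(averages, key=averages.__getitem__)
    return best, averages
```

Averaging over several seeds keeps a single lucky or unlucky pseudo-random initialization from deciding the chosen coefficient.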

The 2VNN that achieves the minimum average RMSE in these searches is adopted as the NN at the first step of EC2VP. Part of the training signals for the 2VNN at the second step (2VNN2) of EC2VP is an error signal. This error signal is the difference between the teacher signal to EC2VP and the output signal of 2VNN1.

Next, 2VNN2 is trained using combinations of two input signals and one teacher signal. The input signals x21(τ) and x(τ) form a time series pattern of two dimensions in the spatial direction. The teacher signal y2(τ) = x21(τ+1) is a time series pattern of one dimension in the spatial direction. Gain tuning, which scales the error signal so that its maximum absolute value is 1, is applied to obtain x_A2(τ) and y_A2(τ). The computer simulations for this training are executed with A_ein2 = A2 and a21 = 1/3.275, following the above-mentioned procedure 1) to 4).
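The second-step training signals and the gain tuning can be sketched as follows. This is a minimal sketch, assuming that `z1[t]` is the output of 2VNN1 at τ already divided by A1 (its prediction of x(τ+1)), and that the error signal is x(τ) − z1(τ−1) as in expression (38). The function name is illustrative.

```python
from typing import List, Sequence, Tuple


def second_step_signals(x: Sequence[float], z1: Sequence[float]
                        ) -> Tuple[List[float], List[float], float]:
    """Build 2VNN2's error input x21, its teacher y2, and the tuned gain.

    x[t] is x(τ); z1[t] is 2VNN1's prediction of x(τ+1) on the signal scale."""
    # x21(τ) = x(τ) − z1(τ−1); zero before any prediction exists, (37)-(38)
    x21 = [0.0] + [x[t] - z1[t - 1] for t in range(1, len(x))]
    y2 = x21[1:]                                  # y2(τ) = x21(τ+1)
    peak = max(abs(e) for e in x21)
    gain = 1.0 / peak if peak > 0 else 1.0        # scales max |error| to 1
    return x21, y2, gain
```

With A_ein2 = A2 = `gain`, the amplified signals would be x_A2(τ) = (gain·x21(τ), a21·x(τ)) and y_A2(τ) = gain·y2(τ).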


Table 1: Conditions for computer simulations to train the 2nd-order Volterra neuron network at each step

Learning rule: learning rule for Volterra neuron network (gradient-based method)
Learning reinforcement coefficients: range 10^-5 to 1, interval ×10
Momentum: 0
Output coefficients: 1
Initial connection weights: −0.3 to 0.3
Initial thresholds: −0.3 to 0.3
Initial prediction coefficients σ1: 0.7×0.3^p
Initial prediction coefficients σ2: 0.7×0.3^p × 0.7×0.3^q
Training cycles: 30,000
Processing times: 3
Number of middle layer elements and filter length (the 1st step, the 2nd step): extracted as "469" and "1064"; the individual cell values are not recoverable
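The initial prediction coefficients in Table 1 are exponential smoothing weights α(1 − α)^p with α = 0.7. Their sum over p tends to 1, since 0.7 / (1 − 0.3) = 1. A minimal sketch of that initialization follows. The function name is illustrative. Storing σ2 only for q ≥ p is an assumption taken from the labels in Figure 7.

```python
from typing import List, Tuple


def initial_prediction_coefficients(Q: int, alpha: float = 0.7, decay: float = 0.3
                                    ) -> Tuple[List[float], List[List[float]]]:
    """sigma1[p] = alpha*decay**p; sigma2[p][q] = sigma1[p]*sigma1[q] for q >= p, else 0."""
    sigma1 = [alpha * decay ** p for p in range(Q + 1)]
    sigma2 = [[sigma1[p] * sigma1[q] if q >= p else 0.0 for q in range(Q + 1)]
              for p in range(Q + 1)]
    return sigma1, sigma2
```

Writing `decay` separately, rather than computing `1 - alpha`, keeps 0.3 exact as printed in Table 1 instead of the floating-point value of 1 − 0.7.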

Figure 11. Teacher signal for 2nd-order Volterra neuron network at the second step (plot: voltage [mV], ×10^-1, about −1.2 to 1.0, versus time [s], 0 to 5)

Figure 13. Relation between training cycles and average evaluation function values at the minimum average of root mean square errors concerning 2nd-order Volterra neuron network at each step (plot: evaluation function value, logarithmic scale 10^-9 to 1, versus training cycles, 0 to 30,000; curves for 2VNN1 and 2VNN2)

Figure 12. Averages of root mean square errors and their standard deviations to learning reinforcement coefficient in gradient descent method term (plot: RMSE, logarithmic scale, versus learning reinforcement coefficient, 10^-5 to 1; curves for 2VNN1 and 2VNN2; minima marked at 1.17×10^-5 and 2.60×10^-2)

Figure 14. Output signal of 2nd-order Volterra neuron network at each step (plot: voltage [mV], −1.0 to 3.0, versus time [s], 0 to 5; curves for 2VNN1 and 2VNN2)


Then, the 2VNN that achieves the minimum average RMSE in the searches is adopted as the NN at the second step of EC2VP. The output of 2VNN2 is restored to the level of the teacher signal to EC2VP. It is then added to the output of 2VNN1 at the same time instant. Finally, the sum is rounded off at the fourth decimal place. The resulting signal is the output of EC2VP, and its prediction accuracy is evaluated.
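The output stage can be sketched as follows. This is a minimal sketch under stated assumptions:
- Both amplified step outputs are divided by their teacher amplification factors A1 and A2 before adding, following the 1/A blocks in Figure 9.
- "Rounded off at the fourth decimal place" is taken as round-half-up to three decimals.
- The function name is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import List, Sequence


def ec2vp_output(zA1: Sequence[float], zA2: Sequence[float], A1: float, A2: float,
                 decimals: int = 3) -> List[float]:
    """Restore both step outputs to the teacher-signal level, add them, and
    round off the next decimal place (the 4th place when decimals = 3)."""
    quantum = Decimal(1).scaleb(-decimals)           # Decimal('1E-3') for 3 places
    out: List[float] = []
    for a, b in zip(zA1, zA2):
        total = a / A1 + b / A2                      # restored and summed prediction
        out.append(float(Decimal(repr(total)).quantize(quantum, rounding=ROUND_HALF_UP)))
    return out
```

The teacher values lie on the 0.005 mV quantization grid, which is a subset of the three-decimal grid. Under that condition, any prediction whose residual error is below 0.0005 mV is rounded exactly onto the teacher value.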

5.3 Results

The results of the computer simulations are shown in Figures 11 to 14. Figure 11 shows the training signal created for 2VNN2. Figure 12 shows the averages of RMSEs, and their standard deviations, obtained in the computer simulations for the 2VNN at each step.

Figure 13 shows the relation between training cycles and the average evaluation function value of the 2VNN at each step. The values are those for the learning reinforcement coefficient that gives the minimum average RMSE over the three runs. They are recorded before training begins, at the first training cycle, and every 100 training cycles thereafter. The figure shows that the prediction error remaining when training of 2VNN1 has saturated can be decreased further by using 2VNN2. Moreover, the slope of the average evaluation function value of 2VNN2 at 30,000 cycles suggests that it could be trained further.

Figure 14 shows the output signal of the 2VNN at each step. The output signal of EC2VP is obtained by adding the output signals of 2VNN1 and 2VNN2 and rounding the sum. It contains no error at all and is equal to the teacher signal.

Thus, the capability of EC2VP to accomplish complete learning was demonstrated. Its excellent learning capability and its validity for normal sinus rhythm ECG were confirmed.

6. Discussion

6.1 Accuracy Up for Output and Speed Up for Training