
Voice Training HTS

Ms. Danilo de Sousa Barbosa

Course provided by: Erica Cooper

Columbia University


Contents

1. Preparing Data for training an HTS Voice
2. HTS installation and Demo Errors and Solutions
3. Background: Acoustic and linguistic features
4. Directory Setup
5. Prerequisites for HTS data setup
6. Make data steps
7. Questions File
8. Voice training
9. Subset selection Scripts and info
10. Variations voice


1. Preparing Data for training an HTS Voice

This tutorial assumes that you have HTS and all its dependencies installed, and that you have successfully run the demo training scripts. It does not cover installation in full, since that is covered by the HTS README. However, notes on installation and on errors you might encounter are given in Section 2.

These are instructions for preparing new data to train a voice using the HTS 2.3 speaker-independent demo. For speaker-adaptive training and other variants, see Section 10.

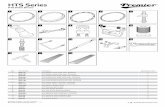

In the diagram above, the blue items are files you must provide before running the HTS data preparation steps, and the green items are the output created by each step. Note that .raw and .utt files are not required by the actual training demo scripts, so if you have your own way of creating acoustic features or HTS-format .lab files, you can just drop those in.

2. HTS installation and Demo Errors and Solutions

Ubuntu

Error about x2x not being found: The scripts expect a different directory structure for SPTK than the one that is actually there. I fixed this by creating a new directory in my SPTK folder called installation/bin and copying all the binaries directly into it, instead of leaving them where they are originally, e.g. bin/x2x/x2x.

o Even easier than copying or symlinking into installation/bin is to let SPTK install itself there. From the SPTK source directory, run:

mkdir installation
./configure --prefix=/path/to/installation
make
make install

Everything will be in place after that.


Mac

HGraf X11 error when you run make for HTS (installation, not demo): for ./configure you should instead run ./configure CFLAGS=-I/usr/X11R6/include

delta.c:589: internal compiler error: Segmentation fault: 11 when compiling SPTK: The fix was to change bin/delta/Makefile "CC" from "gcc" to "clang" and doing the top-level make again.

Installing ActiveTcl with Snack: had to install it with a .dmg instead of the usual compile. The files got installed to /usr/local/bin/tclsh8.4

General (Mac and Ubuntu)

Error: HTS voices cannot be loaded (when running the demo). This was fixed by installing an older version of hts_engine, version 1.05, instead of the latest one.

o Note that this was for HTS-2.2. With HTS-2.3, I also encountered errors related to using wrong versions of dependencies. Follow the README and be extra careful that everything is the right version.

/usr/bin/sox WARN sox: Option `-2' is deprecated, use `-b 16' instead.
/usr/bin/sox WARN sox: Option `-s' is deprecated, use `-e signed-integer' instead.

These warnings appear in step WGEN1 of the demo. To fix them, change $SOXOPTION from '2' to 'b 16' in Config.pm. Then, in Training.pl, look for all invocations of sox and change the option -s to -e signed-integer.

3. Background: Acoustic and linguistic features

There are two main kinds of data that we use to train TTS voices: acoustic and linguistic. These typically start out as the audio recordings of speech and their text transcripts, respectively. The model we are learning is a regression that maps text (typically transformed into a richer linguistic representation) to its acoustic realization, which is how we can synthesize new utterances.

The acoustic features get extracted from the raw audio signal (raw in the diagram above, in the step make features); these include lf0 (log f0, a representation of pitch) and mgc (mel generalized cepstral features, which represent the spectral properties of the audio).

The linguistic features are produced out of the text transcripts, and typically require additional resources such as pronunciation dictionaries for the language. The part of a TTS system that transforms plain text into a linguistic representation is called a frontend. We are using Festival for our frontend tools. HTS does not include frontend processing, and it assumes that you are giving it the text data in its already-processed form. .utt files are the linguistic representation of the text that Festival outputs, and the HTS scripts convert that format into the HTS .lab format, which 'flattens' the structured Festival representation into a list of phonemes in the utterance along with their contextual information. Have a look at lab_format.pdf (which is part of the HTS documentation) for information about the .lab format and the kind of information it includes.
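To make the "flattening" concrete, here is a minimal Python sketch that pulls the five-phoneme context out of one fullcontext label line. It assumes the quinphone layout described in lab_format.pdf (p1^p2-p3+p4=p5@...). The sample label and the function name are made up for illustration, and all context fields after the phonemes are ignored.

```python
import re

# Quinphone prefix of an HTS fullcontext label: LL^L-C+R=RR@...
# (layout assumed from lab_format.pdf; the rest of the label is ignored)
QUINPHONE = re.compile(
    r"^(?P<ll>[^\^]+)\^(?P<l>[^-]+)-(?P<c>[^+]+)\+(?P<r>[^=]+)=(?P<rr>[^@]+)@"
)


def parse_quinphone(line):
    """Return (LL, L, C, R, RR) phoneme names from one .lab line.

    Works for lines with or without leading start/end timestamps,
    because the label itself is always the last whitespace-separated field.
    """
    label = line.split()[-1]
    match = QUINPHONE.match(label)
    if match is None:
        raise ValueError("not a fullcontext label: %r" % label)
    return match.group("ll", "l", "c", "r", "rr")


# Hypothetical, truncated label for illustration only.
example = "x^pau-ax+hh=ow@1_1/A:0_0_0/B:0-0-1"
print(parse_quinphone(example))
```

The middle element of the returned tuple is the current phoneme; the others are its two left and two right neighbours.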


4. Directory Setup

To start, copy the empty template to a directory with a name of your choosing, e.g. yourvoicename. You will then fill in the template with your data.

cp -r /proj/tts/hts-2.3/template_si_htsengine /path/to/yourvoicename
cd /path/to/yourvoicename

Then, under scripts/Config.pm, fill in $prjdir to contain the path to your voice directory.

5. Prerequisites for HTS data setup

You will need each of the following before you can proceed with the HTS data preparation scripts:

Raw audio (.raw)
Fullcontext training labels (.utt)
Generation labels for synthesis (.lab)

The rest of this section describes how to create each one.

Place your .raw files in yourvoicename/data/raw. Place your .utt files in yourvoicename/data/utt. Place your gen labels in yourvoicename/data/labels/gen.
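Before running the data preparation steps, it can help to confirm that the audio and the labels cover the same utterances. The sketch below is one way to do that in Python. It assumes, as in the demo, that an utterance's .raw and .utt files share a base filename; the function name is made up for illustration.

```python
from pathlib import Path


def unmatched_basenames(raw_dir, utt_dir):
    """Return (raw without utt, utt without raw) as sorted lists of basenames."""
    raw = {p.stem for p in Path(raw_dir).glob("*.raw")}
    utt = {p.stem for p in Path(utt_dir).glob("*.utt")}
    return sorted(raw - utt), sorted(utt - raw)
```

For example, unmatched_basenames("yourvoicename/data/raw", "yourvoicename/data/utt") should return two empty lists when the directories are consistent.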

Preparing .raw Audio for HTS Voice Training

16k .wav to .raw

Your .wav files should be 16 kHz, 16-bit, mono. Running soxi on one of them should report something like this:

Input File     : 'fae_0001.wav'
Channels       : 1
Sample Rate    : 16000
Precision      : 16-bit
Duration       : 00:00:08.49 = 135872 samples ~ 636.9 CDDA sectors
File Size      : 272k
Bit Rate       : 256k
Sample Encoding: 16-bit Signed Integer PCM
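If you have many files, checking each one by eye with soxi gets tedious. As a rough alternative, the following Python sketch checks the same three properties with the standard-library wave module. It only handles plain RIFF PCM files, and the function name is made up for illustration.

```python
import wave


def is_16k_mono_16bit(path):
    """True if the RIFF .wav at `path` is 16 kHz, mono, 16-bit PCM."""
    with wave.open(str(path), "rb") as wav:
        return (
            wav.getframerate() == 16000
            and wav.getnchannels() == 1
            and wav.getsampwidth() == 2  # sample width in bytes
        )
```

Files for which this returns False should be converted with sox as described below before the .raw conversion.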

Assuming your audio is already in 16k .wav format, use this command to convert to the appropriate .raw format for HTS:

ch_wave -c 0 -F 32000 -otype raw in.wav | x2x +sf | interpolate -p 2 -d | ds -s 43 | x2x +fs > out.raw

See below for converting other formats.


Errors and Solutions

Segmentation fault - This is a heisenbug. If you just run it again it should work.

rateconv: failed to convert from 8000 to 32000 - This happens for very short utterances, often backchannels, that contain little to no audio. Just exclude these from training. Be careful, though, because it still creates the .raw file.

x2x : error: input data is over the range of type 'short'! ds : File write error! - This is from maxed-out audio or clipping. It still writes the file.

Any .wav to 16k .wav

If your audio is in some .wav format other than 16k, use sox to convert it:

sox input.wav -r 16000 output.wav

Converting .sph to .wav

.sph is the format that many LDC corpora use. Certain versions of sox can do this conversion:

sox inputfile.sph outputfile.wav

However, this won't work if the file is in the particular .sph format that uses 'shorten' compression. If that's the case, you'll see this error:

sph: unsupported coding `ulaw,embedded-shorten-v2.00'

In that case, you need to use the NIST tool sph2pipe:

sph2pipe -p [-c 1|2] infile outfile

The -p forces conversion to 16-bit linear PCM. It does not change the sample rate, so if the result is not 16 kHz, resample it with sox as described above. The -c picks channel 1 or 2 if you want to separate them, e.g. for speakers. Speech lab students: we have a copy of this under /proj/speech/tools/sph2pipe_v2.5

Even after you do this, the output seems to retain a .sph header, so you'll next have to use sox infile outfile to force conversion to regular .wav.

Also, depending on the particular version of the .sph format, you sometimes need to run sph2pipe again with an explicit .wav header:

sph2pipe -p -f wav in.sph out.wav

The relevant options from the sph2pipe usage message are:

-p -- force conversion to 16-bit linear pcm
-f typ -- select alternate output header format 'typ'
five types: sph, raw, au, rif(wav), aif(mac)

Creating .utt Files for English

Create prompt file and general setup

First you need to make a .data file with the base filenames of all the utterances and the text of each utterance, e.g.

( uniph_0001 "a whole joy was reaping." )

( uniph_0002 "but they've gone south." )

( uniph_0003 "you should fetch azure mike." )
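If your transcripts live in some other form (a spreadsheet, a list of pairs), a few lines of Python can produce this file. The sketch below is only an illustration: the function names are made up, and escaping embedded double quotes with a backslash is an assumption about how Festival reads strings that you should verify on your own data.

```python
def format_prompt(utt_id, text):
    """Format one prompt line in the ( id "text" ) layout shown above."""
    # Assumption: Festival accepts backslash-escaped quotes inside strings.
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return '( %s "%s" )' % (utt_id, escaped)


def write_prompts(pairs, path):
    """Write (utt_id, text) pairs to a .data file, one prompt per line."""
    with open(path, "w", encoding="utf-8") as out:
        for utt_id, text in pairs:
            out.write(format_prompt(utt_id, text) + "\n")


print(format_prompt("uniph_0001", "a whole joy was reaping."))
```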

You will also need to do some general setup to get Festival and related tools on your path. Put these in your .bashrc file (this is the newest version of Festival (2.4), containing EHMM, from the Babel Festvox scripts), and don't forget to source .bashrc afterwards:

export PATH=/proj/tts/tools/babel_scripts/build/festival/bin:$PATH
export PATH=/proj/tts/tools/babel_scripts/build/speech_tools/bin:$PATH
export FESTVOXDIR=/proj/tts/tools/babel_scripts/build/festvox
export ESTDIR=/proj/tts/tools/babel_scripts/build/speech_tools

** Note that these are the paths that Speech Lab students should use. If you are not in Speech Lab, then set these paths to wherever you have Festival, Festvox, and EST installed.

** Also note that any labels created using the old version of Festival (in /proj/speech/tools) will be missing the feature "vowel in current syllable," which especially affects the quality of Merlin voices. Make sure the labels you are using are consistent, e.g. if using old-style labels, make sure to be comparing the voice to a baseline that also is using the old-style labels.

Fullcontext labels using EHMM alignment

EHMM stands for "ergodic HMM" and is an alignment method which accounts for the possibility that there might be pauses in between phoneme labels. This should in theory result in better duration models. This method is fairly commonly used, and is built into Festival. More information on EHMM can be found in this paper: Sub-Phonetic Modeling for Capturing Pronunciation Variations for Conversational Synthetic Speech (Prahallad et al. 2006).

Source: modified from http://www.nguyenquyhy.com/2014/07/create-full-context-labels-for-hts/

Prepare the directory:

$FESTVOXDIR/src/clustergen/setup_cg cmu us awb_arctic

Instead of cmu and awb_arctic you can pick any names you want, but please keep us so that Festival knows to use the US English pronunciation dictionary.

Copy or symlink your .wav files into the wav/ folder -- these should be in 16kHz, 16bit mono, RIFF format.

Put all the transcriptions into the file etc/txt.done.data -- this is the file you created in the very first step above

Run the following 3 commands:

./bin/do_build build_prompts
./bin/do_build label
./bin/do_build build_utts

The .utt files should now be under festival/utts.

In the label step, if you get an error "Wave files are missing. Aborting ehmm." then check the file names in txt.done.data vs. those in wav/ -- something is likely missing or duplicate. The set of utterances in both places must match exactly.
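A quick way to track down the mismatch is to compare the utterance IDs in the prompt file with the file names in wav/. The Python sketch below does this under the assumption that each prompt line has the ( id "text" ) layout shown earlier; the function names are made up for illustration.

```python
import re
from collections import Counter
from pathlib import Path

# Matches the opening of a prompt line:  ( utt_id "text...
PROMPT_ID = re.compile(r'^\(\s*(\S+)\s+"')


def prompt_ids(data_text):
    """Utterance IDs, in file order, from the text of a txt.done.data file."""
    ids = []
    for line in data_text.splitlines():
        match = PROMPT_ID.match(line.strip())
        if match:
            ids.append(match.group(1))
    return ids


def compare(ids, wav_names):
    """Return (duplicate IDs, IDs with no wav, wavs with no ID)."""
    counts = Counter(ids)
    dupes = sorted(i for i, n in counts.items() if n > 1)
    wavs = {Path(name).stem for name in wav_names}
    return dupes, sorted(set(ids) - wavs), sorted(wavs - set(ids))
```

In practice you would pass in the contents of etc/txt.done.data and the names returned by listing wav/; all three returned lists should be empty before you run the label step.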


Getting alignment score from EHMM

EHMM will tell you the average likelihood after each round (under ehmm/mod/log100.txt), but does not by default record the likelihood for each utterance. Speech lab students: our version of Festvox will print this out to stdout when it runs. Everyone else: I added this by going into $FESTVOXDIR/src/ehmm/src/ehmm.cc, the function ProcessSentence, and adding this line:

cout << "Utterance: " << tF << " LL: " << lh << endl;

after the part where the variable lh gets computed for the utterance. Then, recompile by going to top-level $FESTVOXDIR and running make.
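Once the per-utterance lines are being printed, you can save stdout to a file and rank the utterances by score. The Python sketch below parses lines in the format produced by the cout statement above. It treats a lower value as a worse alignment and does not normalize for utterance length; both are assumptions you may want to revisit.

```python
import re

# Matches lines printed by the added statement: "Utterance: <name> LL: <value>"
LL_LINE = re.compile(r"^Utterance:\s+(\S+)\s+LL:\s+(\S+)")


def worst_aligned(log_text, n=10):
    """Return the n (utterance, log-likelihood) pairs with the lowest score."""
    scores = []
    for line in log_text.splitlines():
        match = LL_LINE.match(line)
        if not match:
            continue
        try:
            scores.append((match.group(1), float(match.group(2))))
        except ValueError:
            continue  # skip lines whose score is not a number
    return sorted(scores, key=lambda pair: pair[1])[:n]
```

Utterances at the top of this list are good candidates for listening checks or for exclusion from training.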

Fullcontext labels using DTW alignment [DEPRECATED]

This method synthesizes all of the utterances with an existing English Festival voice, and then uses dynamic time warping (DTW) with the synthesized and actual audio, to get the alignments between our actual audio and the text. This is what we have used for many of our English voices so far, but there are better methods out there that we should use instead (see EHMM above). This method is included for reference.

Source: modified from http://festvox.org/bsv/x3082.html

You must select a name for the voice. By convention we use three-part names consisting of an institution name, a language, and a speaker (or the corpus name). Make a directory of that name and change into it:

mkdir cmu_us_awb
cd cmu_us_awb

There is a basic setup script that will construct the directory structure and copy in the template files for voice building. If a fourth argument is given, it can name one of the standard prompt lists.

$FESTVOXDIR/src/unitsel/setup_clunits cmu us awb
cp mydatafile.data etc/

** Note that while you can rename cmu and awb to whatever you want, us needs to stay, in order to tell Festival to use the US English dictionary for pronunciations.

The next stage is to generate waveforms to act as prompts, or timing cues even if the prompts are not actually played. The files are also used in aligning the spoken data. Use whatever prompt file you are intending to use:

festival -b festvox/build_clunits.scm '(build_prompts "etc/mydatafile.data")'

o This step creates prompt-lab/*, prompt-utt/*, and prompt-wav/*

Copy or symlink your .wav files into the wav/ directory.

Now we must label the spoken prompts. We do this by matching the synthesized prompts with the spoken ones. As we know where the phonemes begin and end in the synthesized prompts, we can map that onto the spoken ones and find the phoneme segments. This technique works fairly well, but it is far from perfect, and it is worthwhile to check the result and probably fix it by hand.

./bin/make_labs prompt-wav/*.wav

o This creates cep/*, lab/*, and prompt-cep/*


After labeling we can build the utterance structure using the prompt list and the now labeled phones and durations.

festival -b festvox/build_clunits.scm '(build_utts "etc/mydatafile.data")'

o This creates festival/utts/*

The .utt files are now under festival/utts/

Creating .utt Files for Babel Languages

1. General setup

Make sure these are all in your .bashrc:

export PATH=/proj/tts/tools/babel_scripts/build/festival/bin:$PATH
export BABELDIR=/proj/tts/data/babeldir
export ESTDIR=/proj/tts/tools/babel_scripts/build/speech_tools
export FESTVOXDIR=/proj/tts/tools/babel_scripts/build/festvox
export SPTKDIR=/proj/tts/tools/babel_scripts/build/SPTK

Then make sure the Babel language you want is in $BABELDIR, e.g. BABEL_BP_105 (Turkish), and if it's not, then symlink it in.

2. Directory setup

For this example, we will say that we are using Turkish-language data from the Omniglot dataset. Change the directory name (turkish_omniglot) and the Babel language ID (BABEL_BP_105) accordingly for whichever language and dataset you are using.

cd /proj/tts/tools/babel_scripts
mkdir turkish_omniglot
cd turkish_omniglot

Then run the following, which should all be on one line:

/proj/tts/tools/babel_scripts/make_build setup_voice turkish_omniglot $BABELDIR/BABEL_BP_105/conversational/reference_materials/lexicon.txt $BABELDIR/BABEL_BP_105/conversational/training/transcription $BABELDIR/BABEL_BP_105/conversational/training/audio

3. Drop in our data

Put .wav files under wav/. These should be 16k and 16 bit format.

Create txt.done.data file with transcripts under etc/. This is a file containing the utterance filename IDs with the transcripts. It should look something like this:

( uniph_0001 "a whole joy was reaping." )
( uniph_0002 "but they've gone south." )
( uniph_0003 "you should fetch azure mike." )


4. Build prompts and identify OOVs

Run this from your top-level turkish_omniglot directory:

./bin/do_build parallel build_prompts etc/txt.done.data

It is recommended to run this command in an emacs shell, since it appears to handle utf-8 the best and cause the fewest problems.

This step will reveal words that are not in the lexicon. Get a list of those words and use Sequitur G2P or Phonetisaurus to generate their pronunciations. Once you have the new pronunciations, add them to festvox/cmu_babel_lex.out, NOT the other one (lex.scm). Make sure they are in proper alphabetical order. We currently do this by hand.

For syllabification, there are a few options:

Naive syllabification: e.g. cv cvc cv

Use the syllabification pipeline that Chevy has developed, under /proj/tts/syllabification. It is based on LegaliPy.

For the stress markers (the numbers at the end of each syllable unit, currently all just 0) we are just continuing to put 0 for now. We have stress information available in the Babel lexicon, but it is not currently incorporated.

Once all of the OOV pronunciations have been added, re-run the above command. If it still reports errors, fix them and re-run until there are none.

5. Label using EHMM alignment

./bin/do_build label etc/txt.done.data

If you get an error "Wave files are missing. Aborting ehmm." then check the file names in txt.done.data vs. those in wav/ -- something is likely missing or duplicate.

6. Last steps

./bin/do_clustergen generate_statenames

./bin/do_clustergen generate_filters

./bin/do_clustergen parallel build_utts etc/txt.done.data

Then the .utt files should be there in festival/utts.

7. HTS

If you want to use this data with HTS, you will have to phone-map them to make sure there are no non-alphabetical phoneme names (see instructions for gen labels, step 4, for more info). Then, you will have to run "make label" to create both full and mono labels out of these utt files. Finally, don't forget to run "make list" once all full and gen labels are in place.


Creating Babel Language gen .lab Files for Synthesis

Please note: these instructions are for Columbia Speech Lab students only. Many of these tools and data sets are not yet publicly available.

1. Make your Festival-format .data file.

For example:

( gen_001 "sentence to synthesize." )
( gen_002 "another new sentence." )
( gen_003 "here is one more." )
....

Except that these won't be in English, obviously.

2. Tell Babel-Festival about the voice.

It is assumed that you already have created a default Clustergen Babel voice with the Babel-Festival scripts for your language. In this example we are using Turkish; change the language as appropriate.

ecooper@kucing /proj/tts/tools/babel_scripts/build/festival/lib/voices/turkish/cmu_babel_turkish_cg $ ln -s /proj/tts/tools/babel_scripts/turkish/festvox .

ecooper@kucing /proj/tts/tools/babel_scripts/build/festival/lib/voices/turkish/cmu_babel_turkish_cg $ ln -s /proj/tts/tools/babel_scripts/turkish/festival .

Then, start Festival (just type festival at the command line) and when you run (voice.list) it should show cmu_babel_turkish_cg. Exit Festival using (exit) before proceeding with the next steps.

3. Run text2utts with that voice.

We can tell Festival to select the Turkish voice using the -eval argument.

export FESTVOXDIR=/proj/tts/tools/babel_scripts/build/festvox

ecooper@kucing /proj/tts/tools/babel_scripts/turkish $ $FESTVOXDIR/src/promptselect/text2utts -eval "(voice_cmu_babel_turkish_cg)" -all -level Text -odir /proj/tts/tools/babel_scripts/turkish/festival/utts_dev -otype utts -itype data "etc/your.txt.done.data"

The .utt output will be in festival/utts_dev.


4. Phoneme mapping

HTS doesn't like anything that isn't alphabetic for phoneme names. Thus, you have to pick a phone mapping from any numerical or special-character phoneme symbols to something alphabetical (can be more than one character). Then you have to create new .utt files that use the HTS-compatible phoneset. We already have phone mappings for Turkish. See /proj/tts/examples/map_phones.py for an example.
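The core of such a mapping can be sketched in a few lines of Python. The table below is entirely hypothetical (it is not the Speech Lab's Turkish mapping and is not taken from map_phones.py), and the sketch only maps individual phoneme names; rewriting the .utt files themselves is not shown.

```python
# Hypothetical mapping for illustration only: non-alphabetic symbol -> alphabetic name.
PHONE_MAP = {"1": "ix", "2": "oe", "@": "ax", "a:": "aa"}


def check_mapping(mapping):
    """Raise if a target name is not purely alphabetic or is used twice."""
    targets = list(mapping.values())
    for name in targets:
        if not (name.isascii() and name.isalpha()):
            raise ValueError("target %r is not alphabetic" % name)
    if len(set(targets)) != len(targets):
        raise ValueError("two symbols map to the same target name")


def map_phone(phone, mapping=PHONE_MAP):
    """Return an HTS-safe name for `phone`, leaving alphabetic names alone."""
    if phone in mapping:
        return mapping[phone]
    if phone.isascii() and phone.isalpha():
        return phone
    raise KeyError("no alphabetic mapping for phone %r" % phone)


check_mapping(PHONE_MAP)
print([map_phone(p) for p in ["k", "@", "a:"]])
```

Note that check_mapping does not detect a target name that collides with a phoneme already in your phoneset; that check depends on the phoneset and is left out here.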

5. Festival .utt format to HTS .lab format

We can convert from Festival format to HTS format using the HTS demo scripts. However, the HTS demo scripts do not include a recipe for creating gen labels, since the demo includes pre-made gen labels for US English, so we need to repurpose the 'full' label recipe to create gen labels. These are basically the same format, except that full labels have timestamps and gen labels should not.

Follow the steps in the data Makefile (e.g. /proj/tts/hts-2.3/template_si_htsengine/data/Makefile) for make label. We typically just copy over the Makefile to wherever the utts are, edit it to just do the fullcontext step, and run it.

If you see warnings about phoneset Radio, these can be safely ignored. They just indicate that we are using phonemes outside of the default US English set.

The output will be in labels/full. Follow the next steps to remove the timestamps, and put these final labels into yourvoicedir/data/labels/gen.

6. Remove unnecessary timestamps

The training .lab files have timestamps for where the phonemes start and end, however these are not needed for the generation .lab files. Remove them. Speech lab students: see /proj/tts/examples/fix_labels.py for an example script that does this.
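For readers without access to fix_labels.py, the idea can be sketched as follows. This is not that script; it is a minimal Python illustration that assumes each training label line has the form "start end label" with integer times, and that the label itself contains no spaces.

```python
def strip_timestamps(line):
    """Drop leading 'start end' times from one .lab line, if present."""
    fields = line.split()
    if len(fields) >= 3 and fields[0].isdigit() and fields[1].isdigit():
        return " ".join(fields[2:])
    return line.strip()


def strip_lab_text(text):
    """Apply strip_timestamps to every non-empty line of a .lab file's text."""
    lines = [strip_timestamps(ln) for ln in text.splitlines() if ln.strip()]
    return "\n".join(lines) + "\n"
```

Lines that already lack timestamps pass through unchanged, so running this twice on the same file is harmless.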

6. Make data steps

In yourvoicename/data you will see a Makefile. We will step through the steps in this Makefile to set up the data in HTS format. All of these steps should be run from the directory yourvoicename/data.

Changes to the Makefile

Please make the following changes in your Makefile:

You will see this in various places: /proj/tts/PATHTOYOURVOICEDIR/... Make sure to replace these with the actual path to your voice directory.

make features

This step extracts various acoustic features from the raw audio. It creates the following files:

mgc: spectral features (mel generalized cepstral)
lf0: log f0
bap: band aperiodicity (only if you are using STRAIGHT)


You can run:

make features

make cmp

This step composes the various different acoustic features extracted in the previous step into one combined .cmp file per utterance. Run:

make cmp

make lab

This step "flattens" the structured .utt file format into the HTS .lab format. This step creates labels/full/*.lab, the fullcontext labels, and labels/mono/*.lab, which are monophone labels for each utterance. Run:

make lab

make mlf

These files are "Master Label Files," which can contain all of the information in the .lab files in one file, or can contain pointers to the individual .lab files. We will be creating .mlf files that are pointers to the .lab files. Run:

make mlf
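To give a sense of what a pointer-style .mlf looks like, the Python sketch below builds one as a string. It follows the HTK master label file convention of a "#!MLF!#" header followed by a pattern -> directory line; the default pattern used here is an assumption, so compare against the .mlf files that make mlf actually writes before relying on it.

```python
def pointer_mlf(lab_dir, pattern="*/*.lab"):
    """Return the text of an MLF that points label lookups at `lab_dir`."""
    # HTK syntax: a quoted filename pattern, '->', then the quoted directory.
    return '#!MLF!#\n"%s" -> "%s"\n' % (pattern, lab_dir)


print(pointer_mlf("/path/to/yourvoicename/data/labels/mono"), end="")
```

This is the file in which paths most often go stale when a voice directory is copied or moved.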

make list

This step creates full.list, full_all.list, and mono.list, which are lists of all of the unique labels. full.list contains all of the fullcontext labels in the training data, and full_all.list contains all of the training labels plus all of the gen labels. Run:

make list

** Note that make list as-is in the demo scripts relies on the cmp files already being there -- it checks that there is both a cmp and a lab file there before adding the labels to the list. However, it does not use any of the information actually in the cmp file, beyond checking that it exists.

make scp

This step creates training and generation script files, train.scp and gen.scp. These are just a list of the files you want to use to train the voice, and a list of files from which you want to synthesize examples. Run:

make scp

If you ever want to train on just a subset of utterances, you only have to modify train.scp.
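Selecting a subset then comes down to filtering the lines of train.scp. The Python sketch below assumes that train.scp holds one file path per line and that the utterance ID is the file's base name without extension; the function name and the sample paths are made up for illustration.

```python
from pathlib import PurePosixPath


def subset_scp(scp_lines, keep_ids):
    """Keep only the train.scp lines whose base filename is in keep_ids."""
    keep = set(keep_ids)
    return [
        line for line in scp_lines
        if line.strip() and PurePosixPath(line.strip()).stem in keep
    ]


# Hypothetical paths for illustration only.
lines = ["/v/data/cmp/utt_0001.cmp", "/v/data/cmp/utt_0002.cmp", "/v/data/cmp/utt_0003.cmp"]
print(subset_scp(lines, {"utt_0001", "utt_0003"}))
```

Keep a copy of the full train.scp so that you can return to it or derive other subsets later.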


7. Questions File

Make sure you are using a questions file appropriate to the data you are using. The default one in the template is for English. We have also created a questions file for Turkish, as well as ones for custom frontend features.

You will need to create or modify a questions file for your voice if:

1. You are creating a voice for a new language.
2. You are working with some custom frontend features.

If you are not doing either of those things, then please use the appropriate existing questions file.

The questions file is in yourvoicedir/data/questions/questions_qst001.hed. It is language (actually, phoneset) dependent and it needs to be filled in appropriately with your phoneset.

The questions file is used in the decision tree part of the training, and it relates to the fullcontext label file format. The questions file is basically just pattern matching on the fullcontext labels. On the left side is the name of the question (e.g. "LL-Vowel" is basically asking, "Is the phoneme two to the left of the current one a vowel?") and on the right side is a list of things for which a match would mean the answer is "yes" (e.g. all vowels). The fullcontext label file uses symbols to delimit the different features, so that's why everything pertaining to, e.g. the current phoneme (questions starting with "C-") has the possible matches put between - and + (because that's how you find the current phoneme in the current label, according to lab_format.pdf).

The questions basically repeat for LL, L, C, R, and RR, so it is possible to just fill in the first section and use a script to populate the rest of them. In fact, we already have a script for this under /proj/tts/resources/qfile/. You only have to fill in a file in a format like turkish_categories, and then make_qfile_from_categories.py can generate the questions file (with the 'repeat' question categories) in the right format. Figuring out which phonemes go in which category will require looking up the definitions of the categories on Wikipedia or elsewhere.
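The repetition across LL, L, C, R, and RR can be illustrated with a short Python sketch. This is not make_qfile_from_categories.py. The patterns are inferred from the delimiters in lab_format.pdf (the current phoneme sits between - and +, and so on) and from the layout of the English demo questions file, so treat them as assumptions and compare the output with an existing questions file.

```python
# Context position -> pattern template, using the quinphone delimiters
# LL^L-C+R=RR@ from the fullcontext label format.
CONTEXT_PATTERNS = [
    ("LL", "%s^*"),
    ("L", "*^%s-*"),
    ("C", "*-%s+*"),
    ("R", "*+%s=*"),
    ("RR", "*=%s@*"),
]


def questions_for(category, phones):
    """Return the five QS lines (LL, L, C, R, RR) for one phoneme category."""
    lines = []
    for prefix, pattern in CONTEXT_PATTERNS:
        matches = ",".join(pattern % phone for phone in phones)
        lines.append('QS "%s-%s" {%s}' % (prefix, category, matches))
    return lines


# Hypothetical category contents for illustration only.
for line in questions_for("Vowel", ["a", "e", "i"]):
    print(line)
```

With a helper like this, only the category-to-phoneme table needs to be written by hand for a new phoneset.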

Errors and Solutions

make mgc

o /proj/speech/tools/SPTK-3.6/installation/bin/x2x: 8: Syntax error: "(" unexpected
That's because you're trying to use a 64bit-compiled version of SPTK on a 32bit machine, or vice versa. Recompile SPTK using the machine on which you're running.

make lf0

o shift: can't shift that many
There is a mismatch between the speaker list and the speaker f0 range list, i.e. you have more speakers than ranges listed, or the other way around. Fix the lists, then re-run.

o Unable to open mixer /dev/mixer
This may happen on machines that are servers as opposed to desktops. This can be safely ignored.

make label

o WARNING No default voice found in ("/proj/speech/tools/festival64/festival/lib/voices/")
These can be safely ignored. You can also install festvox-kallpc16k if you don't want to see these.

o [: 7: labels/mono/h1r/cu_us_bdc_h1r_0001.lab: unexpected operator (with missing scp files under data/scp)
In data/Makefile under scp: you need to make sure those paths are pointing to the right place.

make list

o [: 7: labels/mono/h1r/cu_us_bdc_h1r_0001.lab: unexpected operator
You are missing some .lab files. Make sure they are there and being looked for in the right place.

o sort: cannot read: tmp: No such file or directory
You are missing some .cmp files. Make sure they are there and being looked for in the right place.

make scp

o /bin/sh: 3: [: labels/mono/f3a/f3a_0001.lab: unexpected operator
Check the paths in each of the three parts! This happens when there is a typo in a path.

8. Voice training

Voice Training Checklist

Speech lab students: please see the voice training checklist to make sure everything is in place before running voice training.

Speaker-Independent Training

Make sure you are using a template from the right version of HTS. Either copy from a similar voice, or use /proj/tts/hts-2.3/template_si_htsengine for HTS 2.3.

Make sure the $MCDGV fix is present. This is a common training error for which we have embedded a fix into the training scripts. You can check that you have the fix by looking in yourvoicedir/scripts and seeing that a file called fix_mcdgv.py is there. If you used template_si_htsengine, then it should already be there. If not, please use a template that has it.

Also under scripts, make sure that in Config.pm, $prjdir is set to the path to your voice directory.

Acoustic data: under data/, put data from your corpus into directories cmp, lf0, and mgc. Typically we use symbolic links to avoid duplicating large amounts of data for each voice. If you are experimenting with different acoustic settings like f0 extraction ranges or other variations, then you'll have to re-make these with your own settings, rather than copying the data over.

Text labels: under labels/, put data from your corpus into mono and full. Make sure that mono.mlf and full.mlf contain the right paths. If you are experimenting with some modified frontend, e.g. different pronunciations or syllabification or extra features, you will need to re-create these label files rather than using the default ones in the /proj/tts/data repository.

Generation labels for synthesis: place these in labels/gen. Again, if you are using a nonstandard frontend, you will need to generate these labels yourself rather than using the default ones in the data repository.

Model lists: these are lists/full_all.list, full.list, mono.list. full_all.list needs to contain labels for both training and synthesis, and also if you are using any non-standard frontend you will need to re-create these for the new model definitions resulting from your new labels. When in doubt, re-run this using make list in the data/Makefile (make sure to update any paths in there) after all your label files are in place.

Training and generation script files. scp/train.scp is the list of files to use for training. Make sure they are all there, and that the paths to your voice directory are correct. If you are training a subset voice, this is the place to define the subset. scp/gen.scp lists the files to synthesize from for test synthesis. Make sure these contain the correct files and paths.

Questions files under questions/. These are language-dependent so make sure you are using ones for the correct language. If you copied from template_si_htsengine then you are using the English ones. You can copy the ones for Turkish from any of the Turkish voices we have already trained. If you are using any of your own additional frontend features, then you will also need to add in questions about those in the questions file.

Are you training using SAT? If so, then please also check the following:

o Each data directory should have one subdirectory for each speaker.
o gen labels should be prefixed with the ID of the target speaker and be in a subdirectory named after that speaker.
o labels/*.mlf files should have speaker-specific lines.
o scripts/Config.pm - check that $spkrPat is correct and also that $spkr is the ID of the adaptation speaker that you want to use.

Running the Training

Once all the data is in place, run this from the top-level voice directory:

perl scripts/Training.pl scripts/Config.pm > train.log 2> err.log

This typically takes a while and is best run under a screen session. If you want to have it email you when done so you don't have to keep checking on it, you can run it like this using the mail command:

( perl scripts/Training.pl scripts/Config.pm > train.log 2> err.log ; echo "email content" | mail -s "email subject" [email protected] )

If the training fails before it is finished, you can see how far it got: grep "Start " train.log

These steps match the ones at the end of scripts/Config.pm. Once you have debugged the problem, you can pick up the voice training from where it left off, rather than starting over from the beginning, by switching off the steps that already completed successfully: change their 1s to 0s in scripts/Config.pm.
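If you would rather inspect the log from a script than with grep, the same check can be sketched as follows. The only assumption is the one the grep command already makes: each step writes a line containing "Start " to train.log. The function name started_steps is ours.

```python
def started_steps(log_path):
    """Return every line of the training log that contains 'Start '."""
    with open(log_path) as log:
        return [line.rstrip("\n") for line in log if "Start " in line]
```

The last element of the returned list is the step that was running when the training stopped.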

You will know the voice has trained successfully when there are .wav files in the gen/qst001/ver1/hts_engine/ directory.

Voice Output

Test synthesis utterances can be found under gen/qst001/ver1. hts_engine contains utterances synthesized using HTS-engine, and 1mix, 2mix, and stc contain synthesis using either SPTK or STRAIGHT.

The different speech waveform generation methods are as follows (from this thread):

1mix
o Distributions: Single Gaussian distributions
o Variances: Diagonal covariance matrices
o Engine: HMGenS

2mix
o Distributions: Multivariate Gaussian mixture distributions (number of mixtures is 2)
o Variances: Diagonal covariance matrices
o Engine: HMGenS

stc
o Distributions: Single Gaussian distributions
o Variances: Semi-tied covariance matrices
o Engine: HMGenS

hts_engine
o Distributions: Single Gaussian distributions
o Variances: Diagonal covariance matrices
o Engine: hts_engine

Errors and Solutions

MKEMV
o If this doesn't output anything: this happened when I had a different Config.pm already on my path (my .cpanplus directory) that it was pulling in. This is fixed by forcing a path to the Config.pm that you want to use, e.g. ./Config.pm.

IN_RE
o ERROR [+6510] LOpen: Unable to open label file /local/users/ecooper/voices/bdc/data/cmp/h1r/cu_us_bdc_h1r_0001.lab
You have the wrong paths in data/labels/full.mlf and/or data/labels/mono.mlf.

o ERROR [+2121] HInit: Too Few Observation Sequences [0]
FATAL ERROR - Terminating program /proj/speech/tools/HTS/htk/bin/HInit
Error in /proj/speech/tools/HTS/htk/bin/HInit -A -C /local2/ecooper/datasel/short15/configs/trn.cnf -D -T 1 -S /local2/ecooper/datasel/short15/data/scp/train.scp -m 1 -u tmvw -w 5000 -H /local2/ecooper/datasel/short15/models/qst001/ver1/cmp/init.mmf -M /local2/ecooper/datasel/short15/models/qst001/ver1/cmp/HInit -I /local2/ecooper/datasel/short15/data/labels/mono.mlf -l zh -o zh /local2/ecooper/datasel/short15/proto/qst001/ver1/state-5_stream-4_mgc-105_lf0-3.prt
This happened when we were training a voice on the 15min of shortest utterances. These utterances were mostly empty and there probably weren't enough good examples for a lot of phonemes. This error basically just means there's not enough good data to train a voice. "Good" examples means examples that contain enough frames to model with a 5-state HMM -- if there are fewer than 5 frames, then that example can't be used to train a model. Adding in more examples of that phoneme won't necessarily help unless they are at least 5 frames long. cf. http://hts.sp.nitech.ac.jp/hts-users/spool/2007/msg00232.html
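To see which phonemes are short on usable examples, you can count the segments that are at least 5 frames long. The sketch below is not part of the HTS recipe. It assumes time-aligned HTK-style labels with one "start end phone" entry per line, times in HTK's 100 ns units, and a 5 ms frame shift (50000 units); check the frame shift against your own setup. The function name usable_examples is ours.

```python
from collections import Counter


def usable_examples(label_lines, frame_shift_units=50_000, min_frames=5):
    """Count, per phone, the segments that span at least min_frames frames.

    Times are assumed to be in HTK's 100 ns units, so the default
    frame_shift_units of 50000 corresponds to an assumed 5 ms frame shift.
    """
    counts = Counter()
    for line in label_lines:
        fields = line.split()
        if len(fields) < 3:
            continue  # skip MLF headers, file names, "." and untimed lines
        start, end, phone = int(fields[0]), int(fields[1]), fields[2]
        counts[phone] += 0  # list phones even if they have no usable example
        if (end - start) // frame_shift_units >= min_frames:
            counts[phone] += 1
    return counts
```

Passing the lines of data/labels/mono.mlf gives a per-phoneme count; phonemes with a count of 0 have no example long enough to train on.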

MMMMF
o The training just hangs at this step and doesn't continue. Running top shows that nothing is running. Killing the training with Ctrl-C shows this:
Error in /proj/speech/tools/HTS/htk/bin/HHEd -A -B -C /local2/chevlev/datasel/F0meanMiddle15min/configs/trn.cnf -D -T 1 -p -i -d /local2/chevlev/datasel/F0meanMiddle15min/models/qst001/ver1/cmp/HRest -w /local2/chevlev/datasel/F0meanMiddle15min/models/qst001/ver1/cmp/monophone.mmf /local2/chevlev/datasel/F0meanMiddle15min/edfiles/qst001/ver1/cmp/lvf.hed /local2/chevlev/datasel/F0meanMiddle15min/data/lists/mono.list
We never really figured out what this problem was, but we were able to successfully run the voice on a different machine with more memory, so it may have been a memory issue.

ERST0
o It just says error: probably also an out-of-memory issue.

MN2FL
o An error about not being able to allocate that much memory: We were running training on a 32bit machine. The same voice trained successfully on a 64bit machine.

o Error in /proj/speech/tools/HTS/htk/bin/HHEd -A -B -C /proj/tts/voices/fisher/overarticulated_one_2_copy/configs/trn.cnf -D -T 1 -p -i -H /proj/tts/voices/fisher/overarticulated_one_2_copy/models/qst001/ver1/cmp/monophone.mmf -w /proj/tts/voices/fisher/overarticulated_one_2_copy/models/qst001/ver1/cmp/fullcontext.mmf /proj/tts/voices/fisher/overarticulated_one_2_copy/edfiles/qst001/ver1/cmp/m2f.hed /proj/tts/voices/fisher/overarticulated_one_2_copy/data/lists/mono.list
When you run the command by itself, it just says Killed. Also suspected to be an out-of-memory error because the same voice trained successfully on a machine with more memory.

ERST1
o Processing Data: f1a_0001.cmp; Label f1a_0001.lab
ERROR [+7321] CreateInsts: Unknown label pau
This happened because our full.mlf path was pointing to the mono labels.

o Error in /proj/speech/tools/HTS/htk/bin/HERest with the rest of the command line. Running the command by itself just terminates in Killed. Suspected out of RAM. More info / suggestions on this from the mailing list: http://hts.sp.nitech.ac.jp/hts-users/spool/2014/msg00146.html

o ERROR [+5010] InitSource: Cannot open source file fullcontextlabel
Something is probably missing from one of your lists under data/lists. Make sure those were made properly / re-make if necessary. When you re-start the training, you will actually need to start from the previous step, MN2FL.

CXCL1
o ERROR [+2661] LoadQuestion: Question name RR-Vowel invalid
I had a typo in my custom questions file -- a duplicate definition for question RR-Vowel when the second one was supposed to be RR-Vowel_R.

FALGN

MCDGV
o MCDGV: making data, labels, and scp from fae_0210.lab for GV...Cannot open No such file or directory at Training.pl line 1216, <SCP> line 210.
Every .cmp file in train.scp needs to have a corresponding .lab file in gv/qst001/ver1/fal/. These get created by an alignment step during training. If some utterance is too difficult to align (often because of background noise or errors in the transcription), then the .lab file just won't get created. There are two possible fixes for this:

1. Remove utterances which couldn't align from your train.scp, by checking which .lab files are missing from that directory and then just deleting the corresponding lines in train.scp. Then, pick up the training from this step ($MCDGV). Speech lab students: we have built this into the voice training recipe.

2. Force the training to accept a bad alignment by increasing the beam width ($beam in Config.pm). You can set it to 0 to disable the beam (i.e. infinite beam). Note that wider beams will consume more memory. Then pick up the training from the step right before this one, $FALGN. More info here: http://hts.sp.nitech.ac.jp/hts-users/spool/2014/msg00119.html
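For fix 1, the check for missing .lab files can be scripted. This is a rough sketch and not the lab recipe mentioned above. It assumes train.scp has one .cmp path per line and that each aligned label shares the .cmp file's base name with a .lab extension; the function name split_by_alignment is ours.

```python
import os


def split_by_alignment(scp_lines, fal_dir):
    """Split scp entries into those with and without a .lab file in fal_dir."""
    aligned, unaligned = [], []
    for line in scp_lines:
        path = line.strip()
        if not path:
            continue
        # e.g. /path/to/fae_0210.cmp is looked up as <fal_dir>/fae_0210.lab
        base = os.path.splitext(os.path.basename(path))[0]
        lab = os.path.join(fal_dir, base + ".lab")
        (aligned if os.path.exists(lab) else unaligned).append(path)
    return aligned, unaligned
```

Write the aligned entries back out as the new train.scp (keep a copy of the old one), and look over the unaligned list for noisy audio or transcription errors.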

PGEN1
o Generating Label alice01.lab
ERROR [+9935] Generator: Cannot find duration model X^x-pau+ae=l@x_x/A:0_0_0/B:x-x-x@x-x&x-x#x-x$x-x!x-x;x-x|x/C:1+1+2/D:0_0/E:x+x@x+x&x+x#x+x/F:content_2/G:0_0/H:x=x^1=10|0/I:19=12/J:79+57-10 in current list
Your data/lists/full_all.list does not include information from this gen label. Possibly because you added in gen labels after creating full_all.list. Re-make full_all.list to include all gen labels.
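You can catch this before training by checking the gen labels against the list. The sketch below assumes that full_all.list has one model name per line and that the model name is the last whitespace-separated field on each label line (with or without leading times). The function name labels_missing_from_list is ours.

```python
def labels_missing_from_list(label_lines, model_list_lines):
    """Return label names (in first-seen order) that are absent from the list."""
    known = {line.strip() for line in model_list_lines if line.strip()}
    missing = []
    for line in label_lines:
        fields = line.split()
        if not fields:
            continue
        name = fields[-1]  # assumed: the model name is the last field
        if name not in known and name not in missing:
            missing.append(name)
    return missing
```

If this returns anything for a file under labels/gen, re-make full_all.list before starting the training.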

CONVM
o ERROR [+7035] Failed to find macroname .SAT+dec_feat3
Make sure that your $spkr in scripts/Config.pm is correct.

SEMIT

PGENS

9. Subset selection Scripts and info

We have done a number of preliminary data selection experiments which required finding and writing scripts to select subsets based on different features. These can be re-used on other data sets, or used as an example.

Using Praat for Basic Prosodic Features

We use a Praat script to extract standard acoustic features. You can read more about Praat here: http://www.fon.hum.uva.nl/praat/

Use this script: /proj/speech/tools/speechlab/extract_acoustics_old.pl

Run it as follows:

extract_acoustics_old.pl path/to/your/file.wav 75 500 0 lengthofthefile

75 500 is the default expected pitch range for a female speaker, as documented in the README.

0 lengthofthefile are the start and end times that you want the script to look at. We want to use the entire file. The length of the audio file can be found using SoX:

soxi -d yourwavfile.wav
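If SoX is not available, the length can also be read with Python's standard wave module. This is only a sketch for uncompressed PCM .wav files, and wav_duration_seconds is our own helper name.

```python
import wave


def wav_duration_seconds(path):
    """Return the duration of a PCM .wav file in seconds."""
    with wave.open(path, "rb") as wav:
        # Duration = number of sample frames / sampling rate.
        return wav.getnframes() / wav.getframerate()
```

The value is in seconds; check which time unit extract_acoustics_old.pl expects before passing it as lengthofthefile.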

MACROPHONE

Look in /proj/tts/data/english/macrophone/scripts/ for a number of scripts that select subsets based on different features.

CALLHOME

Files of interest are under /proj/tts/data/english/callhome.

Under datasel/subsets/, longest_* are different-sized subsets of the longest utterances. e.g. longest_15_min.txt is 15 minutes worth of the longest utterances in the corpus (female speakers only). Other similarly-named files are subsets based on other features.

Under datasel/scripts are some of the scripts used to create those subsets.

BURNC

/proj/tts/data/english/brn/datasel contains prepared subsets for a variety of features.

/proj/tts/data/english/brn/datasel/docs contains a few python scripts related to making subsets.

10. Variations voice

Speaker-Adaptive Training Using HTS

Using the HTS SAT demo as-is

If you are dropping in your own data, the main difference is that for each type of data, each speaker gets his or her own directory. When extracting acoustic features, keep in mind that you need to set an appropriate f0 range for each speaker. Use a general male/female range, or even better, pick the range specifically for each speaker.

For synthesis, you have to have gen labels that match the speaker you want to adapt to; see how this is done in the data/Makefile.

Other changes you have to make in data/Makefile:

o DATASET, if applicable.
o TRAINSPKR, ADAPTSPKR, and ALLSPKR: change the list of speakers to the appropriate speaker IDs.
o ADAPTHEAD: change to whatever is appropriate.
o F0_RANGES: change to the correct range for each speaker. We are currently using 110 280 for female speakers and 50 280 for male, but it's better to customize for each speaker if possible.

Changes you have to make in scripts/Config.pm:

o $spkr
o $spkrPat -- the %%% is the mask for the part of the filename that represents the speaker ID.
o $prjdir
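To sanity-check a $spkrPat value against your filenames, the mask can be emulated roughly as below. This is a sketch under the assumption that the mask follows the usual HTK conventions as we understand them: % matches one character and keeps it as part of the speaker ID, ? matches any one character, and * matches any run of characters. HTK's own matching may differ in ambiguous cases. The function names and the example mask in the note are hypothetical.

```python
import re


def mask_to_regex(mask):
    """Translate an HTK-style mask into a compiled regular expression."""
    parts = []
    for ch in mask:
        if ch == "%":
            parts.append("(.)")  # one character, kept as part of the ID
        elif ch == "?":
            parts.append(".")    # one character, ignored
        elif ch == "*":
            parts.append(".*")   # any run of characters, ignored
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts))


def speaker_id(mask, filename):
    """Return the characters matched by % in mask, or None if no match."""
    match = mask_to_regex(mask).fullmatch(filename)
    return "".join(match.groups()) if match else None
```

For example, with the hypothetical mask */x_%%%_* and the file data/cmp/x_bdl_0001.cmp, speaker_id picks out bdl. If it returns None for your training files, the mask does not line up with your naming scheme.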

Synthesizing directly from a SAT-trained AVM without adapting to a specific speaker

Note that this is theoretically not something you should do, since the AVM is in some undefined space until you adapt it to a particular speaker. However, the implementation of AVMs in HTS produces reasonable, average-sounding speech.

Speech lab students: see /proj/tts/examples/HTS-demo_AVM for an example. Modified CONVM and ENGIN steps were added to convert AVM MMFs to the HTS-engine format and synthesize from them. We basically removed everything referring to the transforms since we don't want to use one.

Model Interpolation Using HTS-Engine

If you have two trained models, you can use hts_engine to interpolate between them and synthesize from the result. Here is an example interpolation command, assuming you already have voices trained under directories voice1 and voice2:

hts_engine \
  -td voice1/voices/qst001/ver1/tree-dur.inf -td voice2/voices/qst001/ver1/tree-dur.inf \
  -tf voice1/voices/qst001/ver1/tree-lf0.inf -tf voice2/voices/qst001/ver1/tree-lf0.inf \
  -tm voice1/voices/qst001/ver1/tree-mgc.inf -tm voice2/voices/qst001/ver1/tree-mgc.inf \
  -tl voice1/voices/qst001/ver1/tree-lpf.inf -tl voice2/voices/qst001/ver1/tree-lpf.inf \
  -md voice1/voices/qst001/ver1/dur.pdf -md voice2/voices/qst001/ver1/dur.pdf \
  -mf voice1/voices/qst001/ver1/lf0.pdf -mf voice2/voices/qst001/ver1/lf0.pdf \
  -mm voice1/voices/qst001/ver1/mgc.pdf -mm voice2/voices/qst001/ver1/mgc.pdf \
  -ml voice1/voices/qst001/ver1/lpf.pdf -ml voice2/voices/qst001/ver1/lpf.pdf \
  -dm voice2/voices/qst001/ver1/mgc.win1 -dm voice2/voices/qst001/ver1/mgc.win2 -dm voice2/voices/qst001/ver1/mgc.win3 \
  -df voice2/voices/qst001/ver1/lf0.win1 -df voice2/voices/qst001/ver1/lf0.win2 -df voice2/voices/qst001/ver1/lf0.win3 \
  -dl voice2/voices/qst001/ver1/lpf.win1 \
  -s 48000 -p 240 -a 0.55 -g 0 -l -b 0.4 \
  -cm voice1/voices/qst001/ver1/gv-mgc.pdf -cm voice2/voices/qst001/ver1/gv-mgc.pdf \
  -cf voice1/voices/qst001/ver1/gv-lf0.pdf -cf voice2/voices/qst001/ver1/gv-lf0.pdf \
  -k voice2/voices/qst001/ver1/gv-switch.inf \
  -em voice2/voices/qst001/ver1/tree-gv-mgc.inf \
  -ef voice1/voices/qst001/ver1/tree-gv-lf0.inf -ef voice2/voices/qst001/ver1/tree-gv-lf0.inf \
  -b 0.0 \
  -ow nat_0001.wav nat_0001.lab -i 2 0.5 0.5

hts_engine

Speech lab students: we have this located in /proj/tts/hts-2.3/hts_engine_API-1.09/bin/hts_engine

Options

td, tf, tm, and tl are all decision tree files (for state duration, log f0, spectrum, and low-pass filter respectively). You need to specify these for both voice1 and voice2.

md, mf, mm, and ml are all pdf model files for state duration, log f0, spectrum, and low-pass filter respectively. You need to specify these for both of the voices you are interpolating.

dm, df, and dl are window files for calculating the deltas of spectrum, lf0, and lpf respectively. These should be the same for both voices, so there is no need to specify them twice, but you do need to give win1, win2, and win3 for both dm and df, and just win1 for dl.

cm and cf are filenames for global variance of spectrum and lf0. hts_engine will let you specify these for either just one or both voices, but since they may differ between voices, they should be specified for both.

k is a tree for GV switch. You can specify it for just one or for both voices.

em and ef are decision tree files for global variance of spectrum and lf0. You can specify these for just one or for both voices, but again they will differ between voices, so it is best to specify them for both.

Synthesis

-ow nat_0001.wav -- the output synthesized file.

nat_0001.lab -- the fullcontext label file to synthesize from.

-i 2 0.5 0.5 -- interpolate two voices, with weights each 0.5.

Miscellaneous HTS Commands

Converting binary to ASCII .mmf:

/proj/tts/hts-2.3/htk/HTKTools/HHEd -C ../../../../configs/qst001/ver1/trn.cnf -D -T 1 -H re_clustered.mmf -w re_clustered.ascii.mmf empty.hed ../../../../data/lists/full.list

where empty.hed is just an empty file.
