Value-Added as a Measure of Teacher Impact on Student Achievement

2012 Center of Excellence Research Consortium Francis Marion University

March 22, 2012

Andy Baxter & Jason Schoeneberger
Charlotte-Mecklenburg Schools

3/22/2012 Center of Excellence Research Consortium

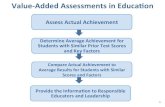

What We Mean by Value-Added

The next slides were created by a 7th Grade Math teacher in Wisconsin in an attempt to explain the rationale for the type of growth measure being adopted by CMS.

The slides can be found at:

http://varc.wceruw.org/tutorials/oak/index.htm

Let’s evaluate the performance of two gardeners.

For the past year, they have been tending their oak trees, trying to maximize the height of the trees.

We keep a yearly record of the height of the trees for evaluation.

To measure the performance of the gardeners, we will measure the height of the trees today (1 year after they began tending to the trees).

Using this method, Gardener B is the superior gardener.

This method is analogous to a Proficiency Model.

Using our records, we can compare the height of the trees one year ago to the height today.

By finding the difference between these heights, we can find how much the trees grew during the year of the gardeners’ care.

We can see that Oak B had superior growth this year.

This is analogous to a Simple Growth Model.

Oak A is in a region that experiences a high level of rainfall. Oak B is in a region with very low rainfall.

Oak A is in a region with poor soil richness. Oak B is in a region with very rich soil.

Oak A is in a region infested with insect pests. Oak B is in a region with very few insect pests.

Now we will adjust for environmental conditions to give an “apples to apples” comparison of the two oak trees. To calculate our new adjusted growth, we need to start with simple growth.

Oak A:
+14 simple growth
- 3 for rainfall
+ 3 for soil
+ 8 for pests
_________
+22 inches adjusted growth

Oak B:
+20 simple growth
+ 5 for rainfall
- 2 for soil
- 5 for pests
_________
+18 inches adjusted growth

This is analogous to an Adjusted Growth Model – another name for Value-Added.
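The adjustment arithmetic on this slide can be checked with a few lines of Python. This is only a sketch: the numbers come from the slide, while the dictionary layout and the function name `adjusted_growth` are illustrative. Pairing +14 with Oak A and +20 with Oak B is an inference from the arithmetic (14 - 3 + 3 + 8 = 22 and 20 + 5 - 2 - 5 = 18).

```python
# Numbers from the slide; the structure and names are illustrative assumptions.
oaks = {
    "Oak A": {"simple_growth": 14,
              "adjustments": {"rainfall": -3, "soil": +3, "pests": +8}},
    "Oak B": {"simple_growth": 20,
              "adjustments": {"rainfall": +5, "soil": -2, "pests": -5}},
}


def adjusted_growth(simple_growth, adjustments):
    """Simple growth plus the sum of the environmental adjustments (inches)."""
    return simple_growth + sum(adjustments.values())


results = {name: adjusted_growth(**oak) for name, oak in oaks.items()}
for name, inches in results.items():
    print(f"{name}: {inches:+d} inches adjusted growth")
```

After adjustment the ranking reverses: Oak B leads on simple growth, Oak A leads on adjusted growth.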

Not all gardeners are the same. Are all teachers?

Section 2

This difference matters for students later in life.

For one year, the difference for a student between having a 50th percentile and an 84th percentile teacher:

• Raises the probability of attending college at age 20 by 0.5 percentage points.
• Difference in lifetime earnings is equivalent to giving each student $4,600.
• Decreased probability of teenage child-bearing.

http://obs.rc.fas.harvard.edu/chetty/value_added.html

Key Question Value-Added Attempts to Answer

2 Teachers, 1 Classroom: What’s the Difference for the Students?

How Value-Added is Calculated (1 of 3)

[Figure: Estimating Teacher Effects. Score plotted by year (2007, 2008, 2009), showing the expected score based on 2 prior scores.]

Step 1: Calculate a student’s expected score.

How Value-Added is Calculated (2 of 3)

[Figure: Estimating Teacher Effects. Score plotted by year (2007, 2008, 2009), showing the expected score based on 2 prior scores, the actual score, and the difference between them (growth/change).]

Step 2: Measure the difference between the actual score and the expected score.

How Value-Added is Calculated (3 of 3)

[Figure: Estimating Teacher Effects. Score plotted by year (2008, 2009, 2010), showing the expected score based on 2 prior scores and the actual score.]

What part of this change derives from these sources?

• Student
• Teacher
• Classroom
• School
• Random

Step 3: Determine how much of the difference is due to the teacher.
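The three steps can be sketched in Python on synthetic data. This is a deliberately simplified illustration and not the model used by CMS: it assumes a plain least-squares fit on two prior scores for Step 1, and in Step 3 it simply averages each teacher's residuals, which ignores the student, classroom, school, and random components listed above. All names, teacher labels, and numbers are hypothetical.

```python
import numpy as np


def expected_scores(prior_1, prior_2, current):
    """Step 1: least-squares fit of the current score on two prior scores.

    Returns the fitted (expected) score for every student.
    """
    X = np.column_stack([np.ones(len(current)), prior_1, prior_2])
    coef, *_ = np.linalg.lstsq(X, current, rcond=None)
    return X @ coef


def mean_residual_by_teacher(residuals, teacher_ids):
    """Step 3, crudely: average the residuals of each teacher's students."""
    return {str(t): float(residuals[teacher_ids == t].mean())
            for t in np.unique(teacher_ids)}


# Synthetic data: 4 hypothetical teachers with 50 students each.
rng = np.random.default_rng(0)
teacher_ids = np.repeat(np.array(["T1", "T2", "T3", "T4"]), 50)
assumed_effect = {"T1": -3.0, "T2": -1.0, "T3": 1.0, "T4": 3.0}
n = teacher_ids.size
prior_1 = rng.normal(350, 10, n)                        # score two years ago
prior_2 = 0.8 * prior_1 + 70 + rng.normal(0, 5, n)      # score last year
current = (35 + 0.3 * prior_1 + 0.6 * prior_2
           + np.array([assumed_effect[t] for t in teacher_ids])
           + rng.normal(0, 3, n))

expected = expected_scores(prior_1, prior_2, current)                  # Step 1
residuals = current - expected                                         # Step 2
teacher_estimates = mean_residual_by_teacher(residuals, teacher_ids)   # Step 3

for teacher, estimate in teacher_estimates.items():
    print(f"{teacher}: mean residual {estimate:+.2f}")
```

Because the synthetic students are assigned to teachers at random, the mean residuals land close to the assumed effects. With real data, non-random assignment is exactly what makes Step 3 difficult.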

Goal of Value-Added Models

• Identify the portion of the residual, or error, that is due to the teacher

• To accomplish this, we have to reduce the influence of, and account for differences associated with other factors: student prior achievement, school climate, etc.
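In equation form, this goal is often written as a regression whose residual is split into a teacher component and a remainder. The notation below is an assumed, simplified form (two prior scores and one covariate vector), not the presenters' own specification:

```latex
y_{ijt} = \beta_0 + \beta_1\, y_{i,t-1} + \beta_2\, y_{i,t-2}
        + \mathbf{x}_{ijt}^{\top}\boldsymbol{\gamma}
        + \underbrace{\tau_j + \varepsilon_{ijt}}_{\text{residual}}
```

Here $y_{ijt}$ is the score of student $i$ taught by teacher $j$ in year $t$, $\mathbf{x}_{ijt}$ collects the other factors being accounted for, $\tau_j$ is the portion of the residual attributed to the teacher, and $\varepsilon_{ijt}$ is what remains.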

Basic Requirements

• Longitudinal data, with test scores from multiple grades for each student

• Tests with good psychometric properties measuring the attribute of interest

• Databases linking students, teachers and schools, with student, neighborhood, family, teacher, etc. characteristics

Accounting for Differences

• Differences exist between students, classrooms and schools

• Inclusion of covariates (school-level % FRL) or fixed effects?

• How do we intend to use the results of the model?
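To make the fixed-effects option concrete, here is a minimal sketch on synthetic data. It relies on a standard regression result: including one dummy variable per school gives the same slope as first subtracting each school's mean from every variable (the "within" transformation). The data, the school shifts, and the function name `demean_within` are all hypothetical.

```python
import numpy as np


def demean_within(values, groups):
    """Subtract each group's mean from its members (within transformation)."""
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    out = np.empty_like(values)
    for g in np.unique(groups):
        mask = groups == g
        out[mask] = values[mask] - values[mask].mean()
    return out


# Synthetic data: 5 hypothetical schools, 40 students each.
rng = np.random.default_rng(1)
schools = np.repeat(np.arange(5), 40)
school_shift = np.array([-4.0, -2.0, 0.0, 2.0, 4.0])[schools]
prior = rng.normal(350, 10, schools.size) + school_shift
current = 50 + 0.85 * prior + school_shift + rng.normal(0, 3, schools.size)

# (a) School fixed effects as explicit dummy variables (no separate intercept).
dummies = (schools[:, None] == np.arange(5)).astype(float)
X = np.column_stack([prior, dummies])
slope_dummies = float(np.linalg.lstsq(X, current, rcond=None)[0][0])

# (b) The same slope from school-demeaned data.
x_w = demean_within(prior, schools)
y_w = demean_within(current, schools)
slope_within = float(x_w @ y_w / (x_w @ x_w))

print(f"dummies: {slope_dummies:.4f}  within: {slope_within:.4f}")
```

Fixed effects absorb every school-level difference, measured or not, which also means a school-level covariate such as % FRL cannot be estimated alongside them. Which option fits depends on how the results will be used.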

Example of Adjusting for Differences

Student: Gender; Age; English Fluency; Exceptional Child Category; Repeating Grade; First Yr in the School; Test Score (2 Prior Yrs); Days Absent; Days in OSS (Prior Year); Days in ISS (Prior Year); McKinney-Vento status; Student mobility; Grade (e.g., 4th); Year (e.g., 2009); Academically Gifted

Classroom: % Male; Avg. Age; % LEP; % EC; % Repeating Grade; % First Yr in School; Avg. Test Score (Prior Yr); Avg. Days Absent; Avg. Days in OSS (Prior Yr); Avg. Days in ISS (Prior Yr); % Academically Gifted; Class Size

School: % Male; Avg. Age; % LEP; % EC; % First Yr in School; School Size; School Type (ES, MS, HS); % School EDS; % School Mobility; % Academically Gifted
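Many of the classroom-level variables are aggregates of the student-level ones. A minimal sketch of that roll-up, assuming a hypothetical record layout (the field names `classroom`, `male`, `prior_score`, and `days_absent` are illustrative and not from the CMS database):

```python
from collections import defaultdict
from statistics import mean

# Hypothetical student records.
students = [
    {"classroom": "C1", "male": True,  "prior_score": 340.0, "days_absent": 2},
    {"classroom": "C1", "male": False, "prior_score": 350.0, "days_absent": 4},
    {"classroom": "C1", "male": True,  "prior_score": 360.0, "days_absent": 0},
    {"classroom": "C1", "male": False, "prior_score": 350.0, "days_absent": 6},
    {"classroom": "C2", "male": True,  "prior_score": 345.0, "days_absent": 1},
    {"classroom": "C2", "male": True,  "prior_score": 355.0, "days_absent": 3},
]


def classroom_aggregates(records):
    """Roll student-level records up to classroom-level covariates."""
    by_class = defaultdict(list)
    for record in records:
        by_class[record["classroom"]].append(record)
    return {
        room: {
            "pct_male": 100 * mean(1 if r["male"] else 0 for r in rows),
            "avg_prior_score": mean(r["prior_score"] for r in rows),
            "avg_days_absent": mean(r["days_absent"] for r in rows),
            "class_size": len(rows),
        }
        for room, rows in by_class.items()
    }


aggregates = classroom_aggregates(students)
for room, values in aggregates.items():
    print(room, values)
```

The same pattern, grouped by school instead of classroom, would produce the school-level percentages and averages.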

Key Data Issues

• Teacher-Student Matches
  – Teacher mobility
  – Student mobility
  – Dynamic models of team teaching
• Missing Data
  – Classification based on patterns (MCAR, MAR, MNAR)
  – Estimate accuracy depends on model used
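Why the missing-data pattern matters can be seen in a small simulation. This sketch uses synthetic scores and two assumed missingness mechanisms: under MCAR every score has the same 30% chance of being missing, while under the MNAR mechanism only below-average scores go missing (with probability 0.6). The probabilities and the score scale are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
scores = rng.normal(350, 10, 10_000)   # synthetic test scores

# MCAR: missingness is unrelated to the score itself.
mcar_keep = rng.random(scores.size) > 0.3

# MNAR (assumed mechanism): low scores are the ones that go missing.
p_missing = np.where(scores < 350, 0.6, 0.0)
mnar_keep = rng.random(scores.size) > p_missing

full_mean = scores.mean()
mcar_mean = scores[mcar_keep].mean()
mnar_mean = scores[mnar_keep].mean()

print(f"all scores:     {full_mean:.2f}")
print(f"MCAR, observed: {mcar_mean:.2f}")
print(f"MNAR, observed: {mnar_mean:.2f}")
```

The MCAR sample stays close to the full mean, while the MNAR sample overstates it. A model that silently drops incomplete records is therefore only safe under the first pattern.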

Key Data Issues

• Omitted Variables
  – We can’t measure everything
  – Confounding occurs when other influences are incorrectly modeled, or not modeled at all
  – Extent of confounding dependent upon the clustering of students/teachers with different characteristics

Key Data Issues

• Achievement Tests
  – Scaling and construction of tests
  – Timing of tests
  – Measurement error present and heteroskedastic
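One consequence of measurement error in test scores is attenuation: when a prior score is measured with noise, its estimated regression slope shrinks toward zero. The simulation below is a sketch under assumed values. The true slope of 0.9 and the error pattern (noisier far from the middle of the scale) are illustrative choices, not properties of any particular test.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 20_000
true_prior = rng.normal(350, 10, n)

# Assumed heteroskedastic error: larger away from the middle of the scale.
error_sd = 3 + 0.3 * np.abs(true_prior - 350)
observed_prior = true_prior + rng.normal(0, error_sd)

# The current score depends on the true prior achievement, not the noisy one.
current = 35 + 0.9 * true_prior + rng.normal(0, 3, n)


def ols_slope(x, y):
    """Slope of a simple least-squares regression of y on x."""
    return float(np.cov(x, y)[0, 1] / np.var(x, ddof=1))


slope_true = ols_slope(true_prior, current)
slope_observed = ols_slope(observed_prior, current)

print(f"slope using true prior:     {slope_true:.3f}")
print(f"slope using observed prior: {slope_observed:.3f}")
```

The slope on the observed prior score is noticeably smaller than 0.9, so the expected scores are mis-estimated, and that error flows into the residuals used to estimate teacher effects.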

3. Does sorting bias the effects?

• High confidence
  – Students to teachers on observables
• Moderate confidence
  – Students to teachers on unobservables
• No confidence
  – If truly highly effective teachers are going to affluent schools, then we are docking them.

Remember the Status Quo

1. In what ways, and to what extent, might principal observations be biased?

2. What is the width of the confidence interval you’d place around a summative evaluation?

3. How reliable are principal observations? (i.e., inter-rater reliability?)

4. How connected are observation results to student achievement results?

Recruiting

Value-Added of Teachers by Undergraduate Institution First 5 Years of Teaching

Notes: 4th-8th grade math and reading teachers with five or fewer years of experience between 1998-99 and 2008-09

[Figure: scatter plot of Reading VAM (vertical axis) against Math VAM (horizontal axis) by undergraduate institution.]

Institutions marked with a red dot have VA in math or reading significantly different from zero.

Development

[Figure: Returns to Experience, Math. Math value-added (days of instruction) with 95% confidence interval, plotted against years of experience (0 through 8, 9-25, and 26+).]

Feedback

How am I doing over time?

How am I doing with different types of students?

Evaluation

[Figure: Correlation of Evaluations to Value-Added Teacher Estimates, CMS Teachers (2010), Math. Teacher effectiveness (days of value-added, +/- 180) plotted against evaluation score (0 to 4). Percentages shown on the plot: 46%, 3%, 1%, 50%.]

Correlation of value-added estimate and evaluation rating is .24. Scores of 3 or higher on evaluation indicate that the teacher performed at or above standard. Value-added estimates above 0 indicate above-average effectiveness.

Retention

[Figure: Trajectory of Effectiveness for Teachers Just Granted Career Status, Middle School C. Panels for Teacher 1, Teacher 2, and Teacher 3 show teacher effect (+/- 180 days of instruction by average teacher) by year (spring 2008, 2009, 2010). Point labels: 51, 65, 80; 30, 19, 31; 31, 32, 65.]

District percentile across all subjects taught by the teacher labels each point.

[Figure axis label: Percentile of Teacher Value-Added Score]

Compensation

Conclusion

• It’s one measure.
• Not perfect.
• But it tells us something meaningful about a teacher’s impact on students, both in the short term and far into adulthood.

Bibliography

• Braun (2005)
• Chetty, Friedman & Rockoff (2011)
• Goldhaber (2011)
• Guarino (2011)
• Hanushek (2003)
• McCaffrey, Lockwood, Koretz & Hamilton (2003)
• McCaffrey, Lockwood, Koretz, Louis & Hamilton (2004)
• Raudenbush (2004)
• Rivkin, Hanushek & Kain (2005)
• Rothstein (2009; 2010)
• Tekwe, Carter, Ma, et al. (2004)
• Todd & Wolpin (2003)

Contact Information

Andy Baxter
Director, Human Capital Strategies
Charlotte-Mecklenburg [email protected]

Jason Schoeneberger
Doctoral Candidate
University of South [email protected]

Add in some other slides on the ceiling effects

Key Identifying Decisions

• How to control for observable and unobservable student characteristics that are related to assignment to a given teacher and to the student’s test score
  – Questions about controls at the student, classroom, and school levels
  – Questions about how to handle time-invariant student heterogeneity

Key Estimating Questions

• Accounting for measurement error in the tests
• Accounting for sampling error
• Number of prior tests
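Sampling error can be illustrated with the standard error of a teacher's mean residual, which shrinks as the number of students grows. The sketch below uses a normal-approximation 95% interval and assumes students' residuals are independent. Both are simplifying assumptions, and the residual values are made up.

```python
import math
from statistics import mean, stdev


def mean_with_interval(residuals, z=1.96):
    """Mean residual with an approximate 95% interval (normal approximation)."""
    m = mean(residuals)
    se = stdev(residuals) / math.sqrt(len(residuals))
    return m, m - z * se, m + z * se


small_class = [2.0, -1.0, 3.0, 0.0]    # hypothetical residuals, 4 students
large_class = small_class * 9           # same values repeated, 36 students

m_small, lo_small, hi_small = mean_with_interval(small_class)
m_large, lo_large, hi_large = mean_with_interval(large_class)

print(f" 4 students: {m_small:+.2f} [{lo_small:+.2f}, {hi_small:+.2f}]")
print(f"36 students: {m_large:+.2f} [{lo_large:+.2f}, {hi_large:+.2f}]")
```

Both classes have the same mean residual, but the estimate from 4 students is far less certain. This is one reason estimates based on few students call for caution.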
