Uncertain Numerical Data Clustering Using VORONOI Diagram ... · Uncertain Numerical Data...

Transcript of Uncertain Numerical Data Clustering Using VORONOI Diagram ... · Uncertain Numerical Data...

Uncertain Numerical Data Clustering UsingVORONOI Diagram and R-Tree With Ensemble

SVM

Dissertation

submitted in partial fulfilment of the requirementsfor the degree of

Master of Technology in Computer Engineering

by

Digambar M. Padulkar

Roll No: 120922015

under the guidance of

Prof. V. Z. Attar

Department of Computer Engineering and Information TechnologyCollege of Engineering, Pune

Pune - 411005.

2012

ii

Dedicated tomy father

Mr. Machhindra D. Padulkar

andmy mother

Mrs. Parvati M. Padulkar

iv

Dissertation Approval Sheet

This is to certify that the dissertation titled

Uncertain Numerical Data Clustering Using VORONOI Diagram andR-Tree With Ensemble SVM

has been successfully completed

By

Digambar M. Padulkar(120922015)

and is approved for the degree of Master of Technology in ComputerEngineering.

Prof. V. Z. Attar(Guide)

Dr. Jibi Abraham(H. O. D.)

External Examiner

Date :

Place : College of Engineering, Pune

vi

Abstract

Recently data mining over the uncertain data grabed more attention of the data miningcommunity. To classify or cluster the valid or certain data, there are different approacheslike DTL, Rule based Classification, Naive Bayes Classification and many more tech-niques. Its easy to classify the certain data but classification of the uncertain data is bitdifficult. The uncertainty occurs in the a data because of the impresize measurementof the results, like scientific results, data from sensor netowork, measuring temperature,humidity, pressure and so on. From such a sources there is possibility of getting theuncertainty in a data. Main task is to handle the uncertainty of the data in order toclassify or cluster it. It comes under the NP-Hard problems. Solving this problem,different approaches are available. We study the problem of clustering and classifica-tion of uncertain objects whose locations are described by probability density functions(pdf) means valued uncertainty. We show that the averaging algorithm with K- Meanalgorithm, which generalises the k-means algorithm to handle uncertain objects, is veryinefficient. The inefficiency comes from the fact that it does the averaging of the rangeof uncertain attribute. In UK-means, an object is assigned to the cluster whose repre-sentative has the smallest expected distance to the object. For arbitrary pdfs, expecteddistances are computed by numerical integrations, which are costly operations. Previousliterature has applied bounding-box-based techniques to reduce the number of ExpectedDistance(ED) calculation. We use pruning techniques that are based on Voronoi dia-grams to further reduce the number of expected distance calculation. These techniquesare analytically proven to be more effective than the basic bounding- box-based tech-nique previously known in the literature. We use R-tree index to organise the uncertainobjects in groups so as to reduce pruning overheads. We conduct experiments to evalu-ate the effectiveness of our novel techniques, and extend the studies to different datasets.We show that these techniques are additive and, when used in combination, significantlyoutperform previously known methods.

vii

viii

Acknowledgments

I express my sincere gratitude towards my guide Prof. V. Z. Attar for her constanthelp, encouragement and inspiration throughout the project work. Without her invalu-able guidance, this work would never have been a successful one. I am also thankfulto Prof. A. A. Sawant , Prof. Dr. J. V. Aghav for their constant support andmotivation. I am also thankful to the Principal Vidya Pratishthans College of Engi-neering Baramati,Dr. S. B. Deosarkar for providing me the opportunity to pursuemy M. Tech. Program. My special thanks to my friend Prof. D. A. Zende withouthis support it could be a dream. I would also like to thank those who supported me tofinish the work. I am also thankful those who supported me directly, indirectly from mycollege and society. I express my siencere gratitude towards my parents, without theirblessings this work could not be possible. at last but not the least I am thankful to HODComputer Engineering and Information technology Department College of EngineeringPune Dr. Jibi Abraham and Director, Dr. A. D. Sahastrabuddhe for providinghealthy academic environment.

Digambar M. PadulkarCollege of Engineering, Pune

May 21, 2012

ix

x

Contents

Abstract vii

Acknowledgments ix

List of Figures xiii

1 Introduction: Uncertain Data 11.1 Uncertain Numerical Data . . . . . . . . . . . . . . . . . . . . . . . . . . 11.2 Clustering Approaches on Uncertain Data . . . . . . . . . . . . . . . . . 21.3 Problem Defination . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31.4 Challenges . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.4.1 Handling Uncertain Numerical Data . . . . . . . . . . . . . . . . 31.5 Thesis Objective and Scope . . . . . . . . . . . . . . . . . . . . . . . . . 31.6 Thesis Outline . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

2 Literature Survey 52.1 Uncertainty in a Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2.1.1 Uncertain Data Mining Application . . . . . . . . . . . . . . . . 72.2 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

3 Clustering and Classification of Uncertain Data 93.1 Clustering System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

3.1.1 Cluster formation for Uncertain Data . . . . . . . . . . . . . . . 103.1.2 VORONOI Diagrams . . . . . . . . . . . . . . . . . . . . . . . . 113.1.3 R-Tree Indexing . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.2 Classification System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

4 Experiments and Results 154.1 Dataset Used . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154.2 MBR formation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154.3 Clustering using VORONOI Diagram . . . . . . . . . . . . . . . . . . . 184.4 Classification Result . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

4.4.1 Clsuter Average Distance and Clssification Accuracy . . . . . . . 19

5 Conclusion and Future Work 255.1 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255.2 Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265.3 Future Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Bibliography 29

xii

List of Figures

3.1 Clustering process block diagram . . . . . . . . . . . . . . . . . . . . . . 93.2 Sample VORONOI Diagram . . . . . . . . . . . . . . . . . . . . . . . . . 123.3 Structure of R-Tree . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133.4 Classification process block diagram . . . . . . . . . . . . . . . . . . . . 14

4.1 MBR formation using R-Tree Method for synthetic data . . . . . . . . . 164.2 MBR formation using R-Tree Method for real dataset long-4 . . . . . . 164.3 MBR formation using R-Tree Method for real dataset 100ml-4-I . . . . . 174.4 VORONOI Diagram Clustering for synthetic data . . . . . . . . . . . . 184.5 VORONOI Diagram Clustering for real dataset long-4 . . . . . . . . . . 194.6 VORONOI Diagram Clustering for real dataset long-4 . . . . . . . . . . 204.7 VORONOI Diagram Clustering for real dataset 100ml-4-I . . . . . . . . 204.8 Result of individual and Hybrid SVMs on synthetic dataset . . . . . . . 214.9 Average cluster distance VS Accuracy of classification on synthetic dataset 214.10 Result of Individual and Hybrid SVM on real dataset long-4 . . . . . . . 224.11 Result of Individual and Hybrid SVM on real dataset 100ml-4-I . . . . . 224.12 Result of Avg. Cluster Dist. VS Acuuracy of SVM on real dataset long-4 234.13 Result of Avg. Cluster Dist. VS Acuuracy of SVM on real dataset 100ml-

4-I . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234.14 Result of Avg. Cluster method vs. SVM with VORONOI diagram on

real dataset long-4 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

xiv

Chapter 1

Introduction: Uncertain Data

1.1 Uncertain Numerical Data

Clustering is a technique that has been widely studied and applied to many real- lifeapplications. Many efficient algorithms, including the well known and widely appliedk-means algorithm, have been devised to solve the clustering problem efficiently. Tradi-tionally, clustering algorithms deal with a set of objects whose positions are accuratelyknown. The goal is to find a way to divide objects into clusters so that the total dis-tance of the objects to their assigned cluster centres is minimised. Although simple, theproblem model does not address situations where object locations are uncertain. Datauncertainty[1][2], however, arises naturally and often inherently in many applications.For example, physical measurements can never be 100 percent precise in theory (dueto Heisenbergs Uncertainty Principle). Limitations of measuring devices thus induceuncertainty to the measured values in practice. As another example, consider the ap-plication of clustering a set of mobile devices. By grouping mobile devices into clusters,a leader can be elected for each cluster, which can then coordinate the work within itscluster. For example, a cluster leader may collect data from its clusters members, pro-cess the data, and send the data to a central server via an access point in batch. In thisway, local communication within a cluster only requires short-ranged signals, for whicha higher bandwidth is available. Long-ranged communication between the leaders andthe mobile network only takes place in the form of batch communication. This resultsin better bandwidth utilisation and energy conservation.

We remark that device locations are uncertain in practice. A mobile device may[1][2][10]deduce and report its location by comparing the strengths of radio signals from mobileaccess points. Unfortunately, such deductions are susceptible to noise. Furthermore,locations are reported periodically. Between two sampling time instances, a locationvalue is unknown and can only be estimated by considering a last reported value andan uncertainty model. Typically, such an uncertainty model considers factors such asthe speed of the moving devices and other geometrical constraints (such as road net-work, etc.). In other applications (such as tracking animals using wireless sensors), thewhereabouts of objects are sampled. The samples of a mobile object are collected toconstruct a probability distribution that indicates the occurrence probabilities of theobject at various points in space. In this paper we consider the problem of cluster-ing uncertain objects whose locations are specified by uncertainty regions over whicharbitrary probability density functions (pdfs) are defined.

1

Chapter 1. Introduction: Uncertain Data

1.2 Clustering Approaches on Uncertain Data

Traditional clustering methods were designed to handle point-valued data and thuscannot cope with data uncertainty. One possible way to handle data uncertainty is tofirst transform uncertain data into point-valued data by selecting a representative pointfor each object before applying a traditional clustering algorithm. For example, thecentroid of an objects pdf can be used as such a representative point. However, in[3],it is shown that considering object pdfs gives better clustering results than the centroidmethod.

In this project we concentrate on the problem of clustering objects with valueduncertainty. Rather than a single point in space, an object is represented by a prob-ability density function (pdf) over the space Rm being studied. We assume that eachobject is confined in a finite region, so that the probability density outside the regionis zero. Each object can thus be bounded by a finite bounding box. This assumptionis realistic because, in practice the probability density of an object is high only withina very small region of concentration. The probability density is negligible outside theregion. (For example, the uncertainty region of a mobile device can be limited by themaximum speed of the device.)

The problem of clustering uncertain objects was first described in [7], in which theUK-means algorithm was proposed. UK-means is a generalisation of the traditionalk-means algorithm to handle objects with uncertain locations. Traditional k-meansclustering algorithm (for point-valued data) is an iterative procedure. Each iterationconsists of two steps. In step 1, each object Oi is assigned to a cluster whose rep-resentative (a point) is the one closest to Oi among all representatives. We call thisstep cluster assignment. In step 2, the representative of each cluster is updated by themeans of all the objects that are assigned to the cluster. In cluster assignment, thecloseness between an object and a cluster is measured by some simple distance suchas Euclidean distance. The difference between UK-means and k-means is that underUK-means, objects are represented by pdfs instead of points. Also, UK-means computesexpected distances (EDs) between objects and cluster representatives instead of simpleEuclidean distances during the cluster assignment step. Since many EDs are computed,the major computational cost of UK-means is the evaluation of EDs, which involvesnumerical integration using a large number of sample points for each pdf. To improveefficiency[1][2] introduced some pruning techniques to avoid many ED computations.The pruning techniques make use of bounding boxes over objects as well as the triangleinequality to establish lower- and upper-bounds of the EDs. Using these bounds, somecandidate clusters are eliminated from consideration when UK-means determines thecluster assignment. of an object. The corresponding computation of expected distancesfrom the object to the pruned clusters are thus not necessary and are avoided.

An important contribution of this project is the introduction VORONOI diagram.prunning in UK-means algorithm that are based on Voronoi diagrams[1][2]. These prun-ing techniques take into consideration the spatial relationship among the cluster rep-resentatives. The Voronoi-diagram-based technique is theoretically more effective thanthe basic bounding-box-based technique. Indeed,pruning techniques are so effective thatover 95 percent of the ED evaluations can be eliminated. With a highly effective pruningmethod, only few expected distances are computed and thus the once dominating EDcomputation cost no longer occupies the largest fraction of the execution time. Theoverheads incurred in realizing the pruning strategy (e.g., the testing of certain pruningconditions) now become relatively significant. To further reduce execution time, we have

2

1.3 Problem Defination

used a performance boosting technique based on R-trees index. Instead of treating theuncertain objects as an unorganised set of objects, as in the basic k-means algorithmand derivatives, we index the uncertain objects using a bulk-loaded R-tree[2]. Each nodein the R-tree represents a rectangular region in space that encloses a group of uncertainobjects. The idea is to apply Voronoi-diagram-based pruning techniques on an R-treenode instead of on each individual object that belongs to the node. Effectively, we prunein batch. Our experiment shows that using an R-tree[2] significantly reduces pruningoverheads. It is important to note that these two types of techniques have different goals.The Voronoi-diagram-based techniques aim at reducing the amount of ED calculations.at the expense of some pruning cost. The R-tree-based booster aims at reducing thispruning cost, which becomes dominant once the number of ED calculations have beentremendously reduced.

Since pruning techniques are orthogonal to the ones proposed in[1][2], it is possibleto integrate the various pruning techniques to create hybrid algorithms. Our empiricalstudy shows that the hybrid algorithms achieve significant performance improvement.

1.3 Problem Defination

Let D be the m dimensional dataset over Rm and contains n data records. Each itemin the dataset is uncertain with valued uncertainty with the range [min,max], min-minimum value of specifird range and max- maximum value of the specified range. Taskis to apply Probability Density Function(PDF) and cluster the items into ten clusters.supply this clustered items to other classification model and generate the classificationresult.

1.4 Challenges

1.4.1 Handling Uncertain Numerical Data

Handling the uncertain numerical data is the main motive of this study. the datauncertainty occurs is of two types called as existensial uncertainty and value uncertainty.existential uncertainty occurs when it is uncertain whether an object or data tuple exists.for example, a data tuple in a data set can be associated with the probability[6]. thevalue uncertainty occurs when the tuples are known to exist but their values are notknown precisely. the uncertainty with a value uncertainty generally represented by aprobabibity density function(PDF) over the finite and bounded region over the space.there are different techniques which will do the clustering of data, but the existingclustering techniques will not applied directly to handle the uncertain numerical data.so here we propose a system which will find or fix the region of the uncertain objectover the space and then apply the clustering techniques on the uncertain data objectsbounded by some region. Once we fix the region its easy to apply the spatial clusteringtechniques on it so that we cluster the records.

1.5 Thesis Objective and Scope

This work aims at improving the performance of uncertain numerical data clusteringand classification in terms of accuracy in classification. In the previous attempts forclustering and classification of uncertain numerical data the usual algorithms like k-mean cannot apply directly for clustering. So the specified treatment is required to

3

Chapter 1. Introduction: Uncertain Data

the uncertain numerical data in terms of the Probability Density Function (PDF). Sothat we fix the region where probability of having particular record is non zero. Themain focus of this work is to present the solution for clustering uncertain numerical dataand then classify it and show that accuracy of classification depends upon the clusteraverage distance, by improving the cluster quality. The implementation of clusteringand classification algorithms is carried out in a java language with the help of WEKA[17]and KEEL Miner[18] tool and results are discussed. The scope of this work is limited touncertain numerical data only at a large extent. The effect of uniform and non uniformdistribution is not considered.

1.6 Thesis Outline

Thesis is organized into five chapters, chapter 1. describes about the uncertain nu-merical data, their clustering algorithms. then it describes the major challenges to behandled in terms of valued uncertainty of the dataset. chapter 2. is all about the lit-erature survey which describes the VORONOI diagrams, R- Tree indexing method andMinimum Bounding Rectangles. Chapter 3. describes about what kind of the clusteringand classification techniques used in this project. For clustering approach it describesthe VORONOI diagrams and R-Tree with MBR and for classifiacation it describes theensemble SVM approach. chapter 4,5 expalains about the experimental work we havecarried out and conclusion and future work we listed respectively.

4

Chapter 2

Literature Survey

To cluster the uncertain data, [4] devised the approach with assumption that every tupleshould have a continuos probability Distribution Function(Here after called as PDF) andevery data tuple having mulitple instances, like, xi(x1 − − − −xn). So the stream ofthe data comming in the form of (Dt, f) where the t is the time stamp and D is thedata, f is the probability density function. There may be different instances of the givendata tuple like x1, x2, x3 −−−−−−xn then it calculates the instance uncertainty andtuple uncertainty. Based on these two uncertianty metrics and the multidimensionaldata, having the dimension d it generates the Probability Cluster Feature (Here afrecalled as PCF). The PCF is calculate based on the above two calculation for instanceuncertainty and tuple uncertainty. After that uses the Lumicro algorithm. Based onthe above mentioned PCF it will add it to the desired cluster whose centroid is morenearer to the data we are adding. The Lumicro algorithm works as follows, initially thealgorithm will start with the number of null clusters and initially creates a singletoneclusters to which the number of poits are added subsequently. The Lumicro implementsthe k-NN strategy for the points to be in the clusters. The Lumicro algorithm is havingthe linear growth as that of the Umicro[4] but the basic difference between the Umicroand Lumicro is that in Umicro it used seperate dimensions for data and error, meansboth the things are handled differently, this is the overhead, its reduced in the Lumicroalgorithm.

According to [4] it clusters the uncertain data receiving in the form of the stream.The data received is multidimensioanl as x1 − − − − − −xd with d dimension. As thedata is uncertain its associated with the some sort of the error (Dt + r) here Dt is adata received at time t, and r is the error associated with it. It taken as (Data, error)in combination. So the error is treated as seperately. It uses the algorithm callled asUmicro algorithm, this algorithm will take the input as a pair of (Data, error). This isapplying the approach of micro clustering. As we compre it with the previous algorithmLumicro algorithm, the Umicro will take more time as it processes the records in thepair of (data, error). But that is not happening in the Lumicro algorithm. Again inthis it is going to calculate the Expection, for expected diatance from centroid. TheExpection is applied on the each and every record in order to cluter them properly. Itsincorporate the time decay its important for the stream of data(online data). Commingfrom the sources like satellite or sensor networks to measure the pressure, tempreture,humidity etc. The arrival time very important, while applying the Expection on therecords to calculate the Expected diatance the time decay is important, that not usedin the paper [3]to cluster the records efficiently. In order to cluster the records correctlyin the it calculates the Expected Cluster Features(ECF) and on the basis of the ECF it

5

Chapter 2. Literature Survey

classify the records. The Umicro algorithm used in this is more efficient than the Umeanalgorithm(Uncertain Mean).

According to [2] they have used the approach called as Voronoi diagrams and R- Treeindexing. To cluster the uncertain data by using UK mean and Umicro the Expectioncalculation for every data record is must[3, 4] without the Expection calculation of therecords its nt possible to cluster the data points into specific cluster. But in the [2]the approach is different, they have added the Voronoi diagrams, and R- Tree indexingfor clustering the records in the specific clusters. In UK Mean algorithm it has tocalculate the Expected Distance (ED) between objects and their cluster representativesfor arbitrary PDF’s the ED’s calculations are numerical integrations so that which arecostly. By using Voronoi diagrams and prunning techniques we can reduce the ED’s.With the help of R- Tree (varient of the B+ Tree). The R- Tree nodes represents theuncertain data with their multiple values.(only numeric uncertain values are considered).So the every node in tree are indexed with certain number and according that numberthe Voronoi diagrams are represented, and then clusters are formed. This more efficientapprach than the[4] and [4]

In order to cluster the data point by using approximation algorithm its a very promi-nat way to do the clustering with approximation algorithms[5] in this they applied theapproach of the ε-approximation algorithm. They have used K mean, Kmedian algo-rithm, with the approach of approximation. As the factor ε > 0 as its value increase themore the efficiency so that they have taken the 1 + ε, and again it uses the importantfactor called as PDF for the same.

To classify the uncertain data by using Decision Tree Learning[15]. Just they haveextended the work of the traditional decision tree to handle the uncertainty in thedata by using two approaches called as averaging and Ditribution, in this paper also anumerical uncertain data is considered for calssification. To calssify the records withthe first approach of averaging the the range given in the records as uncertain. Butits not that much efficeint to classify the records correctly in the desired class. But byusing the another approach call as the distribution we can classify the records correctly.But we require prunnung the tree. To classify the data by using Rule based classifiers[6] proposes the rule based classifier for the uncertain data, it consider the texttual aswell as numerical data. The total rule generation is depends upon the PDF. For eachentry into the record set the PDF is calculated for uncertain attribute and then theProbabilty Cadinality(Here after called as PC) is taken for each record and accordingto the PC. According to that it generate the adjuncy matrix it contains the PC’s andit will start the Rule generation by using Urule Algorithm, at the same time it appliesthe rule prunning on it so that more and more correct rules will be generated. On theany instance of the record it gets the two probable values, which is for positive class andnegative class. Accordingly that record will go to the specific class.

2.1 Uncertainty in a Data

To represent uncertain data, The possible worlds model is commonly used[13][14], wheredata uncertainty is modelled by a set of possible instances. A more formal represenata-tion of the The possible worlds model can be observed in a probabilistic database, whichis a finite space whose outcomes are all possible instances consistent with a given schema.Each possible instance is associated with a probability of existence, with the sum of allinstances probability equal to 1. Representing uncertain data with The possible worldsmodel, however, is not feasible for its large number of possible instances.

6

2.1 Uncertainty in a Data

In view of the shortcoming with The possible worlds model, simplified representa-tions such as Probabilistic tables[13] and Probabilistic or a set tables[13] are developedto model uncertain data. The two tables have very different objectives in modelinguncertain data. Probabilistic tables focus on modeling the existence of a particulartuple, to determine the probability of a tuple with all attributes value specified. Prob-abilistic or-set tables ,unlike Probabilistic tables, focus on tuples that are known toexist, but the attributes value are unknown. In or-set tables, each attribute has apossible value set. A tuple is composed by picking one possible value from each at-tribute. For example, if a transaction containing attributes A,B,C are known to exist,but the value of each attribute is not known. A or-set representation of this transactionwould be a1,a2,a3,b1,b2,c1,c2, where one of the possible candidate for this transactionis (a2,b2,c1). The Probabilistic tables and Probabilistic or set tables modelled 2 majorclasses of data uncertainty, namely the existential uncertainty and value uncertainty.

Existential uncertainty appears when it is uncertain whether an object or a datatuple exists. For example, a data tuple in a relational database could be associatedwith a probability that represents the confidence of its presence[1][2][3]. Existentialuncertainty also considered issues such as tuple independence i.e. whether the presenseor absense of a tuple would affect another tuples prsense or absense, and whether thereare mutual exclusive relationship between certain tuples. Value uncertainty, on the otherhand, appears when a tuple is known to exist, but its values are not known precisely. Adata item with value uncertainty is usually represented by statistical parameter such asvariance or, for spatial data, a pdf over a finite and bounded region of possible values.In this paper, we study the problem of clustering objects with value (e.g., location)uncertainty where the entire pdf is known. One well-studied topic on value uncertaintyis imprecise query processing. An answer to such a query is associated with a probabilisticguarantee on its correctness. Some example studies on imprecise data include indexingstructures for ran query processing, nearest neighbour query processing, and impreciselocation- dependent query processing.

2.1.1 Uncertain Data Mining Application

The growing interest in uncertain data has led to many interesting mining applications.Traditional data mining algorithms are unable to cope with uncertain data, many newalgorithms are devised to mine uncertain data. The problem of frequent pattern min-ing for precise data is extended to uncertain data[6]. Each item of a transaction isassociated with a probability of existence. Un- like traditional frequent pattern miningproblem, support of a given pattern is defined by counting the number of transactionsthat contain it. In the uncertain data model, the support of a given pattern is definedprobabilistically, where each transaction shows a degree of support for a given patternby a probability, which is calculated by multiplying the probability of different items in atransaction. If the probabilistic support for a given pattern considering all transactions isno less than a user specified threshold, then that pattern is defined as frequent. Supportvector machines classification of uncertain data is also proposed[11]. The new modelconsiders input data be corrupted with noise. These noise should,therefore, be takeninto account to model the underlying input as a hidden mixture component. The pro-posed model has a natural and intuitive geometric interpretation, where margin createdby support vector machine be modified by uncertain points near the margin. Uncertaindata is also considered in decision tree construction[15]Instead of building decision treeon precise numeric data, set of sample points, taken from the probability density func-

7

Chapter 2. Literature Survey

tion(pdf ) of a numeric variable, is used. The objective of considering uncertain data inbuilding decision tree is to improve the accurcacy in predicting class label by consideringthe pdf of the numeric variable. The challenge of building such decision tree is on findingthe split point of variables for internal node of the decision tree, and different pruningtechnique is used to effectively reduce the computation cost. Uncertain data also poseschallenge to the traditional outlier detection problem[12, 13, 14]. Adding uncertainty toall data points change the distribution of the data and hid- ing the real outliers. Theproblem is more challenging when data dimensionality increases. Outliers are harder tocorrectly identified; data with high dimensionality is inherently sparse and every pair ofpoints will appeared to be equidistant. A density based approach is employed to detectoutliers in subspace where the density of data is lower than a user defined threshold. Inthe next section, we will discuss issues related to clustering of uncertain data.

2.2 Summary

Our approach to cluster and classify the uncertain numerical data adds that, VORONOIdiagram base clustering uncertain numerical data saves computational time of the algo-rithm as well as use of R Tree boosts the performance of the clustering process, becauseof R- Tree regerously iterates over the different iterations and generte the intermediate,leaf nodes and root node of the tree, which helps to form the MBRs. and hence itimproves the performance of the clustering process.

8

Chapter 3

Clustering and Classification ofUncertain Data

The whole system is divided into two parts, clustering and classification. these two aredescribed as bellow.

3.1 Clustering System

The Figure 3.1 shows the clustering process carried out. The block diagram expliansabout the clustering process how that is carried out. Uncertain numerical data is sup-plied to the PDF generator algorithm, which generates the PDF’s for all the recordsthose who falls in valued uncertainty.

Figure 3.1: Clustering process block diagram

As shown in the above figure the the generated PDFs are bulk loaded into the R-Tree[2], which goes through the different iterations and fix the final root node for the tree.all the PDFs are stored into the leaf node of the tree and all other intermediate nodesincluding the root node represents some Bounding box called as Minimum BoundingRectangle(MBR)[2], which holds more than one records from the given dataset.As theprocess of MBR generation finishes our next starts called as prunning process with thehelp of the VORONOI diagram. this process fixes the centroid from the given datasetonly and passes over reading the MBRs. If that MBR completely lies inside one the cellthen there is no need to find out EDs for all the data items present into the same MBR,that is the biggest advantage of using the VORONOI diagrams for clustering process.So that when we compare the UK- mean algorithm do the clustering of uncertain data.it passes every data records through the K number of iterations as K cluters are assumed

9

Chapter 3. Clustering and Classification of Uncertain Data

to be generated there. so the total time requirement of the UK-Mean[4] theoraticallycome to O(kXn), where the n- number of records and K- number of cluters want to begenerated.

As expalined previous the MBR represents the more than one uncertain data recordso that, number of EDs calculated will less as compared to the previous algorithm, thisis the major reason of chosing VORONOI diagram for clustering uncertain numericaldata. Probably we are generating ten clusters because we want to use ten SVMs forclassification purpose. so next step start called as classification process.

This is the first part of the whole system in which we are applying clustering tech-niques on the uncertain data. with a algorithms like k-means its difficult to cluster theuncertain numerical data, directly we cant apply any clustering algorithms available onthe uncertain data. so before proceeding to the clustering algorithms we are findingthe PDF’s for all the data records and their attributes present into the dataset. oncewe generate the PDF for all the records that is supplied to the R- Tree[2][1] which assimillar as that of the B+ tree. all the pdfs are supplied to the R-Tree as a input andR-Tree modify itself through the different iterations so that it fixes the final root for thetree. all the leaf nodes of the tree represents the pdf values for the data records. andall the intermediate nodes represents the Bounding Boxes called as Minimum BoundingBox Rectangles(MBRs)[2], which may hold more than one records from the dataset.this process generated MBRs represented by the interanl nodes of the tree, finally thatis represented in terms of the spatial data. this spatial data supplied as a input tothe VORONOI diagrams[2], the property of the VORONOI diagram is to seperate thepoints with distinguished boundaries. the main task is to supply this MBRs to theVORONOI diagrams and check for fisibility where the addition of this MBR is possible,to that VORONOI cell that MBR is added. the benefit doing the clustering based uponthe MBRs rather than seprate records is, it avoids the Expected distance calculation foreach of the records. because we know that each of the MBR holds at leat one records soit reduces the ED calculation while doijng the clustering of uncertain numerical data.we are gernating ten clusters over here because in one of the next step want employeeten different Support Vector Machines (SVM) for the classification purpose. this newlygenerated clusters are supplied as input to the SVM as a classification tool. we are usingthe ensemble approach of SVM classification here in order to improve the classificationaccuracy of model.

3.1.1 Cluster formation for Uncertain Data

Depending on the application, the result of cluster analysis can be used to identify the(locally) most probable values of model parameters[1] (e.g., means of Gaussian mix-tures), to identify high-density connected regions [12][13] (e.g., areas with high popula-tion density), or to minimise an objective function (e.g., the total within-cluster squareddistance to centroids). For model parameters learning, by viewing uncertain data as sam-ples from distributions with hidden parameters, the standard Expectation-Maximisation(EM) framework[12] can be used to handle data uncertainty[13].

There has been growing interest in clustering of uncertain data. In[3], the well-known k-means clustering algorithm is extended to the UK-means algorithm for clus-tering uncertain data. In that study, it is empirically shown that clustering results areimproved if data uncertainty is taken into account during the clustering process. As wehave explained, data uncertainty is usually captured by pdf s, which are generally rep-resented by sets of sample values. Mining uncertain data is therefore computationally

10

3.1 Clustering System

costly due to information explosion (sets of samples vs. singular values). To improvethe performance of UK-means, CK-means introduced a novel method for computingthe EDs efficiently. However, that method only works for a specific form of distancefunction. For general distance functions, takes the approach of pruning, and proposedpruning techniques such as min-max-dist pruning. In this thesis, we follow the pruningapproach and use pruning techniques that are significantly more powerful.

Apart from studies in partition-based uncertain data clustering, for density-baseddclustering, two well-known algorithms, namely, DBSCAN and OPTICS have been ex-tended to handle uncertain data. The corresponding algorithms are called FDBSCAN[12]and FOPTICS[13], respectively. In DBSCAN, the concepts of core objects and reach-ability are defined. Clusters are then formed based on these concepts. In FDBSCAN,the concepts are redefined to handle uncertain data. For example, under FDBSCAN, anobject Oi is a core object if the probability that there is a good number of other objectsthat are close to o exceeds a certain probability threshold. Also, whether an object y isreachable from another object x depends on both the probability of y being close to xand the probability that x is a core object. FOPTICS takes a similar approach of usingprobabilities to modify the OPTICS algorithm to cluster uncertain data.

Clustering of uncertain data is also related to fuzzy clustering, which has long beenstudied in fuzzy logic[12]. In fuzzy clustering, a cluster is represented by a fuzzy subsetof objects. Each object has a degree of belongingness with respect to each cluster.The fuzzy c-means algorithm is one of the most widely used fuzzy clustering methods.Different fuzzy clustering methods have been applied on normal or fuzzy data to producefuzzy clusters. A major difference between the clustering problem studied in this paperand fuzzy clustering is that it focus on hard clustering, for which each object belongsto exactly one cluster. Our formulation targets for applications such as mobile deviceclustering, in which each device should report its location to exactly one cluster leader.

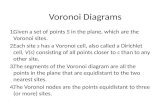

3.1.2 VORONOI Diagrams

Voronoi diagram is a well-known geometric structure in computational geometry[1][2]. Ithas also been applied to clustering. Voronoi diagram is employed to identify the naturalnumber of clusters from the input data points[2]. For n number of input data, all ofthem are used to construct a Voronoi Diagram with n Voronoi cell. Smaller Voronoi cellsindicates they are closer its neighbors than those with larger voronoi cells. By mergingdensed region (smaller voronoi cells) into clusters, the natural number of clusters canbe obtained. Voronoi trees[2] have been proposed to answer Reverse Nearest Neighbour(RNN) queries. Given a set of data points and a query point q, the RNN problem is tofind all the data points whose nearest neighbour is q. Voronoi Diagram is also used ink-means clustering. The methodology is as follows: first, construct a voronoi diagramof k anchor points. Then, input data points located in one voronoi cell will be addedto the cluster associated with the anchor point of that cell. We can do so because ofthe following property: any point in a voronoi cell should be closer to the cells anchorpoints than all other anchor points. Our studies extends this method of clustering pointdata to uncertain data. Following figure 3.2 represents sample VORONOI diagram.

Suppose that in the D- dimensional region Rm we have set of P uncertain datapoints, we have set C of centers and distance function d(Pi, Cj) A Voronoi diagram V(P,C, d(*, *)) assigns each data point pi to the center ci with smallest distance d(pi, cj).This induces a partition of the data points into clusters. For the continuous case, itcan be more natural to refer to regions rather than clusters. in both cases, we mean

11

Chapter 3. Clustering and Classification of Uncertain Data

Figure 3.2: Sample VORONOI Diagram

the subsets of the data points that are closest to a particular center. Assuming weare using the distance function, All regions are convex (no indentations). All regionsare polygonal. Each region is the intersection of half planes, The infinite regions havecenters on the convex hull. In some ways, a Voronoi diagram is just a picture; in otherways,it is a data structure which stores geometric information about boundaries, edges,nearest neighbors, and so on. How would you compute and store this information in acomputer? It’s almost a bunch of polygons, but of different shapes and sizes and ordersand some polygons are infinite”. If you need to compute the exact locations of vertices’sand edges, then this is a hard computation, and you need someone else’s software. Thevoronoi diagram seems to exhibit the ordering imposed by a set of center points thatattract their nearest points. However, since the placement of the centers is arbitrary,the overall picture can vary a lot. The shape and size of the regions in particular seemsomewhat random. Is there a way that we can impose more order? Yes! ... if we arewilling to allow the centers to move.

The voronoi diagram is really a snapshot” of the situation at the beginning of onestep of the continuous K-Means algorithm, when we have updated the clusters. Asmentioned in the previously, we can take a given set of centers with a disorderly” Voronoidiagram, and repeatedly replace the centers by centroids. This will gradually producea more orderly pattern in which the clusters are roughly the same size and shape, andthe centers are truly at the center. The key to this approach is to be able to computeor estimate the centroid of a set of points that are defined implicitly by the nearnessrelation. This algorithm needs the centroid (xi, yi) of each cluster Ci . If Ci is a polygon,we use geometry to find the centroid.

3.1.3 R-Tree Indexing

An R-tree[2] is a self-balancing tree structure that resembles a B+-tree, except thatit is devised for indexing multi-dimensional data points to facilitate proximity-based

12

3.2 Classification System

searching, such as k-nearest neighbour (kNN) queries. R-trees are well studied andwidely used in real applications. They are also available in many RDBMS productssuch as SQLite, MySQL and Oracle.

The sample R-Tree[2] is shown in the following figure3.3

Figure 3.3: Structure of R-Tree

An R-tree conceptually groups the underlying points hierarchically and records theminimum bounding rectangle (MBR) of each group to facilitate answering of spatialqueries. While most existing works on R-tree concentrate on optimising the tree foranswering spatial queries, we use R-trees in the thesis in a quite innovative way: Weexploit the hierarchical grouping of the objects organised by an R-tree to help us checkpruning criteria in batch, thereby avoiding redundant checking.

3.2 Classification System

Above generated clusters are written to the ten seperate data files which lead as a inputto the SVM. while using SVM, the SVMs used are with linear Regression kernal becausethe data tuples are represented in terms of the MBRs. so its effective to use the LinearRegression kernel for the classification purpose.

The Figure 3.4 shows the process of classification of uncertain numerical data. theattempts made to clssify the uncertain data, classifies it but the approach we have usedcluster the data items first and then classify. we proved that the suppling the data itemsas it is to the classification model, and do the clustering and supply the clusters as inputto the classification system it increases the accuracy of the model.That is representd inthe next section result and ayalysis.

As shown in figure3.4 takes input as clusters as seperate datasets and do the classifi-cation. This classification helps to analyse the clustering results. In this system SVM’sare used for further classifiacation.

This classification system classify the clustered items into specified classes. withexisting tools of data mining not a single algorithm which will handle uncertain datadirectly is not available. so the attempt made in this project provides a base solutionwhich will do the classification of uncertain data.

13

Chapter 3. Clustering and Classification of Uncertain Data

Figure 3.4: Classification process block diagram

14

Chapter 4

Experiments and Results

4.1 Dataset Used

To carry series of experiments we have used uncertain numerical dataset from KEELMiner website[18]. this is also called as weak dataset. This is the dataset with nonuni-form distribution,to carry out the experiments uncertain numerical data is supplied tothe PDF generator, this is sub-part of the clustering system. In which it generatesthe PDFs for all the data items persent under uncertainty. Our next module is MBRformation by using R-tree method, this PDF data is supplied as bulk loaded data tothe R-Tree, then it start generation of MBR recursively through different iterations.Thedataset used contains ten thousands of uncertain numerical data records, with five di-mensions. fifth one represents the class attribute. The sample uncertain data is shownbellow.1 : [4.8, 5.18] [3.48, 3.72] [1.105, 1.695] [0.08, 0.32]1 : [5.2, 5.58] [3.78, 4.02] [1.405, 1.995] [0.28, 0.52]1 : [4.42, 4.78] [3.28, 3.52] [1.105, 1.695] [0.18, 0.42]1 : [4.82, 5.18] [3.28, 3.52] [1.205, 1.795] [0.08, 0.32]1 : [4.22, 4.58] [2.78, 3.02] [1.105, 1.695] [0.08, 0.32]2 : [6.82, 7.18] [3.08, 3.32] [4.405, 4.995] [1.28, 1.52]

This dataset contains min and max values by using this min and max we find outthe PDF for given range. Also we have used synthetic dataset, in which we have addeduncertainty, same is tested on this model.

4.2 MBR formation

The uncertain data objects passes through the different models as decribed above. whilepassing through the clustering model, used PDF generation, R- Tree representation forMBR formation to be used for clustering by using VORONOI doagrams. The sampleresult of the series of computations carried by R-tree for MBR generation is shown inthe follwing figure 4.1 shows how the MBRs formed by using R-Tree.

Above MBR generation is for uncertain synthetic data gnerated on WEKA. Anotherrepresentation for real dataset for long-4 from KEEL Miner [18] and another datasetwith uncertainty and four attribute called as 100ml-4-I[18] the series of experiments arecarried out and we have seen the following representations of the MBRs[2] as representedin following figure 4.2 Dataset Long-4: This dataset is used to predict whether an athlete

15

Chapter 4. Experiments and Results

Figure 4.1: MBR formation using R-Tree Method for synthetic data

will improve certain threshold in the long jump, given the indicators mentioned before.We have measured 25 athletes, thus the set has 25 instances, 4 features, 2 classes, nomissing values. All the features, and also the output variable, are intervalvalued For

Figure 4.2: MBR formation using R-Tree Method for real dataset long-4

another dataset called as 100ml-4-I[18] the MBR formation is shown in the figure4.3This dataset deals with predicting whether a mark in the 100 metres sprint race is beingachieved. Actual measurements are taken by three observers, and are combined into thesmallest interval that contains them. 25 instances, 4 features, 2 classes, no missing data.

16

4.2 MBR formation

Figure 4.3: MBR formation using R-Tree Method for real dataset 100ml-4-I

All input and output variables are intervals. In our implementation, we use an R-treelike the one depicted in4.1. Each tree node, containing multiple entries is stored in a diskblock. Based on the size of a disk block, the number of entries in a node is computed.The height of the tree is a function of the total number of objects being stored, as wellas the fan-out factors of the internal and leaf nodes. Each leaf node corresponds to agroup of uncertain objects. Each entry in the node maps to an uncertain object. Thefollowing information are stored in each entry.

• MBR of the uncertain Object.

• The centroid of uncertain Object.

• Pointer to the PDF data of the Object.

Each internal node of the tree corresponds to a supergroup, which is a group of groups.Each entry in an internal node points to a child group. Each entry contains the followinginformation.

• MBR of the child group.

• The number of object under the subtree.

• Centroids of the Objects under the subtree.

The above generated MBRs are passed to the clustering algorithms like UK- Meanwith whom the VORONOI diagrams are working to reduce the ED calculation. asexplained above the MBR contains more than one data records, that are supplied si-multanously to the VD, it finds out the ED for MBR not for the single object. becauseof this feature it reduces the number of ED calculations for all the data objects to beclustered.

The MBR formation process contains the rectangles, because of that we have choosenlinear regression kernel of the SVM for classification purpose.

17

Chapter 4. Experiments and Results

4.3 Clustering using VORONOI Diagram

This is the second step of the clustering process, in which generated MBRs[1, 2] aresuuplied to the VORONOI diagram in order to do the clustering. the VORONOI dia-gram clustering process is dipicted in the following shows the data item clustering withVORONOI diagram. figure 4.4 shows the clustered items using VORONOI diagram[2].Most significant use of VORONOI diagram is it redues the cluster average distance ascompared to the other algorithms.So that it helps to good classification results as shownin the next part. averange cluster distance is minimum,it helps to enhance the classifica-tion accuracy of the model. The generated clusters are written to seperate data files(inthis example 10 clustersare considered). These newly generated clusters are supplied to

Figure 4.4: VORONOI Diagram Clustering for synthetic data

the classification model to classify the data items, that is described in the next section.The same kind of the experiments are also carried out on the real datasets for clusteingpurpose. The datasets used are namely long-4 and 100ml-4-I[18]. when the clusteingprocess by using VORONOI diagram is carried out for both the datasets. their resultclustering, when represented spatially are On the another dataset called as 100ml-4-Isame VORONOI trigulations are applied and the cluster formation is done. Same isdipicted in the following figure4.6 shown in the following figures4.5 The last clusteringdiagram represents the clusters for uncertain or low quality dataset 100ml-4-I[18] whiledoing the clustering process with the help of VORONOI diagram, it does all by usingdelauny edges. this delauny edges helps to fix the boundaries for different clusters. Theboundaries are represented by yellow line in the figure4.7

18

4.4 Classification Result

Figure 4.5: VORONOI Diagram Clustering for real dataset long-4

4.4 Classification Result

The result of classification is dipicted in next figure 4.8. The result shows that individualSVM are less accurate for classification than the Hybrid model of classifiacation. Indi-vidual SVM results varies even if we have employed same SVM multiple time, becauseof the average distance of the clusters. For some of the clusters that average distanceis lesser the accuracy of classifiacation is greater. Again for more than one executionthe clusters are randomly supplied to the SVM. because of that it varies iteration toiteration.

4.4.1 Clsuter Average Distance and Clssification Accuracy

The relation of classifiacation accuracy and average cluster distance is dipicted in fol-lowing 4.9

Also series of the experiments are carried on real datasets namely long-4 and 100ml-4-I[18] and the results of the same are represented bellow

The result of classification accuracy for real dataset 100ml-4-I[18] is represented inthe following figure4.11

The result of average cluster distance and accuracy of SVM with real datasets long-4and 100ml-4-I are shown bellow.

finaly both SVM methods and averaging methods are compared, our method is farahead than the averaging method. this is shown in the following figure ??

19

Chapter 4. Experiments and Results

Figure 4.6: VORONOI Diagram Clustering for real dataset long-4

Figure 4.7: VORONOI Diagram Clustering for real dataset 100ml-4-I

20

4.4 Classification Result

Figure 4.8: Result of individual and Hybrid SVMs on synthetic dataset

Figure 4.9: Average cluster distance VS Accuracy of classification on synthetic dataset

21

Chapter 4. Experiments and Results

Figure 4.10: Result of Individual and Hybrid SVM on real dataset long-4

Figure 4.11: Result of Individual and Hybrid SVM on real dataset 100ml-4-I

22

4.4 Classification Result

Figure 4.12: Result of Avg. Cluster Dist. VS Acuuracy of SVM on real dataset long-4

Figure 4.13: Result of Avg. Cluster Dist. VS Acuuracy of SVM on real dataset 100ml-4-I

23

Chapter 4. Experiments and Results

Figure 4.14: Result of Avg. Cluster method vs. SVM with VORONOI diagram on realdataset long-4

24

Chapter 5

Conclusion and Future Work

5.1 Conclusion

We have studied the problem of clustering uncertain numerical data with valued uncer-tainty are represented by probability density functions. we have discussed the VORONOIdiagram based prunning techniques , which prunes effectively than any another tech-niques. We have explained that the computation of expected distances(EDs)[2, 3, 4, 5]dominates the clustering process, especially when the number of samples used in rep-resenting objects pdfs is large. the other techniques like MinMax do not consider thespatial relationship among cluster representatives, nor make use of the proximity be-tween groups of uncertain objects to perform pruning in batch. To further improvethe performance of UK-means[7], we have first devised new pruning techniques that arebased on Voronoi diagrams. The VD algorithm achieves effective pruning by two prun-ing methods: Voronoi-cell pruning and bisector pruning. We have proved theoreticallythat bisector pruning is strictly stronger than MinMax- BB. Furthermore, we have pro-posed the idea of pruning by partial ED calculations and have incorporated the methodin VD prunning.

Expected distances(EDs) are reduced to such an extent that the originally relativelycheap pruning overhead has become a dominating term in the total execution time. Tofurther improve efficiency, we exploit the spatial grouping derived from an R-tree indexbuilt to organise the uncertain objects. This R-tree boosting technique turns out to cutdown the pruning costs significantly. We have also noticed that some of the pruningtechniques and R-tree boosting[2] can be effectively combined. Employing differentpruning criteria, the combination of these different techniques yield very impressivepruning effectiveness.

The results show that clutering uncertain numerical data, and supply as a inputfor classifiacation it improves the classification accuracy especialy for uncertain dataas compared to averaging method. The overhead of building an R-tree index also getscompensated by the large reduction of pruning costs. The experiments also consistentlydemonstrated that the hybrid algorithms can prune more effectively than the otheralgorithms. Therefore, we conclude that our innovative techniques based on Voronoidiagrams and R-tree index are effective and practical. The use of clustering the datafirst and supply as input to classifiacation process, if the cluster is more dense then itprobably incerases the classifiacation accuracy.

25

Chapter 5. Conclusion and Future Work

5.2 Related Work

Data uncertainty has been classified into existential uncertainty and value uncertainty.Existential uncertainty appears when it is uncertain whether an object or a data tupleexists. For example, a data tuple in a relational database could be associated with aprobability that represents the confidence of its presence [2, 1]. Value uncertainty, onthe other hand, appears when a tuple is known to exist, but its values are not knownprecisely.A data item with value uncertainty is usually represented by a pdf over a finiteand bounded region of possible values[2] the problem of clustering objects with value(e.g., location) uncertainty. One well-studied topic on value uncertainty is imprecisequery processing. An answer to such a query is associated with a probabilistic guaranteeon its correctness. Some example studies on imprecise data include indexing structuresfor range query processing, nearest neighbor query processing, and imprecise location-dependent query processing. uncertain data as samples from distributions with hiddenparameters, the standard Expectation-Maximization (EM) framework[2] can be usedto handle data uncertainty[1]. There has been growing interest in uncertain data min-ing. In[7], the well-known k-means clustering algorithm is extended to the UK-meansalgorithm for clustering uncertain data. In that study, it is empirically shown that clus-tering results are improved if data uncertainty is taken into account during the clusteringprocess. As we have explained, data uncertainty is usually captured by pdfs, which aregenerally represented by sets of sample values. Mining uncertain data is, therefore, com-putationally costly due to information explosion (sets of samples versus singular values).To improve the performance of UK-means, CK-means introduced a novel method forcomputing the EDs efficiently. However, that method only works for a specific formof distance function. For general distance functions, [6] takes the approach of pruning,and proposed pruning techniques such as min-max-dist pruning. In this paper, we fol-low the pruning approach and propose new pruning techniques that are significantlymore powerful than those proposed in[7]. In[5], guaranteed approximation algorithmshave been proposed for clustering uncertain data using k-means, k-median as well ask-center. Apart from studies in partition-based uncertain data clustering, other direc-tions in uncertain data mining include density-based clustering frequent item set mining,and density-based classification[12]. For density-based clustering, two well-known algo-rithms, namely, DBSCAN and OPTICS, have been extended to handle uncertain data.The corresponding algorithms are called FDBSCAN and FOPTICS respectively. InDBSCAN, the concepts of core objects and reachability are defined. Clusters are thenformed based on these concepts. In FDBSCAN, the concepts are redefined to handleuncertain data. For example, under FDBSCAN, an object o is a core object if theprobability that there is a good number of other objects that are close to o exceedsa certain probability threshold. Also, whether an object y is reachable from anotherobject x depends on both the probability of y being close to x and the probability thatx is a core object. FOPTICS takes a similar approach of using probabilities to modifythe OPTICS algorithm to cluster uncertain data. Clustering of uncertain data is alsorelated to fuzzy clustering, which has long been studied in fuzzy logic[16]. In fuzzy clus-tering, a cluster is represented by a fuzzy subset of objects. Each object has a degree ofbelongingness with respect to each cluster. The fuzzy c-means algorithm is one of themost widely used fuzzy clustering methods,Different fuzzy clustering methods have beenapplied on normal or fuzzy data to produce fuzzy clusters. A major difference betweenthe clustering problem studied in this paper and fuzzy clustering is that we focus on hardclustering, for which each object belongs to exactly one cluster. Our formulation targets

26

5.3 Future Work

for applications such as mobile device clustering in which each device should report itslocation exactly one cluster leader. Voronoi diagram is a well-known geometric struc-ture in computational geometry. It has also been applied to clustering. An R-tree[2]is a self-balancing tree structure that resembles a B+-tree, except that it is devised forindexing multidimensional data points to facilitate proximity-based searching, such ask-nearest neighbor (kNN) queries. R-trees are well studied and widely used in real ap-plications. They are also available in many RDBMS products such as SQLite, MySQL,and Oracle. An R-tree conceptually groups the underlying points hierarchically andrecords the minimum bounding rectangle (MBR) of each group to facilitate answeringof spatial queries. While most existing works on R-tree concentrate on optimizing thetree for answering spatial queries, we use R-trees in this paper in a quite innovative way:We exploit the hierarchical grouping of the objects organized by an R-tree to help uscheck pruning criteria in batch, thereby avoiding redundant checking

5.3 Future Work

In future the work of clustering and classification of uncertain numerical data may beextended to the categorial data uncertainty as well. this can be extended to the perfectanalysis of the scientific data were the accuracy is very importtant. this can applied tothe sattelite data applications were the accuracy is highly important. other than theSVM approach for classification the another approaches like neural network can be usedefficiently for classification purpose especialy for uncertain numerical data.

In future the same SVM kernel linear regreesion may replaced by the other kernelwhich will supplort non linear tendancy of the data.

27

Chapter 5. Conclusion and Future Work

28

Bibliography

[1] Ben Kao, Sau Dan Lee, David W. Cheung, Wai-Shing Ho, K. F. Chan Cluster-ing Uncertain data using Voronoi Diagrams, IEEE international conferecne on datamining ICDM 2006.

[2] Ben Kao, Sau Dan Lee, Foris K.F. Lee,David Wai-lok Cheung, Wai-Shing Ho Clus-tering Uncertain Data Using Voronoi Diagrams and R-Tree Index IEEE transactionson knowledge and data engineering, vol. 22, no. 9, september 2010

[3] Chen Zhang, Ming Gao, Aoying Zhou Tracking High Quality Clusters over UncertainData Streams IEEE interanational conference on data engineering 2009.

[4] Charu C. Aggarwal, Philip S. Yu A Framework for Clustering Uncertain DataStreams IEEE international conference on data mining 2008. pp. 150-159.

[5] Graham Cormode, Andrew McGregor Approximation Algorithms for Clustering Un-certain Data POD’s 2008 June 9-12, 2008 Vancouver, BC, Canada.

[6] Biao Qin, Yuni Xia, Sunil Prabhakar, Yicheng Tu A Rule Based Classification Al-gorithm for Uncertain Data, IEEE International Conference on data engineering2009.

[7] Wang Kay Ngai, Ben Kao, Chun Kit Chui, Reynold Cheng, Michael Chau, KevinY. Yip Efficient Clustering of Uncertain Data Proceeding of sixth international con-ference on data mining(ICDM 06).

[8] Charu C. Aggarwal, P. S. Yu A Survey of Uncertain Data Algorithms and Applica-tions in IBM Research report october 31, 2007.

[9] Charu C. Aggarwal,Han J. Wnag, P. S. Yu A Framework for Clustering EvolvingData Streams in VLDB, 2004 pp. 852-863.

[10] M. Chau, R. Cheng, B. Kao, and J. Ng, Uncertain Data Mining:An Example inClustering Location Data Proceeding Pacific-Asia Conference Knowledge Discoveryand Data Mining (PAKDD), pp. 199-204, Apr. 2006.

[11] N.N. Dalvi and D. Suciu, Efficient Query Evaluation on Probabilistic DatabasesThe VLDB Journal, vol. 16, no. 4, pp. 523-544,2007.

[12] H.P. Kriegel and M. Pfeifle, Density-Based Clustering of Uncertain Data ProceedingIntl Conference Knowledge Discovery and Data Mining (KDD), pp. 672-677, Aug.2005.

29

BIBLIOGRAPHY

[13] H.P. Kriegel and M. Pfeifle, Hierarchical Density-Based Clustering of UncertainData Proceeding Fifth IEEE Intl Conference Data Mining (ICDM 05), pp. 689-692,Nov. 2005.

[14] R. Cheng, D.V. Kalashnikov, and S. Prabhakar, Querying Imprecise Data in MovingObject Environments IEEE Trans.Knowledge and Data Eng., vol. 16, no. 9, pp. 1112-1127, Sept. 2004.

[15] Smith Tsang, Ben Kao, Kevin Y. Yip, Wai-Shing Ho, Sau Dan Lee Decision Treesfor Uncertain Data IEEE tranaction on Knowledge and Data Engineering 2011.

[16] Osamu Takata, Sadaaki Miyamoto, Kazutaka Umayahara, Fuzzy Clustering of Datawith Uncertainties using Minimum and Maximum Distances based on L1 MetricsIEEE international conference 2001, pp. 2511-2516.

[17] WEKA TOOL http://www.cs.waikato.ac.nz/ml/weka/index_downloading.

html

[18] KEEL Miner http://sci2s.ugr.es/keel/dataset/data/lowQuality//full/

30