Transfer Learning Based Cross-lingual Knowledge Extraction for Wikipedia

Zhigang Wang†, Zhixing Li†, Juanzi Li†, Jie Tang†, and Jeff Z. Pan‡

† Tsinghua National Laboratory for Information Science and Technology, DCST, Tsinghua University, Beijing, China
{wzhigang,zhxli,ljz,tangjie}@keg.cs.tsinghua.edu.cn

‡ Department of Computing Science, University of Aberdeen, Aberdeen, UK

Abstract

Wikipedia infoboxes are a valuable source of structured knowledge for global knowledge sharing. However, infobox information is very incomplete and imbalanced among the Wikipedias in different languages. It is a promising but challenging problem to utilize the rich structured knowledge from a source language Wikipedia to help complete the missing infoboxes for a target language.

In this paper, we formulate the problem of cross-lingual knowledge extraction from multilingual Wikipedia sources, and present a novel framework, called WikiCiKE, to solve this problem. An instance-based transfer learning method is utilized to overcome the problems of topic drift and translation errors. Our experimental results demonstrate that WikiCiKE outperforms the monolingual knowledge extraction method and the translation-based method.

1 Introduction

In recent years, automatic knowledge extraction using Wikipedia has attracted significant research interest in fields such as the semantic web. As a valuable source of structured knowledge, Wikipedia infoboxes have been utilized to build linked open data (Suchanek et al., 2007; Bollacker et al., 2008; Bizer et al., 2008; Bizer et al., 2009), support next-generation information retrieval (Hotho et al., 2006), improve question answering (Bouma et al., 2008; Ferrandez et al., 2009), and support other aspects of data exploitation (McIlraith et al., 2001; Volkel et al., 2006; Hogan et al., 2011) using semantic web standards, such as RDF (Pan and Horrocks, 2007; Heino and Pan, 2012) and OWL (Pan and Horrocks, 2006; Pan and Thomas, 2007; Fokoue et al., 2012), and their reasoning services.

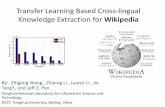

However, most infoboxes in different Wikipedia language versions are missing. Figure 1 shows the statistics of article numbers and infobox information for six major Wikipedias. Only 32.82% of the articles have infoboxes on average, and the numbers of infoboxes for these Wikipedias vary significantly. For instance, the English Wikipedia has 13 times more infoboxes than the Chinese Wikipedia and 3.5 times more infoboxes than the second largest Wikipedia, the German one.

[Figure 1 plot: number of instances (y-axis, ×10^6, 0 to 4) by language (x-axis: English, German, French, Dutch, Spanish, Chinese); series: Article, Infobox.]

Figure 1: Statistics for Six Major Wikipedias.

To solve this problem, KYLIN has been proposed to extract the missing infoboxes from unstructured article texts for the English Wikipedia (Wu and Weld, 2007). KYLIN performs well when sufficient training data are available, and such techniques as shrinkage and retraining have been used to increase recall from English Wikipedia's long tail of sparse infobox classes (Weld et al., 2008; Wu et al., 2008). The extraction performance of KYLIN is limited by the number of available training samples.

Due to the great imbalance between different Wikipedia language versions, it is difficult to gather sufficient training data from a single Wikipedia. Some translation-based cross-lingual knowledge extraction methods have been proposed (Adar et al., 2009; Bouma et al., 2009; Adafre and de Rijke, 2006). These methods concentrate on translating existing infoboxes from a richer source language version of Wikipedia into the target language. The recall of new target infoboxes is highly limited by the number of equivalent cross-lingual articles and the number of existing source infoboxes. Take the Chinese-English¹ Wikipedias as an example: current translation-based methods only work for 87,603 Chinese Wikipedia articles, 20.43% of the total 428,777 articles. Hence, the challenge remains: how could we supplement the missing infoboxes for the remaining 79.57% of the articles?

On the other hand, the numbers of existing infobox attributes in different languages are highly imbalanced. Table 1 compares the numbers of articles for the attributes in template PERSON between the English and Chinese Wikipedias. Extracting the missing values for attributes such as awards, weight, influences and style inside the Chinese Wikipedia alone is intractable due to the rarity of existing Chinese attribute-value pairs.

| Attribute | en | zh | Attribute | en | zh |
|---|---|---|---|---|---|
| name | 82,099 | 1,486 | awards | 2,310 | 38 |
| birth date | 77,850 | 1,481 | weight | 480 | 12 |
| occupation | 66,768 | 1,279 | influences | 450 | 6 |
| nationality | 20,048 | 730 | style | 127 | 1 |

Table 1: The Numbers of Articles in TEMPLATE PERSON between English (en) and Chinese (zh).

In this paper, we have the following hypothesis: one can use the rich English (auxiliary) information to assist the Chinese (target) infobox extraction. In general, we address the problem of cross-lingual knowledge extraction by using the imbalance between Wikipedias of different languages. For each attribute, we aim to learn an extractor to find the missing value from the unstructured article texts in the target Wikipedia by using the rich information in the source language. Specifically, we treat this cross-lingual information extraction task as a transfer learning-based binary classification problem.

The contributions of this paper are as follows:

1. We propose a transfer learning-based cross-lingual knowledge extraction framework called WikiCiKE. The extraction performance for the target Wikipedia is improved by using rich infoboxes and textual information in the source language.

2. We propose the TrAdaBoost-based extractor training method to avoid the problems of topic drift and translation errors of the source Wikipedia. Meanwhile, some language-independent features are introduced to make WikiCiKE as general as possible.

3. Chinese-English experiments for four typical attributes demonstrate that WikiCiKE outperforms both the monolingual extraction method and the current translation-based method. Increases of 12.65% in precision and 12.47% in recall are achieved for the template named person when only 30 target training articles are available.

¹ Chinese-English denotes the task of Chinese Wikipedia infobox completion using English Wikipedia.

The rest of this paper is organized as follows. Section 2 presents some basic concepts, the problem formalization and the overview of WikiCiKE. In Section 3, we propose our detailed approaches. We present our experiments in Section 4. Some related work is described in Section 5. We conclude and discuss future work in Section 6.

2 Preliminaries

In this section, we introduce some basic concepts regarding Wikipedia, formally define the key problem of cross-lingual knowledge extraction and provide an overview of the WikiCiKE framework.

2.1 Wiki Knowledge Base and Wiki Article

We consider each language version of Wikipedia as a wiki knowledge base, which can be represented as $K = \{a_i\}_{i=1}^{p}$, where $a_i$ is a disambiguated article in $K$ and $p$ is the size of $K$.

Formally we define a wiki article $a \in K$ as a 5-tuple $a = (title, text, ib, tp, C)$, where

• $title$ denotes the title of the article $a$,

• $text$ denotes the unstructured text description of the article $a$,

• $ib$ is the infobox associated with $a$; specifically, $ib = \{(attr_i, value_i)\}_{i=1}^{q}$ represents the list of attribute-value pairs for the article $a$,

• $tp = \{attr_i\}_{i=1}^{r}$ is the infobox template associated with $ib$, where $r$ is the number of attributes for one specific template, and

• $C$ denotes the set of categories to which the article $a$ belongs.

Figure 2: Simplified Article of "Bill Gates".

Figure 2 gives an example of these five important elements concerning the article named "Bill Gates".

In what follows, we will use named subscripts, such as $a_{\text{Bill Gates}}$, or index subscripts, such as $a_i$, to refer to one particular instance interchangeably. We will use "name in TEMPLATE PERSON" to refer to the attribute $attr_{name}$ in the template $tp_{PERSON}$. In this cross-lingual task, we use the source (S) and target (T) languages to denote the languages of auxiliary and target Wikipedias, respectively. For example, $K_S$ indicates the source wiki knowledge base, and $K_T$ denotes the target wiki knowledge base.

2.2 Problem Formulation

Mining new infobox information from unstructured article texts is actually a multi-template, multi-slot information extraction problem. In our task, each template represents an infobox template and each slot denotes an attribute. In the WikiCiKE framework, for each attribute $attr_T$ in an infobox template $tp_T$, we treat the task of missing value extraction as a binary classification problem. The classifier predicts whether a particular word (token) from the article text is the extraction target (Finn and Kushmerick, 2004; Lafferty et al., 2001).

Given an attribute $attr_T$ and an instance (word/token) $x_i$, $X_S = \{x_i\}_{i=1}^{n}$ and $X_T = \{x_i\}_{i=n+1}^{n+m}$ are the sets of instances (words/tokens) in the source and the target language respectively. $x_i$ can be represented as a feature vector according to its context. Usually, we have $n \gg m$ in our setting, with many more attributes in the source than in the target. The function $g: X \mapsto Y$ maps the instance from $X = X_S \cup X_T$ to the true label of $Y = \{0, 1\}$, where 1 represents the extraction target (positive) and 0 denotes the background information (negative). Because the number of target instances $m$ is inadequate to train a good classifier, we combine the source and target instances to construct the training data set as $TD = TD_S \cup TD_T$, where $TD_S = \{(x_i, g(x_i))\}_{i=1}^{n}$ and $TD_T = \{(x_i, g(x_i))\}_{i=n+1}^{n+m}$ represent the source and target training data, respectively.

Given the combined training data set $TD$, our objective is to estimate a hypothesis $f: X \mapsto Y$ that minimizes the prediction error on testing data in the target language. Our idea is to determine the useful part of $TD_S$ to improve the classification performance in $TD_T$. We view this as a transfer learning problem.

2.3 WikiCiKE Framework

WikiCiKE learns an extractor for a given attribute $attr_T$ in the target Wikipedia. As shown in Figure 3, WikiCiKE contains four key components: (1) Automatic Training Data Generation: given the target attribute $attr_T$ and two wiki knowledge bases $K_S$ and $K_T$, WikiCiKE first generates the training data set $TD = TD_S \cup TD_T$ automatically. (2) WikiCiKE Training: WikiCiKE uses a transfer learning-based classification method to train the classifier (extractor) $f: X \mapsto Y$ by using $TD_S \cup TD_T$. (3) Template Classification: WikiCiKE then determines proper candidate articles which are suitable to generate the missing value of $attr_T$. (4) WikiCiKE Extraction: given a candidate article $a$, WikiCiKE uses the learned extractor $f$ to label each word in the text of $a$, and generates the extraction result in the end.

3 Our Approach

In this section, we will present the detailed approaches used in WikiCiKE.

Figure 3: WikiCiKE Framework.

3.1 Automatic Training Data Generation

To generate the training data for the target attribute $attr_T$, we first determine the equivalent cross-lingual attribute $attr_S$. Fortunately, some templates in non-English Wikipedias (e.g. Chinese Wikipedia) explicitly match their attributes with their counterparts in English Wikipedia. Therefore, it is convenient to align the cross-lingual attributes using English Wikipedia as a bridge. Attributes that cannot be aligned in this way are currently aligned manually. The manual alignment is worthwhile because thousands of articles belonging to the same template may benefit from it, and it is not very costly: in Chinese Wikipedia, the top 100 templates cover nearly 80% of the articles that have been assigned a template.

Once the aligned attribute mapping $attr_T \leftrightarrow attr_S$ is obtained, we collect the articles from both $K_S$ and $K_T$ containing the corresponding $attr$. The collected articles from $K_S$ are translated into the target language. Then, we use a uniform automatic method, which primarily consists of word labeling and feature vector generation, to generate the training data set $TD = \{(x, g(x))\}$ from these collected articles.

For each collected article $a = (title, text, ib, tp, C)$ and its value of $attr$, we can automatically label each word $x$ in $text$ according to whether $x$ and its neighbors are contained by the value. The $text$ and value are processed as bags of words $\{x\}_{text}$ and $\{x\}_{value}$. Then for each $x_i \in \{x\}_{text}$ we have:

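$$
g(x_i) =
\begin{cases}
1 & x_i \in \{x\}_{value},\ |\{x\}_{value}| = 1 \\
1 & x_{i-1}, x_i \in \{x\}_{value} \text{ or } x_i, x_{i+1} \in \{x\}_{value},\ |\{x\}_{value}| > 1 \\
0 & \text{otherwise}
\end{cases}
\tag{1}
$$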
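The labeling rule in Equation (1) can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function name `label_tokens` is hypothetical, and it assumes that the size of the value bag is counted over distinct tokens.

```python
def label_tokens(text_tokens, value_tokens):
    """Label each text token per Eq. (1): 1 = part of the value, 0 = background."""
    value = set(value_tokens)  # assumption: |{x}_value| counts distinct tokens
    labels = []
    for i, tok in enumerate(text_tokens):
        if tok not in value:
            labels.append(0)
        elif len(value) == 1:
            # Single-token value: membership alone is enough.
            labels.append(1)
        else:
            # Multi-token value: require a neighbouring token that is also in the value.
            prev_in = i > 0 and text_tokens[i - 1] in value
            next_in = i + 1 < len(text_tokens) and text_tokens[i + 1] in value
            labels.append(1 if (prev_in or next_in) else 0)
    return labels


tokens = ["he", "is", "a", "movie", "director", "and", "movie", "fan"]
print(label_tokens(tokens, ["movie", "director"]))
```

The isolated second occurrence of "movie" is labelled 0 because neither of its neighbours belongs to the value, which is the purpose of the neighbour condition for multi-token values.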

After the word labeling, each instance (word/token) is represented as a feature vector. In this paper, we propose a general feature space that is suitable for most target languages. As shown in Table 2, we classify the features used in WikiCiKE into three categories: format features, POS tag features and token features.

| Category | Feature | Example |
|---|---|---|
| Format features | First token of sentence | 你好,世界! (Hello World!) |
| | In first half of sentence | 你好,世界! (Hello World!) |
| | Starts with two digits | 12月31日 (31st Dec.) |
| | Starts with four digits | 1999年夏天 (1999's summer) |
| | Contains a cash sign | 10¥ or 10$ |
| | Contains a percentage symbol | 10% |
| | Stop words | 的,地,这… (of, the, a, an) |
| | Pure number | 365 |
| | Part of an anchor text | 电影导演 (Movie Director) |
| | Begin of an anchor text | 游戏设计师 (Game Designer) |
| POS tag features | POS tag of current token | |
| | POS tags of previous 5 tokens | |
| | POS tags of next 5 tokens | |
| Token features | Current token | |
| | Previous 5 tokens | |
| | Next 5 tokens | |
| | Is current token contained by title | |
| | Is one of previous 5 tokens contained by title | |

Table 2: Feature Definition.
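As a rough illustration of how some of the format features in Table 2 might be computed for a segmented sentence, consider the sketch below. The function name, the tiny stop-word list and the exact matching rules (for example, treating "starts with two digits" as exactly two leading digits) are assumptions made for this example; anchor-text and POS tag features are omitted because they need link markup and a tagger.

```python
import re

# Illustrative stop-word list taken from the examples in Table 2 (assumption).
STOP_WORDS = {"的", "地", "这", "of", "the", "a", "an"}


def format_features(tokens, i):
    """Compute a subset of the Table 2 format features for tokens[i]."""
    tok = tokens[i]
    return {
        "first_token": i == 0,
        "in_first_half": i < len(tokens) / 2,
        # Assumption: "two digits" means exactly two leading digits, e.g. 12月31日.
        "starts_with_two_digits": re.match(r"\d{2}(?!\d)", tok) is not None,
        "starts_with_four_digits": re.match(r"\d{4}(?!\d)", tok) is not None,
        "contains_cash_sign": any(c in tok for c in "¥$"),
        "contains_percent": "%" in tok,
        "is_stop_word": tok.lower() in STOP_WORDS,
        "pure_number": re.fullmatch(r"\d+", tok) is not None,
    }


sentence = ["12月31日", "是", "的", "10%"]
print(format_features(sentence, 0))
```

Each token then contributes one such dictionary, which can be merged with the token and POS tag features before being turned into a numeric vector.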

The target training data $TD_T$ is directly generated from articles in the target language Wikipedia. Articles from the source language Wikipedia are translated into the target language in advance and then transformed into training data $TD_S$. In the next section, we will discuss how to train an extractor from $TD = TD_S \cup TD_T$.

3.2 WikiCiKE Training

Given the attribute $attr_T$, we want to train a classifier $f: X \mapsto Y$ that can minimize the prediction error for the testing data in the target language. Traditional machine learning approaches attempt to determine $f$ by minimizing some loss function $L$ on the prediction $f(x)$ for the training instance $x$ and its real label $g(x)$, which is

$$
f = \operatorname*{argmin}_{f \in \Theta} \sum L(f(x), g(x)) \quad \text{where } (x, g(x)) \in TD_T \tag{2}
$$

In this paper, we use TrAdaBoost (Dai et al., 2007), an instance-based transfer learning algorithm, to find $f$. TrAdaBoost requires that the source training instances $X_S$ and target training instances $X_T$ be drawn from the same feature space. In WikiCiKE, the source articles are translated into the target language in advance to satisfy this requirement. Due to the topic drift problem and translation errors, the joint probability distribution $P_S(x, g(x))$ is not identical to $P_T(x, g(x))$. We must adjust the source training data $TD_S$ so that they fit the distribution on $TD_T$. TrAdaBoost iteratively updates the weights of all training instances to optimize the prediction error. Specifically, the weight-updating strategy for the source instances is decided by the loss on the target instances.

For each iteration $t = 1, \dots, T$, given a weight vector $p^t$ normalized from $w^t$ ($w^t$ is the weight vector before normalization), we call a basic classifier $F$ that can address weighted instances and then find a hypothesis $f_t$ that satisfies

$$
f_t = \operatorname*{argmin}_{f \in \Theta_F} \sum L(p^t, f(x), g(x)), \quad (x, g(x)) \in TD_S \cup TD_T \tag{3}
$$

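Let $\epsilon_t$ be the prediction error of $f_t$ at the $t$-th iteration on the target training instances $TD_T$, which is

$$
\epsilon_t = \frac{1}{\sum_{k=n+1}^{n+m} w_k^t} \times \sum_{k=n+1}^{n+m} \left( w_k^t \times |f_t(x_k) - y_k| \right) \tag{4}
$$

where $y_k = g(x_k)$ is the true label of $x_k$.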

With $\epsilon_t$, the weight vector $w^t$ is updated by the function:

$$
w^{t+1} = h(w^t, \epsilon_t) \tag{5}
$$

The weight-updating strategy $h$ is illustrated in Table 3.

Finally, a final classifier $f$ can be obtained by combining $f_{T/2}, \dots, f_T$.

TrAdaBoost has a convergence rate of $O(\sqrt{\ln(n/N)})$, where $n$ and $N$ are the number of source samples and the maximum number of iterations respectively.

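| Samples | | TrAdaBoost | AdaBoost |
|---|---|---|---|
| Target | + | $w^t$ | $w^t$ |
| Target | − | $w^t \times \beta_t^{-1}$ | $w^t \times \beta_t^{-1}$ |
| Source | + | $w^t \times \beta^{-1}$ | No source training sample available |
| Source | − | $w^t \times \beta$ | No source training sample available |

+: correctly labelled; −: mislabelled. $w^t$: weight of an instance at the $t$-th iteration. $\beta_t = \epsilon_t \times (1 - \epsilon_t)$; $\beta = 1/(1 + \sqrt{2 \ln nT})$.

Table 3: Weight-updating Strategy of TrAdaBoost.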
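To make one boosting round concrete, the following sketch computes the target error of Equation (4) and applies the TrAdaBoost column of Table 3 as the update function $h$ of Equation (5). It is a minimal illustration rather than the authors' code: the function names are hypothetical, indices are 0-based (the first `n` instances are source, the rest target), and $\beta_t$ and $\beta$ are passed in as plain numbers instead of being derived inside the functions.

```python
def target_error(weights, preds, labels, n):
    """Eq. (4): weighted error of f_t on the target instances (indices n and above)."""
    target = range(n, len(weights))
    total = sum(weights[k] for k in target)
    return sum(weights[k] * abs(preds[k] - labels[k]) for k in target) / total


def update_weights(weights, preds, labels, n, beta_t, beta):
    """Eq. (5), following the TrAdaBoost column of Table 3."""
    updated = []
    for k, w in enumerate(weights):
        correct = preds[k] == labels[k]
        if k < n:
            # Source instance: raised if correct, lowered if mislabelled.
            updated.append(w / beta if correct else w * beta)
        else:
            # Target instance: unchanged if correct, raised if mislabelled.
            updated.append(w if correct else w / beta_t)
    return updated


weights = [1.0, 1.0, 1.0, 1.0]   # two source instances, then two target instances
preds = [1, 0, 1, 0]
labels = [1, 1, 1, 1]
eps = target_error(weights, preds, labels, n=2)
print(eps, update_weights(weights, preds, labels, n=2, beta_t=0.5, beta=0.5))
```

Because both $\beta_t$ and $\beta$ are below 1, mislabelled source instances lose influence while mislabelled target instances gain it, which is how the source data are gradually fitted to the target distribution.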

3.3 Template Classification

Before using the learned classifier $f$ to extract the missing infobox value for the target attribute $attr_T$, we must select the correct articles to be processed. For example, the article $a_{\text{New York}}$ is not a proper article for extracting the missing value of the attribute $attr_{\text{birth day}}$.

If $a$ already has an incomplete infobox, it is clear that the correct $tp$ is the template of its own infobox $ib$. For those articles that have no infoboxes, we use the classical 5-nearest neighbor algorithm to determine their templates (Roussopoulos et al., 1995) using their category labels, outlinks and inlinks as features (Wang et al., 2012). Our classifier achieves an average precision of 76.96% with an average recall of 63.29%, and can be improved further. In this paper, we concentrate on the WikiCiKE training and extraction components.
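A 5-nearest neighbor template classifier over set-valued features could look like the sketch below. The text does not state which similarity measure is used, so Jaccard similarity over the union of category labels, outlinks and inlinks is purely an assumption here, as are the function names and the toy feature strings.

```python
from collections import Counter


def jaccard(a, b):
    """Jaccard similarity between two feature sets (assumed similarity measure)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def knn_template(features, labeled, k=5):
    """Predict a template by majority vote among the k most similar labeled articles.

    labeled: list of (feature_set, template) pairs for articles with infoboxes.
    """
    nearest = sorted(labeled, key=lambda item: jaccard(features, item[0]), reverse=True)[:k]
    votes = Counter(template for _, template in nearest)
    return votes.most_common(1)[0][0]


labeled = [
    ({"cat:1955 births", "cat:American businesspeople"}, "PERSON"),
    ({"cat:1955 births", "link:Microsoft"}, "PERSON"),
    ({"cat:American businesspeople", "link:Microsoft"}, "PERSON"),
    ({"cat:1995 films"}, "FILM"),
    ({"cat:1995 films", "link:Pixar"}, "FILM"),
]
query = {"cat:1955 births", "cat:American businesspeople", "link:Microsoft"}
print(knn_template(query, labeled))
```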

3.4 WikiCiKE Extraction

Given an article $a$ determined by template classification, we generate the missing value of $attr$ from the corresponding $text$. First, we turn the $text$ into a word sequence and compute the feature vector for each word based on the feature definition in Section 3.1. Next we use $f$ to label each word, and we get a labeled sequence $text_l$ as

$$
text_l = \{x_1^{f(x_1)} \dots x_{i-1}^{f(x_{i-1})} \, x_i^{f(x_i)} \, x_{i+1}^{f(x_{i+1})} \dots x_n^{f(x_n)}\}
$$

where the superscript $f(x_i) \in \{0, 1\}$ represents the positive or negative label by $f$. After that, we extract the adjacent positive tokens in $text$ as the predicted value. In particular, the longest positive token sequence and the one that contains other positive token sequences are preferred in extraction. E.g., a positive sequence "comedy movie director" is preferred to a shorter sequence "movie director".
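The preference for the longest run of adjacent positive tokens can be sketched as follows. The function name is hypothetical, and breaking ties in favour of the earliest run is an assumption, since the text does not say how equally long runs are handled.

```python
def extract_value(tokens, labels):
    """Return the longest run of adjacent tokens labelled 1 (empty list if none)."""
    best, current = [], []
    for tok, lab in zip(tokens, labels):
        if lab == 1:
            current.append(tok)
            if len(current) > len(best):
                best = list(current)  # strictly longer, so the earliest run wins ties
        else:
            current = []
    return best


tokens = ["a", "comedy", "movie", "director", "and", "movie", "director"]
labels = [0, 1, 1, 1, 0, 1, 1]
print(extract_value(tokens, labels))
```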

4 Experiments

In this section, we present our experiments to evaluate the effectiveness of WikiCiKE, where we focus on the Chinese-English case; in other words, the target language is Chinese and the source language is English. It is part of our future work to try other language pairs whose Wikipedias are imbalanced in infobox information, such as English-Dutch.

4.1 Experimental Setup

4.1.1 Data Sets

Our data sets are from Wikipedia dumps² generated on April 3, 2012. For each attribute, we collect both labeled articles (articles that contain the corresponding attribute $attr$) and unlabeled articles in Chinese. We split the labeled articles into two subsets $A_T$ and $A_{test}$ ($A_T \cap A_{test} = \emptyset$), in which $A_T$ is used as target training articles and $A_{test}$ is used as the first testing set. For the unlabeled articles, represented as $A'_{test}$, we manually label their infoboxes with their texts and use them as the second testing set. For each attribute, we also collect a set of labeled articles $A_S$ in English as the source training data. Our experiments are performed on four attributes, which are occupation, nationality and alma mater in TEMPLATE PERSON, and country in TEMPLATE FILM. In particular, we extract values from the first two paragraphs of the texts because they usually contain most of the valuable information. The details of data sets on these attributes are given in Table 4.

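| Attribute | $\lvert A_S \rvert$ | $\lvert A_T \rvert$ | $\lvert A_{test} \rvert$ | $\lvert A'_{test} \rvert$ |
|---|---|---|---|---|
| occupation | 1,000 | 500 | 779 | 208 |
| alma mater | 1,000 | 200 | 215 | 208 |
| nationality | 1,000 | 300 | 430 | 208 |
| country | 1,000 | 500 | 1,000 | − |

$\lvert A \rvert$: the number of articles in $A$.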

Table 4: Data Sets.

4.1.2 Comparison Methods

We compare our WikiCiKE method with two different kinds of methods, the monolingual knowledge extraction method and the translation-based method. They are implemented as follows:

1. KE-Mon is the monolingual knowledge extractor. The difference between WikiCiKE and KE-Mon is that KE-Mon only uses the Chinese training data.

2. KE-Tr is the translation-based extractor. It obtains the values in two steps: finding their counterparts (if available) in English using Wikipedia cross-lingual links and attribute alignments, and translating them into Chinese.

² http://dumps.wikimedia.org/

We conduct two series of evaluations to compare WikiCiKE with KE-Mon and KE-Tr, respectively.

1. We compare WikiCiKE with KE-Mon on the first testing data set $A_{test}$, where most values can be found in the articles' texts in those labeled articles, in order to demonstrate the performance improvement by using cross-lingual knowledge transfer.

2. We compare WikiCiKE with KE-Tr on the second testing data set $A'_{test}$, where the existence of values is not guaranteed in those randomly selected articles, in order to demonstrate the better recall of WikiCiKE.

For implementation details, the weighted SVM is used as the basic learner $f$ in both WikiCiKE and KE-Mon (Zhang et al., 2009), and Baidu Translation API³ is used as the translator in both WikiCiKE and KE-Tr. The Chinese texts are preprocessed using ICTCLAS⁴ for word segmentation.

4.1.3 Evaluation Metrics

Following Lavelli's research on evaluation of information extraction (Lavelli et al., 2008), we perform evaluation as follows.

1. We evaluate each attr separately.

2. For each $attr$, there is exactly one value extracted.

3. No alternative occurrence of real value is available.

4. The overlap ratio is used in this paper rather than "exactly matching" and "containing".

Given an extracted value $v' = \{w'\}$ and its corresponding real value $v = \{w\}$, two measurements for evaluating the overlap ratio are defined:

recall: the rate of matched tokens w.r.t. the real value. It can be calculated using

$$
R(v', v) = \frac{|v \cap v'|}{|v|}
$$

precision: the rate of matched tokens w.r.t. the extracted value. It can be calculated using

$$
P(v', v) = \frac{|v \cap v'|}{|v'|}
$$

³ http://openapi.baidu.com/service

⁴ http://www.ictclas.org/

We use the average of these two measures to evaluate the performance of our extractor as follows:

$$
R = \operatorname{avg}(R_i(v', v)), \quad a_i \in A_{test}
$$

$$
P = \operatorname{avg}(P_i(v', v)), \quad a_i \in A_{test} \text{ and } v'_i \neq \emptyset
$$

The recall and precision range from 0 to 1 and are first calculated on a single instance and then averaged over the testing instances.
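The overlap-based measures can be illustrated with a short sketch. It treats values as sets of tokens and assumes that precision is averaged only over articles with a non-empty extracted value, since precision is undefined otherwise; the function names and example values are hypothetical.

```python
def overlap_recall(extracted, real):
    """Matched tokens relative to the real value (real must be non-empty)."""
    return len(set(extracted) & set(real)) / len(set(real))


def overlap_precision(extracted, real):
    """Matched tokens relative to the extracted value (extracted must be non-empty)."""
    return len(set(extracted) & set(real)) / len(set(extracted))


def evaluate(pairs):
    """pairs: list of (extracted_tokens, real_tokens), one per test article."""
    recalls = [overlap_recall(e, r) for e, r in pairs]
    # Assumption: articles with an empty extraction are skipped for precision.
    precisions = [overlap_precision(e, r) for e, r in pairs if e]
    avg = lambda xs: sum(xs) / len(xs) if xs else 0.0
    return avg(precisions), avg(recalls)


pairs = [
    (["movie", "director"], ["comedy", "movie", "director"]),
    ([], ["actor"]),
]
precision, recall = evaluate(pairs)
print(precision, recall)
```

In this toy run the empty extraction lowers the average recall but does not enter the precision average.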

4.2 Comparison with KE-Mon

In these experiments, WikiCiKE trains extractors on $A_S \cup A_T$, and KE-Mon trains extractors just on $A_T$. We incrementally increase the number of target training articles from 10 to 500 (if available) to compare WikiCiKE with KE-Mon in different situations. We use the first testing data set $A_{test}$ to evaluate the results.

Figure 4 and Table 5 show the experimental results on TEMPLATE PERSON and FILM. We can see that WikiCiKE outperforms KE-Mon on all three attributes, especially when the number of target training samples is small. Although the recall for alma mater and the precision for nationality of WikiCiKE are lower than those of KE-Mon when only 10 target training articles are available, WikiCiKE performs better than KE-Mon if we take into consideration both precision and recall.

[Figure 4 panels: (a) occupation, (b) alma mater and (c) nationality each plot P(KE-Mon), P(WikiCiKE), R(KE-Mon) and R(WikiCiKE) against the number of target training articles; (d) average improvements plots the performance gain in percent for P and R against the number of target training articles.]

Figure 4: Results for TEMPLATE PERSON.

Figure 4(d) shows the average improvements yielded by WikiCiKE w.r.t. KE-Mon on TEMPLATE PERSON. We can see that WikiCiKE yields significant improvements when only a few articles are available in the target language, and the improvements tend to decrease as the number of target articles is increased. In the latter case, the articles in the target language are sufficient to train the extractors alone.

| # | KE-Mon P | KE-Mon R | WikiCiKE P | WikiCiKE R |
|---|---|---|---|---|
| 10 | 81.1% | 63.8% | 90.7% | 66.3% |
| 30 | 78.8% | 64.5% | 87.5% | 69.4% |
| 50 | 80.7% | 66.6% | 87.7% | 72.3% |
| 100 | 82.8% | 68.2% | 87.8% | 72.1% |
| 200 | 83.6% | 70.5% | 87.1% | 73.2% |
| 300 | 85.2% | 72.0% | 89.1% | 76.2% |
| 500 | 86.2% | 73.4% | 88.7% | 75.6% |

#: Number of the target training articles.

Table 5: Results for country in TEMPLATE FILM.

4.3 Comparison with KE-Tr

We compare WikiCiKE with KE-Tr on the second testing data set $A'_{test}$.

From Table 6 it can be clearly observed that WikiCiKE significantly outperforms KE-Tr both in precision and recall. The reasons why the recall of KE-Tr is extremely low are two-fold. First, because of the limit of cross-lingual links and infoboxes in English Wikipedia, only a very small set of values is found by KE-Tr. Furthermore, many values obtained using the translator are incorrect because of translation errors. WikiCiKE uses translators too, but it has better tolerance to translation errors because the extracted value is from the target article texts instead of the output of translators.

| Attribute | KE-Tr P | KE-Tr R | WikiCiKE P | WikiCiKE R |
|---|---|---|---|---|
| occupation | 27.4% | 3.40% | 64.8% | 26.4% |
| nationality | 66.3% | 4.60% | 70.0% | 55.0% |
| alma mater | 66.7% | 0.70% | 76.3% | 8.20% |

Table 6: Results of WikiCiKE vs. KE-Tr.

4.4 Significance Test

We conducted a significance test to demonstrate that the difference between WikiCiKE and KE-Mon is significant rather than caused by statistical errors. As for the comparison between WikiCiKE and KE-Tr, significant improvements brought by WikiCiKE can be clearly observed from Table 6, so there is no need for a further significance test. In this paper, we use McNemar's significance test (Dietterich, 1998).

Table 7 shows the results of the significance test calculated for the average on all tested attributes. When the number of target training articles is at most 100, the χ value is much greater than 10.83, which corresponds to a significance level of 0.001. It suggests that the chance that WikiCiKE is not better than KE-Mon is less than 0.001.

| # | 10 | 30 | 50 | 100 | 200 | 300 | 500 |
|---|---|---|---|---|---|---|---|
| χ | 179.5 | 107.3 | 51.8 | 32.8 | 4.1 | 4.3 | 0.3 |

#: Number of the target training articles.

Table 7: Results of Significance Test.
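For reference, McNemar's statistic with continuity correction, as described by Dietterich (1998), depends only on the test cases where exactly one of the two methods is wrong. The sketch below shows the calculation; the function name and the counts in the example are hypothetical and are not taken from the experiments.

```python
# Chi-square critical value for 1 degree of freedom at significance level 0.001.
CRITICAL_0_001 = 10.83


def mcnemar_chi2(n01, n10):
    """McNemar's statistic with continuity correction.

    n01: test cases misclassified by method A but not by method B.
    n10: test cases misclassified by method B but not by method A.
    """
    if n01 + n10 == 0:
        return 0.0  # the two methods never disagree
    return (abs(n01 - n10) - 1) ** 2 / (n01 + n10)


# Hypothetical disagreement counts, for illustration only.
chi2 = mcnemar_chi2(40, 10)
print(chi2, chi2 > CRITICAL_0_001)
```

A statistic above the critical value indicates that the two methods differ at the 0.001 level, while a small value means the observed disagreements could easily arise by chance.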

4.5 Overall Analysis

As shown in the above experiments, WikiCiKE outperforms both KE-Mon and KE-Tr. When only 30 target training samples are available, WikiCiKE reaches performance comparable to that of KE-Mon using 300-500 target training samples. Among all of the 72 attributes in TEMPLATE PERSON of Chinese Wikipedia, 39 (54.17%) and 55 (76.39%) attributes have fewer than 30 and 200 labeled articles respectively. We can see that WikiCiKE can save considerable human labor when sufficient target training samples are not available.

We also examined the errors made by WikiCiKE, which can be categorized into three classes. For the attribute occupation, when 30 target training samples are used, there are 71 errors. The first category is caused by incorrect word segmentation (40.85%). In Chinese, there is no space between words, so we need to segment them before extraction. The result of word segmentation directly determines the performance of extraction, so it causes most of the errors. The second category is caused by incomplete infoboxes (36.62%). In the evaluation of KE-Mon, we directly use the values in infoboxes as golden values; some of them are incomplete, so correct predicted values are automatically judged as incorrect in these cases. The last category is mismatched words (22.54%): the predicted value does not match the golden value or a part of it. In the future, we can improve the performance of WikiCiKE by polishing the word segmentation result.

5 Related Work

Some approaches to knowledge extraction from the open Web have been proposed (Wu et al., 2012; Yates et al., 2007). Here we focus on the extraction inside Wikipedia.

5.1 Monolingual Infobox Extraction

KYLIN is the first system to autonomously extract the missing infoboxes from the corresponding article texts by using a self-supervised learning method (Wu and Weld, 2007). KYLIN performs well when enough training data are available. Such techniques as shrinkage and retraining are proposed to increase the recall from English Wikipedia's long tail of sparse classes (Wu et al., 2008; Wu and Weld, 2010). Different from Wu's research, WikiCiKE is a cross-lingual knowledge extraction framework, which leverages rich knowledge in the other language to improve extraction performance in the target Wikipedia.

5.2 Cross-lingual Infobox Completion

Current translation-based methods usually contain two steps: cross-lingual attribute alignment and value translation. The attribute alignment strategies can be grouped into two categories: cross-lingual link-based methods (Bouma et al., 2009) and classification-based methods (Adar et al., 2009; Nguyen et al., 2011; Aumueller et al., 2005; Adafre and de Rijke, 2006; Li et al., 2009). After the first step, the value in the source language is translated into the target language. E. Adar's approach gives an overall precision of 54% and recall of 40% (Adar et al., 2009). However, the recall of these methods is limited by the number of equivalent cross-lingual articles and the number of infoboxes in the source language. It is also limited by the quality of the translators. WikiCiKE attempts to mine the missing infoboxes directly from the article texts and thus achieves a higher recall compared with these methods, as shown in Section 4.3.

5.3 Transfer Learning

Transfer learning can be grouped into four categories: instance-transfer, feature-representation-transfer, parameter-transfer and relational-knowledge-transfer (Pan and Yang, 2010). TrAdaBoost, the instance-transfer approach, is an extension of the AdaBoost algorithm, and demonstrates better transfer ability than traditional learning techniques (Dai et al., 2007). Transfer learning has been widely studied for classification, regression, and clustering problems. However, few efforts have been spent on information extraction tasks with knowledge transfer.

6 Conclusion and Future Work

In this paper we proposed a general cross-lingual knowledge extraction framework called WikiCiKE, in which extraction performance in the target Wikipedia is improved by using rich infoboxes in the source language. The problems of topic drift and translation error were handled by using the TrAdaBoost model. Chinese-English experimental results on four typical attributes showed that WikiCiKE significantly outperforms both the current translation-based methods and the monolingual extraction methods. In theory, WikiCiKE can be applied to any two wiki knowledge bases of different languages.

We have been considering some future work. Firstly, more attributes in more infobox templates should be explored to make our results much stronger. Secondly, knowledge in a minor language may also help improve extraction performance for a major language due to cultural and religious differences. A bidirectional cross-lingual extraction approach will also be studied. Last but not least, we will try to extract multiple attr-value pairs at the same time for each article.

Furthermore, our work is part of a more ambitious agenda on exploitation of linked data. On the one hand, being able to extract data and knowledge from multilingual sources such as Wikipedia could help improve the coverage of linked data for applications. On the other hand, we are also investigating how to possibly integrate information, including subjective information (Sensoy et al., 2013), from multiple sources, so as to better support data exploitation in context dependent applications.

Acknowledgement

The work is supported by NSFC (No. 61035004), NSFC-ANR (No. 61261130588), 863 High Technology Program (2011AA01A207), FP7-288342, FP7 K-Drive project (286348), the EPSRC WhatIf project (EP/J014354/1) and THU-NUS NExT Co-Lab. Besides, we gratefully acknowledge the assistance of Haixun Wang (MSRA) in improving the paper.

References

S. Fissaha Adafre and M. de Rijke. 2006. Finding Similar Sentences across Multiple Languages in Wikipedia. EACL 2006 Workshop on New Text: Wikis and Blogs and Other Dynamic Text Sources.

Sisay Fissaha Adafre and Maarten de Rijke. 2005. Discovering Missing Links in Wikipedia. Proceedings of the 3rd International Workshop on Link Discovery.

Eytan Adar, Michael Skinner and Daniel S. Weld. 2009. Information Arbitrage across Multi-lingual Wikipedia. WSDM’09.

David Aumueller, Hong Hai Do, Sabine Massmann and Erhard Rahm. 2005. Schema and ontology matching with COMA++. SIGMOD Conference’05.

Christian Bizer, Jens Lehmann, Georgi Kobilarov, Soren Auer, Christian Becker, Richard Cyganiak and Sebastian Hellmann. 2009. DBpedia - A crystallization Point for the Web of Data. J. Web Sem.

Christian Bizer, Tom Heath, Kingsley Idehen and Tim Berners-Lee. 2008. Linked data on the web (LDOW2008). WWW’08.

Kurt Bollacker, Colin Evans, Praveen Paritosh, Tim Sturge and Jamie Taylor. 2008. Freebase: a Collaboratively Created Graph Database for Structuring Human Knowledge. SIGMOD’08.

Gosse Bouma, Geert Kloosterman, Jori Mur, Gertjan Van Noord, Lonneke Van Der Plas and Jorg Tiedemann. 2008. Question Answering with Joost at CLEF 2007. Working Notes for the CLEF 2008 Workshop.

Gosse Bouma, Sergio Duarte and Zahurul Islam. 2009. Cross-lingual Alignment and Completion of Wikipedia Templates. CLIAWS3 ’09.

Wenyuan Dai, Qiang Yang, Gui-Rong Xue and Yong Yu. 2007. Boosting for Transfer Learning. ICML’07.

Thomas G. Dietterich. 1998. Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms. Neural Comput.

Sergio Ferrandez, Antonio Toral, Oscar Ferrandez, Antonio Ferrandez and Rafael Munoz. 2009. Exploiting Wikipedia and EuroWordNet to Solve Cross-Lingual Question Answering. Inf. Sci.

Aidan Finn and Nicholas Kushmerick. 2004. Multi-level Boundary Classification for Information Extraction. ECML.

Achille Fokoue, Felipe Meneguzzi, Murat Sensoy and Jeff Z. Pan. 2012. Querying Linked Ontological Data through Distributed Summarization. Proc. of the 26th AAAI Conference on Artificial Intelligence (AAAI2012).

Yoav Freund and Robert E. Schapire. 1997. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci.

Norman Heino and Jeff Z. Pan. 2012. RDFS Reasoning on Massively Parallel Hardware. Proc. of the 11th International Semantic Web Conference (ISWC2012).

Aidan Hogan, Jeff Z. Pan, Axel Polleres and Yuan Ren. 2011. Scalable OWL 2 Reasoning for Linked Data. Reasoning Web. Semantic Technologies for the Web of Data.

Andreas Hotho, Robert Jaschke, Christoph Schmitz and Gerd Stumme. 2006. Information Retrieval in Folksonomies: Search and Ranking. ESWC’06.

John D. Lafferty, Andrew McCallum and Fernando C. N. Pereira. 2001. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. ICML’01.

Alberto Lavelli, Mary Elaine Califf, Fabio Ciravegna, Dayne Freitag, Claudio Giuliano, Nicholas Kushmerick, Lorenza Romano and Neil Ireson. 2008. Evaluation of Machine Learning-based Information Extraction Algorithms: Criticisms and Recommendations. Language Resources and Evaluation.

Juanzi Li, Jie Tang, Yi Li and Qiong Luo. 2009. RiMOM: A Dynamic Multistrategy Ontology Alignment Framework. IEEE Trans. Knowl. Data Eng.

Xiao Ling, Gui-Rong Xue, Wenyuan Dai, Yun Jiang, Qiang Yang and Yong Yu. 2008. Can Chinese Web Pages be Classified with English Data Source? WWW’08.

Sheila A. McIlraith, Tran Cao Son and Honglei Zeng. 2001. Semantic Web Services. IEEE Intelligent Systems.

Thanh Hoang Nguyen, Viviane Moreira, Huong Nguyen, Hoa Nguyen and Juliana Freire. 2011. Multilingual Schema Matching for Wikipedia Infoboxes. CoRR.

Jeff Z. Pan and Edward Thomas. 2007. Approximating OWL-DL Ontologies. 22nd AAAI Conference on Artificial Intelligence (AAAI-07).

Jeff Z. Pan and Ian Horrocks. 2007. RDFS(FA): Connecting RDF(S) and OWL DL. IEEE Transactions on Knowledge and Data Engineering. 19(2): 192-206.

Jeff Z. Pan and Ian Horrocks. 2006. OWL-Eu: Adding Customised Datatypes into OWL. Journal of Web Semantics.

Sinno Jialin Pan and Qiang Yang. 2010. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng.

Nick Roussopoulos, Stephen Kelley and Frederic Vincent. 1995. Nearest Neighbor Queries. SIGMOD Conference’95.

Murat Sensoy, Achille Fokoue, Jeff Z. Pan, Timothy Norman, Yuqing Tang, Nir Oren and Katia Sycara. 2013. Reasoning about Uncertain Information and Conflict Resolution through Trust Revision. Proc. of the 12th International Conference on Autonomous Agents and Multiagent Systems (AAMAS2013).

Fabian M. Suchanek, Gjergji Kasneci and Gerhard Weikum. 2007. Yago: a Core of Semantic Knowledge. WWW’07.

Max Volkel, Markus Krotzsch, Denny Vrandecic, Heiko Haller and Rudi Studer. 2006. Semantic Wikipedia. WWW’06.

Zhichun Wang, Juanzi Li, Zhigang Wang and Jie Tang. 2012. Cross-lingual Knowledge Linking across Wiki Knowledge Bases. 21st International World Wide Web Conference.

Daniel S. Weld, Fei Wu, Eytan Adar, Saleema Amershi, James Fogarty, Raphael Hoffmann, Kayur Patel and Michael Skinner. 2008. Intelligence in Wikipedia. AAAI’08.

Fei Wu and Daniel S. Weld. 2007. Autonomously Semantifying Wikipedia. CIKM’07.

Fei Wu and Daniel S. Weld. 2010. Open Information Extraction Using Wikipedia. ACL’10.

Fei Wu, Raphael Hoffmann and Daniel S. Weld. 2008. Information Extraction from Wikipedia: Moving down the Long Tail. KDD’08.

Wentao Wu, Hongsong Li, Haixun Wang and Kenny Qili Zhu. 2012. Probase: a Probabilistic Taxonomy for Text Understanding. SIGMOD Conference’12.

Alexander Yates, Michael Cafarella, Michele Banko, Oren Etzioni, Matthew Broadhead and Stephen Soderland. 2007. TextRunner: Open Information Extraction on the Web. NAACL-Demonstrations’07.

Xinfeng Zhang, Xiaozhao Xu, Yiheng Cai and Yaowei Liu. 2009. A Weighted Hyper-Sphere SVM. ICNC(3)’09.