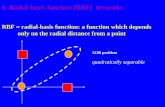

Training Radial Basis Function Networks

Christian Borgelt Artificial Neural Networks and Deep Learning 217

Radial Basis Function Networks: Initialization

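Let $L_{\text{fixed}} = \{l_1, \ldots, l_m\}$ be a fixed learning task, consisting of $m$ training patterns $l = (\vec{\imath}^{\,(l)}, \vec{o}^{\,(l)})$.

Simple radial basis function network: One hidden neuron $v_k$, $k = 1, \ldots, m$, for each training pattern:

$$\forall k \in \{1, \ldots, m\}: \quad \vec{w}_{v_k} = \vec{\imath}^{\,(l_k)}.$$

If the activation function is the Gaussian function, the radii $\sigma_k$ are chosen heuristically:

$$\forall k \in \{1, \ldots, m\}: \quad \sigma_k = \frac{d_{\max}}{\sqrt{2m}}, \qquad \text{where} \quad d_{\max} = \max_{l_j, l_k \in L_{\text{fixed}}} d\big(\vec{\imath}^{\,(l_j)}, \vec{\imath}^{\,(l_k)}\big).$$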
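As a quick check of the heuristic, consider the four input patterns $(0,0)$, $(1,0)$, $(0,1)$, $(1,1)$ of the biimplication example, assuming that $d$ is the Euclidean distance and that the Gaussian has the form $e^{-\mathrm{net}^2/(2\sigma^2)}$:

$$d_{\max} = \|(0,0) - (1,1)\| = \sqrt{2}, \qquad m = 4, \qquad \sigma_k = \frac{\sqrt{2}}{\sqrt{2 \cdot 4}} = \frac{1}{2}.$$

With this radius, a pattern at distance $1$ from a center yields the activation $e^{-1/(2 \cdot 1/4)} = e^{-2}$, and a pattern at distance $\sqrt{2}$ yields $e^{-4}$.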

Radial Basis Function Networks: Initialization

Initializing the connections from the hidden to the output neurons

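$$\forall u: \quad \sum_{k=1}^{m} w_{u v_k}\, \mathrm{out}_{v_k}^{(l)} - \theta_u = o_u^{(l)} \qquad \text{or abbreviated} \qquad \mathbf{A} \cdot \vec{w}_u = \vec{o}_u,$$

where $\vec{o}_u = (o_u^{(l_1)}, \ldots, o_u^{(l_m)})^\top$ is the vector of desired outputs, $\theta_u = 0$, and

$$\mathbf{A} = \begin{pmatrix}
\mathrm{out}_{v_1}^{(l_1)} & \mathrm{out}_{v_2}^{(l_1)} & \cdots & \mathrm{out}_{v_m}^{(l_1)} \\
\mathrm{out}_{v_1}^{(l_2)} & \mathrm{out}_{v_2}^{(l_2)} & \cdots & \mathrm{out}_{v_m}^{(l_2)} \\
\vdots & \vdots & & \vdots \\
\mathrm{out}_{v_1}^{(l_m)} & \mathrm{out}_{v_2}^{(l_m)} & \cdots & \mathrm{out}_{v_m}^{(l_m)}
\end{pmatrix}.$$

This is a linear equation system that can be solved by inverting the matrix $\mathbf{A}$:

$$\vec{w}_u = \mathbf{A}^{-1} \cdot \vec{o}_u.$$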
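The initialization of a simple radial basis function network can be sketched in a few lines of NumPy. This is a minimal illustration, not the lecture's implementation: the function name `init_simple_rbfn` is hypothetical, the distance is assumed to be Euclidean, the activation is assumed to be the Gaussian $e^{-\mathrm{net}^2/(2\sigma^2)}$, and the system is solved with `np.linalg.solve` rather than by forming $\mathbf{A}^{-1}$ explicitly.

```python
import numpy as np

def init_simple_rbfn(inputs, targets):
    """Initialize a simple RBF network (one Gaussian hidden neuron per pattern).

    inputs:  array of shape (m, n), one training input per row
    targets: array of shape (m,), desired outputs of one output neuron u
    Returns (centers, sigma, weights); the threshold theta_u is fixed to 0.
    """
    inputs = np.asarray(inputs, dtype=float)
    targets = np.asarray(targets, dtype=float)
    m = inputs.shape[0]

    # Centers: each hidden neuron copies one training input.
    centers = inputs.copy()

    # Pairwise Euclidean distances between all training inputs.
    diffs = inputs[:, None, :] - inputs[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=2))

    # Heuristic radius sigma = d_max / sqrt(2 m), shared by all hidden neurons.
    sigma = dists.max() / np.sqrt(2.0 * m)

    # A[j, k] = output of hidden neuron k for pattern j (Gaussian activation).
    A = np.exp(-dists ** 2 / (2.0 * sigma ** 2))

    # Solve A w = o directly instead of computing the inverse of A.
    weights = np.linalg.solve(A, targets)
    return centers, sigma, weights

# Biimplication x1 <-> x2 as a small test case.
X = [[0, 0], [1, 0], [0, 1], [1, 1]]
o = [1, 0, 0, 1]
centers, sigma, weights = init_simple_rbfn(X, o)
```

Solving the system is numerically preferable to inverting the matrix, but both give the same weights whenever $\mathbf{A}$ is regular.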

RBFN Initialization: Example

Simple radial basis function network for the biimplication x1 ↔ x2

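| x1 | x2 | y |
|----|----|---|
| 0  | 0  | 1 |
| 1  | 0  | 0 |
| 0  | 1  | 0 |
| 1  | 1  | 1 |

[Figure: network with inputs x1 and x2, four hidden neurons (one per training pattern, radius 1/2 each), and one output neuron y with threshold 0 and connection weights w1, w2, w3, w4.]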

RBFN Initialization: Example

Simple radial basis function network for the biimplication x1 ↔ x2

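$$\mathbf{A} = \begin{pmatrix}
1 & e^{-2} & e^{-2} & e^{-4} \\
e^{-2} & 1 & e^{-4} & e^{-2} \\
e^{-2} & e^{-4} & 1 & e^{-2} \\
e^{-4} & e^{-2} & e^{-2} & 1
\end{pmatrix}
\qquad
\mathbf{A}^{-1} = \frac{1}{D} \begin{pmatrix}
a & b & b & c \\
b & a & c & b \\
b & c & a & b \\
c & b & b & a
\end{pmatrix}$$

where

$$\begin{aligned}
D &= 1 - 4e^{-4} + 6e^{-8} - 4e^{-12} + e^{-16} \approx 0.9287, \\
a &= 1 - 2e^{-4} + e^{-8} \approx 0.9637, \\
b &= -e^{-2} + 2e^{-6} - e^{-10} \approx -0.1304, \\
c &= e^{-4} - 2e^{-8} + e^{-12} \approx 0.0177.
\end{aligned}$$

$$\vec{w}_u = \mathbf{A}^{-1} \cdot \vec{o}_u = \frac{1}{D} \begin{pmatrix} a + c \\ 2b \\ 2b \\ a + c \end{pmatrix} \approx \begin{pmatrix} 1.0567 \\ -0.2809 \\ -0.2809 \\ 1.0567 \end{pmatrix}$$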
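The numerical values can be checked by hand. As a side observation (not stated on the slide), all four constants share the factor $(1 - e^{-4})^2$:

$$D = (1 - e^{-4})^4, \qquad a = (1 - e^{-4})^2, \qquad b = -e^{-2}(1 - e^{-4})^2, \qquad c = e^{-4}(1 - e^{-4})^2,$$

so that the weights simplify to

$$\frac{a + c}{D} = \frac{1 + e^{-4}}{(1 - e^{-4})^2} \approx \frac{1.0183}{0.9637} \approx 1.0567, \qquad \frac{2b}{D} = \frac{-2e^{-2}}{(1 - e^{-4})^2} \approx \frac{-0.2707}{0.9637} \approx -0.2809.$$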

RBFN Initialization: Example

Simple radial basis function network for the biimplication x1 ↔ x2

[Figure: three surface plots over x1 and x2 (both axes from −1 to 2): the activation of a single basis function, the activations of all basis functions, and the network output y.]

• Initialization already leads to a perfect solution of the learning task.

• Subsequent training is not necessary.

Radial Basis Function Networks: Initialization

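Normal radial basis function networks: Select subset of $k$ training patterns as centers.

$$\mathbf{A} = \begin{pmatrix}
1 & \mathrm{out}_{v_1}^{(l_1)} & \mathrm{out}_{v_2}^{(l_1)} & \cdots & \mathrm{out}_{v_k}^{(l_1)} \\
1 & \mathrm{out}_{v_1}^{(l_2)} & \mathrm{out}_{v_2}^{(l_2)} & \cdots & \mathrm{out}_{v_k}^{(l_2)} \\
\vdots & \vdots & \vdots & & \vdots \\
1 & \mathrm{out}_{v_1}^{(l_m)} & \mathrm{out}_{v_2}^{(l_m)} & \cdots & \mathrm{out}_{v_k}^{(l_m)}
\end{pmatrix}
\qquad
\mathbf{A} \cdot \vec{w}_u = \vec{o}_u$$

Compute (Moore–Penrose) pseudo-inverse:

$$\mathbf{A}^{+} = (\mathbf{A}^\top \mathbf{A})^{-1} \mathbf{A}^\top.$$

The weights can then be computed by

$$\vec{w}_u = \mathbf{A}^{+} \cdot \vec{o}_u = (\mathbf{A}^\top \mathbf{A})^{-1} \mathbf{A}^\top \cdot \vec{o}_u$$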

RBFN Initialization: Example

Normal radial basis function network for the biimplication x1 ↔ x2

Select two training patterns:

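• $l_1 = (\vec{\imath}^{\,(l_1)}, \vec{o}^{\,(l_1)}) = ((0, 0), (1))$

• $l_4 = (\vec{\imath}^{\,(l_4)}, \vec{o}^{\,(l_4)}) = ((1, 1), (1))$

[Figure: network with inputs x1 and x2, two hidden neurons (radius 1/2 each) centered at (1, 1) and (0, 0), and one output neuron y with threshold θ and connection weights w1, w2.]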

RBFN Initialization: Example

Normal radial basis function network for the biimplication x1 ↔ x2

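$$\mathbf{A} = \begin{pmatrix}
1 & 1 & e^{-4} \\
1 & e^{-2} & e^{-2} \\
1 & e^{-2} & e^{-2} \\
1 & e^{-4} & 1
\end{pmatrix}
\qquad
\mathbf{A}^{+} = (\mathbf{A}^\top \mathbf{A})^{-1} \mathbf{A}^\top = \begin{pmatrix}
a & b & b & a \\
c & d & d & e \\
e & d & d & c
\end{pmatrix}$$

where

$$a \approx -0.1810, \quad b \approx 0.6810, \quad c \approx 1.1781, \quad d \approx -0.6688, \quad e \approx 0.1594.$$

Resulting weights:

$$\vec{w}_u = \begin{pmatrix} -\theta \\ w_1 \\ w_2 \end{pmatrix} = \mathbf{A}^{+} \cdot \vec{o}_u \approx \begin{pmatrix} -0.3620 \\ 1.3375 \\ 1.3375 \end{pmatrix}.$$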
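The pseudo-inverse computation for this example can be reproduced with NumPy. The following is a sketch under stated assumptions: the helper `gaussian_design_matrix` is a hypothetical name, the radius 1/2 is inferred from the matrix entries $e^{-2}$ and $e^{-4}$, and the distance is taken to be Euclidean. It compares the explicit formula $(\mathbf{A}^\top \mathbf{A})^{-1} \mathbf{A}^\top$ with `np.linalg.pinv`, which computes the pseudo-inverse via a singular value decomposition.

```python
import numpy as np

def gaussian_design_matrix(inputs, centers, sigma):
    """Return A: a leading column of ones followed by the Gaussian hidden outputs."""
    inputs = np.asarray(inputs, dtype=float)
    centers = np.asarray(centers, dtype=float)
    diffs = inputs[:, None, :] - centers[None, :, :]
    sq_dists = (diffs ** 2).sum(axis=2)
    hidden = np.exp(-sq_dists / (2.0 * sigma ** 2))
    return np.hstack([np.ones((inputs.shape[0], 1)), hidden])

X = [[0, 0], [1, 0], [0, 1], [1, 1]]   # all four training inputs
o = np.array([1.0, 0.0, 0.0, 1.0])     # desired outputs of the biimplication
centers = [[0, 0], [1, 1]]             # the two selected patterns l1 and l4
sigma = 0.5                            # assumption: radius implied by e^-2, e^-4

A = gaussian_design_matrix(X, centers, sigma)

# Explicit formula from the slide.
A_plus = np.linalg.inv(A.T @ A) @ A.T
w_formula = A_plus @ o                 # components: (-theta, w1, w2)

# SVD-based pseudo-inverse; also works if A^T A is singular.
w_pinv = np.linalg.pinv(A) @ o

# Residual of the linear system for the computed weights.
residual = A @ w_formula - o
```

The explicit formula requires $\mathbf{A}^\top \mathbf{A}$ to be invertible, that is, the columns of $\mathbf{A}$ to be linearly independent; `np.linalg.pinv` does not need this.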

RBFN Initialization: Example

Normal radial basis function network for the biimplication x1 ↔ x2

[Figure: three surface plots over x1 and x2 (both axes from −1 to 2): basis function (0,0), basis function (1,1), and the network output y, with the offset −0.36 and the point (1, 0) marked.]

• Initialization already leads to a perfect solution of the learning task.

• This is an accident, because the linear equation system is not over-determined, due to linearly dependent equations.

Radial Basis Function Networks: Initialization

How to choose the radial basis function centers?

• Use all data points as centers for the radial basis functions.

◦ Advantages: Only radius and output weights need to be determined; desired output values can be achieved exactly (unless there are inconsistencies).

◦ Disadvantage: Often far too many radial basis functions; computing the weights to the output neuron via a pseudo-inverse can become infeasible.

• Use a random subset of data points as centers for the radial basis functions.

◦ Advantages: Fast; only radius and output weights need to be determined.

◦ Disadvantages: Performance depends heavily on the choice of data points.

• Use the result of clustering as centers for the radial basis functions, e.g.

◦ c-means clustering (on the next slides)

◦ Learning vector quantization (to be discussed later)

RBFN Initialization: c-means Clustering

• Choose a number c of clusters to be found (user input).

• Initialize the cluster centers randomly (for instance, by randomly selecting c data points).

• Data point assignment: Assign each data point to the cluster center that is closest to it (that is, closer than any other cluster center).

• Cluster center update: Compute new cluster centers as the mean vectors of the assigned data points. (Intuitively: center of gravity if each data point has unit weight.)

• Repeat these two steps (data point assignment and cluster center update) until the cluster centers do not change anymore.

It can be shown that this scheme must converge, that is, the update of the cluster centers cannot go on forever.
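The steps above can be sketched as follows. This is a minimal illustration and not the lecture's code: the function name `c_means`, the `max_iter` safeguard, and the handling of empty clusters (the old center is kept) are assumptions added for the example.

```python
import numpy as np

def c_means(data, c, rng=None, max_iter=100):
    """Cluster `data` (shape (N, n)) into c clusters; returns (centers, labels)."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(rng)

    # Initialization: randomly select c distinct data points as centers.
    centers = data[rng.choice(len(data), size=c, replace=False)]

    for _ in range(max_iter):
        # Data point assignment: index of the closest center for each point.
        dists = np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)

        # Cluster center update: mean vector of the assigned data points.
        # Assumption: a cluster without assigned points keeps its old center.
        new_centers = np.array([
            data[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(c)
        ])

        # Stop as soon as the centers no longer change.
        if np.allclose(new_centers, centers):
            break
        centers = new_centers

    return centers, labels

# Two well-separated groups of four points each.
points = np.array([[0, 0], [0, 1], [1, 0], [1, 1],
                   [10, 10], [10, 11], [11, 10], [11, 11]], dtype=float)
found_centers, found_labels = c_means(points, c=2, rng=0)
```

The resulting centers can be used directly as the centers of the radial basis functions. The result depends on the random initialization in general, so several restarts are common in practice.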

c-Means Clustering: Example

Data set to cluster.

Choose c = 3 clusters. (From visual inspection; can be difficult to determine in general.)

Initial position of cluster centers.

Randomly selected data points. (Alternative methods include e.g. Latin hypercube sampling.)

Delaunay Triangulations and Voronoi Diagrams

• Dots represent cluster centers.

• Left: Delaunay Triangulation. The circle through the corners of a triangle does not contain another point.

• Right: Voronoi Diagram / Tessellation. Midperpendiculars of the Delaunay triangulation: boundaries of the regions of points that are closest to the enclosed cluster center (Voronoi cells).

Delaunay Triangulations and Voronoi Diagrams

• Delaunay Triangulation: simple triangle (shown in gray on the left)

• Voronoi Diagram: midperpendiculars of the triangle’s edges (shown in blue on the left, in gray on the right)

c-Means Clustering: Example

Radial Basis Function Networks: Training

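Training radial basis function networks: Derivation of update rules is analogous to that of multi-layer perceptrons.

Weights from the hidden to the output neurons.

Gradient:

$$\vec{\nabla}_{\vec{w}_u} e_u^{(l)} = \frac{\partial e_u^{(l)}}{\partial \vec{w}_u} = -2\,(o_u^{(l)} - \mathrm{out}_u^{(l)})\; \vec{\mathrm{in}}_u^{(l)},$$

Weight update rule:

$$\Delta \vec{w}_u^{(l)} = -\frac{\eta_3}{2}\, \vec{\nabla}_{\vec{w}_u} e_u^{(l)} = \eta_3\,(o_u^{(l)} - \mathrm{out}_u^{(l)})\; \vec{\mathrm{in}}_u^{(l)}$$

Typical learning rate: $\eta_3 \approx 0.001$.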

(Two more learning rates are needed for the center coordinates and the radii.)
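A single online step of the output weight update can be written as a short function. This is a sketch with assumptions that go beyond the slide: the output neuron is taken to be linear, and the threshold is folded into an extended weight vector with a constant extended input of 1. The name `update_output_weights` is hypothetical.

```python
import numpy as np

def update_output_weights(w_u, in_u, o_u, eta3=0.001):
    """Return the weight vector of output neuron u after one online step.

    w_u:  extended weight vector (-theta_u, w_{u v_1}, ..., w_{u v_k})
    in_u: extended input vector  (1, out_{v_1}, ..., out_{v_k}) for pattern l
    o_u:  desired output of neuron u for pattern l
    """
    w_u = np.asarray(w_u, dtype=float)
    in_u = np.asarray(in_u, dtype=float)
    out_u = float(w_u @ in_u)                 # assumption: linear output neuron
    return w_u + eta3 * (o_u - out_u) * in_u  # delta w = eta3 (o - out) in

# One step from zero weights with a deliberately large learning rate.
w_start = np.zeros(3)
hidden_ext = np.array([1.0, 0.5, 0.2])
w_next = update_output_weights(w_start, hidden_ext, o_u=1.0, eta3=0.1)
```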

Radial Basis Function Networks: Training

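Training radial basis function networks: Center coordinates (weights from the input to the hidden neurons).

Gradient:

$$\vec{\nabla}_{\vec{w}_v} e^{(l)} = \frac{\partial e^{(l)}}{\partial \vec{w}_v} = -2 \sum_{s \in \mathrm{succ}(v)} (o_s^{(l)} - \mathrm{out}_s^{(l)})\, w_{sv}\, \frac{\partial\, \mathrm{out}_v^{(l)}}{\partial\, \mathrm{net}_v^{(l)}}\, \frac{\partial\, \mathrm{net}_v^{(l)}}{\partial \vec{w}_v}$$

Weight update rule:

$$\Delta \vec{w}_v^{(l)} = -\frac{\eta_1}{2}\, \vec{\nabla}_{\vec{w}_v} e^{(l)} = \eta_1 \sum_{s \in \mathrm{succ}(v)} (o_s^{(l)} - \mathrm{out}_s^{(l)})\, w_{sv}\, \frac{\partial\, \mathrm{out}_v^{(l)}}{\partial\, \mathrm{net}_v^{(l)}}\, \frac{\partial\, \mathrm{net}_v^{(l)}}{\partial \vec{w}_v}$$

Typical learning rate: $\eta_1 \approx 0.02$.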

Radial Basis Function Networks: Training

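Training radial basis function networks: Center coordinates (weights from the input to the hidden neurons).

Special case: Euclidean distance

$$\frac{\partial\, \mathrm{net}_v^{(l)}}{\partial \vec{w}_v} = \left( \sum_{i=1}^{n} \big(w_{v p_i} - \mathrm{out}_{p_i}^{(l)}\big)^2 \right)^{-\frac{1}{2}} \big(\vec{w}_v - \vec{\mathrm{in}}_v^{(l)}\big).$$

Special case: Gaussian activation function

$$\frac{\partial\, \mathrm{out}_v^{(l)}}{\partial\, \mathrm{net}_v^{(l)}} = \frac{\partial f_{\mathrm{act}}(\mathrm{net}_v^{(l)}, \sigma_v)}{\partial\, \mathrm{net}_v^{(l)}} = \frac{\partial}{\partial\, \mathrm{net}_v^{(l)}}\, e^{-\frac{(\mathrm{net}_v^{(l)})^2}{2\sigma_v^2}} = -\frac{\mathrm{net}_v^{(l)}}{\sigma_v^2}\, e^{-\frac{(\mathrm{net}_v^{(l)})^2}{2\sigma_v^2}}.$$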
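If both special cases apply at once (Euclidean distance and Gaussian activation), the two factors can be multiplied out. The following step is not on the slide; it assumes $\mathrm{net}_v^{(l)} \neq 0$, so that the inverse square root above equals $1 / \mathrm{net}_v^{(l)}$ and cancels against the factor $\mathrm{net}_v^{(l)}$ of the Gaussian derivative:

$$\frac{\partial\, \mathrm{out}_v^{(l)}}{\partial\, \mathrm{net}_v^{(l)}}\, \frac{\partial\, \mathrm{net}_v^{(l)}}{\partial \vec{w}_v} = -\frac{\mathrm{net}_v^{(l)}}{\sigma_v^2}\, \mathrm{out}_v^{(l)} \cdot \frac{1}{\mathrm{net}_v^{(l)}} \big(\vec{w}_v - \vec{\mathrm{in}}_v^{(l)}\big) = \frac{\mathrm{out}_v^{(l)}}{\sigma_v^2} \big(\vec{\mathrm{in}}_v^{(l)} - \vec{w}_v\big),$$

so the update of the center coordinates becomes

$$\Delta \vec{w}_v^{(l)} = \eta_1 \sum_{s \in \mathrm{succ}(v)} (o_s^{(l)} - \mathrm{out}_s^{(l)})\, w_{sv}\, \frac{\mathrm{out}_v^{(l)}}{\sigma_v^2} \big(\vec{\mathrm{in}}_v^{(l)} - \vec{w}_v\big).$$

The center thus moves toward the input pattern if the weighted output error is positive and away from it if the weighted error is negative.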

Radial Basis Function Networks: Training

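Training radial basis function networks: Radii of radial basis functions.

Gradient:

$$\frac{\partial e^{(l)}}{\partial \sigma_v} = -2 \sum_{s \in \mathrm{succ}(v)} (o_s^{(l)} - \mathrm{out}_s^{(l)})\, w_{sv}\, \frac{\partial\, \mathrm{out}_v^{(l)}}{\partial \sigma_v}.$$

Weight update rule:

$$\Delta \sigma_v^{(l)} = -\frac{\eta_2}{2}\, \frac{\partial e^{(l)}}{\partial \sigma_v} = \eta_2 \sum_{s \in \mathrm{succ}(v)} (o_s^{(l)} - \mathrm{out}_s^{(l)})\, w_{sv}\, \frac{\partial\, \mathrm{out}_v^{(l)}}{\partial \sigma_v}.$$

Typical learning rate: $\eta_2 \approx 0.01$.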

Radial Basis Function Networks: Training

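Training radial basis function networks: Radii of radial basis functions.

Special case: Gaussian activation function

$$\frac{\partial\, \mathrm{out}_v^{(l)}}{\partial \sigma_v} = \frac{\partial}{\partial \sigma_v}\, e^{-\frac{(\mathrm{net}_v^{(l)})^2}{2\sigma_v^2}} = \frac{(\mathrm{net}_v^{(l)})^2}{\sigma_v^3}\, e^{-\frac{(\mathrm{net}_v^{(l)})^2}{2\sigma_v^2}}.$$

(The distance function is irrelevant for the radius update, since it only enters the network input function.)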
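The three update rules (centers, radii, output weights) can be combined into one online training step. The sketch below is an illustration under stated assumptions, not the lecture's implementation: Euclidean distance, Gaussian activation, linear output neurons with thresholds, and the hypothetical function names `rbfn_forward` and `rbfn_online_step`. The default learning rates are the typical values quoted on the slides.

```python
import numpy as np

def rbfn_forward(x, centers, sigmas, w_out, theta):
    """Forward pass for one input x; returns (net, hidden, out)."""
    net = np.linalg.norm(centers - x, axis=1)           # Euclidean distances
    hidden = np.exp(-net ** 2 / (2.0 * sigmas ** 2))    # Gaussian activations
    out = w_out @ hidden - theta                        # linear output neurons
    return net, hidden, out

def rbfn_online_step(x, o, centers, sigmas, w_out, theta,
                     eta1=0.02, eta2=0.01, eta3=0.001):
    """One online gradient step for the error e = sum_s (o_s - out_s)^2.

    Shapes: x (n,), o (p,), centers (k, n), sigmas (k,), w_out (p, k), theta (p,).
    Returns the updated (centers, sigmas, w_out, theta) as new arrays.
    """
    net, hidden, out = rbfn_forward(x, centers, sigmas, w_out, theta)
    delta = o - out                    # (o_s - out_s) for each output neuron s
    back = w_out.T @ delta             # sum_s (o_s - out_s) w_sv per hidden v

    # Centers: d out_v / d w_v = out_v * (x - w_v) / sigma_v^2.
    d_centers = eta1 * (back * hidden / sigmas ** 2)[:, None] * (x - centers)
    # Radii: d out_v / d sigma_v = net_v^2 / sigma_v^3 * out_v.
    d_sigmas = eta2 * back * net ** 2 / sigmas ** 3 * hidden
    # Output weights: delta w_sv = eta3 (o_s - out_s) out_v.
    d_w_out = eta3 * np.outer(delta, hidden)
    # Thresholds enter the output with a minus sign.
    d_theta = -eta3 * delta

    return (centers + d_centers, sigmas + d_sigmas,
            w_out + d_w_out, theta + d_theta)
```

All updates are computed from the same forward pass before any parameter is changed, which matches taking one gradient step on the error of a single pattern.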

Radial Basis Function Networks: Generalization

Generalization of the distance function

Idea: Use anisotropic (direction-dependent) distance function.

Example: Mahalanobis distance

$$d(\vec{x}, \vec{y}) = \sqrt{(\vec{x} - \vec{y})^\top\, \mathbf{\Sigma}^{-1} (\vec{x} - \vec{y})}.$$

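Example: biimplication

$$\mathbf{\Sigma} = \begin{pmatrix} 9 & 8 \\ 8 & 9 \end{pmatrix}$$

[Figure: network with inputs x1 and x2, a single hidden neuron using the Mahalanobis distance, and one output neuron y; next to it, the resulting region in the x1-x2 plane over the unit square.]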
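The effect of the covariance matrix from the example can be checked numerically. The sketch below assumes a single center at (1/2, 1/2), midway between (0, 0) and (1, 1); this center and the function name `mahalanobis` are assumptions made for illustration.

```python
import numpy as np

def mahalanobis(x, y, cov):
    """Mahalanobis distance sqrt((x - y)^T cov^{-1} (x - y))."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    # Solve cov z = diff instead of inverting cov explicitly.
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))

cov = np.array([[9.0, 8.0], [8.0, 9.0]])
center = [0.5, 0.5]   # assumption: one center between (0, 0) and (1, 1)

# Patterns with output 1 lie along the diagonal x1 = x2.
d_00 = mahalanobis([0, 0], center, cov)
d_11 = mahalanobis([1, 1], center, cov)

# Patterns with output 0 lie across the diagonal.
d_10 = mahalanobis([1, 0], center, cov)
d_01 = mahalanobis([0, 1], center, cov)
```

Although all four corners have the same Euclidean distance to the center, the Mahalanobis distance to (0, 0) and (1, 1) is about four times smaller than to (1, 0) and (0, 1), so a single radial basis function can separate the two classes.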

Application: Recognition of Handwritten Digits

picture not available in online version

• Images of 20,000 handwritten digits (2,000 per class), split into training and test data sets of 10,000 samples each (1,000 per class).

• Represented in a normalized fashion as 16 × 16 gray values in {0, ..., 255}.

• Data was originally used in the StatLog project [Michie et al. 1994].

Application: Recognition of Handwritten Digits

• Comparison of various classifiers:

◦ Nearest Neighbor (1NN)

◦ Learning Vector Quantization (LVQ)

◦ Decision Tree (C4.5)

◦ Radial Basis Function Network (RBF)

◦ Multi-Layer Perceptron (MLP)

◦ Support Vector Machine (SVM)

• Distinction of the number of RBF training phases:

◦ 1 phase: find output connection weights e.g. with pseudo-inverse.

◦ 2 phase: find RBF centers e.g. with some clustering plus 1 phase.

◦ 3 phase: 2 phase plus error backpropagation training.

• Initialization of radial basis function centers:

◦ Random choice of data points

◦ c-means Clustering

◦ Learning Vector Quantization

◦ Decision Tree (one RBF center per leaf)

Application: Recognition of Handwritten Digits

picture not available in online version

• The 60 cluster centers (6 per class) resulting from c-means clustering. (Clustering was conducted with c = 6 for each class separately.)

• Initial cluster centers were selected randomly from the training data.

• The weights of the connections to the output neuron were computed with the pseudo-inverse method.

Application: Recognition of Handwritten Digits

picture not available in online version

• The 60 cluster centers (6 per class) after training the radial basis function network with error backpropagation.

• Differences between the initial and the trained centers of the radial basis functions appear to be fairly small, but ...

Application: Recognition of Handwritten Digits

picture not available in online version

• Distance matrices showing the Euclidean distances of the 60 radial basis function centers before and after training.

• Centers are sorted by class/digit: first 6 rows/columns refer to digit 0, next 6 rows/columns to digit 1 etc.

• Distances are encoded as gray values: darker means smaller distance.

Application: Recognition of Handwritten Digits

picture not available in online version

• Before training (left): many distances between centers of different classes/digits are small (e.g. 2-3, 3-8, 3-9, 5-8, 5-9), which increases the chance of misclassifications.

• After training (right): only very few small distances between centers of different classes/digits; basically all small distances between centers of same class/digit.

Application: Recognition of Handwritten Digits

Classification results:

| Classifier | Accuracy |
|------------|----------|
| Nearest Neighbor (1NN) | 97.68% |
| Learning Vector Quantization (LVQ) | 96.99% |
| Decision Tree (C4.5) | 91.12% |
| 2-Phase-RBF (data points) | 95.24% |
| 2-Phase-RBF (c-means) | 96.94% |
| 2-Phase-RBF (LVQ) | 95.86% |
| 2-Phase-RBF (C4.5) | 92.72% |
| 3-Phase-RBF (data points) | 97.23% |
| 3-Phase-RBF (c-means) | 98.06% |
| 3-Phase-RBF (LVQ) | 98.49% |
| 3-Phase-RBF (C4.5) | 94.83% |
| Support Vector Machine (SVM) | 98.76% |
| Multi-Layer Perceptron (MLP) | 97.59% |

• LVQ: 200 vectors (20 per class); C4.5: 505 leaves; c-means: 60 centers(?) (6 per class); SVM: 10 classifiers, ≈ 4200 vectors; MLP: 1 hidden layer with 200 neurons.

• Results are medians of three training/test runs.

• Error backpropagation improves RBF results.
