Computer-based scoring . . . 1

The criterion-related validity of a computer-based approach for scoring concept maps

by

Roy B. Clariana, Ravinder Koul, and Roya Salehi

International Journal of Instructional Media, 33 (3), in press

Anticipated publication date – October, 2006

Abstract

This investigation seeks to confirm a computer-based approach that can be

used to score concept maps (Poindexter & Clariana, in press) and then

describes the concurrent criterion-related validity of these scores.

Participants enrolled in two graduate education courses (n=24) were asked to

read about and research online the structure and function of the heart and

circulatory system, to construct a concept map, and then to write a 250-word

essay on this topic. Pathfinder and Latent Semantic Analysis approaches

were used to analyze the data. Term agreement with an expert was

significantly related to human-rater concept map scores (r = 0.75). All of the

computer-derived scores were significantly correlated to human-rater essay

scores (maximum r = 0.83). These results indicate that automatically

derived concept map scores can provide relatively low-cost, easy-to-use,

and easy-to-interpret measures of students’ science content knowledge.

There is an extensive and growing literature on the use of concept maps as an

alternative form of assessment (Markham, Mintzes, & Jones, 1994; Ruiz-Primo, 2000;

Ruiz-Primo, Schultz, Li, & Shavelson, 2000; Ruiz-Primo, Shavelson, Li, & Schultz,

2001; Shavelson, Lang, & Lewin, 1994). Jonassen and Wang (1992) propose that

well-developed structural knowledge (the knowledge of the structural

interrelationship of knowledge elements) of a content area is necessary in order to flexibly

use that knowledge. Concept maps and essays may be an appropriate approach for

assessing structural knowledge (Jonassen, Beissner, & Yacci, 1993).

Recently, Poindexter and Clariana (in press) devised a computer-based

approach for collecting what they termed “distance and link” data from existing

paper-based concept maps. Their use of distance data is new and therefore untested, but it is

based on principles of free association data collection (Deese, 1965) and on current

connectionist modeling of language and knowledge structure (Elman, 1995;

McClelland, McNaughton, & O’Reilly, 1995). Their use of link data is assumed to be

nearly equivalent to scoring propositions (a proposition is defined as two concept

terms connected by a labeled link line; the label is usually a verb such as “is a”, “has

a”, “contains”). For example, Harper, Hoeft, Evans, and Jentsch (2004) reported that

the correlation between simply counting link lines and actually scoring correct

and valid propositions in the same set of maps was r = 0.97, suggesting that the

substantial extra time and effort required to specify and hand-score all possible

linking terms adds little additional information over just counting link lines.

Poindexter and Clariana (in press) reported that the actual geometric distances

between terms in a concept map were most related to comprehension multiple-choice

posttest scores (r = 0.71) while links drawn connecting terms were most related to

terminology multiple-choice posttest scores (r = 0.77). They proposed that, because

essays are likely more like comprehension than like terminology, concept map

distance data should be a better predictor of essay scores than is link data.

Essays are a well researched, important, versatile, somewhat reliable, and

authentic but expensive form of assessment (Nitko, 1996). Though not ordinarily

coupled together, essays and concept maps are expected to be highly related and

complementary forms of assessment (Goldsmith & Johnson, 1990; Goldsmith,

Johnson, & Acton, 1991; Gonzalvo, Canas, & Bajo, 1994). For example, one of the

first large-scale uses of concept maps in assessment (e.g., the Connecticut statewide

assessment) involved converting student essays into concept maps, and then hand

scoring the concept maps as a measure of science content knowledge and

comprehension (Lomask, Baron, Greig, & Harrison, 1992). In fact, Novak originally

invented concept maps in order to translate copious interview data into visual

representations that are easier to compare and analyze (Novak & Gowin, 1984). Since

essays are a well-established and accepted assessment approach, essay scores provide

a reasonable criterion variable for comparison to concept map scores.

A closely related issue involves the influence of the structure of lesson content

on the resulting cognitive structure of the learner (Landauer, Laham, Rehder, &

Schreiner, 1997; Shavelson, 1972). Kintsch (1994) distinguishes between the

students’ “knowledge-base” and the “text-base” in reading comprehension, with the

idea that information in the text passage interacts with the students’ knowledge. To

examine the effects of lesson text on concept maps, instructional text passages were

provided to half of the participants but not to the other half. Analyses consider how

these text passages influence the resulting concept maps and essays.

The purpose of this investigation is to extend the computer-based approach

described by Poindexter and Clariana (in press) for scoring concept maps by

describing the concurrent criterion-related validity of these scores with human-rater

concept map and essay scores. In addition, the effects of instructional text passages on

the uniformity of the resulting concept maps and essays are considered.

Method

Population, Materials, and Procedure

The participants in this investigation were practicing teachers (n = 24) enrolled

in graduate-level courses on our campus. The participants ranged in age from 30 to 38

years; 14 were female and 10 were male. Participants were briefed on the purpose

of the investigation and were asked to participate, and all agreed.

One group (the internet but no text group) used the Internet to find information

on the structure and function of the human heart, while the second group (the text plus

internet group) was given printed materials taken from the Internet by the instructor

as well as Internet access.

The printed materials given to the text plus internet group consisted of five

appropriate text passages on the structure and function of the heart taken from the

Internet. The text passages ranged from about 1000 words to 2400 words in length,

with an average Flesch-Kincaid Grade level of 9th grade. There were 18 figures in

the five text passages, which were mainly labeled line drawings of the heart and

circulatory system. The five text passages and 18 figures were dissimilar in style and

approach, but the information in the passages was similar.

Working in pairs, participants were asked to select key terms to describe the

anatomy, function, and purpose of the human heart and circulatory system and then

list these terms on separate yellow sticky “post-it” notes. On a large sheet of blank

newsprint paper (about 27 by 30 inches), pairs represented the associations between

terms by placing the “yellow stickies” on the newsprint in groups and clusters; and

then added, combined, and removed terms in order to reach consensus. Next, the pairs

formed propositions by drawing lines and arrows between terms with colored markers

and then described the relationships between the concepts by labeling the lines. After

the concept maps were created, the pairs were asked to review and reflect on their

own concept map and then write an approximately 250-word essay to describe the

function of the human heart and circulatory system.

Concept Map Scores and Rubric

Human-rater scores for the concept maps serve as the main criterion in this

investigation. The participants who created the maps and essays changed roles and

became raters. Using the Lomask et al. (1992) rubric, 5 pairs of human raters scored

the text plus internet group’s concept maps and 5 different raters scored the internet

but no text group’s concept maps. This rubric considered size (the count of terms in a

student map expressed as a proportion of terms in an expert concept map) and

strength (the count of links in a student map as a proportion of necessary, accurate

connections with respect to the expert map). However, an expert map representation

was not provided to the raters; each rater pair had to reach consensus on the expert

representation of this content. Cronbach alpha reliability for the human-rated concept

map scores for the text plus internet group was 0.73 and for the internet but no text

group, 0.91.
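As an illustration of how a reliability coefficient of this kind can be computed, the following minimal sketch implements the standard Cronbach alpha formula, treating each rater as an "item" and each concept map as a case. The function name, the data layout, and the example ratings are hypothetical; they are not the data from this investigation.

```python
from statistics import pvariance


def cronbach_alpha(ratings):
    """Cronbach alpha for ratings[r][m] = score rater r gave to map m.

    Hypothetical layout: one inner list per rater, one entry per map.
    """
    k = len(ratings)  # number of raters, treated as "items"
    if k < 2:
        raise ValueError("need at least two raters")
    n_maps = len(ratings[0])
    # Variance of each rater's scores across the maps.
    rater_variances = [pvariance(scores) for scores in ratings]
    # Variance of the summed score that each map received from all raters.
    totals = [sum(scores[m] for scores in ratings) for m in range(n_maps)]
    total_variance = pvariance(totals)
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(rater_variances) / total_variance)


# Made-up ratings from three raters for five maps (illustration only).
example = [
    [4, 6, 5, 8, 7],
    [5, 6, 4, 9, 7],
    [4, 7, 5, 8, 6],
]
print(round(cronbach_alpha(example), 2))
```

Alpha approaches 1 when the raters rank the maps in nearly the same order and falls as their scores diverge.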

Essay Scores and Rubric

Essay scores served as a second criterion measure. As above, the text plus

internet group’s essays were scored by 5 pairs of human raters and the internet but no

text group’s essays were scored by 5 other human raters all using a rubric. The essay

rubric considered (a) content, is the science content clear, relevant, accurate, and

concise, (b) style, is the essay a fluent and succinct piece of writing and is the

composition clear, functional and effective, (c) mechanics, including technical or

procedural details, and the practicalities, use of grammar, punctuation and spelling,

and (d) overall score from a holistic view. This investigation focuses on “content”;

however, the other assessment dimensions were included in the essay rubric in order to

improve the content measure. Specifically, because the raters could score style and

mechanics separately, the content score should more accurately reflect the essay’s

content (per Nitko, 1996). Cronbach alpha reliability for the human raters’ content scores

for the text plus internet group essays was 0.88 and for the internet but no text group

essays, 0.84.

Essays Scored by Latent Semantic Analysis

The essays were also scored automatically using Latent Semantic

Analysis (LSA) software available on the Internet (Landauer, Foltz, & Laham, 1998).

LSA is a computer-based approach for determining the similarity between text

portions. Because LSA is an automated, deterministic scoring system, its internal reliability is

1.00. The correlation of the LSA scores relative to the average of the human raters is

r = 0.83 for the text plus internet group essays and r = 0.75 for the internet but no text

group essays. These strong correlations are consistent with previous findings for LSA

essay scores (Powers, Burstein, Chodorow, Fowles, & Kukich, 2002).

Link and Distance Data

Following Poindexter and Clariana (in press), two kinds of data were derived

from each concept map, a link array that represented the links in a map, and a distance

array that represented all of the pair-wise distances in a map. To capture this data, first

a content expert was asked to list the fewest number of terms describing the structure

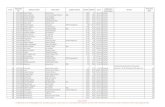

Computer-based scoring . . . 7

and function of the human heart and circulatory systems, in this case, the expert listed

25 terms. Next, an Excel spreadsheet with these 25 terms listed down rows and across

columns was created to record the links in each paper-based concept map, note that

“1” indicates a link between two terms, while “0” indicates no link (see Figure 1). The

number of possible one-directional links between 25 terms not including self-self

links is 300 (i.e., (252 – 25)/2 = 300). Using the spreadsheet, all of the participants’

maps and an expert’s map were represented as link arrays (actually half-arrays). Next,

S-Mapper software was used to establish distance arrays for every map (see Clariana,

2002). The distance arrays contained all pair-wise distances between the 25 terms

(also contains 300 data elements).

Example concept map terms: lungs, oxygenated, deoxygenated, pulmonary artery, pulmonary vein, left atrium, right ventricle. Link labels: “moves through”, “to the”, “passes into”, “to the”.

Link Array

                        a     b     c     d     e     f     g
a  left atrium          -
b  lungs                0     -
c  oxygenate            0     1     -
d  pulmonary artery     0     1     0     -
e  pulmonary vein       1     1     0     0     -
f  deoxygenate          0     1     0     0     0     -
g  right ventricle      0     0     0     1     0     0     -

Distance Array

                        a     b     c     d     e     f     g
a  left atrium          -
b  lungs                120   -
c  oxygenate            150   36    -
d  pulmonary artery     108   84    120   -
e  pulmonary vein       73    102   114   138   -
f  deoxygenate          156   42    54    84    144   -
g  right ventricle      66    102   138   42    114   120   -

Figure 1. The link and distance arrays of an example concept map.
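To make the array representation concrete, the following sketch builds a link half-array and a distance half-array for a small map. It is only an illustration: the function names are hypothetical, the distance is assumed to be the straight-line (Euclidean) distance between term positions, and the page coordinates are invented. How S-Mapper measures distance internally is not described here.

```python
from itertools import combinations
from math import dist


def n_pairs(n_terms):
    """Number of unordered term pairs, excluding self-self pairs."""
    return (n_terms ** 2 - n_terms) // 2


def link_array(terms, links):
    """Half-array with 1 for each linked pair of terms and 0 otherwise."""
    linked = {frozenset(pair) for pair in links}
    return [int(frozenset(pair) in linked) for pair in combinations(terms, 2)]


def distance_array(terms, positions):
    """Half-array of Euclidean distances between term positions on the page."""
    return [dist(positions[a], positions[b]) for a, b in combinations(terms, 2)]


# The seven terms and six links of the example map in Figure 1.
terms = ["left atrium", "lungs", "oxygenate", "pulmonary artery",
         "pulmonary vein", "deoxygenate", "right ventricle"]
links = [("lungs", "oxygenate"), ("lungs", "pulmonary artery"),
         ("left atrium", "pulmonary vein"), ("lungs", "pulmonary vein"),
         ("lungs", "deoxygenate"), ("pulmonary artery", "right ventricle")]

print(n_pairs(25))                    # 300 pairs for the 25 expert terms
print(sum(link_array(terms, links)))  # 6 links among the 21 possible pairs

# Hypothetical page coordinates for two terms (not taken from Figure 1).
print(distance_array(["lungs", "oxygenate"],
                     {"lungs": (0, 0), "oxygenate": (3, 4)}))  # [5.0]
```

Storing only one entry per pair is what makes these "half-arrays": the link between two terms and the distance between them are the same in either direction.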

Following the approach described by Goldsmith and Davenport (1990), the

link and distance arrays for each concept map were analyzed using a software tool

called Knowledge Network and Orientation Tool (KNOT). KNOT was used to

convert the link and distance arrays into network representations of structural

knowledge called PFNets, and then to calculate the similarity of each concept map to

the expert’s concept map. Following the standard analysis approach, the KNOT

parameter r was set at infinity, q was set at 24 (i.e., n-1), minimum for the link

similarity arrays was set at 0.1 (in order to exclude missing terms), and maximum for

the distance dissimilarity arrays was set at 900 (in order to exclude missing terms).
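For readers unfamiliar with Pathfinder, the sketch below shows one common way to derive a network with r set at infinity and q set at n-1 from a matrix of dissimilarities. It is not the KNOT implementation; the function name is hypothetical, and it assumes that excluded pairs (such as missing terms) are marked with an infinite value. Link data, which are similarities, would first have to be converted to dissimilarities.

```python
import math


def pfnet_r_inf(weights):
    """Links kept by Pathfinder with r = infinity and q = n - 1.

    weights is a symmetric n x n matrix of dissimilarities; math.inf marks
    a pair excluded from the analysis (for example, a missing term).
    """
    n = len(weights)
    # With r = infinity the length of a path is its largest single link,
    # so find the smallest such "minimax" length between every pair.
    minimax = [list(row) for row in weights]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                via_k = max(minimax[i][k], minimax[k][j])
                if via_k < minimax[i][j]:
                    minimax[i][j] = via_k
    # A direct link survives only if no indirect path is shorter.
    return {(i, j) for i in range(n) for j in range(i + 1, n)
            if weights[i][j] < math.inf and weights[i][j] <= minimax[i][j]}


# Three terms: the direct 0-2 link (3) is longer than the path 0-1-2,
# whose largest link is 2, so it is pruned.
weights = [[0, 1, 3],
           [1, 0, 2],
           [3, 2, 0]]
print(sorted(pfnet_r_inf(weights)))  # [(0, 1), (1, 2)]
```

With these parameter settings the result is the sparsest Pathfinder network, which keeps only the links that lie on some minimum-cost path between terms.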

KNOT provides two measures of PFNet similarity called “common” and

“configural similarity”. Common is simply the total number of links in common

between the participant’s PFNet and the expert’s. In set terminology, common is the

intersection of the participant’s and the expert’s PFNets. Following Poindexter and

Clariana (in press) and for clarity, we refer to these common scores as link agreement

with an expert (Link-Ex, maximum 34) and distance agreement with an expert

(Dist-Ex, maximum 24). Configural similarity is a set-theoretic measure of node similarity

(Goldsmith & Davenport, 1990) that ranges from 0 (no similarity) to 1 (perfect

similarity). Again, following Poindexter and Clariana, we refer to link configural

similarity as Link-C and distance configural similarity as Dist-C.
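The two similarity measures can be sketched as follows. The "common" score is a plain set intersection, as described above. The configural similarity function is an assumption: it averages, over terms, the overlap between each term's neighbors in the two networks, which is one reading of the set-theoretic approach of Goldsmith and Davenport (1990). KNOT's exact computation may differ, and all names are hypothetical.

```python
def as_links(pairs):
    """Represent undirected links as a set of frozensets."""
    return {frozenset(pair) for pair in pairs}


def common_links(student_links, expert_links):
    """Number of links present in both networks (the intersection)."""
    return len(student_links & expert_links)


def neighbors(links, node):
    """Terms directly linked to node."""
    return {term for link in links if node in link
            for term in link if term != node}


def configural_similarity(student_links, expert_links, nodes):
    """Mean neighborhood overlap (intersection / union) across nodes.

    Assumption: nodes with no neighbors in either network are skipped.
    """
    ratios = []
    for node in nodes:
        s = neighbors(student_links, node)
        e = neighbors(expert_links, node)
        union = s | e
        if union:
            ratios.append(len(s & e) / len(union))
    return sum(ratios) / len(ratios) if ratios else 0.0


student = as_links([("a", "b"), ("b", "c")])
expert = as_links([("a", "b"), ("a", "c")])
print(common_links(student, expert))                       # 1
print(configural_similarity(student, expert, "abc"))       # one third
```

Identical networks give a configural similarity of 1, and networks that share no neighbors for any term give 0.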

Further, unlike the investigation by Poindexter and Clariana, in this present

investigation participants were not given the terms ahead of time. Thus the concept

maps that they created may have missing terms relative to the expert’s concept map.

To examine the effects of the presence or absence of terms in participants’ concept

maps, data on term agreement with an expert was also collected (Term-Ex, maximum

25).
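A term agreement count of this kind amounts to a set intersection between the participant's terms and the expert's terms. The sketch below assumes that terms match when they are identical after trimming spaces and ignoring case; the matching rule actually used in this investigation is not specified, and the function name and example terms are hypothetical.

```python
def term_agreement(student_terms, expert_terms):
    """Count the expert terms that also appear in the student map."""
    def normalize(term):
        # Assumed matching rule: ignore case and surrounding spaces.
        return term.strip().lower()

    student = {normalize(t) for t in student_terms}
    expert = {normalize(t) for t in expert_terms}
    return len(student & expert)


print(term_agreement(["Lungs", " aorta", "blood cells"],
                     ["lungs", "aorta", "left atrium"]))  # 2
```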

Correlations between the human-rater criterion variables and the

computer-generated scores are presented in order to examine the concurrent criterion-related

validity of this prototype scoring approach. As a secondary issue, in order to

understand how text passages may influence concept maps, a simple descriptive

comparison of the text plus internet group versus the internet but no text group

concept map and essay scores is provided.
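The correlations in question are ordinary Pearson coefficients between two sets of scores for the same concept maps. The sketch below computes r and the associated t statistic, which would be compared against a t distribution with n - 2 degrees of freedom. The scores shown are invented for illustration, and the use of this particular significance test is an assumption.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t value for testing r against zero with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r * r))


# Made-up human-rater and computer-derived scores for six maps.
human_scores = [12, 15, 9, 20, 17, 11]
computer_scores = [8, 11, 7, 14, 10, 9]
r = pearson_r(human_scores, computer_scores)
print(round(r, 2), round(t_statistic(r, len(human_scores)), 2))
```

Because the unit of analysis is the concept map, n here is the number of maps rather than the number of participants.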

Results

Comparisons to Criterion Variables

The variables in this analysis are: Concept Map (the content

score of the concept maps as determined by raters), Essay (the content score of the

essays as determined by raters), LSA (the content score of the essays as determined by

the LSA software), Link-Ex (the sum total of links that agree with the expert), Link-C

(the configural closeness of link data), Dist-Ex (the sum total of PFNet distances that

agree with the expert), Dist-C (the configural closeness of the PFNet distance data),

and Term-Ex (term agreement with an expert).

First, the correlation between human-rated concept map scores and

human-rated essay scores was r = 0.49 (see Table 1). In this investigation, human-rater

concept map and essay scores were not significantly related.

Table 1. Intercorrelations of the rater and computer scores.

           Map     Essay   LSA     Link-Ex  Link-C  Dist-Ex  Dist-C
Map        1
Essay      0.49*   1
LSA        0.31*   0.73*   1
Link-Ex    0.36*   0.76*   0.83*   1
Link-C     0.29*   0.75*   0.81*   0.95*    1
Dist-Ex    0.54*   0.71*   0.67*   0.89*    0.78*   1
Dist-C     0.50*   0.67*   0.64*   0.87*    0.76*   0.99*    1
Term-Ex    0.75*   0.76*   0.63*   0.70*    0.61*   0.74*    0.69*

* p < .05.

Next, the correlation between human-rater essay content scores and LSA essay

content scores was r = 0.73 (sig.). This is consistent with other LSA research (e.g.,

Powers, Burstein, Chodorow, Fowles, & Kukich, 2002) and indicates that LSA

software was reasonably good at scoring these essays relative to the human raters.

Next, the correlations between the link and distance computer-derived scores

compared to the human-rater criterion concept map and essay scores are of greatest

interest in this investigation. Only Term-Ex was significantly related to human map

scores (r = 0.75). However, all of the computer-derived concept map scores were

significantly correlated with human-rater essay scores and all but one were

significantly correlated with LSA essay content scores.

Link-Ex and Dist-Ex were significantly correlated (r = 0.89) as were Link-C

and Dist-C (r = 0.76). This indicates that concept map link data and distance data are

related but not identical. Specifically, actual links (ink on paper) connecting terms on

a concept map relate to the distances between the most related terms.

The Influence of Text on Participants’ Concept Maps

It was assumed that participants in the text plus internet group would produce

concept maps that are more similar to each other relative to the internet but no text

group due to the influence of the five instructional text passages. To examine this

question, “raw” link and distance data (the 300-element arrays) for each concept map

were correlated with that of every other map.
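This within-group comparison can be sketched as follows: every map's raw array is correlated with every other map's array in the same group, and the median of those correlations summarizes how alike the group's maps are. The function names are hypothetical, and the short arrays stand in for the 300-element arrays used here.

```python
from itertools import combinations
from math import sqrt
from statistics import median


def pearson_r(x, y):
    """Pearson correlation between two equal-length arrays."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def median_within_group_r(arrays):
    """Median correlation over all pairs of maps in one group."""
    return median(pearson_r(a, b) for a, b in combinations(arrays, 2))


# Three made-up link arrays (illustration only; real arrays have 300 cells).
group = [
    [1, 0, 1, 0, 0, 1],
    [1, 0, 0, 0, 1, 1],
    [0, 1, 1, 0, 0, 1],
]
print(round(median_within_group_r(group), 2))
```

The same function applied to one group's arrays and then the other's gives the two medians that are compared; correlating each map with the expert's array gives the similarity to the expert.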

For the raw link data, the internet but no text group’s within-group

correlations (median r = .24) were larger than those of the text plus internet

group (median r = .13), indicating, counterintuitively, that the internet but no text

group’s concept maps were more like each other than were the text plus internet

group’s concept maps. Further, the internet but no text group maps were considerably

more similar to the expert’s map (median r = .35) than were the text plus internet

group maps (median r = .19). Somehow, the text passages given to the text plus

internet group did not result in more similar or better links in their maps.

However, for the raw distance data, the internet but no text group concept map

data within-group correlations (median r = .09) were about the same as those of the

text plus internet group (median r = .11). As with the raw link data, however, the internet

but no text group maps were a little more similar to the expert’s map (median r = .24)

than were the text plus internet group maps (median r = .21).

Summary and Conclusions

The purpose of this investigation was to take the next step in establishing an

automatic system for scoring concept maps. Several computer-based concept map

scores were considered. Unlike in Harper et al. (2004), link line data were not

significantly correlated with propositions scored by raters (r = 0.36), but

computer-derived term agreement with an expert scores were significantly related to

human-rater concept map scores. This suggests that even though the scoring rubric

emphasized terms and propositions, the human raters were more influenced by the

presence of the terms in the maps than by the propositions. Hand scoring terms in a

concept map is easier than scoring correct and valid propositions. Also, the structure

of the concept map scoring rubric may have influenced the raters to look at terms first

and propositions second, resulting in an unintended halo effect for proposition

scores for those concept maps with more correct terms. Future investigation should

consider collecting term and proposition data separately or counterbalancing the

rubric structure for terms and propositions.

All of the concept map link and distance computer-derived scores were

significantly correlated with human-rater essay scores and with the LSA computer-based

essay scores. Apparently, this approach for converting concept map link and distance

data into scores provided distinct information that is strongly related to human-rater

essay scores.

This investigation also considered the influence of instructional text passages

on concept maps and essays. Results suggest that providing print-based text passages

did not result in more similar concept maps for the text plus internet group.

Counterintuitively, the internet but no text group’s concept maps were more homogeneous than

the text plus internet group’s maps. Possibly the participants’ pre-existing knowledge

base influenced their maps more than did the print-based text passages, though that

doesn’t explain the no text group’s relative concept map homogeneity. More likely,

this is a methodological flaw due to too much choice. Because five print-based text

passages were handed out, any particular pair of students in the text plus internet

group may have focused on only one or two of the five passages to the exclusion of

the other passages, thus producing a concept map more like the one or two passages

that they chose. Future research on the effects of lesson text should provide only one

print-based text passage, perhaps created by the expert from the expert’s concept map,

in order to control this over-choice factor.

Pragmatically, all links (propositions) are assumed to be of equal value in this

investigation (i.e., 1 point). However, some previous concept map studies have

awarded different points for different kinds of propositions, such as weighting

cross-links as worth more than within-cluster links (Rye & Rubba, 2002). If such an

approach is valid, it may be possible to improve Link-Ex and Link-C scores by

weighting important links and different kinds of links differently. For example,

“long” links between neighborhoods (cross-links) may be worth more than

shorter links. Also, links within a neighborhood may be given different weights,

perhaps by an expert beforehand, or else statistically, based on item discrimination

values.
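A weighted version of link agreement could be sketched as below. This is a hypothetical extension, not a method used in this investigation: each expert link carries a weight, and a map earns the weights of the expert links it contains. The weights and links shown are invented.

```python
def weighted_link_agreement(student_links, expert_link_weights):
    """Sum the weights of the expert links that also appear in the map."""
    return sum(weight for link, weight in expert_link_weights.items()
               if link in student_links)


# Hypothetical weights: an ordinary link is worth 1, a cross-link 2.
expert_link_weights = {
    frozenset({"lungs", "pulmonary vein"}): 1.0,
    frozenset({"left atrium", "pulmonary vein"}): 1.0,
    frozenset({"lungs", "right ventricle"}): 2.0,
}
student_links = {
    frozenset({"lungs", "pulmonary vein"}),
    frozenset({"lungs", "right ventricle"}),
}
print(weighted_link_agreement(student_links, expert_link_weights))  # 3.0
```

Setting every weight to 1 reduces this to the unweighted link agreement count used in the present scoring.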

It is quite possible for computer software to immediately generate link- and

distance-based scores using the approach described here if the concept maps are

created within a computer program, such as with Inspiration or C-Tools (C-Tools,

2003). A self-scoring concept map tool based on the method described in this

investigation should be of great interest to the research and education communities.

References

Clariana, R. B. (2002). S-Mapper, version 1.0. Available for download online: http://www.personal.psu.edu/rbc4

C-Tools (2003). http://ctools.msu.edu/ctools/index.html

Deese, J. (1965). The structure of associations in language and thought. Baltimore, MD: The Johns Hopkins Press.

Elman, J.L. (1995). Language as a dynamical system. In Port & van Gelder, Eds. Mind as Motion, 195–225. Boston, MA: MIT Press. Retrieved January 14, 2005 from http://www3.isrl.uiuc.edu/~junwang4/langev/alt/elman95languageAs/Elman--1995--LanguageAsADynamicalSystem.pdf

Goldsmith, T.E., & Davenport, D.M. (1990). Assessing structural similarity of graphs. In Schvaneveldt (ed.), Pathfinder associative networks: studies in knowledge organization, 75-87. Norwood, NJ: Ablex.

Goldsmith, T. E., & Johnson, P. J. (1990). A structural assessment of classroom learning. In Schvaneveldt, ed., Pathfinder associative networks: studies in knowledge organization, 241-253. Norwood, NJ: Ablex Publishing Corporation.

Goldsmith, T. E., Johnson, P. J., & Acton, W. H. (1991). Assessing structural knowledge. Journal of Educational Psychology, 83 (1), 88-96.

Gonzalvo, P., Canas, J. J., & Bajo, M. (1994). Structural representations in knowledge acquisition. Journal of Educational Psychology, 86 (4), 601-616.

Harper, M. E., Hoeft, R. M., Evans, A. W. III, & Jentsch, F. G. (2004). Scoring concept maps: Can a practical method of scoring concept maps be used to assess trainee’s knowledge structures? Paper presented at the Human Factors and Ergonomics Society 48th Annual Meeting.

Jonassen, D. H., Beissner, K., & Yacci, M. (1993). Structural knowledge: techniques for representing, conveying, and acquiring structural knowledge. Hillsdale, NJ: Lawrence Erlbaum Associates.

Jonassen, D. H., & Wang, S. (1992). Acquiring structural knowledge from semantically structured hypertext. In Proceedings of Selected Research and Development Presentations at the 14th Annual Convention of the Association for Educational Communications and Technology. (ERIC Document Reproduction Service No. ED 348 000)

Kintsch, W. (1994). Discourse processing. In d’Ydewalle, Eelen, & Bertelson, Eds. International perspectives on psychological science: Volume 2: The state of the art, 135-155. Hove, UK: Lawrence Erlbaum Associates Ltd.

Landauer, T. K., Foltz, P. W., & Laham, D. (1998). Introduction to Latent Semantic Analysis. Discourse Processes, 25, 259-284.

Landauer, T.K., Laham, D., Rehder, R., & Schreiner, M.E. (1997). How well can passage meaning be derived without using word order? A comparison of latent semantic analysis and humans. In M. G. Shafto & P. Langley (Eds.), Proceedings of the 19th annual meeting of the Cognitive Science Society, 412-417. Mahwah, NJ: Erlbaum.

Lomask, M., Baron, J.B., Greig, J., & Harrison, C. (1992, March). ConnMap: Connecticut’s use of concept mapping to assess the structure of students’ knowledge of science. Paper presented at the annual meeting of the National Association of Research in Science Teaching, in Cambridge, MA.

Markham, K. M., Mintzes, J. J., & Jones, M. G. (1994). The concept map as a research and evaluation tool: further evidence of validity. Journal of Research in Science Teaching, 31 (1), 91-101.

McClelland, J.L., McNaughton, B.L., & O’Reilly, R.C. (1995). Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models of learning and memory. Psychological Review, 102, 419-457.

Nitko, A.J. (1996). Educational assessment of students. Englewood Cliffs, NJ: Merrill.

Novak, J. D., & Gowin, D. B. (1984). Learning How to Learn. New York: Cambridge University Press.

Poindexter, M.T., & Clariana, R.B. (in press). The influence of relational and proposition-specific processing on structural knowledge and traditional learning outcomes. International Journal of Instructional Media, 33 (2), in press (anticipated June 2006).

Powers, D.E., Burstein, J.C., Chodorow, M.S., Fowles, M.E., & Kukich, K. (2002). Comparing the validity of automated and human scoring of essays. Journal of Educational Computing Research, 25 (4), 407-425.

Ruiz-Primo, M. A. (2000). On the use of concept maps as an assessment tool in science: what we have learned so far. Revista Electronica de Investigacion Educativa, 2 (1). Available from: http://redie.ens.uabc.mx/vol2no1/contenido-ruizpri.html

Ruiz-Primo, M. A., Schultz, S. E., Li, M., & Shavelson, R. J. (2000). Comparison of the reliability and validity of scores from two concept mapping techniques. Journal of Research in Science Teaching, 38 (2), 260-278.

Ruiz-Primo, M. A., Shavelson, R.J., Li, M., & Schultz, S.E. (2001). On the validity of cognitive interpretations of scores from alternative concept-mapping techniques. Educational Assessment, 7 (2), 99-141.

Rye, J. A., & Rubba, P. A. (2002). Scoring concept maps: an expert map-based scheme weighted for relationships. School Science and Mathematics, 102 (1), 33-44.

Shavelson, R. J. (1972). Some aspects of the correspondence between content structure and cognitive structure in physics instruction. Journal of Educational Psychology, 63, 225-234.

Shavelson, R. J., Lang, H., & Lewin, B. (1994). On concept maps as potential “authentic” assessments in science (CSE Tech. Rep. No. 388). Los Angeles, CA: University of California, Center for Research on Evaluation, Standards, and Student Testing.