The well tempered search application

-

Upload

ted-sullivan -

Category

Software

-

view

409 -

download

0

Transcript of The well tempered search application

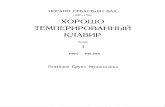

The Well-Tempered Search Application

Variations on a Theme:

Why does my search app suck, and what can I do about it?

Ted Sullivan – (old Phuddy Duddy)Senior (very much so I’m afraid) Solutions (I hope) Architect (and sometime plumber)Lucidworks Technical Services

Our Basic Premises (Premisi?)

• Lemma 1: Search Applications use algorithms that make finding chunks of text within large datasets possible in HTT (human-tolerable time).

• Lemma 2: These algorithms work by breaking text into primitive components and building up a search “experience” from that.

• Lemma 3: Lemma 2 is not sufficient to achieve Lemma 1.

The Basic Disconnect

• Text can be analyzed at the level of tokens (syntax) and at the level of meaning (semantics).

• We think one way (semantics), search engines think another (syntax – i.e. token order).

• How do we bridge the gap? … More clever algorithms!

Art and Science

• We need to be intelligent curators of these algorithms. Craftsmen (craftswomen?) that think of these as tools with a specific purpose.

• Like any good craftsperson – we need a wide array of tools to get the job done (well almost).

When is my search app done?

• Quick answer: NEVER (ain’t consultin’ great?)

• Long answer – As long as it is continues to improve, like fine wine or bourbon, you are on the path to enlightenment.

• How do you get there grasshopper? Add semantic intelligence to the engine!

Search cannot be shrink-wrapped!!

What have we got for Donny behind Curtain #1 Jay?

Well Monty - Heeeeeeeeeeeerrrrrrrreeeeesssss the Google … SEARCH Appliance!!!!

Search cannot be shrink-wrapped!!

What have we got for Donny behind Curtain #1 Jay?

Well Monty - Heeeeeeeeeeeerrrrrrrreeeeesssss the Google … SEARCH Appliance!!!!*

Sorry Donny – It’s a ZONK!* but Google Web Search has some Serious Mojo!

Prelude part 1– The basic problem

The inverted index and “bag-of-words” search:

The red fox jumped over the fence.

Time flies like an arrow. Fruit flies like a banana.

the 1,6red 2fox 3

jumped 4over 5fence 7

flies 2,7like 3,8

Prelude part B – The Tried and True

• Phrase and Proximity boosting and “Slop”

• Synonyms and stop words

• Stemming or Lemmatization

• Autocomplete

• Best Bets / Landing Pages – the sledgehammer

• Spell check – spell suggest – aka the warm fuzzies.

Fugue - Subject or Exposition

Search engines need more ‘semantic awareness’ or at least the illusion of this.

There is a heavy duty solution called Artificial Intelligence – which except in the fertile imagination of Hollywood screenwriters, is not there yet. So we need to fake it just a bit.

Theme and Variations I autophrasing and the red sofa

Theme: When multiple words mean just one thing.

Fuzzy way: Boosting phrases (proximity and phrase slop)- pushes false positives down – i.e. out of the limelight- i.e. - shoves ‘em under the rug

This encounters a problem with faceted search

Like the eye of Sauron in LOTR or Santa Claus, the faceting engine SEES ALL (sins)!

Brake Pads example: hit on things that have ‘brake’ (like children’s stroller brakes) and ‘pads’ – like mattress pads.

Variation I: Autophrasing

AutophrasingTokenFilter tells Lucene not to tokenize when a noun phrase represents a single thing - by providing a flat list of phrases.

Creates one-to-one token mapping that Luceneprefers because it avoids the “sausagization” problem.

https://github.com/LucidWorks/auto-phrase-tokenfilter

income tax refundincome tax

tax refund

“income tax” is not income. A “tax refund” is not a tax.

Solution: Autophrasing + synonym mapping

income tax => taxtax refund => refund

Autophrasing Example

Autophrasing Setupautophrases.txt:

income taxtax refundtax rebatesales taxproperty tax

synonyms.txt

income_tax,property_tax,sales_tax,taxtax_refund,refund,rebate,tax_rebate

<fieldType name="text_autophrase" class="solr.TextField" positionIncrementGap="100"><analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/><filter class="solr.LowerCaseFilterFactory"/><filter class="com.lucidworks.analysis.AutoPhrasingTokenFilterFactory"

phrases="autophrases.txt" includeTokens="true” replaceWhitespaceWith="_" />

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" enablePositionIncrements="true" />

<filter class="solr.PorterStemFilterFactory"/></analyzer>

</fieldType>

Multi-term synonym problem• New York, New York – it’s a HELLOVA town!

Subject was inspired by an old JIRA ticket: Lucene-1622

“if multi-word synonyms are indexed together with the original token stream (at overlapping positions), then a query for a partial synonym sequence (e.g., “big” in the synonym “big apple” for “new york city”) causes the document to match”

(or “apple” which will hit on my blog post if you crawl lucidworks.com !)

This means certain phrase queries should match but don't (e.g.: "hotspot is down"), and other phrase queries shouldn't match but do (e.g.: "fast hotspot fi").

Other cases do work correctly (e.g.: "fast hotspot"). We refer to this "lossy serialization" as sausagization, because the incoming graph is unexpectedly turned from a correct word lattice into an incorrect sausage.

This limitation is challenging to fix: it requires changing the index format (and Codec APIs) to store an additional int position length per position, and then fixing positional queries to respect this value.

Sausagization: from Mike McCandless blog Changing Bits: Lucene's TokenStreams are actually graphs!

http://blog.mikemccandless.com/2012/04/lucenes-tokenstreams-are-actually.html

Multi-term synonym demo

new yorknew york stateempire statenew york citynew york new yorkbig appleny nycity of new yorkstate of new yorkny state

autophrases.txt

new_york => new_york_state,new_york_city,big_apple,new_york_new_york,ny_ny,nyc,empire_state,ny_state,state_of_new_york

new_york_state,empire_state,ny_state,state_of_new_york

new_york_city,big_apple,new_york_new_york,ny_ny,nyc,city_of_new_york

synonyms.txt

This document is about new york state.

This document is about new york city.

There is a lot going on in NYC.

I heart the big apple.

The empire state is a great state.

New York, New York is a hellova town.

I am a native of the great state of New York.

new york new york city new york state

Multi-term synonym demo

/select /autophrase

This document is about new york state.

This document is about new york city.

There is a lot going on in NYC.

I heart the big apple.

The empire state is a great state.

New York, New York is a hellova town.

I am a native of the great state of New York.

empire state

Multi-term synonym demo

/select /autophrase

(Even a blind squirrel finds a nut once in a while)

Variation II: The Red Sofa Problem

{"response":{"numFound":3,"start":0,"docs":[

{"color":"red",”text":"This is the red sofa example. Please find with 'red sofa' query.",

{"color":"red",”text":"This is a red beach ball. It is red in color but is not something that you

should not sit on because you would tend to roll off.",{

"color":"blue",”text":"This is a blue sofa, it should only hit on sofas that are blue in color."]

}}

OOTB – q=red sofa is interpreted as text:red text:sofa (default OR)

http://localhost:8983/solr/collection1/select?q=red+sofa&wt=json

Closing the Loop: Content Tagging and Intelligent Query Filtering

Using the search index itself as the knowledge source:

Solution for the Red Sofa problemQuery Autofiltering: Search Index driven query introspection / query rewriting:

Lucene FieldCache MagicLucene FieldCache (to be renamed UninvertedIndex in Lucene 5.0)

Inverted Index: Show all documents that have this term value in this field.

Uninverted or Forward Index:Show all term values that have been indexed in this field.

SolrIndexSearcher searcher = rb.req.getSearcher();SortedDocValues fieldValues = FieldCache.DEFAULT.getTermsIndex(

searcher.getAtomicReader( ), categoryField );…StringTokenizer strtok = new StringTokenizer ( query, " .,:;\"'" );while (strtok.hasMoreTokens( ) ) {

String tok = strtok.nextToken( ).toLowerCase( );BytesRef key = new BytesRef( tok.getBytes() );if (fieldValues.lookupTerm( key ) >= 0) {

Query Autofiltering

{"response":{"numFound":1,"start":0,"docs":[

{"id":"1","color":"red","description":"This is the red sofa example. Please find with 'red sofa' query."]

}

http://localhost:8983/solr/collection1/infer?q=red+sofa&wt=json

Now search for “red sofa” only returns ….. red sofas!

But – is this too “brute force”? The takeaway is that using the search index AS a knowledge store can be very powerful!

Architecture: its all about Plumbing

• Pipelines for every occasion.

Indexing Pipelines – good ‘ole ETL- Content enrichment, tagging - Metadata cleanup

Query Pipelines – identification, query preprocessing - introspection

One is the “hand” the other, the “glove”

Index Pipelines

Lots of choices here:

• Internal to Solr – DIH, UpdateRequestProcessor

Pros and cons

• External – Morphlines, Open Pipeline, Flume, Spark, Hadoop, Custom SolrJ

• Lucidworks Fusion

Entity and Fact Extraction

Entities: Things, Locations, Dates, People, Organizations, Concepts

Entity RelationshipsCompany was acquired by CompanyDrug cures DiseasePerson likes Pizza

Annotation Pipelines (UIMA, Lucidworks Fusion):Entity Extraction followed by Fact ExtractionPattern method:

$Drug is used to treat $ConditionParts of Speech (POS) analysis

Subject Predicate Object

Theme and Variations IIThe Classification Wars

• Machine Learning or Taxonomy – is it a Floor Wax or a Dessert Topping?

Answer: It’s a floor wax AND a dessert topping! Its delicious and just look at that shine!

Machine Learning

Use mathematical vector-crunching algorithms like Latent Dirichlet Allocation (LDA), Bayesian Inference, Maximum Entropy, log likelihood, Support Vector Machines (SVM) etc., to find patterns and to associate those patterns with concepts.

Can be supervised (i.e. given a training set) or unsupervised (the algorithm just finds clusters). Supervised learning are called semi-automatic classifiers.

Check out Taming Text by Ingersoll, Morton and Farris (Manning)

Machine Learning In LucidworksFusion

Training DataNLP Trainer

Stage

NLP Model

Test DataNLP Classifier

StageClassified

Documents

Taxonomy or Ontology“Knowledge graphs” that relate things and concepts to each other either hierarchically or associatively.

Pros:Works without large amounts of content to analyze

Encapsulates the knowledge of human subject matter experts

Cons:Often not well designed for search (mixes semantic relationship

types / organizational logic)

Requires curation by subject matter experts whose time is costly

Taxonomies Designed for SearchCategory nodes and Evidence nodes

Category Node:A ‘parent’ nodeCan have child nodes that are:

Sub CategoriesEvidence Nodes

Evidence Node:Tends to be a leaf node (no children)Contains keyterms (synonyms)May contain “rules” e.g. (if contains term a and term b but not term c)Evidence Nodes can have more than one category node parent

Hits on Evidence Nodes add to the cumulative score of a Category Node.

Scores can be diluted as the accumulate up the hierarchy – so that the nearest category gets the strongest ‘vote’.

US Corporations

Foreign CorporationsBritishChineseFrenchGermanJapaneseRussianetc.

Fortune 100 Companies

Energy

Financial ServicesInvestment BanksCommercial Banks

Health CareHealth InsuranceHMOMedical DevicesPharmaceuticals

Hospitality

Manufacturing

AircraftAutomobilesElectrical Equipment

Ford, GM, Chrysler

Fortune 100 Companies

Energy

Financial ServicesInvestment BanksCommercial Banks

Health CareHealth InsuranceHMOMedical DevicesPharmaceuticals

Hospitality

Manufacturing

AircraftAutomobilesElectrical Equipment

US Corporations

Foreign CorporationsBritishChineseFrenchGermanJapaneseRussianetc.

Ford, GM, Chrysler,Toyota,BMW

Fortune 100 Companies

Energy

Financial ServicesInvestment BanksCommercial Banks

Health CareHealth InsuranceHMOMedical DevicesPharmaceuticals

Hospitality

Manufacturing

AircraftAutomobilesElectrical Equipment

US Corporations

Foreign CorporationsBritishChineseFrenchGermanJapaneseRussianetc.

Fortune 100 Companies

Energy

Financial ServicesInvestment BanksCommercial Banks

Health CareHealth InsuranceHMOMedical DevicesPharmaceuticals

Hospitality

Manufacturing

AircraftAutomobilesElectrical Equipment

Ford, GM, Chrysler,Toyota,BMW

GE, Boeing

US Corporations

Foreign CorporationsBritishChineseFrenchGermanJapaneseRussianetc.

Fortune 100 Companies

Energy

Financial ServicesInvestment BanksCommercial Banks

Health CareHealth InsuranceHMOMedical DevicesPharmaceuticals

Hospitality

Manufacturing

AircraftAutomobilesElectrical Equipment

Ford, GM, Chrysler,Toyota,BMW

GE, Boeing

Bank of America, Hyatt

US Corporations

Foreign CorporationsBritishChineseFrenchGermanJapaneseRussianetc.

Query Pipelines

The ‘Wh’ Words: Who, What, When, Where

Who are they (authentication)?

What can they see (security - authorization)?

When can they see it (entitlement)?

What are they interested in (personalization / recommendation)?

Where are they now (location)?

Query Pipelines

Inferential SearchQuery introspection -> Query modification.

Query Autofiltering

Are you feeling lucky today?

Topic boosting / spotlightingUse ML to detect the topic, then boost and/or spotlight results tagged this way.

Use a specialized collection to store ‘facet knowledge’

The Art of the Fugue:Inferential Search

• Infer what the user is looking for and give them that

• Clever software infers meaning aka query “intent”

• When we do this right, it appears to be magic!

Machine Learning DrivesQuery Introspection

Training DataNLP Trainer

Stage

NLP Model

Test DataNLP Classifier

StageClassified

Documents

Machine Learning models can driveQuery Introspection

NL QueryNLP Query

Stage

NLP Model

Tagged Query

Landing Page

Boost Documents

Da Capo al Coda

• Killer search apps are crafted from fine ingredients and like fine whiskey will get better with age - if you are paying attention to ‘what’ your users are looking for.

• Putting the pieces together requires an understanding of ‘what’ things, independent of what words they use to describe it.

Thanks for your attention!

Ted SullivanLucidworks, Technical Services

[email protected]: ted.sullivan5LinkedIn

Metuchen, New Jersey (You gotta problem with that?)