The NEAR Command and Data Handling System · 1998. 3. 19. · TCU CDU CDU Command and telemetry...


D. D. STOTT ET AL.

The Near Earth Asteroid Rendezvous (NEAR) spacecraft’s command and data handling (C&DH) system is designed to manage complex operations and to collect data when the spacecraft is out of contact with ground control. During ground contacts, the C&DH system accepts uplink commands and memory loads that describe a time-ordered set of events to follow, and it transmits previously recorded data back to the ground station. This article describes the overall C&DH system, gives details on each of the components, and tells how the system was tested prior to spacecraft integration. Solid-state data recorders and flight computers are also discussed. (Keywords: Autonomous spacecraft, CCSDS, Deep space, Spacecraft, Telemetry.)

The NEAR Command and Data Handling System

David D. Stott, David A. Artis, Brian K. Heggestad, Jerry E. Kroutil, Robert O. Krueger, Lloyd A. Linstrom, James A. Perschy, Paul D. Schwartz, and Glen F. Sweitzer

INTRODUCTION

Although the Near Earth Asteroid Rendezvous (NEAR) spacecraft is in ground contact at least several times a week, the spacecraft does most of its work independently; that is, it operates on sequences of commands that are received via the X-band radio frequency (RF) uplink and stored onboard. Scenarios range from minimal housekeeping during cruise mode to recording data continuously during a 16-h cycle of science measurements at the asteroid Eros. Normally, ground contacts are needed only to send recorded data back to Earth and to receive the next series of commands to be executed. However, onboard autonomy rules will sense events that threaten the health of the spacecraft. The strategy in these cases is to enter a safe mode and wait for ground commands to direct a recovery. The command and data handling (C&DH) system communicates with the ground via the X-band RF system, processes commands, formats and stores data, and autonomously protects the health of the spacecraft.

OVERVIEW

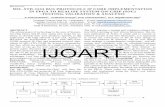

Data Flow and Spacecraft Interfaces

The NEAR C&DH system has electrical interfaces throughout the spacecraft. Figure 1 shows the C&DH system in relation to the other packages onboard the spacecraft. The flow of data and electrical power over these interfaces is managed by the embedded computers in the command and telemetry processors (CTPs).

Command and telemetry signals pass between the RF receivers and transmitters and the C&DH system as serial streams. Table 1 shows the bit rates supported by the NEAR RF links. Formats of these serial streams are based on standards published by the Consultative Committee for Space Data Systems (CCSDS).1–4 NEAR follows CCSDS standards regarding headers for transfer frames and packets, Reed–Solomon coding, convolutional coding, and command operating procedure version 1 (COP-1) for the “handshake” between ground station and spacecraft for commanding. Commands use CCSDS telecommand transfer frame structures containing nonpacketized NEAR-unique command bit formats. Telemetry data use the CCSDS transfer frame and packet structures. CCSDS headers provide virtual channel (VC) designations for telemetry frames. Assignment of VCs is mission dependent. NEAR uses four VCs, referred to as VC0, VC1, VC2, and VC3. These four channels have specific meanings, as defined in Table 2. Within a telemetry frame are telemetry packets that contain data from one source, such as a subsystem or subprocess. Table 2 lists the packets defined for NEAR. A telemetry packet is designated by its application process identifier, a number from 0 to 13.

Figure 1. Data interfaces among NEAR components. (BC = bus controller, RT = remote terminal, CDU = command detector unit, DPU = data processing unit, TCU = telemetry conditioning unit, MSI = Multispectral Imager, XGRS = X-Ray/Gamma-Ray Spectrometer, NIS = Near-Infrared Spectrometer, MAG = Magnetometer.)

JOHNS HOPKINS APL TECHNICAL DIGEST, VOLUME 19, NUMBER 2 (1998)
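The virtual channel and application process identifier fields described above can be pulled out of the raw headers with a few bit operations. The sketch below assumes the conventional CCSDS bit layouts (telemetry frame: 2-bit version, 10-bit spacecraft ID, 3-bit VC ID, 1-bit operational control field flag; packet: 3-bit version, 1-bit type, 1-bit secondary header flag, 11-bit application process identifier). The article does not spell out these bit positions, and the function names are hypothetical.

```python
# Assumed CCSDS header layouts; not taken from the NEAR article itself.

VC_MEANING = {
    0: "Recorder input science and engineering data packets",
    1: "Recorder output data stream",
    2: "Recorder input Multispectral Imager data",
    3: "Real-time data packets (downlink only)",
}


def frame_virtual_channel(header: bytes) -> int:
    """Return the 3-bit VC ID from the first two bytes of a telemetry frame."""
    first16 = int.from_bytes(header[:2], "big")
    # Bits (MSB first): version(2) | spacecraft ID(10) | VC ID(3) | OCF flag(1)
    return (first16 >> 1) & 0x7


def packet_apid(header: bytes) -> int:
    """Return the 11-bit application process identifier of a telemetry packet."""
    first16 = int.from_bytes(header[:2], "big")
    # Bits (MSB first): version(3) | type(1) | sec. header flag(1) | APID(11)
    return first16 & 0x7FF


if __name__ == "__main__":
    vc = frame_virtual_channel(bytes([0x05, 0xD7]))  # made-up header bytes
    print(vc, VC_MEANING[vc])
    print(packet_apid(bytes([0x08, 0x07])))
```

On NEAR only application process identifiers 0 through 13 are assigned, so a ground tool built this way would likely reject any larger value.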

The C&DH 1553B data bus is a time-multiplexed, bidirectional data interface5 for communication between bus controller and remote terminals. It is useful for all intraspacecraft messages except those that would overload its 1-megabit per second data rate. Dedicated high-speed serial circuits are provided for the multispectral imagers that must transfer images at 2 megabits per second to the solid-state data recorders (SSDRs).

Table 1. Telemetry downlink data rates.

| Multiplier | Bit rate (kilobits/s) | Transfer frame (TF) rate (TF/s) |
| --- | --- | --- |
| 24 | 26.496 | 3 |
| 16 | 17.664 | 2 |
| 8 | 8.832 | 1 |
| 4 | 4.416 | 1/2 |
| 2-2/3 | 2.944 | 1/3 |
| 1 | 1.104 | 1/8 |
| 1/28 | 0.0394 | 1/224 |
| 1/112 | 0.0099 | 1/896 |

Power switching merits a special dedicated control and status interface to the CTPs, because the capability for switching relay contacts under fault conditions is critical. Relay contacts from power switching are used to turn spacecraft +28-V bus power on and off to other packages and in some cases to control discrete functions.

Table 2. CCSDS virtual channel and application process identifier assignments.

| Virtual channel or application process identifier (a) | Description |
| --- | --- |
| Telemetry transfer frames (virtual channel) | |
| VC0 | Recorder input science and engineering data packets, used for recorder input only |
| VC1 | Recorder output data stream |
| VC2 | Recorder input Multispectral Imager data, nonpacketized image data |
| VC3 | Real-time data packets, used for downlinking only |
| Telemetry packets (application process identifier) | |
| 0 | CTP 1 packets |
| 1 | CTP 2 packets |
| 2 | Attitude Interface Unit 1 packets |
| 3 | Attitude Interface Unit 2 packets |
| 4 | Multispectral Imager DPU packets |
| 5 | X-Ray/Gamma-Ray Spectrometer DPU packets |
| 6 | Near-Infrared Spectrometer/Magnetometer DPU packets |
| 7 | NEAR Laser Rangefinder packets |
| 8 | X-Ray Spectrometer packets |
| 9 | Gamma-Ray Spectrometer packets |
| 10 | Near-Infrared Spectrometer packets |
| 11 | Magnetometer packets |
| 12 | Flight computer 1 packets |
| 13 | Flight computer 2 packets |
| Telecommand transfer frames (virtual channel) | |
| VC1 | Transfer frame addressed to CTP 1 |
| VC2 | Transfer frame addressed to CTP 2 |

Note: CCSDS = Consultative Committee for Space Data Systems, VC = virtual channel, CTP = command and telemetry processor, DPU = data processing unit.
(a) The application process identifier was not used for telecommand packets.

Discrete interface lines, i.e., single wires, are used for distributing the 1-Hz synchronization pulse (time tick) to spacecraft subsystems. A set of discrete line interfaces is also provided for fault protection between the CTPs and the attitude interface units.

Redundancy and Cross Strapping

System reliability concerns dictated that the NEAR C&DH system have no credible single point of failure that could compromise the mission. Figure 1 shows the configuration in which full hardware redundancy is provided for C&DH components. Cross strapping at critical points makes use of the redundancy. Both CTP 1 and CTP 2 are powered and operational for the entire mission. The command function is actively redundant, which means that both CTPs accept and decode all uplink signals from both sides of the RF system. Control bits in the command message headers prevent any command from being executed more than once. Only one CTP at a time can be in control of telemetry generation, the data recorders, and the 1553B data bus. The position of a relay in the power switching unit is used to select the CTP used for active telemetry and 1553B bus control. Thus, where spacecraft systems are redundant in a stand-by sense, the active side is selected by command. Finally, the 1553B data bus is a fully redundant system in itself. It features redundant cable harnessing, drivers, and receivers, as well as a message parity error detection scheme with automatic retry for failed messages.

Stored Command and Autonomous Fault Protection Capabilities

Commands from the ground station can be executed as they are received or can be stored for later execution. Up to 10,000 commands can be stored per CTP. Stored commands can be time tagged for execution at a specific value of mission elapsed time (MET), or they can be organized into a macro that is called by another command. More details on stored commanding are described in the section on C&DH software.

Autonomous fault protection is based on autonomy rules that are uploaded and stored in special locations in the CTP memory. Each rule can sense up to two telemetry points, test them separately for limits, and logically combine the test results. When a rule “fires,” it causes a specific command to be executed.
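One way to picture such a rule is as a small record holding up to two limit tests, a logical operator, and the command to issue. The sketch below is illustrative only: the class names, the AND/OR choice of combining operators, the telemetry point names, and the command string are all hypothetical, since the article does not give the stored rule format.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LimitTest:
    point: str    # telemetry point name (hypothetical)
    low: float    # lower limit
    high: float   # upper limit

    def out_of_limits(self, telemetry: Dict[str, float]) -> bool:
        value = telemetry[self.point]
        return value < self.low or value > self.high


@dataclass
class AutonomyRule:
    first: LimitTest
    second: Optional[LimitTest]  # a rule may sense one or two points
    combine: str                 # "AND" or "OR" (assumed operator set)
    command: str                 # command executed when the rule fires

    def evaluate(self, telemetry: Dict[str, float]) -> Optional[str]:
        """Return the command if the rule fires, otherwise None."""
        a = self.first.out_of_limits(telemetry)
        if self.second is None:
            fired = a
        else:
            b = self.second.out_of_limits(telemetry)
            fired = (a and b) if self.combine == "AND" else (a or b)
        return self.command if fired else None


if __name__ == "__main__":
    rule = AutonomyRule(
        LimitTest("bus_voltage", 24.0, 36.0),
        LimitTest("battery_temp", -10.0, 40.0),
        "OR",
        "ENTER_SAFE_MODE",
    )
    print(rule.evaluate({"bus_voltage": 22.0, "battery_temp": 20.0}))
```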

Within each CTP are four levels of hardware watchdog timers to protect against processor failures. In order of time-out period, they are the RTX2010 microprocessor wait-state time-out (10.6 ms), the 1-s polling loop time-out (1.001 s), the 1553B bus initializer time-out (4.0 s), and the “last resort timer,” which fires if no command is received from the ground for 12 days. The occurrence of any of these events will result in a processor hard reset. Such a reset reloads computer software and default autonomy rules from electrically erasable programmable read-only memory (EEPROM) devices on the computer board and resets all hardware.

Management of Stored Data

NEAR mission scenarios require science and engineering data to be stored onboard for later transmission to the ground station. As shown in Fig. 1, two SSDRs provide this function. They have capacities of 1024 and 512 megabits. Recorder input–output circuits are fully cross strapped between the two CTPs. Either or both of the SSDRs can be operated at any time, according to mission needs. Data recording rates are driven by the availability of science data packets. This scheme gives maximum flexibility for allocating recorder capacity. For NEAR, the quantity of science data delivered to the ground station is limited by the bandwidth of the X-band RF downlink, not by the capacity of the recorders. NEAR is the first APL spacecraft to use solid-state devices for spaceborne mass storage.

Timing and Synchronization

Timing for the NEAR C&DH system is driven by a crystal oscillator and fixed-hardware divide chain in the active (bus controller) CTP. All of the frequencies for bit rates, 8-Hz 1553B bus minor frames, 1-Hz time tick, etc., come from this chain; thus, all C&DH events are synchronized. Given the downlink telemetry frame length of 8832 bits (from CCSDS), the oscillator frequency and downlink bit rates were chosen so that all frame rates are multiples or even divisions of the 1-Hz time tick. The MET is a count kept in CTP software of the 1-Hz time ticks. A length of 32 bits allows MET to run continuously throughout the mission without rolling over. MET, which was set to zero at launch, is used to time tag all downlink telemetry frames and packets.
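The relationship between frame rate and bit rate, and the claim that a 32-bit MET does not roll over, can both be checked with simple arithmetic. The sketch below uses the 8832-bit frame length from the text and the transfer frame rates listed in Table 1. The function names are hypothetical, and the year length of 365.25 days is an assumption made only for the conversion.

```python
from fractions import Fraction

FRAME_BITS = 8832  # downlink transfer frame length given in the text

# Transfer frame rates (frames per second) as listed in Table 1.
TF_RATES = [
    Fraction(3), Fraction(2), Fraction(1), Fraction(1, 2),
    Fraction(1, 3), Fraction(1, 8), Fraction(1, 224), Fraction(1, 896),
]


def bit_rate_bps(tf_rate: Fraction) -> Fraction:
    """Downlink bit rate implied by a transfer frame rate."""
    return tf_rate * FRAME_BITS


def met_rollover_years(bits: int = 32) -> float:
    """Years until a counter of 1-Hz ticks with the given width rolls over."""
    return (2 ** bits) / (365.25 * 86400)


if __name__ == "__main__":
    for rate in TF_RATES:
        print(f"{str(rate):>6} TF/s -> {float(bit_rate_bps(rate)) / 1000:.4f} kilobits/s")
    print(f"32-bit MET rollover: about {met_rollover_years():.0f} years")
```

Each frame rate is either a whole number of frames per second or one frame per whole number of seconds, which is what keeps frame boundaries aligned with the 1-Hz time tick.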

The value of MET is distributed once per second by messages on the C&DH 1553B bus to each remote terminal. The remote terminal can get a 1-s time marker to an accuracy of several milliseconds by detecting an event on the 1553B bus. Three of the science digital processor units require more accuracy, and, as shown in Fig. 1, receive a hardware 1-Hz time tick pulse from the CTP.

The MET and Coordinated Universal Time (UTC) are correlated on the ground. Correlation is based on the measured UTC of receipt of a transfer frame, the value of MET in that frame, and the known distance from spacecraft to ground station.
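A minimal sketch of this correlation follows: subtract the one-way light time from the receipt time to get the UTC at which the frame left the spacecraft, then subtract the MET in the frame to get the UTC that corresponds to MET zero. This is a simplification, not the project's actual ground procedure. It assumes MET counts whole seconds and ignores onboard and ground processing delays, oscillator drift, and relativistic corrections. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

SPEED_OF_LIGHT_KM_S = 299_792.458


def utc_at_met_zero(utc_received: datetime, met_in_frame: int,
                    distance_km: float) -> datetime:
    """Estimate the UTC corresponding to MET = 0 from one received frame."""
    light_time = timedelta(seconds=distance_km / SPEED_OF_LIGHT_KM_S)
    utc_sent = utc_received - light_time          # when the frame left NEAR
    return utc_sent - timedelta(seconds=met_in_frame)


def met_to_utc(met: int, epoch: datetime) -> datetime:
    """Convert a MET value to UTC using a previously estimated epoch."""
    return epoch + timedelta(seconds=met)


if __name__ == "__main__":
    received = datetime(2000, 1, 1, 0, 0, 11, tzinfo=timezone.utc)  # made-up
    epoch = utc_at_met_zero(received, met_in_frame=10,
                            distance_km=SPEED_OF_LIGHT_KM_S)  # 1 light-second
    print(epoch.isoformat(), met_to_utc(10, epoch).isoformat())
```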

COMMAND AND TELEMETRY PROCESSOR FLIGHT HARDWARE

The CTP flight hardware processes uplink command messages, formats downlink telemetry data, and keeps spacecraft time. It is fully redundant and consists of two mechanical assemblies, each housing six electronics boards. Figure 2 is a photograph of one of the two processor assemblies before final installation of the flight cover. The following paragraphs describe CTP functionality, as well as some of the processor’s significant design features.

Figure 2. The command and telemetry processor with front cover plate removed.

Functional Description

Figure 3 is a functional diagram of one of the CTP assemblies. The processor performs the following spacecraft functions:

1. Processes real-time and time-tagged uplink command messages and outputs the appropriate command data over the 1553B data bus or one of several dedicated command interfaces

2. Gathers and formats real-time and recorded science and housekeeping data and downlinks the data at one of eight selectable downlink data rates

3. Encodes downlink data in accordance with the commanded operating mode using Reed–Solomon and convolutional techniques

4. Formats and stores real-time science and housekeeping data on the onboard SSDR for subsequent playback and reformatting of the data for transmission on the downlink

5. Detects anomalous spacecraft conditions, including power bus undervoltage conditions, attitude control system safing flags, and out-of-limit housekeeping data, and reconfigures the spacecraft in accordance with uploaded and stored control sequences

6. Maintains spacecraft time, distributes time data over the 1553B data bus, and embeds time data in all formatted data outputs

Both CTPs are always powered, one serving as the 1553B bus controller and the other as a 1553B bus remote terminal. Each processor requires approximately 230 mA at +28 V in its normal operating mode.

Board Descriptions

Figure 4 shows the processor board, one of the six printed-wiring electronics boards. All boards but the flight direct current (DC)/DC converter board are 22 × 20 cm. Each board performs specific processor functions.

Processor Board

The processor board design is based on the RTX2010 microprocessor and the United Technologies Microelectronics Center Summit MIL-STD-1553B bus controller. Fault tolerance features added to the design include memory error detection and correction electronics, as well as memory write protection. The processor provides 32,000 words of EEPROM, which is used to hold program, constants, and autonomy rules, and 64,000 words of random access memory.

Figure 3. Functional block diagram of one command and telemetry processor. (BC = bus controller; FIFO = first in, first out; LVSS = low-voltage sense switch; VC = virtual channel.)

Housekeeping Board

The housekeeping board contains the state machine logic and interface electronics necessary to gather and condition 31 single-ended analog voltage channels, 31 differential analog voltage channels, 31 AD590 temperature transducer output channels, 31 platinum wire temperature sensor channels, 24 digital telltale channels, and 8 serial digital channels once every second and to transfer the data to the processor board. Each housekeeping board contains two separate state machine programs: one for when the processor is functioning as the bus controller, and one for when the processor is functioning as a remote terminal on the 1553B data bus.

Figure 4. (a) Top and (b) bottom views of the RTX2010 processor board removed from the command and telemetry processor chassis (scale is in inches).

Encoder Board

The main purpose of the encoder board is to encode telemetry data received from the processor board using Reed–Solomon and convolutional coding standards and to serially transmit the encoded data to the telemetry conditioning unit. The convolutional encoding is either rate 1/2, constraint length 7, or rate 1/6, constraint length 15. The encoding is done according to CCSDS recommendations and protects the data against corruption during downlink transmission. The encoder board also contains redundant interfaces to the command detector units to collect telemetry data and to control the uplink data rate.
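To make the rate 1/2, constraint length 7 case concrete, the sketch below implements a software model of such an encoder. The generator polynomials (171 and 133 octal) and the inversion of the second output symbol are the values commonly given for the CCSDS rate-1/2 code; the article does not list them, so treat them as assumptions. The flight encoder is hardware on the encoder board, and this model covers neither the rate 1/6 code nor the Reed–Solomon stage.

```python
G1 = 0b1111001  # 171 octal (assumed CCSDS generator)
G2 = 0b1011011  # 133 octal (assumed CCSDS generator)


def parity(x: int) -> int:
    """Return 1 if x has an odd number of set bits, else 0."""
    return bin(x).count("1") & 1


def conv_encode_rate_half(bits):
    """Encode a bit sequence; two output symbols are produced per input bit."""
    reg = 0  # 7-bit shift register, newest bit in the most significant place
    out = []
    for bit in bits:
        reg = (reg >> 1) | ((bit & 1) << 6)
        out.append(parity(reg & G1))
        out.append(parity(reg & G2) ^ 1)  # second symbol inverted (assumed)
    return out


if __name__ == "__main__":
    # Impulse response: a single 1 followed by six 0s.
    print(conv_encode_rate_half([1, 0, 0, 0, 0, 0, 0]))
```

With the inversion on the second symbol, an all-zero input produces an alternating output rather than a constant one, which helps the receiver keep bit synchronization.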

Common Interface Board

Dedicated serial digital data command outputs and power switching matrix control signals are generated on the common interface board. Innovative design features include rise-time control on all serial data outputs to minimize interference with other signals and interface overvoltage protection circuitry to ensure C&DH subsystem functionality should a converter fail in one processor. In addition, the common interface board includes uplink command interface logic and circuitry to control playback of the SSDR.

Transfer Frame Generator Board

The transfer frame generator board contains a precision temperature-compensated crystal oscillator and the timing chain logic necessary to maintain accurate mission time and to generate all of the internal C&DH clock signals. Additional processor functions performed on this board include gathering high-speed science data from the Multispectral Imager, formatting Imager data into transfer frames stored on the SSDR, and processing spacecraft bus voltage and attitude anomaly flags.

DC/DC Converter Board

The CTP power converter uses two modules produced by Interpoint to convert the +28-V direct current power from the spacecraft into ±15-V and +5-V regulated DC power for use by the C&DH electronics. Another Interpoint module is used to filter the input power to meet electromagnetic interference requirements. The converter contains custom circuitry to provide output overvoltage and low-input voltage turn-on protection.

PACKAGE DESIGN

Each CTP flight unit consists of six multilayer electronics boards and a motherboard, with overall dimensions of 24 × 24 × 17 cm and a weight of 5 kg. The chassis of the C&DH is machined magnesium ZK 60A-75 with a minimum thickness of 1.5 mm. The design uses an integral housing for improved thermal control and includes separate compartments for the power converter and the digital electronics to eliminate potential cross talk.

The CTP board assemblies consist primarily of surface-mounted components populated on both sides to minimize board area. The printed wiring board designs use polyimide glass material with a high glass-transition temperature. To meet the requirements imposed by the launch environment, each board is supported by a set of wedge lock card guides and a stiffener.

The CTP was designed for only conductive cooling to ensure that junction temperatures are held within operational limits. For highly dissipative devices, an additional aluminum heat sink was bonded to the board to improve the heat path between the devices and the chassis.

C&DH SOFTWARE

Background

The most pressing requirements that had to be addressed during the C&DH software development effort were programmatic constraints. To meet the NEAR launch date, C&DH software had to be ready for installation on the spacecraft at the outset of the integration and test phase; other spacecraft components could not be operated by the integration and test ground system without a functioning C&DH. An early program decision was made that C&DH software could not be changed via upload commands after launch. This placed added emphasis on early requirements definition and exhaustive testing of the implementation, as well as mandating a software design that could be flexibly configured by mode commands and parameter uploads. In addition, NEAR development began when the costly and very public failures of the Clementine and Mars Observer missions were fresh reminders to NASA and the aerospace community of the importance of autonomous fault detection and correction capabilities. Thus, early delivery, an inflexible launch date, the need for configurable yet robust software, and the need for extensive autonomous capabilities for health and safety were the biggest design challenges.

C&DH Software Overview

The NEAR C&DH software is implemented as compiled FORTH code resident in 64 kilobytes of main memory of the CTP RTX2010 microprocessor board. The FORTH language was selected because the RTX2010 processor design uses a native instruction set modeled after FORTH for optimum processor efficiency. FORTH on the RTX2010 also offers the advantage of supporting both interactive and compiled software modes.

The context diagram of Fig. 5 depicts the C&DH software environment and external interfaces. The software is structured as an executive that is defined by a 1-s major frame subdivided into eight 125-ms minor frames. The top-level software functions controlled by the executive include the following: uplink processor, command processor, 1553B bus manager, housekeeping data manager, SSDR manager, downlink manager, autonomy functions, and maintenance functions. Highlights of these software processes are described in the following paragraphs. Functional differences between the active (1553B bus controller) and backup (1553B bus remote terminal) CTPs are described in the section on the bus manager.

Executive

All C&DH software functions are scheduled with respect to the eight minor frames, each driven by an 8-Hz hardware interrupt that also provides a marker to define the 1-s major frame boundary. The executive is an interrupt service routine that responds to every 8-Hz interrupt, increments minor frame count, and maintains MET in seconds. All subordinate software routines are scheduled with respect to minor frames 0 through 7. This scheduled polling approach for C&DH functions eliminates the need for multitasking and additional interrupts, and it also results in a simple deterministic design that can be readily tested. Functions are allocated by minor frame to ensure that processor use is distributed among tasks; stress testing under maximum load conditions verified that peak processor use within any single 125-ms minor frame is 73 ms. Upon initial power-up or reset of the CTP, the executive executes a number of self-test and initialization functions before enabling the interrupt to start the minor frame schedule.
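The scheduled polling approach can be sketched as a table of tasks indexed by minor frame and a single handler that runs on each 8-Hz interrupt. The flight software is compiled FORTH; the Python below is only a model, and the class, method, and task names are hypothetical. It also assumes that MET advances when the minor frame count wraps from 7 to 0, which the article implies but does not state in detail.

```python
class Executive:
    MINOR_FRAMES = 8  # eight 125-ms minor frames per 1-s major frame

    def __init__(self):
        self.minor_frame = 0
        self.met = 0  # mission elapsed time in seconds
        self.schedule = {i: [] for i in range(self.MINOR_FRAMES)}

    def add_task(self, minor_frame, task):
        """Assign a task to run in a fixed minor frame of every major frame."""
        self.schedule[minor_frame].append(task)

    def on_interrupt(self):
        """Handle one 8-Hz interrupt: run this frame's tasks, then advance."""
        for task in self.schedule[self.minor_frame]:
            task(self.met, self.minor_frame)
        self.minor_frame += 1
        if self.minor_frame == self.MINOR_FRAMES:
            self.minor_frame = 0  # major frame boundary
            self.met += 1


if __name__ == "__main__":
    executive = Executive()
    executive.add_task(0, lambda met, mf: print(f"MET {met}: collect housekeeping"))
    executive.add_task(3, lambda met, mf: print(f"MET {met}: build downlink frame"))
    for _ in range(16):  # two major frames
        executive.on_interrupt()
```

Because every task has a fixed slot, the worst-case load of each minor frame can be measured directly, which is the kind of test the 73-ms figure above reports.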

Uplink Processor

CTP uplink hardware decodes received telecommand transfer frames and buffers the binary data in first-in, first-out memory. The uplink manager controls that interface and reads up to four buffered data bytes in each minor frame, performs additional decoding, and detects when a full transfer frame has been received from either of the redundant uplink decoders. Any transmission error detected by the above logic is signaled to ground via the CCSDS status word reported in downlink telemetry frames. If the received data have no transmission errors, and if the frame has the expected sequence number and contains commands that meet validity checks, the commands are extracted from the frame and passed to the command processing task. Upon receipt of each valid command, the uplink manager also resets a software-controlled CTP RF watchdog timer that will time out if not reset within a commandable time interval (from 1 h to 12 days), thereby triggering a stored command sequence that autonomously switches the uplink hardware to the redundant side.

Figure 5. Diagram of the NEAR command and data handling software context. (AIU = attitude interface unit, ID = identifier, LVS = low-voltage sensor, RT = remote terminal, SSDR = solid-state data recorder, VC = virtual channel, CTP = command and telemetry processor.)

Command Processor

Command processing entails handling real-time commands received from the uplink, managing storage of command sequences in C&DH memory, and dispatching pending commands based on a priority scheme. Real-time command packets containing an operation code targeting one of the NEAR instruments or the attitude interface unit are routed to the 1553B bus manager for delivery to that subsystem. Commands destined for the C&DH software are processed directly by the command processor; such commands include control and mode options associated with the various C&DH functions. Some C&DH commands support definition of autonomy rules and upload of command sequences (macros) to be stored in memory for later execution. The command processor also accepts a data load command and, via a hardware serial data interface, delivers control bits to the SSDRs, command detector units, and telemetry conditioning units (none of which is accessible via the 1553B data bus). Last, relay commands are dispatched by controlling a hardware interface with the power switching unit. Command manager software prepares a command history and error status that is stored in memory for diagnostic use.

Once per minor frame, the queue of pending commands is examined and the command with the highest source priority is executed. The source priority, in high-to-low order, is real-time command, spacecraft separation sequence, macro triggered by safing autonomy rule, macro triggered by housekeeping autonomy rule, a command sent from the attitude interface unit, and a time-tagged macro scheduled to execute at the current MET. A macro queued for execution is processed to completion unless a command from a higher priority source is received, at which point the macro is suspended; it resumes once the intervening command is completed.
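The priority scheme can be modeled with one queue per command source, scanned in priority order once per minor frame. In the sketch below a macro is simply a run of commands in its source's queue, so it is suspended whenever a higher-priority source has work and resumes afterward. The source labels and the class are hypothetical, and the sketch assumes one command is dispatched per minor frame, as the paragraph above suggests.

```python
from collections import deque

# Sources in high-to-low priority order, following the text.
SOURCES = [
    "real_time",
    "separation_sequence",
    "safing_rule_macro",
    "housekeeping_rule_macro",
    "attitude_interface_unit",
    "time_tagged_macro",
]


class CommandDispatcher:
    def __init__(self):
        self.pending = {source: deque() for source in SOURCES}

    def enqueue(self, source, *commands):
        """Queue one command, or all the commands of a macro, for a source."""
        self.pending[source].extend(commands)

    def run_minor_frame(self):
        """Dispatch the next command from the highest-priority busy source."""
        for source in SOURCES:
            if self.pending[source]:
                return self.pending[source].popleft()
        return None  # nothing pending in this minor frame


if __name__ == "__main__":
    dispatcher = CommandDispatcher()
    dispatcher.enqueue("time_tagged_macro", "A1", "A2", "A3")
    print(dispatcher.run_minor_frame())      # macro starts
    dispatcher.enqueue("real_time", "RT")    # higher-priority command arrives
    print(dispatcher.run_minor_frame())      # macro is suspended
    print(dispatcher.run_minor_frame())      # macro resumes
```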

1553B Bus Manager

A 1553B data bus is the means by which the C&DH software communicates with the four NEAR instruments, the two redundant attitude interface units, and the backup CTP. The primary CTP, which serves as bus controller, knows each of these systems as a remote terminal identified by an assigned index. Each subsystem contains a 1553B controller chip that arbitrates exchange of data via shared memory subdivided into receive subaddresses and transmit subaddresses. Bus manager software schedules and controls all of these data exchanges; it also manages various internal self tests and retry strategies associated with the redundant bus architecture. Software interaction with the bus controller chip is managed by delivering control blocks that direct bus activities as delineated in a schedule that assigns transactions by minor frame.

Once per major frame, the bus manager picks up housekeeping data provided by each of the remote terminals and provides these data to the housekeeping data manager. The bus manager also supports pickup of science or engineering data packets from designated subsystems, which are provided to the SSDR manager (VC0) or the downlink manager (VC3). Each remote terminal can deliver up to two VC0 packets per second. Other bus manager functions include distribution of MET and a time synchronization message to all remote terminals, as well as preparation of bus status statistics maintained for diagnostic use.

One of the two CTPs is always designated as the backup CTP, which means it actually appears to the active CTP as a remote terminal. The C&DH software on the backup CTP senses that it is not “active,” and its bus manager software reverts to a different status, in which it receives commands from the active CTP, provides housekeeping and a status message to the active CTP, and receives MET from the active CTP. The backup CTP can dispatch commands, but it collects only a subset of the housekeeping data and does not collect or provide packets to the SSDR or the downlink VCs.

Housekeeping Data Manager

Once per major frame, housekeeping software concatenates housekeeping data collected from all NEAR subsystems and the CTP housekeeping board to create the spacecraft housekeeping packet on which autonomy rules operate and which is downlinked at a period dependent on telemetry rate and mode. The software also prepares the part of the housekeeping packet that contains internal C&DH status information. Other data inserted into the housekeeping packet are computed telemetry points, which comprise an arithmetic combination of two or more collected data elements. Upon receipt of a command to do so, the data manager delivers to the SSDR manager a copy of the current housekeeping packet for storage on the recorder.

Several bookkeeping functions are provided by data manager software: a stored table retains a history of high and low values for all telemetry points; snapshots of the housekeeping packets are stored in memory when an error is autonomously detected; and various one-bit telltales are maintained to serve as indicators of problem conditions. Some of these telltales are “sticky,” in that they signal an error, and a ground command is required to clear them.
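These bookkeeping functions map naturally onto a few small data structures. The sketch below keeps a running low and high value per telemetry point, stores a snapshot when an error is reported, and holds sticky telltales until an explicit clear. The class, method, and telemetry point names are hypothetical, and the article does not describe how the flight software lays these tables out in memory.

```python
class HousekeepingBookkeeper:
    def __init__(self):
        self.high_low = {}     # point name -> (lowest seen, highest seen)
        self.snapshots = []    # housekeeping packets saved on detected errors
        self.sticky = set()    # telltales that stay set until cleared

    def update(self, packet):
        """Fold one housekeeping packet (name -> value) into the history."""
        for name, value in packet.items():
            low, high = self.high_low.get(name, (value, value))
            self.high_low[name] = (min(low, value), max(high, value))

    def report_error(self, telltale, packet):
        """Record a detected error: set the telltale and keep a snapshot."""
        self.sticky.add(telltale)
        self.snapshots.append(dict(packet))

    def clear_telltale(self, telltale):
        """Model the ground command needed to clear a sticky telltale."""
        self.sticky.discard(telltale)


if __name__ == "__main__":
    keeper = HousekeepingBookkeeper()
    keeper.update({"bus_voltage": 28.1})
    keeper.update({"bus_voltage": 27.4})
    keeper.report_error("undervoltage", {"bus_voltage": 23.0})
    print(keeper.high_low, keeper.sticky)
```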

Solid-State Data Recorder Manager

The recorder manager controls the SSDR hardware interface that accepts data to be recorded. Once per second, the buffer of accumulated VC0 packets is examined. Sets of three packets are extracted, packaged into a VC0 transfer frame, and delivered to the recorder interface. As many as five VC0 transfer frames per second are written to the recorder, depending on how ground commands have configured remote terminals to deliver packets. To record images, a hardware formatter (Fig. 5) interfaces with the Multispectral Imager to read image data from a high-speed serial line. Because the image bits must be packaged into VC2 transfer frames, the SSDR manager task is notified (via the 1553B bus) when an image is to be collected; the task then prepares the 184 transfer frame wrappers needed by the formatter to encapsulate one image as it is delivered to the recorder.
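The once-per-second VC0 packing step can be sketched as follows. The limits of three packets per frame and five frames per second come from the text. The function name is hypothetical, and the handling of leftover packets (kept in the buffer for the next second) is an assumption, since the article does not say what happens to a partial set.

```python
PACKETS_PER_FRAME = 3       # from the text
MAX_FRAMES_PER_SECOND = 5   # from the text


def build_vc0_frames(packet_buffer):
    """Remove complete sets of packets from the buffer and return VC0 frames.

    The buffer list is modified in place; packets that do not fill a
    complete frame stay in it (assumed behavior).
    """
    frames = []
    while (len(packet_buffer) >= PACKETS_PER_FRAME
           and len(frames) < MAX_FRAMES_PER_SECOND):
        frame = [packet_buffer.pop(0) for _ in range(PACKETS_PER_FRAME)]
        frames.append(frame)
    return frames


if __name__ == "__main__":
    buffer = [f"pkt{i}" for i in range(7)]
    print(build_vc0_frames(buffer))  # two full frames
    print(buffer)                    # one packet left for the next second
```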

Downlink Manager

The downlink manager can be commanded to configure the downlink in any of eight transmission rates and five different modes that designate recording mode and interleaving of real-time (VC3) and SSDR playback (VC1) telemetry frames. This flexibility was mandated by mission requirements that vary dramatically, depending on mission phase (launch mode, cruise phase, at-asteroid operations) and state (safe holds vs. normal operations). The downlink manager keeps track of the current transmission rate and builds a telemetry transfer frame when the downlink hardware has finished transmitting the previous frame. When directed to transmit a frame, the software manages a downlink interface that alternates between two buffers at the occurrence of a major frame marker. The same interface controls downlink hardware frequency and encoding options, as set by ground command sent to the CTP. The downlink manager mediates changes to these parameters so that they take effect only on telemetry frame boundaries. At the slowest downlink rate, a frame is transmitted every 896 s; at the fastest rate, three frames are transmitted per second. Because of the variable frame transmit rate and various interleave modes, downlink software incorporates the handshaking necessary for the bus manager to notify remote terminals when VC3 packets will go in the downlink in the following major frame.
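The idea that commanded changes take effect only on frame boundaries can be illustrated with a staged-update pattern. The sketch below is an assumption about how such mediation might look; the class, method, and field names are hypothetical and do not come from the flight code.

```python
class DownlinkConfig:
    """Holds the active downlink settings; commanded changes wait for a frame boundary."""

    def __init__(self, rate_index, mode):
        self.active = {"rate_index": rate_index, "mode": mode}
        self.pending = {}

    def command(self, **changes):
        # A ground command only stages the change; the frame in progress is unaffected.
        self.pending.update(changes)

    def on_frame_boundary(self):
        # All staged changes are applied together, between frames.
        self.active.update(self.pending)
        self.pending.clear()
        return dict(self.active)
```

A rate change commanded in the middle of a frame leaves `active` untouched until `on_frame_boundary` is next called, so no frame is ever transmitted with mixed settings.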

The C&DH telemetry mode specifies (via command) the interleaving of VC3 and VC1 frames. In a second in which a VC3 frame is to be prepared, the current housekeeping packet is inserted into slot 1 in the frame. Slots 2 and 3 are filled with packets collected from designated remote terminals. Slots 2 and 3 can also be assigned as memory dump packets, in which case the downlink manager prepares packets that contain data words read from designated C&DH memory locations. The CCSDS frame header and trailer (including the status word that alternates between reporting the uplink status for the two CTPs) are added to the three packets, and the frame is written to the downlink buffer. At the higher downlink rates, when more than one VC3 frame is transmitted per second, the second and third VC3 frames are fill frames to avoid reporting of duplicate data.
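The slot assignment and fill-frame behavior can be summarized in a few lines. This sketch omits the CCSDS header and trailer entirely, and the dictionary layout, the `FILL` marker, and the function name are illustrative assumptions.

```python
FILL = "FILL"  # placeholder marker for fill content (assumed representation)


def build_vc3_frames(housekeeping, slot2, slot3, frames_this_second):
    """Return the VC3 frames for one second.

    Only the first frame carries data: slot 1 holds the housekeeping packet,
    and slots 2 and 3 hold remote terminal or memory dump packets. Any further
    frames in the same second are fill frames, so no packet is reported twice.
    """
    if frames_this_second < 1:
        return []
    first = {"slots": (housekeeping, slot2, slot3)}
    fills = [{"slots": (FILL, FILL, FILL)} for _ in range(frames_this_second - 1)]
    return [first] + fills
```

At the fastest rate described in the text (three frames per second), the sketch yields one data frame followed by two fill frames.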

Similarly, for a 1-s period in which the telemetry mode calls for a VC1 frame to be transmitted, downlink software manages the SSDR playback interface to read data bytes played from the recorder. One thousand seventy-four bytes of recorder data are encapsulated in VC1 frame wrappers, and the appropriate number of frames is delivered to the downlink buffer.
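As a simple illustration of the playback packaging, the sketch below splits a run of played-back bytes into 1074-byte payloads. The frame wrapper itself is not modeled, and holding back a trailing partial payload is an assumption.

```python
VC1_PAYLOAD_BYTES = 1074  # from the text: recorder bytes carried per VC1 frame


def vc1_payloads(playback: bytes):
    """Split played-back recorder bytes into full 1074-byte frame payloads.

    Returns (payloads, remainder). Keeping a partial payload back for the
    next playback period is an assumed policy, not a documented one.
    """
    n_full = len(playback) // VC1_PAYLOAD_BYTES
    cut = n_full * VC1_PAYLOAD_BYTES
    payloads = [playback[i:i + VC1_PAYLOAD_BYTES]
                for i in range(0, cut, VC1_PAYLOAD_BYTES)]
    return payloads, playback[cut:]
```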

Autonomy Functions

Autonomous fault detection and correction functions are implemented in the form of uploadable monitor rules. The autonomy manager maintains 165 rules. Each rule identifies one or two telemetry points in the housekeeping packet, optional arithmetic or masking operations to be performed on those data, and a logical compare operation that results in a Boolean flag that is “true” if the criterion is met for the designated number of consecutive housekeeping packets. When evaluated as true, a rule causes the specified stored command macro to be queued for execution. Rules are evaluated in priority order, so high-priority safing functions take precedence. Commands to the autonomy manager can load or clear rules, turn individual rules or sets of rules on or off, or disable checking of all rules in either of two subsets: safing rules that are stored by default upon CTP power-up, or housekeeping rules that are used for less critical monitoring functions. The safing rules are the means by which mission safing modes are implemented. The spacecraft separation sequence, Sun-safe mode, Earth-safe mode, power system monitor functions, and attitude interface unit monitor functions are all implemented as stored command macros triggered by autonomy rules.
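The rule structure described above lends itself to a compact sketch. The Python model below is a minimal illustration, not the flight implementation: the field names, the convention that a lower priority number is evaluated first, and the re-arming of a rule after it fires are all assumptions.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    priority: int                       # lower number = evaluated first (assumption)
    point_a: str                        # first telemetry point in the packet
    compare: Callable[[int, int], bool]  # e.g. operator.gt
    threshold: int
    macro: str                          # stored command macro to queue when true
    persistence: int = 1                # consecutive packets required
    point_b: Optional[str] = None       # optional second telemetry point
    combine: Optional[Callable[[int, int], int]] = None  # e.g. operator.sub
    mask: Optional[int] = None          # optional bit mask
    enabled: bool = True
    count: int = 0                      # consecutive packets meeting the criterion


def evaluate(rules, packet, queue):
    """Check one housekeeping packet against all enabled rules, in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if not rule.enabled:
            continue
        value = packet[rule.point_a]
        if rule.point_b is not None and rule.combine is not None:
            value = rule.combine(value, packet[rule.point_b])
        if rule.mask is not None:
            value &= rule.mask
        # The counter resets whenever the criterion is not met.
        rule.count = rule.count + 1 if rule.compare(value, rule.threshold) else 0
        if rule.count >= rule.persistence:
            queue.append(rule.macro)
            rule.count = 0  # re-arm; behavior after firing is an assumption
```

Because the counter resets on any packet that fails the comparison, a single out-of-limit sample cannot trigger a macro when the persistence is greater than one.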

This rule-based approach to meeting autonomy requirements allowed C&DH design to proceed, even when autonomy modes and actions had not been fully specified at the mission level. The autonomy manager is basically a housekeeping checker and rule evaluation engine that queues stored commands. This software was thoroughly tested under maximum stress conditions before delivery to the spacecraft. Later in the integration phase, the actual mission rules and macros were installed. What seemed at first to be a simple rule-based design actually became quite complex when it came to defining the checks and command responses needed to coordinate safing for all spacecraft subsystems. For example, a rule may trigger a command sequence that in turn disables sets of rules and enables others. Mission rule and macro definition was done by the systems engineering team, which coordinated all subsystems and defined cross-subsystem tests.

The autonomy manager performs several bookkeeping functions to provide data that summarize rule-checking status. An autonomy history table is maintained, and a handshake to the data manager and command processor ensures that triggered macro commands and housekeeping packet snapshots are stored in memory.

Maintenance Functions

Software maintenance functions are distributed among the minor frame schedule as small tasks requiring little processing time. Such functions include resetting a watchdog timer that times out if a software error occurs, thereby causing a processor reset; stepwise reading of all electronically correctable memory to cause the hardware to correct any single-bit errors that may have occurred; and computing checksums over blocks of memory so the checksum table can be dumped by command to verify that current memory contents match the image model maintained by the mission operations team.
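The checksum comparison can be illustrated as follows. The text does not state the checksum algorithm or block size, so the 16-bit additive checksum and the function names here are assumptions chosen only to show the idea of comparing an onboard table with one computed from the ground image.

```python
def checksum_table(memory, block_size):
    """Return one 16-bit additive checksum per block of 16-bit words.

    The additive algorithm and the block size are illustrative assumptions.
    """
    return [sum(memory[i:i + block_size]) & 0xFFFF
            for i in range(0, len(memory), block_size)]


def mismatched_blocks(onboard_table, ground_table):
    """Indices of blocks whose checksum differs from the ground image model."""
    return [i for i, (a, b) in enumerate(zip(onboard_table, ground_table))
            if a != b]
```

Dumping only the table, rather than all of memory, lets the ground localize a discrepancy to a block before requesting a targeted memory dump.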

POWER SWITCHING UNIT

Power switching for the NEAR spacecraft is handled by a single unit that provides switched power and signals for the operation of the spacecraft. The unit is capable of 64 on/off relay commands. Design of the unit was adapted from similar units used on the Midcourse Space Experiment spacecraft.

Functional Description

The unit contains a motherboard and six 18 × 18 cm plug-in cards: two driver/telemetry boards and four relay boards. The boards are mounted in a 23 × 23 × 25 cm chassis. Two types of latching relays provide the various switching functions, with each relay selected according to the power requirements of the users. Relay contact ratings are 2 and 10 A. In addition, 2-A nonlatching relays generate high-level pulse commands. The interface between the spacecraft power bus and the subsystems is through the two 50-pin “D” connectors mounted on each relay board, one for power in and the other for switched power out. Table 3 lists the characteristics of the power switching unit assembly; Table 4 shows the types, contact ratings, and quantities of relays.

Table 3. Characteristics of the power switching unit.

Characteristic          Range or value
Power                   +20 to +34 V, 12–200 mA
                        +5 to 10.5 V, 1–8 mA
Size                    23 × 23 × 24 cm
Operating temperature   −30 to +70°C
Weight                  5.9 kg

Table 4. Power switching relay types.

Relay type   Contact rating   Number
3PDT         10 A             24
4PDT         2 A              28
2PDT-NL      2 A              72

Note: 3PDT = 3-pole double throw, 4PDT = 4-pole double throw, 2PDT-NL = 2-pole double throw, nonlatching.


Circuit Description

The two driver/telemetry boards provide redundant relay coil drive and relay contact position telemetry. Relay drive circuits are configured in an 8 × 8 matrix. Each driver/telemetry board accepts an 8-bit parallel command word from one of the two command processors and decodes the command into the appropriate signal to operate the relays. The driver/telemetry boards also contain parallel-to-serial shift registers with output interfaces for telemetering the relay contact positions. All latching relays are monitored. The telemetry data stream, one from each driver/telemetry board, supplies data to one of the redundant CTPs. All command decoding and telemetry logic is implemented with CD4000B series integrated circuits. Relay coil drive circuits use discrete transistors.
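An 8 × 8 drive matrix addresses 64 relay commands with 8 row drivers and 8 column drivers. The text does not give the bit layout of the 8-bit command word, so the encoding in this sketch (bits 5-3 select the row, bits 2-0 select the column, bits 7-6 unused) is purely hypothetical and serves only to show matrix addressing.

```python
def decode_relay_command(word):
    """Split a command word into (row, column) drive lines of an 8 x 8 matrix.

    Hypothetical encoding: bits 5-3 select the row, bits 2-0 select the
    column, and bits 7-6 must be zero. The real bit layout is not documented
    in the text.
    """
    if not 0 <= word <= 0xFF:
        raise ValueError("command word must fit in 8 bits")
    if word & 0xC0:
        raise ValueError("bits 7-6 are unused in this sketch")
    return (word >> 3) & 0x7, word & 0x7
```

Under this assumed layout, the 64 valid command words map one-to-one onto the 64 row and column intersections.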

Power Requirements

The unit operates with a nominal +28-V and a +5-V input to each driver/telemetry board. The +28-V power, supplied by the spacecraft battery, operates the command decoding and relay coil drive circuitry. The +5-V power operates the telemetry circuits.

FLIGHT COMPUTER

The NEAR flight computer is a Honeywell model HSC-E, which is a three-slice assembly consisting of two independent processor slices and a dual-power converter as the middle slice. Figure 6 is a composite photograph of the flight hardware chassis and circuit boards, with a summary of attributes. The HSC-E occupies less than 3770 cm³ and weighs 5 kg. One processor and one-half of the dual-power converter constitute a computer element called a string. The two strings can be operated simultaneously, or one can be active and one can be in standby (hot or cold) mode. Each string operates from a +28-V source, drawing, nominally, 8 W. Each processor slice contains a generic very-high-speed integrated circuit spaceborne computer (GVSC), 512,000 words of static random access memory, 16,000 words of start-up read-only memory, 256,000 words of EEPROM, and input/output (I/O).

The central processing unit function consists of a GVSC chip set and associated processor support logic. The GVSC chip set implements the MIL-STD-1750A⁶ instruction set. Processor support logic is implemented in a gate array with the I/O control functions. All memory access goes through the GVSC memory management unit and block protect unit. The memory management unit maps logical addresses to physical addresses in 4000 pages via 16 sets of page registers and provides lock and key protection for 4000 blocks of memory to prevent unauthorized accesses. The HSC-E processor supports four I/O interfaces: 1553B, serial I/O, parallel I/O, and a test port.
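The paged address translation can be sketched in a few lines. This example assumes that the pages are 4K (4096) words, that the top 4 bits of a 16-bit logical address select one of 16 page registers within the current set, and that the lock and key rule follows a common reading of MIL-STD-1750A (key 0 opens every lock, lock 0xF admits every key, otherwise key and lock must match). These details should be checked against the standard; the function and parameter names are hypothetical.

```python
PAGE_BITS = 12                       # assumed 4K-word pages: 12-bit offset
OFFSET_MASK = (1 << PAGE_BITS) - 1


def translate(page_registers, address_state, logical, access_key):
    """Map a 16-bit logical address to a physical address.

    page_registers[address_state][page] is a (physical_page, lock) pair.
    Raises PermissionError when the access key does not fit the page lock.
    """
    page = (logical >> PAGE_BITS) & 0xF   # top 4 bits pick 1 of 16 pages
    physical_page, lock = page_registers[address_state][page]
    # Assumed rule: key 0 opens every lock, and lock 0xF admits every key.
    if not (access_key == 0 or lock == 0xF or access_key == lock):
        raise PermissionError("access key does not match page lock")
    return (physical_page << PAGE_BITS) | (logical & OFFSET_MASK)
```

With an 8-bit physical page number, this scheme reaches 2^20 words, which is enough to cover the memory sizes listed for each processor slice.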

PRE-INTEGRATION TESTING AND TESTBED SIMULATOR

The NEAR C&DH system consists of five chassis assemblies that are structurally and thermally attached to spacecraft honeycomb panels and powered by the +28-V bus. Each item received comprehensive stand-alone testing and a formal pre-integration review of test results before it was installed on the spacecraft. These tests included

• Full functional test at ambient conditions
• Hot and cold temperature tests in air
• Powered 3-axis sinusoidal and random vibration tests
• Hot and cold soaks and temperature cycles in thermal vacuum

Additional compatibility tests were performed to verify all of the interfaces shown in Fig. 1. These tests involved connecting the breadboard CTP to prototypes of the other units one at a time. To prove the interfaces, commands were sent, telemetry verified, 1553B bus traffic analyzed, waveforms confirmed, etc. This effort led to well-understood interfaces and exceptionally trouble-free spacecraft integration.

Software testing of the RTX2010 microprocessor code in the CTP was initially performed by the development team. Additional independent validation and verification testing was done by an outside contractor. Finally, a testbed simulator for the ground station evolved from an early command and telemetry test between the CTP and the spacecraft electrical ground support equipment. The breadboards and CTP bench test equipment were preserved for use by the NEAR mission operations team. The team uses this local testbed to try out operational commands and memory loads and to connect various exercises with prototype instruments and the SSDR.

Test sets were developed specifically for each of the flight units previously discussed. The most elaborate of the test sets was the CTP bench test equipment. This equipment was custom designed for the NEAR CTP by Telenetics, Inc., in Columbia, Maryland. Its scope was established by the need to drive every CTP input and to monitor every CTP output under closed-loop control by a computer. Telenetics implemented this bench test equipment with off-the-shelf VME-based modules augmented by custom-designed interface boards where necessary. The control software was written in the C language and runs on a 486 PC under the OS/2 operating system. Although the equipment was originally planned as a hardware tester, the flexibility of its design lent itself to expanding requirements during CTP development. This bench test equipment became the platform for independent validation and verification testing of the CTP software. Its capability for negative testing, such as sending a deliberately bad command message and seeing that it is rejected, was used extensively during these tests. The bench test equipment is now incorporated into the testbed simulator that is used routinely by the NEAR mission operations team.

Figure 6. The Honeywell HSC-E flight computer showing the fully assembled chassis and front and back sides of one processor module. The module has two printed wiring boards laminated to the front and back of an aluminum heat sink plate. Hardware attributes: RH1750 processor; 1.6 million instructions per second at MHz Defense Avionics Instruction Set (DAIS); 512 K words static random access memory, single error correcting double error detecting (SECDED), error logging; 16 K total startup read-only memory; 256 K words electrically erasable, programmable read-only memory (EEPROM), SECDED, error logging; 1553B port; serial input/output port; 12 user interrupts; probability of success Ps = 0.998 for the dual-string computer of the NEAR mission; size, 26.2 × 7.2 × 20.3 cm; weight, 5 kg (dual string); power, 8 W nominal (single string), 16 W with programmable read-only memories (PROMs) on.


CONCLUSION

The NEAR CTPs and power switching unit were designed, fabricated, and tested at APL. Schedule and cost constraints from the NASA Discovery program dictated a “keep it simple” approach and the use of design heritage as much as possible. The outside contracts with SEAKR Engineering and Honeywell were closely monitored by APL staff with many years of technical and contract management experience. The C&DH software was developed by a three-person team that defined unit tests and an acceptance test procedure using bench test equipment that could simulate spacecraft subsystems. The preliminary build of C&DH software was delivered to the spacecraft for integration in June 1995, 9 months after the software requirements were signed off (Artis⁷). The CTPs were loaded with new C&DH software a total of 22 times during integration and test and prior to launch. Eight of these revisions incorporated a minor code change; all other revisions incorporated an updated set of default mission autonomy rules and stored macros. After a full year in cruise mode and a flyby of the asteroid Mathilde, NEAR operations experience has shown the success of the C&DH implementation.


REFERENCES

1. Consultative Committee for Space Data Systems (CCSDS), Telecommand Part 1 Channel Service Architectural Specification, Blue Book, CCSDS 201.0-B-1, NASA Communications and Data Systems Division (1987).

2. Consultative Committee for Space Data Systems (CCSDS), Telecommand Part 2 Data Routing Service, Blue Book, CCSDS 202.0-B-2, NASA Communications and Data Systems Division (1992).

3. Consultative Committee for Space Data Systems (CCSDS), Packet Telemetry, Blue Book, CCSDS 102.0-B-3, NASA Communications and Data Systems Division (1992).

4. Consultative Committee for Space Data Systems (CCSDS), Telemetry Channel Coding, Blue Book, CCSDS 101.0-B-3, NASA Communications and Data Systems Division (1992).

5. Department of Defense, Aircraft Internal Time Division Command/Response Multiplex Data Bus, MIL-STD-1553B (1978), with Notice II (1986).

6. Department of Defense, Sixteen-Bit Computer Instruction Set Architecture, MIL-STD-1750A (2 Jul 1980).

7. Artis, D. A., NEAR Command & Data Handling System Software Requirements Specification, Drawing 7352-9066, JHU/APL, Laurel, MD (1994).

ACKNOWLEDGMENTS. We thank Joseph F. Bogdanski, Jr., Richard F. Conde, Binh Q. Le, and Donald L. Mitchell for their many contributions to the NEAR C&DH designs. George Theodorakos worked closely with them, carrying out the design of two boards in the CTP. Susan C. Lee defined the mission autonomy rules and safing mode macros. APL’s Technical Services Department designed and fabricated the printed wiring board and chassis for the CTP and power switching unit. Howard S. Feldmesser led this effort very effectively. Kenneth Nellis and Mark Hall of Telenetics, Inc., performed independent validation and verification testing and circuit board design for the indispensable CTP bench test equipment. Honeywell program managers Mack Flakes and Robert Parker delivered the flight computer on time. We also owe thanks to NASA for sponsorship of the NEAR program. This work was supported under contract N00039-95-C-0002 with the U.S. Navy.

THE AUTHORS

DAVID D. STOTT supervises the Control Electronics Section of the APL Space Department’s Electronic Systems Group and is a member of the Principal Professional Staff. He received B.S. and M.S. degrees in electrical engineering from the University of Michigan in 1963 and 1966, respectively. Since joining APL in 1966, he has worked on spacecraft command and telemetry systems, ground support equipment, aircraft avionics systems, and biomedical instrumentation. Mr. Stott was lead engineer for the NEAR command and data handling system. His e-mail address is [email protected].

DAVID A. ARTIS graduated with an M.A. in physics from Indiana State University and joined the APL Space Department in 1981. His previous assignments have included lead software engineer for the NEAR command and data handling system, software engineer for the decision/control software for UVISI on MSX, lead engineer for SMILS PMP, and member of the Space Department team that defined departmental software reliability and quality assurance standards. Currently, Mr. Artis is the mission systems software engineer for FUSE, a spacecraft project at The Johns Hopkins University Center for Astrophysical Sciences. His e-mail address is [email protected].


BRIAN K. HEGGESTAD received a B.S. degree in electrical engineering from Colorado State University in 1989 and an M.S. degree in electrical engineering from The Johns Hopkins University in 1997. He is an electrical engineer in the APL Space Department’s Electronic Systems Group. He developed hardware for the MSX command processor and embedded software for the NEAR command and data handling system. Mr. Heggestad is currently the lead software engineer for the FUSE instrument data system flight processor. His e-mail address is [email protected].

JERRY E. KROUTIL is a Senior Staff member in APL’s Spacecraft Flight Systems Group. He received an Associate of Applied Science degree from the Capitol Radio Engineering Institute. Since joining APL in 1965, Mr. Kroutil has developed power switching components for numerous spacecraft programs including TIP, HUT, POLAR BEAR, Delta Star 181 and 183, MSX, and NEAR. Mr. Kroutil was the lead engineer for the ACE power switching components and currently leads a team developing the instrument power switching and distribution unit for the FUSE program. His e-mail address is [email protected].

ROBERT O. KRUEGER is a Senior Systems Engineer with Interface and Control Systems, Inc. He studied at MIT from 1967 to 1971. He has worked with programs at APL for the last several years. At APL, he designed the bench test equipment for the MSX data handling system and the NEAR command and data handling system. He also converted the NEAR command and data handling breadboard into a spacecraft simulator for the NEAR mission operations group. Most recently, Mr. Krueger has been part of the integration and test team for the ACE spacecraft. His e-mail address is [email protected].

LLOYD A. LINSTROM is an Electrical Engineer in the Space Systems Applications Group of the APL Space Department. He earned a B.S. degree from the University of Maryland in 1982 and a master’s degree from the University of Illinois in 1989. Mr. Linstrom’s interests include development of embedded software, communications systems, and digital hardware. His e-mail address is [email protected].


JAMES A. PERSCHY is a Principal Professional Staff Engineer in the APL Space Department. He earned a B.S.E.E. from the George Washington University in 1958 and an M.S. in physics from the University of Maryland in 1965. After joining APL in 1958, he designed the flight data handling system for the Transit Navy navigation satellites. He led APL satellite ocean-altimeter signal processor development for Seasat, Geosat, and Topex. Mr. Perschy designed the RTX2010-based processor used in the NEAR and ACE command and data handling systems. He is currently leading the TIMED spacecraft command and data handling system development and is designing the million instructions per second–based processor for the GPS navigation and attitude control systems. His e-mail address is [email protected].

PAUL D. SCHWARTZ received his B.S. and M.Eng. degrees in electrical engineering from Cornell University. Since joining the APL Space Department in 1973, he has been the lead design engineer for the development of numerous spacecraft subsystems including the AMPTE command system, the COBE momentum management assembly, and the MSX data handling system. Mr. Schwartz was the lead hardware design engineer for the NEAR command telemetry processor. He has designed power conditioning electronics for several space instruments, including the Galileo Energetic Particles Detector and the Ulysses LAN experiment. Mr. Schwartz is currently the system engineer for the development of a miniaturized visible imager for the JAWSAT spacecraft. His e-mail address is [email protected].

GLEN F. SWEITZER is an Associate Engineer in the APL Space Department’s Electronics Systems Group. He studied electronics in the U.S. Air Force in 1957–1958 and has taken numerous electronics and computer courses since joining APL in 1961. Mr. Sweitzer has developed satellite test and operations scenarios, assisted in development of TDRSS signal processing equipment and test and operations equipment, provided test support for biomedical research, and was the on-site technical representative for Transit spacecraft development at RCA Astro-Electronics from 1985 to 1990. He was also lead engineer for the MSX KG/Tempest Unit, technical representative for the NEAR flight computer, and project manager for the Transit Program. Mr. Sweitzer is currently technical representative for TIMED guidance and control instruments. His e-mail address is [email protected].
