
Stanford’s 2014 Slot Filling Systems

Gabor Angeli∗, Sonal Gupta∗, Melvin Jose∗, Christopher D. Manning∗, Christopher Re∗, Julie Tibshirani∗, Jean Y. Wu∗, Sen Wu∗, Ce Zhang†

∗Stanford University; Stanford, CA 94305
{angeli, sonalg, melvinj, manning}@stanford.edu
{chrismre, jtibs, jeaneis, senwu}@stanford.edu

†University of Wisconsin; Madison, WI [email protected]

Abstract

We describe Stanford’s entry in the TAC-KBP 2014 Slot Filling challenge. We submitted two broad approaches to Slot Filling: one based on the DeepDive framework (Niu et al., 2012), and another based on the multi-instance multi-label relation extractor of Surdeanu et al. (2012). In addition, we evaluate the impact of learned and hard-coded patterns on performance for slot filling, and the impact of the partial annotations described in Angeli et al. (2014).

1 Introduction

We describe Stanford’s two systems in the 2014 KBP Slot Filling competition. The first, and best performing system, is built on top of the DeepDive framework. We describe the system briefly below, and in more detail in Section 2; the central lesson we would like to emphasize from this system is that leveraging large computers allows for completely removing the information retrieval component of a traditional KBP system, and allows for quick turnaround times while processing the entire source corpus as a single unit. DeepDive offers a convenient framework for developing systems on these large computers, including defining the pre-processing pipelines (feature engineering, entity linking, mention detection, etc.) and then defining and training a relation extraction model.

The second system Stanford submitted is based around the MIML-RE relation extractor, following closely from the 2013 submission, but with the addition of learned patterns, and with MIML-RE trained with the labels of carefully selected, manually annotated sentences held fixed. The central lesson we would like to emphasize from this system is that a relatively small annotation effort (10k sentences) over carefully selected examples can yield a surprisingly large gain in end-to-end performance on the Slot Filling task (4.4 F1). We describe the two systems briefly below.

1.1 DeepDive

Stanford submitted two runs using the DeepDive framework for relation extraction (http://deepdive.stanford.edu/). The systems differed only in the threshold chosen for proposing relations. DeepDive is a framework for constructing trained systems whose goal is to make it easier to integrate domain-specific knowledge and user feedback. It runs in two phases: feature extraction, and statistical inference and learning. The first phase is implemented as steps in a pipeline, with intermediate results stored in a large distributed database. For example, once initialized with a Sentences table, mention detection runs a simple Python script on each row in the Sentences table and populates the resulting mentions in a Mentions table. Featurization can then take every pair of mentions from this Mentions table, and run a featurization script to populate the features table. Importantly, the entire source corpus is processed as a single unit, without need for an information retrieval component.

Inference (and consequently learning) over the data is performed by grounding a factor graph via a set of database queries, and then running a Gibbs sampler to infer relation probabilities (Niu et al., 2011a). The model uses the distant supervision assumption, as in Mintz et al. (2009a).

Negative examples are constructed identicallyto the 2013 system, taking incompatible and in-complete relations with respect to the input knowl-edge base. The training corpus consists of onlythe source corpus for the year it is evaluated on –for the 2013 dev set and 2014 evaluation set, thisconsists of only the 2013 source corpus. In ad-dition, snowball sampling over a large web cor-pus was used to collect additional named entity

(1) Feature Extraction (2) Probabilistic Engineering (3) Inference and Learning

Feature Weight and_his_wife 5.0 and -3.0

Unstructured Info.

Structured Info.

Web Pages

PDF documents

Evidence Schema Sentence … Barack Obama and his wife M. Obama …

Mentions word offset1 offset2 M. Obama 6 7

MentionPairFeatures m1 m2 f Barack Obama

M. Obama

and_his_wife

Factor Graph factors variables

MHasSpouse m1 m2 Barack Obama M. Williams Barack Obama M. Obama

feature=“and_his_wife”

Weights (Learning)

Probability (Inference) m1 m2 prob Barack Obama M. Williams 0.1 Barack Obama M. Obama 0.9

Calibration Plots

Knowledge Base

HasSpouse

Query Relation

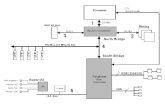

Figure 1: An overview of a system built with DeepDive that takes as input both structured and unstruc-tured information and creates a knowledge base as the output. There are three phases of DeepDive’sexecution model: (1) Feature Extraction; (2) Probabilistic Engineering; and (3) Inference and Learning.Section 2 contains a more detailed walkthrough of these phases, and Figure 2 shows more details of howto conduct tasks in these three phases using SQL and script languages, e.g., Python.

triggers, and some high-precision relation triggers.We used Freebase as our source of training data,aligned to the set of KBP relations. Entity Link-ing was performed with a series of high-precisionrules.

The system obtained an F1 of 36.7 on the 2014 evaluation. In development runs, the system obtained an F1 of 62 on the 2010 data, and 56 on the 2013 data.

1.2 MIML-RE

In addition to the DeepDive system, Stanford submitted three systems making use of the MIML-RE model. These are to a large extent similar to Stanford’s submission last year, but with some key improvements.

First, the MIML-RE model was re-trained using selective human-annotated relation mention labels, as described in Angeli et al. (2014) and summarized in Section 4. Two submitted runs differ only by their use of the unmodified versus active learning models.

In addition, our model employed a somewhat extended set of hand-coded patterns, as well as a larger collection of automatically learned patterns (see Section 5). Two runs differ in their use of these patterns versus using only the MIML-RE relation extractor.

For training, we used both the 2010 and 2013 source corpora, as well as a 2013 dump of Wikipedia. For testing, we used only the official corpus for the evaluation year, as this was shown to perform better in the 2013 evaluation than using all three corpora.

Slot thresholds were trained on a per-relation basis to maximize the score on the 2013 development set. Our best MIML-RE based system obtained an F1 of 32; active learning gained us an improvement of 4.4 F1, while patterns gained us 1.9 F1. The development F1 scores of this system for each year were:

| Year | 2009 | 2010 | 2011 | 2012 | 2013 |
|---|---|---|---|---|---|
| Dev F1 | 25.54 | 32.02 | 28.53 | 30.74 | 40.86 |

We proceed to describe our two approaches in more detail.

2 A Brief Overview of DeepDive

We briefly introduce the programming and execution model of DeepDive. There are three phases in DeepDive’s execution model, as shown in Figure 1, and described below. All of the experiments on the KBP corpus were performed on a machine with 1.5 TB of memory; a distributed GreenPlum relational database (a distributed Postgres) was set up with 40 nodes on this machine. Consequently, each of these phases runs in parallel on 40 cores, primarily if not entirely out of main memory.

(1) Feature Extraction. The input to this stage often contains both structured and unstructured information, and the goal is to produce a relational database that describes the features or signals of the data, which we call the evidence schema. We use the phrase evidence to emphasize that, in this stage, data is not required to be precisely correct; a fact which is very relevant for distantly supervised systems.

[Figure 2 panels:
(a) Input: the relations FreebaseSpouse(e1, e2), MentionPairs(m1, m2), EntityLinking(m, e), and Sentence(content), e.g., the sentence “Barack Obama and his wife Michelle thank supporters.”
(b) Feature Extraction: the SQL query SELECT content FROM Sentence feeds a Python function that reads each sentence from standard input, extracts its mentions, and prints one (m1, m2, f) row per mention pair into MentionPairFeature, e.g., (Barack Obama, Michelle, and_his_wife).
(c) Constants & Domain Knowledge: a SQL self-join of HasSpouse t0 and HasSpouse t1 with t0.e1 = t1.e1 AND t0.e2 <> t1.e2, the factor function AND(t0, t1), and the weight -2, relating (Barack_Obama, Michelle_Obama) and (Barack_Obama, Michelle_Williams) in the factor graph.
(d) Distant Supervision: a SQL join of FreebaseSpouse t0, MentionPairs t1, and two copies of EntityLinking (t2, t3) with t1.m1 = t2.m AND t1.m2 = t3.m AND t0.e1 = t2.e AND t0.e2 = t3.e, producing PositiveExamples(m1, m2), e.g., (Barack Obama, Michelle).]

Figure 2: Illustration of popular operations in DeepDive. (a) Prepare data sets in relational form that can be used by DeepDive. (b) Extract features with SQL and script languages (e.g., Python); (c) Integrate constraints with SQL and logic functions; (d) Generate labels using distant supervision with SQL.

(2) Probabilistic Engineering. The goal of this phase is to transform the evidence schema into a probabilistic model, specifically a factor graph that specifies:

• The set of random variables that the user wants to model. To define the set of random variables in DeepDive is to create new relations called query relations, in which each tuple corresponds to one random variable. This operation can be done by using SQL queries on existing relations.

• How those random variables are correlated; e.g., “The mention ‘Obama’ refers to the president is correlated with the random variable that indicates whether ‘Obama’ is a person.” To specify how, the user specifies a factor function. Although our submissions do not make use of more complex constraints, they are easy to encode in the framework. Figure 2(c) shows a rough example that describes the intuition that “people tend to be married to only a single person.” One (of many ways) to say this is to say that there is some correlation between pairs of married tuples; i.e., using the logical function AND(t0, t1) returns 1 in possible worlds in which both married tuples are true and 0 in others. DeepDive then learns the “strength” of this correlation from the data, which is encoded as weight [1]. Here, −2 indicates that it is less likely that both married tuples are correct. This phase is also where the user can write logical constraints, e.g., hard functional constraints.

Our previous work has shown that this grounding phase (Niu et al., 2011b) can be a serious bottleneck if one does not use scalable relational technology. We have learned this lesson several times.

(3) Inference and Learning. This phase is largely opaque to the user: it takes the factor graph as input, estimates the weights, performs inference, and produces the output database along with a host of diagnostic information, notably calibration plots (see Fig. 3). More precisely, the output of DeepDive is a database that contains each random variable declared by the user with its marginal probability. For example, one tuple in the relation HasSpouse might be (Barack Obama, Michelle Obama), and ideally, we hope that DeepDive outputs a high probability for this tuple.
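As a toy illustration of how marginal probabilities can be read off a factor graph by Gibbs sampling, the sketch below samples two Boolean variables linked by an AND factor. It is not DeepDive's sampler: the function name, the unary weights, and the sample counts are assumptions chosen for illustration, and a real system grounds millions of variables from database queries.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gibbs_marginals(unary, pair_weight, n_samples=20000, burn_in=1000, seed=0):
    """Estimate P(t0 = 1) and P(t1 = 1) for a two-variable factor graph.

    The unnormalised log-probability of a world (t0, t1) is assumed to be
        unary[0] * t0 + unary[1] * t1 + pair_weight * AND(t0, t1).
    """
    rng = random.Random(seed)
    state = [0, 0]
    counts = [0, 0]
    for step in range(burn_in + n_samples):
        for i in (0, 1):
            other = state[1 - i]
            # Log odds of variable i being true given the other variable.
            log_odds = unary[i] + pair_weight * other
            state[i] = 1 if rng.random() < sigmoid(log_odds) else 0
        if step >= burn_in:
            counts[0] += state[0]
            counts[1] += state[1]
    return [c / n_samples for c in counts]


# Hypothetical weights: t0 has some evidence in its favour, t1 has none,
# and the AND factor carries the weight -2 used in Figure 2(c).
marginals = gibbs_marginals(unary=[1.0, 0.0], pair_weight=-2.0)
```

With only four possible worlds, the estimates can be checked against exact enumeration; sampling becomes necessary only when the number of variables makes enumeration infeasible.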

2.1 Operations to Improve Quality in DeepDive

We describe three routine tasks that a user performs to improve a DeepDive based system.

[1] A weight is roughly the log odds, i.e., $\log \frac{p}{1-p}$, where $p$ is the marginal probability of this random variable. This is standard in Markov Logic Networks (Richardson and Domingos, 2006), on which much of DeepDive’s semantics are based.

Feature Extraction. DeepDive’s data model allows the user to use any scripting language for feature extraction. Figure 2(b) shows one such example using Python. One baseline feature that is often used in relation extraction systems is the word sequence between mention pairs in a sentence (Mintz et al., 2009b; Hoffmann et al., 2010), and Figure 2(b) shows an example of extracting this feature. The user first defines the input to the feature extractor using an SQL query, which selects all available sentences. Then the user defines a UDF that will be executed for each tuple returned by the SQL query. In this example, the UDF is a Python function that first reads a sentence from STDIN, extracts mentions from the sentence, extracts features for each mention pair, and outputs the result to STDOUT. DeepDive will then load the output of this UDF to the MentionPairFeature relation.
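A minimal sketch of such a UDF is given here, under stated assumptions: mention detection is replaced by a hypothetical fixed list of names (KNOWN_MENTIONS), tokens are split on whitespace, and output rows are tab-separated. The names ext_mentions and ext_feature mirror the helpers in Figure 2(b), but their bodies are illustrative rather than the system's actual code.

```python
import sys

# Hypothetical stand-in for a real mention detector: a fixed list of names.
KNOWN_MENTIONS = ["Barack Obama", "Michelle"]


def ext_mentions(sentence):
    """Return sorted (start, end, text) token spans of known mentions."""
    tokens = sentence.split()
    spans = []
    for name in KNOWN_MENTIONS:
        name_tokens = name.split()
        n = len(name_tokens)
        for start in range(len(tokens) - n + 1):
            if tokens[start:start + n] == name_tokens:
                spans.append((start, start + n, name))
    return sorted(spans)


def ext_feature(sentence, m1, m2):
    """Word sequence strictly between two mentions, joined by underscores."""
    tokens = sentence.split()
    return "_".join(tokens[m1[1]:m2[0]])


def featurize(sentence):
    """Yield (m1, m2, feature) rows for each pair where m1 precedes m2."""
    mentions = ext_mentions(sentence)
    for m1 in mentions:
        for m2 in mentions:
            if m1[1] <= m2[0]:
                yield (m1[2], m2[2], ext_feature(sentence, m1, m2))


def main(stream=sys.stdin, out=sys.stdout):
    """Read one sentence per line and write one feature row per mention pair."""
    for line in stream:
        for m1, m2, f in featurize(line.strip()):
            out.write("\t".join([m1, m2, f]) + "\n")


rows = list(featurize("Barack Obama and his wife Michelle thank supporters."))
```

Calling main() from a script would reproduce the STDIN-to-STDOUT contract described above; the framework is then responsible for loading the emitted rows into the MentionPairFeature relation.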

Constraints and Domain Knowledge. One way to improve a KBP system is to integrate domain knowledge. DeepDive supports this operation by allowing the user to integrate constraints and domain knowledge as correlations among random variables, as shown in Figure 2(c).

Imagine that the user wants to integrate a simple rule that says “one person is likely to be the spouse of only one person.” For example, given a single entity “Barack Obama,” this rule gives positive preference to the case where only one of (Barack Obama, Michelle Obama) and (Barack Obama, Michelle Williams) is true. Figure 2(c) shows one example of implementing this rule. The SQL query in Figure 2(c) defines a view in which each tuple corresponds to two relation candidates with the same first entity but different second entities. The function AND(t0, t1) defines the “type of correlation” among variables, and the weight “-2” defines the strength of the correlation. This rule indicates that it is less likely that both (Barack Obama, Michelle Obama) and (Barack Obama, Michelle Williams) are true (i.e., when AND(t0, t1) returns 1). Typically, DeepDive is used to learn the weights from data.
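To make the effect of the weight concrete, the following sketch enumerates the four possible worlds for the two candidate tuples and compares the probability that both are true with and without the AND factor. The unary weights of 2.0 are hypothetical values standing in for per-tuple evidence; only the pairwise weight of -2 comes from Figure 2(c).

```python
import itertools
import math


def world_probabilities(unary, pair_weight):
    """Exact distribution over (t0, t1) with one AND(t0, t1) factor."""
    scores = {}
    for t0, t1 in itertools.product((0, 1), repeat=2):
        log_score = unary[0] * t0 + unary[1] * t1 + pair_weight * (t0 and t1)
        scores[(t0, t1)] = math.exp(log_score)
    z = sum(scores.values())
    return {world: score / z for world, score in scores.items()}


# Hypothetical evidence: both spouse candidates look plausible on their own.
without_rule = world_probabilities(unary=[2.0, 2.0], pair_weight=0.0)
with_rule = world_probabilities(unary=[2.0, 2.0], pair_weight=-2.0)

# The world (1, 1) is the one in which AND(t0, t1) returns 1.
both_true_before = without_rule[(1, 1)]
both_true_after = with_rule[(1, 1)]
```

Under these assumed weights the negative factor shifts probability mass away from the world in which both tuples hold and toward the worlds in which exactly one holds, which is the soft version of the "only one spouse" rule.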

Distant Supervision. Like the MIML-RE system, DeepDive is built on top of the distant supervision assumption, allowing more data to be incorporated easily.
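The distant supervision join of Figure 2(d) can be tried out on a small in-memory database. The sketch below uses Python's sqlite3 module rather than the GreenPlum setup described earlier, and the rows involving Michelle Williams, as well as the entity link for the mention "Barack Obama", are added here purely for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE FreebaseSpouse (e1 TEXT, e2 TEXT);
CREATE TABLE MentionPairs (m1 TEXT, m2 TEXT);
CREATE TABLE EntityLinking (m TEXT, e TEXT);
INSERT INTO FreebaseSpouse VALUES ('Barack Obama', 'Michelle Obama');
INSERT INTO MentionPairs VALUES ('Barack Obama', 'Michelle');
INSERT INTO MentionPairs VALUES ('Barack Obama', 'Michelle Williams');
INSERT INTO EntityLinking VALUES ('Barack Obama', 'Barack Obama');
INSERT INTO EntityLinking VALUES ('Michelle', 'Michelle Obama');
INSERT INTO EntityLinking VALUES ('Michelle Williams', 'Michelle Williams');
""")

# A mention pair is a positive example when both mentions link to entities
# that the knowledge base lists as spouses.
POSITIVE_EXAMPLES_SQL = """
SELECT t1.m1, t1.m2
FROM FreebaseSpouse t0, MentionPairs t1, EntityLinking t2, EntityLinking t3
WHERE t1.m1 = t2.m AND t1.m2 = t3.m
  AND t0.e1 = t2.e AND t0.e2 = t3.e
"""

positive_examples = conn.execute(POSITIVE_EXAMPLES_SQL).fetchall()
```

Only the pair whose mentions link to the Freebase spouse entities is labeled positive; the pair involving Michelle Williams is left unlabeled by this query.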

As we have described, the user has at least the above three ways to improve the system and is free to use one or a combination of them to improve the system’s quality. The question we address next is, “What should the user do next to get the largest quality improvement in the KBP system?”

3 Calibration Plots in DeepDive

A DeepDive system is only as good as its features and rules. In the last two years, we have found that understanding which features to add is the most critical, but often the most overlooked, step in the process. Part of this process is the usual fine-grained error analysis; however, we have found that a macro-error analysis can be useful to guard against statistical errors and give an at-a-glance description of an otherwise prohibitively large amount of output.

In DeepDive, calibration plots are used to summarize the overall quality of the results. Because DeepDive uses a joint probability model, each random variable is assigned a marginal probability. Ideally, if one takes all the facts to which DeepDive assigns a probability score of 0.95, then 95% of these facts are correct. We believe that probabilities remove a key element: the developer reasons about features, not the algorithms underneath. This is a type of algorithm independence that we believe is critical.

DeepDive programs define one or more test sets for each relation, which are essentially a set of labeled data for that particular relation. This set is used to produce a calibration plot. Figure 3 shows an example calibration plot for another DeepDive application, PaleoDeepDive, which provides an aggregated view of how the system behaves. By reading each of the subplots, we can get a rough assessment of the next step to improve our system. We explain each component below.

As shown in Figure 3, a calibration plot contains three components: (a) accuracy; (b) # predictions (test set), which measures the number of extractions in the test set with a certain probability; and (c) # predictions (whole set), which measures the number of extractions in the whole set with a certain probability. The test set is assumed to have labels so that we can measure accuracy, while the whole set does not.

(a) Accuracy. To create the accuracy histogram, we bin each fact extracted by DeepDive on the test set by the probability score assigned to each fact; e.g., we round to the nearest value in the set k/10 for k = 1, . . . , 10. For each bin, we compute the fraction of those predictions that is correct. Ideally, this line would be on the (0,0)-(1,1) line, which means that the DeepDive-produced probability value is calibrated, i.e., it matches the test-set accuracy. For example, Figure 3(a) shows a curve for calibration. Differences in these two lines can be caused by noise in the training data, quantization error due to binning, or sparsity in the training data.

Figure 3: Illustration of calibration plots in DeepDive.

(b) # Predictions (Testing Set). We also create a histogram of the number of predictions in each bin. In a well-tuned system, the # Predictions histogram should have a “U” shape. That is, most of the extractions are concentrated at high probability and low probability. We do want a number of low-probability events, as this indicates DeepDive is considering plausible but ultimately incorrect alternatives. Figure 3(b) shows a U-shaped curve with some masses around 0.5-0.6. Intuitively, this suggests that there is some hidden type of example for which the system has insufficient features. More generally, facts that fall into bins that are not in (0,0.1) or (0.9,1.0) are candidates for improvements, and one goal of improving a KBC system is to “push” these probabilities into either (0,0.1) or (0.9,1.0). To do this, we may want to sample from these examples and add more features to resolve this uncertainty.

(c) # Predictions (Whole Set). The final histogram is similar to Figure 3(b), but illustrates the behavior of the system on the whole set, for which we do not have any labels. We can visually inspect that Figure 3(c) has a similar shape to (b); if not, this would suggest possible overfitting or some bias in the selection of the hold-out set.
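The per-bin quantities behind panels (a) and (b) can be computed in a few lines. The sketch below assumes predictions arrive as (probability, is_correct) pairs from a labeled test set; the function name and the input format are illustrative and not part of DeepDive. Note that Python's round sends exact halves to the nearest even integer, which only matters for probabilities sitting exactly on a bin boundary.

```python
def calibration_bins(predictions, n_bins=10):
    """Group (probability, is_correct) pairs into bins k / n_bins, k = 1..n_bins.

    Returns {bin_value: (count, accuracy)} for the non-empty bins.
    """
    totals = {}
    for prob, is_correct in predictions:
        # Round to the nearest k / n_bins, clamping so that k stays in 1..n_bins.
        k = min(n_bins, max(1, round(prob * n_bins)))
        count, correct = totals.get(k, (0, 0))
        totals[k] = (count + 1, correct + int(is_correct))
    return {
        k / n_bins: (count, correct / count)
        for k, (count, correct) in sorted(totals.items())
    }


# Hypothetical test-set predictions.
test_predictions = [
    (0.92, True), (0.88, True), (0.91, False),
    (0.12, False), (0.08, False), (0.52, True),
]
bins = calibration_bins(test_predictions)
```

The counts give the histogram of panel (b), and the accuracies plotted against the bin values give the curve of panel (a); running the same function on unlabeled output with a dummy label yields the counts for panel (c).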

Next, we proceed to describe our MIML-RE based KBP system; in particular, we describe the improvements to the system made since the 2013 entry.

4 Active Learning in MIML-RE

A key improvement in the 2014 KBP entry is the re-training of MIML-RE incorporating fixed labels for uncertain sentences. The approach is described in more detail in Angeli et al. (2014). We describe the process for incorporating these sentence-level statistics, and the selection criteria used for selecting sentences to annotate. This work yielded a net gain of 4.4 F1 in the evaluation.

4.1 Training with sentence-level annotations

Following Surdeanu et al. (2012), MIML-RE is trained through hard discriminative Expectation Maximization, inferring the latent z values in the E-step and updating the weights for both the z and y classifiers in the M-step. During the E-step, we constrain the latent z to match our sentence-level annotations when available.
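The constraint on the E-step can be pictured with a deliberately simplified sketch. It assumes that each mention already has a score per label (standing in for the combined judgment of the z and y classifiers, which the full MIML-RE E-step computes jointly) and that annotations are given as a map from mention index to label; both the function and these data structures are hypothetical.

```python
def hard_e_step(scores, annotations):
    """Pick one latent label per mention in an entity pair.

    scores: list of {label: score} dicts, one per mention.
    annotations: {mention_index: label} for mentions with a human label.
    """
    z = []
    for m, label_scores in enumerate(scores):
        if m in annotations:
            # Annotated mentions are clamped to the human-provided label.
            z.append(annotations[m])
        else:
            # Otherwise take the highest-scoring label (hard assignment).
            z.append(max(label_scores, key=label_scores.get))
    return z


# Hypothetical scores for two mentions of one entity pair.
mention_scores = [
    {"per:spouse": 0.7, "no_relation": 0.3},
    {"per:spouse": 0.6, "no_relation": 0.4},
]
z_labels = hard_e_step(mention_scores, annotations={1: "no_relation"})
```

In this example the second mention would have been labeled per:spouse by the model alone, but the annotation overrides it, so the M-step that follows is trained on the corrected label.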

We describe three criteria for selecting examples to annotate. The first, sampling uniformly, is a baseline for our hypothesis that intelligently selecting examples is important. For this criterion, we select mentions uniformly at random from the training set to annotate. The other two criteria rely on a metric for disagreement provided by QBC (query by committee); we describe our adaptation of QBC for MIML-RE as a preliminary to introducing these criteria.

4.2 QBC For MIML-RE

We use a version of QBC based on bootstrapping (Saar-Tsechansky and Provost, 2004). To create the committee of classifiers, we re-sample the training set with replacement 7 times and train a model over each sampled dataset. We measure disagreement on z-labels among the classifiers using a generalized Jensen-Shannon divergence (McCallum and Nigam, 1998), taking the average KL divergence of all classifier judgments.

[Figure 4 plot: precision (vertical axis) against recall (horizontal axis) for the Sample JS, High JS, Uniform, and MIML-RE models.]

Figure 4: A comparison of models trained with various selection criteria on the evaluation of Surdeanu et al. (2012), all initialized with the corresponding supervised classifier.

We first calculate the mention-level confidences. Note that $z_i^{(m)} \in M_i$ denotes the latent variable in entity pair $i$ with index $m$; $z_i^{(-m)}$ denotes the set of all latent variables except $z_i^{(m)}$:

$$
p(z_i^{(m)} \mid y_i, x_i) = \frac{p(y_i, z_i^{(m)} \mid x_i)}{p(y_i \mid x_i)} = \frac{\sum_{z_i^{(-m)}} p(y_i, z_i \mid x_i)}{\sum_{z_i^{(m)}} p(y_i, z_i^{(m)} \mid x_i)}.
$$

Notice that the denominator just serves to normalize the probability within a sentence group. We can rewrite the numerator as follows:

$$
\sum_{z_i^{(-m)}} p(y_i, z_i \mid x_i) = \sum_{z_i^{(-m)}} p(y_i \mid z_i)\, p(z_i \mid x_i) = p(z_i^{(m)} \mid x_i) \sum_{z_i^{(-m)}} p(y_i \mid z_i)\, p(z_i^{(-m)} \mid x_i).
$$

For computational efficiency, we approximate $p(z_i^{(-m)} \mid x_i)$ with a point mass at its maximum. Next, we calculate the Jensen-Shannon (JS) divergence from the $k$ bootstrapped classifiers:

$$
\frac{1}{k} \sum_{c=1}^{k} \mathrm{KL}\left(p_c(z_i^{(m)} \mid y_i, x_i) \,\|\, p_{\text{mean}}(z_i^{(m)} \mid y_i, x_i)\right) \tag{1}
$$

where $p_c$ is the probability assigned by each of the $k$ classifiers to the latent $z_i^{(m)}$, and $p_{\text{mean}}$ is the average of these probabilities. We use this metric to capture the disagreement of our model with respect to a particular latent variable. This is then used to inform our selection criteria.

| System | P | R | F1 |
|---|---|---|---|
| Mintz++ | 41.3 | 28.2 | 33.5 |
| MIML + Dist | 38.0 | 30.5 | 33.8 |
| Supervised + SampleJS | 33.5 | 35.0 | 34.2 |
| MIML + Sup | 35.1 | 35.6 | 35.5 |
| MIML + Sup + SampleJS | 39.4 | 36.2 | 37.7 |

Table 1: A comparison of the best performing supervised classifier with other systems on the 2013 development set. The top section compares the supervised classifier with prior work. The lower section highlights the improvements gained from initializing MIML-RE with a supervised classifier.

We note that QBC may be especially useful in our situation as our objective is highly nonconvex. If two committee members disagree on a latent variable, it is likely because they converged to different local optima; annotating that example could help bring the classifiers into agreement.

The second selection criterion we consider is the most straightforward application of QBC: selecting the examples with the highest JS disagreement. This allows us to compare our criterion, described next, against an established criterion from the active learning literature.
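The disagreement measure in (1) is straightforward to compute once each committee member's distribution over a mention's latent label is available. The sketch below assumes those distributions are given as equal-length lists of probabilities, one list per classifier; the function names are illustrative.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same labels."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def js_disagreement(committee):
    """Mean KL divergence of each committee member from the mean distribution."""
    k = len(committee)
    n_labels = len(committee[0])
    mean = [sum(member[j] for member in committee) / k for j in range(n_labels)]
    return sum(kl_divergence(member, mean) for member in committee) / k


# Two hypothetical committees over two labels.
agreeing = js_disagreement([[0.7, 0.3], [0.7, 0.3], [0.7, 0.3]])
disagreeing = js_disagreement([[1.0, 0.0], [0.0, 1.0]])
```

A committee whose members agree yields a value near zero, while two members that place all their mass on different labels yield log 2, the largest value possible for a committee of two. The High JS criterion then amounts to sorting mentions by this value and taking the top of the list.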

4.3 Sample by JS Disagreement

We propose a novel active learning sampling criterion that incorporates not only disagreement but also representativeness in selecting examples to annotate. Prior work has taken a weighted combination of an example’s disagreement and a score corresponding to whether the example is drawn from a dense portion of the feature space (e.g., McCallum and Nigam (1998)). However, this requires both selecting a criterion for defining density (e.g., distance metric in feature space), and tuning a parameter for the relative weight of disagreement versus representativeness.

Instead, we account for choosing representative examples by sampling without replacement proportional to the example’s disagreement. Formally, we define the probability of selecting an example $z_i^{(m)}$ to be proportional to the Jensen-Shannon divergence in (1). Since the training set is an approximation to the prior distribution over examples, sampling uniformly over the training set is an approximation to sampling from the prior probability of seeing an input x. We can view our criterion as an approximation to sampling proportional to the product of two densities: a prior over examples x, and the JS divergence mentioned above.

| Slot | Dependency Pattern |
|---|---|
| per:employee_of | ENTITY (nsubjpass)← dropped →(prep_from) {ner:/ORGANIZATION/}=FILL |
| per:country_of_death | {ner:/COUNTRY/}=FILL (nsubj)← mourns →(dobj) ENTITY |
| org:founded_by | founders →(conj_and) {ner:/PERSON/}=FILL →(nn) ENTITY |

Table 2: Some examples of learned dependency patterns used by the system. The patterns are in SemGrex format over Stanford Dependencies, where ENTITY and FILL are the entity head token and the slot fill head token respectively.
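The Sample JS criterion of Section 4.3 can be sketched as repeated weighted draws, removing each selected example before the next draw. This is one straightforward way to realize sampling without replacement proportional to disagreement, and is an assumption rather than a description of the authors' implementation; the function name and the seed are likewise illustrative.

```python
import random


def sample_by_disagreement(disagreements, n, seed=0):
    """Draw up to n distinct indices, each draw proportional to disagreement."""
    rng = random.Random(seed)
    # Examples with zero disagreement can never be drawn, so drop them up front.
    remaining = {i: d for i, d in enumerate(disagreements) if d > 0.0}
    chosen = []
    while remaining and len(chosen) < n:
        indices = list(remaining)
        weights = [remaining[i] for i in indices]
        pick = rng.choices(indices, weights=weights, k=1)[0]
        chosen.append(pick)
        del remaining[pick]
    return chosen


# Hypothetical JS disagreement values for four training mentions.
to_annotate = sample_by_disagreement([0.0, 0.5, 0.1, 0.9], n=2)
```

Because the candidates are the training mentions themselves, frequent kinds of examples are drawn more often simply by being more numerous, which is how the criterion accounts for representativeness without a separate density estimate.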

4.4 Analysis of Selection Criteria

It’s worth emphasizing at least three results from this line of work. First, the selection criterion for annotating examples is very important for performance. Figure 4 shows the performance of the model using various selection criteria; we note that High JS and Sample JS noticeably outperform the uniform criterion. Second, MIML-RE is very sensitive to initialization. To illustrate, the gains in Figure 4 disappear entirely if the model is initialized with Mintz++ (as in the original paper); gains only appear if the model is initialized from a supervised classifier. Lastly, it’s worth noting that this supervised classifier, used on its own, performs surprisingly well on the 2013 development set. Table 1 shows the system’s performance using distant supervision, vanilla MIML-RE (33.8 F1 on 2013), and a supervised classifier trained from the SampleJS examples (34.2 F1). This suggests that it may be reasonable for newcomers to the task to train a relatively simple classifier, and perhaps focus on downstream processes; although, of course, the best classifiers are still those which incorporate the examples into MIML-RE (37.7 F1).

5 Learning Patterns to Augment MIML-RE

To extract slot fillers, we also used two types of patterns in our system: surface word lexico-syntactic patterns (such as “X was married to Y”) and dependency patterns (such as “X (nsubj) ← marry → (dobj) Y”). Patterns are often useful for extracting slots with high precision, albeit with low recall. Usually recall is increased by iteratively learning patterns, using the extractions learned in the previous iteration as labeled data. The lexico-syntactic patterns were hand written and the dependency patterns were both hand written and learned.

We learned the dependency patterns for each slot independently. For each slot, first, the known entities and slot fillers were matched to sentences as described above. For each pair of entity head word and slot filler head word in a matched sentence, we extracted the shortest path between them in the dependency tree of the sentence as the candidate pattern. If either the entity or the slot filler was tagged with a named entity tag, we included the restriction in the pattern. The candidate patterns were weighted by the measure:

$$
\frac{\mathit{Pos}}{\sqrt{\mathit{Neg} + \mathit{All} + 2}}
$$

where Pos is the number of times the pattern matched the correct pair of entity and slot filler, Neg is the number of times the pattern extracted a pair of entity and slot filler that were related by another slot, and All is the total number of times the pattern matched the sentences. Patterns above a certain threshold were selected. We learned a total of 1073 patterns for all slots. Due to lack of time, we ran the system on a small sample of the training data for one iteration. In future, we plan to extend this to run pattern learning for multiple iterations and improve pattern scoring using the approach of Gupta and Manning (2014).
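The weighting and thresholding step can be sketched as follows. The counts, the pattern strings, and the threshold of 1.0 are made up for illustration; the text does not state the threshold that was actually used.

```python
import math


def pattern_score(pos, neg, all_matches):
    """Pos / sqrt(Neg + All + 2), the candidate pattern weight."""
    return pos / math.sqrt(neg + all_matches + 2)


def select_patterns(stats, threshold):
    """Keep patterns scoring above threshold.

    stats: {pattern: (pos, neg, all_matches)}.
    """
    return sorted(
        pattern for pattern, counts in stats.items()
        if pattern_score(*counts) > threshold
    )


# Hypothetical match counts for two candidate patterns of one slot.
candidate_stats = {
    "ENTITY (nsubj)<- marry ->(dobj) FILL": (8, 1, 13),
    "ENTITY (nsubj)<- meet ->(dobj) FILL": (2, 6, 40),
}
selected = select_patterns(candidate_stats, threshold=1.0)
```

The square root in the denominator penalizes patterns that fire often without being correct, while the constant 2 keeps patterns with very few matches from receiving inflated scores.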

Some examples of the learned dependency patterns are given in Table 2.

6 Conclusion

We have described Stanford’s two systems in the 2014 KBP competition: one based on DeepDive, and another around the MIML-RE relation extractor. We hope to convey at least two central messages. First, there is an undervalued benefit to scaling computing resources in proportion to the size of the task being solved. That is to say, using DeepDive to effectively harness large computers to quickly iterate on features and debug a KBP system is very valuable for creating top-performing systems. Second, carefully annotating selected difficult examples can, with minimal cost and human intervention, yield large improvements in relation extraction accuracy.

References

Gabor Angeli, Julie Tibshirani, Jean Y. Wu, and Christopher D. Manning. 2014. Combining distant and partial supervision for relation extraction. In EMNLP.

Raphael Hoffmann, Congle Zhang, and Daniel S. Weld. 2010. Learning 5000 relational extractors. In ACL.

Andrew McCallum and Kamal Nigam. 1998. Employing EM and pool-based active learning for text classification. In ICML.

Mike Mintz, Steven Bills, Rion Snow, and Dan Jurafsky. 2009a. Distant supervision for relation extraction without labeled data. In ACL.

Mike Mintz, Steven Bills, Rion Snow, and Dan Jurafsky. 2009b. Distant supervision for relation extraction without labeled data. In ACL.

Feng Niu, Christopher Re, AnHai Doan, and Jude Shavlik. 2011a. Tuffy: Scaling up statistical inference in Markov logic networks using an RDBMS. Proceedings of the VLDB Endowment, 4(6):373–384.

Feng Niu, Christopher Re, AnHai Doan, and Jude Shavlik. 2011b. Tuffy: Scaling up statistical inference in Markov logic networks using an RDBMS. PVLDB.

Feng Niu, Ce Zhang, Christopher Re, and Jude W. Shavlik. 2012. DeepDive: Web-scale knowledge-base construction using statistical learning and inference. In VLDS, pages 25–28.

Matthew Richardson and Pedro Domingos. 2006. Markov logic networks. Machine Learning.

Maytal Saar-Tsechansky and Foster Provost. 2004. Active sampling for class probability estimation and ranking. Machine Learning, 54(2):153–178.

Mihai Surdeanu, Julie Tibshirani, Ramesh Nallapati, and Christopher D. Manning. 2012. Multi-instance multi-label learning for relation extraction. In EMNLP.