Software Multiagent Systems: Lecture 13

description

Transcript of Software Multiagent Systems: Lecture 13

Teamwork

When agents act together

Understanding Teamwork

Ordinary traffic

Driving in a convoy

Two friends A & B together drive in a convoy B is secretly following A

Pass play in Soccer

Contracting with a software company

Orchestra

Understanding Teamwork

• Not just a union of simultaneous coordinated actions• Different from contracting

Together

Joint Goal

Co-labor Collaborate

Why Teamwork?Why not: Master-Slave? Contracts?

Why Teams

Robust organizations

Responsibility to substitute

Mutual assistance

Information communicated to peers

Still capable of structure (not necessarily flat)

Subteams, subsubteams

Variations in capabilities and limitations

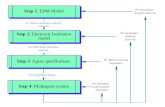

Approach

Theory

Practical teamwork architectures

Taking a step back…

Key Approaches in Multiagent Systems

Market mechanismsAuctions

Distributed Constraint Optimization (DCOP)

x1

x2

x3 x4

Belief-Desire-Intention (BDI) Logics and Psychology

(JPG p (MB p) ۸ (MG p) ۸ (Until [(MB p) ۷ (MB p)] (WMG p))

Distributed POMDP

HybridDCOP/ POMDP/ AUCTIONS/ BDI

• Essential in large-scale multiagent teams

• Synergistic interactions

Key Approaches for Multiagent Teams

Markets

BDI

Dis POMDPs

Local interactions

Uncertainty Localutility

Human usability& plan structure

DCOP

Markets

BDI

Dis POMDPs

Local interactions

Uncertainty Localutility

Human usability& plan structure

DCOP

BDI-POMDPHybrid

Distributed POMDPs

Three papers on the web pages:What to read:

Ignore all the proofsIgnore complexity resultsJAIR article: the model and the results at the endUnderstand fundamental principles

Domain: Teamwork for Disaster Response

Multiagent Team Decision Problem (MTDP)

MTDP: < S, A, P, R>

S: s1, s2, s3…

Single global world state, one per epoch

A: domain-level actions; A = {A1, A2, A3,…An}

Ai is a set of actions for each agent i

Joint action

MTDP

P: Transition function:

P(s’ | s, a1, a2, …an)

RA: Reward

R(s, a1, a2,…an)

One common reward; not separate

Central to teamwork

MTDP (cont’d)

: observations

Each agent: different finite sets of possible observations

O: probability of observation

O(destination-state, joint-action, joint-observation)

P(o1,o2..om | a1, a2,…am, s’)

Simple Scenario

Cost of action: -0.2

Must fight fires together

Observe own location and fire status

+20 +40

MTDP Policy

he problem: Find optimal JOINT policies

One policy for each agent

i: Action policy

Maps belief state into domain actions

(Bi A) for each agent

Belief state: sequence of observations

MTDP Domain Types

Collectively partially observable: general case, no assumptions

Collectively observable: Team (as a whole) observes state

For all joint observations, there is a state s, such that, for all other states s’ not equal to s, Pr (o1,o2…on | s’) = 0

Pr (o1, o2, …on | s ) = ?

Pr (s | o1,o2..on) = ?

Individually observable: each agent observes the state

For all individual observations, there is a state s, such that for all other states s’ not equal to s, Pr (oi | s’) = 0

From MTDP to COM-MTDP

Two separate actions: communication vs domain actions

Two separate reward types:

Communication rewards and domain rewards

Total reward: sum two rewards

Explicit treatment of communication

Analysis

Communicative MTDPs(COM-MTDPs)

: communication capabilities, possible “speech acts”

e.g., “I am moving to fire1.”

R: communication cost (over messages)

e.g., saying, “I am moving to fire1,” has a cost

RWhy ever communicate?

Two Stage Decision Process

Agent

World

Observes

Actions

SE1 P1b1

P2SE2

b2

Communicationsto and from

• P1: Communicationpolicy

• P2: Action policy

•Two state estimators

• Two beliefState updates

COM-MTDP Continued

Belief state (each Bi history of observations, Communication)

Two stage belief update

Stage 1: Pre-communication belief state for agent i (updates just from observations)

i0i

1i t-1t-1i

t

Stage 2: Post-communication belief state for i (updates from observations and communication)

i0i

1i t-1t-1i

tt

Cannot create probability distribution over states

COM-MTDP Continued

he problem: Find optimal JOINT policies

One policy for each agent

: Communication policy

Maps pre-communication belief state into message

(Bi for each agent

A: Action policy

Maps post-communication belief state into domain actions

(Bi A) for each agent

More Domain Types

General Communication: no assumptions on R

Free communication: R(s,) = 0

No communication: R(s,) is negatively infinite

Teamwork Complexity Results

Individual

observability

Collective

observability

Collective

Partial obser.

No

communication

P-complete NEXP

complete

NEXP

complete

General

communication

P-complete NEXP

complete

NEXP

complete

Full

communication

P-complete P-complete PSPACE

complete

Classifying Different Models

Individual

observability

Collective

observability

Collective

Partial obser.

No

communication

MMDP DEC-POMDP

POIPSG

General

communication

XUAN-LESSER

COM-MTDP

Full

communication

True or False

If agents communicated all their observations at each step then the distributed POMDP would be essentially a single agent POMDP

In distributed POMDPs, each agent plans its own policy

Solving Distributed POMDPs with two agents is of same complexity

as solving two separate individual POMDPs

Algorithms

NEXP-complete

No known efficient algorithms

Brute force search

1. Generate space of possible joint policies

2. For each policy in policy space

3. Evaluate over finite horizon T

Complexity:

No. of policies Cost of evaluation

Locally optimal search

Joint equilibrium based search for policiesJESP

Nash Equilibrium in Team Games

Nash equilibrium vs Global optimal reward for the team

3,6 7,1

5,1 8,2

6,0 6,2

x

y

z

u v

A

B

9 8

6 10

6 8

x

y

z

u v

A

B

JESP: Locally Optimal Joint Policy

9 5 8

6 7 10

6 3 8

x

y

z

u v

A

Bw

• Iterate keeping one agent’s policy fixed• More complex policies the same way

Joint Equilibrium-based Search

Description of algorithm:

1. Repeat until convergence

2. For each agent i

3. Fix policy of all agents apart from i

4. Find policy for i that maximizes joint reward

Exhaustive-JESP:

brute force search in policy space of agent I

Expensive

JESP: Joint Equilibrium Search (Nair et al, IJCAI 03)

Repeat until convergence to local equilibrium, for each agent K:

Fix policy for all except agent KFind optimal response policy for agent K

Optimal response policy for K, given fixed policies for others in MTDP:

Transformed to a single-agent POMDP problem:

“Extended” state defined as not as

Define new transition function

Define new observation function

Define multiagent belief state

Dynamic programming over belief states

Fast computation of optimal response

Extended State, Belief StateSample progression of beliefs: HL and HR are observations

a2: Listen

Run-time Results

Method 2 3 4 5 6 7

Exhaustive-JESP 10 317800 - - - -

DP-JESP 0 0 20 110 1360 30030

Is JESP guaranteed to find the global optimal?

Random restarts

9 5 8

6 7 10

6 3 8

Not All Agents are Equal

Scaling up Distributed POMDPs for Agent Networks

Runtime

POMDP vs. distributed POMDP

Distributed POMDPs more complex

Joint transition and observation functions

Better policy

Free communication = POMDP

Less dependency = lower complexity

BDI vs. distributed POMDP

BDI teamwork Distributed POMDP teamwork

Explicit joint goal Explicit joint reward

Plan/organization hierarchies Unstructured plans/teams

Explicit commitments Implicit commitments

No costs / uncertainties Costs & uncertainties included