This paper is included in the Proceedings of the 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI ’16).

March 16–18, 2016 • Santa Clara, CA, USA

ISBN 978-1-931971-29-4

Open access to the Proceedings of the 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI ’16) is sponsored by USENIX.

Social Hash: An Assignment Framework for Optimizing Distributed Systems Operations on Social Networks

Alon Shalita, Brian Karrer, Igor Kabiljo, Arun Sharma, Alessandro Presta, and Aaron Adcock, Facebook; Herald Kllapi, University of Athens; Michael Stumm, University of Toronto

https://www.usenix.org/conference/nsdi16/technical-sessions/presentation/shalita


Social Hash: an Assignment Framework for Optimizing Distributed Systems Operations on Social Networks

Alon Shalita†, Brian Karrer†, Igor Kabiljo†, Arun Sharma†, Alessandro Presta†, Aaron Adcock†, Herald Kllapi∗, and Michael Stumm§

†Facebook {alon,briankarrer,ikabiljo,asharma,alessandro,aadcock}@fb.com

∗University of Athens [email protected]

§University of Toronto [email protected]

Abstract

How objects are assigned to components in a distributed system can have a significant impact on performance and resource usage. Social Hash is a framework for producing, serving, and maintaining assignments of objects to components so as to optimize the operations of large social networks, such as Facebook’s Social Graph. The framework uses a two-level scheme to decouple compute-intensive optimization from relatively low-overhead dynamic adaptation. The optimization at the first level occurs on a slow timescale, and in our applications is based on graph partitioning in order to leverage the structure of the social network. The dynamic adaptation at the second level takes place frequently to adapt to changes in access patterns and infrastructure, with the goal of balancing component loads.

We demonstrate the effectiveness of Social Hash with two real applications. The first assigns HTTP requests to individual compute clusters with the goal of minimizing the (memory-based) cache miss rate; Social Hash decreased the cache miss rate of production workloads by 25%. The second application assigns data records to storage subsystems with the goal of minimizing the number of storage subsystems that need to be accessed on multi-get fetch requests; Social Hash cut the average response time in half on production workloads for one of the storage systems at Facebook.

1 Introduction

Almost all of the user-visible data and information served up by the Facebook app is maintained in a single directed graph called the Social Graph [2, 34, 35]. Friends, Checkins, Tags, Posts, Likes, and Comments are all represented as vertices and edges in the graph. As such, the graph contains billions of vertices and trillions of edges, and it consumes many hundreds of petabytes of storage space.

The information presented to Facebook users is primarily the result of dynamically generated queries on the Social Graph. For instance, a user’s home profile page contains the results of hundreds of dynamically triggered queries. Given the popularity of Facebook, the Social Graph must be able to service well over a billion queries a second.

The scale of both the graph and the volume of queries makes it necessary to use a distributed system design for implementing the systems supporting the Social Graph. Designing and implementing such a system so that it operates efficiently is non-trivial.

A problem that repeatedly arises in distributed systems that serve large social networks is one of assigning objects to components; for example, assigning user requests to compute servers (HTTP request routing), or assigning data records to storage subsystems (storage sharding). How such assignments are made can have a significant impact on performance and resource usage. Moreover, the assignments must satisfy a wide range of requirements: e.g., they must (i) be amenable to quick lookup, (ii) respect component size constraints, (iii) be able to adapt to changes in the graph, usage patterns and hardware infrastructure, while keeping the load well balanced, and (iv) limit the frequency of assignment changes to prevent excess overhead.

The relationship between the data of the social net-work and the queries on the social network is m : n —a query may require several data items and a data itemmay be required by several queries. This makes findinga good assignment of objects to components non-trivial;finding an optimal solution for many objective functionsis NP Hard [6]. Moreover, a target optimization goal,captured by an objective function, may conflict with the

456 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI ’16) USENIX Association

Components (e.g., compute clusters or storage subsystems)

Groups

Objects (e.g., data records or HTTP requests)

static

assign

men

t dy

namic

assign

men

t

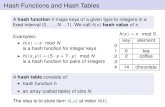

Figure 1: Social Hash Abstract Framework

goal of keeping the loads on the components reasonablywell balanced. In the next subsection, we propose a two-level framework that allows us to trade off these two con-flicting objectives.

Social Hash Framework

We have developed a general framework that accommodates the HTTP request routing and storage sharding examples mentioned above, as well as other variants of the assignment problem. In our Social Hash framework, the assignment of objects (such as users or data records) to components (such as compute clusters or storage subsystems) is done in two steps. (See Fig. 1.)

In the first step, each object is assigned to a group, where groups are conceptual entities representing clusterings of objects. Importantly, there are usually many more groups than components. This assignment is based on optimizing a given, scenario-dependent, objective function. For example, when assigning HTTP requests to compute clusters, the objective function may seek to minimize the (main memory) cache miss rate; and when assigning data records to disk subsystems, the objective function may seek to minimize the number of disk subsystems that must be contacted for multi-get queries. Because this optimization is typically computationally intensive, objects are re-assigned to groups only periodically and offline (e.g., daily or weekly). Hence, we refer to this as the static assignment step.

In the second step, each group is assigned to a component. This second assignment is based on inputs from system monitors and system administrators so as to rapidly and dynamically respond to changes in the system and workload. It is able to accommodate components going on or offline, and it is responsible for keeping the components’ loads well balanced. Because the assignments at this level can change in real time, we refer to this as the dynamic assignment step.

A key attribute of our framework is the decoupling of optimization in the static assignment step, and dynamic adaptation in the dynamic assignment step. Our solutions to the assignment problem rely on being able to beneficially group together relatively small, cohesive sets of objects in the Social Graph. In the optimizations performed by the static assignment step, we use graph partitioning to extract these sets from the Social Graph or from prior access patterns. Optimization methods other than graph partitioning could be used interchangeably, but graph partitioning is expected to be particularly effective in the context of social networks, because most requests are social in nature where users that are socially close tend to consume similar data. The Social in Social Hash reflects this essential idea of grouping socially similar objects together.

Contributions

This paper describes the Social Hash framework for assigning objects to components given scenario-dependent optimization objectives, while satisfying the requirements of fine-grained load balancing, assignment stability, and fast lookup in the context of practical difficulties presented by changes in the workload and infrastructure.

The Social Hash framework and the two applications described in this paper have been in production use at Facebook for over a year. Over 78% of Facebook’s “stateless” Web traffic routing occurs with this framework, and the storage sharding application involves tens of thousands of storage servers. The framework has also been used in other settings (e.g., to distribute vertices in a graph processing system, and to reorder data to improve compression rates). We do not describe these additional applications in this paper.

The three most important contributions we make in this paper are:

1. the two-step assignment hierarchy of our framework that decouples (a) optimization on the Social Graph or previous usage patterns from (b) adaptation to changes in the workload and hardware infrastructure;

2. our use of graph partitioning to exploit the structure of the social network to optimize HTTP routing in very large distributed systems;

3. our use of query history to construct bipartite graphs that are then partitioned to optimize storage sharding.

With respect to (1), the use of a multi-level scheme for allocating resources in distributed systems is not new, not even when used with graph partitioning [33]. In particular, some multi-tenant resource allocation schemes have used approaches that are in many respects similar to the one being proposed here [19, 26, 27, 28]. However, the specifics of our approach, especially as they relate to Facebook’s operating environment and workload, are sufficiently interesting and unique to warrant a dedicated discussion and analysis. Regarding (2), edge-cut based graph partitioning techniques have been used for numerous optimization applications, but to the best of our knowledge not for making routing decisions to reduce cache miss rates. Similarly, for (3), graph partitioning has previously been applied to storage sharding [33], but partitioning bipartite graphs based on prior access patterns is, as far as we know, novel.

We show that the Social Hash framework enables significant performance improvements as measured on the production Social Graph system using live workloads. Our HTTP request routing optimization cut the cache miss rate by 25%, and our storage sharding optimization cut the average response latency in half.

2 Two motivating example applications

In this section, we provide more details of the two examples we mentioned in the Introduction. We discuss and analyze these applications in significantly greater detail in later sections.

HTTP request routing optimization. The purpose of HTTP request routing is to assign HTTP requests to compute clusters. When a cluster services a request, it fetches any required data from external storage servers, and then caches the data in a cluster-local main memory-based cache, such as TAO [2] or Memcache [24], for later reuse by other requests. For example, in a social network, a client may issue an HTTP request to generate the list of recent posts by a user’s friends. The HTTP request will be routed to one of several compute clusters. The server will fetch all posts made by the user’s friends from external databases and cache the fetched data. How HTTP requests are assigned to compute clusters will affect the cache hit rate (since a cached data record may be consumed by several queries). It is therefore desirable to choose an HTTP request assignment scheme which assigns requests with similar data requirements to the same compute cluster.

Storage sharding optimization. The purpose of storage sharding is to distribute a set of data records across several storage subsystems. A query which requires a certain record must communicate with the unique host that serves that record.¹ A query may consume several records, and a record may be consumed by several queries. For example, if the dataset consists of recent posts produced by all the users, a typical query might fetch the recent posts produced by a user’s friends.

¹ To simplify our discussion, we disregard the fact that data is typically replicated across multiple storage servers.

The assignment of data records to storage subsystems determines the number of hosts a query needs to communicate with to obtain the required data. A common optimization is to group requests destined to the same storage subsystem and issue a single request for all of them. Additionally, since requests to different storage subsystems are processed independently, they can be sent in parallel. As a result, the latency of the slowest request will determine the latency of a multi-get query, and the more hosts a query needs to communicate with, the higher the expected latency (as we show in Section 6.1). It is thus desirable to choose a data record assignment scheme that collocates the data required by similar queries within a small number of storage subsystems.
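As a rough illustration of the quantity being minimized, the sketch below counts how many storage subsystems a multi-get query must contact under a given record-to-host assignment. The function name `fanout`, the record names, and the two toy assignments are assumptions made for illustration only.

```python
from typing import Dict, Hashable, Iterable


def fanout(query_records: Iterable[Hashable],
           record_to_host: Dict[Hashable, int]) -> int:
    """Number of distinct storage subsystems a multi-get query must contact."""
    return len({record_to_host[r] for r in query_records})


# Toy data (hypothetical): six records spread over three hosts in two ways.
scattered = {"a": 0, "b": 1, "c": 2, "d": 0, "e": 1, "f": 2}
collocated = {"a": 0, "b": 0, "c": 1, "d": 1, "e": 2, "f": 2}

# A query for the recent posts of two friends whose records are "a" and "b".
query = ["a", "b"]
scattered_fanout = fanout(query, scattered)
collocated_fanout = fanout(query, collocated)
```

Because the per-host requests are issued in parallel, the query completes when the slowest one returns, so an assignment that lowers the fan-out leaves fewer chances of hitting a slow host.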

3 The assignment problem

Assigning objects to system components is a challenging part of scaling an online distributed system. In this section, we abstract the essential features of our two motivating examples to formulate the problem we solve in this paper.

3.1 Requirements

We have the following requirements:

• Minimal average query response time: User satisfaction can improve with low query response times.

• Load balanced components: The better load-balanced the components, the higher the efficiency of the system; a poorly load-balanced system will reach its capacity earlier and in some cases may lead to increased latencies.

• Assignment stability: Assignments of objects to components should not change too frequently in order to avoid excessive overhead. For example, reassigning a query from one cluster to another may lead to extra (cold) cache misses at the new cluster.

• Fast lookup: Low latency lookup of the object-component assignment is important, given the online nature of our target distributed system.

3.2 Practical challenges

Meeting the requirements listed above is challenging for a variety of reasons:

• Scale: The assignment problem typically requires assigning a large number of objects to a substantially smaller number of components. The combinatorial explosion in the number of possible assignments prevents simple optimization methods from being effective.

• Effects of similarity on load balance: Colocating similar objects usually results in higher load imbalances than when colocating dissimilar objects. For example, similar users likely have similar hours of activity, browsing devices, and favorite product features, leading to load imbalance when assigning similar users to the same compute clusters.

• Heterogeneous and dynamic set of components: Components are often heterogeneous and thus support different loads. Further, the desired load on each component can change over time; e.g., due to hardware failure. Finally, the set of components will change over time as new hardware is introduced and old hardware removed.

• Dynamic workload: The relationship between data and queries can change over time. A previously rarely accessed data record could become popular, or new types of queries could start requesting data records that were previously not accessed. This can happen, for example, if friendship ties in the network are introduced or removed, or if product features change their data consumption pattern.

• Addition and removal of objects: Social networks change and grow constantly, so the set of objects that must be assigned changes over time. For example, users may join or leave the service.

The magnitude and relative importance of these practical challenges will differ depending on the distributed system being targeted. For Facebook, the scale is enormous; similar users do have similar patterns; and heterogeneous hardware is prevalent. On the other hand, changes to the graph occur at a (relatively) modest rate (in part because we often only consider subgraphs of the Social Graph); and the rate of hardware failures is reasonably constant and predictable.

4 Social Hash Framework

In this section, we propose a framework called the Social Hash Framework which comprises a solution to the assignment problem and, moreover, addresses the practical challenges listed above.

In Section 1 we introduced the abstract framework with objects at the bottom, (abstract) groups in the middle, and components at the top. Recall that objects are queries, users, or data records, etc., and components are compute clusters, or storage subsystems, etc.

Objects are first assigned to groups in an optimization-based static assignment that is updated on a slow timescale of a day to a week. Groups are then assigned to components using an adaptation-based dynamic assignment that is updated on a much faster timescale. Dynamic assignment is used to keep the system load-balanced despite changes in the workload or changes in the underlying infrastructure. This two-level design is intended to accommodate the disparate requirements and challenges of efficiently operating a huge social network, as described in Section 3.

Below, we give more concrete details on the abstract framework, how it is implemented, and how it is used. In Sections 5 and 6 we will become even more concrete and present specific implementation issues for our two examples. We begin by presenting our rationale for using a two-level design.

4.1 Rationale

Our two-level approach for assigning objects to components is motivated by the observation that there is a conflict between the objectives of optimization and adaptation. In theory, one could assign objects to components directly, resulting in only one assignment step. However, this would not work well in practice because of difficulties adapting to changes: as mentioned, component loads often change unpredictably; components are added or removed from the system dynamically; and the similarity of objects that are naturally grouped together for optimization leads to unbalanced utilization of resources. Waiting to rerun the assignment algorithm would leave the system in a suboptimal state for too long, and changing assignments on individual objects without re-running the assignment algorithm would also be suboptimal.

An assignment framework must therefore address both the optimization and adaptation objectives, and it must offer enough flexibility to be able to shift emphasis between these competing objectives at will. With a two-level approach, the static level optimizes the assignment to groups where, from the point of view of optimization, the group is treated as a virtual component. The dynamic level adapts to changes by assigning groups to components. Multiple groups may be assigned to the same component; however, all objects in the same group are guaranteed to be assigned to the same component. (See Figure 1.) As such, what is particularly propitious about our architecture is that dynamic reassignment of groups to components does not negate the optimization step because objects in a group remain collocated to the same component, even after reassignment.


We are able to seamlessly shift emphasis between static optimization and dynamic adaptation by means of the parameter n, the ratio of number of groups to number of components; that is, n := |G| / |C|. When n = 1, the emphasis is entirely on the static optimization. There is a 1 : 1 correspondence between groups and components. As noted above, this may not work well for some applications because it may not be sufficiently adaptive. When n ≫ 1, we trade off optimization for increased adaptation. When n is too large, the optimization quality may be severely degraded, and the overhead of dynamic assignment may be prohibitive. Clearly, the choice of n, and thus the tradeoff between optimization and adaptation, is best selected on a per-application basis; as we show in later sections, some applications require less aggressive adaptation than others, allowing more emphasis to be placed on optimization.

4.2 Framework Overview

In this subsection, we describe the main elements of the Social Hash framework, as depicted in Fig. 2: the static assignment algorithm, the dynamic assignment algorithm, the lookup method, and the missing key assignment. In the discussion that follows it is useful to note that objects are uniquely identified by a key.

The static assignment algorithm generates a static mapping from objects to groups using the following input: (i) a context dependent graph, which in our work can be either a unipartite graph (e.g., friendship graph) or a bipartite graph based on access logs (e.g., relating queries and accessed data records); (ii) type of object that is to be assigned to groups (e.g., data records, users, etc.); (iii) an objective function; (iv) number of groups; and (v) permissible imbalance between groups. The output of the static partitioning algorithm is a hash table of (key, group) pairs, indexed by key. We refer to this hash table as the Social Hash Table.²

The dynamic assignment uses the following input: (i) current component loads, (ii) the desired maximum load per component, and possibly (iii) the historical loads per group. The desired load for each component is provided by system operators and monitoring systems, and the historical loads induced by each group can be derived from system logs. As the observed and desired loads change over time, the dynamic assignment shifts groups among components to balance the load. The output of the dynamic assignment is a hash table of (group, component) pairs, called the Assignment Table.²

² In practice, any key-value store that supports fast lookups can be used. We describe it as a hash table for ease of comprehension.

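[Diagram labels: a lookup request supplies a key to the Social Hash Table, which returns group g; g indexes the Assignment Table, which returns component c; a failed key lookup goes to missing key assignment. Graph partitioning (inputs: graph, specifications) produces the Social Hash Table; dynamic assignment (inputs: monitoring info, operator console) produces the Assignment Table.]

Figure 2: Social Hash Architecture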

When a client wishes to look up which component an object has been assigned to, it will do so in two steps: first the object key is used to index into the Social Hash Table to obtain the target group, g; second, g is used to index into the Assignment Table to obtain the component id c. This is shown in Figure 2.
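The two-step lookup can be sketched as follows. This is a minimal illustration that uses in-memory dictionaries as stand-ins for the Social Hash Table and the Assignment Table; the keys, group ids, and cluster names are hypothetical.

```python
from typing import Dict

# Hypothetical stand-ins for the two tables (any fast key-value store would do).
social_hash_table: Dict[str, int] = {"user:1": 7, "user:2": 7, "user:3": 12}
assignment_table: Dict[int, str] = {7: "cluster-A", 12: "cluster-B"}


def lookup_component(key: str) -> str:
    """Resolve key -> group -> component."""
    g = social_hash_table[key]   # step 1: static assignment (object to group)
    c = assignment_table[g]      # step 2: dynamic assignment (group to component)
    return c


before = lookup_component("user:1")

# A dynamic reassignment touches only the small Assignment Table. Every object
# in group 7 moves together, so the grouping chosen by the optimizer survives.
assignment_table[7] = "cluster-B"
after = (lookup_component("user:1"), lookup_component("user:2"))
```

Keeping the two tables separate is what lets the dynamic level move load without recomputing, or even reading, the much larger object-to-group mapping.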

Because the Social Hash Table is constructed only periodically, it is possible that a target key is missing in the Social Hash Table; for example, the key could refer to a new user or a user that has not had any activity in the recent past (and hence is not in the access log). When an object key is not found in the Social Hash Table, then the Missing Key Assignment rule does the exception handling and assigns the object to a group on the fly. The primary requirement is that these exceptional assignments are generated in a consistent way so that subsequent lookups of the same key return the same group. Eventually these new keys will be incorporated into the Social Hash Table by the static partitioning algorithm.
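The text only requires that the missing key rule be consistent; it does not say which rule is used. One simple possibility, shown here purely as an assumption, is to hash the key with a stable hash function and reduce it modulo the number of groups. The function names and the choice of SHA-256 are illustrative.

```python
import hashlib
from typing import Dict, Optional


def missing_key_group(key: str, num_groups: int) -> int:
    """Deterministically map an unseen key to a group (assumed rule)."""
    # A stable digest is used instead of Python's built-in hash(), which is
    # randomized per process for strings and would break consistency.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_groups


def lookup_group(key: str, social_hash_table: Dict[str, int],
                 num_groups: int) -> int:
    """Return the group from the table, falling back to the missing key rule."""
    g: Optional[int] = social_hash_table.get(key)
    if g is None:
        g = missing_key_group(key, num_groups)
    return g
```

A rule of this kind ignores the social structure, so the fallback groups are no better than random; that is acceptable only because such keys are folded into the Social Hash Table at the next static run.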

4.3 Static assignment algorithm

We use graph partitioning algorithms to partition objects into groups in the static assignment step. Graph partitioning algorithms have been well-studied [3], and a number of graph partitioning frameworks exist [5, 18]. However, social network graphs, like Facebook’s Social Graph, can be huge compared to what much of the existing literature contemplates. As a result, an approach is needed that is amenable to distributed computation on distributed memory systems. We built our custom graph partitioning solution on top of the Apache Giraph graph processing system [1], in part because of its ability to partition graphs in parallel; other graph processing systems could have also potentially been used [10, 11, 20].

The basic strategy in obtaining good static assignments is the following graph partitioning heuristic. We assume the algorithm begins with an initial (weight-)balanced assignment of objects to groups represented as pairs (v, g_v), where v denotes an object and g_v denotes the group to which v is initially assigned. Next, for each v, we record the group g*_v that gives the optimal assignment for v to minimize the objective function, assuming all other assignments remain the same. This step is repeated for each object to obtain a list of pairs (v, g*_v). Each object can be processed in parallel. Finally, via a swapping algorithm, as many reassignments of v to g*_v as possible are carried out under the constraint that group sizes remain unchanged within each iteration; the swapping can again be done in parallel as long as it is properly coordinated (in our implementation with a centralized coordinator). This overall process is then repeated with the new assignments taken as the initial condition for the next iteration. The above process is iterated on until it converges or reaches a limit on the number of iterations.
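The heuristic above can be sketched on a single machine as follows. This is a simplified illustration under stated assumptions, not the Giraph implementation: the objective is taken to be edge-cut on a unipartite graph, every object has unit weight, and movers between a pair of groups are paired in arbitrary order rather than by how much each move improves the objective. All function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Graph = Dict[int, List[int]]   # adjacency lists
Assignment = Dict[int, int]    # vertex v -> group g_v


def best_group(v: int, graph: Graph, assign: Assignment) -> int:
    """g*_v: the group holding most of v's neighbors, all else held fixed."""
    counts = Counter(assign[u] for u in graph[v])
    if not counts:
        return assign[v]
    top = max(counts.values())
    if counts[assign[v]] == top:   # on ties, stay put to preserve stability
        return assign[v]
    return min(g for g, c in counts.items() if c == top)


def refine_once(graph: Graph, assign: Assignment) -> Tuple[Assignment, int]:
    """One iteration: record all targets, then swap so group sizes stay fixed."""
    wants: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for v in sorted(graph):        # each v could be processed in parallel
        target = best_group(v, graph, assign)
        if target != assign[v]:
            wants[(assign[v], target)].append(v)
    new_assign = dict(assign)
    moved = 0
    for (src, dst), movers in list(wants.items()):
        if src < dst:              # handle each unordered pair of groups once
            for a, b in zip(movers, wants.get((dst, src), [])):
                new_assign[a], new_assign[b] = dst, src
                moved += 2
    return new_assign, moved


def partition(graph: Graph, assign: Assignment, max_iters: int = 10) -> Assignment:
    """Iterate until no vertex moves or the iteration limit is reached."""
    for _ in range(max_iters):
        assign, moved = refine_once(graph, assign)
        if moved == 0:
            break
    return assign


# Toy graph (hypothetical): two triangles joined by the edge 2-3.
triangles: Graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3],
                    3: [2, 4, 5], 4: [3, 5], 5: [3, 4]}
initial: Assignment = {0: 0, 1: 1, 2: 0, 3: 1, 4: 0, 5: 1}
final = partition(triangles, initial)
```

Swapping in matched pairs is what keeps every group at its original size. Because all targets are computed against the old assignment, a single iteration is not guaranteed to improve the objective, which is one reason the process is repeated.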

The initial balanced assignment required by the static assignment algorithm is either obtained via a random assignment (e.g., when the algorithm is run for the very first time) or is obtained from the output of the previous iteration of the static assignment algorithm modulo the newly added objects that are assigned randomly.

The above procedure manages to produce high quality results for the graphs underlying Facebook operations in a fast and scalable manner. Within a day, a small cluster of a few hundred machines is able to partition the friendship graph of over 1.5B Facebook users into 21,000 balanced groups such that each user shares her group with at least 50% of her friends. And the same cluster is able to update the assignment starting from the previous assignment within a few hours, easily allowing a weekly (or even daily) update schedule. Finally, it is worth pointing out that the procedure is able to partition the graph into tens of thousands of groups, and it is amenable to maintaining stability, since each iteration begins with the previous assignment and it is easy to limit the movement of objects across groups.

We have successfully used the above heuristic on both unipartite and bipartite graphs, as we describe in more detail in Sections 5 and 6.

4.4 Dynamic assignment

The primary objective of dynamic assignment is to keep component loads well balanced despite changes in access patterns and infrastructure. Load balancing has been well researched in many domains. However, the specific load balancing strategy used for our Social Hash framework may vary from application to application so as to provide the best results. Factors that may affect the choice of load balancing strategy include:

• Accuracy in predicting future loads: Low prediction accuracy favors a strategy with a high group-to-component ratio (e.g., ≫ 1,000) and groups being assigned to components randomly. This is the strategy that is used for HTTP routing. On the other hand, the amount of storage used by data records is easier to predict (in our case), and hence warrants a low group-to-component ratio and non-random component assignment.

• Dimensionality of loads: A system requiring balancing across multiple different load dimensions (CPU, storage, queries per second, etc.) favors using a high group-to-component ratio and random assignment.

• Group transfer overhead: The higher the overhead of moving a group from one component to another, the more one would want to limit the rate of moves between components by increasing the load imbalance threshold that triggers a move.

• Assignment memory: It can be more efficient to assign a group back to an underloaded component it was previously assigned to in order to potentially benefit from the residual state that may still be present. This favors remembering recent assignments, or using techniques similar to consistent hashing.

Finally, we note that load balancing strategies used in other domains will need to be adapted to the Social Hash framework; e.g., load is transferred from one component to another in increments of a group; and the load each group incurs is not homogeneous, in part because of the similarity of objects within groups.
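To make the group-granular nature of the adaptation concrete, the following sketch shows one possible greedy rebalancer. It is an assumption-laden illustration rather than the strategy used in production: it takes a single load dimension, treats historical per-group loads as accurate predictions, and moves whole groups off any component that exceeds its desired maximum. The function name and the toy numbers are hypothetical.

```python
from typing import Dict, List, Tuple


def rebalance(assignment: Dict[int, str],
              group_load: Dict[int, float],
              max_load: Dict[str, float],
              max_moves: int = 100) -> List[Tuple[int, str, str]]:
    """Greedily move groups off overloaded components; returns the moves made.

    `assignment` (group -> component) is updated in place.
    """
    load = {c: 0.0 for c in max_load}
    for g, c in assignment.items():
        load[c] += group_load[g]
    moves: List[Tuple[int, str, str]] = []
    for _ in range(max_moves):     # cap the moves to bound transfer overhead
        # Most and least utilized components, relative to their desired maxima.
        src = max(load, key=lambda c: load[c] / max_load[c])
        if load[src] <= max_load[src]:
            break                  # no component exceeds its desired maximum
        dst = min(load, key=lambda c: load[c] / max_load[c])
        # Load moves in increments of a whole group: pick the largest group on
        # src that fits on dst without pushing dst over its own maximum.
        fits = [g for g, c in assignment.items()
                if c == src and load[dst] + group_load[g] <= max_load[dst]]
        if not fits:
            break
        g = max(fits, key=lambda x: group_load[x])
        assignment[g] = dst
        load[src] -= group_load[g]
        load[dst] += group_load[g]
        moves.append((g, src, dst))
    return moves


# Toy input (hypothetical): component A is over its desired maximum of 60.
table = {1: "A", 2: "A", 3: "A", 4: "B"}
moves = rebalance(table, {1: 40.0, 2: 30.0, 3: 20.0, 4: 10.0},
                  {"A": 60.0, "B": 60.0})
```

With few, large groups per component a suitable group to move may not exist, which is one way to see why a higher group-to-component ratio gives the dynamic level more room to adapt.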

5 Social Hash for Facebook’s Web Traffic Routing

In this section, we describe how we applied the Social Hash framework to Facebook’s global web traffic routing to improve the efficiency of large cache services. This is Facebook’s largest application using the framework and has been in production for over a year.

Facebook operates several worldwide data centers, each divided into front-end clusters containing web and cache tiers, and back-end clusters containing database and service tiers. To fulfill an HTTP request, a front-end web server may need to access databases or services in (possibly remote) back-end clusters. The returned data is cached within front-end cache services, such as TAO [2] or Memcache [24]. Clearly, the lower the cache miss rate, the higher the efficiency of hardware usage, and the lower the response times.

In addition, to reduce latencies for users, Facebook has “Point-of-Presence” (PoP) units around the world: small-scale computational centers which reside close to users. PoPs are used for multiple purposes, including peering with other network operators and media caching [12]. When an HTTP request to one of Facebook’s services is issued, the request will first go to a nearby PoP. A load balancer in the PoP then routes the request to one of the front-end clusters over fast communication channels.

5.1 Prior strategy

Prior to using Social Hash, routing decisions were based on user identifiers, using a consistent hashing scheme [15]. To make a routing decision, the user identifier was extracted from the request, where it was encoded within the request persistent attributes (i.e., cookie), and then used to index into a consistent hash ring to obtain the cluster id. The segments of the consistent hash ring corresponded in number and weight to the front-end clusters. The ring’s weights were dynamic and could be changed at any time, allowing dynamic changes to the cluster’s traffic load. The large number of users in comparison to the small number of clusters, along with the random nature of the hash ring, ensured that each cluster received a homogeneous traffic pattern. With fixed cluster weights, a user would repeatedly be routed to the same cluster, guaranteeing high hit rates for user-specific data. The consistent nature of the ring also ensured that changes to cluster weights resulted in relatively minor changes to the user-to-cluster mapping, reducing the number of cache misses after such changes.
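A weighted consistent hash ring of the kind described can be sketched as below. The details of the production ring are not given in the text, so this is one common construction chosen as an assumption: each cluster contributes a number of ring points proportional to its integer weight, and an identifier is routed to the owner of the first point at or after its hash. The class name, the SHA-256 hash, and `points_per_weight` are illustrative choices.

```python
import bisect
import hashlib
from typing import Dict, List, Tuple


def _h(s: str) -> int:
    """Stable 64-bit hash of a string."""
    return int.from_bytes(hashlib.sha256(s.encode("utf-8")).digest()[:8], "big")


class WeightedRing:
    """Consistent hash ring with per-cluster integer weights (assumed design)."""

    def __init__(self, weights: Dict[str, int], points_per_weight: int = 100):
        # At least one cluster must have a positive weight.
        ring: List[Tuple[int, str]] = []
        for cluster, w in weights.items():
            # Point names depend only on (cluster, index), so raising a weight
            # adds new points and leaves all existing points where they were.
            for i in range(w * points_per_weight):
                ring.append((_h(f"{cluster}#{i}"), cluster))
        ring.sort()
        self._hashes = [h for h, _ in ring]
        self._clusters = [c for _, c in ring]

    def lookup(self, identifier: str) -> str:
        """Cluster owning the first ring point at or after the identifier."""
        i = bisect.bisect_left(self._hashes, _h(identifier)) % len(self._hashes)
        return self._clusters[i]


ring = WeightedRing({"A": 1, "B": 1, "C": 2})
heavier_c = WeightedRing({"A": 1, "B": 1, "C": 3})
```

The lookup is indifferent to what the identifier denotes, so the same ring can be indexed by a user id or by a group id.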

5.2 Social Hash implementation

For the Social Hash static assignment, we used a unipartite graph with vertices representing Facebook’s users and edges representing the friendship ties between them. We partition the graph using the edge-cut optimization criterion, knowing that friends and socially similar users tend to consume the same data, and that they are therefore likely to reuse each other’s cached data records.
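For reference, the edge-cut criterion counts the friendship edges whose endpoints land in different groups. The sketch below computes it, together with the complementary fraction of edges kept inside a group, on a toy graph; the function names and the data are hypothetical.

```python
from typing import Dict, Hashable, List, Tuple

Edge = Tuple[Hashable, Hashable]


def edge_cut(edges: List[Edge], group_of: Dict[Hashable, int]) -> int:
    """Number of edges whose two endpoints are assigned to different groups."""
    return sum(1 for u, v in edges if group_of[u] != group_of[v])


def edge_locality(edges: List[Edge], group_of: Dict[Hashable, int]) -> float:
    """Fraction of edges that stay inside a single group."""
    return 1.0 - edge_cut(edges, group_of) / len(edges)


# Toy friendship graph (hypothetical): only the edge 3-4 crosses groups.
friendships: List[Edge] = [(1, 2), (2, 3), (1, 3), (3, 4), (4, 5)]
groups = {1: 0, 2: 0, 3: 0, 4: 1, 5: 1}
cut = edge_cut(friendships, groups)
locality = edge_locality(friendships, groups)
```

A lower cut means more friends share a group, and therefore a cluster, so more of their requests can be served from data that a friend's request has already cached.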

We use a relatively large number of groups for two rea-sons. First, the global routing scheme needs to be able toshift traffic across clusters in small quantities. Second,changes in HTTP request routing will affect many sub-systems at Facebook, not just the cache tiers; and it isvery difficult to predict how much load each group willincur on each subsystem. Hence, we have found the beststrategy to balance the load overall is to use many groupsand assign the groups to clusters randomly.

For the dynamic assignment step, we kept the existing consistent hash scheme, which is oblivious to the type of identifier it receives as input (either user- or group-id).

To be able to make an HTTP request routing decision at run time, it is necessary to access both the Social Hash Table and the Assignment Table. The latter is computed on-the-fly using the consistent hash mechanism, which requires a fairly small map between clusters and their weights; it is therefore easy to hold the map in the PoP memories. The former, however, is large, consuming several gigabytes of storage space when uncompressed. We considered storing the Social Hash Table close to the PoP (in its own memory or in a nearby storage service), but decided not to do so due to added PoP complexity, fault tolerance considerations, and limited PoP resources that could be put to better use by other services. We also considered sending a lookup request to a data center, but rejected this idea due to latency concerns.

Instead, we encode the user’s assigned group within the request persistent attributes (i.e., as a cookie) and decode it to make a routing decision when a request arrives. Requests that do not have the group-id encoded in the header are routed to a random front-end cluster, where the session creation mechanism accesses a local copy of the Social Hash Table to fetch the group assigned to the user. Because the Social Hash Table is updated once a week, group-ids in the headers may become stale. For this reason, a user request will periodically (at least once an hour) trigger an update process where the group-id is updated with its latest value from the Social Hash Table. This allows long lasting connections to receive fresh routing information.
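The cookie-based flow can be sketched as follows. All names here (`route_request`, `refresh_group_id`, the `group_id` and `group_id_set_at` cookie keys, and the `lookup_cluster` callback standing in for the consistent hash lookup) are hypothetical; the sketch only mirrors the steps described in the text.

```python
import random

REFRESH_INTERVAL_S = 3600  # the text says "at least once an hour"


def route_request(cookies, lookup_cluster, clusters, rng=random):
    """At the PoP: pick a front-end cluster from the group-id cookie, if present."""
    group_id = cookies.get("group_id")
    if group_id is None:
        # No group-id yet: route to a random cluster, which will tag the session.
        return rng.choice(clusters)
    return lookup_cluster(group_id)


def refresh_group_id(cookies, user_id, social_hash_table, now):
    """At the front-end cluster: set or refresh the cookie from the local table copy."""
    set_at = cookies.get("group_id_set_at", float("-inf"))
    if "group_id" not in cookies or now - set_at >= REFRESH_INTERVAL_S:
        cookies["group_id"] = social_hash_table[user_id]
        cookies["group_id_set_at"] = now
    return cookies


clusters = ["c0", "c1", "c2"]
lookup = lambda group_id: clusters[group_id % len(clusters)]  # stand-in for the hash ring
cookies = refresh_group_id({}, user_id=42, social_hash_table={42: 7}, now=0)
print(route_request(cookies, lookup, clusters))
```

Note that the PoP only reads the cookie; the Social Hash Table is consulted solely at the front-end cluster, which matches the design goal stated above.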

Our design eliminates the complexities and overhead of a Social Hash Table lookup at the PoPs, requiring just a single header read instead. The design is also more resilient to failure, because even if the data store providing the Social Hash Table is down, group-ids will mostly be available in the request headers.

For technical reasons, some requests cannot be tagged properly with either the user or the group identifier (because the requests may have been issued by crawlers, bots or legacy clients). These requests are routed randomly, yet in a consistent manner, to one of the front-end clusters while respecting load constraints. In the past three months, 78% of the requests had a valid group-id that could be used for routing (and those that did not were not tagged with a user-id, a group-id, or any other identifier).

Some may argue that the decreased miss rates achieved with Social Hash lead to a fault tolerance issue, because the data records are less likely to be present in multiple caches simultaneously. This could be a concern as the recovery of a cache failure would overwhelm the backing storage systems with excessive traffic and thus lead to severely degraded overall performance. However, our experience indicates that a failure of the main-memory caches within a cluster only causes a temporary load increase on the backend storage servers that stays within the normal operational load thresholds.

Figure 3: Edge locality (fraction of edges within groups) vs. the number of groups for Facebook’s friendship graph. [Plot not reproduced. x-axis: number of groups, with ticks at 10, 100, 1,000, 10,000 and 100,000; y-axis: edge locality, from 0.00 to 1.00.]

5.3 Operational observations

To get a sense of how access patterns of friends relate, we sampled well over 100 million accesses to TAO data records from access logs. We found that when two users access the same data record, there is a 15% chance they are friends. This is millions of times larger than the probability of two random users being friends. We conclude that co-locating the processing of friends’ HTTP requests as much as possible is an effective strategy.

Figure 3 depicts edge locality vs. the number of groups used to partition the 1.5B+ Facebook users. Edge locality measures the fraction of “friend” edges connecting two users that are both assigned to the same group (thus, the goal of static assignment would be to maximize edge locality). It is not a surprise that edge locality decreases with the number of groups. Perhaps a bit more unexpected is the observation that edge locality remains reasonably large even when the number of groups increases significantly (e.g., >20% with 1 million groups); intuitively, this is because the friendship graph contains many small relatively dense communities. We chose the smallest number of groups that would satisfy our main requirement for dynamic assignment, namely to be able to balance the load by shifting only small amounts of traffic between front-end clusters. Repeating the process of assigning different numbers of groups into components offline and examining the resulting imbalance on known loads led us to use 21,000 groups on a few tens of clusters; our group-to-component ratio is thus quite high.
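Edge locality, as defined in this section, is straightforward to compute for a given assignment. The following is a minimal sketch on a toy graph; the function name `edge_locality` and the in-memory edge list are assumptions for illustration, since a graph of Facebook's size would need a distributed computation.

```python
def edge_locality(edges, group_of):
    """Fraction of edges whose two endpoints are assigned to the same group."""
    if not edges:
        return 0.0
    within = sum(1 for u, v in edges if group_of[u] == group_of[v])
    return within / len(edges)


# Toy friendship graph: a 4-cycle split into two groups of two users each.
edges = [(1, 2), (2, 3), (3, 4), (1, 4)]
group_of = {1: 0, 2: 0, 3: 1, 4: 1}
print(edge_locality(edges, group_of))  # 0.5: edges (1, 2) and (3, 4) are local
```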

The combination of new users being added to the system and changes to the friendship graph causes edge locality to degrade over time. We measured the decrease of edge locality from an initial, random assignment of users to one of 21,000 groups over the course of four weeks. We observed a nearly linear 0.1% decrease in edge locality per week. Although this decrease is small, we decided to update the routing assignment once a week so as to avoid a noticeable decrease in quality. At the same time, we did not observe a decrease in cache hit rate between updates, implying that 0.1% is a negligible amount. The small decrease in edge locality implies that a longer update schedule would also be satisfactory, and that Social Hash can tolerate a long maintenance breakdown without altering Facebook’s web traffic routing quality.

For the past three months, the Social Hash Table used for Facebook’s routing has maintained an edge locality of over 50%, meaning that more than half of the friendships connect users within the same one of the 21,000 groups. This edge locality is slightly higher than the exploratory values shown in Figure 3, because we iterated longer in the graph partitioning algorithm on the production system than we did in the experiments from which we obtained the figure. The static assignment is well-balanced, with the largest group containing at most 0.8% more users than the average group. Each weekly update by the static assignment step resulted in around 1.5% of users switching groups from the previous assignment. All of these updates were suitably small to avoid noticeable increases in the cache miss rate when the updates were introduced into production.

5.4 Live traffic experiment

To measure the effectiveness of Social Hash-based HTTP routing optimization, we performed a live traffic experiment on two identical clusters with the same hardware, number of hosts and capacity constraints. These clusters are typical of what Facebook uses in production. Each cluster had many hundred TAO servers, which served the local web tier with cached social data.

For our experiment, we selected a set of groups randomly from the Social Hash Table. We then routed all HTTP requests from users assigned to these groups to one “test” cluster, while HTTP requests from a same number of other users were routed to the second, “control” cluster. Hence, the control cluster saw traffic with attributes very similar to the traffic it received with the prior strategy: the traffic with the prior strategy was sampled from all users, while the traffic for the control cluster was sampled from all users except those associated with the test cluster. We ran the experiment for 10 days. During this time, operational changes that would affect hit rates on the two clusters were prevented.

Figure 4: Percentage change in TAO miss rate (left, where lower is better) and CPU idle rate (right, where higher is better) on the Social Hash cluster relative to the cluster with random assignment. Area between red dashed lines: period of the test. Orange dashed lines: traffic shifts. Green dot-dash line: Social Hash Table is updated. The values on the days traffic was shifted (Tuesday and Wednesday, respectively) are not representative. [Plots not reproduced. x-axis of both panels: day of week over two weeks (Sun to Sat); left y-axis: percentage change in TAO miss rate (%), from −30 to 10; right y-axis: percentage change in idle CPU (%), from −5.0 to 5.0.]

The left hand side of Figure 4 shows the change in cache miss rate between the test and control clusters. It is evident that the miss rate drops by over 25% when assigning groups to a cluster as opposed to just users.

The right hand side of Figure 4 shows the change in average CPU idle rate between the test and the control cluster. The test cluster had up to 3% more idle time compared to the control cluster.

During the experiment, we updated the Social Hash Table by applying an updated static assignment. The time at which this occurred is shown with a vertical green dot-dash line. We note that the cache miss rate and the CPU idle time are not affected by the update, demonstrating that the transition process is smooth.

Figure 5 compares the daily working set size for TAO objects at both clusters. The daily working set of a cluster is the total size of all objects that were accessed by the TAO instance on that front-end cluster at least once during that day. The figure shows that the working set size dropped by as much as 8.3%.

We conclude from this experiment that Social Hash is effective at improving the efficiency of the cache for HTTP requests: fewer requests are sent to backend systems, and the hardware is utilized in a more efficient way.

6 Storage sharding

In this section, we describe in detail how we applied the Social Hash framework to sharded storage systems at Facebook. The assignment problem is to decide how to assign data records (the objects) to storage subsystems (the components).

6.1 Fanout vs. Latency

The objective function we optimize is fanout, the number of storage subsystems that must be contacted for multi-get queries. We argue and experimentally demonstrate that fanout is a suitable objective function, since lower fanout is closely correlated with lower latencies [7].

Multi-get queries are typically forced to issue requests to multiple storage subsystems, and they do so in parallel. As such, the latency of a multi-get query is determined by the slowest request. By reducing fanout, the probability of encountering a request that is unexpectedly slower than the others is reduced, thus reducing the latency of the query. This is the fundamental argument for using fanout as the objective function for the assignment problem in the context of storage sharding. Another argument is that lower fanout reduces the connection overhead per data record.

Figure 5: Percentage change in daily TAO working set size on the Social Hash cluster relative to the cluster with random assignment. The red dashed lines indicate the first and last days of the test where the test was running only during part of the day (so the values for these two days may not be representative). [Plot not reproduced. x-axis: day of week (Sun through the second Fri); y-axis: percentage change in working set (%), from −8 to 0.]

To further elaborate the relevance of choosing fanout as the objective function, consider this abstract scenario. Suppose 1% of individual requests to storage servers incur a significant delay due to unanticipated system-specific issues (CPU thread scheduling delays, system interrupts, etc.). If a query must contact 10 storage servers, then one can calculate that the multi-get request has a 9.6% chance that at least one sub-request will experience a significant delay (1 − 0.99^10 ≈ 0.096, assuming independent sub-requests). If the fanout can be reduced, one can reduce the probability of incurring the delay.

We ran a simple experiment to confirm our understanding of the relationship between fanout and latency. We issued trivial remote requests and measured the latency of a single request (fanout=1) and the latency of two requests sent in parallel (fanout=2). Figure 6 shows the cumulative latency distribution for both cases. A fanout of 1 results in lower latencies than a fanout of 2. If we compute the expected distribution of the slower of two independent samples drawn from the single request distribution, then the observed overall latency for two parallel requests matches the expected distribution quite nicely.
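The "expected" curve for two parallel requests can be derived from the single-request distribution alone: if F is the CDF of one request's latency and the requests are independent, the slower of two finishes by time x with probability F(x)^2. The sketch below shows this under that independence assumption; the helper names and the sample latencies are invented for illustration and are not the paper's measurements.

```python
import bisect


def empirical_cdf(samples):
    """Return a function x -> fraction of samples that are <= x."""
    ordered = sorted(samples)
    n = len(ordered)
    return lambda x: bisect.bisect_right(ordered, x) / n


def expected_parallel_cdf(single_cdf, fanout=2):
    """CDF of the maximum of `fanout` independent draws from `single_cdf`."""
    return lambda x: single_cdf(x) ** fanout


single = empirical_cdf([1.0, 2.0, 3.0, 4.0])  # made-up single-call latencies
two_calls = expected_parallel_cdf(single, fanout=2)
print(single(2.0), two_calls(2.0))  # 0.5 0.25
```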

One possible caveat to our analysis of the relationship between fanout and latency is that reducing fanout generally increases the size of the largest request, which could increase latency. Fortunately, storage subsystems today have processors with many cores that can be exploited by the software to increase the parallelism in servicing a single, large request.

6.2 Implementation

Figure 6: Cumulative distribution of latency for a single request, two requests in parallel, and the expected distribution from two independent samples from the single request distribution, where t is the average latency of a single call. [Plot not reproduced. x-axis: latency, from 0.0 to 2.0 t; y-axis: CDF, from 0.00 to 1.00; curves: single call, two calls, expected.]

For the static assignment we apply bipartite graph partitioning to minimize fanout. We create the bipartite graph from logs of queries from the dynamic operations of the social network.³ The queries and data records accessed by the queries are represented by two types of vertices. A query vertex is edge-connected to a data vertex iff the query accesses the data record. The graph partitioning algorithm is then used to partition the data vertices into groups so as to minimize the average number of groups each query is connected to.
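The objective on the bipartite graph can be stated concretely: for each query vertex, count the distinct groups among the data vertices it touches, then average over queries. The following sketch evaluates that objective for a given assignment; it does not perform the partitioning itself, and the name `average_fanout` and the list-of-lists query representation are assumptions for illustration.

```python
def average_fanout(queries, group_of):
    """Average number of distinct groups touched per (non-empty) query.

    queries:  iterable of collections of data record ids (one per query)
    group_of: mapping from data record id to its assigned group
    """
    fanouts = [len({group_of[r] for r in records}) for records in queries if records]
    return sum(fanouts) / len(fanouts) if fanouts else 0.0


# Toy query log: three queries over four data records in two groups.
queries = [[1, 2, 3], [3, 4], [1]]
group_of = {1: 0, 2: 0, 3: 1, 4: 1}
print(average_fanout(queries, group_of))  # (2 + 1 + 1) / 3
```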

Clearly, most data needs to be replicated for fault tolerance (and other) reasons. Many systems at Facebook do this by organizing machines storing data into non-overlapping sets, each containing each data record exactly once. We refer to such a set as a replica. Since assignment is independent between replicas, we will restrict our analysis to scenarios with just one replica.

6.3 Simplified sharding experiment

We consider the following simple experiment. We use 40 stripped-down storage servers, where data is stored in a memory-based, key-value store. We assume that there is one data record per user. We run this setup in two configurations. In the first, “random” configuration, data records are distributed across the 40 storage servers using a hash function, which is a common practice. In the second, “social” configuration, we use our Social Hash framework to minimize fanout.

³ In some cases, prior knowledge of which records each query must retrieve is sufficient to create the graph.

We then sampled a live traffic pattern, and issued the same set of queries to both configurations, and we measured fanout and latency. With the random configuration, the queries needed to issue requests to 38.8 storage subsystems on average. With the social configuration, the queries needed to issue requests to only 9.9 storage subsystems on average. This decrease in fanout resulted in a 2.1X lower average latency for the queries.

Figure 7: Cumulative latency distribution for fetching data of friends, where t is the average latency of a single call. [Plot not reproduced. x-axis: latency, from 0 to 12 t; y-axis: CDF, from 0.00 to 1.00; curves: random parallel, social parallel, social serial.]

The cumulative distributions of latencies for the random and social configurations are shown in Figure 7, where we also include the social configuration’s latency distribution after disabling parallelism within each machine. Without parallelism, the average latency is still lower than with the random configuration, but only by 23%. Furthermore, the slowest 25% of queries on the social configuration without parallelism exhibited substantially higher latencies than the slowest 25% of queries on the random configuration. This figure confirms the importance of using parallelism within each system.

6.4 Operational observations

After we deployed storage sharding optimized with Social Hash to one of the graph databases at Facebook, containing thousands of storage servers, we found that measured latencies of queries decreased by over 50% on average, and CPU utilization also decreased by over 50%.

We attribute much of this improvement in performance to our method of assigning data records to groups, using graph partitioning on bipartite graphs generated from prior queries. The solid line in Figure 8 shows the average fanout as a function of the number of groups when using our method. The dotted line shows the average fanout when using standard edge-cut optimization criteria on the (unipartite) friendship graph.

After analyzing expected load balance, we decided on a group-to-component ratio of 8; the dynamic assignment algorithm then selects which 8 groups to assign to the same storage subsystem, based on the historical load patterns. This allowed us to keep fanout small, while still being able to maintain good load balance.

Figure 8: The average fanout versus number of groups on Facebook’s friendship graph when using edge locality optimization (dotted curve) and our fanout optimization (solid curve), respectively. [Plot not reproduced. x-axis: number of groups, with ticks from 3 to 10,000; y-axis: average fanout, from 10 to 50.]

In practice, fanout degrades over time. For the 40 group solution we used in the simplified application, we observed a fanout increase of about 2% on average over the course of a week. A single static assignment update sufficed to bring the fanout back to what it was previously, requiring only 1.85% of the data records to have to be moved. With such low impact, we decided static assignment updates were only necessary every few months, relying on dynamic assignment to move groups when necessary in between. Even then, we found that dynamic assignment updates were not necessary more than once a week on average. We used the same static assignment for all replicas, but made dynamic assignment decisions independently for each replica.
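This section does not spell out the dynamic assignment algorithm, so the following is only one plausible sketch of how groups could be packed onto storage subsystems from historical load, with a fixed number of groups per component. The greedy heaviest-first heuristic, the function name `assign_groups`, and the load values are all assumptions for illustration, not the method used in production.

```python
def assign_groups(group_load, num_components, groups_per_component):
    """Greedy sketch: place groups, heaviest first, on the least-loaded
    component that still has a free slot."""
    if len(group_load) > num_components * groups_per_component:
        raise ValueError("not enough slots for all groups")
    loads = [0.0] * num_components
    counts = [0] * num_components
    assignment = {}
    for group in sorted(group_load, key=group_load.get, reverse=True):
        open_components = [c for c in range(num_components)
                           if counts[c] < groups_per_component]
        target = min(open_components, key=lambda c: loads[c])
        assignment[group] = target
        loads[target] += group_load[group]
        counts[target] += 1
    return assignment


# Hypothetical historical loads for four groups, two groups per component.
historical_load = {"g0": 8.0, "g1": 7.0, "g2": 3.0, "g3": 2.0}
print(assign_groups(historical_load, num_components=2, groups_per_component=2))
```

In the toy example both components end up with a total load of 10. Because the static assignment fixes which records belong to which group, a step like this can rebalance load without changing fanout within a group.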

7 Related work

As discussed in Section 4.3, graph partitioning has an extensive literature, and our optimization objectives, edge locality and fanout, correspond to edge cut and hypergraph edge cut. A recent review of graph partitioning methods can be found online [3]. Many graph partitioning systems have been built and are available. For example, Metis [16, 18] is one that is frequently used.

A Giraph-based approach to graph partitioning called “Spinner” was recently announced [21]. Our work is distinct in that their application was optimizing batch processing systems, such as Giraph itself, via increased edge locality, and our graph partitioning system is embedded in the Social Hash framework.

Average fanout in a bipartite graph, when presented as a hypergraph, with vertices being one side of the bipartite graph, and hyper-edges representing the vertices from the other side, directly translates into the hypergraph partitioning problem. Hypergraph partitioning also has an extensive literature [4, 17], and one of the publicly available parallel solutions is PHG [9], which can be found in the Zoltan toolkit [8].

Partitioning online social networks has previously been used to improve performance of distributed systems. Ugander and Backstrom discuss partitioning large graphs to optimize Facebook infrastructure [33]. Stein considered a theoretical application of partitioning to Facebook infrastructure [29]. Tran and Zhang considered a multi-objective optimization problem based on edge cut motivated by read and write behaviors in online social networks [31, 32].

Other research has considered data replication in combination with partitioning for sharding data for online social networks. Pujol et al. studied low fanout configurations via replication of data between hosts [25] and Wang et al. suggested minimizing fanout by random replication and query optimization [36]. Nguyen et al. considered how to place additional replicas of users given a fixed initial assignment of users to servers [22, 30].

Dean and Barroso [7] investigated the effect of latency variability on fanout queries in distributed systems, and suggested several means to reduce its influence. Jeon et al. [14] argued for the necessity of parallelizing execution of large requests, in order to tame latencies.

Our contribution differs from these lines of research by presenting a realized framework integrated into production systems at Facebook. A production application to online social networks is provided by Huang et al. who describe improving infrastructure performance for Renren through a combination of graph partitioning and data replication methods [13]. Sharding has been considered for distributed social network databases by Nicoara et al. who propose Hermes [23].

8 Concluding Remarks

We introduced the Social Hash framework for producing, serving, and maintaining assignments of objects to components in distributed systems. The framework was designed for optimizing operations on large social networks, such as Facebook’s Social Graph. A key aspect of the framework is how optimization is decoupled from dynamic adaptation, through a two-level scheme that uses graph partitioning for optimization at the first level and dynamic assignment at the second level. The first level leverages the structure of the social network and its usage patterns, while the second level adapts to changes in the data, its access patterns and the infrastructure.

We demonstrated the effectiveness of the Social Hash framework with the HTTP request routing and storage sharding applications. For the former, Social Hash was able to decrease the cache miss rate by 25%, and for the latter, it was able to cut the average response time in half, as measured on the live Facebook system with live traffic production workloads. The approaches we took with both applications were, to the best of our knowledge, novel; i.e., graph partitioning the Social Graph to optimize HTTP request routing, and using query history to construct bipartite graphs that are then partitioned to optimize storage sharding.

Our approach has some limitations. It was designed in the context of optimizing online social networks and hence will not be suitable for every distributed system. To be successful, both the static and dynamic assignment steps rely on certain characteristics, which tend to be fulfilled by social networks. For the static step, the underlying graph must be conducive to partitioning, and the graph must be reasonably sparse so that the partitioning is computationally tractable; social graphs almost always meet those characteristics. The social graph cannot be changing too rapidly; otherwise the optimized static assignment will be obsolete too quickly and the attendant exception handling becomes too computationally complex. For the dynamic step, we assume that the workload and the infrastructure do not change too rapidly.

While we have been able to obtain impressive efficiency gains using the Social Hash framework, we believe there is much room for further improvement. We are currently: (i) working on improving the performance of our graph partitioning algorithms, (ii) considering using historical query patterns and bipartite graph partitioning to further improve cache miss rates, (iii) incorporating geo-locality considerations for our HTTP routing optimizations, and (iv) incorporating alternative replication schemes for further reducing fanout in storage sharded systems.

Acknowledgements

We would like to thank Tony Savor, Kenny Lau, Venkat Venkataramani and Avery Ching for their support, Alex Laslavic, Praveen Kumar, Jan Jezabek, Alexander Ramirez, Omry Yadan, Michael Paleczny, Jianming Wu, Chunhui Zhu, Deyang Zhao and Pavan Athivarapu for helping integrate with Facebook systems, Sanjeev Kumar, Kaushik Veeraraghavan, Dionysis Logothetis, Romain Thibaux and Rajesh Nishtala for their feedback on early drafts, Badr El-Said and Laxman Dhulipala for their contributions to the framework, and Dimitris Achlioptas for discussions on graph partitioning. We would also like to thank the reviewers for their constructive and helpful comments.


References

[1] Apache Giraph. http://giraph.apache.org/.

[2] N. Bronson, Z. Amsden, G. Cabrera, P. Chakka, P. Dimov, H. Ding, J. Ferris, A. Giardullo, S. Kulkarni, H. Li, M. Marchukov, D. Petrov, L. Puzar, Y. J. Song, and V. Venkataramani. TAO: Facebook’s distributed data store for the Social Graph. In Proc. 2013 USENIX Annual Technical Conference (USENIX ATC’13), pages 49–60, 2013.

[3] A. Buluc, H. Meyerhenke, I. Safro, P. Sanders, and C. Schulz. Recent advances in graph partitioning. CoRR, abs/1311.3144, 2013.

[4] U. V. Catalyurek and C. Aykanat. Hypergraph-partitioning-based decomposition for parallel sparse-matrix vector multiplication. IEEE Trans. on Parallel and Distributed Systems, 10(7):673–693, 1999.

[5] C. Chevalier and F. Pellegrini. PT-Scotch: A tool for efficient parallel graph ordering. CoRR, abs/0907.1375, 2009.

[6] R. Cohen, L. Katzi, and D. Raz. An efficient approximation for the generalized assignment problem. Information Processing Letters, 100(4):162–166, 2006.

[7] J. Dean and L. A. Barroso. The tail at scale. CACM, 56(2):74–80, Feb. 2013.

[8] K. Devine, E. Boman, L. Riesen, U. Catalyurek, and C. Chevalier. Getting started with Zoltan: A short tutorial. In Proc. 2009 Dagstuhl Seminar on Combinatorial Scientific Computing, 2009. Also available as Sandia National Labs Tech Report SAND2009-0578C.

[9] K. D. Devine, E. G. Boman, R. T. Heaphy, R. H. Bisseling, and U. V. Catalyurek. Parallel hypergraph partitioning for scientific computing. In Proc. Intl. Parallel and Distributed Processing Symposium (IPDPS), pages 10–20, 2006.

[10] J. E. Gonzalez, Y. Low, H. Gu, D. Bickson, and C. Guestrin. Powergraph: Distributed graph-parallel computation on natural graphs. In Proc. 10th Symp. on Operating Systems Design and Implementation (OSDI 12), pages 17–30, 2012.

[11] J. E. Gonzalez, R. S. Xin, A. Dave, D. Crankshaw, M. J. Franklin, and I. Stoica. Graphx: Graph processing in a distributed dataflow framework. In Proc. 11th Symp. on Operating Systems Design and Implementation (OSDI 14), pages 599–613, Broomfield, CO, Oct. 2014.

[12] Q. Huang, K. Birman, R. van Renesse, W. Lloyd, S. Kumar, and H. C. Li. An analysis of facebook photo caching. In Proc. 24th Symp. on Operating Systems Principles (SOSP’13.

[13] Y. Huang, Q. Deng, and Y. Zhu. Differentiating your friends for scaling online social networks. In Proc. IEEE Intl. Conf. on Cluster Computing (CLUSTER’12), pages 411–419, Sept 2012.

[14] M. Jeon, S. Kim, S.-w. Hwang, Y. He, S. Elnikety, A. L. Cox, and S. Rixner. Predictive parallelization: Taming tail latencies in Web search. In Proc. 37th Intl. ACM SIGIR Conference on Research & Development in Information Retrieval (SIGIR’14), pages 253–262, 2014.

[15] D. Karger, E. Lehman, T. Leighton, R. Panigrahy, M. Levine, and D. Lewin. Consistent hashing and random trees: Distributed caching protocols for relieving hot spots on the World Wide Web. In Proc. 29th Annual ACM Symp. on Theory of Computing (STOC’97), pages 654–663, 1997.

[16] G. Karypis and V. Kumar. A fast and high quality multilevel scheme for partitioning irregular graphs. SIAM J. Sci. Comput., 20(1):359–392, Dec. 1998.

[17] G. Karypis and V. Kumar. Multilevel k-way hypergraph partitioning. VLSI design, 11(3):285–300, 2000.

[18] D. Lasalle and G. Karypis. Multi-threaded graph partitioning. In Proc. IEEE 27th Intl. Symp. on Parallel and Distributed Processing (IPDPS’13), pages 225–236, 2013.

[19] H. Lin, K. Sun, S. Zhao, and Y. Han. Feedback-control-based performance regulation for multi-tenant applications. In Proc. 15th Intl. Conf. on Parallel and Distributed Systems (ICPADS’09), pages 134–141, Dec 2009.

[20] G. Malewicz, M. H. Austern, A. J. Bik, J. C. Dehnert, I. Horn, N. Leiser, and G. Czajkowski. Pregel: A system for large-scale graph processing. In Proc. ACM SIGMOD Intl. Conf. on Management of Data (SIGMOD’10), pages 135–146, 2010.

[21] C. Martella, D. Logothetis, and G. Siganos. Spinner: Scalable graph partitioning for the cloud. CoRR, abs/1404.3861, 2014.

[22] K. Nguyen, C. Pham, D. Tran, F. Zhang, et al. Preserving social locality in data replication for online social networks. In Proc. 31st Intl. Conf. on Distributed Computing Systems Workshops (ICDCSW), pages 129–133, 2011.

[23] D. Nicoara, S. Kamali, K. Daudjee, and L. Chen. Hermes: Dynamic partitioning for distributed social network graph databases. In Proc. 18th Intl. Conf. on Extending Database Technology (EDBT’15), pages 25–36, Mar. 2015.

[24] R. Nishtala, H. Fugal, S. Grimm, M. Kwiatkowski, H. Lee, H. C. Li, R. McElroy, M. Paleczny, D. Peek, P. Saab, D. Stafford, T. Tung, and V. Venkataramani. Scaling Memcache at Facebook. In Proc. 10th USENIX Conf. on Networked Systems Design and Implementation (NSDI’13), pages 385–398, 2013.

[25] J. M. Pujol, V. Erramilli, G. Siganos, X. Yang, N. Laoutaris, P. Chhabra, and P. Rodriguez. The little engine(s) that could: Scaling online social networks. SIGCOMM Comput. Commun. Rev., 40(4):375–386, Aug. 2010.


[26] D. Shue, M. J. Freedman, and A. Shaikh. Performance isolation and fairness for multi-tenant cloud storage. In Proc. 10th USENIX Symp. on Operating Systems Design and Implementation (OSDI 12), pages 349–362, 2012.

[27] D. D. C. Shue. Multi-tenant Resource Allocation For Shared Cloud Storage. PhD thesis, Princeton University, 2014.

[28] Y. Song, Y. Sun, and W. Shi. A two-tiered on-demand resource allocation mechanism for VM-based data centers. IEEE Trans. on Services Computing, 6(1):116–129, 2013.

[29] D. Stein. Partitioning social networks for data locality on a memory budget. Master’s thesis, University of Illinois, Urbana-Champaign, 2012.

[30] D. A. Tran, K. Nguyen, and C. Pham. S-CLONE: Socially-aware data replication for social networks. Computer Networks, 56(7):2001–2013, 2012.

[31] D. A. Tran and T. Zhang. Socially aware data partitioning for distributed storage of social data. In Proc. IFIP Networking Conference, pages 1–9, May 2013.

[32] D. A. Tran and T. Zhang. S-PUT: An EA-based framework for socially aware data partitioning. Computer Networks, 75:504–518, Dec. 2014.

[33] J. Ugander and L. Backstrom. Balanced label propagation for partitioning massive graphs. In Proc. 6th ACM Intl. Conf. on Web Search and Data Mining (WSDM-13), pages 507–516, 2013.

[34] J. Ugander, B. Karrer, L. Backstrom, and C. Marlow. The anatomy of the Facebook Social Graph. CoRR, abs/1111.4503, 2011.

[35] V. Venkataramani, Z. Amsden, N. Bronson, G. Cabrera III, P. Chakka, P. Dimov, H. Ding, J. Ferris, A. Giardullo, J. Hoon, et al. TAO: How Facebook serves the Social Graph. In Proc. 2012 ACM SIGMOD Intl. Conf. on Management of Data, pages 791–792, 2012.

[36] R. Wang, C. Conrad, and S. Shah. Using set cover to optimize a large-scale low latency distributed graph. In Proc. 5th USENIX Workshop on Hot Topics in Cloud Computing, 2013.
