slides session 1


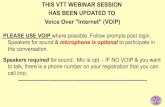

Transcript of slides session 1

DATA MINING - 10 FEBRUARY 2004

Data Mining

Luc Dehaspe

K.U.L. Computer Science Department

-

Marc Van Hulle

K.U.L. Neurofysiologie Department

http://toledo.kuleuven.ac.be/

DATA MINING - 10 FEBRUARY 2004 © LUC DEHASPE - 2004

Course overview

Data Mining

Session 1: Introduction

Session 2-3: Data warehousing/preparation

Session 4-6: Symbolic Data Mining techniques

Session 7: Application + Evaluation of Data Mining results

Session 8-14: Numeric Data Mining methods: statistical techniques, self-organizing techniques

(Hands-on) Exercise sessions


Exercise session

Part 1 (L. Dehaspe): 2 × 2.5 h “paper-and-pencil” sessions

application of algorithms

Part 2 (M. Van Hulle) hands-on exercises


Exam

Written exam, closed book

Part 1 (Sessions 1-7): 50%

Coverage

Questions RESTRICTED TO CONTENT OF SLIDES

Occasional pointers to additional material: I do not expect you to study this material

Questions

One main question: apply + understand algorithm (30%)

Two smaller questions: explain concept, compute model quality, … (2 × 10%)

Part 2 (Sessions 8-14): 50% (explained later by Marc Van Hulle)


Working definition data mining

tools to search data for patterns and relationships that lead to better business decisions

“business”: commercial/scientific


Overview

myths and facts

the Data Mining process

methods: visual, non-visual


Myths and facts

New technology cycle

phase 1: hype (unrealistic expectations, “naive” users)

phase 2: frustration

phase 3: rejection

Alternative: realistic view on vital technology


Myth 1: tabula rasa (virgin territory)

Data mining methods are fundamentally different from previous methods

Fact: underlying ideas are often decades old

neural networks: 1940

k-nearest neighbour: 1950

CART (regression trees): 1960

Novel: integrated applications to general “business” problems; more data, more computing power; non-academic users


Take home lesson 1

Not: 1 optimal method

But: portfolio of tools, mixture of old and new


Myth 2: manna from heaven

Data mining produces surprising results that will turn your “business” upside-down, without any input of domain expert knowledge and without any tuning of the technology

Fact: incremental changes rather than revolutionary ones

long-term competitive advantage

occasional breakthroughs (e.g. the link between aspirin and Reye's syndrome)

technology is an assistant to the domain expert

careful selection required of: goal, technology


Take home lesson 2

Crucial combination of “business” (application domain) expertise and data mining technology expertise


Data Mining process model

Definition

Link with the scientific method


The data mining process

process : iterative; learn to ask better questions

valid : patterns can be generalized to new data

novel and useful : offer a competitive advantage

understandable : contribute to insight in the domain

The non-trivial process of finding valid, novel, potentially useful, and ultimately understandable patterns in data


Interrogating the database: Look-up queries

What is the average toxicity of cadmium chloride?

[Figure: database containing biological data, clinical data, and chemical data]

How many earthquakes have occurred last year?

Which customers have a car insurance?

How did HIV patient p123 react to AZT?


Interrogating the database: Finding patterns

What is the relation between geological features and the occurrence of earthquakes?

[Figure: Data Mining applied to a database containing biological data, clinical data, and chemical data]

What is the relation between in vitro activity and chemical structure?

What is the relation between the HIV patient’s therapy history and response to AZT?

What is the profile of returning customers?


[Figure: numbered examples 1-8, grouped under the labels NON-ACTIVE and ACTIVE]

Science


Science

collect data

build hypothesis

verify hypothesis

formulate theory

Tycho Brahe (1546-1601)

observational genius

collected data on Mars

Johannes Kepler (1571-1630)

mined Brahe’s data

discovered laws of planetary motion

The formation of hypotheses is the most mysterious of all the categories of scientific method. Where they come from, no one knows. A person is sitting somewhere, minding his own business, and suddenly - flash! - he understands something he didn’t understand before.

Robert M. Pirsig, Zen and the Art of Motorcycle Maintenance

The actual discovery of such an explanatory hypothesis is a process of creation, in which imagination as well as knowledge is involved.

Irving Copi, Introduction to Logic, 1986



Evolution of data generation

Data source

Data analyst

Data

< 1950 > 2000

Data Rich, Knowledge Poor

Everyone, even the most patient and thorough investigator, must pick and choose, deciding which facts to study and which to pass over.

Irving Copi, Introduction to Logic, 1986



The scientific method

collect data

build hypothesis

verify hypothesis

formulate theory

Data Mining

Statistics - OLAP

care inspiration

Knowledge discovery in Databases

Data warehousing


Data Mining Definition:

Extracting or “mining” knowledge from large amounts of data

CRISP-DM process model


Data mining in industry

An in silico research assistant allowing researchers to

explore an integrated database

for a variety of research purposes (“business goals”)

using an optimal selection of data mining technologies

pattern

knowledge


Data Mining process model CRISP-DM


Business understanding

Which are the business goals?

Translation to data mining problem definition

Design of a plan to meet objectives


Data understanding

First collection of data

Becoming familiar with the data

Judge data quality

Discovery of: first insights, interesting subsets


Data preparation

Extract final data set from original set

Selection of tables, records, attributes

transformation

data cleaning


Modelling

Selection of modelling techniques

calibrating parameters

regular backtracking to adapt data to technology

(some techniques discussed further on)


Evaluation

Decide whether to use Data Mining results

Verification of all steps

Check whether business goals have been met


Deployment

Organisation & presentation of new insights

variable complexity: deliver a report, or implement software that allows the process to be repeated


Visual Data Mining methods

Pro: an image has a broader information bandwidth than text (cf. a picture is worth a thousand words)

Con: problems with representation of > 3 dimensions

not effective in case of color blindness

interpretation gives more information on subject than on object (stars, clouds, Hermann Rorschach test)


Visual Data Mining methods: Error detection

Visual Data Mining methods: Linkage analysis

Visual Data Mining methods: Conditional probabilities

Visual Data Mining methods: Landscapes

Visual Data Mining methods: Scatter plots

Non-visual data mining methods

Statistics - OLAP

descriptive: average, median, standard deviation, distribution

hypothesis testing: (observed differences)/(random variation)

discriminant analysis

predictive regression analysis: linear, non-linear

clustering

Neural networks

Decision trees and rules

Conceptual clustering

Association rules
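The descriptive statistics and the hypothesis-testing ratio listed above can be sketched in a few lines. This is a minimal illustration, not part of the course material: the two samples are invented, and Welch's t statistic is used here as one common instance of "(observed differences)/(random variation)".

```python
from math import sqrt
from statistics import mean, median, stdev, variance

def summarize(values):
    """Descriptive statistics named on the slide: average, median, standard deviation."""
    return {
        "average": mean(values),
        "median": median(values),
        "standard deviation": stdev(values),
    }

def welch_t(sample_a, sample_b):
    """(observed difference) / (random variation), here in the form of Welch's t statistic."""
    observed_difference = mean(sample_a) - mean(sample_b)
    random_variation = sqrt(
        variance(sample_a) / len(sample_a) + variance(sample_b) / len(sample_b)
    )
    return observed_difference / random_variation

# Hypothetical measurements, invented for illustration only.
group_a = [5, 6, 7, 8, 9]
group_b = [1, 2, 3, 4, 5]

print(summarize(group_a))
print(welch_t(group_a, group_b))
```

A large ratio suggests that the observed difference is unlikely to be explained by random variation alone; turning it into a p-value would additionally require the t distribution, which is left out of this sketch.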


(Non-)visual Data Mining methods: OLAP - Data cubes

[Figure: data cube with three dimensions: City (Leuven, NY, Tokyo, Casablanca, Rio), Date (1 to 7), and Product (Juice, Cola, Milk, Cream, Toothpaste, Soap, Pizza, Cheese). Sample cell values shown: 10, 50, 35, 60, 20, 15, 70, 25]

Fact data: sales volume in $100

Online analytical processing

Classical statistical methods

+database technology

real-time calculations

powerful visualisation methods
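As a rough sketch of what a data cube supports, the snippet below stores a few cells keyed by (city, date, product) and implements two typical OLAP operations, roll-up and slice. The cell positions are hypothetical (the slide's figure does not say which value belongs to which cell), and the function names `roll_up` and `slice_cube` are chosen for this example only.

```python
from collections import defaultdict

DIMENSIONS = ("city", "date", "product")

# Hypothetical cells: (city, date, product) -> sales volume in $100.
cube = {
    ("Leuven", 1, "Juice"): 10,
    ("Leuven", 2, "Cola"): 50,
    ("NY", 1, "Juice"): 35,
    ("NY", 2, "Milk"): 60,
    ("Tokyo", 1, "Cola"): 20,
}

def roll_up(cube, keep):
    """Sum the fact over every dimension that is not named in `keep`."""
    positions = [DIMENSIONS.index(name) for name in keep]
    totals = defaultdict(int)
    for key, value in cube.items():
        totals[tuple(key[i] for i in positions)] += value
    return dict(totals)

def slice_cube(cube, dimension, value):
    """Keep only the cells whose coordinate on `dimension` equals `value`."""
    position = DIMENSIONS.index(dimension)
    return {key: v for key, v in cube.items() if key[position] == value}

print(roll_up(cube, ["city"]))               # total sales per city
print(roll_up(cube, ["product"]))            # total sales per product
print(slice_cube(cube, "product", "Juice"))  # all Juice cells
```

Real OLAP systems precompute or index such aggregates so that they can be answered in real time; the dictionary here only illustrates the operations themselves.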


Non-Visual Data Mining methods: Regression

Non-visual Data Mining methods: Discriminant analysis

R.A. Fisher, 1936

discovers planes that separate classes
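A minimal sketch of a two-class Fisher discriminant in two dimensions, where the separating "plane" is a line. It computes the direction w = Sw⁻¹ (mean_A − mean_B) from the pooled within-class scatter Sw and places the threshold at the projected midpoint of the class means. The data points and the function names are invented for illustration.

```python
def fisher_discriminant(class_a, class_b):
    """Return (w, threshold) for a two-class Fisher discriminant on 2-D points."""
    def mean(points):
        n = len(points)
        return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

    def scatter(points, m):
        # Entries of the symmetric 2x2 scatter matrix: (sxx, sxy, syy).
        sxx = sum((p[0] - m[0]) ** 2 for p in points)
        sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in points)
        syy = sum((p[1] - m[1]) ** 2 for p in points)
        return sxx, sxy, syy

    mean_a, mean_b = mean(class_a), mean(class_b)
    scatter_a, scatter_b = scatter(class_a, mean_a), scatter(class_b, mean_b)
    sxx, sxy, syy = (scatter_a[i] + scatter_b[i] for i in range(3))

    det = sxx * syy - sxy * sxy  # assumed non-zero for this sketch
    dx, dy = mean_a[0] - mean_b[0], mean_a[1] - mean_b[1]
    # w = inverse([[sxx, sxy], [sxy, syy]]) applied to (dx, dy)
    w = ((syy * dx - sxy * dy) / det, (-sxy * dx + sxx * dy) / det)

    midpoint = ((mean_a[0] + mean_b[0]) / 2, (mean_a[1] + mean_b[1]) / 2)
    threshold = w[0] * midpoint[0] + w[1] * midpoint[1]
    return w, threshold

def classify(point, w, threshold):
    """Assign a point to class "A" or "B" by the side of the separating line it falls on."""
    return "A" if w[0] * point[0] + w[1] * point[1] > threshold else "B"

# Hypothetical training points.
class_a = [(4, 5), (5, 4), (5, 6), (6, 5)]
class_b = [(0, 1), (1, 0), (1, 2), (2, 1)]

w, threshold = fisher_discriminant(class_a, class_b)
print(w, threshold)
print(classify((5, 5), w, threshold), classify((1, 1), w, threshold))
```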


Non-Visual Data Mining methods: Neural Networks

Represent functions with output a discrete value, a real value, or a vector

Neurobiological motivation

Network parameters tuned on the basis of input-output examples (backpropagation)

e.g. input from sensors: camera (face recognition), microphone (speech recognition)


Non-Visual Data Mining methods: Decision trees

Attribute selection: information gain

“how well does an attribute distribute the data according to their target class”

maximal reduction of Entropy = - pM log2 pM - pF log2 pF, where pM and pF are the proportions of examples in the two target classes (M and F)
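The entropy formula and the idea of information gain can be made concrete with a small sketch. The four records below are hypothetical and the attribute names (`frame`, `color`, `sex`) are chosen only to echo the car-buyer example on the neighbouring slides.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy in bits: minus the sum over classes c of p_c * log2(p_c)."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())

def information_gain(rows, attribute, target):
    """Reduction in entropy of `target` obtained by splitting `rows` on `attribute`."""
    before = entropy([row[target] for row in rows])
    after = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row[target] for row in rows if row[attribute] == value]
        after += len(subset) / len(rows) * entropy(subset)
    return before - after

# Hypothetical toy data, invented for illustration only.
buyers = [
    {"frame": "2-Door", "color": "Red", "sex": "M"},
    {"frame": "2-Door", "color": "Blue", "sex": "M"},
    {"frame": "4-Door", "color": "Red", "sex": "F"},
    {"frame": "4-Door", "color": "Blue", "sex": "F"},
]

print(entropy([b["sex"] for b in buyers]))       # 1.0 (two equally frequent classes)
print(information_gain(buyers, "frame", "sex"))  # 1.0 (frame separates the classes perfectly)
print(information_gain(buyers, "color", "sex"))  # 0.0 (color says nothing about the class)
```

A decision tree learner that selects attributes by information gain would pick `frame` for the first split in this toy data set.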


Non-Visual Data Mining methods: Decision rules

IF Frame = 2-Door AND Engine V6 AND Age < 50 AND Cost > 30K AND Color = Red

THEN buyer is highly likely to be male
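The rule above is simply a conjunction of attribute tests. A minimal sketch, assuming that the slide's "Engine V6" is an equality test and that a sale is stored as a dictionary with the hypothetical keys shown below:

```python
def rule_fires(sale):
    """Return True when every condition of the rule holds for one sales record."""
    return (
        sale["frame"] == "2-Door"
        and sale["engine"] == "V6"   # assumption: "Engine V6" read as Engine = V6
        and sale["age"] < 50
        and sale["cost"] > 30_000
        and sale["color"] == "Red"
    )

# Hypothetical records, invented for illustration only.
sale_1 = {"frame": "2-Door", "engine": "V6", "age": 35, "cost": 42_000, "color": "Red"}
sale_2 = {"frame": "4-Door", "engine": "V6", "age": 35, "cost": 42_000, "color": "Red"}

for sale in (sale_1, sale_2):
    prediction = "highly likely male" if rule_fires(sale) else "no prediction"
    print(prediction)
```

Note that the rule makes no statement about records it does not cover; a rule set normally needs further rules or a default class for those.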


Non-Visual Data Mining methods

Clustering

Eisen et al, PNAS 1998

[Figure: clusters labelled Cholesterol biosynthesis, Cell cycle, Early response, Signaling and angiogenesis, Wound healing]


Non-Visual Data Mining methods: Conceptual clustering

Groups examples and provides description of each group

: all examples

A : Age = -20

B : Age = 20-40

b1 : Age = 20-40 and Frame = 2-Door

b2 : Age = 20-40 and Frame = 4-Door

C : Age = 40-60

D : Age = +60

d1 : Age = +60 and Frame = 2-Door

d2 : Age = +60 and Frame = 4-Door

[Figure: diagram of the groups A, B (containing b1 and b2), C, and D (containing d1 and d2)]


Non-Visual Data Mining methods: Association rules

item sets

Wine and Pizza: 60 %

Wine, Pizza, Floppy, and Cheese: 40 %

association rule

IF Wine and Pizza THEN Floppy and Cheese

frequency: 40 %

accuracy: 40% / 60% ≈ 67%

IF-THEN rules show relationships

e.g. Which products are bought together?
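The frequency and accuracy of an association rule can be computed directly from a list of transactions. The sketch below uses five hypothetical shopping baskets, chosen so that the item-set frequencies match the 60 % and 40 % on the slide; the function names follow the slide's terms (elsewhere these measures are usually called support and confidence).

```python
def frequency(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= set(t) for t in transactions) / len(transactions)

def accuracy(transactions, body, head):
    """Frequency of body and head together, divided by the frequency of the body."""
    return frequency(transactions, set(body) | set(head)) / frequency(transactions, body)

# Hypothetical baskets, invented for illustration only.
baskets = [
    {"Wine", "Pizza", "Floppy", "Cheese"},
    {"Wine", "Pizza", "Floppy", "Cheese"},
    {"Wine", "Pizza"},
    {"Milk"},
    {"Soap", "Cheese"},
]

body, head = {"Wine", "Pizza"}, {"Floppy", "Cheese"}
print(frequency(baskets, body))         # 0.6
print(frequency(baskets, body | head))  # 0.4
print(accuracy(baskets, body, head))    # roughly 0.67
```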


Evaluation: pitfalls - Post hoc ergo propter hoc

Everyone who drank Stella in the year 1743 is now dead.

Therefore, Stella is fatal.


Evaluation: pitfalls - Correlation does not imply Causality

Palm size correlates with your life expectancy

The larger your palm, the shorter you will live, on average.

Women have smaller palms

and live 6 years longer on average

Why?

!actions inspired by data mining results!
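The palm-size example can be reproduced with a few invented numbers. In the hypothetical data below, palm size and lifespan are uncorrelated within each sex, yet the pooled data show a clear negative correlation, because sex influences both variables. All values are made up for illustration; only the 6-year difference in average lifespan is taken from the slide.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread = sqrt(
        sum((x - mean_x) ** 2 for x in xs) * sum((y - mean_y) ** 2 for y in ys)
    )
    return covariance / spread

# Hypothetical people: (sex, palm size in cm, lifespan in years).
people = [
    ("F", 16, 80), ("F", 18, 80), ("F", 16, 84), ("F", 18, 84),
    ("M", 19, 74), ("M", 21, 74), ("M", 19, 78), ("M", 21, 78),
]

def correlation_for(group):
    """Correlation between palm size and lifespan within one group of people."""
    return pearson([p[1] for p in group], [p[2] for p in group])

women = [p for p in people if p[0] == "F"]
men = [p for p in people if p[0] == "M"]

print(correlation_for(people))  # negative: larger palms go with shorter lives overall
print(correlation_for(women))   # no correlation among women
print(correlation_for(men))     # no correlation among men
```

Acting on the pooled correlation (for example, trying to shrink palms) would be pointless here, which is the warning the slide attaches to actions inspired by data mining results.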


Evaluation: pitfalls - Hypothesis validation

descriptive statistics: 1 hypothesis

data mining: 1 hypothesis-SPACE

much higher probability of random relationships

validation on separate data set required
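A small simulation illustrates why searching a whole hypothesis space calls for validation on separate data. The sketch below generates a random binary target and many purely random binary attributes, picks the attribute that agrees best with the target on a small training set, and then measures the same attribute on a separate validation set. All sizes, the seed, and the function name are arbitrary choices for this illustration.

```python
import random

def best_random_attribute(n_train=20, n_valid=1000, n_attributes=200, seed=0):
    """Search random attributes for the best training accuracy.

    Returns (training accuracy, validation accuracy) of the selected attribute.
    """
    rng = random.Random(seed)
    n = n_train + n_valid
    target = [rng.randint(0, 1) for _ in range(n)]
    best_train, best_valid = 0.0, 0.0
    for _ in range(n_attributes):
        attribute = [rng.randint(0, 1) for _ in range(n)]
        hits = [a == t for a, t in zip(attribute, target)]
        train_accuracy = sum(hits[:n_train]) / n_train
        valid_accuracy = sum(hits[n_train:]) / n_valid
        if train_accuracy > best_train:
            best_train, best_valid = train_accuracy, valid_accuracy
    return best_train, best_valid

train_accuracy, valid_accuracy = best_random_attribute()
print(f"best of 200 random attributes on training data: {train_accuracy:.2f}")
print(f"same attribute on separate validation data:     {valid_accuracy:.2f}")
```

Because every attribute is random, no attribute has real predictive value. The selected attribute typically looks good on the training data only because it was picked from many candidates, while its accuracy on the validation data is expected to stay close to chance level.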