Simulations of Memory Hierarchy LAB 2: CACHE LAB.

Uploaded by nickolas-wheeler

Category

Documents

-

view

224 -

download

1

Transcript of Simulations of Memory Hierarchy LAB 2: CACHE LAB.

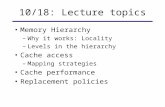

Simulations of Memory Hierarchy

LAB 2: CACHE LAB

OVERVIEW

• Objectives

• Cache Set-Up

• Command line parsing

• Least Recently Used (LRU)

• Matrix Transposition

• Cache-Friendly Code

OBJECTIVE

• There are two parts to this lab:

• Part A: Cache Simulator

• Simulate a cache table using the LRU algorithm

• Part B: Optimizing Matrix Transpose

• Write “cache-friendly” code that minimizes cache misses in the implementation of a matrix transpose function

• When submitting your lab, please submit the handin.tar file as described in the instructions.

MEMORY HIERARCHY

• Pick your poison: smaller, faster, and costlier, or larger, slower, and cheaper

CACHE ADDRESSING

• X-bit memory addresses (in Part A, X <= 64 bits)

• Block offset: b bits

• Set index: s bits

• Tag bits: X – b – s

• Cache is a collection of S=2^s cache sets

• Cache set is a collection of E cache lines

• E is the associativity of the cache

• If E=1, the cache is called “direct-mapped”

• Each cache line stores a block of B=2^b bytes of data

ADDRESS ANATOMY
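
The split described under CACHE ADDRESSING can be sketched in C as below. This is only an illustration, not the lab's required code: the names `addr_parts` and `split_address` are hypothetical, and the sketch assumes 64-bit addresses with s + b < 64 so that every shift is well defined.

```c
#include <stdint.h>

/* Hypothetical helper type: the three fields of one memory address. */
struct addr_parts {
    uint64_t tag;     /* upper X - s - b bits */
    uint64_t set;     /* middle s bits        */
    uint64_t offset;  /* lowest b bits        */
};

/* Split addr into tag / set index / block offset.
 * Assumes s + b < 64 so that no shift reaches the full word width. */
static struct addr_parts split_address(uint64_t addr, unsigned s, unsigned b)
{
    struct addr_parts p;
    p.offset = addr & ((UINT64_C(1) << b) - 1);         /* low b bits  */
    p.set    = (addr >> b) & ((UINT64_C(1) << s) - 1);  /* next s bits */
    p.tag    = addr >> (s + b);                         /* the rest    */
    return p;
}
```

For example, with s = 4 and b = 4, the address 0x12345678 splits into offset 0x8, set index 0x7, and tag 0x123456.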

CACHE TABLE BASICS

• Parameters:

• Number of sets (S)

• Block size in bytes (B)

• Number of lines per set (E)

• Note that the total data capacity of this cache would be S*B*E bytes

• Blocks are the fundamental units of the cache
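
One possible way to represent such a cache table in a simulator is sketched below. The struct layout and the names `cache_line`, `cache_capacity`, and `cache_alloc` are assumptions made for illustration. Since the simulator only counts hits and misses, this sketch stores no block data, only a valid flag, a tag, and a time stamp.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical line record: bookkeeping only, no data block. */
struct cache_line {
    int      valid;      /* 0 until the line is first filled */
    uint64_t tag;
    uint64_t last_used;  /* logical time of the last access  */
};

/* Total data capacity in bytes: S * E * B, with S = 2^s and B = 2^b. */
static uint64_t cache_capacity(unsigned s, unsigned E, unsigned b)
{
    return (UINT64_C(1) << s) * E * (UINT64_C(1) << b);
}

/* Allocate S * E zeroed lines (so every line starts invalid).
 * Set i occupies lines[i * E] through lines[i * E + E - 1].
 * Returns NULL if the allocation fails; the caller frees the result. */
static struct cache_line *cache_alloc(unsigned s, unsigned E)
{
    size_t num_sets = (size_t)1 << s;
    return calloc(num_sets * E, sizeof(struct cache_line));
}
```

As a check on the capacity formula, s = 5, E = 2, b = 6 gives 32 sets * 2 lines * 64 bytes = 4096 bytes.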

CACHE TABLE CORRESPONDENCE WITH ADDRESS

Example for 32 bit address

CACHE SET LOOK-UP

• Determine the set index and the tag bits based on the memory address

• Locate the corresponding cache set and determine whether or not there exists a valid cache line with a matching tag

• If a cache miss occurs:

• If there is an empty cache line, utilize it

• If the set is full then a cache line must be evicted
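
The look-up steps above can be sketched as a function that classifies one access to a single set. The names `classify_access` and `access_result` are hypothetical, and the sketch only decides which of the three cases applies; choosing the line to evict is left to the replacement policy.

```c
#include <stdint.h>

/* Hypothetical minimal line record for this sketch. */
struct cache_line {
    int      valid;
    uint64_t tag;
};

enum access_result {
    ACCESS_HIT,         /* a valid line with a matching tag exists */
    ACCESS_MISS,        /* miss, but an empty line can be used     */
    ACCESS_MISS_EVICT   /* miss and the set is full                */
};

/* Classify an access with the given tag to one set of E lines. */
static enum access_result classify_access(const struct cache_line *set,
                                          unsigned E, uint64_t tag)
{
    int has_empty = 0;
    for (unsigned i = 0; i < E; i++) {
        if (!set[i].valid)
            has_empty = 1;            /* remember that a free line exists */
        else if (set[i].tag == tag)
            return ACCESS_HIT;
    }
    return has_empty ? ACCESS_MISS : ACCESS_MISS_EVICT;
}
```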

TYPES OF CACHE MISSES

• Compulsory Miss:

• First access to a block has to be a miss

• Conflict Miss:

• Level k cache is large enough, but multiple data objects all map to the same level k block

• Capacity Miss:

• Occurs when the working set of blocks (blocks of memory being used) is larger than the cache

PART A: CACHE SIMULATION

YOUR OWN CACHE SIMULATOR

• NOT a real cache

• Block offsets are NOT used by the simulator, but they are important in understanding the concept of a cache

• s, b, and E given at runtime

FUNCTIONS TO USE FOR COMMAND LINE PARSING

• int getopt(int argc, char *const *argv, const char *options)

• See: http://www.gnu.org/software/libc/manual/html_node/Example-of-Getopt.html#Example-of-Getopt

• long long int strtoll(const char* str, char** endptr, int base)

• See: http://www.cplusplus.com/reference/cstdlib/strtoll/
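
A minimal sketch of how these two functions might be combined is shown below. The option letters `-s`, `-E`, and `-b`, the `sim_config` struct, and the `parse_args` helper are all assumptions made for illustration; check the lab handout for the exact flags your simulator must accept.

```c
#define _POSIX_C_SOURCE 200809L  /* make getopt/optarg visible in strict modes */
#include <stdlib.h>
#include <unistd.h>

/* Hypothetical container for the three run-time parameters. */
struct sim_config {
    long long s;  /* number of set index bits    */
    long long E;  /* lines per set               */
    long long b;  /* number of block offset bits */
};

/* Parse "-s <n> -E <n> -b <n>" (assumed flags).
 * Returns 0 on success, -1 on a bad, unknown, or missing option. */
static int parse_args(int argc, char *const argv[], struct sim_config *cfg)
{
    int opt;
    cfg->s = cfg->E = cfg->b = -1;  /* -1 marks "not given" */

    while ((opt = getopt(argc, argv, "s:E:b:")) != -1) {
        if (opt != 's' && opt != 'E' && opt != 'b')
            return -1;              /* getopt returned '?' */

        char *end = NULL;
        long long value = strtoll(optarg, &end, 10);
        if (end == optarg || *end != '\0' || value < 0)
            return -1;              /* not a clean non-negative number */

        switch (opt) {
        case 's': cfg->s = value; break;
        case 'E': cfg->E = value; break;
        case 'b': cfg->b = value; break;
        }
    }
    return (cfg->s >= 0 && cfg->E > 0 && cfg->b >= 0) ? 0 : -1;
}
```

The colon after each letter in "s:E:b:" tells getopt that the option takes an argument, which it makes available through optarg.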

LEAST RECENTLY USED (LRU) ALGORITHM

• A least recently used algorithm should be used to determine which cache line to evict

• Each cache line will need some sort of “time” field which should be updated each time that cache line is referenced

• If a cache miss occurs in a full cache set, the cache line with the oldest time field (the least recently used line) should be evicted
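
One way to realize the “time” field is a logical counter that the caller increments on every access. The sketch below makes that assumption; the `lru_access` function, the `last_used` field, and the `evicted` out-parameter are hypothetical names, and this is one possible design rather than the required one.

```c
#include <stdint.h>

/* Hypothetical line record with a logical time stamp. */
struct cache_line {
    int      valid;
    uint64_t tag;
    uint64_t last_used;
};

/* Access `tag` in one set of E lines (E >= 1) at logical time `now`.
 * Returns 1 on a hit and 0 on a miss. On a miss, *evicted is set to 1
 * if a valid line had to be replaced and to 0 if an empty line was used. */
static int lru_access(struct cache_line *set, unsigned E,
                      uint64_t tag, uint64_t now, int *evicted)
{
    unsigned victim = 0;
    *evicted = 0;

    /* Hit: refresh the time stamp of the matching line. */
    for (unsigned i = 0; i < E; i++) {
        if (set[i].valid && set[i].tag == tag) {
            set[i].last_used = now;
            return 1;
        }
    }

    /* Miss: take the first empty line, else the smallest time stamp. */
    for (unsigned i = 0; i < E; i++) {
        if (!set[i].valid) {
            victim = i;
            break;
        }
        if (set[i].last_used < set[victim].last_used)
            victim = i;
    }

    *evicted = set[victim].valid;  /* a valid victim means a real eviction */
    set[victim].valid = 1;
    set[victim].tag = tag;
    set[victim].last_used = now;
    return 0;
}
```

In a two-line set, the access sequence A, B, A, C evicts B: the second access to A refreshes its time stamp, so B holds the oldest one when C arrives.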

PART B: OPTIMIZING MATRIX TRANSPOSE

WHAT IS A MATRIX TRANSPOSITION?

• The transpose of a matrix A is denoted as A^T

• The rows of A^T are the columns of A, and the columns of A^T are the rows of A

• Example:

GENERAL MATRIX TRANSPOSITION
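
A straightforward transpose can be sketched as below. The function name and signature are assumptions made for illustration (A has N rows and M columns, B has M rows and N columns, passed as C99 variable-length array parameters); the lab handout defines the signature you must actually use.

```c
/* Simple transpose: B (M rows, N columns) receives the transpose of
 * A (N rows, M columns). Goes index by index, row by row. */
static void transpose_naive(int M, int N, int A[N][M], int B[M][N])
{
    for (int i = 0; i < N; i++) {
        for (int j = 0; j < M; j++) {
            B[j][i] = A[i][j];  /* row i of A becomes column i of B */
        }
    }
}
```

For the 2 x 3 matrix with rows (1, 2, 3) and (4, 5, 6), the result is the 3 x 2 matrix with rows (1, 4), (2, 5), and (3, 6). The reads from A walk along a row, but the writes to B jump a whole row of B on every step.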

CACHE-FRIENDLY CODE

• In order to have fewer cache misses, you must make good use of:

• Temporal locality: reuse the current cache block if possible (avoid conflict misses [thrashing])

• Spatial locality: reference the data of close storage locations

• Tips:

• Cache blocking

• Optimized access patterns

• Your code should look ugly if done correctly

CACHE BLOCKING

• Partition the matrix in question into sub-matrices

• Divide the larger problem into smaller sub-problems

• Main idea:

• Iterate over blocks as you perform the transpose as opposed to the simplistic algorithm which goes index by index, row by row

• Determining the size of these blocks will take some amount of thought and experimentation
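
The blocking idea can be sketched as follows. This is a generic illustration, not a tuned solution: the function name, the signature, and the `bsize` parameter (assumed to be at least 1) are hypothetical, and a good block size still has to be found by experiment for each matrix size and cache configuration.

```c
/* Blocked transpose: B (M rows, N columns) receives the transpose of
 * A (N rows, M columns), processed one bsize x bsize tile at a time.
 * Assumes bsize >= 1; partial tiles at the edges are handled by the
 * extra bounds checks in the inner loops. */
static void transpose_blocked(int M, int N, int A[N][M], int B[M][N],
                              int bsize)
{
    for (int ii = 0; ii < N; ii += bsize) {          /* tile row start    */
        for (int jj = 0; jj < M; jj += bsize) {      /* tile column start */
            for (int i = ii; i < N && i < ii + bsize; i++) {
                for (int j = jj; j < M && j < jj + bsize; j++) {
                    B[j][i] = A[i][j];
                }
            }
        }
    }
}
```

The two outer loops pick a tile and the two inner loops transpose only that tile, so the rows of A and B touched at any one time are limited to a small working set that has a better chance of staying in the cache.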

QUESTIONS TO PONDER

• What would happen if instead of accessing each index in row order you alternated with jumping from row to row within the same column?

• What would happen if you declared only 4 local variables as opposed to 12 local variables?

• Is it possible to get rid of the local variables altogether?

• What happens when accessing elements along the diagonal?

• What happens when the program is run in a different directory?

(XKCD)