Simulation-based optimization: Upper Confidence Tree and Direct Policy Search

-

Upload

olivier-teytaud -

Category

Education

-

view

640 -

download

1

Transcript of Simulation-based optimization: Upper Confidence Tree and Direct Policy Search

Simulations for combining heuristics and consistent algorithms

Applications to Minesweeper, the game of Goand Power Grids

O. Buffet, A. Coutoux, H. Doghmen,W. Lin, O. Teytaud,& many others

In France (RTE+Luc Lasne)

Beautiful spatially

distributed problem

Short term (~10s): dispatchingReal-time control, humans in the loop

Days, weeks: combinatorial optimization

Years: hydroelectric stocksStochasticity (provides price of water for week-ahead)

50 years: investmentsExpensive optimization of strategies (parallel)

Uncertainties: Multiobjective (many!) ? Worst-case ?

Goals / tools

Optimizing investmentsFor the next 50 years

In Europe / North Africa

Taking into account power plants / networks

Multi-objective (scenarios), visualization

with:

Collaboration with a company (data, models)

3 ph.D. students full time

dedicated machine 500/1000 cores

Main ideas

We like simulation-based optimizersFor analyzing simulations

For bilevel optimization (anytime criterion: smooth performance improvement)

Required knowledge is a simulator:No dependency on additional knowledge

Simplified model (linearized...) not necessary

But we want to be able to plug expertise in terms of strategy (e.g. handcrafted approximate policy)

Main ideas

We like simulation-based optimizersFor analyzing simulations

For bilevel optimization (anytime criterion: smooth performance improvement)

All we want as required knowledge is a simulator:No dependency on additional knowledge

No simplified model

But we want to be able to plug expertise in terms of strategy (e.g. handcrafted approximate policyTools:

Upper Confidence Tree = Adaptive Simulator, good for combinatorial aspects

Direct Policy Search = Adaptive Simulator, good for long term effects

A great challenge: MineSweeper.

- looks easy- in fact, not easy: many myopic (one-step-ahead)approaches.- partially observable

1. Rules of MineSweeper

2. State of the art

3. The CSP approach

4. The UCT approach

5. The best of both worlds

RULES

At thebeginning,alllocationsareCovered(unkwown).

I playhere!

Good news!

No mine intheneighborhood!

I can clickall theneighbours.

I have 3uncoveredneighbors,and I have 3mines in theneighborhood==> 3 flags!

I knowit's amine,so I puta flag!

No info !

I play here and I lose...

The mostsuccessfulgame ever!

Who in thisroom neverplayedMine-Sweeper ?

1. Rules of MineSweeper

2. State of the art

3. The CSP approach

4. The UCT approach

5. The best of both worlds

Do youthink it'seasy ?(10 mines)

MineSweeperis not simple.

What isthe optimalmove ?

What isthe optimalmove ?

Remark: the question makes sense, withoutKnowing the history.You don't need the history for playing optimaly.==> (this fact is mathematically non trivial!)

What isthe optimalmove ?

This one is easy.

Both remaining locations win with proba 50%.

Moredifficult!

Whichmove is optimal ?

Here, theclassicalapproach(CSP)is wrong.

Probabilityof a mine ?- Top: - Middle: - Bottom:

Probabilityof a mine ?- Top: 33%- Middle: - Bottom:

Probabilityof a mine ?- Top: 33%- Middle: 33%- Bottom:

Probabilityof a mine ?- Top: 33%- Middle: 33%- Bottom: 33%

Probabilityof a mine ?- Top: 33%- Middle: 33%- Bottom: 33%

==> so all moves equivalent ?

Probabilityof a mine ?- Top: 33%- Middle: 33%- Bottom: 33%

==> so all moves equivalent ?==> NOOOOO!!!

Probabilityof a mine ?- Top: 33%- Middle: 33%- Bottom: 33%

Top or bottom: 66% of win!

Middle: 33%!

The myopic (one-step ahead)approach playsrandomly.

The middle is abad move!

Even with sameproba of mine,some moves arebetter than others!

State of the art:- solved in 4x4- NP-complete- Constraint Satisfaction Problem approach: = Find the location which is less likelyto be a mine, play there. ==> 80% success beginner (9x9, 10 mines) ==> 45% success intermediate (16x16, 40 mines) ==> 34% success expert (30x40, 99 mines)

1. Rules of MineSweeper

2. State of the art

3. The CSP approach(and other old known methods)

4. The UCT approach

5. The best of both worlds

- Exact MDP: very expensive. 4x4 solved.- Single Point Strategy (SPS): simple local solving- CSP (constraint satisf. problem): the main approach. - (unknown) state: x(i) = 1 if there is a mine at location i - each visible location is a constraint: If location 15 is labelled 4, then the constraint is x(04)+x(05)+x(06) +x(14)+ x(16) +x(24)+x(25)+x(26) = 4. - find all solutions x1, x2, x3,...,xN - P(mine in j) = (sumi Xij ) / N S'

Example: given the state below, and the action top left, what are the possible next states ?

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

We published a version of UCTfor MineSweeper in which this was implemented usingthe rejection method only.

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

Rejection algorithm:1- randomly draw the mines2- if it's ok, return the new observation3- otherwise, go back to 1.

What do I need for implementing UCT ?

A complete generative model.Given a state and an action, I must be able to simulate possible transitions.

State S, Action a:(S,a) ==> S'

Example: given the state below, and the action top left, what are the possible next states ?

It is mathematically ok, but it is too slow.Then, we used a weak CSP implementation.Still too slow.Now a reasonably fast implementation, withLegendre et al heuristic.

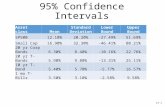

EXPERIMENTAL RESULTSHugecomputationtimeOur results(total = a few days)10 000 UCT-simulationsper move

CONCLUSIONS: amethodology for sequentialdecision making

- When you have a myopic solver (i.e. which neglects long term effects, as too often in industry!) ==> improve it with heuristics (as Legendre et al) ==> combine with UCT (as we did) ==> significant improvements - We have similar experiments onindustrial testbeds

Main ideas

We like simulation-based optimizersFor analyzing simulations

For bilevel optimization (anytime criterion: smooth performance improvement)

All we want as required knowledge is a simulator:No dependency on additional knowledge

No simplified model

But we want to be able to plug expertise in terms of strategy (e.g. handcrafted approximate policyTools:

Upper Confidence Tree = Adaptive Simulator, good for combinatorial aspects

Direct Policy Search = Adaptive Simulator, good for long term effects

What is Direct Policy Search ?

I have a parametric policy decision = p(w,s) Inputs: parameter w, state s

Output: decision p(w,s)

E.g. p(w,s) = w.s (scalar product)

p(w,s) = W0 + W1 x tanh(W2 x s+W3) (neural network)

I have a simulator cost = simulate(p, transition):Inputs = policy p , transition function

Output = cost (possibly noisy)

Principle:state = initial state

While (state not final)decision=p(state)

state=transition(state,decision)

Direct Policy Search( transition , policy p(.,.) ):w = argmin simulate( p(w,.) ) // with your favorite optimization algorithm

return p(w,.)

A big part ofour work.

Example of policy

p(w,state) = decision such that Alpha = W0+W1tanh(W2.state)+W3

Week-Ahead-Reward(decision) + Alpha.Stock(decision) is maximum

==> if linear transition, compliant with huge action dimension ==> non-linearities handled by the neural net

One or two rivers (7 stocks)

Network of rivers (7 rivers)

Investment problem

Summary

Two simulation-based tools for Sequential Decision Making:UCT = a nice tool for short term combinatorial effect

DPS = a stable tool for long term effects

Both:

Are anytime

Provide simulation results

Can take into account non-linear effects

High-dimension of the state space

Thanks for your attention!

9 Mines.What is theoptimal move ?

Click to edit the title text format

Click to edit the outline text formatSecond Outline LevelThird Outline LevelFourth Outline LevelFifth Outline LevelSixth Outline LevelSeventh Outline LevelEighth Outline LevelNinth Outline Level