SCALE 15x Minimizing PostgreSQL Major Version Upgrade Downtime


Minimizing Major Version Upgrade Downtime Using Slony!

Jeff Frost | SCALE | 2017/03/03

Slony = plural for (many) elephants

Slonik = little elephant (in a cute way)

Slon = 1 elephant

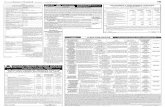

dump / restore

pg_upgrade

logical replication

Major Version Upgrade Methods

pg_dump mydb | psql -h mynewdbserver mydb

pg_dump -Fc -f mydb.dmp mydb && rsync mydb.dmp mynewdbserver:/tmp

pg_restore -j 8 -d mydb mydb.dmp

Probably fine for DBs under 100GB

Dump / Restore

The old fashioned, tried/true method. It always works!

Depending on your maintenance window requirements and disk subsystem

But, you might end up like this poor fella..

ZZZZzzzzzzzz.

A good option if you need to do the upgrade in place

A good option if you are missing primary keys (gasp!) on larger tables

It's a one-way trip! (You tested the new PostgreSQL version with your workload, right?)

pg_upgrade

Bucardo - https://bucardo.org/wiki/Bucardo

Londiste - http://pgfoundry.org/projects/skytools

Slony! - http://www.slony.info/

Logical Replication

Graceful Switchover

**AND**

Graceful Switchback!!

Why Slony?

What do I mean by this?

In Slony, a switchover reverses the direction of the subscription.

Trigger-based logical replication

Requires Primary Keys on all replicated tables

Kicks off an initial sync

Triggers store data modification statements in log tables for later replay

Slony Trivia: Slony is Russian for a Group of Elephants

Slony High Level

Cluster

Node

Set

Origin

Provider

Subscriber

Slony Basic Terminology

A named set of PostgreSQL database instances

cluster name = migration

_migration schema created in PostgreSQL DBs that are part of the cluster

Slony Cluster

A database that is part of a cluster

Ultimately defined by the CONNINFO string

'dbname=mydb host=myserver user=slony'

'dbname=mydb host=mynewserver user=slony'

'dbname=mydb host=myserver user=slony port = 5433'

Slony Node

A set of tables and sequences that are to be replicated

You can have multiple sets in a cluster

We're not going to do that today

Slony Set

Origin is the read/write master

Origin is also the first Provider

Subscriber nodes receive their data from Providers

For the purpose of this tutorial, we will have an Origin node which is the only Provider node

Slony Origin/Provider/Subscriber

Debian Derivatives

apt.postgresql.org

postgresql-9.5-slony1-2

slony1-2-bin

Redhat Derivatives

yum.postgresql.org

slony1-95

Slony Installation

wget http://www.slony.info/downloads/2.2/source/slony1-2.2.5.tar.bz2

tar xvfj slony1-2.2.5.tar.bz2

cd slony1-2.2.5

./configure && make && sudo make install

Slony Installation

Don't make any schema changes while you've got Slony running

One item of Note!

It's going to be very different from what you're used to, and it can break replication.

So just put a freeze on DDL changes until the migration is complete.

Make a schema-only copy of the DB

Our first slonik script

Preamble

Cluster Initialization

Node Path Info

Set Creation

Table Addition

Sequence Addition

Subscribe

Kick off replication!

Let's get started!

pg_dump --schema-only mgd | psql --host db2.jefftest mgd

Schema Only Copy of the DB

Let's Not Do That!

Who Wants to See a LIVE Demo?

Schema Only Copy of the DB

Slonik is the Slony command processor

You call it just like any other scripting language with a shebang at the top:

#!/usr/bin/slonik

Trivia: Slonik means little elephant in Russian

Our First Slonik Script!

Interprets the Slony configuration and command scripting language

#!/usr/bin/slonik

CLUSTER NAME = migration;

NODE 1 ADMIN CONNINFO='host=db1.jefftest dbname=mgd user=slony port=5432';

NODE 2 ADMIN CONNINFO='host=db2.jefftest dbname=mgd user=slony port=5432';

Preamble

Define the cluster name

Admin Conninfo is how the slonik interpreter will connect to the nodes.

INIT CLUSTER (id = 1, comment = 'db1.jefftest');

Initialize the Cluster

Creates the _migration Slony schema in the primary DB

Prefer to make the comment the name of the DB server

Might not make sense if you're replicating to a DB on the same server

In that case, maybe use something like db1.jefftest.old and db1.jefftest.new

INIT CLUSTER (id = 1, comment = 'db1.jefftest');

This becomes the id of the Origin Node.

Initialize the Cluster

STORE NODE (id = 2, comment = 'db2.jefftest', event node = 1);

Initialize Node 2

STORE PATH (server = 1, client = 2, conninfo = 'host=db1.jefftest dbname=mgd user=slony port=5432');

STORE PATH (server = 2, client = 1, conninfo = 'host=db2.jefftest dbname=mgd user=slony port=5432');

Setup the PATHs

This is how the slon daemons will connect to each node. This is usually the same as the ADMIN CONNINFO, but not necessarily.

CREATE SET (id = 1, origin = 1, comment = 'all tables and sequences');

Create the Set

CREATE SET (id = 1, origin = 1, comment = 'all tables and sequences');

ID of the Origin node.

Create the Set

Got Primary Keys on all your tables?

SET ADD TABLE (SET id = 1, origin = 1, TABLES='public\\.*');

SET ADD TABLE (SET id = 1, origin = 1, TABLES='mgd\\.*');

Add Tables to the Set!

Don't do this:

SET ADD TABLE (SET id = 1, origin = 1, TABLES='*');

Add Tables to the Set!

It'll subscribe the Slony schema as well, and chaos will ensue after the initial sync.

Don't have primary keys on all your tables:

SET ADD TABLE (SET id = 1, origin = 1, FULL QUALIFIED NAME = 'mgd.acc_accession', comment='mgd.acc_accession TABLE');

SET ADD TABLE (SET id = 1, origin = 1, FULL QUALIFIED NAME = 'mgd.acc_accessionmax', comment='mgd.acc_accessionmax TABLE');

SET ADD TABLE (SET id = 1, origin = 1, FULL QUALIFIED NAME = 'mgd.acc_accessionreference', comment='mgd.acc_accessionreference TABLE');

Add Tables to the Set!

SQL to the Rescue:

SELECT 'SET ADD TABLE (SET id = 1, origin = 1, FULL QUALIFIED NAME = ''' || nspname || '.' || relname || ''', comment=''' || nspname || '.' || relname || ' TABLE'');' FROM pg_class JOIN pg_namespace ON relnamespace = pg_namespace.oid WHERE relkind = 'r' AND relhaspkey AND nspname NOT IN ('information_schema', 'pg_catalog');

Add Tables to the Set!
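The same statements can also be generated outside the database. The following is a minimal sketch, assuming a list of schema-qualified table names (one per line) is already available, for example from a query run through psql. `emit_set_add_table` is a hypothetical helper name, and set id 1 and origin 1 simply mirror the values used in this talk.

```shell
# Hypothetical helper: read schema-qualified table names (one per line) on
# stdin and print one SET ADD TABLE statement per table for set 1, origin 1.
emit_set_add_table() {
    while IFS= read -r tbl; do
        # Skip blank lines so stray whitespace in the input is harmless.
        [ -n "$tbl" ] || continue
        printf "SET ADD TABLE (SET id = 1, origin = 1, FULL QUALIFIED NAME = '%s', comment='%s TABLE');\n" "$tbl" "$tbl"
    done
}
```

Piping a table list into the function and appending the output to the slonik script keeps the list reviewable before anything touches the cluster. Table names containing single quotes are not handled in this sketch.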

What about the tables that don't have pkeys?

Add primary keys if you can

If not, dump/restore just those tables during the maintenance window

Add Tables to the Set!

* Make sure you script up *and test* that dump / restore to minimize the downtime. Also, don't forget to script up dump/restoring them in the opposite direction in case you need to revert.
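Before deciding which tables need the dump / restore treatment, it helps to list the ones that lack a primary key. A minimal sketch follows, assuming the standard `pg_class`, `pg_namespace` and `pg_constraint` catalogs; `missing_pk_sql` is a hypothetical helper that only prints the query, so nothing runs until its output is piped to psql.

```shell
# Hypothetical helper: print a query that lists ordinary tables (relkind 'r')
# outside the system schemas that have no primary key constraint.
missing_pk_sql() {
    cat <<'SQL'
SELECT n.nspname || '.' || c.relname
FROM pg_class c
JOIN pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind = 'r'
  AND n.nspname NOT IN ('information_schema', 'pg_catalog')
  AND NOT EXISTS (
        SELECT 1 FROM pg_constraint con
        WHERE con.conrelid = c.oid AND con.contype = 'p')
ORDER BY 1;
SQL
}
```

Something like `missing_pk_sql | psql -At mgd` on the origin would then print one table per line. The resulting list is the set of tables to script for dump / restore in both directions.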

SET ADD SEQUENCE (SET id = 1, origin = 1, SEQUENCES = 'public\\.*');

SET ADD SEQUENCE (SET id = 1, origin = 1, SEQUENCES = 'mgd\\.*');

Don't Forget the Sequences!

Or the old school way:

SET ADD SEQUENCE (SET id = 1, origin = 1, FULL QUALIFIED NAME = 'mgd.pwi_report_id_seq', comment='mgd.pwi_report_id_seq SEQUENCE');

SET ADD SEQUENCE (SET id = 1, origin = 1, FULL QUALIFIED NAME = 'mgd.pwi_report_label_id_seq', comment='mgd.pwi_report_label_id_seq SEQUENCE');

Add Sequences to the Set!

SUBSCRIBE SET (id = 1, provider = 1, receiver = 2, forward = yes);

Subscribe the Set!

#!/usr/bin/slonik

CLUSTER NAME = migration;

NODE 1 ADMIN CONNINFO='host=db1.jefftest dbname=mgd user=slony port=5432';

NODE 2 ADMIN CONNINFO='host=db2.jefftest dbname=mgd user=slony port=5432';

INIT CLUSTER (id = 1, comment = 'db1.jefftest');

STORE NODE (id = 2, comment = 'db2.jefftest', event node = 1);

STORE PATH (server = 1, client = 2, conninfo = 'host=db1.jefftest dbname=mgd user=slony');

STORE PATH (server = 2, client = 1, conninfo = 'host=db2.jefftest dbname=mgd user=slony');

CREATE SET (id = 1, origin = 1, comment = 'all tables and sequences');

SET ADD TABLE (SET id = 1, origin = 1, TABLES='public\\.*');

SET ADD TABLE (SET id = 1, origin = 1, TABLES='mgd\\.*');

SET ADD SEQUENCE (SET id = 1, origin = 1, SEQUENCES = 'public\\.*');

SET ADD SEQUENCE (SET id = 1, origin = 1, SEQUENCES = 'mgd\\.*');

SUBSCRIBE SET (id = 1, provider = 1, receiver = 2, forward = yes);

Here's the entire (unreadable on a slide?) script

Kick Off Our Script!

OMG The Site is Down!!!

Add lock_timeout if possible

Added in 9.3

Abort any statement that waits longer than this for a lock.

We only need it for trigger addition, so we just add the ENV variable before we call our slonik script:

PGOPTIONS="-c lock_timeout=5000" ./subscribe.slonik

Add lock_timeout if possible

$ PGOPTIONS="-c lock_timeout=5000" ./subscribe.slonik

./subscribe.slonik:11: Possible unsupported PostgreSQL version (90601) 9.6, defaulting to 8.4 support
./subscribe.slonik:20: PGRES_FATAL_ERROR lock table "_migration".sl_config_lock;select "_migration".setAddTable(1, 1, 'mgd.acc_accession', 'acc_accession_pkey', 'replicated table'); - ERROR: canceling statement due to lock timeout
CONTEXT: SQL statement "lock table "mgd"."acc_accession" in access exclusive mode"
PL/pgSQL function _migration.altertableaddtriggers(integer) line 48 at EXECUTE statement
SQL statement "SELECT "_migration".alterTableAddTriggers(p_tab_id)"
PL/pgSQL function setaddtable_int(integer,integer,text,name,text) line 104 at PERFORM
SQL statement "SELECT "_migration".setAddTable_int(p_set_id, p_tab_id, p_fqname,p_tab_idxname, p_tab_comment)"
PL/pgSQL function setaddtable(integer,integer,text,name,text) line 33 at PERFORM

Might have to break up your slonik script into multiple SET ADD TABLE scripts

Outside the scope of this talk, but you can talk to me later if you're interested
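When a lock timeout aborts a script, one option is simply to try again a little later. The sketch below is a generic retry loop, not something shown in the talk. `retry` is a hypothetical helper, and it assumes each script it runs is safe to re-run after a failure (for example, a preamble plus a single SET ADD TABLE); a script that already ran INIT CLUSTER successfully would fail on a second attempt.

```shell
# Hypothetical helper: run a command up to $1 times, pausing $2 seconds
# between attempts, and stop at the first success.
retry() {
    attempts=$1
    pause=$2
    shift 2
    n=1
    while :; do
        # Return as soon as the command succeeds.
        "$@" && return 0
        # Give up once the allowed number of attempts has been used.
        [ "$n" -ge "$attempts" ] && return 1
        n=$((n + 1))
        sleep "$pause"
    done
}
```

With `export PGOPTIONS="-c lock_timeout=5000"` set beforehand, a call such as `retry 5 10 ./add_table_01.slonik` (a hypothetical per-table script) would make up to five attempts, ten seconds apart.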

Slon is the Slony daemon which manages replication.

You need one for each node.

Trivia: slon is Russian for elephant

Introducing Slon

nohup /usr/bin/slon migration "dbname=mgd host=db1.jefftest user=slony" >> ~/slony.log &

nohup /usr/bin/slon migration "dbname=mgd host=db2.jefftest user=slony" >> ~/slony.log &

Start up the Slons!

Start up the Slons!

$ tail -f slony.log
2017-02-07 00:43:07 UTC CONFIG remoteWorkerThread_1: prepare to copy table "mgd"."wks_rosetta"
2017-02-07 00:43:07 UTC CONFIG remoteWorkerThread_1: all tables for set 1 found on subscriber
2017-02-07 00:43:07 UTC CONFIG remoteWorkerThread_1: copy table "mgd"."acc_accession"
2017-02-07 00:43:07 UTC CONFIG remoteWorkerThread_1: Begin COPY of table "mgd"."acc_accession"
NOTICE: truncate of "mgd"."acc_accession" failed - doing delete
2017-02-07 00:44:45 UTC CONFIG remoteWorkerThread_1: 2935201458 bytes copied for table "mgd"."acc_accession"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: 369.339 seconds to copy table "mgd"."acc_accession"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: copy table "mgd"."acc_accessionmax"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: Begin COPY of table "mgd"."acc_accessionmax"
NOTICE: truncate of "mgd"."acc_accessionmax" succeeded
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: 119 bytes copied for table "mgd"."acc_accessionmax"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: 0.088 seconds to copy table "mgd"."acc_accessionmax"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: copy table "mgd"."acc_accessionreference"
2017-02-07 00:49:17 UTC CONFIG remoteWorkerThread_1: Begin COPY of table "mgd"."acc_accessionreference"
NOTICE: truncate of "mgd"."acc_accessionreference" succeeded
2017-02-07 00:49:37 UTC CONFIG remoteWorkerThread_1: 538589206 bytes copied for table "mgd"."acc_accessionreference"

Watch the Logs (and Exercise Patience!)
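While waiting, the per-table copy times in the slon log show where the initial sync spends its time. This is a small sketch, assuming the log line format shown on this slide ("N seconds to copy table ..."); `copy_times` is a hypothetical helper name.

```shell
# Hypothetical helper: read slon log lines on stdin and print
# "<seconds> <table>" for each finished table copy, slowest first.
copy_times() {
    awk '/seconds to copy table/ {
        # The number just before the word "seconds" is the copy duration;
        # the last field on the line is the quoted table name.
        for (i = 2; i <= NF; i++)
            if ($i == "seconds") { print $(i - 1), $NF; break }
    }' | sort -rn
}
```

Running `copy_times < ~/slony.log` after a test sync gives a quick list of the tables that dominate the copy, which is useful when estimating how long the real run will take.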

SELECT st_lag_num_events, st_lag_time FROM _migration.sl_status\watch

Watch every 2s    Tue Feb 7 00:52:01 2017

 st_lag_num_events |   st_lag_time
-------------------+-----------------
                64 | 00:11:40.097368
(1 row)

Watch the sl_status view

2017-02-07 02:11:30 UTC CONFIG remoteWorkerThread_1: Begin COPY of table "mgd"."wks_rosetta"
NOTICE: truncate of "mgd"."wks_rosetta" succeeded
2017-02-07 02:11:30 UTC CONFIG remoteWorkerThread_1: 5302 bytes copied for table "mgd"."wks_rosetta"
2017-02-07 02:11:30 UTC CONFIG remoteWorkerThread_1: 0.060 seconds to copy table "mgd"."wks_rosetta"
2017-02-07 02:11:30 UTC INFO remoteWorkerThread_1: copy_set SYNC found, use event seqno 5000000205.
2017-02-07 02:11:30 UTC INFO remoteWorkerThread_1: 0.016 seconds to build initial setsync status
2017-02-07 02:11:30 UTC INFO copy_set 1 done in 1837.853 seconds
2017-02-07 02:11:30 UTC CONFIG enableSubscription: sub_set=1

Initial Sync is done!

* Which is about 30 minutes for our 40GB mgd database

SELECT st_lag_num_events, st_lag_time FROM _migration.sl_status\watch

Watch every 2s    Tue Feb 7 02:27:51 2017

 st_lag_num_events |   st_lag_time
-------------------+-----------------
                 1 | 00:00:11.986675
(1 row)

Wait for slony to catch up
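Instead of eyeballing the `\watch` output, the lag value can be checked in a script before starting the switchover. A minimal sketch, assuming `st_lag_time` arrives as plain `HH:MM:SS[.ffffff]` text (lag under one day, so no "N days" prefix); `lag_seconds` and `caught_up` are hypothetical helper names, and the threshold is whatever your maintenance window tolerates.

```shell
# Convert an interval such as 00:11:40.097368 into whole seconds.
# Assumes the HH:MM:SS[.ffffff] form; fractional seconds are truncated.
lag_seconds() {
    printf '%s\n' "$1" | awk -F: '{ printf "%d\n", $1 * 3600 + $2 * 60 + $3 }'
}

# Succeed (exit status 0) when the lag is at or below the threshold in seconds.
caught_up() {
    [ "$(lag_seconds "$1")" -le "$2" ]
}
```

With the two readings from the slides, `lag_seconds 00:11:40.097368` yields 700 and `lag_seconds 00:00:11.986675` yields 11. In practice the first argument could come from something like `psql -At -c 'SELECT st_lag_time FROM _migration.sl_status' mgd` on the origin, assuming a single subscriber row.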

#!/usr/bin/slonik

CLUSTER NAME = migration;

NODE 1 ADMIN CONNINFO='host=db1.jefftest dbname=mgd user=slony port=5432';

NODE 2 ADMIN CONNINFO='host=db2.jefftest dbname=mgd user=slony port=5432';

LOCK SET ( ID = 1, ORIGIN = 1);

MOVE SET ( ID = 1, OLD ORIGIN = 1, NEW ORIGIN = 2);

Time to Switchover!

Time to Switchover!

Let's check!

Test

Test!

Test!!

Exercise patience

Now What?

You probably did this off hours or on a weekend!

Wait until you've had at least a day or two running on the new PostgreSQL version before you tear it down.

That's the best part about Slony!

We can switch back!

CLUSTER NAME = migration;

NODE 1 ADMIN CONNINFO='host=db1.jefftest dbname=mgd user=slony port=5432';

NODE 2 ADMIN CONNINFO='host=db2.jefftest dbname=mgd user=slony port=5432';

LOCK SET ( ID = 1, ORIGIN = 2);

MOVE SET ( ID = 1, OLD ORIGIN = 2, NEW ORIGIN = 1);

What if we find a regression on Monday?
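The switchover and switchback scripts differ only in which node is the old origin and which is the new one, so both can come from one template. A minimal sketch, reusing the cluster name and conninfo strings from this talk; `move_set_script` is a hypothetical helper name.

```shell
# Hypothetical helper: print a slonik script that moves set 1 from the node
# given as $1 (current origin) to the node given as $2 (new origin).
# The cluster name and conninfo strings are the ones used in this talk.
move_set_script() {
    cat <<EOF
#!/usr/bin/slonik
CLUSTER NAME = migration;
NODE 1 ADMIN CONNINFO='host=db1.jefftest dbname=mgd user=slony port=5432';
NODE 2 ADMIN CONNINFO='host=db2.jefftest dbname=mgd user=slony port=5432';
LOCK SET ( ID = 1, ORIGIN = $1);
MOVE SET ( ID = 1, OLD ORIGIN = $1, NEW ORIGIN = $2);
EOF
}
```

For example, `move_set_script 1 2 > switchover.slonik` and `move_set_script 2 1 > switchback.slonik` produce the two scripts, so the revert path is written and reviewable before the maintenance window starts.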

Let's give it a shot!

Let's rip it out!

Can be as simple as:

killall slon

DROP SCHEMA _migration CASCADE;

Watch out for locking!

What if we didn't find a regression?

* Might also want to uninstall the packages

Let's give it a shot!

@ProcoreJobs

Questions?